LEA Detection and Tracking Method for Color-Independent Visual-MIMO

Abstract

1. Introduction

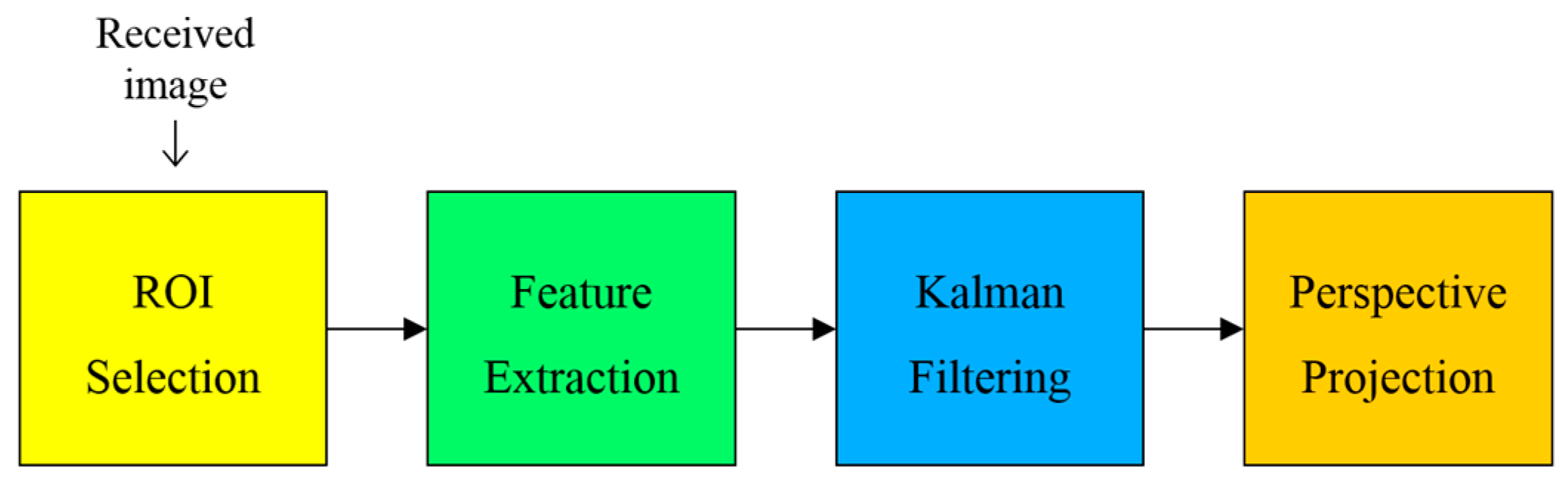

2. LEA Detection and Tracking

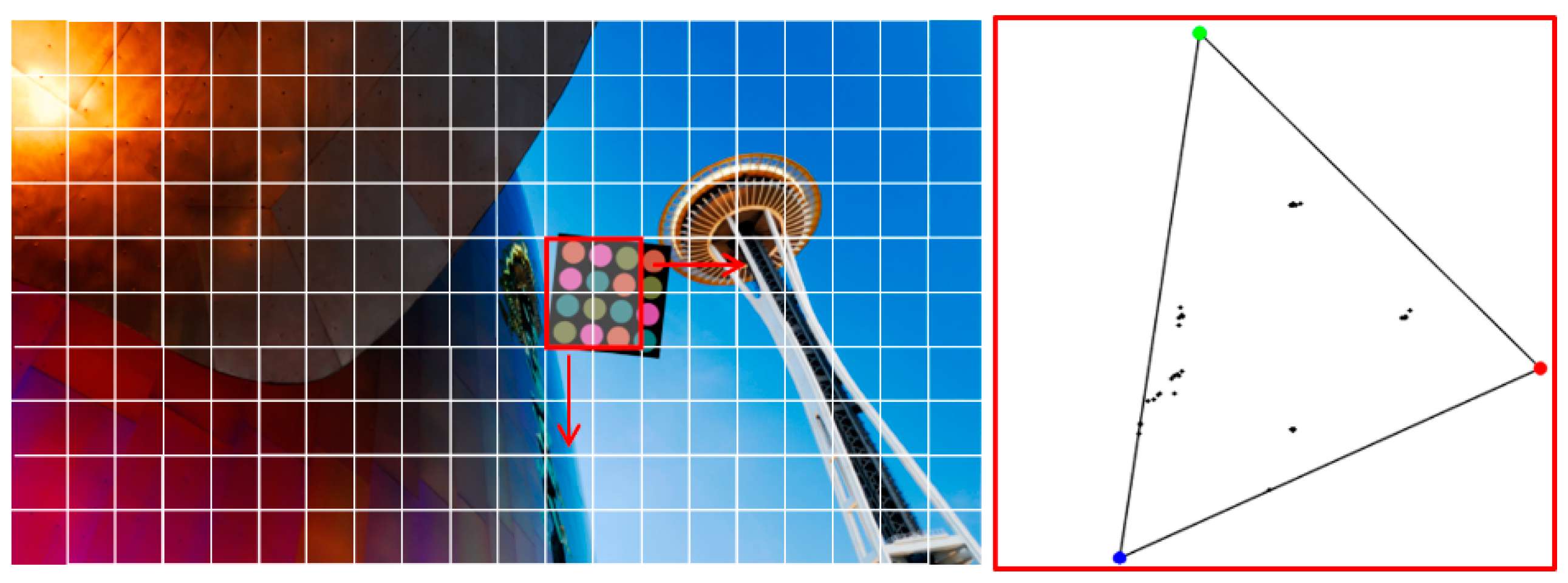

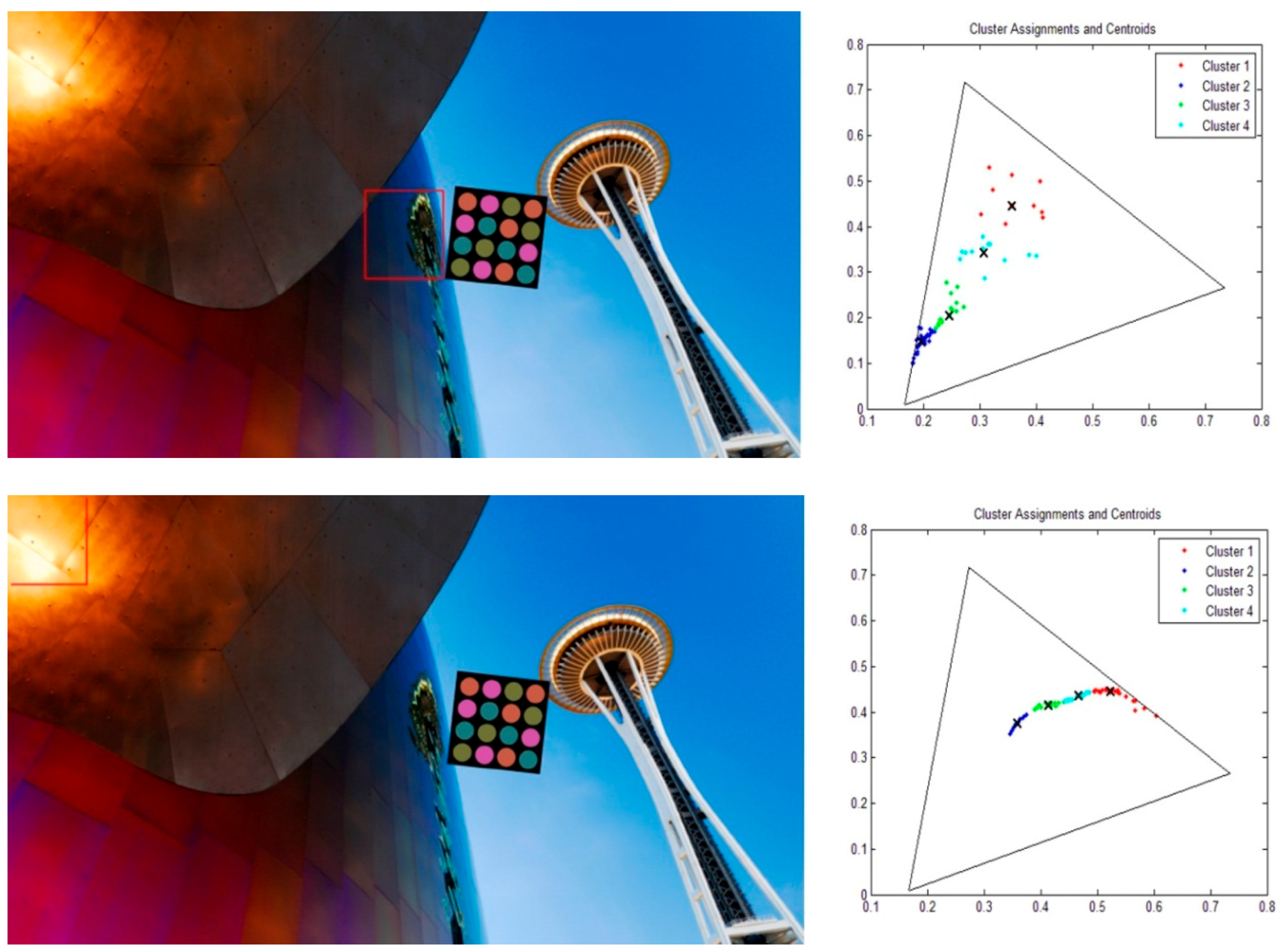

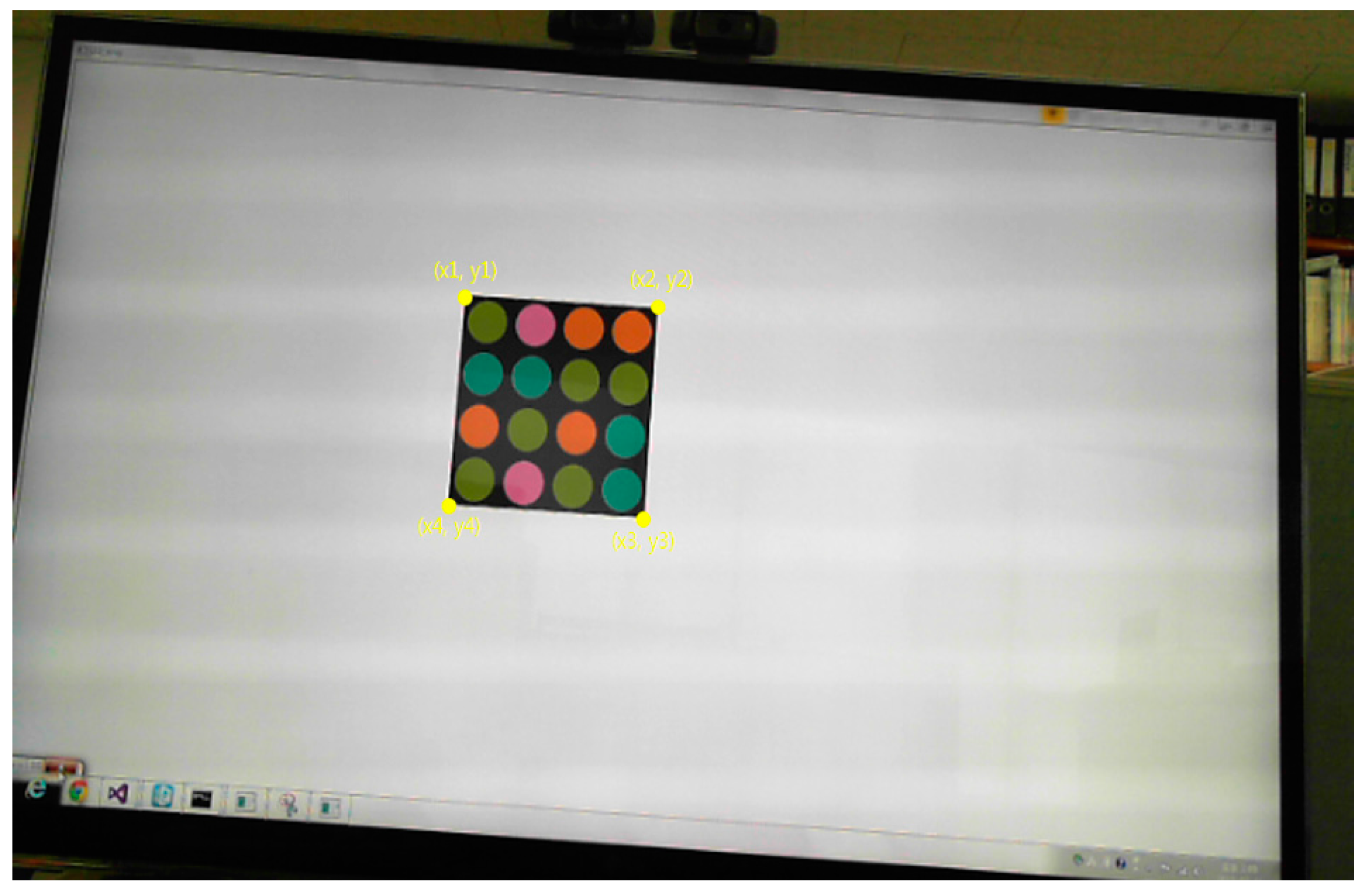

2.1. ROI Selection for LEA Detection

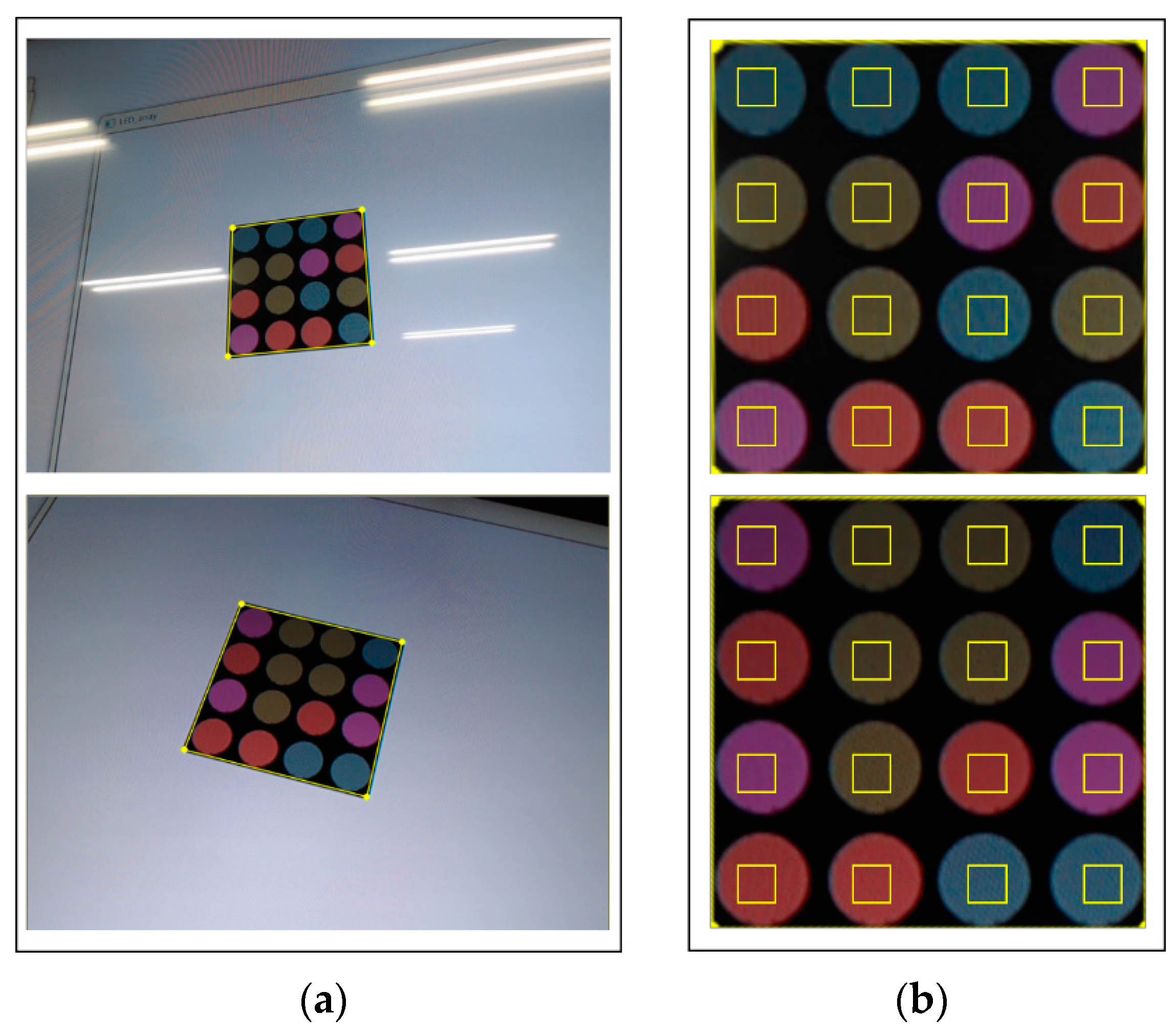

2.2. LEA Detection Using the Harris Corner Method

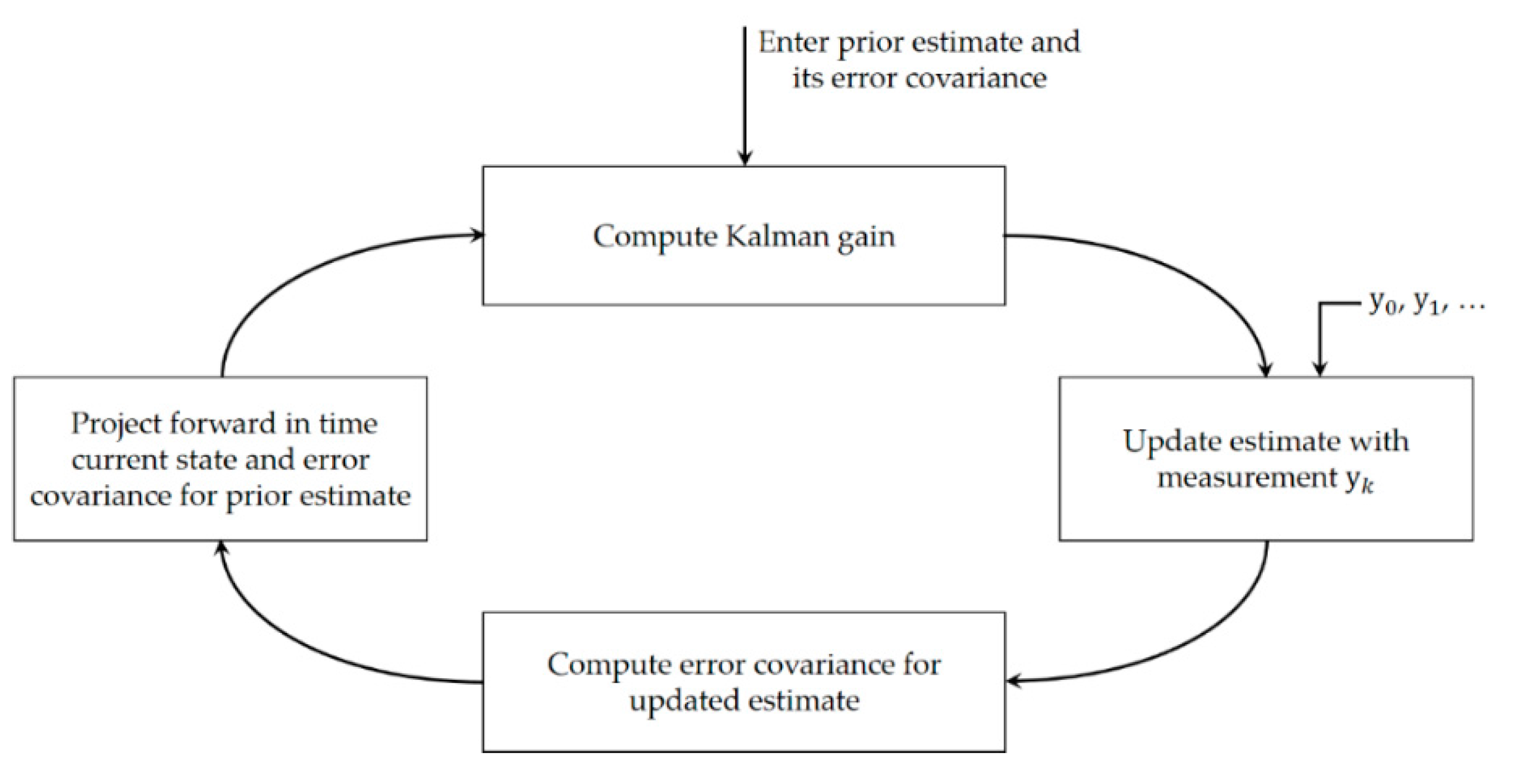

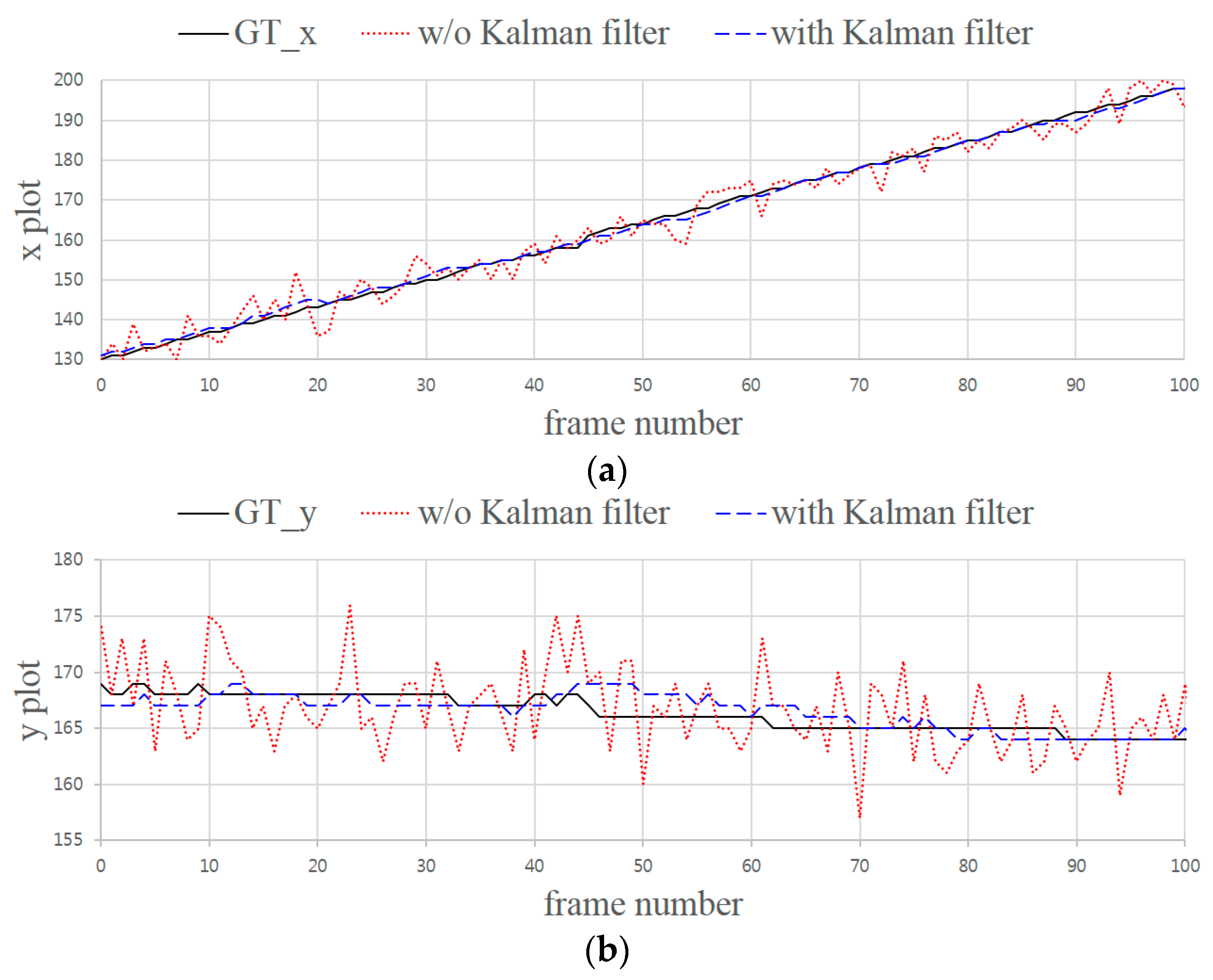
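Only the heading of this subsection survived extraction. As a hedged illustration of the named technique, the sketch below computes the standard Harris and Stephens corner response R = det(M) − k·trace(M)² on a small grayscale image, where M is the structure tensor built from image gradients. Several choices are assumptions made for illustration and do not come from the paper: central-difference gradients, a 3 × 3 box window in place of the usual Gaussian weighting, k = 0.04, and the function names `harris_response` and `strongest_corner`. It does not reproduce how the authors turn corner responses into LEA corner positions.

```python
def harris_response(img, k=0.04, win=1):
    """Harris corner response for a 2-D list of grayscale values.

    R = det(M) - k * trace(M)^2, where M sums gradient products over a
    (2*win+1) x (2*win+1) box window (a simplification of Gaussian weighting).
    """
    h, w = len(img), len(img[0])
    ix = [[0.0] * w for _ in range(h)]
    iy = [[0.0] * w for _ in range(h)]
    # Central-difference gradients; border pixels keep a zero gradient.
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            ix[y][x] = (img[y][x + 1] - img[y][x - 1]) / 2.0
            iy[y][x] = (img[y + 1][x] - img[y - 1][x]) / 2.0
    r = [[0.0] * w for _ in range(h)]
    for y in range(win, h - win):
        for x in range(win, w - win):
            # Accumulate the structure tensor entries over the window.
            sxx = syy = sxy = 0.0
            for dy in range(-win, win + 1):
                for dx in range(-win, win + 1):
                    gx, gy = ix[y + dy][x + dx], iy[y + dy][x + dx]
                    sxx += gx * gx
                    syy += gy * gy
                    sxy += gx * gy
            det = sxx * syy - sxy * sxy
            trace = sxx + syy
            r[y][x] = det - k * trace * trace
    return r


def strongest_corner(r):
    """Return (row, col) of the largest Harris response."""
    return max(((y, x) for y in range(len(r)) for x in range(len(r[0]))),
               key=lambda p: r[p[0]][p[1]])


if __name__ == "__main__":
    # Synthetic frame: a bright block whose top-left corner is at (row 4, col 4).
    frame = [[1.0 if (y >= 4 and x >= 4) else 0.0 for x in range(10)]
             for y in range(10)]
    resp = harris_response(frame)
    print(strongest_corner(resp))  # (4, 4)
```

A large positive R marks a corner, a negative R an edge, and R near zero a flat region, so the corners of a bright rectangular LEA typically produce the strongest positive responses.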

2.3. LEA Tracking with Kalman Filtering

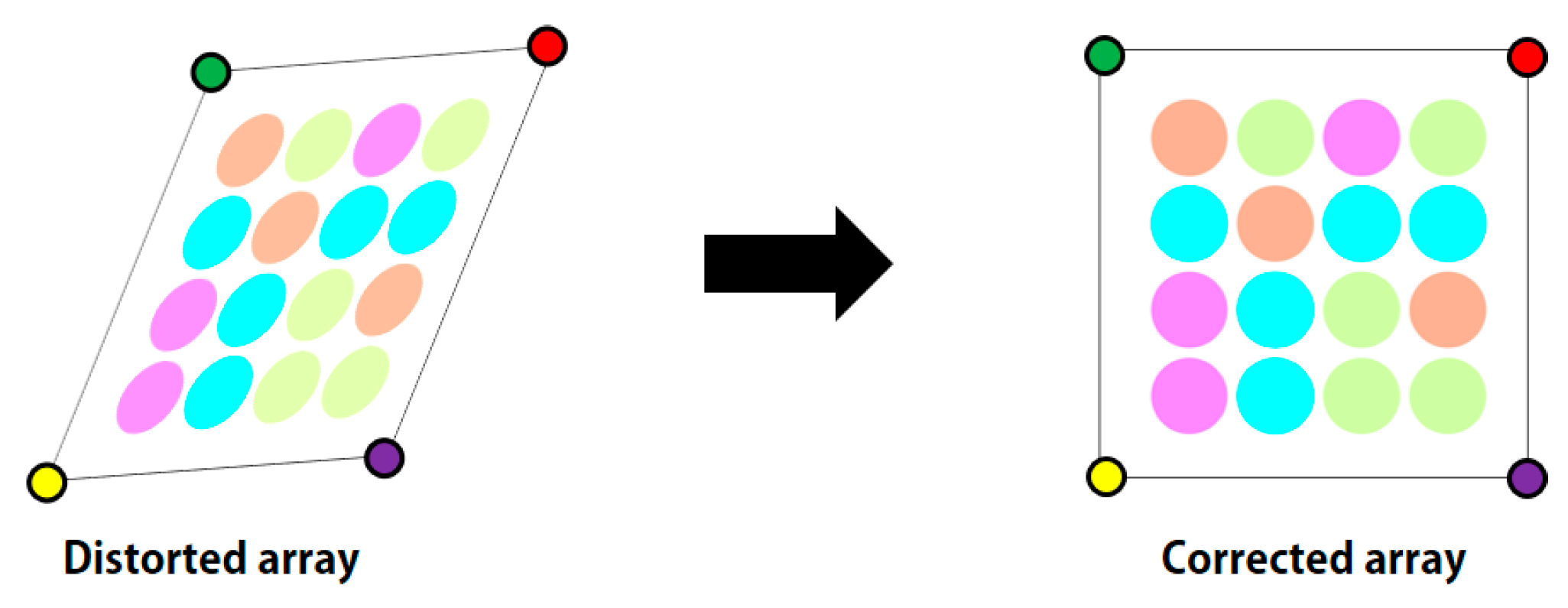
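Only the heading of this subsection survives, so the filter design used in the paper is not known here. As a hedged sketch of Kalman-filter tracking, the class below runs a one-dimensional constant-velocity Kalman filter (state: position in pixels and velocity in pixels per frame) that could be applied separately to each image coordinate of a tracked LEA corner. The motion model, the diagonal process noise `q`, the measurement noise `r`, the initial covariance, and the class name `ConstantVelocityKalman` are assumptions, not values from the paper.

```python
class ConstantVelocityKalman:
    """1-D constant-velocity Kalman filter with state [position, velocity]."""

    def __init__(self, p0, q=1e-2, r=1.0, dt=1.0):
        self.x = [p0, 0.0]                    # state estimate
        self.P = [[10.0, 0.0], [0.0, 10.0]]   # state covariance (assumed start)
        self.q, self.r, self.dt = q, r, dt    # assumed noise levels, frame step

    def predict(self):
        """Propagate the state one frame ahead; return predicted position."""
        dt = self.dt
        p, v = self.x
        self.x = [p + dt * v, v]
        P = self.P
        # P <- F P F^T + Q with F = [[1, dt], [0, 1]] and Q = q * I (assumed).
        p00 = P[0][0] + dt * (P[1][0] + P[0][1]) + dt * dt * P[1][1]
        p01 = P[0][1] + dt * P[1][1]
        p10 = P[1][0] + dt * P[1][1]
        p11 = P[1][1]
        self.P = [[p00 + self.q, p01], [p10, p11 + self.q]]
        return self.x[0]

    def update(self, z):
        """Correct the state with a measured position z; return new position."""
        P = self.P
        s = P[0][0] + self.r              # innovation covariance, H = [1, 0]
        k0, k1 = P[0][0] / s, P[1][0] / s  # Kalman gain
        innov = z - self.x[0]
        self.x = [self.x[0] + k0 * innov, self.x[1] + k1 * innov]
        # P <- (I - K H) P
        self.P = [[(1 - k0) * P[0][0], (1 - k0) * P[0][1]],
                  [P[1][0] - k1 * P[0][0], P[1][1] - k1 * P[0][1]]]
        return self.x[0]


if __name__ == "__main__":
    # Hypothetical noiseless track: x-coordinate moving 3 px per frame.
    kf = ConstantVelocityKalman(p0=2.0)
    for t in range(1, 30):
        kf.predict()
        kf.update(2.0 + 3.0 * t)
    print(round(kf.x[1], 2))  # estimated velocity in px/frame
```

The predicted position from `predict()` can narrow the search window for the next detection, which is one common reason to pair a detector with a Kalman filter.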

2.4. Perspective Projection for Correcting Image Distortion

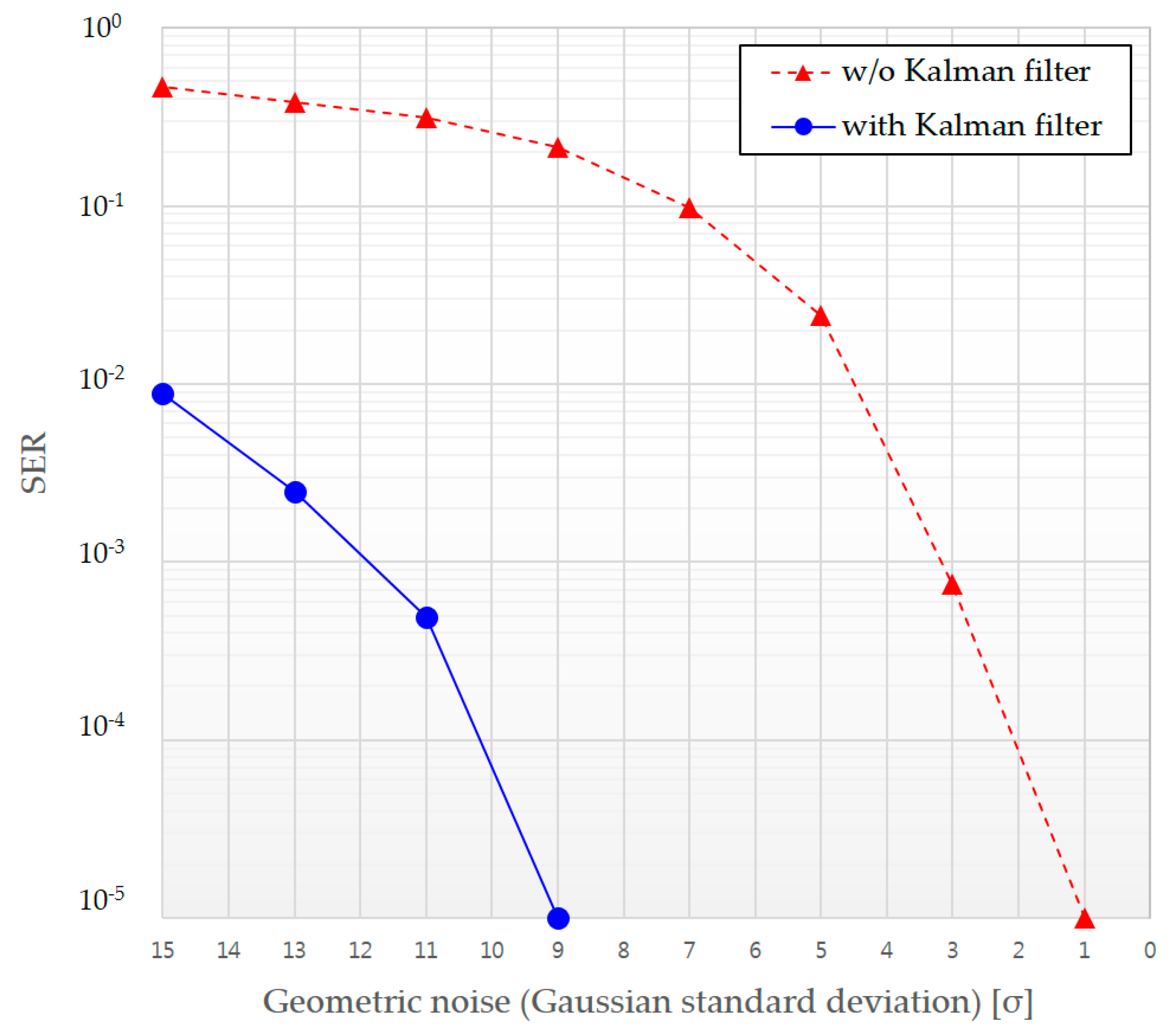
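The details of this step are not recoverable from the extracted text. One standard way to undo perspective distortion of a planar LEA is a homography estimated from four corner correspondences. The sketch below solves the usual eight-unknown linear system (with the bottom-right entry of H fixed to 1) by Gaussian elimination, then maps the centers of a 4 × 4 LED grid from a rectified square back into the image. The corner ordering, the 100 × 100 target square, equal LED spacing, and all function names are hypothetical choices for illustration, not the authors' method.

```python
def solve_linear(a, b):
    """Solve a x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(m[r][col]))
        if abs(m[piv][col]) < 1e-12:
            raise ValueError("degenerate point configuration")
        m[col], m[piv] = m[piv], m[col]
        for r in range(col + 1, n):
            f = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= f * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))) / m[r][r]
    return x


def homography_from_4_points(src, dst):
    """3x3 homography H (h33 = 1) mapping four src points onto four dst points."""
    a, b = [], []
    for (x, y), (u, v) in zip(src, dst):
        a.append([x, y, 1, 0, 0, 0, -u * x, -u * y])
        b.append(u)
        a.append([0, 0, 0, x, y, 1, -v * x, -v * y])
        b.append(v)
    h = solve_linear(a, b)
    return [h[0:3], h[3:6], h[6:8] + [1.0]]


def apply_homography(H, x, y):
    """Map (x, y) through H, including the perspective divide."""
    w = H[2][0] * x + H[2][1] * y + H[2][2]
    return ((H[0][0] * x + H[0][1] * y + H[0][2]) / w,
            (H[1][0] * x + H[1][1] * y + H[1][2]) / w)


if __name__ == "__main__":
    # Hypothetical detected LEA corners (image pixels), ordered TL, TR, BR, BL.
    corners = [(10.0, 12.0), (95.0, 20.0), (90.0, 88.0), (5.0, 80.0)]
    square = [(0.0, 0.0), (100.0, 0.0), (100.0, 100.0), (0.0, 100.0)]
    # Map the rectified square back into the image to locate each LED center.
    to_image = homography_from_4_points(square, corners)
    for row in range(4):
        centers = [apply_homography(to_image, 12.5 + 25 * col, 12.5 + 25 * row)
                   for col in range(4)]
        print([(round(u, 1), round(v, 1)) for u, v in centers])
```

Mapping grid points from the rectified square into the image, rather than warping the whole image, keeps the sketch short; a full rectification would resample every pixel through the inverse mapping.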

3. Results and Discussion

4. Conclusions

Supplementary Materials

Acknowledgments

Author Contributions

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| BER | bit error rate |
| GCM | generalized color modulation |
| CSBM | color-space-based modulation |
| LEA | light emitting array |
| LED | light emitting diode |
| LOS | line-of-sight |
| MIMO | multiple-input multiple-output |
| ROI | region of interest |
| SER | symbol error rate |
| SNR | signal-to-noise ratio |
| V2V | vehicle-to-vehicle |
| V2X | vehicle-to-everything |
| VLC | visible light communication |


| Parameter | Value |
|---|---|
| Color space | CIE 1931 |
| RGB model | CIE RGB |
| Reference white | E (equal-energy) |
| LED array size | 4 × 4 (16 LEDs) |
| Number of constellation points | 4 |
| Intensity (Y value) | 0.165 |
| Total number of symbols transmitted | 16,000 |
| Chromaticities (x, y) of the RGB LEDs in CIE 1931 | R: (0.735, 0.265); G: (0.274, 0.717); B: (0.167, 0.009) |
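The parameter table lists the chromaticities of the three LED primaries. Any color produced by mixing three LEDs lies inside the triangle they span in the CIE 1931 xy diagram, so a color-space-based constellation point has to fall inside that triangle. As a hedged sketch, the check below tests whether a target chromaticity is reproducible by the LEDs. The function names are hypothetical, and the red x-coordinate is taken as 0.735, the chromaticity of the CIE RGB red primary.

```python
# Chromaticities (x, y) of the R, G, B LEDs in CIE 1931 (red x read as 0.735).
LED_PRIMARIES = {"R": (0.735, 0.265), "G": (0.274, 0.717), "B": (0.167, 0.009)}


def _cross(a, b, p):
    """z-component of (b - a) x (p - a); its sign tells which side of a->b p is on."""
    return (b[0] - a[0]) * (p[1] - a[1]) - (b[1] - a[1]) * (p[0] - a[0])


def in_led_gamut(x, y, primaries=LED_PRIMARIES):
    """True if chromaticity (x, y) lies inside (or on) the RGB LED triangle."""
    r, g, b = primaries["R"], primaries["G"], primaries["B"]
    p = (x, y)
    d1, d2, d3 = _cross(r, g, p), _cross(g, b, p), _cross(b, r, p)
    has_neg = d1 < 0 or d2 < 0 or d3 < 0
    has_pos = d1 > 0 or d2 > 0 or d3 > 0
    # Inside when p is on the same side of all three edges.
    return not (has_neg and has_pos)


if __name__ == "__main__":
    print(in_led_gamut(1 / 3, 1 / 3))  # equal-energy white point E: True
    print(in_led_gamut(0.1, 0.8))      # highly saturated green: False
```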

© 2016 by the authors; licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC-BY) license (http://creativecommons.org/licenses/by/4.0/).

Kim, J.-E.; Kim, J.-W.; Kim, K.-D. LEA Detection and Tracking Method for Color-Independent Visual-MIMO. Sensors 2016, 16, 1027. https://doi.org/10.3390/s16071027