Journal Description

Computation

Computation is a peer-reviewed journal of computational science and engineering published monthly online by MDPI.

- Open Access: free for readers, with article processing charges (APC) paid by authors or their institutions.

- High Visibility: indexed within Scopus, ESCI (Web of Science), CAPlus / SciFinder, Inspec, dblp, and other databases.

- Journal Rank: JCR - Q2 (Mathematics, Interdisciplinary Applications) / CiteScore - Q1 (Applied Mathematics)

- Rapid Publication: manuscripts are peer-reviewed and a first decision is provided to authors approximately 14.8 days after submission; acceptance to publication is undertaken in 5.6 days (median values for papers published in this journal in the second half of 2025).

- Recognition of Reviewers: reviewers who provide timely, thorough peer-review reports receive vouchers entitling them to a discount on the APC of their next publication in any MDPI journal, in appreciation of the work done.

- Journal Cluster of Mathematics and Its Applications: AppliedMath, Axioms, Computation, Fractal and Fractional, Geometry, International Journal of Topology, Logics, Mathematics and Symmetry.

Impact Factor: 1.9 (2024); 5-Year Impact Factor: 1.9 (2024)

Latest Articles

The Health-Wealth Gradient in Labor Markets: Integrating Health, Insurance, and Social Metrics to Predict Employment Density

Computation 2026, 14(1), 22; https://doi.org/10.3390/computation14010022 - 15 Jan 2026

Abstract

Labor market forecasting relies heavily on economic time-series data, often overlooking the “health–wealth” gradient that links population health to workforce participation. This study develops a machine learning framework integrating non-traditional health and social metrics to predict state-level employment density. Methods: We constructed a multi-source longitudinal dataset (2014–2024) by aggregating county-level Quarterly Census of Employment and Wages (QCEW) data with County Health Rankings to the state level. Using a time-aware split to evaluate performance across the COVID-19 structural break, we compared LASSO, Random Forest, and regularized XGBoost models, employing SHAP values for interpretability. Results: The tuned, regularized XGBoost model achieved strong out-of-sample performance (Test

(This article belongs to the Special Issue Applications of Machine Learning and Data Science Methods in Social Sciences)

Open Access Article

Solving the Synthesis Problem Self-Organizing Control System in the Class of Elliptical Accidents Optics for Objects with One Input and One Output

by

Maxot Rakhmetov, Ainagul Adiyeva, Balaussa Orazbayeva, Shynar Yelezhanova, Raigul Tuleuova and Raushan Moldasheva

Computation 2026, 14(1), 21; https://doi.org/10.3390/computation14010021 - 14 Jan 2026

Abstract

Nonlinear single-input single-output (SISO) systems operating under parametric uncertainty often exhibit bifurcations, multistability, and deterministic chaos, which significantly limit the effectiveness of classical linear, adaptive, and switching control methods. This paper proposes a novel synthesis framework for self-organizing control systems based on catastrophe theory, specifically within the class of elliptic catastrophes. Unlike conventional approaches that stabilize a predefined system structure, the proposed method embeds the control law directly into a structurally stable catastrophe model, enabling autonomous bifurcation-driven transitions between stable equilibria. The synthesis procedure is formulated using a Lyapunov vector-function gradient–velocity method, which guarantees aperiodic robust stability under parametric uncertainty. The definiteness of the Lyapunov functions is established using Morse’s lemma, providing a rigorous stability foundation. To support practical implementation, a data-driven parameter tuning mechanism based on self-organizing maps (SOM) is integrated, allowing adaptive adjustment of controller coefficients while preserving Lyapunov stability conditions. Simulation results demonstrate suppression of chaotic regimes, smooth bifurcation-induced transitions between stable operating modes, and improved transient performance compared to benchmark adaptive control schemes. The proposed framework provides a structurally robust alternative for controlling nonlinear systems in uncertain and dynamically changing environments.

(This article belongs to the Topic A Real-World Application of Chaos Theory)

Open Access Article

AFAD-MSA: Dataset and Models for Arabic Fake Audio Detection

by

Elsayed Issa

Computation 2026, 14(1), 20; https://doi.org/10.3390/computation14010020 - 14 Jan 2026

Abstract

As generative speech synthesis produces near-human synthetic voices and reliance on online media grows, robust audio-deepfake detection is essential to fight misuse and misinformation. In this study, we introduce the Arabic Fake Audio Dataset for Modern Standard Arabic (AFAD-MSA), a curated corpus of authentic and synthetic Arabic speech designed to advance research on Arabic deepfake and spoofed-speech detection. The synthetic subset is generated with four state-of-the-art proprietary text-to-speech and voice-conversion models. Rich metadata—covering speaker attributes and generation information—is provided to support reproducibility and benchmarking. To establish reference performance, we trained three AASIST models and compared their performance to two baseline transformer detectors (Wav2Vec 2.0 and Whisper). On the AFAD-MSA test split, AASIST-2 achieved perfect accuracy, surpassing the baseline models. However, its performance declined under cross-dataset evaluation. These results underscore the importance of data construction. Detectors generalize best when exposed to diverse attack types. In addition, continual or contrastive training that interleaves bona fide speech with large, heterogeneous spoofed corpora will further improve detectors’ robustness.

(This article belongs to the Special Issue Recent Advances on Computational Linguistics and Natural Language Processing)

Open Access Article

Multifidelity Topology Design for Thermal–Fluid Devices via SEMDOT Algorithm

by

Yiding Sun, Yun-Fei Fu, Shuzhi Xu and Yifan Guo

Computation 2026, 14(1), 19; https://doi.org/10.3390/computation14010019 - 12 Jan 2026

Abstract

Designing thermal–fluid devices that reduce peak temperature while limiting pressure loss is challenging because high-fidelity (HF) Navier–Stokes–convection simulations make direct HF-driven topology optimization computationally expensive. This study presents a two-dimensional, steady, laminar multifidelity topology design framework for thermal–fluid devices operating in a low-to-moderate Reynolds number regime. A computationally efficient low-fidelity (LF) Darcy–convection model is used for topology optimization, where SEMDOT decouples geometric smoothness from the analysis field to produce CAD-ready boundaries. The LF optimization minimizes a P-norm aggregated temperature subject to a prescribed volume fraction constraint; the inlet–outlet pressure difference and the P-norm parameter are varied to generate a diverse candidate set. All candidates are then evaluated using a steady incompressible HF Navier–Stokes–convection model in COMSOL 6.3 under a consistent operating condition (fixed flow; pressure drop reported as an output). In representative single- and multi-channel case studies, SEMDOT designs reduce the HF peak temperature (e.g., ~337 K to ~323 K) while also reducing the pressure drop (e.g., ~18.7 Pa to ~12.6 Pa) relative to conventional straight-channel layouts under the same operating point. Compared with a conventional RAMP-based pipeline under the tested settings, the proposed approach yields a more favorable Pareto distribution (normalized hypervolume 1.000 vs. 0.923).
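
As a rough illustration of the P-norm aggregation mentioned in this abstract, the sketch below uses one common form, the generalized (power) mean, as a smooth surrogate for a maximum. The abstract does not state the exact formulation, so this is an assumption rather than the authors' definition. The function name `p_norm_aggregate` and the sample temperatures are hypothetical.

```python
def p_norm_aggregate(values, p):
    """Smooth surrogate for max(values): the generalized (power) mean.

    Assumption: all values are positive (e.g., temperatures in kelvin).
    Values are scaled by their maximum before exponentiation to avoid overflow.
    """
    if p <= 0:
        raise ValueError("p must be positive")
    vmax = max(values)
    if vmax <= 0:
        raise ValueError("values must be positive")
    scaled = [(v / vmax) ** p for v in values]
    mean_pow = sum(scaled) / len(scaled)
    return vmax * mean_pow ** (1.0 / p)


# Hypothetical nodal temperatures in kelvin (illustrative, not from the paper).
temps = [300.0, 310.0, 323.0, 337.0]
for p in (1, 8, 64):
    print(p, round(p_norm_aggregate(temps, p), 2))
```

In this form the aggregate never exceeds the true maximum and approaches it as p grows, which is why varying p changes how strongly the hottest region dominates the objective.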

(This article belongs to the Special Issue Advanced Topology Optimization: Methods and Applications)

Open Access Article

Finite Element Analysis of Stress and Displacement in the Distal Femur: A Comparative Study of Normal and Osteoarthritic Bone Under Knee Flexion

by

Kamonchat Trachoo, Inthira Chaiya and Din Prathumwan

Computation 2026, 14(1), 18; https://doi.org/10.3390/computation14010018 - 12 Jan 2026

Abstract

Osteoarthritis (OA) is a progressive degenerative joint disease that fundamentally alters the mechanical environment of the knee. This study utilizes a finite element framework to evaluate the biomechanical response of the distal femur in healthy and osteoarthritic conditions across critical functional postures. To isolate the bone’s inherent structural stiffness and avoid numerical artifacts, a free-body computational approach was implemented, omitting external surface fixations. The distal femur was modeled as a linearly elastic domain with material properties representing healthy tissue and OA-induced degradation. Simulations were performed under passive gravitational loading at knee flexion angles of

(This article belongs to the Section Computational Biology)

Open Access Article

Approximate Analytical Solutions of Nonlinear Jerk Equations Using the Parameter Expansion Method

by

Gamal M. Ismail, Galal M. Moatimid and Stylianos V. Kontomaris

Computation 2026, 14(1), 17; https://doi.org/10.3390/computation14010017 - 12 Jan 2026

Abstract

The Parameter Expansion Method (PEM) is employed to study nonlinear Jerk equations, which are often difficult to solve because of their strong nonlinearity. This method provides higher accuracy and broader applicability, enabling analytical insights and closed-form approximations. This study explores the use of Prof. He’s PEM to derive approximate analytical solutions of the nonlinear third-order Jerk equation, a model commonly encountered in the analysis of complex dynamical systems across physics and engineering. Owing to the strong nonlinearity inherent in Jerk equations, exact solutions are often unattainable. The PEM provides a simple, effective framework by expanding the solution with respect to an embedding parameter, allowing accurate approximations without the need for small parameters or linearization. The method’s reliability and precision are validated through comparisons with numerical simulations, demonstrating its practicality and robustness in tackling nonlinear problems. The results indicate that PEM provides highly accurate approximations of the nonlinear Jerk equation, showcasing greater simplicity and efficiency relative to other analytical methods, along with excellent concordance with numerical simulations. Additionally, the approximate solutions obtained via PEM closely mirror numerical results and surpass several contemporary analytical techniques in efficiency and usability. Furthermore, the study indicates that PEM is a straightforward and effective approach to solving the nonlinear Jerk equation. It generates accurate estimates that nearly align with numerical simulations and surpass numerous other analytical methods.

(This article belongs to the Special Issue Nonlinear System Modelling and Control)

Open Access Communication

Linguistic Influence on Multidimensional Word Embeddings: Analysis of Ten Languages

by

Anna V. Aleshina, Andrey L. Bulgakov, Yanliang Xin and Larisa S. Skrebkova

Computation 2026, 14(1), 16; https://doi.org/10.3390/computation14010016 - 9 Jan 2026

Abstract

Understanding how linguistic typology shapes multilingual embeddings is important for cross-lingual NLP. We examine static MUSE word embeddings for ten diverse languages (English, Russian, Chinese, Arabic, Indonesian, German, Lithuanian, Hindi, Tajik and Persian). Using pairwise cosine distances, Random Forest classification, and UMAP visualization, we find that language identity and script type largely determine embedding clusters, with morphological complexity affecting cluster compactness and lexical overlap connecting clusters. The Random Forest model predicts language labels with high accuracy (≈98%), indicating strong language-specific patterns in embedding space. These results highlight script, morphology, and lexicon as key factors influencing multilingual embedding structures, informing linguistically aware design of cross-lingual models.
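
For readers unfamiliar with the pairwise cosine distances named in this abstract, a minimal sketch follows. It only illustrates the metric itself, not the authors' pipeline. The toy vectors and the names `cosine_distance` and `pairwise_cosine_distances` are hypothetical and are not taken from MUSE.

```python
import math


def cosine_distance(u, v):
    """Return 1 - cos(angle between u and v) for two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    if norm_u == 0.0 or norm_v == 0.0:
        raise ValueError("cosine distance is undefined for a zero vector")
    return 1.0 - dot / (norm_u * norm_v)


def pairwise_cosine_distances(vectors):
    """Return a symmetric matrix (list of lists) of cosine distances."""
    n = len(vectors)
    dist = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i + 1, n):
            d = cosine_distance(vectors[i], vectors[j])
            dist[i][j] = dist[j][i] = d
    return dist


# Toy 3-dimensional "embeddings" (hypothetical values, not MUSE vectors).
toy = {
    "en:cat": [1.0, 0.0, 0.0],
    "de:Katze": [1.0, 1.0, 0.0],
    "ru:кот": [0.0, 0.0, 2.0],
}
matrix = pairwise_cosine_distances(list(toy.values()))
```

Because cosine distance ignores vector length, the third toy vector is maximally distant from the first despite its larger norm. Only direction matters.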

(This article belongs to the Special Issue Recent Advances on Computational Linguistics and Natural Language Processing)

Open Access Article

Numerical Simulation of Diffusion in Cylindrical Pores: The Influence of Pore Radius on Particle Capture Kinetics

by

Valeriy E. Arkhincheev, Bair V. Khabituev, Daniil F. Deriugin and Stanislav P. Maltsev

Computation 2026, 14(1), 15; https://doi.org/10.3390/computation14010015 - 8 Jan 2026

Abstract

The diffusion and trapping of particles in complex porous media are fundamental processes in materials science and bioengineering. This study systematically investigates the influence of pore radius on particle capture kinetics within a three-dimensional cylindrical pore containing randomly distributed absorbing traps. Numerical simulations were performed using a random walk algorithm for a wide range of pore radii (from 3a to 81a, where a is the minimal length scale of the problem, in arbitrary units) and trap concentrations M (from 100 to 5090; these bounds are determined by the pore geometry). The particle lifetime (τ), characterizing the capture rate, was calculated and analyzed. Results reveal three distinct capture regimes dependent on trap concentration: a diffusion-limited regime at low concentration (M < 1000), a transition regime at medium concentration (1000 < M < 2000), and a trap-density-dominated saturation regime at high concentration (M > 2000). For each regime, optimal approximating functions for τ(M) were identified. Furthermore, empirical relationships between the approximating coefficients and the pore radius were derived, which enable the prediction of particle lifetimes. The findings demonstrate that while the pore radius significantly impacts capture kinetics at low trap densities, its influence diminishes as trap concentration increases, converging towards a universal behavior dominated by trap density.
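
The kind of simulation this abstract describes can be sketched as a lattice random walk with absorbing traps. Everything below is an assumption made for illustration: the abstract does not specify the lattice, the wall and end boundary conditions, or how traps are placed, and the function name `mean_lifetime` is hypothetical.

```python
import random


def mean_lifetime(radius, length, n_traps, n_walkers=200, max_steps=100_000, seed=0):
    """Estimate the mean number of steps before a walker lands on a trap.

    Assumptions (not taken from the paper): simple cubic lattice with spacing
    a = 1, reflecting pore wall (a rejected move still costs one step),
    periodic boundary along the pore axis, traps placed uniformly at random.
    Walkers that reach max_steps are counted with max_steps.
    """
    rng = random.Random(seed)
    # Lattice sites inside the cylinder x^2 + y^2 <= radius^2, 0 <= z < length.
    disc = [(x, y)
            for x in range(-radius, radius + 1)
            for y in range(-radius, radius + 1)
            if x * x + y * y <= radius * radius]
    sites = [(x, y, z) for (x, y) in disc for z in range(length)]
    if not 0 < n_traps <= len(sites):
        raise ValueError("n_traps must be between 1 and the number of sites")
    traps = set(rng.sample(sites, n_traps))
    moves = [(1, 0, 0), (-1, 0, 0), (0, 1, 0), (0, -1, 0), (0, 0, 1), (0, 0, -1)]

    total = 0
    for _ in range(n_walkers):
        x, y, z = rng.choice(sites)
        steps = 0
        while (x, y, z) not in traps and steps < max_steps:
            dx, dy, dz = rng.choice(moves)
            nx, ny = x + dx, y + dy
            if nx * nx + ny * ny <= radius * radius:  # otherwise stay put
                x, y = nx, ny
            z = (z + dz) % length
            steps += 1
        total += steps
    return total / n_walkers


tau_sparse = mean_lifetime(radius=3, length=20, n_traps=5)
tau_dense = mean_lifetime(radius=3, length=20, n_traps=200)
print(tau_sparse, tau_dense)
```

Lifetimes here are measured in lattice steps. Repeating the call over a grid of radii and trap counts would give the raw data for fitting τ(M) curves of the sort the abstract mentions.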

(This article belongs to the Section Computational Engineering)

Open Access Article

Ab Initio Computational Investigations of Low-Lying Electronic States of Yttrium Lithide and Scandium Lithide

by

Jean Tabet, Nancy Zgheib, Sylvie Magnier and Fadia Taher

Computation 2026, 14(1), 14; https://doi.org/10.3390/computation14010014 - 8 Jan 2026

Abstract

Ab initio studies using CASSCF/MRCI calculations have been performed to investigate the spectroscopic properties of YLi and ScLi molecules. Our calculations cover 25 singlet and triplet states for YLi and 37 electronic states for ScLi. The lowest-lying states, including the $^{1}\Sigma^{+}$ ground state of YLi, have been investigated for the first time. The spin–orbit coupling in YLi has also been assessed from the splitting between Ω components generated from the lowest-lying triplet Λ–S states. Regarding ScLi, the ground state is found to be the (1)$^{3}\Delta$ state. Spectroscopic constants, energy levels at equilibrium, permanent dipole moments, and transition dipole moments have also been calculated. The potential energy curves for all calculated states are plotted out to large internuclear distances. In both ScLi and YLi, the potential energy curves show a small dissociation energy for the lowest states (1)$^{1,3}\Delta$, (1)$^{1,3}\Pi$ and (1)$^{1,3}\Sigma^{+}$.

(This article belongs to the Special Issue Feature Papers in Computational Chemistry)

Open Access Article

Numerical Error Analysis of the Poisson Equation Under RHS Inaccuracies in Particle-in-Cell Simulations

by

Kai Zhang, Tao Xiao, Weizong Wang and Bijiao He

Computation 2026, 14(1), 13; https://doi.org/10.3390/computation14010013 - 7 Jan 2026

Abstract

Particle-in-Cell (PIC) simulations require accurate solutions of the electrostatic Poisson equation, yet accuracy often degrades near irregular Dirichlet boundaries on Cartesian meshes. While prior work has focused on left-hand-side (LHS) discretization errors, the impact of right-hand-side (RHS) inaccuracies arising from charge deposition near boundaries remains largely unexplored. This study analyzes numerical errors induced by underestimated RHS values at near-boundary nodes when using embedded finite difference schemes with linear and quadratic boundary treatments. Analytical results in one dimension and truncation error analyses in two dimensions show that RHS inaccuracies affect the two schemes in fundamentally different ways: They reduce boundary-induced errors in the linear scheme but introduce zeroth-order truncation errors in the quadratic scheme, leading to larger global errors. Numerical experiments in one, two, and three dimensions confirm these predictions. In two-dimensional tests, RHS inaccuracies reduce the

(This article belongs to the Section Computational Engineering)

Open Access Article

A Physics-Informed Neural Network Aided Venturi–Microwave Co-Sensing Method for Three-Phase Metering

by

Jinhua Tan, Yuxiao Yuan, Ying Xu, Jingya Wang, Zirui Song, Rongji Zuo, Zhengyang Chen and Chao Yuan

Computation 2026, 14(1), 12; https://doi.org/10.3390/computation14010012 - 5 Jan 2026

Abstract

Addressing the challenges of online measurement of oil-gas-water three-phase flow under high gas–liquid ratio (GVF > 90%) conditions (fire-driven mining, gas injection mining, natural gas mining), which rely heavily on radioactive sources, this study proposes an integrated, radiation-source-free three-phase measurement scheme utilizing a “Venturi tube-microwave resonator”. Additionally, a physics-informed neural network (PINN) is introduced to predict the volumetric flow rate of oil-gas-water three-phase flow. Methodologically, the main features are the Venturi differential pressure signal (

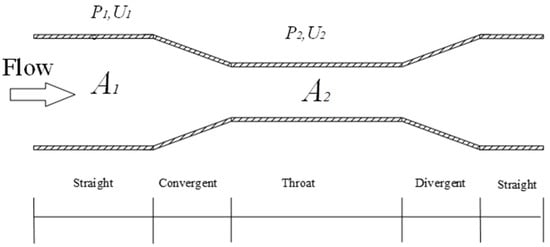

Figure 1

Open Access Article

A Hybrid Gradient-Based Optimiser for Solving Complex Engineering Design Problems

by

Jamal Zraqou, Riyad Alrousan, Zaid Khrisat, Faten Hamad, Niveen Halalsheh and Hussam Fakhouri

Computation 2026, 14(1), 11; https://doi.org/10.3390/computation14010011 - 4 Jan 2026

Abstract

This paper proposes JADEGBO, a hybrid gradient-based metaheuristic for solving complex single- and multi-constraint engineering design problems as well as cost-sensitive security optimisation tasks. The method combines Adaptive Differential Evolution with Optional External Archive (JADE), which provides self-adaptive exploration through p-best mutation, an external archive, and success-based parameter learning, with the Gradient-Based Optimiser (GBO), which contributes Newton-inspired gradient search rules and a local escaping operator. In the proposed scheme, JADE is first employed to discover promising regions of the search space, after which GBO performs an intensified local refinement of the best individuals inherited from JADE. The performance of JADEGBO is assessed on the CEC2017 single-objective benchmark suite and compared against a broad set of classical and recent metaheuristics. Statistical indicators, convergence curves, box plots, histograms, sensitivity analyses, and scatter plots show that the hybrid typically attains the best or near-best mean fitness, exhibits low run-to-run variance, and maintains a favourable balance between exploration and exploitation across rotated, shifted, and composite landscapes. To demonstrate practical relevance, JADEGBO is further applied to the following four well-known constrained engineering design problems: welded beam, pressure vessel, speed reducer, and three-bar truss design. The algorithm consistently produces feasible high-quality designs and closely matches or improves upon the best reported results while keeping computation time competitive.
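
The p-best mutation with an external archive that this abstract attributes to JADE can be sketched as follows. This shows only the standard DE/current-to-pbest/1 rule from the JADE literature. How JADEGBO hands individuals over to GBO is not described here and is not modeled. The function name, argument names, and default values are hypothetical.

```python
import random


def current_to_pbest_mutation(pop, fitness, i, F, p=0.1, archive=(), rng=random):
    """Build a DE/current-to-pbest/1 mutant for individual i (minimization).

    v = x_i + F * (x_pbest - x_i) + F * (x_r1 - x_r2), where x_pbest is drawn
    from the best 100p% of the population, x_r1 from the population, and
    x_r2 from the union of the population and the external archive.
    Requires at least three individuals (or a non-empty archive).
    """
    n = len(pop)
    n_best = max(1, round(p * n))
    ranked = sorted(range(n), key=lambda k: fitness[k])
    pbest = pop[rng.choice(ranked[:n_best])]
    r1 = rng.choice([k for k in range(n) if k != i])
    # Candidates for x_r2: population members other than i and r1, plus archive.
    pool = [pop[k] for k in range(n) if k not in (i, r1)] + list(archive)
    x_r2 = rng.choice(pool)
    x_i, x_r1 = pop[i], pop[r1]
    return [xi + F * (pb - xi) + F * (a - b)
            for xi, pb, a, b in zip(x_i, pbest, x_r1, x_r2)]


# Tiny hypothetical population on a 2-D problem; lower fitness is better.
population = [[0.0, 0.0], [1.0, 1.0], [2.0, 2.0], [3.0, 3.0]]
scores = [0.0, 1.0, 2.0, 3.0]
mutant = current_to_pbest_mutation(population, scores, i=2, F=0.5,
                                   rng=random.Random(1))
```

Pulling each individual toward a randomly chosen top performer, rather than the single best, is what gives this rule its balance between convergence speed and diversity. The archive of replaced parents adds further diversity to the difference term.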

(This article belongs to the Section Computational Engineering)

Open Access Article

Do LLMs Speak BPMN? An Evaluation of Their Process Modeling Capabilities Based on Quality Measures

by

Panagiotis Drakopoulos, Panagiotis Malousoudis, Nikolaos Nousias, George Tsakalidis and Kostas Vergidis

Computation 2026, 14(1), 10; https://doi.org/10.3390/computation14010010 - 4 Jan 2026

Abstract

Large Language Models (LLMs) are emerging as powerful tools for automating business process modeling, promising to streamline the translation of textual process descriptions into Business Process Model and Notation (BPMN) diagrams. However, the extent to which these AI systems can produce high-quality BPMN models has not yet been rigorously evaluated. This paper presents an early evaluation of five LLM-powered BPMN generation tools that automatically convert textual process descriptions into BPMN models. To assess the external quality of these AI-generated models, we introduce a novel structured evaluation framework that scores each BPMN diagram across three key process model quality dimensions: clarity, correctness, and completeness, covering both accuracy and diagram understandability. Using this framework, we conducted experiments where each tool was tasked with modeling the same set of textual process scenarios, and the resulting diagrams were systematically scored based on the criteria. This approach provides a consistent and repeatable evaluation procedure and offers a new lens for comparing LLM-based modeling capabilities. Given the focused scope of the study, the results should be interpreted as an exploratory benchmark that surfaces initial observations about tool performance rather than definitive conclusions. Our findings reveal that while current LLM-based tools can produce BPMN diagrams that capture the main elements of a process description, they often exhibit errors such as missing steps, inconsistent logic, or modeling rule violations, highlighting limitations in achieving fully correct and complete models. The clarity and readability of the generated diagrams also vary, indicating that these AI models are still maturing in generating easily interpretable process flows. We conclude that although LLMs show promise in automating BPMN modeling, significant improvements are needed for them to consistently generate both syntactically and semantically valid process models.

(This article belongs to the Special Issue Applied Large Language Models for Science, Engineering, and Mathematics: Reasoning, Reliability and Efficient Systems)

Open Access Communication

A Consumer Digital Twin for Energy Demand Prediction: Development and Implementation Under the SENDER Project (HORIZON 2020)

by

Dimitra Douvi, Eleni Douvi, Jason Tsahalis and Haralabos-Theodoros Tsahalis

Computation 2026, 14(1), 9; https://doi.org/10.3390/computation14010009 - 3 Jan 2026

Abstract

This paper presents the development and implementation of a consumer Digital Twin (DT) for energy demand prediction under the SENDER (Sustainable Consumer Engagement and Demand Response) project, funded by HORIZON 2020. This project aims to engage consumers in the energy sector with innovative energy service applications to achieve proactive Demand Response (DR) and optimized usage of Renewable Energy Sources (RES). The proposed DT model is designed to digitally represent occupant behaviors and energy consumption patterns using Artificial Neural Networks (ANN), which enable continuous learning by processing real-time and historical data in different pilot sites and seasons. The DT development incorporates the International Energy Agency (IEA)—Energy in Buildings and Communities (EBC) Annex 66 and Drivers-Needs-Actions-Systems (DNAS) framework to standardize occupant behavior modeling. The research methodology consists of the following steps: (i) a mock-up simulation environment for three pilot sites was created, (ii) the DT was trained and calibrated using the artificial data from the previous step, and (iii) the DT model was validated with real data from the Alginet pilot site in Spain. Results showed a strong correlation between DT predictions and mock-up data, with a maximum deviation of ±2%. Finally, a set of selected Key Performance Indicators (KPIs) was defined and categorized in order to evaluate the system’s technical effectiveness.

(This article belongs to the Special Issue Experiments/Process/System Modeling/Simulation/Optimization (IC-EPSMSO 2025))

Open Access Article

Attention Bidirectional Recurrent Neural Zero-Shot Semantic Classifier for Emotional Footprint Identification

by

Karthikeyan Jagadeesan and Annapurani Kumarappan

Computation 2026, 14(1), 8; https://doi.org/10.3390/computation14010008 - 2 Jan 2026

Abstract

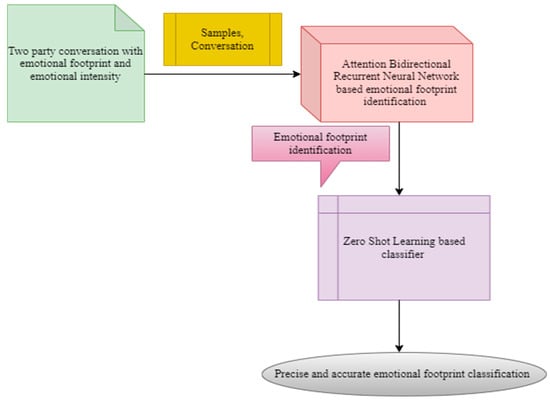

Exploring emotions in organization settings, particularly in feedback on organizational welfare programs, is critical for understanding employee experiences and enhancing organizational policies. Recognizing emotions from a conversation (i.e., leaving an emotional footprint) is a predominant task for a machine to comprehend the full

[...] Read more.

Exploring emotions in organizational settings, particularly in feedback on organizational welfare programs, is critical for understanding employee experiences and enhancing organizational policies. Recognizing emotions from a conversation (i.e., leaving an emotional footprint) is a predominant task for a machine to comprehend the full context of the conversation. While fine-tuning of pre-trained models has invariably provided state-of-the-art results in emotional footprint recognition tasks, the prospect of a zero-shot learned model in this sphere is, on the whole, unexplored. The objective here is to identify the emotional footprint of the members participating in a conversation, after the conversation is over, with improved accuracy and training time and a minimal error rate. To address these gaps, this work proposes a method called Attention Bidirectional Recurrent Neural Zero-Shot Semantic Classifier (ABRN-ZSSC) for emotional footprint identification. The ABRN-ZSSC method is split into two stages. First, the raw data from a Two-Party Conversation with Emotional Footprint and Emotional Intensity are subjected to the Attention Bidirectional Recurrent Neural Network model with the intent of identifying the emotional footprint of each party near the conclusion of the conversation. Second, with the identified emotional footprint in a conversation, the Zero-Shot Learning-based classifier is applied to train and classify emotions both accurately and precisely. We verify the utility of these approaches (i.e., emotional footprint identification and classification) by performing an extensive experimental evaluation on two corpora on four aspects: training time, accuracy, precision, and error rate for varying samples. Experimental results demonstrate that the ABRN-ZSSC method outperforms two existing baseline models in emotion inference tasks across the dataset. The proposed ABRN-ZSSC method obtains superior performance, with improvements of 10% in precision, 17% in accuracy and 8% in recall, as well as 19% in training time and 18% in error rate, compared to the conventional methods.

(This article belongs to the Section Computational Social Science)

Open Access Article

Multiphysics Modelling and Experimental Validation of Road Tanker Dynamics: Stress Analysis and Material Characterization

by

Conor Robb, Gasser Abdelal, Pearse McKeefry and Conor Quinn

Computation 2026, 14(1), 7; https://doi.org/10.3390/computation14010007 - 2 Jan 2026

Abstract

Crossland Tankers is a leading manufacturer of bulk-load road tankers in Northern Ireland. These tankers transport up to forty thousand litres of liquid over long distances across diverse road conditions. Liquid sloshing within the tank has a significant impact on driveability and the

[...] Read more.

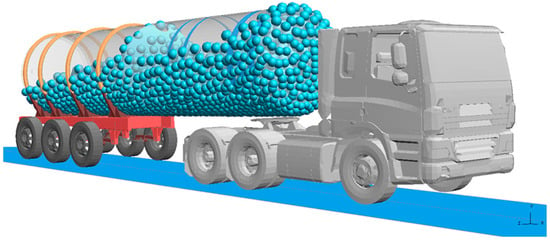

Crossland Tankers is a leading manufacturer of bulk-load road tankers in Northern Ireland. These tankers transport up to forty thousand litres of liquid over long distances across diverse road conditions. Liquid sloshing within the tank has a significant impact on driveability and the tanker’s lifespan. This study introduces a novel multiphysics model combining Smoothed Particle Hydrodynamics (SPH) and Finite Element Analysis (FEA) to simulate fluid–structure interactions in a full-scale road tanker, validated with real-world road test data. The model reveals high-stress zones under braking and turning, with peak stresses at critical chassis locations, offering design insights for weight reduction and enhanced safety. Results demonstrate the approach’s effectiveness in optimising tanker design, reducing prototyping costs, and improving longevity, providing a valuable computational tool for industry applications.

(This article belongs to the Section Computational Engineering)

Open Access Review

Advances in Single-Cell Sequencing for Understanding and Treating Kidney Disease

by

Jose L. Agraz, Amit Verma and Claudia M. Agraz

Computation 2026, 14(1), 6; https://doi.org/10.3390/computation14010006 - 2 Jan 2026

Abstract

The fields of medical diagnostics, nephrology, and the sequencing of cellular genetic material are pivotal for precise quantification of kidney diseases. Single-cell sequencing, enhanced by automation and software tools, enables efficient examination of biopsies at the individual cell level. This approach shows the

[...] Read more.

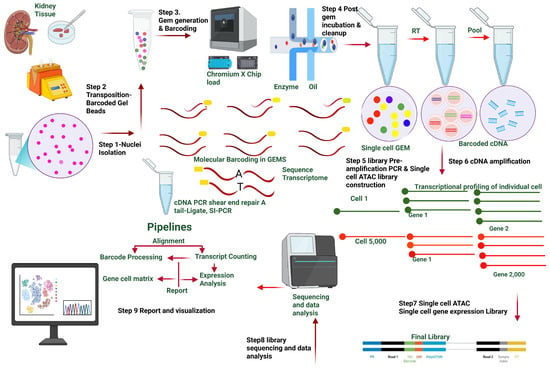

The fields of medical diagnostics, nephrology, and the sequencing of cellular genetic material are pivotal for precise quantification of kidney diseases. Single-cell sequencing, enhanced by automation and software tools, enables efficient examination of biopsies at the individual cell level. This approach shows the complex cellular mosaic that shapes organ function. By quantifying gene expression following injury, single-cell analysis provides insight into disease progression. In this review, new developments in single-cell analysis methods, spatial integration of single-cell analysis, single-nucleus RNA sequencing, and emerging methods, including expression quantitative trait loci, whole-genome sequencing, and whole-exome sequencing in nephrology, are discussed. These advancements are poised to enhance kidney disease diagnostic processes, therapeutic strategies, and patient prognosis.

(This article belongs to the Special Issue Integrative Computational Methods for Second-and Third-Generation Sequencing Data)

Open Access Article

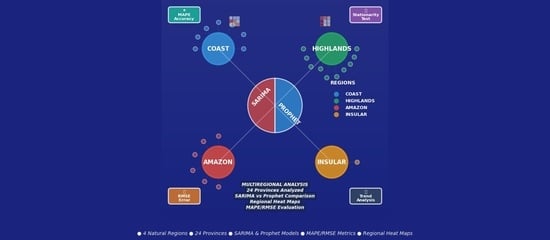

SARIMA vs. Prophet: Comparative Efficacy in Forecasting Traffic Accidents Across Ecuadorian Provinces

by

Wilson Chango, Ana Salguero, Tatiana Landivar, Roberto Vásconez, Geovanny Silva, Pedro Peñafiel-Arcos, Lucía Núñez and Homero Velasteguí-Izurieta

Computation 2026, 14(1), 5; https://doi.org/10.3390/computation14010005 - 31 Dec 2025

Abstract

This study aimed to evaluate the comparative predictive efficacy of the SARIMA statistical model and the Prophet machine learning model for forecasting monthly traffic accidents across the 24 provinces of Ecuador, addressing a critical research gap in model selection for geographically and socioeconomically heterogeneous regions. By integrating classical time series modeling with algorithmic decomposition techniques, the research sought to determine whether a universally superior model exists or if predictive performance is inherently context-dependent. Monthly accident data from January 2013 to June 2025 were analyzed using a rolling-window evaluation framework. Model accuracy was assessed through Mean Absolute Percentage Error (MAPE) and Root Mean Square Error (RMSE) metrics to ensure consistency and comparability across provinces. The results revealed a global tie, with 12 provinces favoring SARIMA and 12 favoring Prophet, indicating the absence of a single dominant model. However, regional patterns of superiority emerged: Prophet achieved exceptional precision in coastal and urban provinces with stationary and high-volume time series—such as Guayas, which recorded the lowest MAPE (4.91%)—while SARIMA outperformed Prophet in the Andean highlands, particularly in non-stationary, medium-to-high-volume provinces such as Tungurahua (MAPE 6.07%) and Pichincha (MAPE 13.38%). Computational instability in MAPE was noted for provinces with extremely low accident counts (e.g., Galápagos, Carchi), though RMSE values remained low, indicating a metric rather than model limitation. Overall, the findings invalidate the notion of a universally optimal model and underscore the necessity of adopting adaptive, region-specific modeling frameworks that account for local geographic, demographic, and structural factors in predictive road safety analytics.
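The remark about MAPE instability at very low accident counts can be illustrated with a minimal Python sketch. The two metric functions follow the standard definitions of MAPE and RMSE; the monthly counts are hypothetical values invented for illustration and are not taken from the study, and the function names `mape` and `rmse` are likewise assumptions.

```python
import math


def mape(actual, forecast):
    """Mean Absolute Percentage Error, in percent (standard definition)."""
    if any(a == 0 for a in actual):
        # The percentage error divides by the actual value, so it is undefined at zero.
        raise ValueError("MAPE is undefined when an actual value is zero")
    return 100.0 * sum(abs((a - f) / a) for a, f in zip(actual, forecast)) / len(actual)


def rmse(actual, forecast):
    """Root Mean Square Error, in the same units as the data."""
    return math.sqrt(sum((a - f) ** 2 for a, f in zip(actual, forecast)) / len(actual))


# Hypothetical monthly accident counts (illustrative only, not from the paper).
high_actual, high_forecast = [400, 420, 390, 410], [380, 430, 400, 400]
low_actual, low_forecast = [1, 2, 1, 3], [2, 1, 2, 2]

for label, a, f in [("high volume", high_actual, high_forecast),
                    ("low volume", low_actual, low_forecast)]:
    print(f"{label}: MAPE = {mape(a, f):.1f}%, RMSE = {rmse(a, f):.2f}")
```

In this toy case every low-volume forecast misses by a single accident, so the RMSE is small, yet each miss is a large fraction of a tiny actual value and the MAPE is inflated. This is consistent with reading the instability as a property of the metric rather than of the model.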

(This article belongs to the Topic Intelligent Optimization Algorithm: Theory and Applications)

Open Access Article

Experimental and Numerical Investigation of Hydrodynamic Characteristics of Aquaculture Nets: The Critical Role of Solidity Ratio in Biofouling Assessment

by

Wei Liu, Lei Wang, Yongli Liu, Yuyan Li, Guangrui Qi and Dawen Mao

Computation 2026, 14(1), 4; https://doi.org/10.3390/computation14010004 - 30 Dec 2025

Abstract

Biofouling on aquaculture netting increases hydrodynamic drag and restricts water exchange across net cages. The solidity ratio is introduced as a quantitative parameter to characterize fouling severity. Towing tank experiments and computational fluid dynamics (CFD) simulations were used to assess the hydrodynamic behavior of netting under different fouling conditions. Experimental results indicated a nonlinear increase in drag force with increasing solidity. At a flow velocity of 0.90 m/s, the drag force increased by 112.2%, 195.1%, and 295.7% for netting with solidity ratios of 0.445, 0.733, and 0.787, respectively, compared to clean netting (Sn = 0.211). The drag coefficient remained stable within 1.445–1.573 across Reynolds numbers (Re) of 995–2189. Numerical simulations showed how the flow field around the netting evolves, including jet formation in mesh openings and reverse-flow regions and vortex structures behind knots. Under high solidity (Sn = 0.733–0.787), complex wake patterns such as dual-peak vortex streets appeared. Overall, the study confirms that the solidity ratio is an effective comprehensive parameter for evaluating biofouling effects, providing a theoretical basis for antifouling design and cleaning strategy development for aquaculture cages.
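To make the reported percentages easier to read, the short Python sketch below converts each drag increase into a multiple of the clean-net drag and evaluates the standard drag equation F = 0.5 ρ C_d A U². The percentages, solidity ratios, and flow velocity come from the abstract; the water density, the reference area, the chosen drag coefficient of 1.5 (a value inside the reported range), and the function names are assumptions for illustration. The abstract does not state which reference area the authors used for C_d.

```python
def drag_force(cd, rho, area, velocity):
    """Standard drag equation: F = 0.5 * rho * Cd * A * U**2, in newtons."""
    return 0.5 * rho * cd * area * velocity ** 2


def ratio_from_percent_increase(percent):
    """Turn 'increased by X%' into a multiple of the baseline value."""
    return 1.0 + percent / 100.0


# Solidity ratio -> drag increase relative to clean netting (values from the abstract).
reported_increase = {0.445: 112.2, 0.733: 195.1, 0.787: 295.7}

for sn, pct in reported_increase.items():
    print(f"Sn = {sn}: drag is {ratio_from_percent_increase(pct):.3f} x clean-net drag")

# Hypothetical inputs: fresh water (1000 kg/m^3) and a 1 m^2 reference area.
example = drag_force(cd=1.5, rho=1000.0, area=1.0, velocity=0.90)
print(f"Example drag force: {example:.1f} N")
```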

Open Access Article

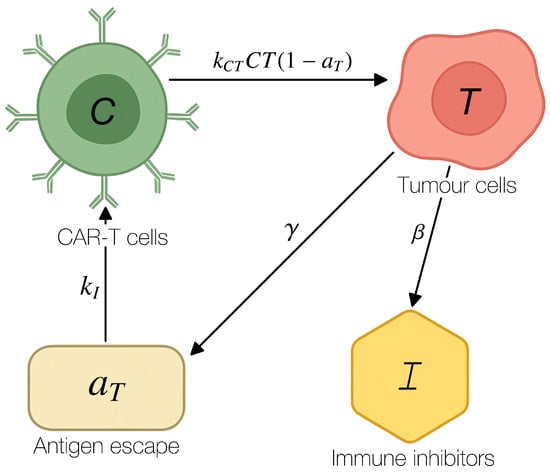

Reaction-Diffusion Model of CAR-T Cell Therapy in Solid Tumours with Antigen Escape

by

Maxim V. Polyakov and Elena I. Tuchina

Computation 2026, 14(1), 3; https://doi.org/10.3390/computation14010003 - 30 Dec 2025

Abstract

Developing effective CAR-T cell therapy for solid tumours remains challenging because of biological barriers such as antigen escape and an immunosuppressive microenvironment. The aim of this study is to develop a mathematical model of the spatio-temporal dynamics of tumour processes in order to assess key factors that limit treatment efficacy. We propose a reaction–diffusion model described by a system of partial differential equations for the densities of tumour cells and CAR-T cells, the concentration of immune inhibitors, and the degree of antigen escape. The methods of investigation include stability analysis and numerical solution of the model using a finite-difference scheme. The simulations show that antigen escape produces a resistant tumour core and relapse after an initial regression; increasing the escape rate from

(This article belongs to the Section Computational Biology)
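As an illustration of the kind of finite-difference scheme the abstract above mentions, the following minimal Python sketch advances a single one-dimensional reaction-diffusion equation, u_t = D u_xx + r u (1 - u), with an explicit forward-time, centred-space step and zero-flux boundaries. It is not the authors' model, which couples tumour cells, CAR-T cells, immune inhibitors, and antigen escape. The single logistic equation, all parameter values, and the names `step` and `simulate` are assumptions chosen for illustration.

```python
def step(u, D, r, dx, dt):
    """One explicit step of u_t = D*u_xx + r*u*(1 - u) with zero-flux boundaries."""
    n = len(u)
    new = [0.0] * n
    for i in range(n):
        # Mirror the neighbour at each end so the boundary gradient is zero.
        left = u[i - 1] if i > 0 else u[1]
        right = u[i + 1] if i < n - 1 else u[n - 2]
        laplacian = (left - 2.0 * u[i] + right) / dx ** 2
        new[i] = u[i] + dt * (D * laplacian + r * u[i] * (1.0 - u[i]))
    return new


def simulate(n=51, D=0.01, r=1.0, dx=0.1, dt=0.1, steps=200):
    """Grow a small central seed; all parameter values are hypothetical."""
    if dt > dx * dx / (2.0 * D):
        # Stability limit of the explicit scheme for the diffusion term.
        raise ValueError("time step too large for the explicit scheme")
    u = [0.0] * n
    u[n // 2] = 0.5  # initial density concentrated at the centre
    for _ in range(steps):
        u = step(u, D, r, dx, dt)
    return u


if __name__ == "__main__":
    profile = simulate()
    print("centre density:", round(profile[len(profile) // 2], 3))
    print("edge density:", round(profile[0], 3))
```

The explicit scheme is chosen here only because it is short; an implicit or semi-implicit scheme would relax the time-step restriction at the cost of solving a linear system per step.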

Topics

Topic in

AppliedMath, Axioms, Computation, Mathematics, Symmetry

A Real-World Application of Chaos Theory

Topic Editors: Adil Jhangeer, Mudassar Imran

Deadline: 28 February 2026

Topic in

Axioms, Computation, Fractal Fract, Mathematics, Symmetry

Fractional Calculus: Theory and Applications, 2nd Edition

Topic Editors: António Lopes, Liping Chen, Sergio Adriani David, Alireza Alfi

Deadline: 30 May 2026

Topic in

Brain Sciences, NeuroSci, Applied Sciences, Mathematics, Computation

The Computational Brain

Topic Editors: William Winlow, Andrew Johnson

Deadline: 31 July 2026

Topic in

Sustainability, Remote Sensing, Forests, Applied Sciences, Computation

Artificial Intelligence, Remote Sensing and Digital Twin Driving Innovation in Sustainable Natural Resources and Ecology

Topic Editors: Huaiqing Zhang, Ting Yun

Deadline: 31 January 2027

Special Issues

Special Issue in

Computation

Computational Social Science and Complex Systems—2nd Edition

Guest Editors: Minzhang Zheng, Pedro Manrique

Deadline: 31 January 2026

Special Issue in

Computation

Integrated Computer Technologies in Mechanical Engineering—Synergetic Engineering IV

Guest Editors: Oleksii Lytvynov, Volodymyr Pavlikov, Dmytro Krytskyi

Deadline: 31 January 2026

Special Issue in

Computation

Evolutionary Computation for Smart Grid and Energy Systems

Guest Editors: Jesús María López-Lezama, Oscar Danilo Montoya

Deadline: 31 January 2026

Special Issue in

Computation

Applications of Machine Learning and Data Science Methods in Social Sciences

Guest Editors: Zhiyong Zhang, Xin (Cynthia) Tong, Jiashang Tang

Deadline: 1 February 2026