PL-VIO: Tightly-Coupled Monocular Visual–Inertial Odometry Using Point and Line Features

Abstract

1. Introduction

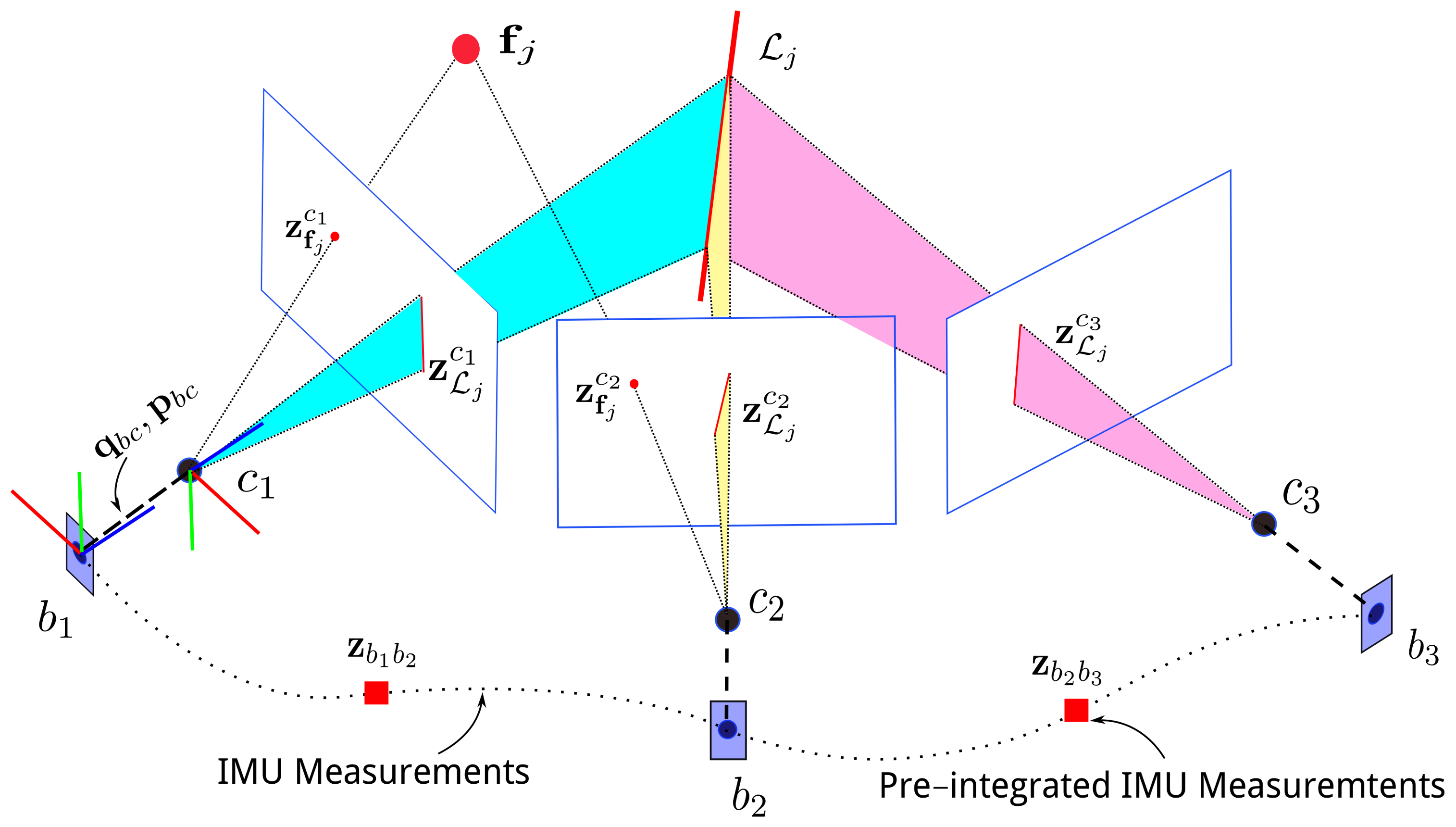

- To the best of our knowledge, the proposed PL-VIO is the first optimization-based monocular VIO system using both points and lines as landmarks.

- To fuse the information from visual and inertial sensors tightly and efficiently, we introduce a sliding window model with IMU pre-integration constraints and point/line features. To represent a 3D spatial line compactly in the optimization, we employ the orthonormal representation of a line. We derive the Jacobian matrices of all error terms with respect to the IMU body states so that the sliding window optimization can be solved efficiently.
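To make the compact line parameterization mentioned above concrete, the following is a minimal sketch of converting a line from Plücker coordinates to the orthonormal representation and back, following the standard formulation of Bartoli and Sturm. It is an illustration under stated assumptions, not the authors' implementation: the function names are hypothetical, the Plücker line is written as (n, d) with n the moment (plane normal) and d the direction, and lines passing through the origin (n = 0) are simply rejected.

```python
import numpy as np


def plucker_from_points(p1, p2):
    """Build Plücker coordinates (n, d) of the line through two 3D points.

    Hypothetical helper for illustration: d is the line direction and
    n = p1 x p2 is the moment vector, so n is orthogonal to d.
    """
    p1 = np.asarray(p1, dtype=float)
    p2 = np.asarray(p2, dtype=float)
    return np.cross(p1, p2), p2 - p1


def plucker_to_orthonormal(n, d):
    """Return (U, W) with U in SO(3) and W in SO(2).

    Assumes n and d are orthogonal and both non-zero; the degenerate
    case of a line through the origin is not handled in this sketch.
    """
    n_norm = np.linalg.norm(n)
    d_norm = np.linalg.norm(d)
    if n_norm < 1e-12 or d_norm < 1e-12:
        raise ValueError("degenerate line: n and d must be non-zero")
    u1 = n / n_norm
    u2 = d / d_norm
    u3 = np.cross(u1, u2)  # unit length because u1 is orthogonal to u2
    U = np.column_stack([u1, u2, u3])
    s = np.hypot(n_norm, d_norm)
    W = np.array([[n_norm, -d_norm], [d_norm, n_norm]]) / s
    return U, W


def orthonormal_to_plucker(U, W):
    """Recover Plücker coordinates, normalized so that |n|^2 + |d|^2 = 1."""
    w1, w2 = W[0, 0], W[1, 0]
    return w1 * U[:, 0], w2 * U[:, 1]


# Example: the line through (1, 0, 1) and (1, 1, 1).
n, d = plucker_from_points([1.0, 0.0, 1.0], [1.0, 1.0, 1.0])
U, W = plucker_to_orthonormal(n, d)
n_rec, d_rec = orthonormal_to_plucker(U, W)
```

Plücker coordinates use six numbers for a line that has only four degrees of freedom. In the (U, W) form, U contributes three rotation parameters and W one, so an optimizer can update the line with a minimal four-parameter increment. The recovered coordinates equal the original ones up to a common scale factor, which does not change the line.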

2. Mathematical Formulation

2.1. Notations

2.2. IMU Pre-Integration

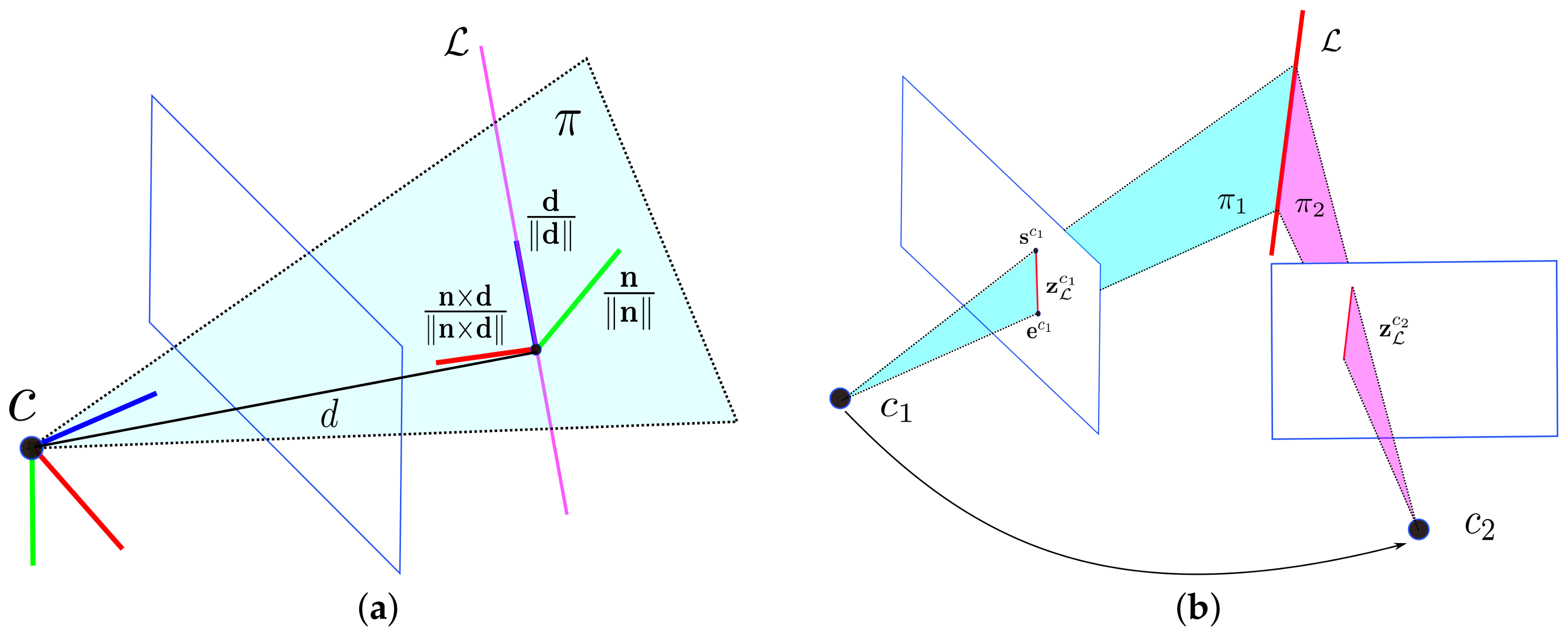

2.3. Geometric Representation of Line

2.3.1. Plücker Line Coordinates

2.3.2. Orthonormal Representation

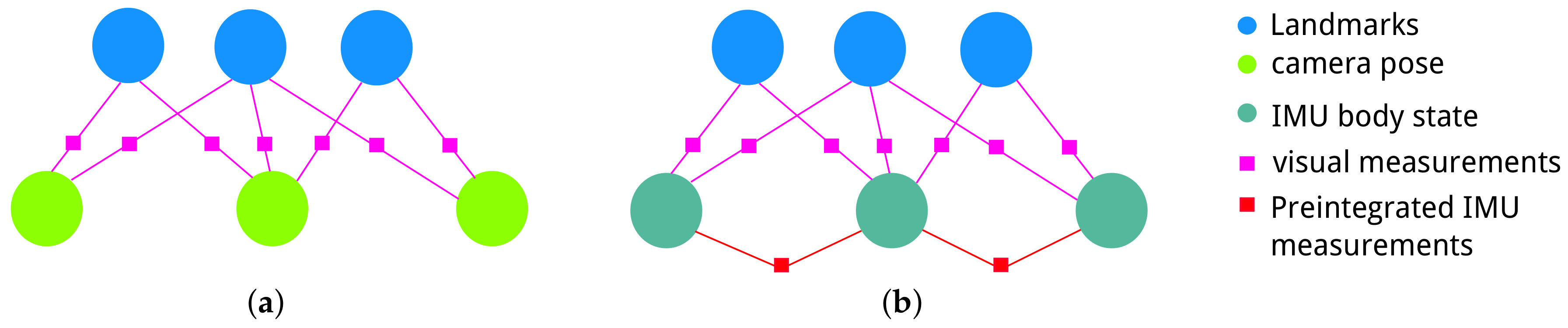

3. Tightly-Coupled Visual–Inertial Fusion

3.1. Sliding Window Formulation

3.2. IMU Measurement Model

3.3. Point Feature Measurement Model

3.4. Line Feature Measurement Model

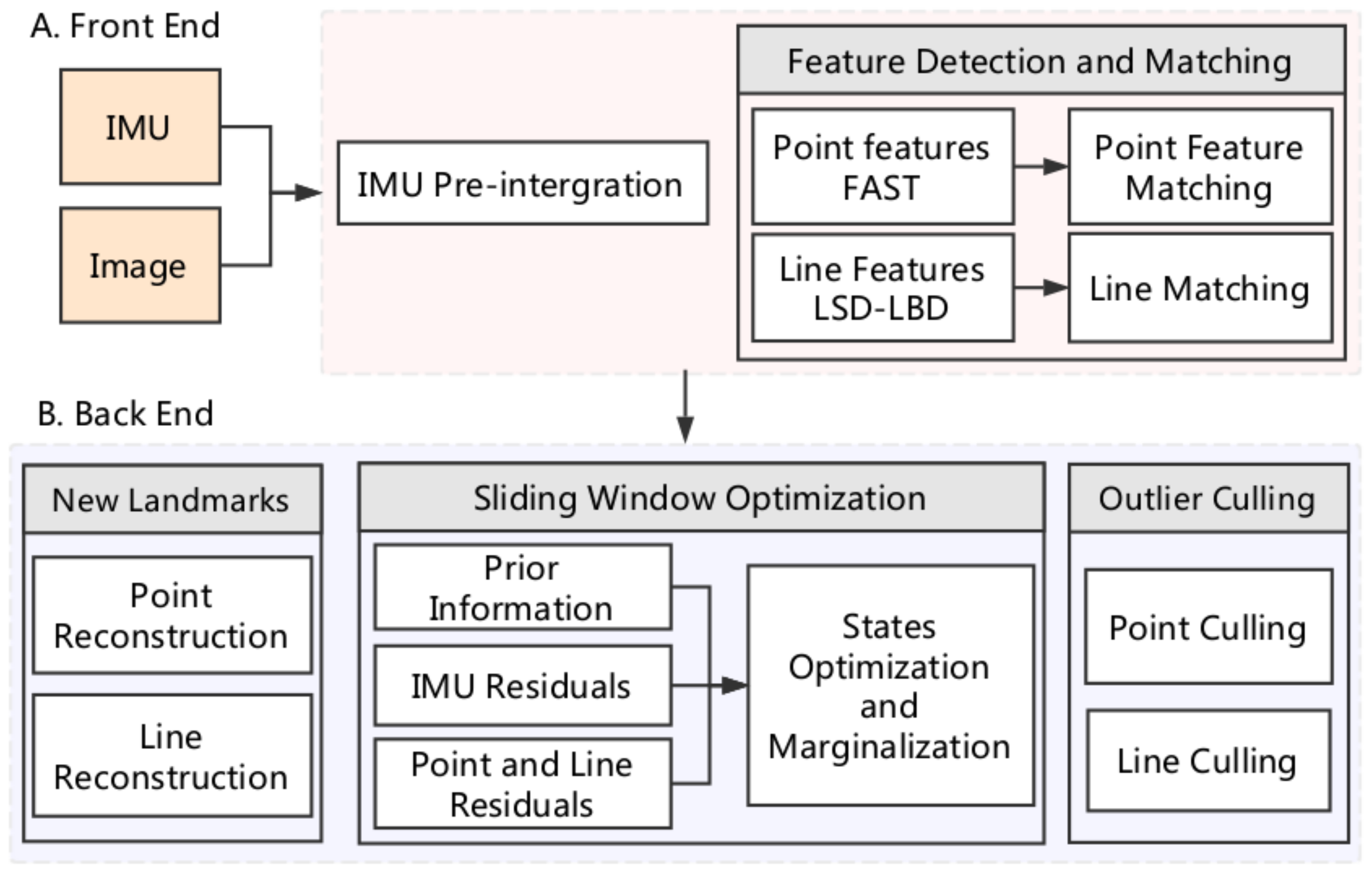

4. Monocular Visual Inertial Odometry with Point and Line Features

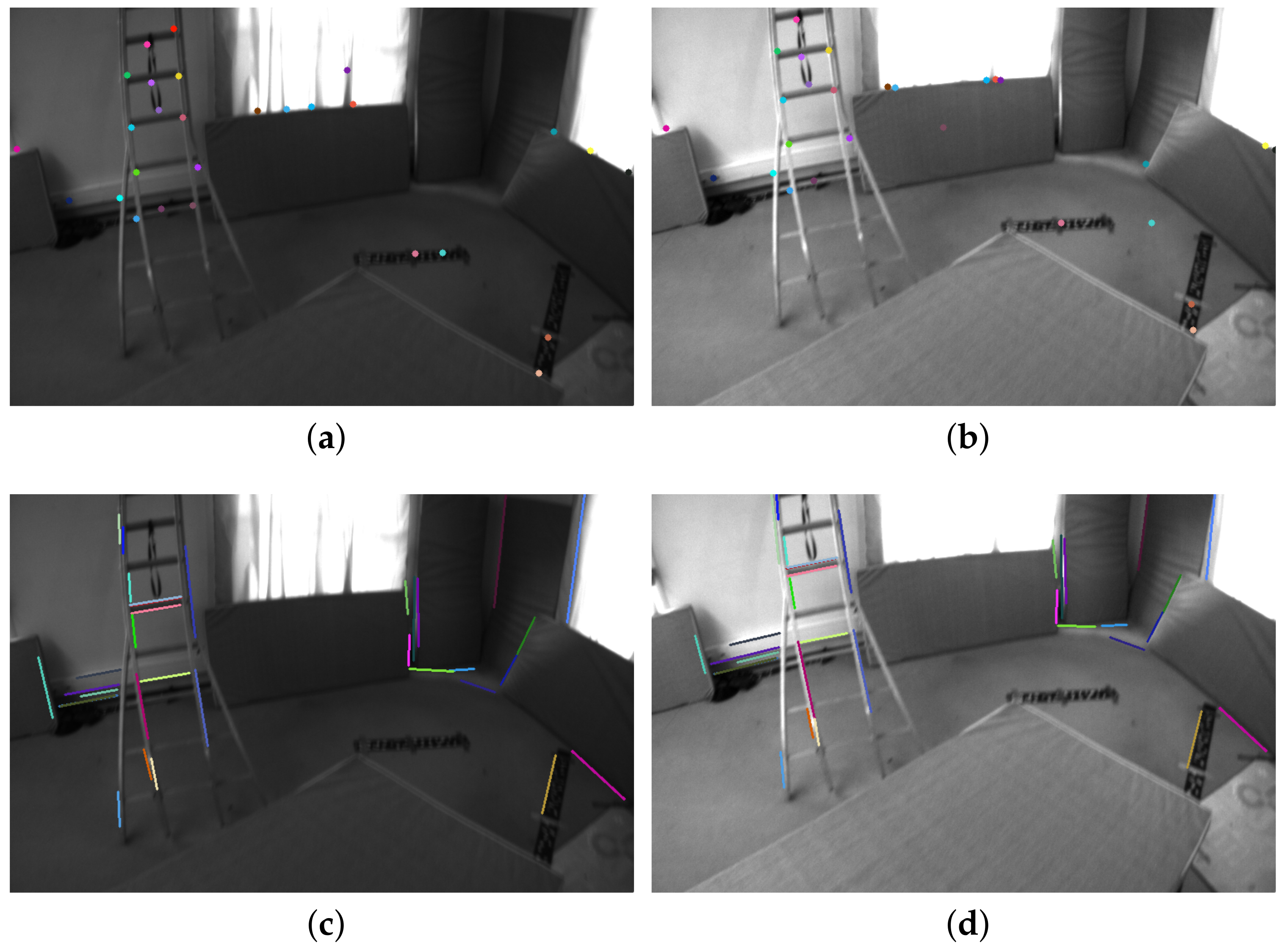

4.1. Front End

4.2. Back End

4.3. Implementation Details

5. Experimental Results

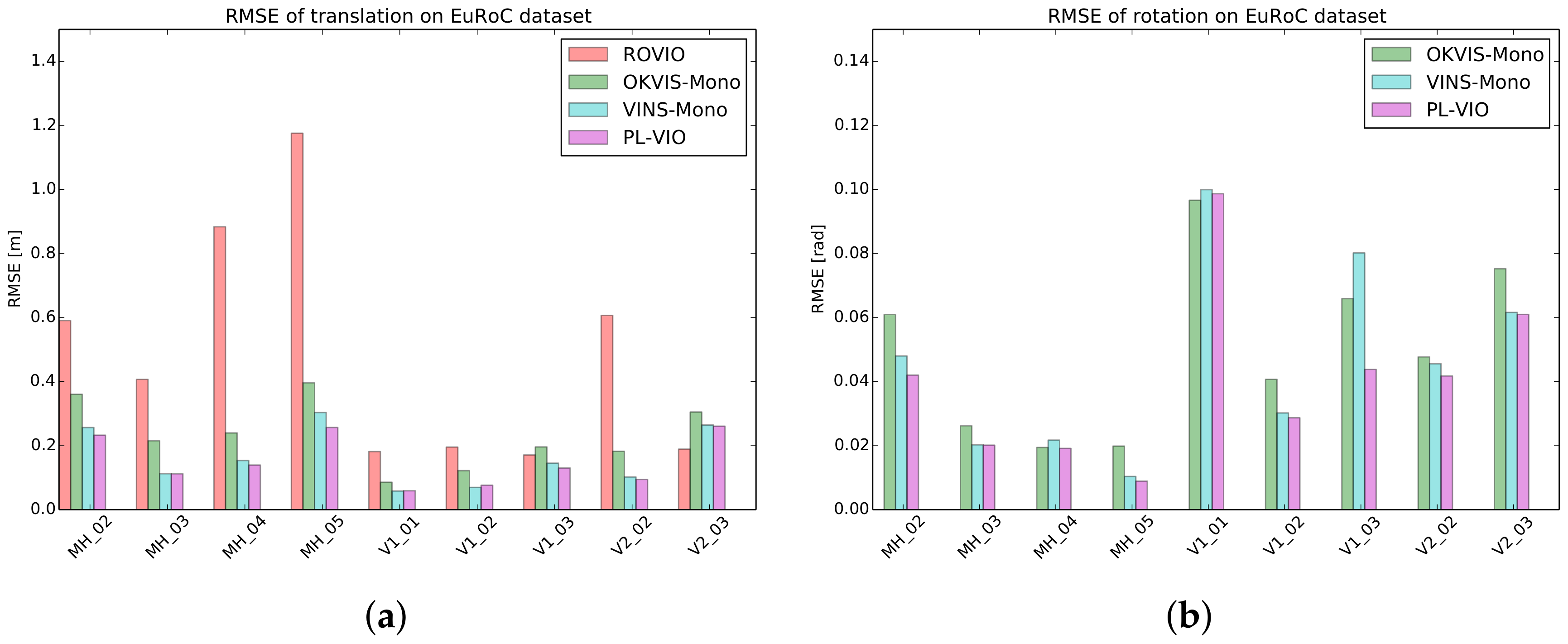

5.1. EuRoC MAV Dataset

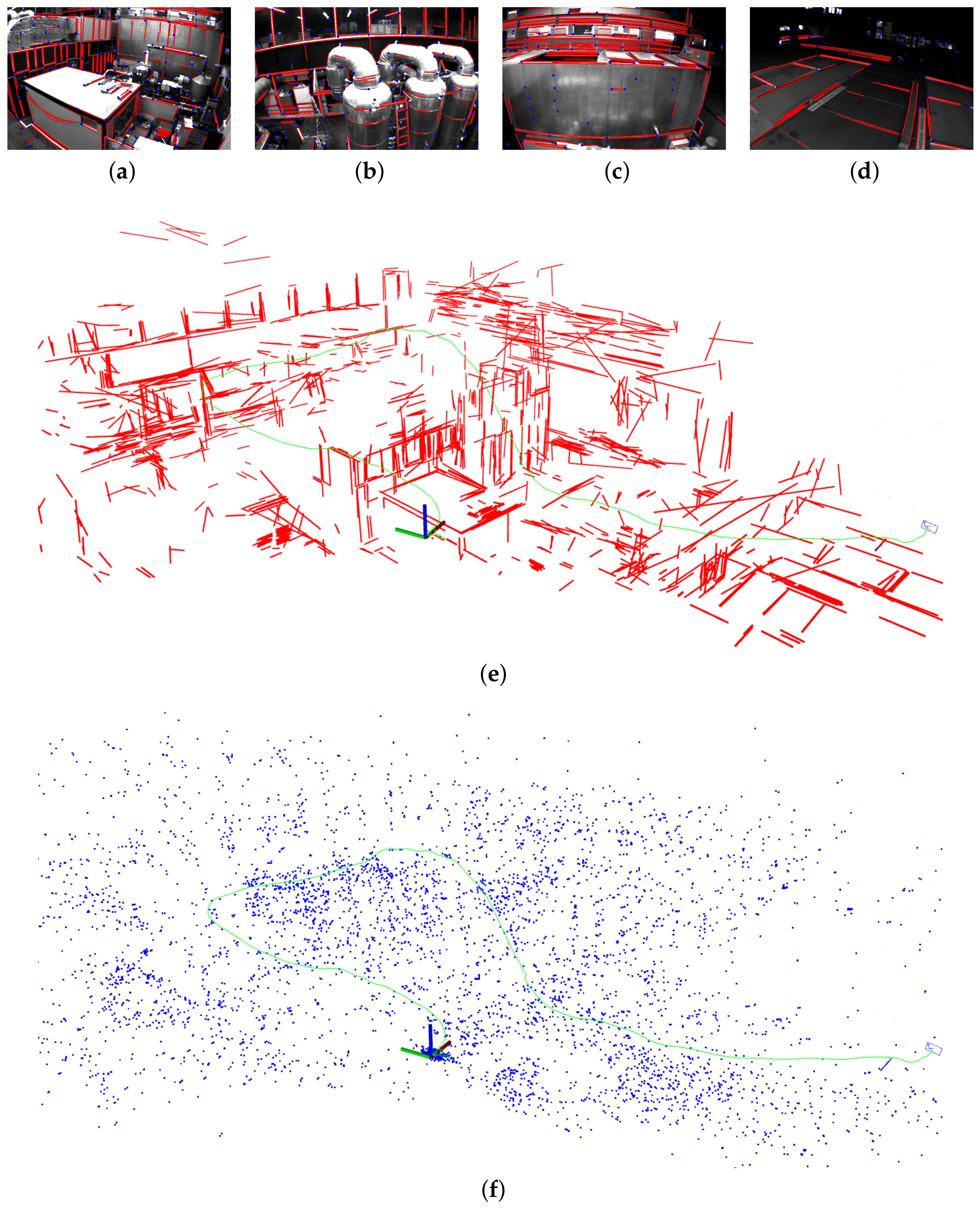

5.2. PennCOSYVIO Dataset

5.3. Computing Time

6. Conclusions

- The reconstructed 3D map with line features provides geometric information about the environment, from which semantic information could be extracted. This is useful for robot navigation.

- Line features improve the accuracy of the system in both translation and rotation, especially in scenes with changing illumination. However, line detection and matching are time-consuming and are the bottleneck for the efficiency of the system.

Acknowledgments

Author Contributions

Conflicts of Interest

Appendix A

Appendix B


| Seq. | ROVIO Trans. | ROVIO Rot. | OKVIS-Mono Trans. | OKVIS-Mono Rot. | VINS-Mono Trans. | VINS-Mono Rot. | PL-VIO Trans. | PL-VIO Rot. |
|---|---|---|---|---|---|---|---|---|
| MH_02_easy | 0.59075 | 2.21181 | 0.36059 | 0.06095 | 0.25663 | 0.04802 | 0.23274 | 0.04204 |
| MH_03_medium | 0.40709 | 1.92561 | 0.21534 | 0.02622 | 0.11239 | 0.02027 | 0.11224 | 0.02016 |
| MH_04_difficult | 0.88363 | 2.30330 | 0.23984 | 0.01943 | 0.15366 | 0.02173 | 0.13942 | 0.01915 |
| MH_05_difficult | 1.17578 | 2.27213 | 0.39644 | 0.01987 | 0.30351 | 0.01038 | 0.25687 | 0.00892 |
| V1_01_easy | 0.18153 | 2.03399 | 0.08583 | 0.09665 | 0.05843 | 0.09995 | 0.05916 | 0.09869 |
| V1_02_medium | 0.19563 | 1.93652 | 0.12207 | 0.04073 | 0.06970 | 0.03022 | 0.07656 | 0.02871 |
| V1_03_difficult | 0.17091 | 2.02069 | 0.19613 | 0.06591 | 0.14531 | 0.08021 | 0.13016 | 0.04382 |
| V2_02_medium | 0.60686 | 1.84458 | 0.18253 | 0.04773 | 0.10218 | 0.04558 | 0.09450 | 0.04177 |
| V2_03_difficult | 0.18912 | 1.92380 | 0.30513 | 0.07527 | 0.26446 | 0.06162 | 0.26085 | 0.06098 |

| Seq. | ROVIO | OKVIS-Mono | VINS-Mono | PL-VIO |
|---|---|---|---|---|
| MH_02_easy | 15 | 31 | 63 | 127 |
| MH_03_medium | 15 | 30 | 62 | 112 |
| MH_04_difficult | 15 | 24 | 54 | 108 |
| MH_05_difficult | 15 | 27 | 58 | 102 |
| V1_01_easy | 14 | 26 | 45 | 93 |
| V1_02_medium | 15 | 23 | 37 | 86 |
| V1_03_difficult | 15 | 20 | 29 | 82 |
| V2_02_medium | 15 | 22 | 33 | 86 |
| V2_03_difficult | 15 | 21 | 27 | 85 |

| Algorithm | Translation Error (m) | Rotation Error (rad) |
|---|---|---|
| VINS-Mono | 1.14690 | 0.04156 |
| PL-VIO | 1.05975 | 0.03742 |

| Algorithm | APE x | APE y | APE z | APE Rot. | RPE x | RPE y | RPE z | RPE Rot. |
|---|---|---|---|---|---|---|---|---|
| VINS-Mono | 0.423 | 0.173 | 0.861 | 2.3477 | 2.807 | 1.844 | 4.663 | 1.9337 |
| PL-VIO | 0.524 | 0.070 | 0.769 | 2.0782 | 2.375 | 1.844 | 4.361 | 1.7350 |

| Module | Operation | Time (ms) | Rate (Hz) | Thread ID |
|---|---|---|---|---|
| front end | point feature detection and matching | 4 | 25 | 1 |
| front end | line feature detection and matching | 86 | 11 | 2 |
| front end | IMU forward propagation | 1 | 100 | 3 |
| back end | nonlinear optimization | 28 | 15 | 4 |
| back end | marginalization | 35 | 15 | 4 |
| back end | feature triangulation and culling | 2 | 15 | 4 |

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

He, Y.; Zhao, J.; Guo, Y.; He, W.; Yuan, K. PL-VIO: Tightly-Coupled Monocular Visual–Inertial Odometry Using Point and Line Features. Sensors 2018, 18, 1159. https://doi.org/10.3390/s18041159
