1. Introduction

Defect detection plays a vital role in automatic inspection in most production processes (food, textile, bottling, timber, steel industries, etc.). In many of these processes, quality control still depends to a large extent on the training of specialized inspectors. Manual inspection is limited in accuracy, consistency and efficiency when detecting defects, because inspectors are prone to fatigue and boredom, or simply fail to pay sufficient attention owing to the repetitive nature of their tasks [1]. To deal with these problems, human inspectors are being replaced by automatic visual inspection systems [2,3,4].

Texture analysis provides a very powerful tool to detect defects in applications for visual inspection, since textures provide valuable information about the features of different materials.

In computer vision, texture is broadly classified into two main categories: statistical and structural [

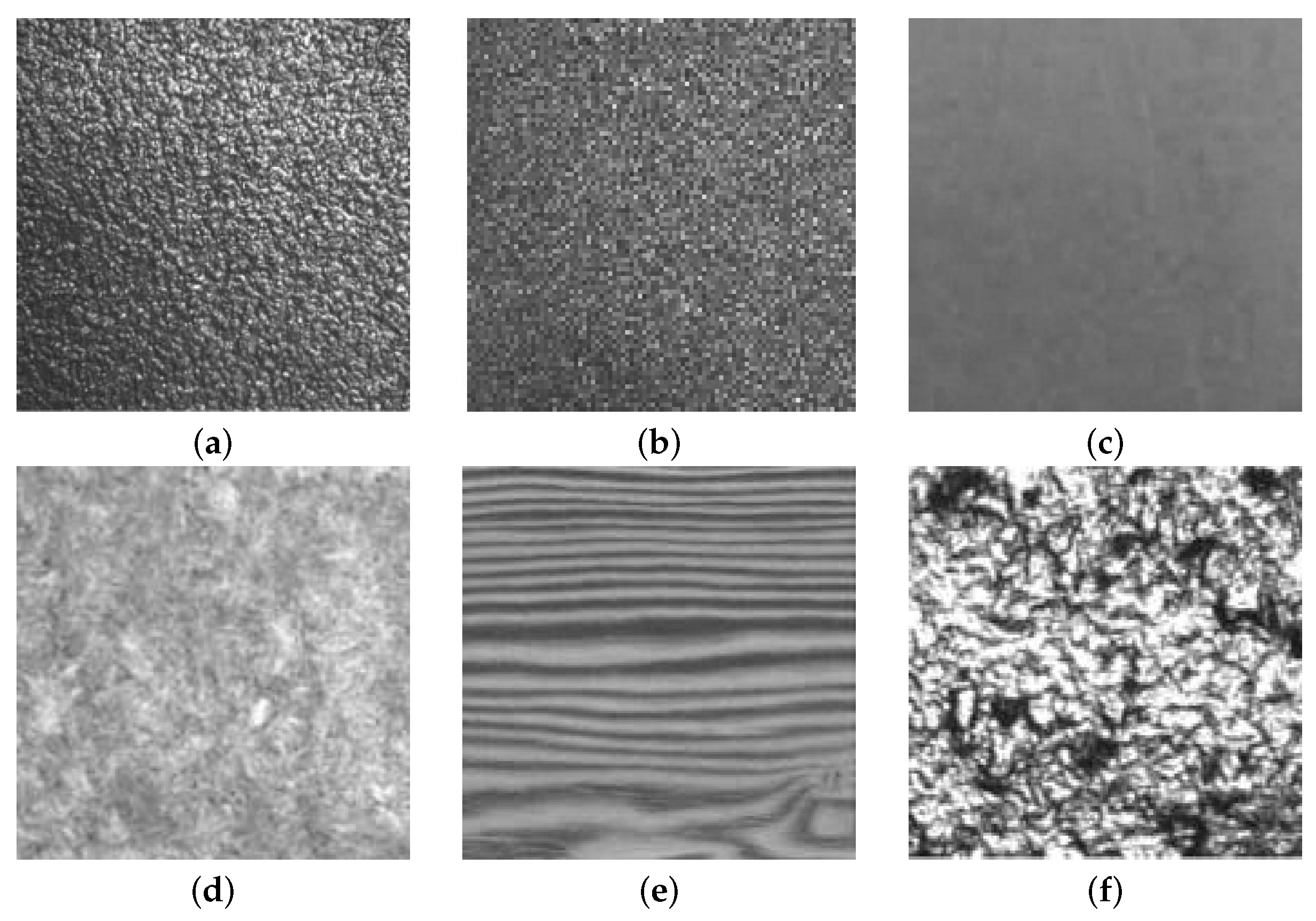

5]. Textures that are random in nature are well suited for statistical characterization. Statistical textures do not have easily identifiable primitives; however, some visual properties can usually be observed, such as directionality (directional versus isotropic), appearance (coarse versus fine) or regularity (regular versus irregular) (e.g., wool, sand, wood, etc.) (

Figure 1).

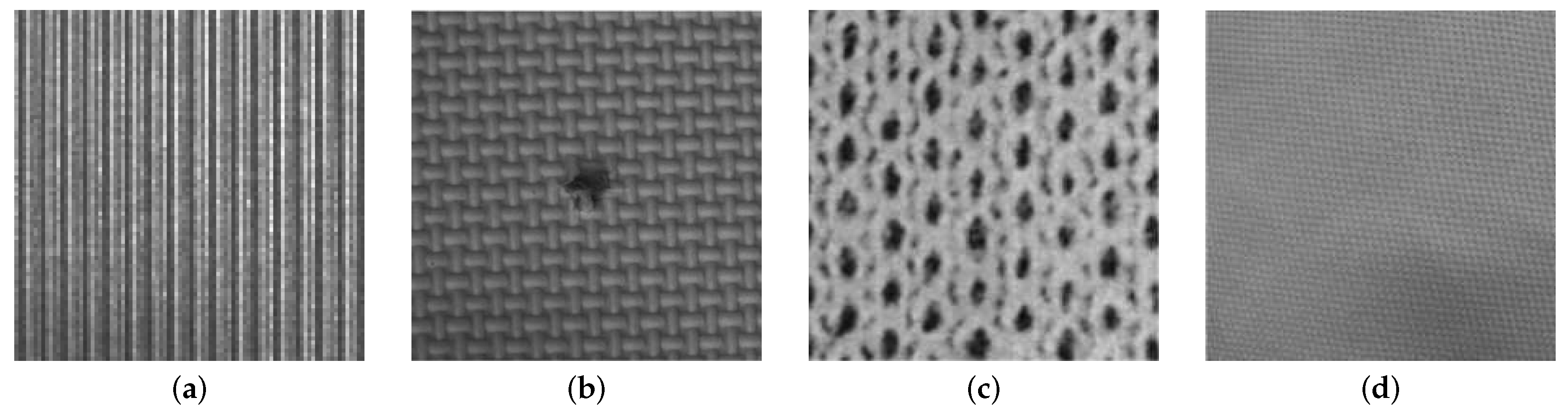

On the other hand, structural textures, also called patterned textures, are characterized by a set of primitives (texels) and placement rules. The placement rules define the spatial relationships between the texels, and these spatial relationships may be expressed in terms of adjacency, closest distance or periodicities. The texels themselves may be defined by their gray level, shape or homogeneity of some local property (e.g., milled surfaces, fabric, etc.) (Figure 2). Statistical texture patterns are isotropic, while patterned textures can be classified into oriented (directional) or non-oriented (isotropic) [6] (see Figure 3). A homogeneous texture contains repetitive properties everywhere in an image [7]; if repetitive self-similar patterns can be found, it is possible to talk about homogeneous structural texture (Figure 4a). A homogeneous statistical texture cannot be described with texture primitives and placement rules; the spatial distribution of gray levels is rather stochastic, but the repetition and self-similarity properties still hold (Figure 4b). If there is no repetition or spatial self-similarity, a texture may be defined as inhomogeneous (Figure 4c).

More recently, Ngan [8] provided a new approach, which defines patterned textures in terms of an underlying lattice, composed of one or more motifs, whose symmetry properties are governed by the 17 wallpaper groups; wallpaper groups, also known as crystallographic groups, are well defined in algebra [9]. Figure 2c,d shows two patterned textures classified in the p1 wallpaper group.

In a recent and complete review of defect detection in textures, Xie [10] classified texture analysis techniques into the following categories: statistical techniques [11,12,13], structural techniques [14,15], filter-based techniques [16,17,18] and model-based techniques [19,20,21], while in his review, Kumar [22] sets three categories of defect detection in fabric: the statistical approach, the spectral approach and the model-based approach. Ngan [23] proposes a new classification of techniques for defect detection in textures into motif-based and non-motif-based approaches. While the traditional (non-motif) techniques can be sub-divided into statistical methods, spectral methods, model-based methods, learning and structural methods (since texture is composed of one main lattice with only one motif), the motif-based techniques [23,24] use the difference and energy variance among different motifs.

Clustering techniques are used in many defect detection methods; these methods are mainly based on the extraction of texture features. Such features are obtained using different techniques, such as the co-occurrence matrix [25,26,27,28], the Fourier transform [7,29,30,31,32], the Gabor transform [33,34,35,36,37] or the wavelet transform [38,39,40,41].

Spectral-approach techniques provide either the frequency contents of a texture image (Fourier transform) or spatial-frequency analysis (Gabor filters, wavelet transform (WT)).

Fourier transform shows good results when applied over texture patterns with high directionality or regularity, because the information about the directionality and periodicity of the texture pattern is well recognizable in the 2D spectrum, although it fails when attempting to determine the spatial localization of such patterns. Concerning spatial localization, Gabor filters provide better accuracy, but they show a lack of reliability when processing natural textures, since there is no single filter resolution that can localize a structure. The main advantage that WT has over the Gabor transform is that it makes it possible to represent the textures in the appropriate scale because of the variation of the spatial resolution it provides.

The suitability of WT for use in image analysis is well established: a representation in terms of the frequency content of local regions over a range of scales provides an ideal framework for the analysis of image features, which in general are of different sizes and can often be characterized by their frequency domain properties [42]. This makes the wavelet transform an attractive option when segmenting textures, as reported by Truchetet [43] in his review of industrial applications of wavelet-based image processing. He reported different uses of wavelet analysis in successful machine vision applications: detecting defects for manufacturing applications for the production of furniture, textiles, integrated circuits, etc., from their wavelet transformation and vector quantization-related properties of the associated wavelet coefficients; the sorting of ceramic tiles and the recognition of metallic paints for car refinishing by combining color and texture information through a multiscale decomposition of each color channel in order to feed a classifier; printing defect identification and classification (applied to printed decoration and tampoprint images) by analyzing the fractal properties of a textured image; image database retrieval algorithms basing texture matching on energy coefficients in the pyramid wavelet transform using Daubechies wavelets; face recognition using the “symlet” wavelet because of its symmetry and regularity; online inspection of a loom under construction using a specific class of the 2D discrete wavelet transform called the multiscale wavelet representation with the objectives of attenuating the background texture and accentuating the defects; online fabric inspection device performing an independent component analysis on a sub-band decomposition provided by a two-level DWT in order to increase the defect detection rate.

Background and Contributions

The wavelet transform has resulted in two groups of techniques for detecting defects: (1) direct thresholding methods [25,44,45,46,47], whose operation is based on the background attenuation provided by the WT in the successive decomposition levels (such attenuation allows enhancing defects, which can be segmented by utilizing direct thresholding [48]); and (2) methods based on extracting texture features by means of the WT [41,42]. After obtaining texture features, feature vectors are formed, and different classifiers are used on them (neural networks, Bayes classifiers, etc.).

In the present paper, a direct thresholding method is used because of the disadvantages of methods based on feature vectors (high computing costs and difficulty in setting stop criteria for proximity-based techniques, or the training difficulties posed by learning-based techniques). If direct thresholding methods are used, two important challenges have to be faced: (a) how to select the optimal wavelet decomposition level; and (b) how to determine which bands enable the elimination of the maximum information relative to the texture pattern. These choices involve two risks: (i) features of the texture pattern can merge with those of the defects owing to an excessive wavelet decomposition; and (ii) false positives may appear owing to a defective reconstruction scheme.

A review of the literature on texture defect detection methods using wavelet transforms shows a great deal of interest in methods for automatic selection of the optimal wavelet decomposition level, owing to the potential industrial applications. Of these, the best performance is offered by the methods proposed in [25,46,47], with the Tsai and Han methods reporting the best results. Tsai proposes an image reconstruction approach based on the analysis and synthesis of wavelet transforms, well suited for inspecting surface defects embedded in homogeneous structural and statistical textures. To achieve this, the method removes all regular and repetitive texture patterns from the restored image by selecting proper approximation or detail subimages for wavelet synthesis. In this way, an image where defects are highlighted is obtained. Finally, the method carries out a binarization based on the average and the variance to segment the defects. To find defects in statistical textures with isotropic patterns, the Tsai method uses the approximation image obtained with an optimal resolution level calculated by means of a ratio between two consecutive energy levels. Han reports a method for defect detection in images with a high-frequency texture background. The wavelet transform is used to decompose the texture images into approximation, horizontal, vertical and diagonal subimages. This method uses only the approximation subimage, because textures are always high-frequency elements of the image. The optimal decomposition level is the one showing the maximum variation of the local homogeneity calculated at two successive wavelet decomposition levels. Local homogeneity is one of the 28 features defined by Haralick et al. for texture characterization computed over the co-occurrence matrix [49]. Once the appropriate decomposition level has been selected, Han uses the approximation subimage to reconstruct the image. After that, Otsu’s method is applied to segment the defects. However, as will be seen further in this text, both methods present serious difficulties when processing the large set of textures presented in this paper and when quantifying the size of the defect. This last feature is crucial for many industrial applications, for example, in maintenance tasks where the product is not discarded, but repaired.

This paper proposes a new approach to face the aforementioned inconveniences based on the normalized absolute function value (NABS) and Shannon’s entropy calculation. The main contributions can be summarized as follows:

A numeric analysis of the normalized absolute function value (NABS) at different wavelet decomposition levels, which makes it possible to determine the texture directionality. The proposed algorithm makes it possible to classify into high and low directionality patterns, depending on the variation in the slope of the NABS.

A novel use of the normalized Shannon’s entropy has been formulated, calculated over different detail subimages, in order to determine the optimal decomposition level in textures with low directionality. For this purpose, it is proposed to calculate this optimal decomposition level as the maximum ratio between the entropy of the approximation subimage and the total entropy, as the sum of entropies calculated for every subimage. This ratio provides better results when detecting defects in a wider range of textures.

This article is organized as follows:

Section 1 gives a brief overview of the problem addressed, reviews the background and highlights the contributions of the paper;

Section 2 gives a brief description of the wavelet transform and presents the mathematical notation that will be used throughout the rest of the paper;

Section 3 presents the proposed method: entropy-based automatic selection of the wavelet decomposition level (EADL);

Section 4 presents the results when the developed method was applied to different real textures; finally,

Section 5 presents the conclusions.

2. Wavelet Decomposition and Mathematical Notation

The wavelet transform can be applied to an image $f(x,y)$ with 256 gray levels and of size $X \times Y$ by means of a convolution (linear, periodic or cyclic) with two filters (a low-pass filter, L, and a band-pass filter, H), keeping one sample out of every two of the resulting image. Applying this process to all the rows and then, on the resulting rows, to all of the columns provides four subimages, defined as $f_{LL}(x,y)$ (L filter on rows and columns), $f_{LH}(x,y)$ (H filter on rows and L filter on columns), $f_{HL}(x,y)$ (L filter on rows and H filter on columns) and $f_{HH}(x,y)$ (H filter on columns and rows). These four subimages share the same origin, and their size is a quarter of the original size. One of them ($f_{LL}$) is referred to as the approximation subimage. It is denoted $a_1$ and represents an approximation of the original image. The other three subimages are referred to as detail subimages: the horizontal detail subimage $d^h_1$, the vertical detail subimage $d^v_1$ and the diagonal detail subimage $d^d_1$. According to this notation, the original image can be represented as $a_0$.

From this first decomposition level, wavelet transform can be applied again on the previous approximation image, achieving in this way, again, a new approximation image and three detail subimages.

$a_j$ represents the approximation image obtained at decomposition level $j$. From that image, the subimages $a_{j+1}$, $d^h_{j+1}$, $d^v_{j+1}$ and $d^d_{j+1}$ can then be obtained. Such subimages form the set of images of decomposition level $j+1$. A detailed description of the algorithm is given in [50].

The recovery of the approximation image $a_{j-1}$ from the four subimages of decomposition level $j$ is also described in the basic literature on wavelet transforms. Such a reconstructed image is called F:

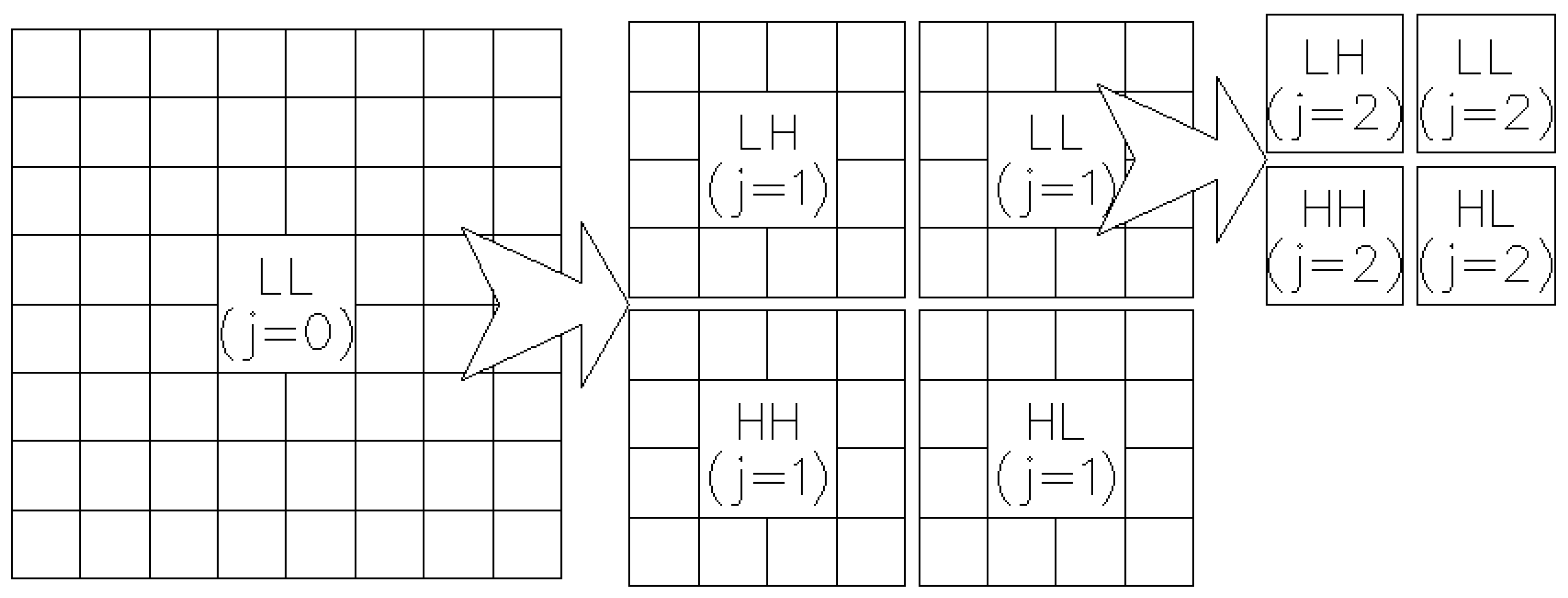

This procedure can be recursively applied until reaching the starting level ($j = 0$).

Figure 5 graphically represents the decomposition process of the wavelet transform. Image $a_0$ is decomposed into the three detail subimages of level $1$, as well as the new approximation image of level $1$ ($a_1$), which, in turn, is decomposed into four subimages at level $2$. Every subimage of level $j$ is of size $X/2^j \times Y/2^j$, where $X$ and $Y$ represent the dimensions of the original image at level $j = 0$. Thus, a multiresolution analysis of the original image is achieved at different scales, and, depending on the wavelet used in the convolution, it is then possible to find periodic structures, at one scale or another, in a horizontal, vertical or diagonal distribution.
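The recursive decomposition described in this section can be illustrated with a minimal sketch, assuming a two-coefficient Haar filter pair applied to 2 × 2 blocks. The function names `haar_step` and `haar_decompose`, the averaging normalization and the convention used to label the horizontal and vertical details are illustrative assumptions, not the implementation used in this work.

```python
def haar_step(image):
    """One level of a 2D Haar-style decomposition of a list-of-lists image.

    Returns (a, h, v, d): approximation, horizontal, vertical and diagonal
    detail subimages, each half the input size in both dimensions.
    Assumes even width and height; averaging is used instead of orthonormal
    scaling (an illustrative choice).
    """
    a, h, v, d = [], [], [], []
    for r in range(0, len(image), 2):
        row_a, row_h, row_v, row_d = [], [], [], []
        for c in range(0, len(image[0]), 2):
            p, q = image[r][c], image[r][c + 1]          # top-left, top-right
            s, t = image[r + 1][c], image[r + 1][c + 1]  # bottom-left, bottom-right
            row_a.append((p + q + s + t) / 4.0)  # low-pass in both directions
            row_h.append((p + q - s - t) / 4.0)  # top minus bottom rows
            row_v.append((p - q + s - t) / 4.0)  # left minus right columns
            row_d.append((p - q - s + t) / 4.0)  # diagonal differences
        a.append(row_a)
        h.append(row_h)
        v.append(row_v)
        d.append(row_d)
    return a, h, v, d


def haar_decompose(image, levels):
    """Apply haar_step recursively; returns one (a, h, v, d) tuple per level."""
    result = []
    current = image
    for _ in range(levels):
        subimages = haar_step(current)
        result.append(subimages)
        current = subimages[0]  # the next level decomposes the approximation
    return result
```

Each call halves both dimensions, so the subimages of level j of an X × Y image have X/2^j × Y/2^j pixels, in line with the sizes given above.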

3. Entropy-Based Method for Automatic Selection of the Wavelet Decomposition Level

Entropy has been used in many image processing methods: image segmentation [51,52]; thresholding methods [53,54]; Haralick’s texture descriptor, utilized as a parameter for gray-level co-occurrence matrices; measurement incorporated with feature vectors [49,55], etc.

A three-stage method for detecting defects in textures is proposed:

In order to determine if the texture has high directionality, the NABS value is calculated. If so, the subimage that contains the maximum directionality information is removed, together with the approximation subimage.

If the directionality value is low, then the optimum decomposition level is estimated by means of Shannon’s entropy.

Finally, the optimum thresholding method is determined.

3.1. Automatic Detection of High Directionality in Texture

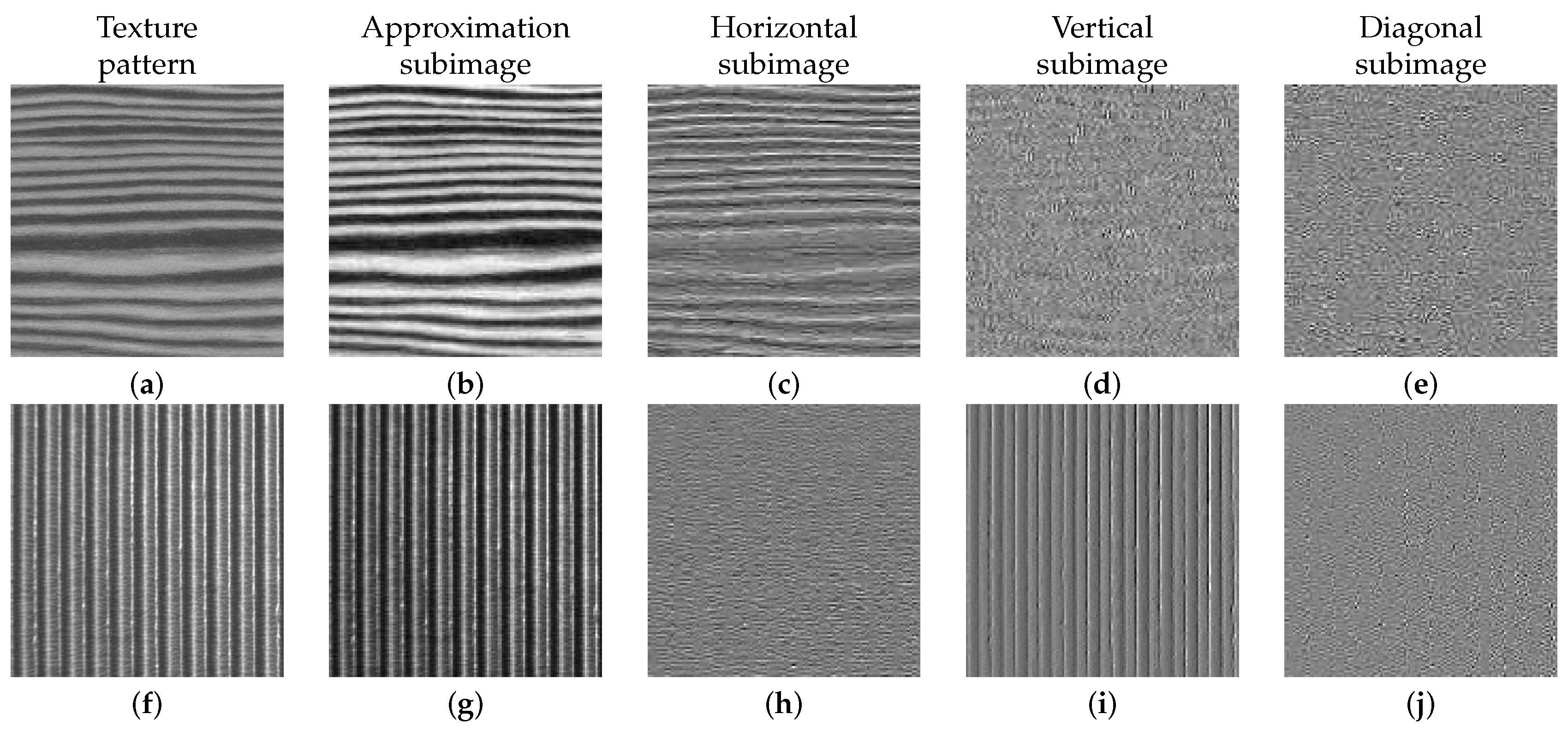

In patterns with high horizontal or vertical directionality, texture information resides to a great extent in the approximation subimage and in either the horizontal or the vertical subimage, as shown in Figure 6. In these cases, to isolate defects, it is enough to reconstruct the image eliminating the approximation subimage (Figure 6b,g) together with the subimage that contains the maximum information about the pattern directionality in the first decomposition level (Figure 6c,i).

In this work, the normalized absolute function value (NABS) was used to separate patterns of structural textures with high directionality from the rest. To determine the directionality of a texture, the NABS values of the detail subimages ($d^h_j$, $d^v_j$, $d^d_j$) are calculated at different decomposition levels (see the graphical representation in Figure 7), and the trend line slopes for each of the three sets of values are obtained ($m_h$, $m_v$ and $m_d$). If one of these three slopes is much greater than the other two, the texture can be considered to have high directionality (h, v or d). Thus, the corresponding subimage (h, v or d) together with the approximation subimage of the first decomposition level are eliminated. The approximation subimage is eliminated in the reconstruction process because it constitutes a rough representation of the original image and, therefore, contains a great deal of information about the pattern’s directionality [46].

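The normalized absolute values (NABS) of the horizontal, vertical and diagonal detail subimages at level $j$ are given by Equations (2)–(4):

$$\mathrm{NABS}^h_j = \frac{1}{N_j}\sum_{x,y}\left|d^h_j(x,y)\right| \qquad (2)$$

$$\mathrm{NABS}^v_j = \frac{1}{N_j}\sum_{x,y}\left|d^v_j(x,y)\right| \qquad (3)$$

$$\mathrm{NABS}^d_j = \frac{1}{N_j}\sum_{x,y}\left|d^d_j(x,y)\right| \qquad (4)$$

for $j = 1, \dots, J$, where $d^h_j$, $d^v_j$ and $d^d_j$ are the horizontal, vertical and diagonal detail subimages at level $j$ and $N_j$ is the number of pixels of each subimage at decomposition level $j$.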
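As a minimal sketch of this computation, assuming that NABS is the mean of the absolute coefficient values of a detail subimage, the helper below takes a subimage stored as a list of rows. The name `nabs` is illustrative.

```python
def nabs(subimage):
    """Mean absolute value of the coefficients of one detail subimage."""
    total = sum(abs(value) for row in subimage for value in row)
    count = sum(len(row) for row in subimage)  # number of pixels at this level
    return total / count
```

Calling `nabs` on the horizontal, vertical and diagonal details of each level gives the three series of values whose trend lines are compared.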

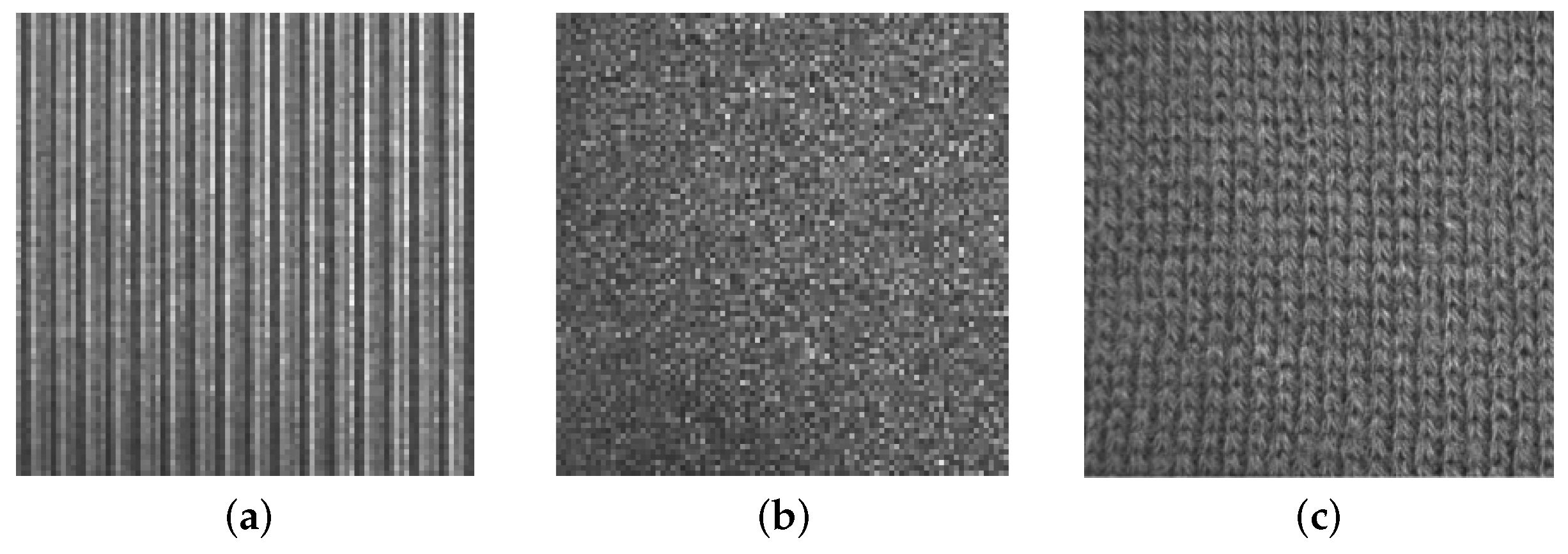

Figure 7 shows representative images of statistical (Figure 7a,c,d) and structural (Figure 7b) textures. The first two images represent a pattern with high horizontal and vertical directionality, respectively, while the other two images present a statistical texture with a coarse-to-fine appearance.

The graphs to the right of each image show the NABS values of the horizontal, vertical and diagonal details as a function of the decomposition level $j$. Analysis of the graphs shows considerable differences between the NABS values for the texture patterns with high directionality and for the non-directional patterns. This difference is very noticeable in the slopes of the trend line equations, which were obtained using the least squares approach.

It can therefore be concluded that a pattern presents high horizontal, vertical or diagonal directionality if the first-order coefficients of the trend line equations ($m_h$, $m_v$, $m_d$) show variations greater than a given percentage of the maximum of the coefficients.

To simplify the programming, a scale change is applied to the ratio between each of the coefficients and the maximum, expressed as a percentage (Equation (5)), so that the resulting value $P_k$ ($k = h, v, d$) is zero for the trend line with the maximum slope. Based on the empirical results, a texture may be considered highly directional (horizontal, vertical or diagonal) if the two values of $P_k$ different from zero are higher than 70%.
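One possible reading of this rule is sketched below. The percentage is assumed to be 100·(1 − |m_k| / max|m|), which is zero for the steepest trend line as described above; this form, the use of absolute slopes and the names `trend_slope` and `directionality` are assumptions for illustration and are not a transcription of Equation (5).

```python
def trend_slope(values):
    """Least-squares slope of values plotted against levels 1..len(values)."""
    n = len(values)
    levels = range(1, n + 1)
    mean_x = sum(levels) / n
    mean_y = sum(values) / n
    num = sum((x - mean_x) * (y - mean_y) for x, y in zip(levels, values))
    den = sum((x - mean_x) ** 2 for x in levels)
    return num / den


def directionality(nabs_h, nabs_v, nabs_d, threshold=70.0):
    """Return (dominant detail or None, percentage per detail).

    Each argument is the list of NABS values of one detail over the levels.
    """
    slopes = {"h": trend_slope(nabs_h),
              "v": trend_slope(nabs_v),
              "d": trend_slope(nabs_d)}
    largest = max(abs(s) for s in slopes.values())
    if largest == 0:
        return None, {k: 0.0 for k in slopes}
    # Assumed scale change: 0% for the steepest line, near 100% for flat ones.
    percent = {k: 100.0 * (1.0 - abs(s) / largest) for k, s in slopes.items()}
    dominant = max(slopes, key=lambda k: abs(slopes[k]))
    others = [percent[k] for k in percent if k != dominant]
    if all(p > threshold for p in others):
        return dominant, percent
    return None, percent
```

When a dominant detail is returned, that detail and the approximation subimage of the first level would be the ones discarded before reconstruction.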

Table 1 shows the significant difference between the vertical and diagonal details of the image in Figure 7a and the horizontal detail. The table also shows the variation of the horizontal and diagonal details with respect to the vertical detail of the image in Figure 7b. The images in Figure 7a,b can therefore be said to show a high degree of horizontal and vertical directionality, respectively.

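The resulting image F is derived from the composition of the remaining detail subimages. In the case of the textures in Figure 7a,b, the new images are reconstructed, respectively, from the vertical and diagonal detail subimages (Figure 7a) and from the horizontal and diagonal detail subimages (Figure 7b) of the first decomposition level.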

Regarding the behavior of the first-order coefficients of the trend line equations for the texture patterns shown in Figure 7a–d, it can be noticed that in the statistical and structural textures with high directionality, there are variations greater than 90% in the trend line equation of the horizontal and diagonal details with respect to the other detail subimages (Figure 7a,b). Therefore, as indicated above, a texture may be considered highly directional if there are two nonzero percentage values higher than 70%.

3.2. Automatic Selection of the Appropriate Decomposition Level Using Shannon’s Entropy

In the process described in Section 3.1, it is shown that, at certain decomposition levels, textures with high directionality are clearly detected and, at the same time, so are the defects on them. It is very useful to have algorithms that automatically determine the optimal decomposition level, at which textures and defects are most easily detected. An algorithm based on Shannon’s entropy has been developed and is presented below.

Shannon’s entropy describes the level of randomness or uncertainty in an image, i.e., how much information such an image provides. It is possible to state that the higher the value of the entropy, the greater the image quality [56].

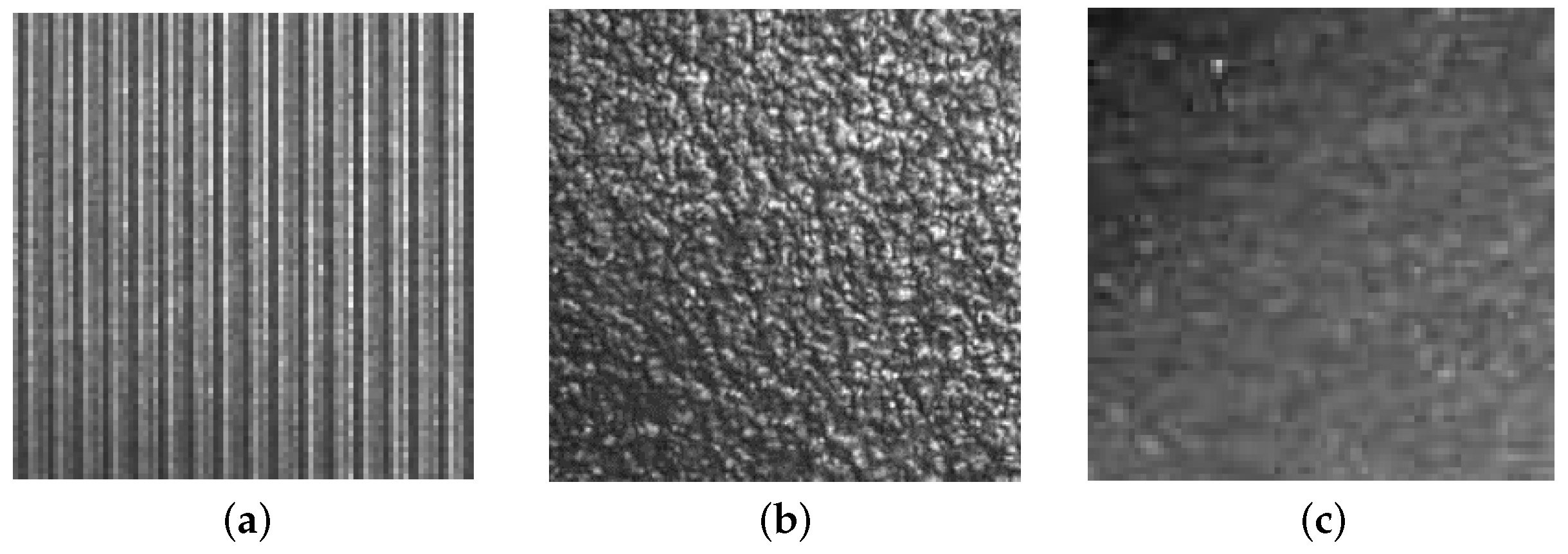

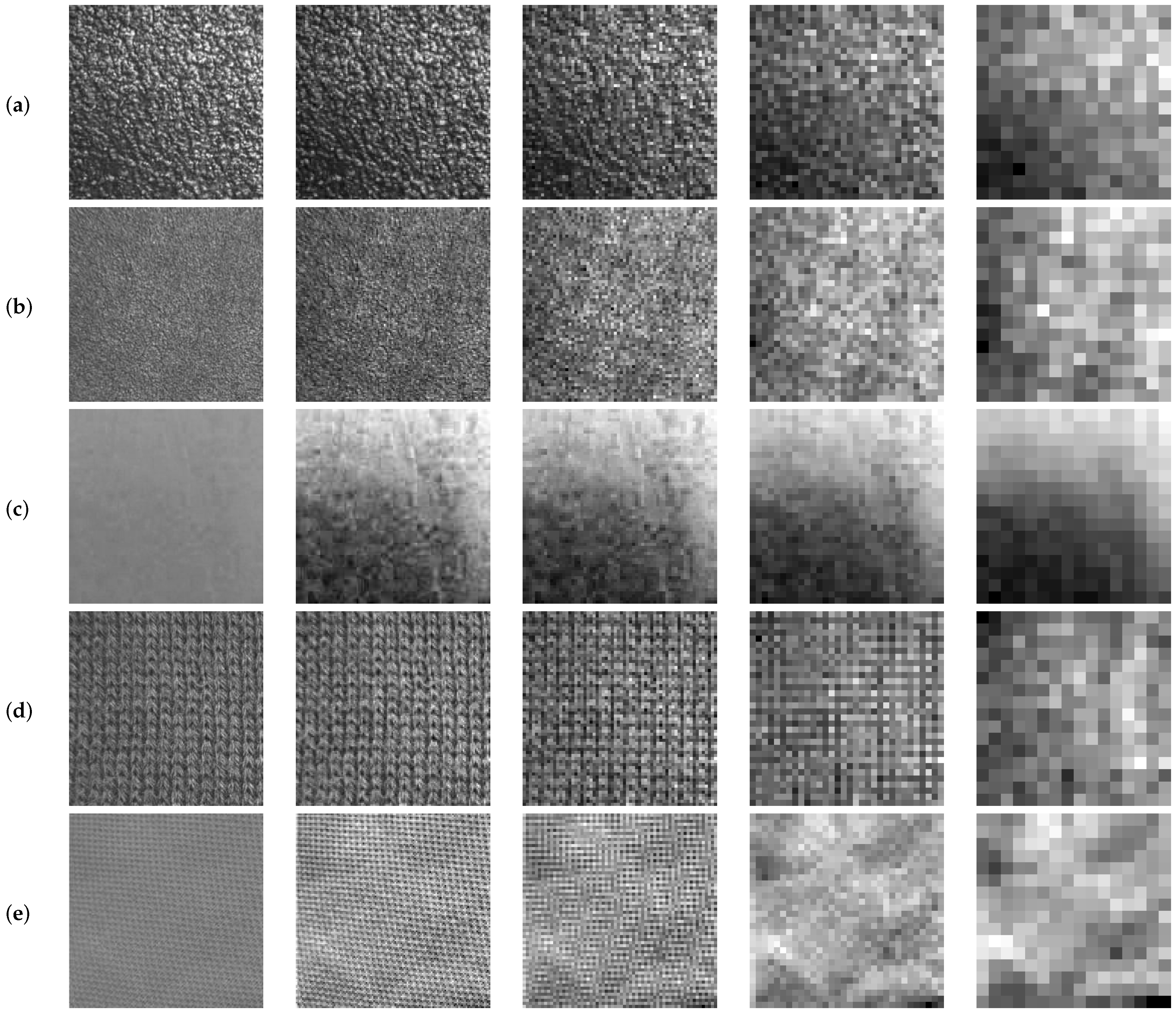

Figure 8 shows how texture patterns decrease as the decomposition level of the image is increased. Image degradation level can be measured and quantified at successive levels through Shannon’s entropy.

The entropy value [57,58] is calculated according to Equation (6):

$$S(X) = -\sum_{i=1}^{T} p(x_i)\,\log p(x_i) \qquad (6)$$

where $X = \{x_1, \dots, x_T\}$ is the set of $T$ values on which the entropy function is applied and the function $p(x_i)$ gives the probability of occurrence associated with the value $x_i$. Since the images analyzed have 256 gray levels, $X$ consists of these 256 possible values, and the probability $p(x_i)$ is the number of pixels in the image that have gray level $x_i$ divided by the total number of pixels in the image (also referred to as $N$).
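A minimal sketch of this histogram-based entropy is given below for a flat list of integer gray levels. The base-2 logarithm and the name `shannon_entropy` are assumptions; a different base only rescales the result.

```python
import math


def shannon_entropy(pixels, levels=256):
    """Shannon entropy of a flat list of integer gray levels in [0, levels)."""
    histogram = [0] * levels
    for value in pixels:
        histogram[value] += 1
    total = len(pixels)
    entropy = 0.0
    for count in histogram:
        if count:  # empty bins contribute nothing to the sum
            p = count / total
            entropy -= p * math.log2(p)
    return entropy
```

A constant image gives zero entropy, while an image whose pixels are spread evenly over all 256 gray levels gives the maximum of 8 bits.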

Convolving the image with a specific wavelet produces unbounded real values, both positive and negative. Thus, in order to calculate Shannon’s entropy in each subimage and for each decomposition level $j$, the values of each subimage are first mapped onto the range of integer values between zero and 255. In addition to allowing the visualization of the resulting subimages, this allows the entropy to be calculated. For each decomposition level, Shannon’s entropy provides four values, one per subimage. Each of these values is normalized by dividing it by the total number of pixels that the subimage has at decomposition level $j$. The normalized values are referred to as $S^a_j$ for the entropy of the approximation image and as $S^h_j$, $S^v_j$ and $S^d_j$ for the entropy values of the horizontal, vertical and diagonal detail subimages.
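The mapping of real-valued coefficients onto integer gray levels could be sketched as follows. The text only states that values are transformed to integers between zero and 255, so the linear min-max mapping and the name `rescale_to_gray` are assumptions.

```python
def rescale_to_gray(subimage, levels=256):
    """Linearly map real coefficients onto integers in [0, levels - 1]."""
    flat = [value for row in subimage for value in row]
    low, high = min(flat), max(flat)
    if high == low:
        # A constant subimage carries no contrast; map it to a single level.
        return [[0 for _ in row] for row in subimage]
    scale = (levels - 1) / (high - low)
    return [[int(round((value - low) * scale)) for value in row]
            for row in subimage]
```

The rescaled subimage can then be flattened and passed to a histogram-based entropy function, and the result divided by the number of pixels of the subimage to obtain the normalized value.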

The value of Shannon’s entropy indicates how much information on the texture from the original image remains in each subimage. This value is calculated on each subimage derived from each decomposition level. According to Equation (6), it can be stated that entropy provides a measure of the histogram: the higher the entropy, the greater the uniformity of the histogram, i.e., the greater the information that the image contains on the texture. As the decomposition level increases, subimages lose information about the texture patterns.

At an optimal decomposition level, it would be possible to eliminate the texture pattern with no significant loss of information on the defects. In order to determine the optimal decomposition level, a ratio value $R_j$ is used, calculated between the entropy value of the approximation subimage and the sum of the entropies of the four subimages:

$$R_j = \frac{S^a_j}{S^a_j + S^h_j + S^v_j + S^d_j} \qquad (7)$$

where $S^a_j$, $S^h_j$, $S^v_j$ and $S^d_j$ are the normalized entropies of the approximation, horizontal, vertical and diagonal subimages at level $j$.

Variations of this ratio allow detecting changes in the amount of texture information between two consecutive decomposition levels. The goal is to find the decomposition level causing the maximum variation of the value expressed in Equation (7) with regard to the value in the previous decomposition level. This would indicate that the texture pattern is still present at level $j$ and that it disappears at the next level, where, however, information on the defects would remain.

For this calculation process, the coefficient $\Delta R_j$ is defined as the difference between the two values of $R_j$ corresponding to two consecutive decomposition levels.

The optimal decomposition level ($J^*$) is defined as the level at which $\Delta R_j$ takes the highest value.
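The selection rule can be sketched as follows, given the four normalized entropies of each level. Two points are assumptions made for illustration: the variation is taken as the absolute difference between the ratios of consecutive levels, and it is attributed to the later of the two levels. The names `entropy_ratio` and `optimal_level` are hypothetical.

```python
def entropy_ratio(s_a, s_h, s_v, s_d):
    """Approximation entropy divided by the sum of the four entropies."""
    return s_a / (s_a + s_h + s_v + s_d)


def optimal_level(entropies):
    """Pick the level with the largest change in the entropy ratio.

    entropies: list of (s_a, s_h, s_v, s_d) tuples for levels 1..J (J >= 2).
    Returns (level, ratios, variations); variations[i] compares level i + 2
    with level i + 1 (assumed convention).
    """
    if len(entropies) < 2:
        raise ValueError("at least two decomposition levels are required")
    ratios = [entropy_ratio(*e) for e in entropies]
    variations = [abs(ratios[i] - ratios[i - 1]) for i in range(1, len(ratios))]
    best = max(range(len(variations)), key=lambda i: variations[i])
    return best + 2, ratios, variations
```

With four levels, as used with the Haar wavelet in this work, the function compares three consecutive pairs and returns a level between 2 and 4 under the stated convention.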

From that level, the decomposition process may end. Beyond that level, it is possible to assume that the approximation image is sufficiently smoothed: most of the texture patterns have been eliminated, and continuing the decomposition would lead to a loss of information on the defects.

Table 2 gathers the values of Shannon’s entropy calculated for the images in Figure 8. It also lists the values of the entropy ratio, calculated for each image at each decomposition level, as well as the resulting differences between consecutive levels.

Once the optimal decomposition level is determined, the process ends with the reconstruction of the image according to Equation (10).

3.3. Optimum Thresholding Method

The wavelet-based methods of texture defect detection reviewed here conclude with a thresholding stage. Most authors use recognized methods, such as Otsu’s method [59], or methods based on empirical adjustment [46]. These methods do not specify the criteria for the selection of the thresholding technique, and they do not provide objective calculations on the performance of the method. Sezgin’s survey [48] reviews a large number of thresholding methods for defect detection. Sezgin categorizes the thresholding methods into six groups according to the information they use. These categories are:

histogram shape-based methods,

clustering-based methods,

entropy-based methods,

object attribute-based methods,

spatial methods and

local methods.

Sezgin’s findings show that the best thresholding results were achieved with the three first groups of methods.

Several thresholding methods in the final stage of the EADL method have been used to determine the following:

To answer the above questions, the following thresholding methods were selected, attending to the three first groups proposed by Sezgin:

Histogram shape-based methods: the thresholding method based on the gray level average (Ave) and the thresholding method based on computing the minima of the maxima of the histogram (MiMa) [60].

Clustering-based methods: Ridler’s method [61], Trussell’s method [62], Otsu’s method [59] and Kittler’s method [63].

Entropy-based methods: Pun’s method [64], Kapur’s method [52] and Johannsen’s method [51].
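As an illustration of one of the clustering-based methods listed above, the following is a minimal sketch of Otsu’s method, which picks the gray level that maximizes the between-class variance of the histogram. It is a generic textbook formulation operating on a flat list of integer gray levels, not the code used in this work; the name `otsu_threshold` is illustrative.

```python
def otsu_threshold(pixels, levels=256):
    """Gray level t maximizing between-class variance (class 0: values <= t)."""
    histogram = [0] * levels
    for value in pixels:
        histogram[value] += 1
    total = len(pixels)
    sum_all = sum(i * count for i, count in enumerate(histogram))
    weight_bg = 0
    sum_bg = 0
    best_t, best_var = 0, -1.0
    for t in range(levels):
        weight_bg += histogram[t]
        sum_bg += t * histogram[t]
        if weight_bg == 0:
            continue  # no pixels at or below t yet
        weight_fg = total - weight_bg
        if weight_fg == 0:
            break  # all pixels are already in class 0
        mean_bg = sum_bg / weight_bg
        mean_fg = (sum_all - sum_bg) / weight_fg
        between = weight_bg * weight_fg * (mean_bg - mean_fg) ** 2
        if between > best_var:
            best_var, best_t = between, t
    return best_t
```

Pixels above the returned level form one class and the remaining pixels the other; which class corresponds to the defect depends on whether defects appear brighter or darker than the background in the reconstructed image.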

In order to perform a quantitative analysis of the segmentation method proposed in this paper, adequate metrics must be selected. From the set of metrics proposed by Sezgin [48] and Zhang [65,66], the misclassification error (ME) has been selected. ME represents a measurement of the number of misclassified pixels; a misclassification occurs if the foreground (defect) is identified as the background (texture pattern), or vice versa.

ME is calculated as the relation between the pixels of the test image (segmented by means of the method proposed in this paper) and those of a pattern image (manually segmented), according to Equation (11):

$$ME = 1 - \frac{|B_P \cap B_T| + |O_P \cap O_T|}{|B_P| + |O_P|} \qquad (11)$$

$B_T$ (background test) and $O_T$ (object test) denote, respectively, the pixels of the texture pattern and those of the defect in the test image. $B_P$ (background pattern) and $O_P$ (object pattern) denote, respectively, the pixels of the texture pattern and those of the defect in the pattern image, and $|\cdot|$ denotes the number of pixels in a set. The equation shows that the more alike both images are, the smaller $ME$ will be, being zero in the case of a perfect match between the manual segmentation and the automatic segmentation. This indicates, therefore, a maximum efficiency of the method proposed in the present paper.
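The misclassification error can be sketched for two binary masks of equal size, where 0 marks the texture background and 1 marks the defect. The mask representation and the name `misclassification_error` are assumptions; the computation uses the fact that the pixels counted in the numerator of the standard ME definition are exactly those on which both masks agree.

```python
def misclassification_error(pattern_mask, test_mask):
    """ME between a manual (pattern) and an automatic (test) segmentation.

    Both masks are flat lists of 0 (background) and 1 (defect).
    """
    if len(pattern_mask) != len(test_mask):
        raise ValueError("masks must have the same number of pixels")
    # Pixels labeled background in both masks or defect in both masks.
    agree = sum(1 for p, t in zip(pattern_mask, test_mask) if p == t)
    return 1.0 - agree / len(pattern_mask)
```

For example, a test mask that mislabels one pixel out of four gives an ME of 0.25, and identical masks give zero.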

Equation (12) is used to assess the yield of the segmentation method.

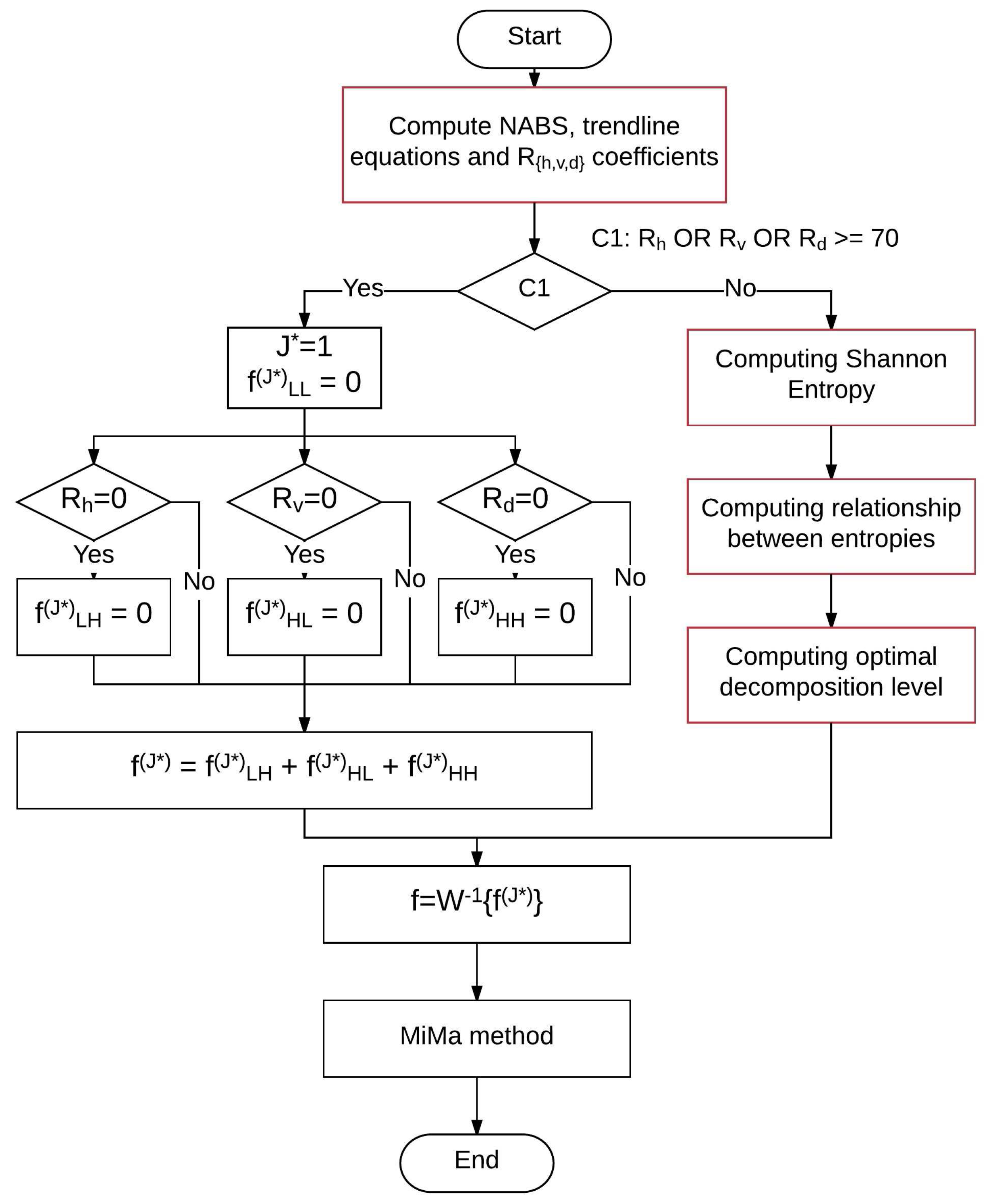

Figure 9 contains the flowchart that summarizes the EADL method. The most expensive computational processes are those colored red in the shown flowchart. The computational complexity of each one is:

Compute : .

Shannon entropy: , where ; for values : .

Compute and : .

From the above individual computational complexities, a total computational complexity of order is derived. On the other hand, the computational complexity of the algorithm used for wavelet decomposition is , being in the most favorable case and in the worst case; the value of β indicates the number of instructions executed by function MbufPut2d() of the Matrox Imaging Library (MIL). Therefore, the computational complexity of the EADL method is . From these calculations, it can be concluded that EADL does not increase the complexity of the process for calculating the wavelet transforms.

4. Results

The EADL method, whose algorithm is shown in Figure 9, was implemented in the C programming language. The EADL method, the automatic band selection method [46] and the adaptive level-selecting method [25] for wavelet reconstruction were tested on a set of 223 texture images: 115 structural textures (milled surface (29), fabric (67) and bamboo weave (19)) and 108 statistical textures (sandpaper surface (29), wood surface (19), wool surface (19), painted surface (21) and cast metal (20)).

After checking different mother wavelets (Haar, symlets, biorthonormal, Meyer and coiflets), the Haar-based function with two coefficients has been used as the mother wavelet, because it shows the best ratio between yield and computational cost. The Haar wavelet has been applied until the fourth decomposition level. Higher decomposition levels have proven to lead to the merger of the defects with the texture pattern, which prevents their segmentation.

4.1. Statistical Textures

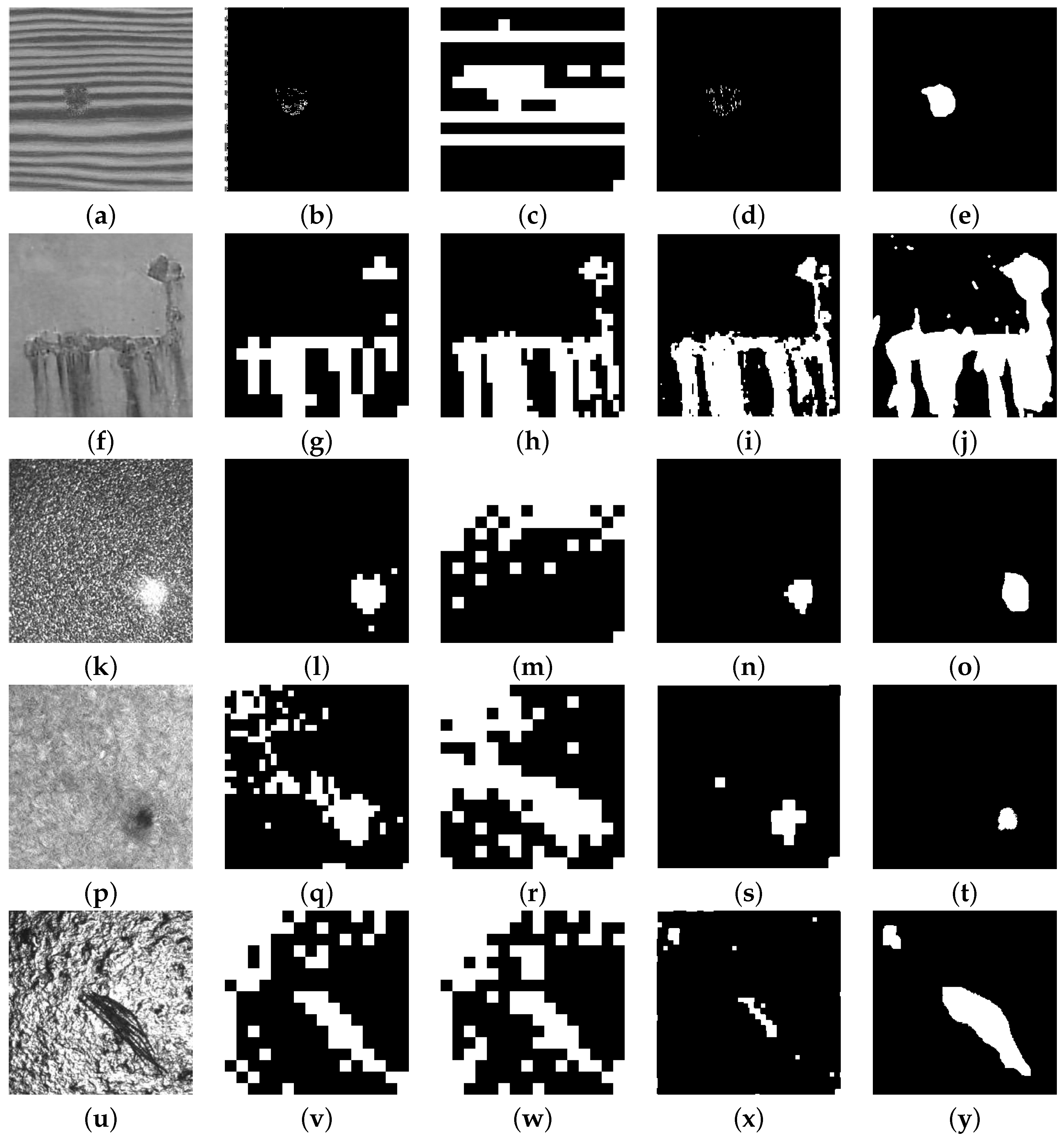

Figure 10 shows a representative set of different types of defects in statistical textures obtained from the group of 108 images used to test the EADL method: wood (a), painted surfaces (j), sandpaper (k), wool (p) and cast metal (u).

Table 3 shows the values calculated from the Shannon entropy ratio at different decomposition levels for the images of Figure 10 classified as statistical textures; it also shows the optimal decomposition level calculated by means of the EADL method, the automatic band selection method and the adaptive level-selecting method, respectively. Image (a) does not show the entropy-based figures, since the optimal decomposition level was found with the NABS algorithm.

Metric analysis (Table 4) showed that if only one thresholding method is used in the EADL implementation for the group of statistical textures considered, that method should be MiMa, since it offered the best average performance in defect detection. The maximum performance for each type of texture is underlined. On the other hand, if the best-performing thresholding method is chosen according to the kind of texture (wood, sandpaper, wool, painted surface or cast metal), two thresholding methods should be used:

The Kapur method for natural directional textures: wood, sandpaper and wool.

The MiMa method for artificial irregular isotropic statistical textures: painted surface and cast metal.

4.2. Structural Textures

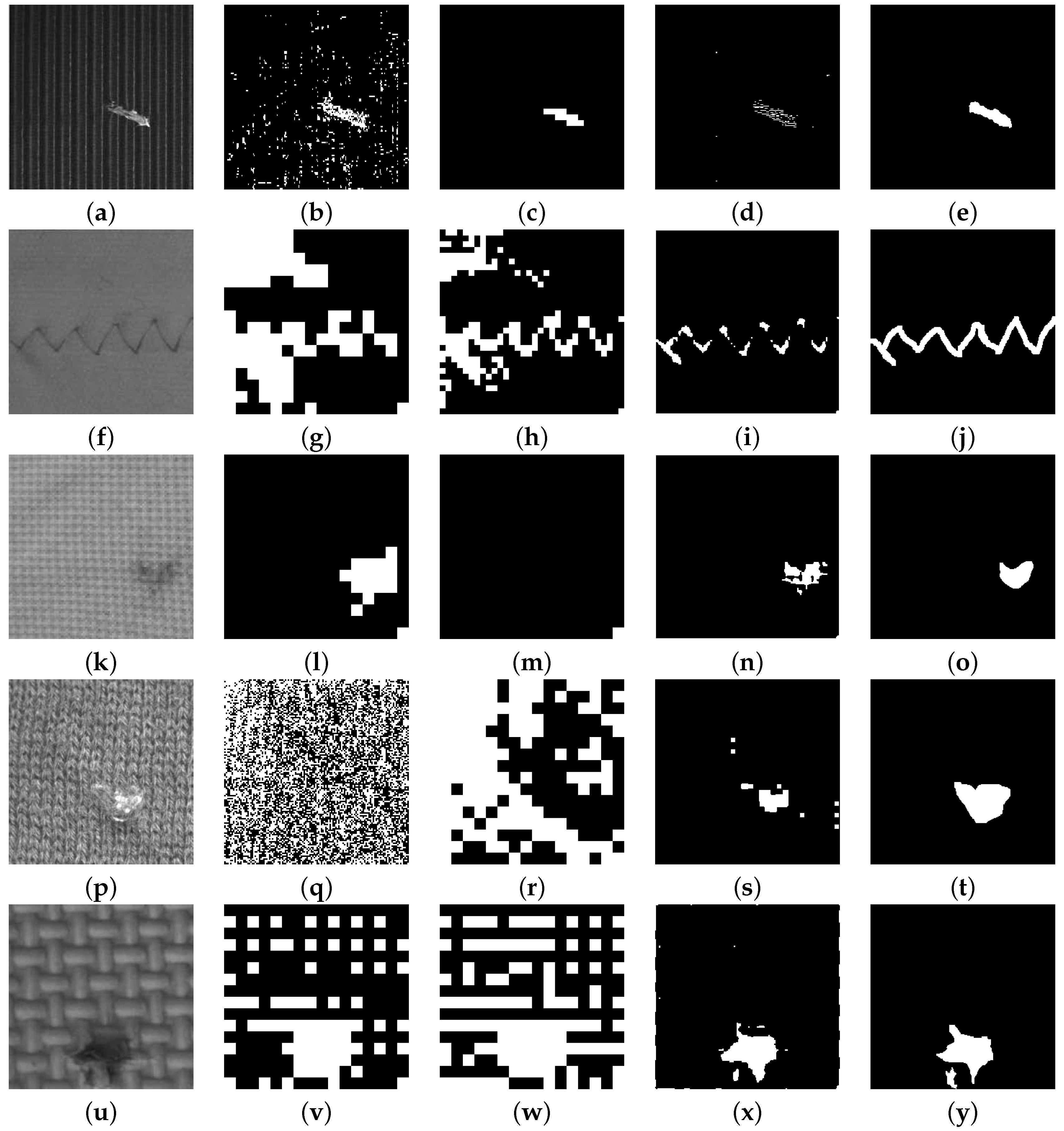

Figure 11 shows five types of defects in patterns of structural texture obtained from the group of 115 images used to test the EADL method: (a) milled surface, (f) fabric fine-appearance, (k) fabric medium-appearance, (p) fabric coarse-appearance and (u) bamboo weave.

Table 5 shows the values calculated from the Shannon entropy ratio at different decomposition levels for the images of Figure 11 classified as structural textures. It also shows the optimal decomposition level calculated by means of the EADL method, the automatic band selection method and the adaptive level-selecting method, respectively. Image (a) does not show the entropy-based figures, since the optimal decomposition level was found with the NABS algorithm.

Table 6 depicts the numeric values obtained for the thresholding algorithms selected: a value of

indicates that the defect detected by EADL fully matches with the same defect when segmented by a qualified human inspector. The maximum performance for each type of texture is underlined.

As in Section 4.1, the efficiency of the EADL method is assessed with either a single or multiple thresholding methods. The metric analysis results (Table 6) show that MiMa achieved the best average performance. However, if the best-performing thresholding method is to be selected according to the type of texture (milled surfaces, bamboo weave and fabric), it is best to select:

Kapur’s method for artificial directional structural textures: milled surfaces and bamboo weave.

The MiMa method for natural isotropic structural textures: fine-appearance fabric and coarse-appearance fabric.

Table 7 shows the yields obtained in defect detection with the Tsai, Han and EADL methods on 115 structural and 108 statistical texture images. Table 7 also shows the optimal levels of wavelet decomposition obtained by each method. As can be seen, the performance of the EADL method is higher for the two groups of analyzed textures. It can also be seen that the EADL method provides a lower average level of decomposition than the other methods discussed. A lower decomposition level means less degradation of the original image and, therefore, greater detail in detecting defects.

5. Conclusions

This paper presents a robust method for detecting defects in a wide variety of structural and statistical textures. An image reconstruction scheme based on the automatic selection of (1) the band, using NABS and (2) the optimal wavelet transform resolution level, using Shannon’s entropy, has been used.

Valuable information about the directionality of the texture patterns can be extracted from the analysis of the NABS value of the horizontal, vertical and diagonal details at different decomposition levels.

A correct wavelet reconstruction scheme has been implemented to remove the texture patterns and highlight the defects in the resulting images.

It is demonstrated that the optimal decomposition levels computed from the Shannon entropy are lower than those provided by other methods based on the co-occurrence matrix (Han method) or on energy calculation (Tsai method). This implies that more information is retained in the image resulting from the wavelet reconstruction scheme. This characteristic, together with the optimal selection of a thresholding method (MiMa), has allowed the EADL method to achieve high performance in defect detection in both statistical and structural textures.

An analysis of the results of the EADL method with nine different thresholding algorithms showed that selecting the appropriate thresholding method is important for achieving optimum performance in defect detection. On the basis of a metric analysis of 223 images, the most appropriate thresholding algorithm for each texture is proposed. The MiMa method proved to be the most appropriate for textures such as painted surfaces, cast metal and fabric, whereas the Kapur method proved better for wood, sandpaper surface, wool, milled surface and bamboo weave.