Robust Image Restoration for Motion Blur of Image Sensors

Abstract

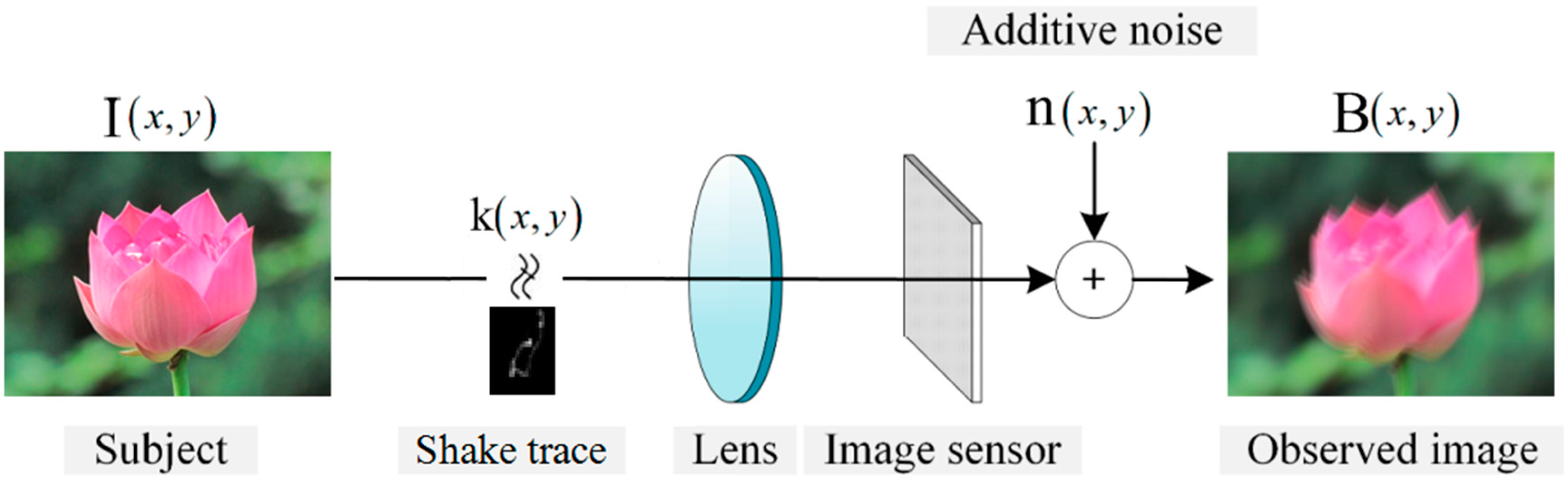

1. Introduction

- To suppress the noise and ambiguous structures that can damage kernel estimation, we propose a novel structure extraction method that selects reliable structures adaptively and effectively;

- Because the motion blur kernel is sparse and delineates the motion trace between the subject and the image sensor, we introduce a two-step kernel estimation method that eliminates noise and guarantees the sparsity and continuity of the kernel.
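
Both contributions rely on the commonly used uniform blur model, in which the observed image is the latent sharp image convolved with a sparse kernel, plus sensor noise. The following is a minimal sketch of that forward model, assuming circular boundary conditions and a straight-line motion trace. The names `line_kernel` and `blur` are hypothetical helpers introduced for illustration and are not part of the proposed method.

```python
import numpy as np


def line_kernel(size: int, angle_deg: float) -> np.ndarray:
    """Hypothetical helper: sparse, continuous, normalized linear motion kernel."""
    k = np.zeros((size, size))
    c = (size - 1) / 2.0
    theta = np.deg2rad(angle_deg)
    # Mark the pixels visited by a straight trace through the kernel centre.
    for t in np.linspace(-c, c, 4 * size):
        row = int(round(c - t * np.sin(theta)))
        col = int(round(c + t * np.cos(theta)))
        k[row, col] = 1.0
    # Normalize so that blurring preserves the mean image intensity.
    return k / k.sum()


def blur(image: np.ndarray, kernel: np.ndarray,
         noise_sigma: float = 0.0, seed: int = 0) -> np.ndarray:
    """Assumed forward model: circular convolution with the kernel plus Gaussian noise."""
    kh, kw = kernel.shape
    padded = np.zeros_like(image, dtype=float)
    padded[:kh, :kw] = kernel
    # Move the kernel centre to the origin so the result is not translated.
    padded = np.roll(padded, (-(kh // 2), -(kw // 2)), axis=(0, 1))
    blurred = np.real(np.fft.ifft2(np.fft.fft2(image) * np.fft.fft2(padded)))
    rng = np.random.default_rng(seed)
    return blurred + noise_sigma * rng.standard_normal(image.shape)
```

Only a handful of kernel entries are non-zero and they form a connected trace, which is the sparsity and continuity property that the kernel estimation step is meant to preserve.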

2. Related Work

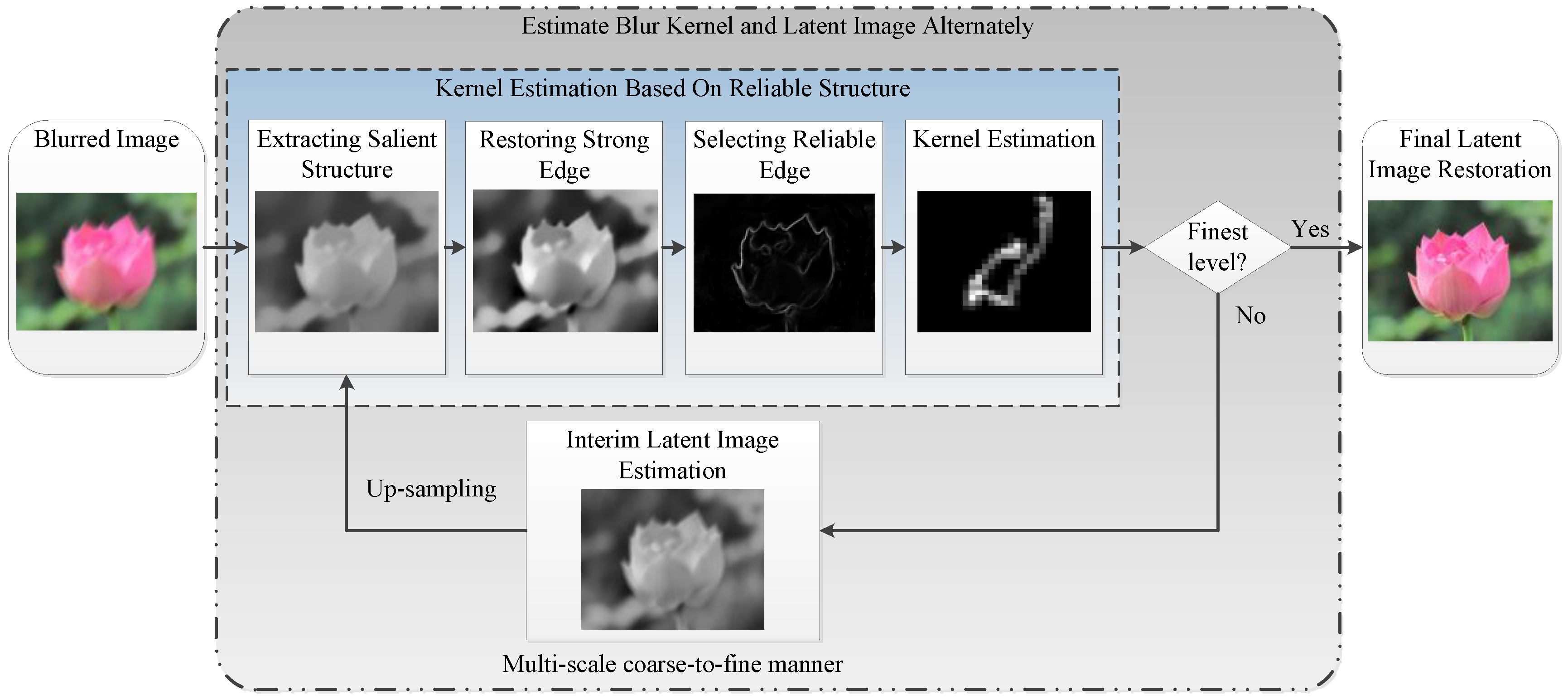

3. Single Image Blind Deconvolution Using Reliable Structure

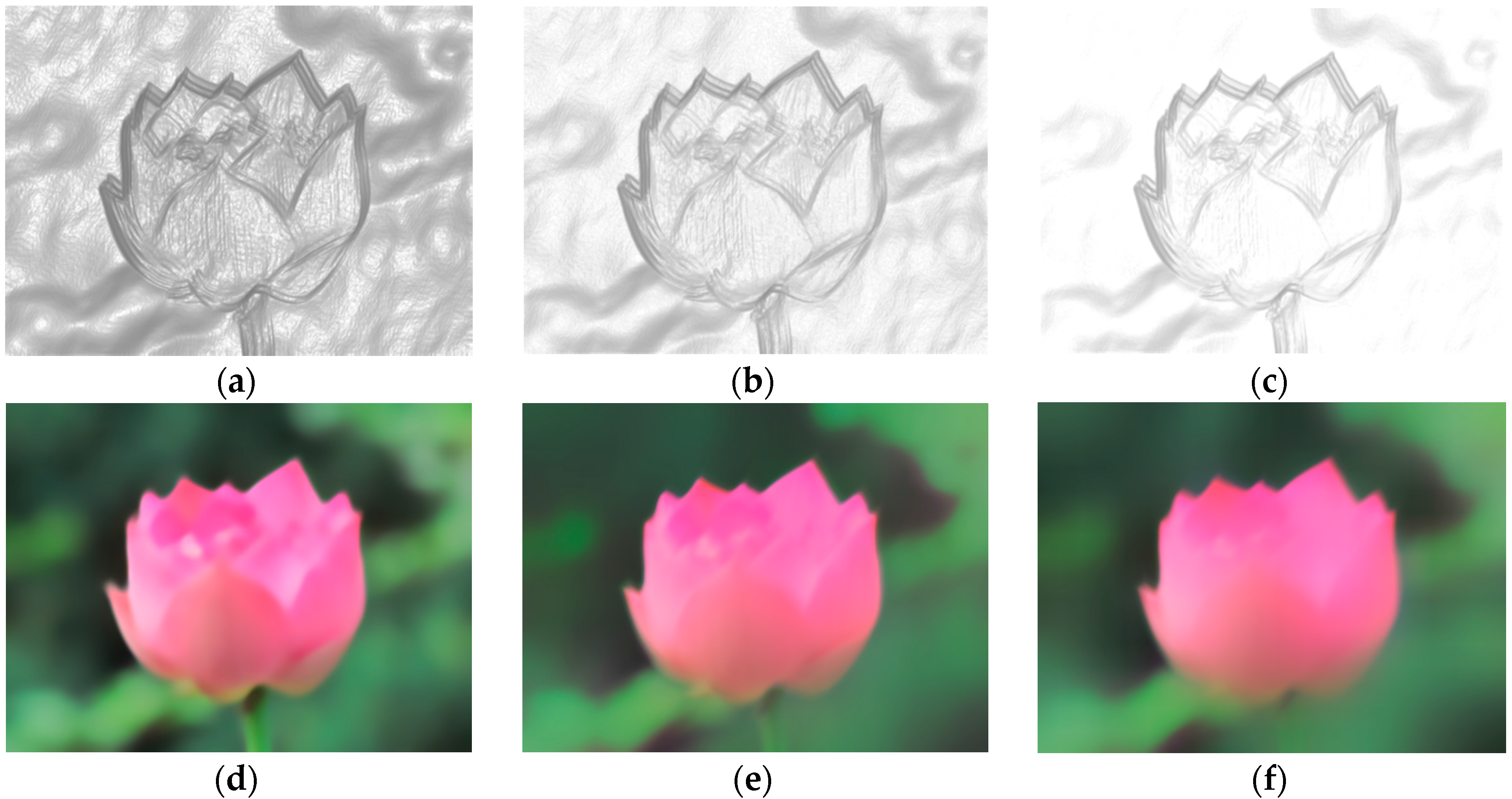

3.1. Reliable Structure Extraction from Blurred Image

3.2. Kernel Estimation and Refinement

| Algorithm 1. Blur Kernel Estimation. |

| Input: Blurred image, reliable structures and the initial value of k from previous iterations or previous level; |

| for n = 1 to Itr (Itr: number of iterations) do |

| Estimate the blur kernel using the reliable structures. (Equation (7)) |

| Refine the blur kernel by solving Equations (10) and (11) alternately. |

| end for |

| Output: Blur kernel k. |
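
The estimate-then-refine pattern of Algorithm 1 can be illustrated as follows. This is a sketch under stated assumptions, not the formulation of Equations (7), (10) and (11), which are not reproduced here. The estimation step is replaced by a generic Tikhonov-regularized least-squares fit in the gradient domain, solved in closed form with the FFT. The refinement step is replaced by simple thresholding followed by projection onto non-negative kernels that sum to one. The function names and the parameters `gamma` and `rel_thresh` are hypothetical.

```python
import numpy as np


def _gradients(img: np.ndarray):
    """Circular forward differences along x (columns) and y (rows)."""
    gx = np.roll(img, -1, axis=1) - img
    gy = np.roll(img, -1, axis=0) - img
    return gx, gy


def estimate_kernel(structure: np.ndarray, blurred: np.ndarray,
                    ksize: int, gamma: float = 1e-3) -> np.ndarray:
    """Stand-in for the estimation step (assumed model, not Equation (7)):
    minimize ||grad(S) * k - grad(B)||^2 + gamma * ||k||^2 over k."""
    sx, sy = _gradients(structure)
    bx, by = _gradients(blurred)
    Fsx, Fsy = np.fft.fft2(sx), np.fft.fft2(sy)
    Fbx, Fby = np.fft.fft2(bx), np.fft.fft2(by)
    numerator = np.conj(Fsx) * Fbx + np.conj(Fsy) * Fby
    denominator = np.abs(Fsx) ** 2 + np.abs(Fsy) ** 2 + gamma
    k_full = np.real(np.fft.ifft2(numerator / denominator))
    # The solution is centred at the origin; shift it and crop the support.
    half = ksize // 2
    return np.roll(k_full, (half, half), axis=(0, 1))[:ksize, :ksize]


def refine_kernel(k: np.ndarray, rel_thresh: float = 0.05) -> np.ndarray:
    """Stand-in for the refinement step: suppress noise, then renormalize."""
    k = np.clip(k, 0.0, None)                 # enforce non-negativity
    k = np.where(k < rel_thresh * k.max(), 0.0, k)  # drop weak, noisy entries
    total = k.sum()
    return k / total if total > 0 else k      # enforce unit sum
```

In an iterative scheme such as Algorithm 1, `structure` would be the reliable structure map of the current iteration, and the refined kernel would serve as the initial value for the next iteration or the next pyramid level.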

3.3. Interim Latent Image Restoration

3.4. Final Non-Blind Deconvolution

3.5. Multi-Scale Implementation

| Algorithm 2. Overall Algorithm. |

| Input: Blurred image B, parameters , and the size of blur kernel; |

| Build an image pyramid {Bs} and all-zero kernel pyramid {ks} with level index {1, 2, …, n} according to the size of blur kernel; |

| 1. Blind estimation of blur kernel |

| for s = 1 to n do |

| Compute adaptive weight M(Bs) (Equation (4)). |

| for i = 1 to m (m iterations) do |

| Extract salient structure Is (Equation (2)). |

| Select reliable structure for kernel estimation (Equation (6)) |

| Estimate blur kernel according to Algorithm 1. |

| Restore interim latent image L (Equation (14)) |

| end for |

| Up-sample latent image: . |

| Project onto the constraints (Equation (8)) and up-sample blur kernel: . |

| end for |

| 2. Image restoration using MR-based Wiener Filter. |

| Recover I using k from B in full-scale resolution (Equation (15)). |

| Output: Blur kernel k and latent sharp image I. |
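
Step 2 of Algorithm 2 performs non-blind deconvolution once the kernel is known. The sketch below shows a classical frequency-domain Wiener filter as an illustration of that step. It is not the MR-based variant of Equation (15). It assumes circular boundary conditions and a constant noise-to-signal ratio, and the function name `wiener_deconvolve` and the parameter `nsr` are hypothetical.

```python
import numpy as np


def wiener_deconvolve(blurred: np.ndarray, kernel: np.ndarray,
                      nsr: float = 1e-2) -> np.ndarray:
    """Classical Wiener deconvolution with a constant noise-to-signal ratio.

    Assumption: plain Wiener filter, used here only to illustrate the
    final non-blind step; the source uses an MR-based variant.
    """
    kh, kw = kernel.shape
    padded = np.zeros_like(blurred, dtype=float)
    padded[:kh, :kw] = kernel
    # Centre the kernel at the origin so the restored image is not shifted.
    padded = np.roll(padded, (-(kh // 2), -(kw // 2)), axis=(0, 1))
    K = np.fft.fft2(padded)
    B = np.fft.fft2(blurred)
    # conj(K) / (|K|^2 + nsr) approximates 1/K where the kernel response is
    # strong and damps frequencies where it is close to zero.
    restored = np.conj(K) * B / (np.abs(K) ** 2 + nsr)
    return np.real(np.fft.ifft2(restored))
```

A larger `nsr` suppresses noise amplification at the cost of a smoother result, while a smaller value restores more detail but is less stable at frequencies where the kernel response is weak.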

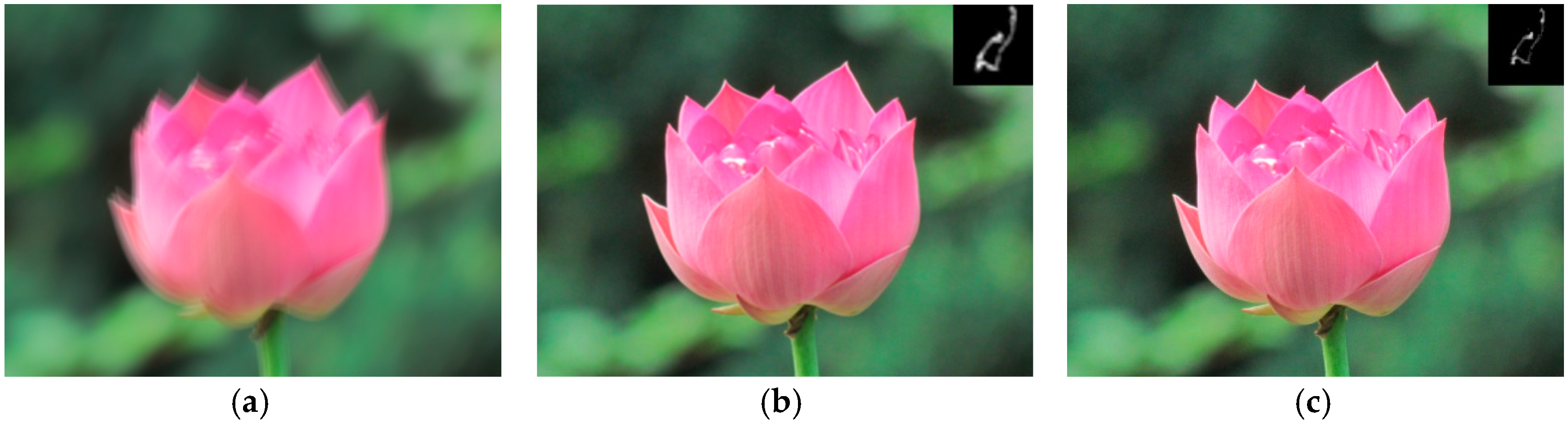

4. Experiments

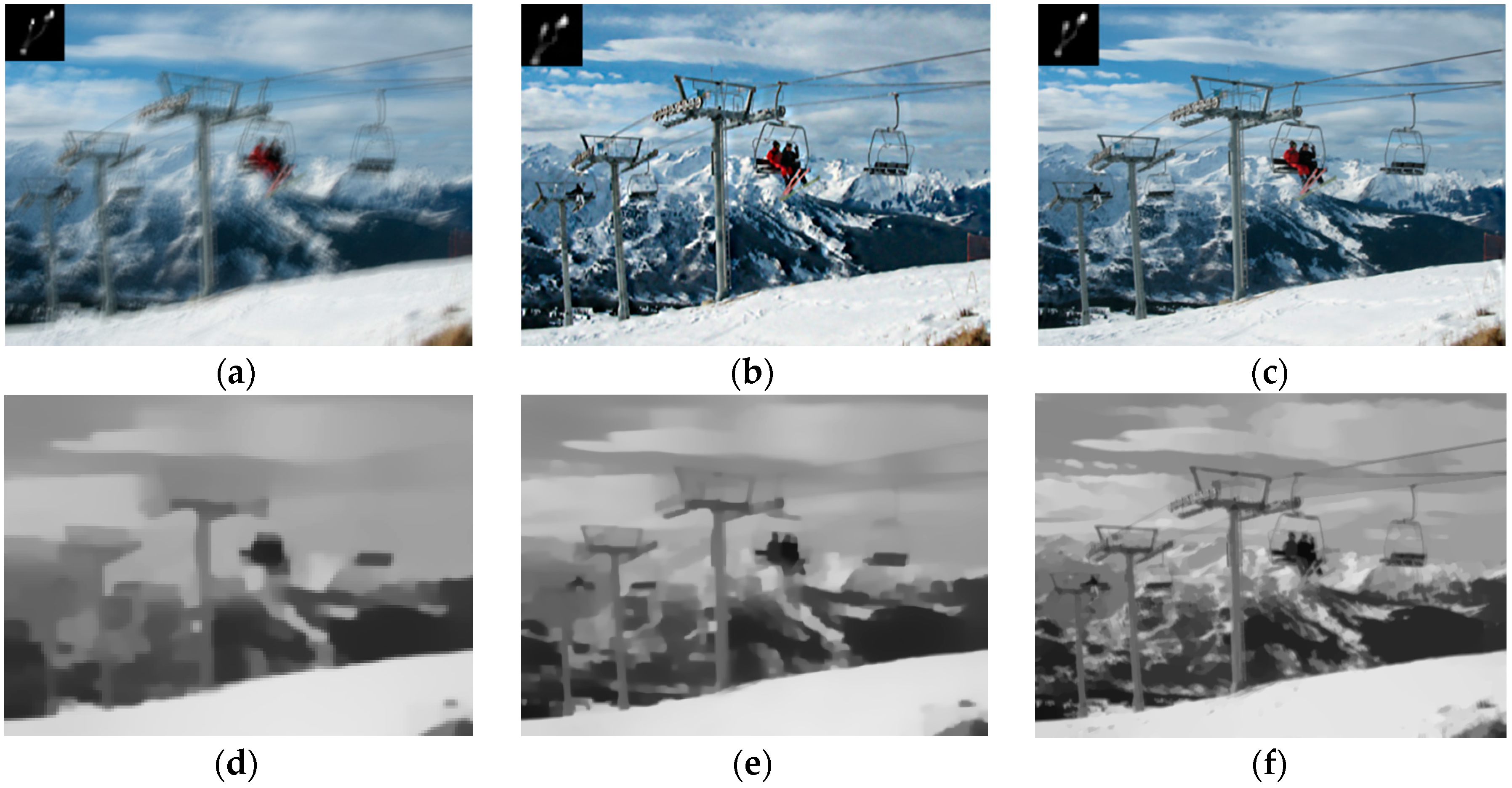

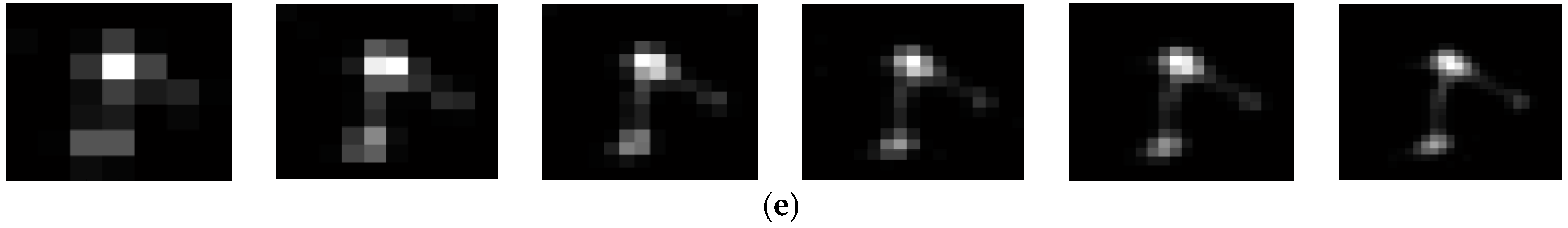

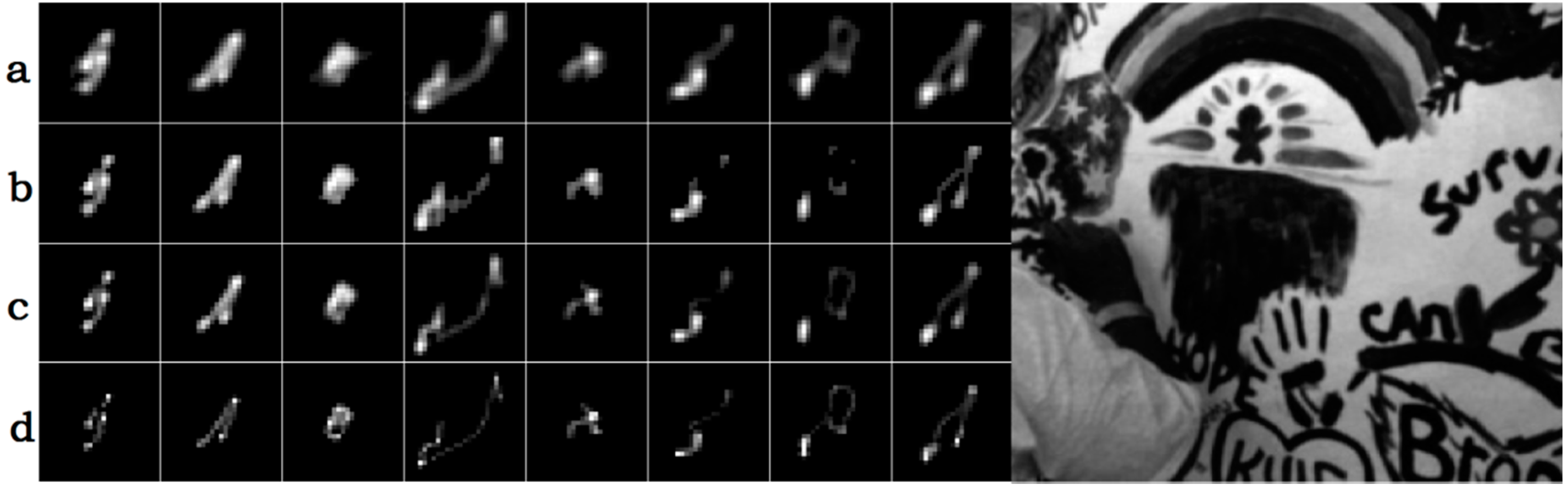

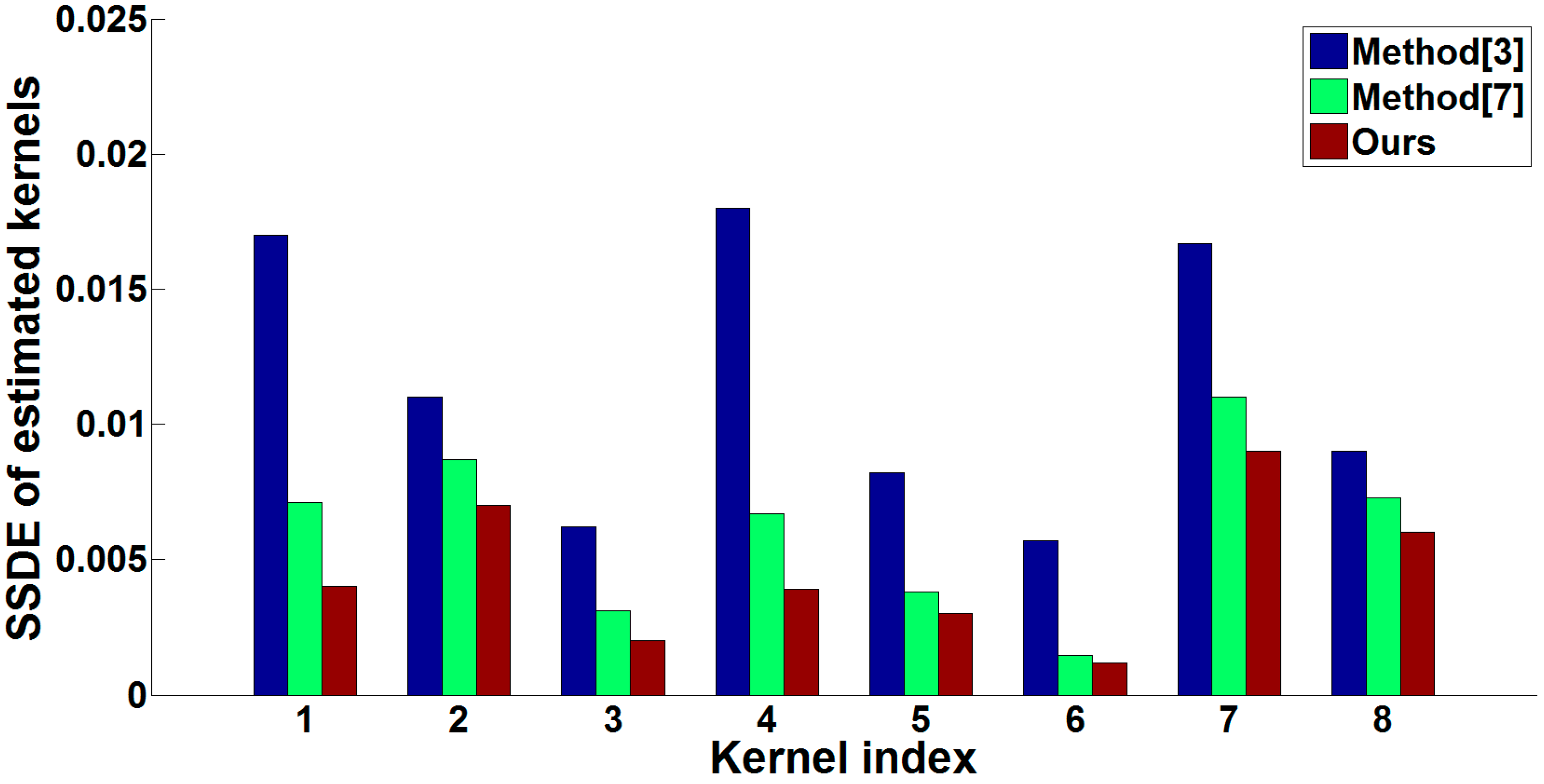

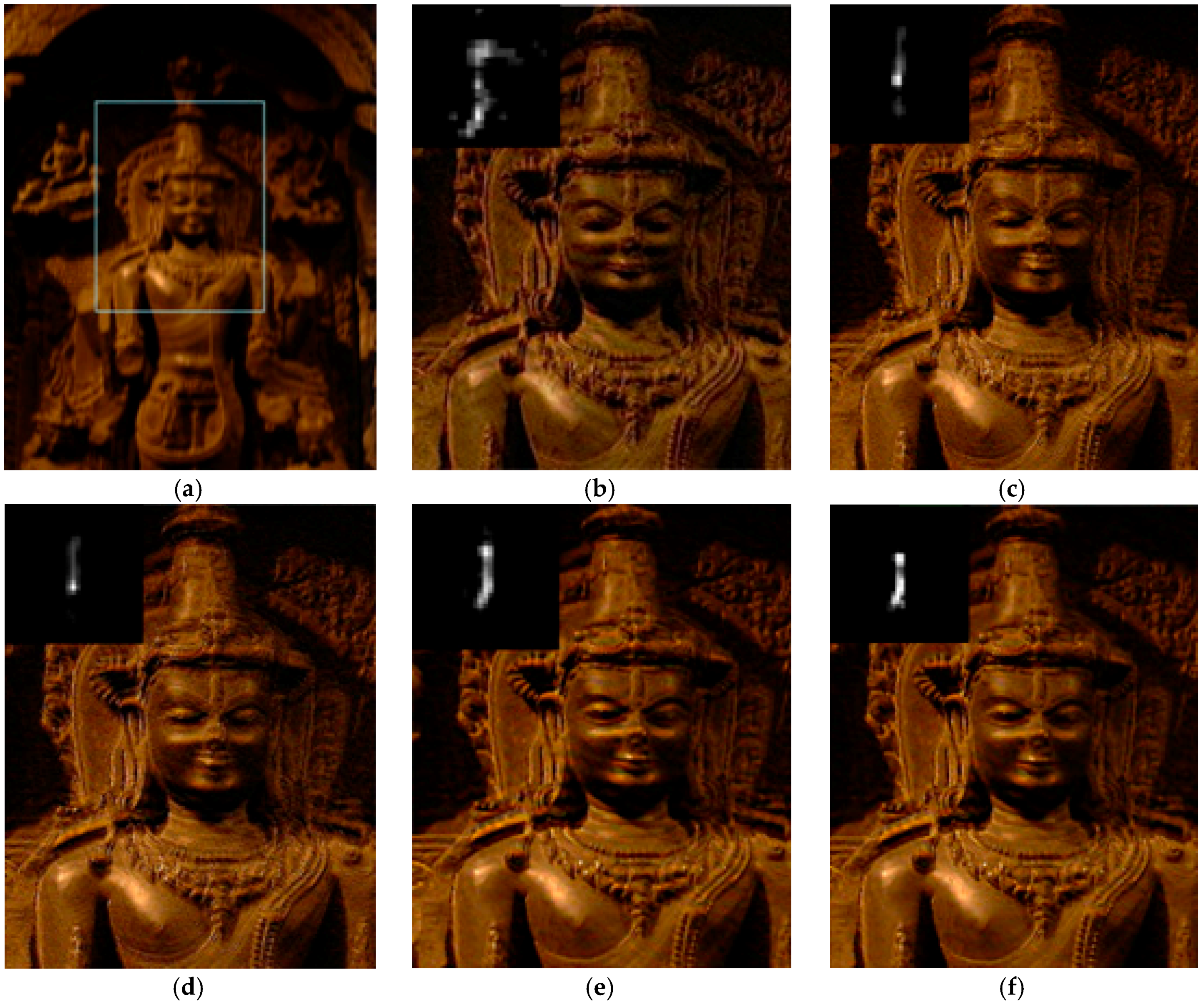

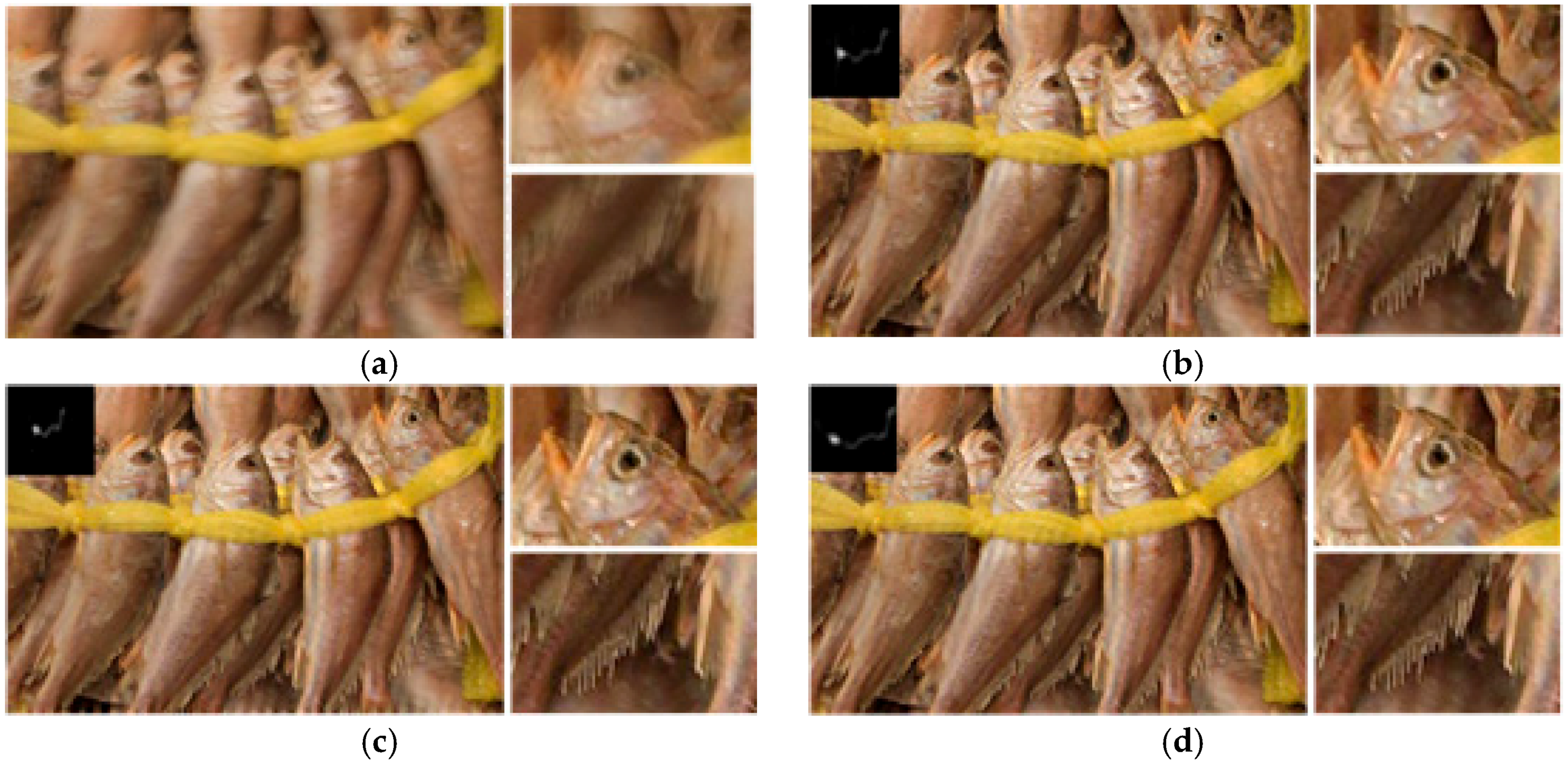

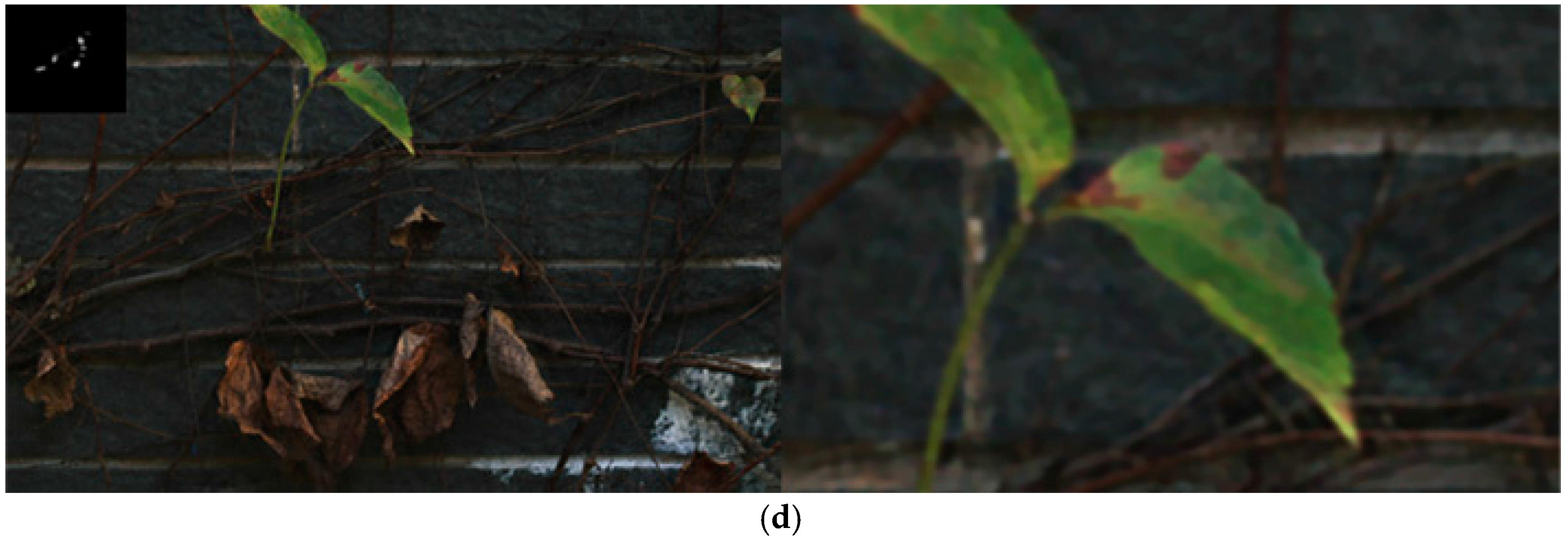

4.1. Experimental Results and Evaluation

4.2. Operation Speed

5. Conclusions

Acknowledgments

Author Contributions

Conflicts of Interest

© 2016 by the authors; licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC-BY) license (http://creativecommons.org/licenses/by/4.0/).

Yang, F.; Huang, Y.; Luo, Y.; Li, L.; Li, H. Robust Image Restoration for Motion Blur of Image Sensors. Sensors 2016, 16, 845. https://doi.org/10.3390/s16060845
