1. Introduction

The Kalman filter and its variations have great potential in many applications involving detection, tracking and control [

1,

2,

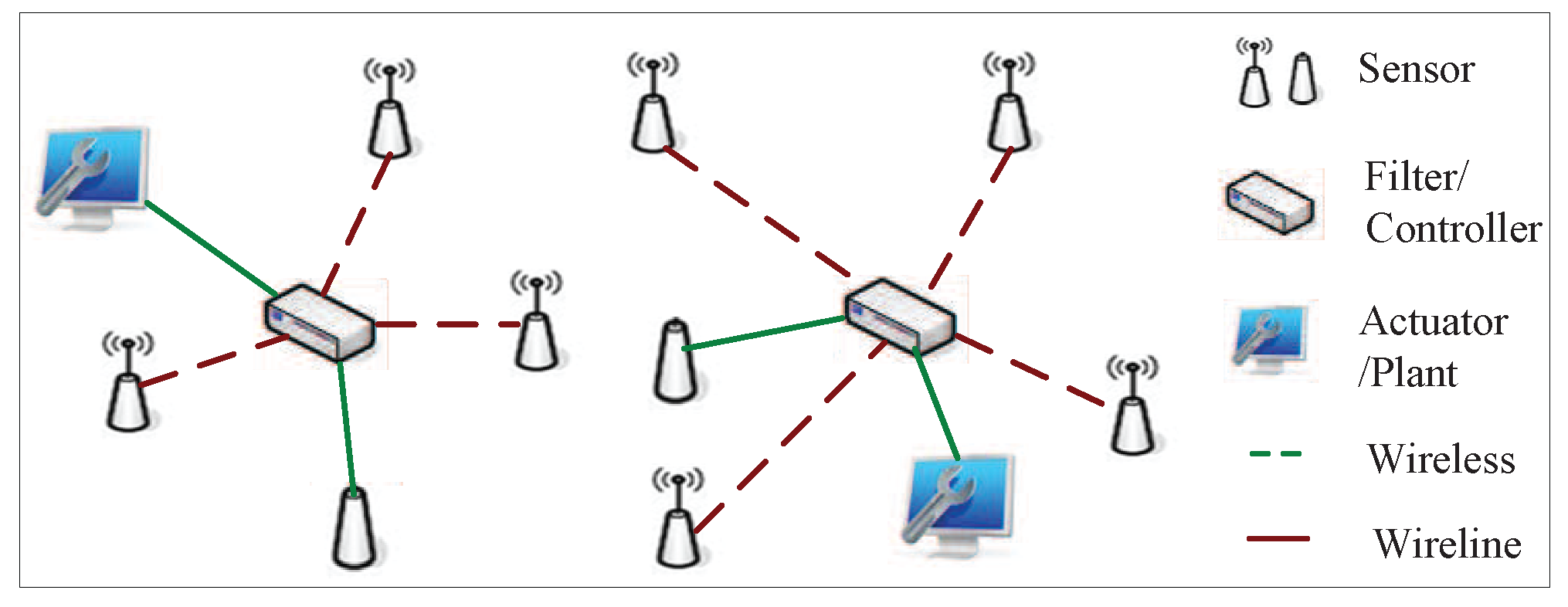

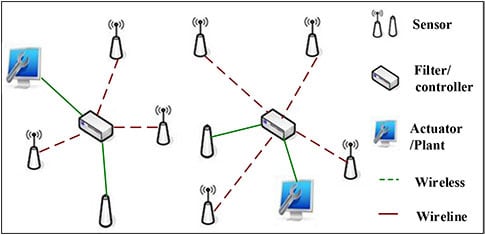

3]. Recently, the problem of filtering for distributed systems has attracted increasing attention due to the advantages, such as low cost, reduced weight and inherent robustness. As shown in

Figure 1, in such distributed systems, sensor measurements and final signal processing usually take place at different physical locations and, thus, require a wireless or wireline communication network to exchange information. In contrast to traditional filtering problems, one main issue of these systems is that packet losses are unavoidable because of congestion and transmission errors in communication channels. How missing data affect the performance of filtering schemes is of significant interest.

Similar to filtering with network packet losses, early work studied the problem of estimation with missing data at certain time points, where the observation may drop the signal that has errors, i.e., such an observation may contain noise alone. By modeling the uncertainty in the observation process as an independent and identically distributed (i.i.d.) binary random variable sequence, the author in [4] proposes minimum mean-square error (MMSE) estimators, which are of a recursive form similar to the Kalman filter. In [5], the authors generalize the work of [4] by investigating the filtering problem under the assumption that the uncertainty is not necessarily i.i.d. Moreover, when the data-missing process can be modeled as an i.i.d. sequence with a known probability of signal loss, the work [6] derives sufficient conditions for the uniform asymptotic stability of the MMSE filter. Note that in the scenario of [6], since the error covariance is governed by a deterministic equation, the stability results can be obtained by defining an equivalent system in which the observations contain the signal with probability one.

In recent research on network models, a large number of works have been reported on the stability analysis of Kalman filtering (see, e.g., [7,8,9,10,11,12,13,14,15,16] and the references therein), and most of them account for possible observation losses. In this literature, there are basically two methods to model the packet loss process in network systems. A popular method is to describe the packet loss process as an i.i.d. Bernoulli sequence [17]; recent results on this model include, e.g., [9,10,11]. The second method is to employ a Markov process to model the packet loss phenomenon [18]. Such a model has been adopted in [12,13,14,15,16] to deal with the filtering problem with packet losses. Although filtering stability under the i.i.d. model can usually be analyzed effectively by solving a modified Riccati recursion, an i.i.d. process is inadequate to describe network channels whose states do not vary independently in time. Hence, compared with the i.i.d. model, a notable advantage of a Markovian packet loss model is that it can capture the possible temporal correlation of network channels.

It should be pointed out that almost all of the aforementioned results are obtained under the single sensor assumption. From the viewpoint of state estimation, the single sensor case also covers the multi-sensor setting in which all of the measurements from different sensors are encapsulated together and sent to the remote filter over a common channel. Under the single sensor assumption, the filter either receives the observations in full or loses them completely. Such an assumption may not hold in many practical distributed filtering systems. In these systems, the sensors are usually spread over a wide area, so the measurements coming from different sensors cannot be encoded together and must be sent over multiple different channels. In contrast to the single sensor case, the main difficulty induced by multiple sensors is that observations may be partially lost. For the partial packet loss case, the explicit characterization of stability conditions for the Kalman filter is extremely challenging. By modeling the packet loss processes as i.i.d. Bernoulli sequences, the authors in [19] obtain a sharp transition curve, which is a function of the loss rates and separates the stable and unstable regions of the error covariance matrix, for a couple of special systems, including cases in which the state matrix of the system has a single unstable mode and all observation matrices are invertible. For other systems, however, they cannot find the sharp transition curve explicitly and provide only a lower bound and an upper bound for it. Moreover, when the data dropout process is Markovian, the work [20] offers a sufficient condition for covariance stability, but not a necessary and sufficient one. In addition, the condition imposed in [20] is very restrictive, namely that the observation matrix is invertible. More recently, for the scenario of multiple sensors, the authors in [21] derive necessary and sufficient stability conditions for the estimation error covariance. However, they are unable to express the stability conditions as simple inequalities, except for second-order systems under the i.i.d. model. So far, few results in the existing literature address explicit filtering stability conditions for multi-sensor systems with partial Markovian packet losses. This is the motivation of the present paper.

Our work studies the stability of the Kalman filter in the multi-sensor case. We extend the results in [12] by allowing the measurements coming from different sensors to be sent over multiple channels. In our scenario, the whole observation consists of multiple packets coming from the corresponding sensors, and part or all of the observation may be lost. For simplicity, we first assume that the system has two sensors and give necessary and sufficient stability conditions for the error covariance matrix. Then, we extend our results to the more general multi-sensor scenario, in which different sensors are possibly subject to different packet losses. It is worth pointing out that, similar to [12], this work gives the stability criterion in the form of a simple inequality whose parameters are the spectral radius of the system state matrix and the transition probabilities of the Markovian packet loss processes. Thus, based on our stability criterion, one can understand how the packet losses affect stability.

The remainder of the paper is organized as follows. Section 2 formulates the problem under consideration. In Section 3, a necessary condition for stability is derived under standard assumptions, and the necessary condition is shown to be also sufficient for certain classes of systems. Differences between our results and previous ones are discussed in Section 4. Section 5 presents simulation examples, and some concluding remarks are drawn in Section 6.

Notation: throughout this paper, for a symmetric matrix $P$, $P > 0$ ($P \geq 0$) denotes that $P$ is a positive definite (semi-definite) matrix, and the relationship $P_1 > P_2$ ($P_1 \geq P_2$) means $P_1 - P_2 > 0$ ($P_1 - P_2 \geq 0$). Matrix $I$ represents the identity matrix with a compatible dimension. $\mathbb{N}$, $\mathbb{R}$ and $\mathbb{C}$ are used to mean the sets of nonnegative integers, real numbers and complex numbers, respectively. $\mathrm{tr}(\cdot)$ denotes the matrix trace, and $\rho(\cdot)$ represents the spectral radius of a matrix.

2. Problem Formulation

Consider the following linear discrete-time stochastic system:

$$x_{k+1} = A x_k + w_k, \tag{1}$$

$$y_k = C x_k + v_k, \tag{2}$$

where $x_k$ is the system state and $y_k$ is the measured output. $A$ and $C$ are constant matrices of compatible dimensions, and $C$ is of full row rank. Both $w_k$ and $v_k$ are white Gaussian noises with zero means and covariance matrices $Q$ and $R$, respectively. Assume that the initial state $x_0$ is also a random Gaussian vector of mean $\bar{x}_0$ and covariance $P_0$. Moreover, $w_k$, $v_k$ and $x_0$ are mutually independent.

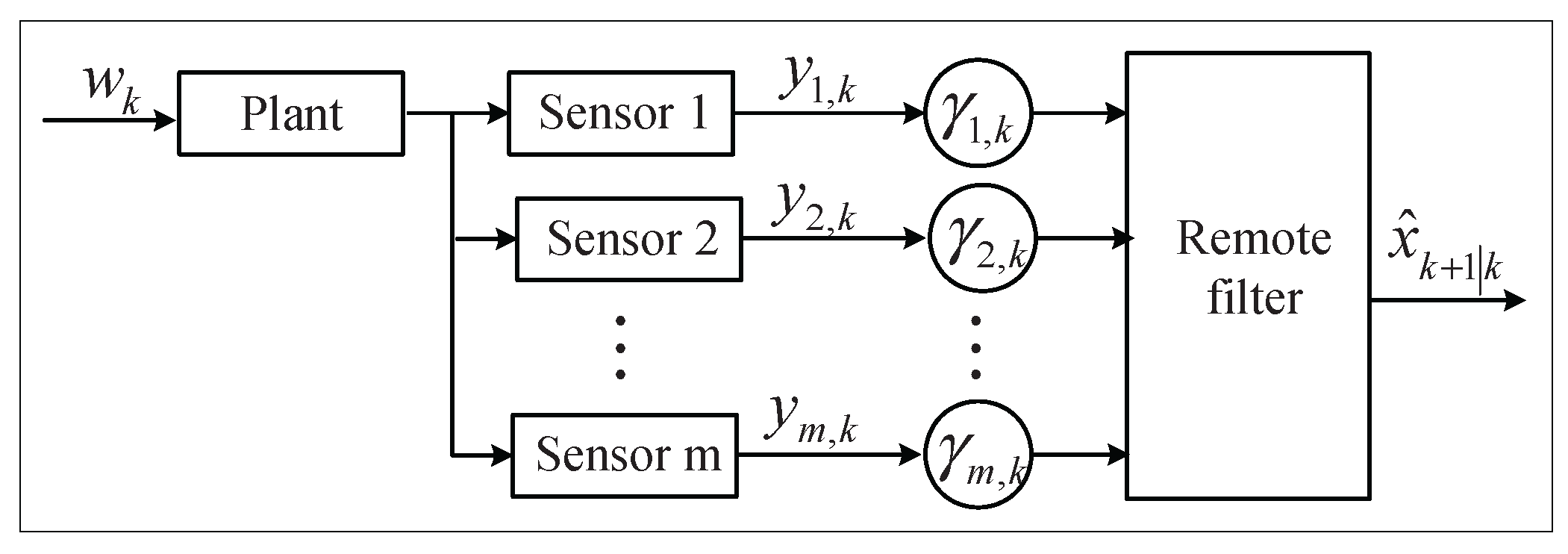

The estimation problem under consideration is illustrated in

Figure 2. For simplicity, we first assume that the networked system has two sensors. All of our results extend readily to the case of more sensors. Under the two-sensor assumption, the observation

consists of two parts

and

, which are transmitted through different channels. Then, Equation (2) can be rewritten as follows:

where

and

. The covariance matrices of

and

are

and

, respectively. Comparing with Equation (2), it is clear that:

,

, and

. Under our scenario, the sensor measurements

and

are transmitted to the remote filter via different unreliable communication channels. Due to fading and/or congestion, the communication channels may be subject to random packet losses. Two time-homogeneous binary Markov chains

and

are adopted to describe, respectively, the packet loss processes in the two channels. Such a Markov model is more general and realistic than the i.i.d. case studied in [19], since a Markov process can capture the temporal correlation of the channel variation. We assume that

,

, is received correctly in time step

k if

, while there is a packet loss if

. In addition, we denote the transition probability matrix of

as follows:

where

and

, respectively, are the recovery rate and failure rate of the

channel. It is further assumed that

, so that the Markov chain

is ergodic. The distributions of the process

are determined by the corresponding channel gains, bit-rates, power levels and temporal correlation. It is obvious that a smaller value of

and a larger value of

indicate that the

channel is more reliable. To avoid any trivial case, we assume that

and

are independent for every

k and

.
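The two-state channel model described above can be illustrated with a short simulation. This is a minimal sketch under stated assumptions: the names `q` (recovery rate, lost to received) and `p` (failure rate, received to lost), the state coding (0 = lost, 1 = received), the requirement that both rates lie strictly between 0 and 1, and the numerical values are all illustrative choices rather than the paper's notation.

```python
import numpy as np

def transition_matrix(q, p):
    """Transition matrix of one binary channel chain.

    Assumed coding: state 0 = packet lost, state 1 = packet received.
    q = recovery rate P(received | lost), p = failure rate P(lost | received).
    """
    if not (0.0 < q < 1.0 and 0.0 < p < 1.0):
        raise ValueError("q and p must lie strictly between 0 and 1")
    return np.array([[1.0 - q, q],
                     [p, 1.0 - p]])

def stationary_distribution(q, p):
    """Stationary probabilities (lost, received) of the ergodic chain."""
    return np.array([p, q]) / (p + q)

def simulate_channel(q, p, steps, rng, initial_state=1):
    """Sample one path of the channel state (1 = received, 0 = lost)."""
    T = transition_matrix(q, p)
    path = np.empty(steps, dtype=int)
    state = initial_state
    for k in range(steps):
        path[k] = state
        state = rng.choice(2, p=T[state])  # draw the next state from row `state`
    return path

rng = np.random.default_rng(0)
path = simulate_channel(q=0.7, p=0.2, steps=20000, rng=rng)
print("empirical reception rate :", path.mean())
print("stationary reception rate:", stationary_distribution(0.7, 0.2)[1])
```

A larger `q` and a smaller `p` push the stationary reception probability `q / (p + q)` toward one, which matches the observation that such a channel is more reliable.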

Introduce

to indicate the packet loss status in the whole network at time step

k. In view of the above analysis and assumption, we easily obtain that the process

is a four-state Markov chain. To be more specific, the following indicator function is given:

Then, it follows from Equations (4) and (5) that the Markov process

has a transition probability matrix given by:
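Because the two channel chains are assumed independent, a joint transition matrix of this kind can be illustrated as the Kronecker product of the two per-channel matrices. The sketch below assumes the joint state ordering (lost, lost), (lost, received), (received, lost), (received, received) and hypothetical rates; both the ordering and the numbers are illustrative assumptions, not the paper's notation.

```python
import numpy as np

def channel_matrix(q, p):
    """Per-channel transition matrix; state 0 = lost, state 1 = received."""
    return np.array([[1.0 - q, q],
                     [p, 1.0 - p]])

# Hypothetical recovery (q) and failure (p) rates for the two channels.
T1 = channel_matrix(q=0.7, p=0.2)
T2 = channel_matrix(q=0.5, p=0.1)

# Joint state (s1, s2) is mapped to index 2*s1 + s2, so that
# T_joint[2*i + k, 2*j + l] = T1[i, j] * T2[k, l] under independence.
T_joint = np.kron(T1, T2)

print(T_joint)
print("row sums:", T_joint.sum(axis=1))
```

For instance, the entry for moving from "both lost" to "both received" is the product of the two recovery rates.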

In order to simplify the analysis, we classify the packet loss status of the network into two categories by the same method as in [20]:

Associated with Equation (7), we, thus, model the packet loss status

as a two-state Markov process with transition probability matrix:

where

is the state space of the Markov chain. Note that with Equation (6), we further obtain that

, which ensures the ergodic property of the above two-state Markov process. Specifically, one can easily derive the following equation:

Remark 1. The problem of mean square stability for Kalman filtering with Markovian packet losses has been studied in [12], where the single sensor case is considered. Our paper generalizes [12] by allowing partial observation losses. In our scenario, the measurements coming from different sensors can be transmitted via different communication channels. Our results are therefore more general in practical applications, since the adopted model can be easily adjusted to describe the single sensor situation.

Based on the history

and

, where

and

is the conjugate transpose of

A, one can define the filtering and one-step prediction equations corresponding to the optimal estimation as follows:

The associated estimation and prediction error covariance matrices can be written as:

Our approach is to analyze the estimation error of

; hence, the details for the recursion of

and

are omitted here. Following [19], we have the following recursions for the estimation error covariance matrices:

In addition, the initial value is

. For simplicity of exposition, we slightly abuse the notation by substituting

with

. To analyze the statistical properties of the estimation error covariance matrix

, we recall the following definition from [12].

Definition 1. The sequence is said to be stable if for any , where the expectation is taken on with the initial value being any Bernoulli random variable.

Here,

denotes the mean of prediction error covariance at time step

k. Our objective is to derive necessary and sufficient conditions for the stability of

under the multi-sensor environment with partial observation losses. For the Kalman filter with partial Markovian packet losses, stability has been studied in [20], but that approach imposes stronger restrictions on the system, so the results are conservative. In this paper, we propose a completely different method to obtain the main results. To make the model nontrivial, we make the following assumptions throughout the paper.

Assumption 1. All of the eigenvalues of A lie outside the unit circle.

Assumption 2. The system is observable.

Assumption 3. , Q and R are all identity matrices with appropriate dimensions.
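Assumptions 1 and 2 are straightforward to check numerically for a given pair of system matrices. The sketch below is illustrative only: the helper names and the example matrices `A` and `C` are hypothetical, and observability is tested with the standard rank condition on the observability matrix.

```python
import numpy as np

def all_eigenvalues_outside_unit_circle(A):
    """Assumption 1: every eigenvalue of A has modulus greater than one."""
    return bool(np.all(np.abs(np.linalg.eigvals(A)) > 1.0))

def is_observable(A, C):
    """Assumption 2: rank of [C; CA; ...; CA^(n-1)] equals the state dimension n."""
    n = A.shape[0]
    blocks = [C]
    for _ in range(n - 1):
        blocks.append(blocks[-1] @ A)
    observability_matrix = np.vstack(blocks)
    return bool(np.linalg.matrix_rank(observability_matrix) == n)

# Hypothetical second-order system with two scalar sensors stacked in C.
A = np.array([[1.3, 0.0],
              [0.0, 1.1]])
C = np.array([[1.0, 0.0],
              [0.0, 1.0]])

print("Assumption 1 holds:", all_eigenvalues_outside_unit_circle(A))
print("Assumption 2 holds:", is_observable(A, C))
```

With a diagonal `A` as above, dropping the second row of `C` leaves the second state unobserved, so the rank test fails for that single-sensor pair.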

5. Numerical Examples

In this section, for the purpose of illustrating the results from the previous sections, we present some simple examples.

Example 1. Consider a second order diagonal system with parameters expressed by: Our aim is to compare the stable and unstable regions determined by our stability criteria and other existing stability results. For this system, [

19] proposes that one needs

and

(upper bound) to guarantee the stability of the error covariance matrix; furthermore, one can conclude that the Kalman filter is unstable if the recovery rate pair

satisfies

(lower bound). As shown in

Figure 3, the region above the upper bound (red line) is stable, while the region below the lower bound (blue dotted line) is unstable. However, Figure 3 also shows that the stability of the region between the upper and lower bounds cannot be determined from the results of [19]. Recalling the stability result in [20], it is easy to check that the filter can achieve stability if only

falls above the lower bound, while the stability of the region below the lower bound cannot be determined, since [20] gives only a sufficient condition for stability. In this paper, we give a necessary and sufficient condition for stability. For this model, by Theorem 6, the region above the lower bound is stable, and the region below it is unstable.

Example 2. Let a higher-order system be expressed by: In order to guarantee stability, the recovery rate pair

should satisfy

by Theorem 6. It is easy to check that the parameter pair

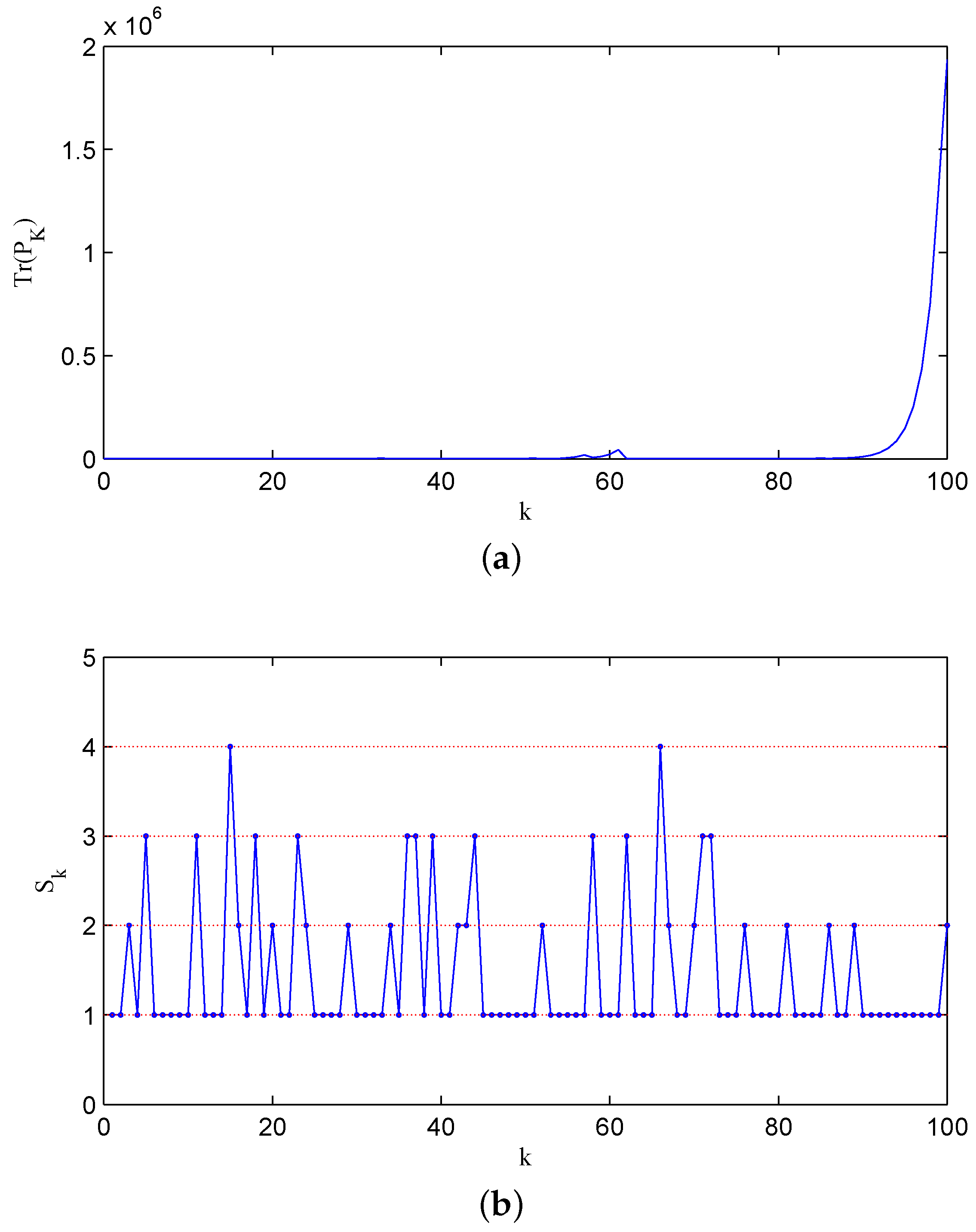

ensures the stability for the error covariance matrix of the filter.

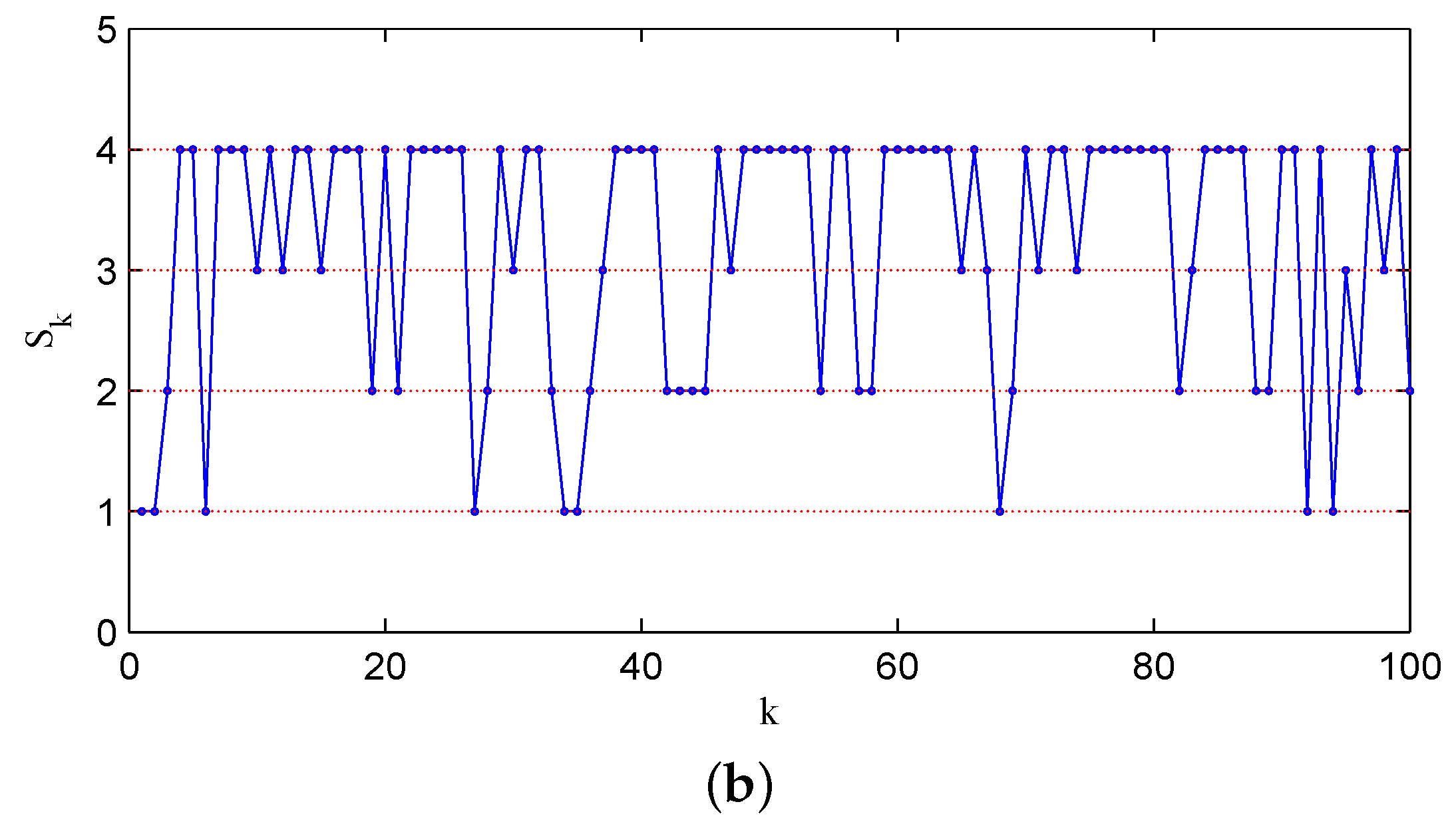

Figure 4a shows the evolution of the error covariance along that sample path, and in Figure 4b, the associated state of the two channels jumping among

,

,

and

is displayed, where, for simplicity, we denote the states

,

,

and

as numbers 1, 2, 3 and 4, respectively. For comparison, we display a sample path with

in

Figure 5a, and the associated channel state is shown in

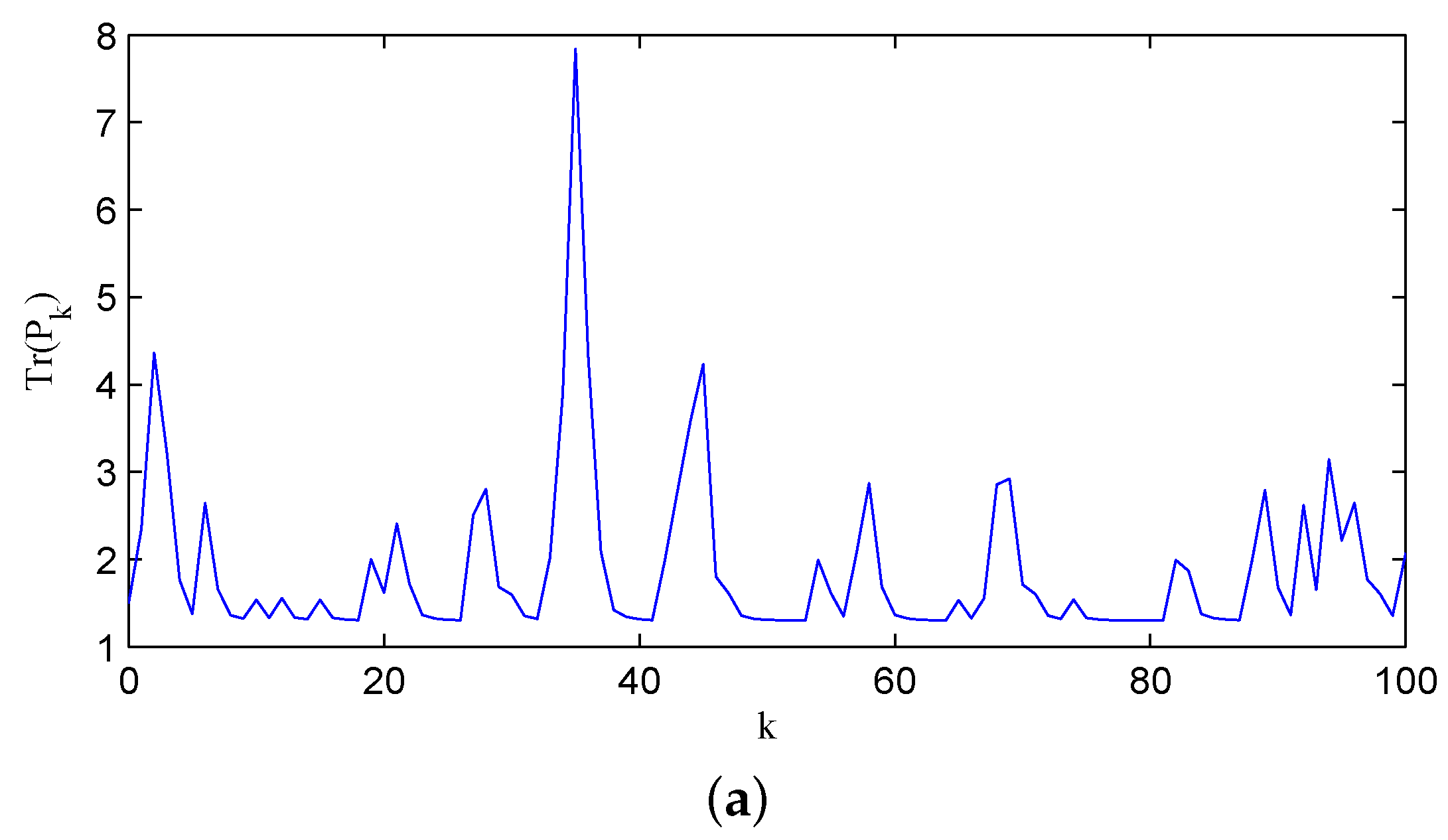

Figure 5b. The above two figures illustrate that with a lower recovery rate pair, the error covariance has more chances to diverge.
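Sample paths of this kind can be generated along the following lines. This is a minimal sketch and not the system of Example 2: the matrices `A`, `C_list`, the rates `q` and `p`, and the horizon are hypothetical placeholders. The update is assumed to be the standard Riccati-type recursion for the prediction error covariance in which lost measurements are simply dropped, with Q and the per-sensor noise covariances set to identity in line with Assumption 3.

```python
import numpy as np

def step_covariance(P, A, Q, C_list, R_list, received):
    """One step of the prediction error covariance using only received packets."""
    P_open = A @ P @ A.T + Q
    kept = [i for i, g in enumerate(received) if g]
    if not kept:
        return P_open  # every packet lost: open-loop propagation only
    C = np.vstack([C_list[i] for i in kept])
    m = C.shape[0]
    R = np.zeros((m, m))  # block-diagonal noise covariance of received sensors
    row = 0
    for i in kept:
        r = R_list[i].shape[0]
        R[row:row + r, row:row + r] = R_list[i]
        row += r
    S = C @ P @ C.T + R
    correction = A @ P @ C.T @ np.linalg.solve(S, C @ P @ A.T)
    return P_open - correction

def next_state(state, q, p, rng):
    """Advance one binary channel: 1 = received, 0 = lost."""
    if state == 1:
        return 0 if rng.random() < p else 1
    return 1 if rng.random() < q else 0

def sample_path(A, Q, C_list, R_list, q, p, steps, rng):
    """Trace of the prediction error covariance along one channel realization."""
    P = np.eye(A.shape[0])
    state = [1, 1]
    traces = np.empty(steps)
    for k in range(steps):
        P = step_covariance(P, A, Q, C_list, R_list, state)
        traces[k] = np.trace(P)
        state = [next_state(s, q[i], p[i], rng) for i, s in enumerate(state)]
    return traces

# Hypothetical two-sensor system (illustrative values only).
A = np.diag([1.2, 1.1])
Q = np.eye(2)
C_list = [np.array([[1.0, 0.0]]), np.array([[0.0, 1.0]])]
R_list = [np.eye(1), np.eye(1)]

rng = np.random.default_rng(1)
traces_high = sample_path(A, Q, C_list, R_list, q=(0.8, 0.8), p=(0.2, 0.2), steps=200, rng=rng)
traces_low = sample_path(A, Q, C_list, R_list, q=(0.2, 0.2), p=(0.2, 0.2), steps=200, rng=rng)
print("peak trace, higher recovery rates:", traces_high.max())
print("peak trace, lower recovery rates :", traces_low.max())
```

Plotting `traces_high` and `traces_low` against the time index gives pictures analogous to the (a) panels discussed above; recording `state` at each step would give the corresponding channel-state panels.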

Example 3. The results in Theorem 6 can be extended to the system with marginally unstable or/and stable eigenvalues by Lemma 8. Consider an example system specified by . The other parameters are chosen the same as those in Example 2. It is easy to check that is sufficient to guarantee the stability of the error covariance matrix. Figure 6a shows a typical sample path with the recovery rate pair , while Figure 6b displays the associated channel state.