Multiple Human Tracking Using Binary Infrared Sensors

Abstract

1. Introduction

2. Model of IR Sensor System

3. Proposed Algorithm

3.1. Notations

- |*|: The number of elements in list *.

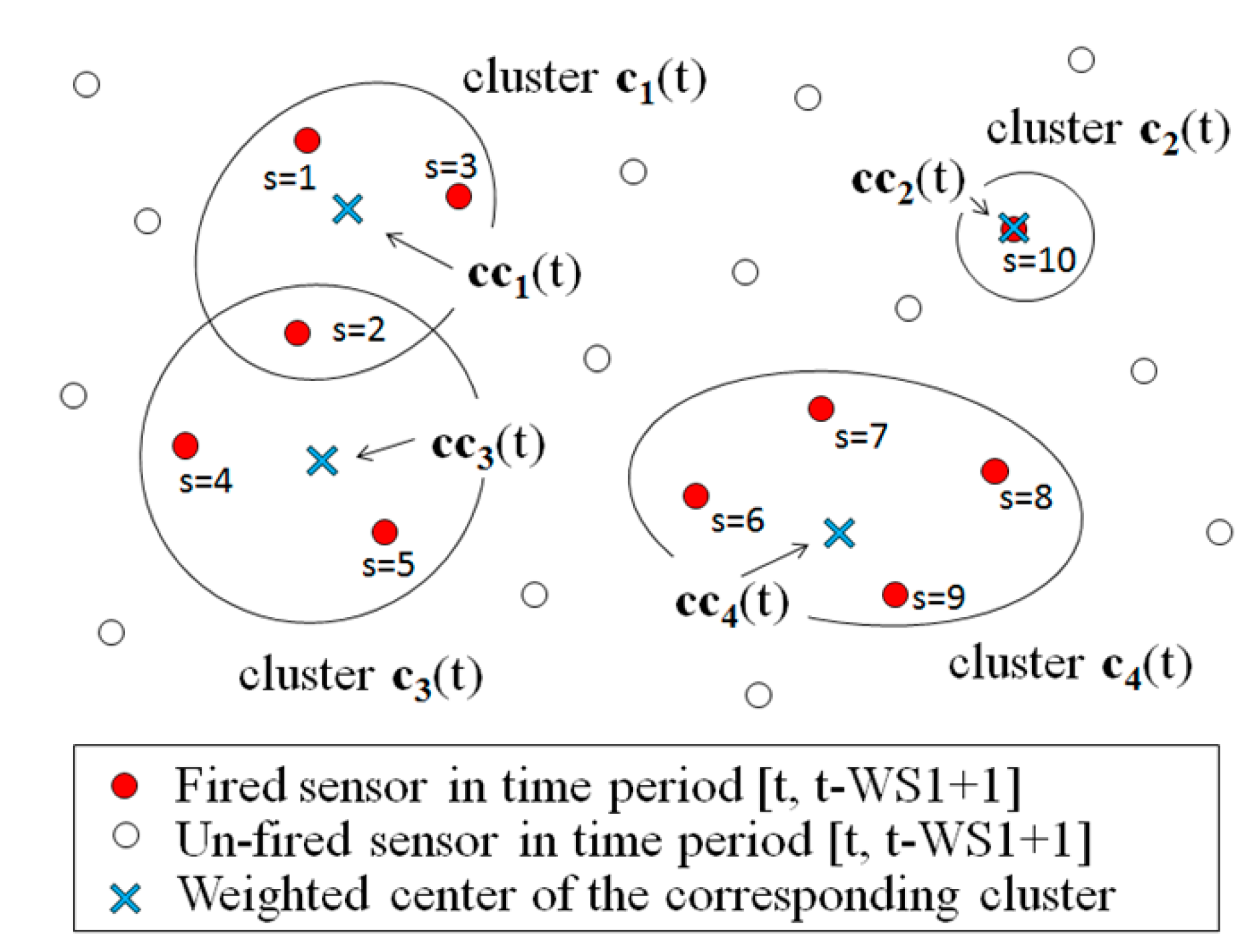

- CC(t): The set of weighted center coordinates of the clusters at time t. CC(t) = {cci(t) | i = 1, …, Cmax(t)}, where cci(t) = (x, y); its components are referred to as cci(t).x and cci(t).y.

- Cmax(t): The number of clusters at time t.

- ds(t): A binary sensed datum obtained by IR sensor s at time t. ds(t) ∈ {0, 1}.

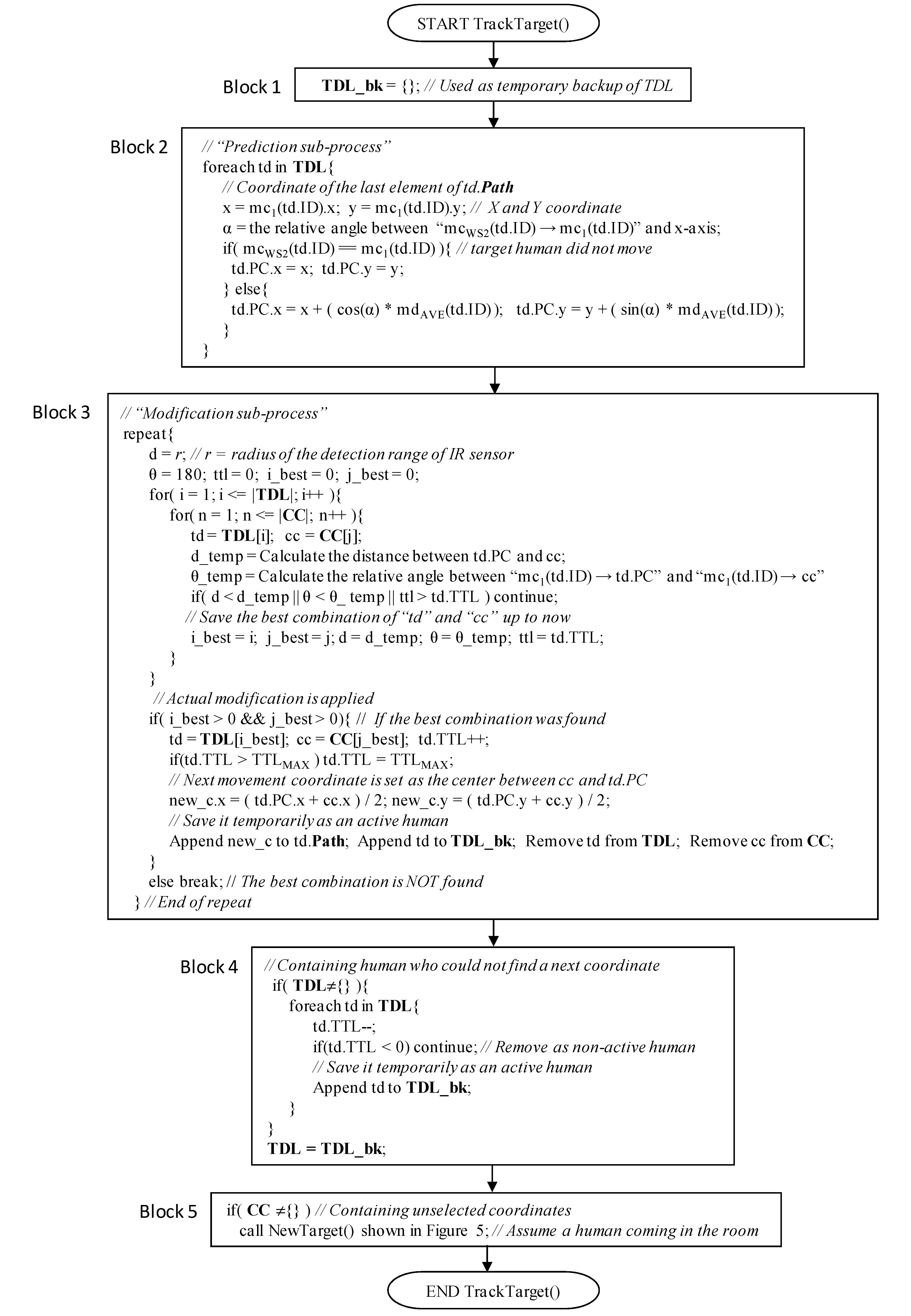

- mdAVE(td.ID): The average movement distance of target human “td.ID”. The details are described later.

- mvt(td.ID): The set of the WS2 most recently estimated coordinates in td.Path of target human “td.ID” at time t. mvt(td.ID) = {mci(td.ID) | i = 1, 2, …, WS2}, where mci(td.ID) = (x, y); its components are referred to as mci(td.ID).x and mci(td.ID).y. mc1(td.ID) is more recent than mc2(td.ID).

- MV(td.ID): The vector from coordinate mcWS2(td.ID) to coordinate mc1(td.ID), i.e., mcWS2(td.ID) → mc1(td.ID). It is referred to as the movement vector of target human “td.ID” at time t.

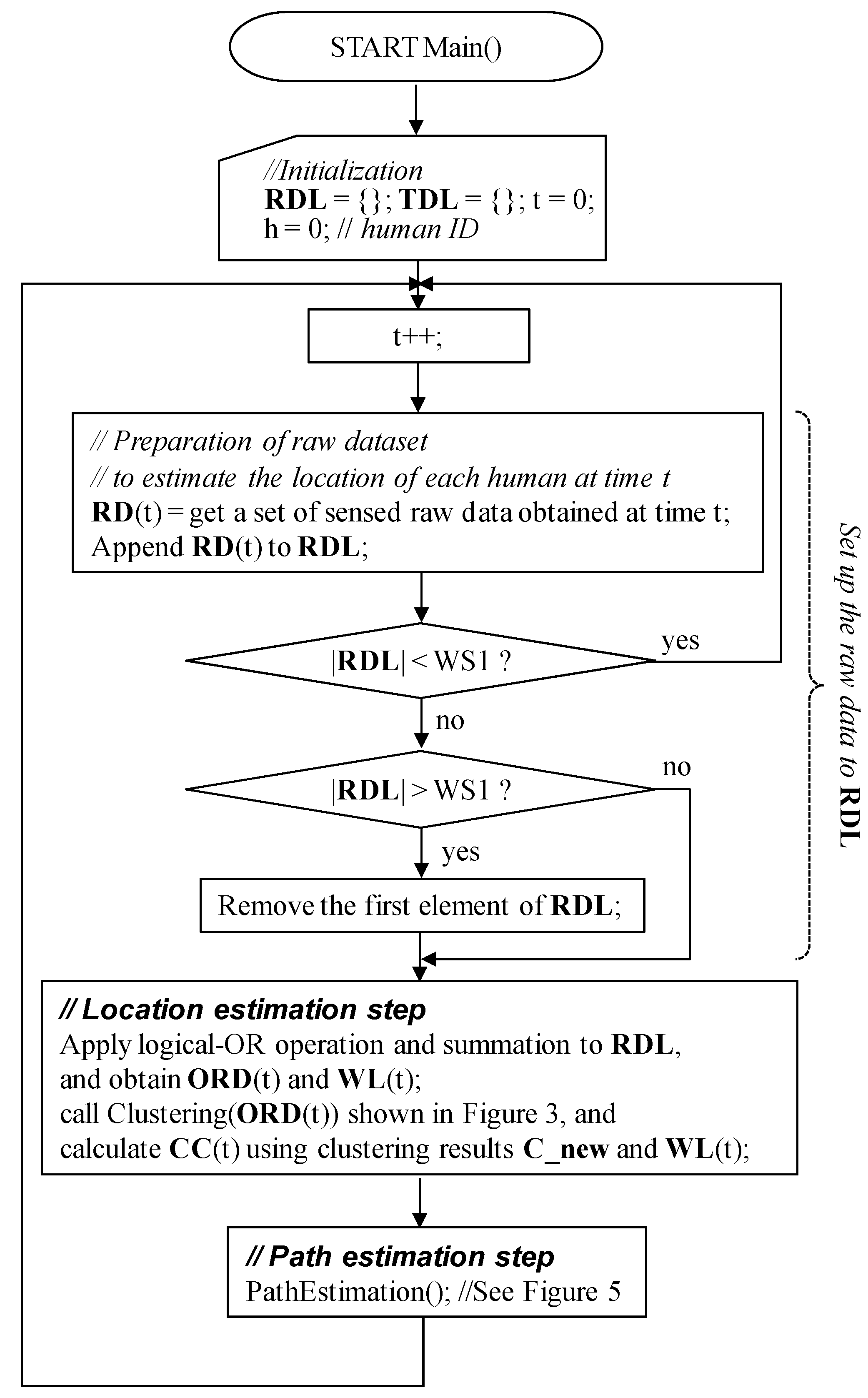

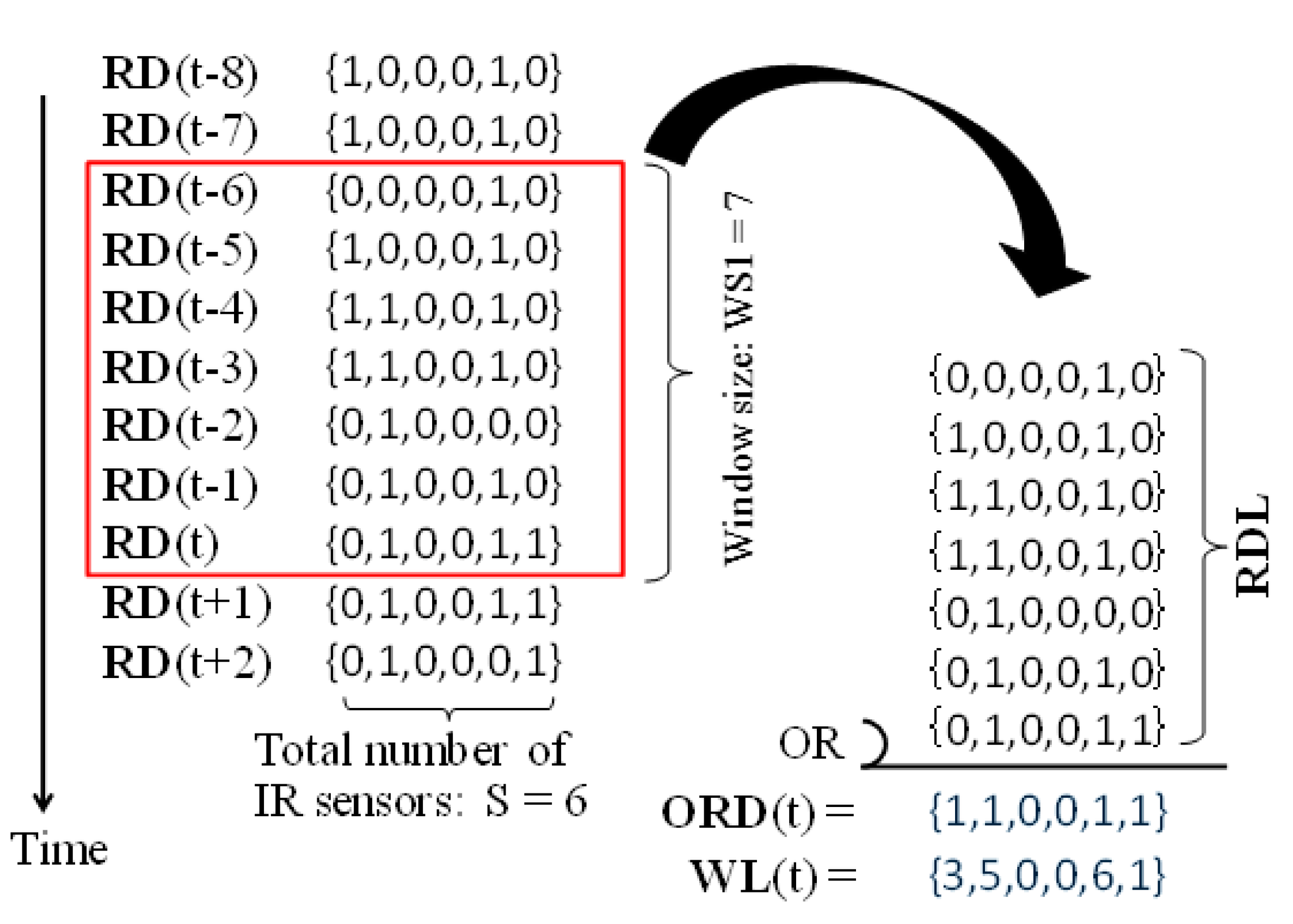

- ORD(t): A binary dataset obtained by applying the logical-OR operation to RDL at time t. ORD(t) = {os(t) | s = 1, …, S}, where os(t) = ds(t-WS1 + 1) ∨ ds(t-WS1 + 2) ∨ … ∨ ds(t).

- r: The radius of the detection range of each IR sensor.

- RD(t): A set of sensed raw data at time t. RD(t) = {ds(t) | s = 1, …, S}. |RD(t)| = S.

- RDL: A list containing sets of the sensed raw data. At time t, RDL = {RD(t-WS1 + 1), RD(t-WS1 + 2), …, RD(t-1), RD(t)}. |RDL| = WS1.

- S: The total number of IR sensors. S > 0.

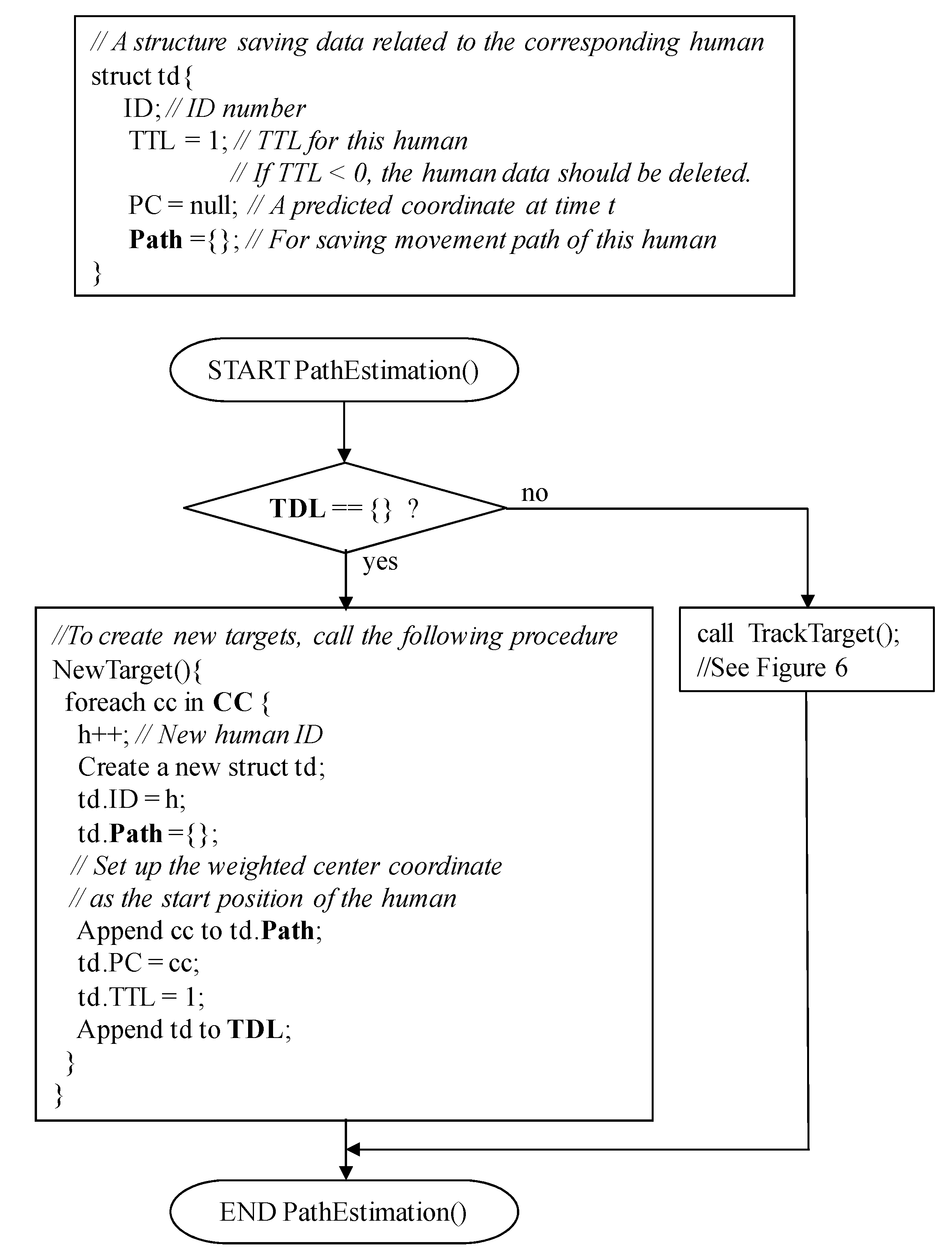

- td: A data structure representing a target human. It contains ID, TTL, PC, and Path. They are referred to as td.ID, td.TTL, td.PC, and td.Path, respectively.

- td.ID: A human ID. This is an integer value. td.ID > 0.

- td.PC: The predicted coordinate of target human “td.ID” at time t, where td.PC = (x, y); its components are referred to as td.PC.x and td.PC.y.

- td.Path: A list of the estimated route coordinates of target human “td.ID”. By connecting all elements in this list from the first to the last, the estimated movement path of human “td.ID” is obtained.

- td.TTL: The lifetime of target human “td.ID”. This is an integer value. The initial value of td.TTL is 1, and its maximum value is TTLMAX.

- TDL: A list of target humans who currently exist in the room.

- TTLMAX: A constant integer value. The maximum value of TTL (time to live). TTLMAX > 0.

- WL(t): The set of sensor weights at time t. WL(t) = {ws(t) | s = 1, …, S}.

- ws(t): The weight of sensor s at time t. ws(t) = . This is used to calculate the weighted center coordinate of the cluster to which sensor s belongs. The coordinate calculation method will be defined later.

- WS1: Window size, i.e., the number of sensed raw datasets to which the logical-OR operation is applied. This is a given integer value. WS1 > 0.

- WS2: Window size, i.e., the number of elements (coordinates) in mvt(td.ID), from which the movement vector MV(td.ID) is constructed. This is a given integer value. WS2 > 1.
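To make the notation more concrete, the following is a minimal Python sketch of three of the definitions above: the td data structure, the windowed logical-OR that yields ORD(t) from RDL, and the movement vector MV(td.ID). It is an illustration under stated assumptions, not the authors' implementation. The names `TargetHuman`, `or_window`, and `movement_vector` are hypothetical, the default value of PC is a placeholder, and td.Path is assumed to be stored oldest-first, so that its last element corresponds to mc1(td.ID).

```python
from dataclasses import dataclass, field
from typing import List, Tuple

Coord = Tuple[float, float]  # an (x, y) coordinate


@dataclass
class TargetHuman:
    """Hypothetical mirror of the td structure: ID, TTL, PC, and Path."""
    ID: int                       # td.ID > 0
    TTL: int = 1                  # initial lifetime is 1; capped at TTLMAX elsewhere
    PC: Coord = (0.0, 0.0)        # predicted coordinate (placeholder default)
    Path: List[Coord] = field(default_factory=list)  # assumed oldest-first


def or_window(rdl: List[List[int]]) -> List[int]:
    """ORD(t): per-sensor logical OR over the WS1 raw datasets held in RDL."""
    # zip(*rdl) groups the WS1 readings of each sensor s into one column.
    return [int(any(column)) for column in zip(*rdl)]


def movement_vector(path: List[Coord], ws2: int) -> Coord:
    """MV(td.ID): vector from mcWS2 (oldest in the window) to mc1 (most recent)."""
    if ws2 < 2 or len(path) < ws2:
        raise ValueError("need WS2 > 1 and at least WS2 coordinates in Path")
    newest = path[-1]     # mc1, under the oldest-first assumption
    oldest = path[-ws2]   # mcWS2
    return (newest[0] - oldest[0], newest[1] - oldest[1])


# Example with WS1 = 3 raw datasets from S = 4 sensors.
rdl = [
    [0, 1, 0, 0],  # RD(t-2)
    [0, 1, 1, 0],  # RD(t-1)
    [0, 0, 1, 0],  # RD(t)
]
ord_t = or_window(rdl)

td = TargetHuman(ID=1, Path=[(0.0, 0.0), (0.5, 0.0), (1.0, 0.5)])
mv = movement_vector(td.Path, ws2=3)
```

In this example a sensor counts as active in ORD(t) if it fired at least once within the window, which is what the logical-OR over RDL expresses. The sensor weight ws(t) is deliberately left out, because its defining expression is not available here.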

3.2. Main Procedure

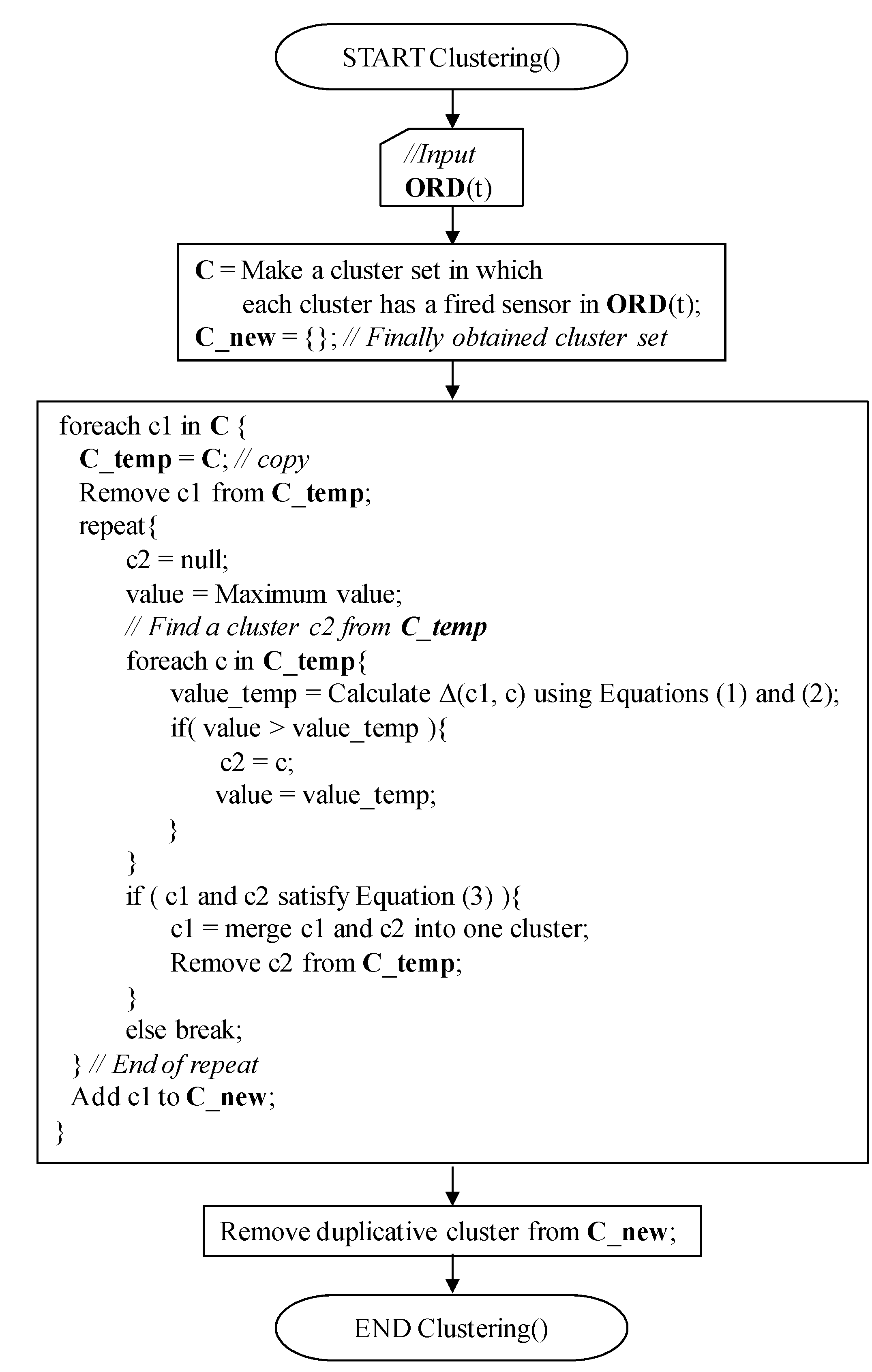

3.3. Location Estimation Step

3.4. Path Estimation Step

4. Evaluation

4.1. Evaluation Environment

| Density (D) | Number of IR sensors (S) |
|---|---|
| 2.0 | 16 |
| 3.0 | 24 |
| 4.0 | 32 |
| 5.0 | 40 |

4.2. Evaluation Results and Remarks

(1) Success estimation rate

| Density (D) / Number of Humans (H) | H = 1 | H = 2 | H = 3 | H = 4 |
|---|---|---|---|---|
| D = 2.0 | 100 | 56 | 34 | 6 |
| | 100 | 66 | 28 | 6 |
| D = 3.0 | 100 | 64 | 22 | 4 |
| | 100 | 60 | 32 | 4 |
| D = 4.0 | 100 | 64 | 24 | 10 |
| | 100 | 46 | 20 | 10 |
| D = 5.0 | 100 | 56 | 28 | 14 |
| | 100 | 56 | 22 | 6 |
| Average | 100.0 | 60.0 | 27.0 | 8.5 |
| | 100.0 | 57.0 | 25.5 | 6.5 |
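The Average rows of the success estimation rate table are consistent with plain column means over the four density settings. The short check below reproduces them. The two values reported for each density are treated as two separate series; the names `upper` and `lower` are hypothetical, since the meaning of the two series is not stated in the table itself.

```python
from statistics import mean

# Success estimation rates copied from the table above, one list per density D,
# ordered as H = 1, 2, 3, 4. "upper" and "lower" are hypothetical labels for
# the first and second value reported for each density.
upper = {
    2.0: [100, 56, 34, 6],
    3.0: [100, 64, 22, 4],
    4.0: [100, 64, 24, 10],
    5.0: [100, 56, 28, 14],
}
lower = {
    2.0: [100, 66, 28, 6],
    3.0: [100, 60, 32, 4],
    4.0: [100, 46, 20, 10],
    5.0: [100, 56, 22, 6],
}


def column_means(rows):
    """Average each H column over all density settings."""
    return [mean(column) for column in zip(*rows.values())]


upper_avg = column_means(upper)
lower_avg = column_means(lower)
print(upper_avg)
print(lower_avg)
```

Both series give 100 for H = 1 and fall steeply as H grows, matching the reported averages of 60.0, 27.0, 8.5 and 57.0, 25.5, 6.5.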

(2) Averaged error

(3) Averaged tracking rate

| Density (D) / Number of Humans (H) | H = 1 | H = 2 | H = 3 | H = 4 |
|---|---|---|---|---|
| D = 2.0 | 0.60 (0.32) | 1.22 (0.89) | 2.05 (1.50) | 3.09 (2.09) |
| | 0.68 (0.36) | 2.08 (1.69) | 3.11 (2.23) | 3.42 (2.28) |
| D = 3.0 | 0.59 (0.29) | 1.27 (0.88) | 1.97 (1.43) | 1.52 (1.04) |
| | 0.66 (0.34) | 2.24 (1.73) | 3.12 (2.27) | 3.90 (2.32) |
| D = 4.0 | 0.59 (0.31) | 1.42 (0.94) | 1.44 (1.02) | 2.04 (1.43) |
| | 0.66 (0.35) | 2.43 (1.84) | 3.21 (2.22) | 3.18 (2.26) |
| D = 5.0 | 0.54 (0.27) | 1.38 (1.11) | 2.01 (1.42) | 2.62 (1.63) |
| | 0.61 (0.30) | 3.20 (2.35) | 3.03 (2.26) | 2.69 (2.39) |
| Average | 0.58 (0.30) | 1.32 (0.96) | 1.87 (1.34) | 2.32 (1.55) |
| | 0.65 (0.34) | 2.49 (1.90) | 3.12 (2.25) | 3.30 (2.31) |

| Density (D) / Number of Humans (H) | H = 1 | H = 2 | H = 3 | H = 4 |
|---|---|---|---|---|
| D = 2.0 | 97.98 (7.33) | 57.22 (14.04) | 35.40 (5.60) | 33.56 (2.73) |
| | 94.63 (13.31) | 61.34 (17.19) | 43.30 (8.87) | 42.90 (2.38) |
| D = 3.0 | 99.78 (0.09) | 66.94 (16.34) | 55.12 (11.15) | 43.47 (5.13) |
| | 99.55 (0.10) | 69.67 (12.97) | 58.22 (8.06) | 54.60 (1.34) |
| D = 4.0 | 99.78 (0.06) | 75.39 (13.19) | 58.46 (9.13) | 48.46 (5.11) |
| | 99.55 (0.07) | 71.77 (15.41) | 54.64 (4.07) | 55.06 (7.89) |
| D = 5.0 | 99.78 (0.07) | 83.20 (14.34) | 64.51 (14.57) | 51.57 (6.82) |
| | 99.55 (0.07) | 73.69 (13.02) | 59.29 (4.40) | 58.39 (2.70) |
| Average | 99.33 (1.89) | 70.69 (14.48) | 53.37 (10.11) | 44.27 (4.95) |
| | 98.32 (3.39) | 69.12 (14.65) | 53.86 (6.35) | 52.74 (3.58) |

(4) Success rate of the number of humans

| Density (D) / Number of Humans (H) | H = 1 | H = 2 | H = 3 | H = 4 |
|---|---|---|---|---|
| D = 2.0 | 97.76 (0.95) | 83.15 (5.58) | 60.32 (5.58) | 52.79 (1.81) |
| | 98.87 (0.64) | 85.79 (6.36) | 59.77 (6.62) | 44.63 (4.07) |
| D = 3.0 | 97.87 (1.01) | 76.76 (9.58) | 58.97 (8.43) | 55.69 (6.29) |
| | 99.03 (0.59) | 84.17 (9.64) | 69.65 (9.13) | 50.08 (0.08) |
| D = 4.0 | 98.86 (0.54) | 81.62 (8.53) | 55.01 (9.76) | 51.20 (1.83) |
| | 99.54 (0.31) | 88.17 (8.39) | 61.91 (11.76) | 54.41 (3.68) |
| D = 5.0 | 99.10 (0.38) | 78.22 (6.65) | 57.73 (7.74) | 45.62 (3.49) |
| | 99.68 (0.17) | 88.83 (6.35) | 71.43 (7.61) | 53.90 (9.94) |
| Average | 98.40 (0.72) | 79.94 (7.59) | 58.01 (7.88) | 51.13 (3.35) |
| | 99.28 (0.43) | 86.74 (7.68) | 65.69 (8.78) | 50.76 (4.44) |

5. Conclusions

Acknowledgments

Author Contributions

Conflicts of Interest


© 2015 by the authors; licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution license (http://creativecommons.org/licenses/by/4.0/).

Miyazaki, T.; Kasama, Y. Multiple Human Tracking Using Binary Infrared Sensors. Sensors 2015, 15, 13459-13476. https://doi.org/10.3390/s150613459