Fast and Flexible Movable Vision Measurement for the Surface of a Large-Sized Object

Abstract

1. Introduction

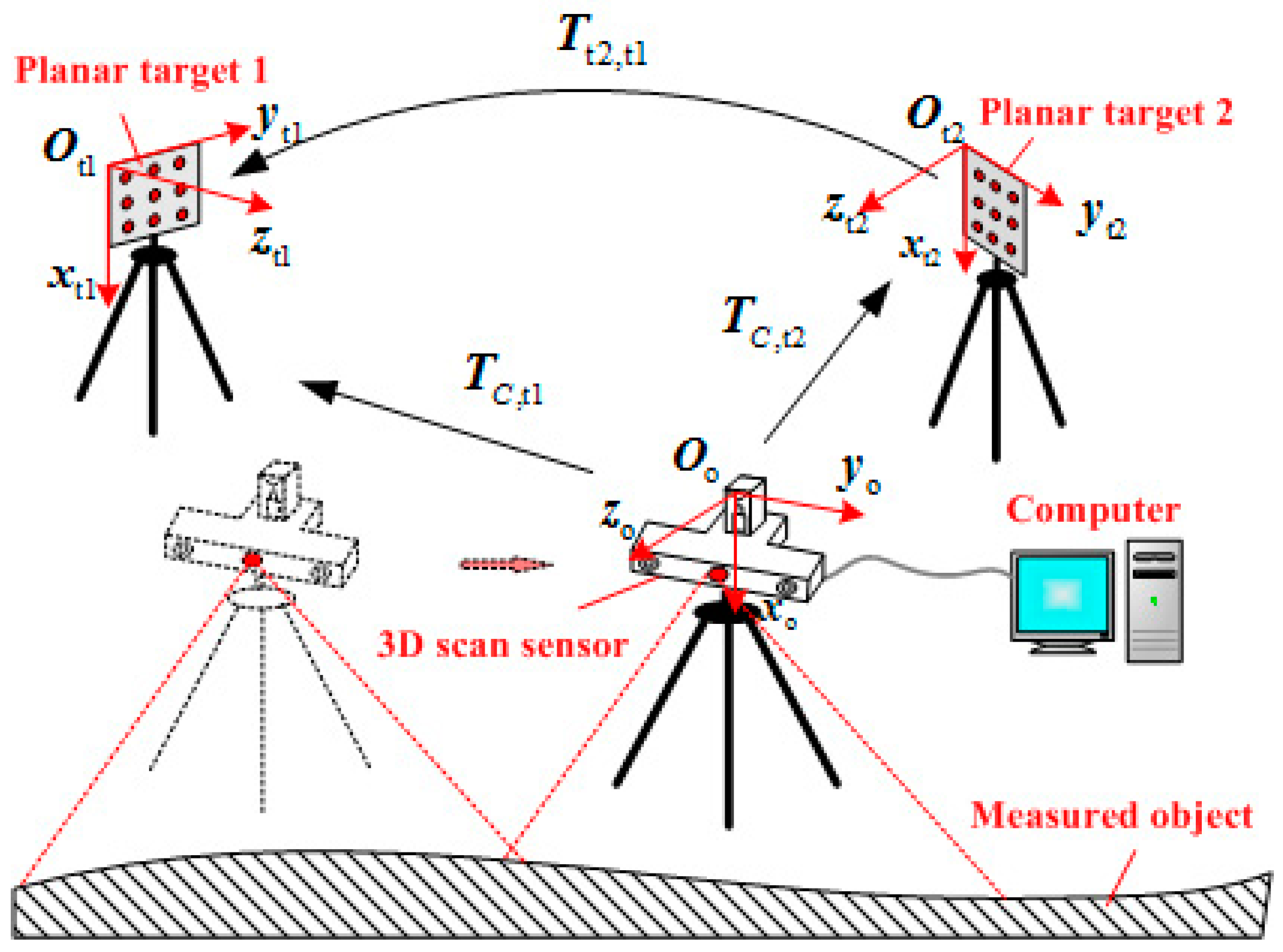

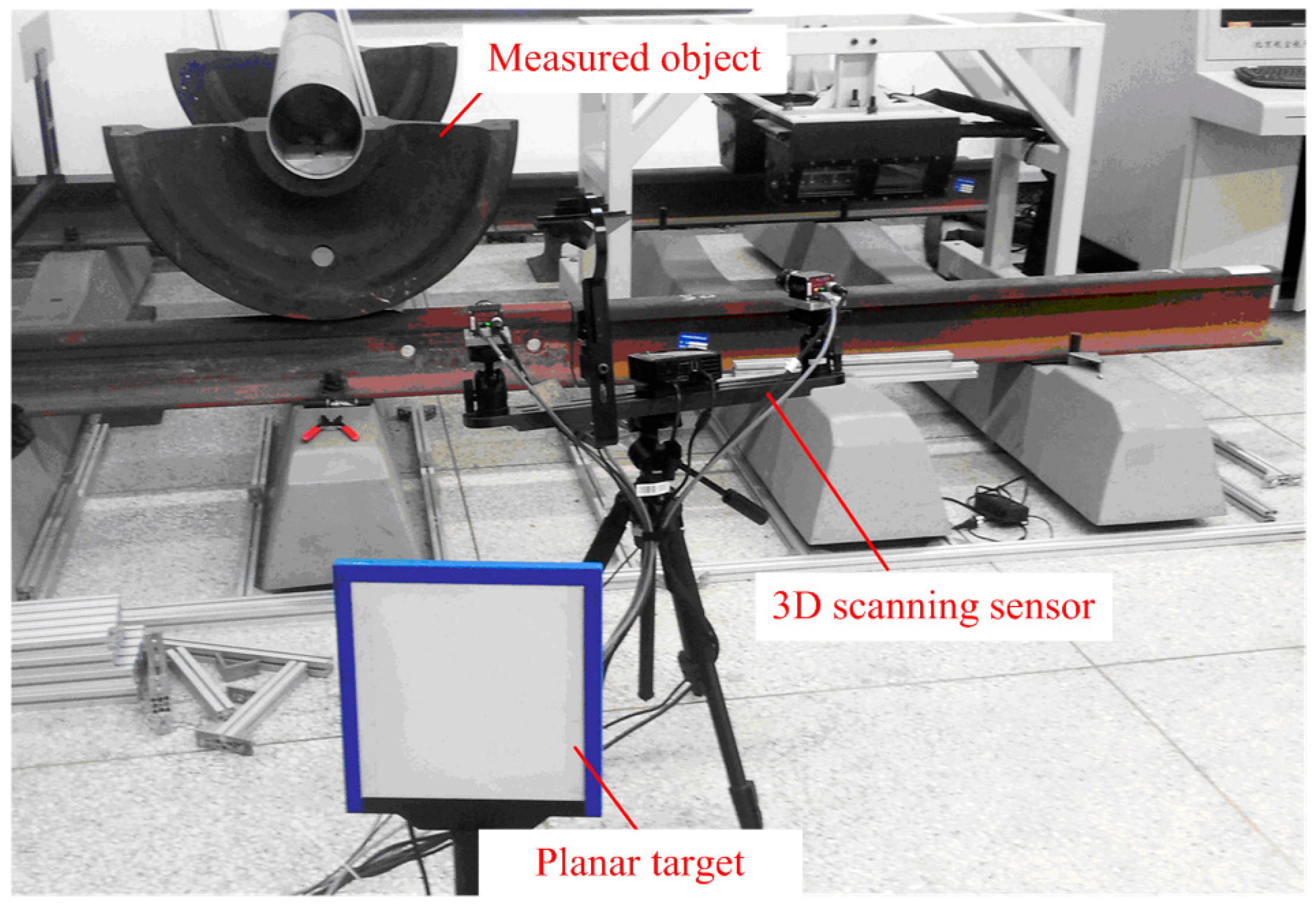

2. System Measurement Principle

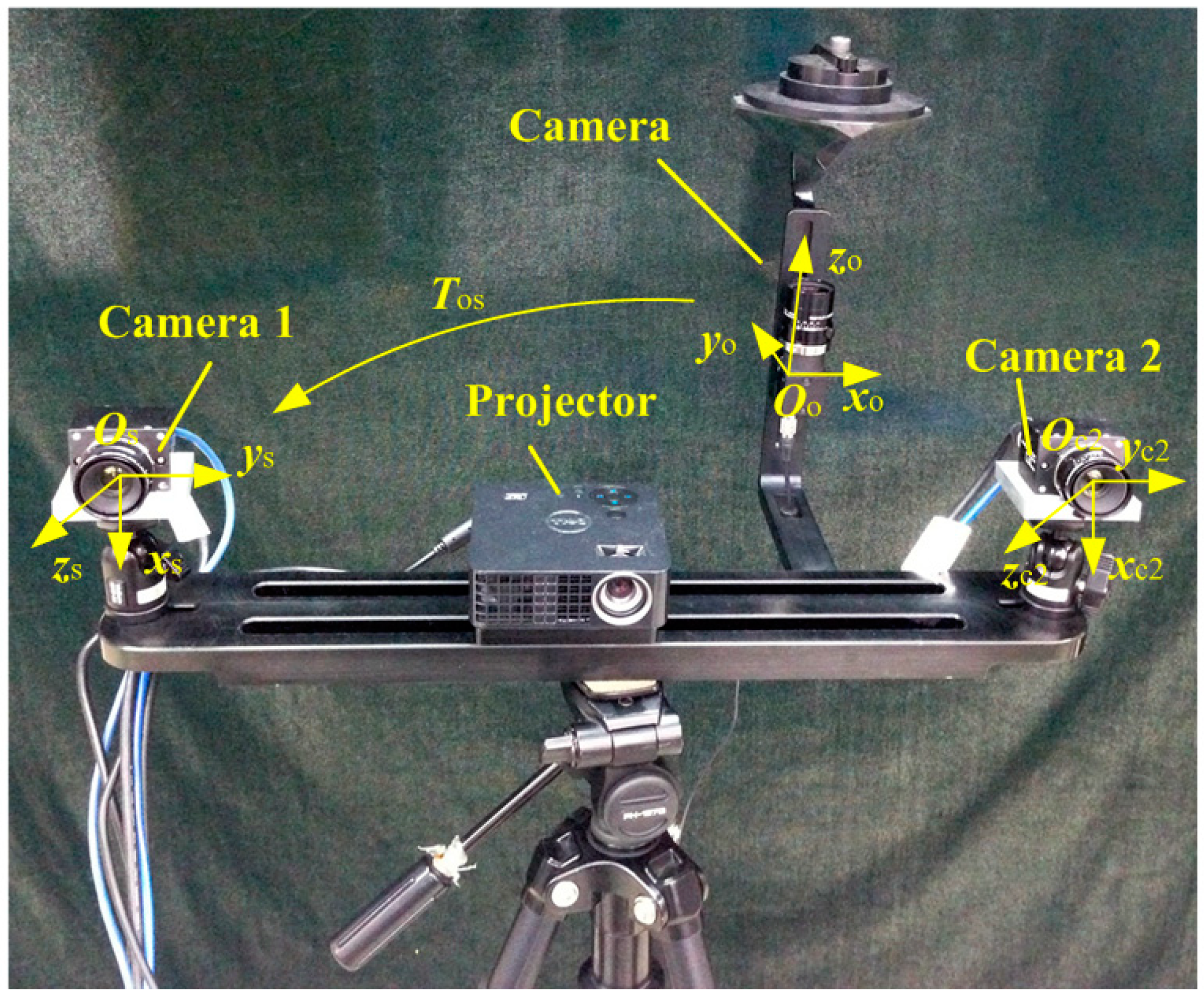

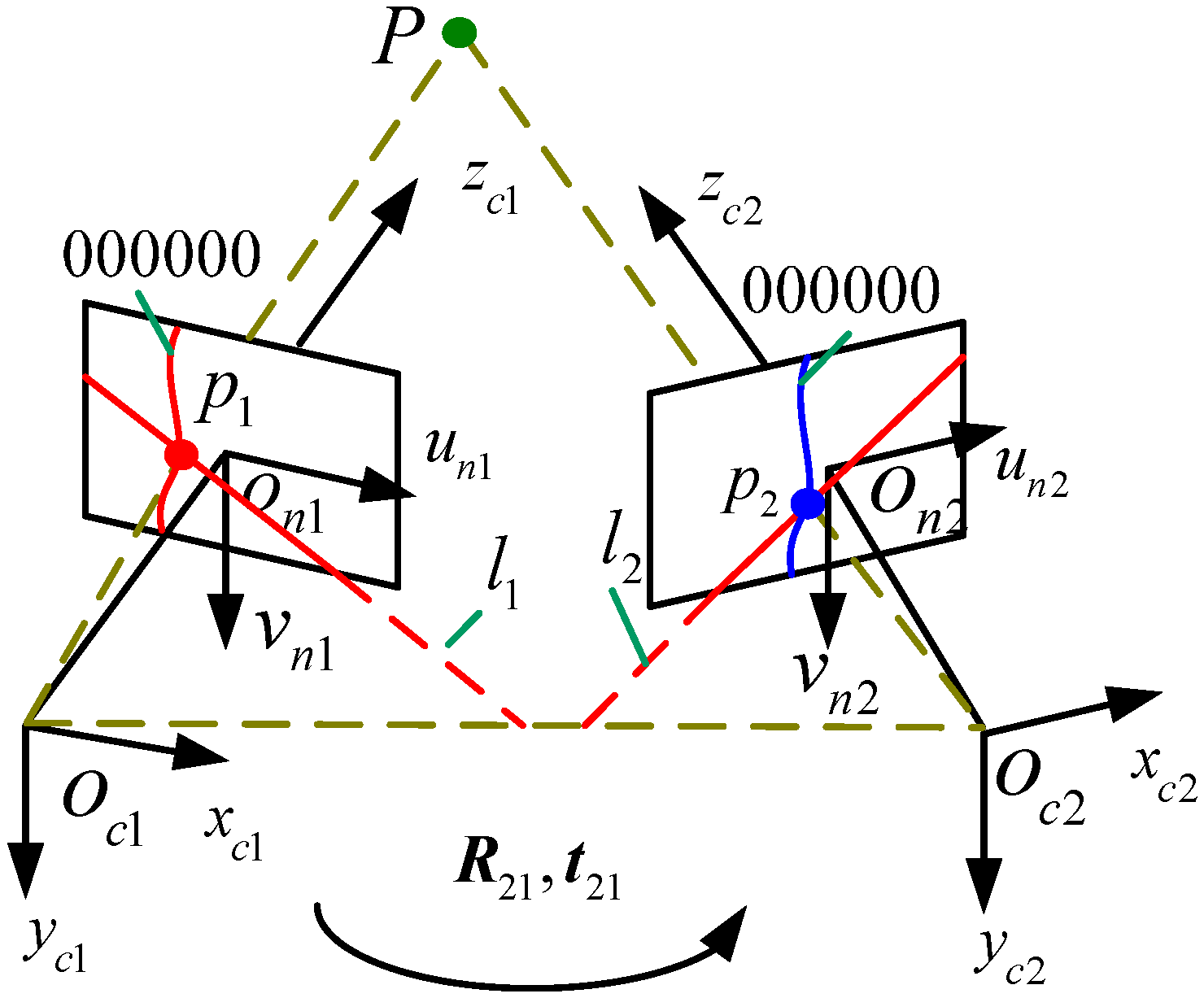

2.1. 3D Scanning Sensor

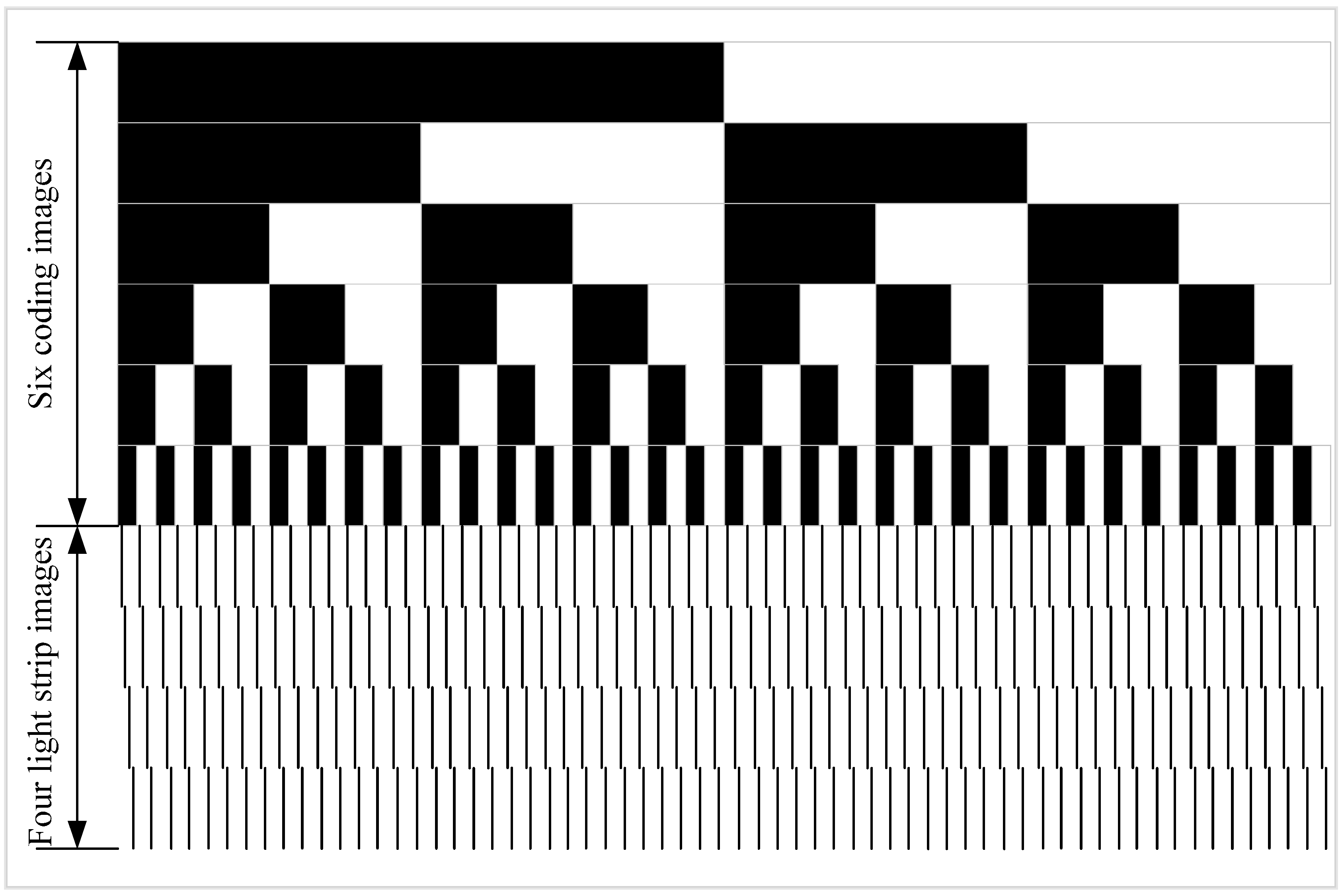

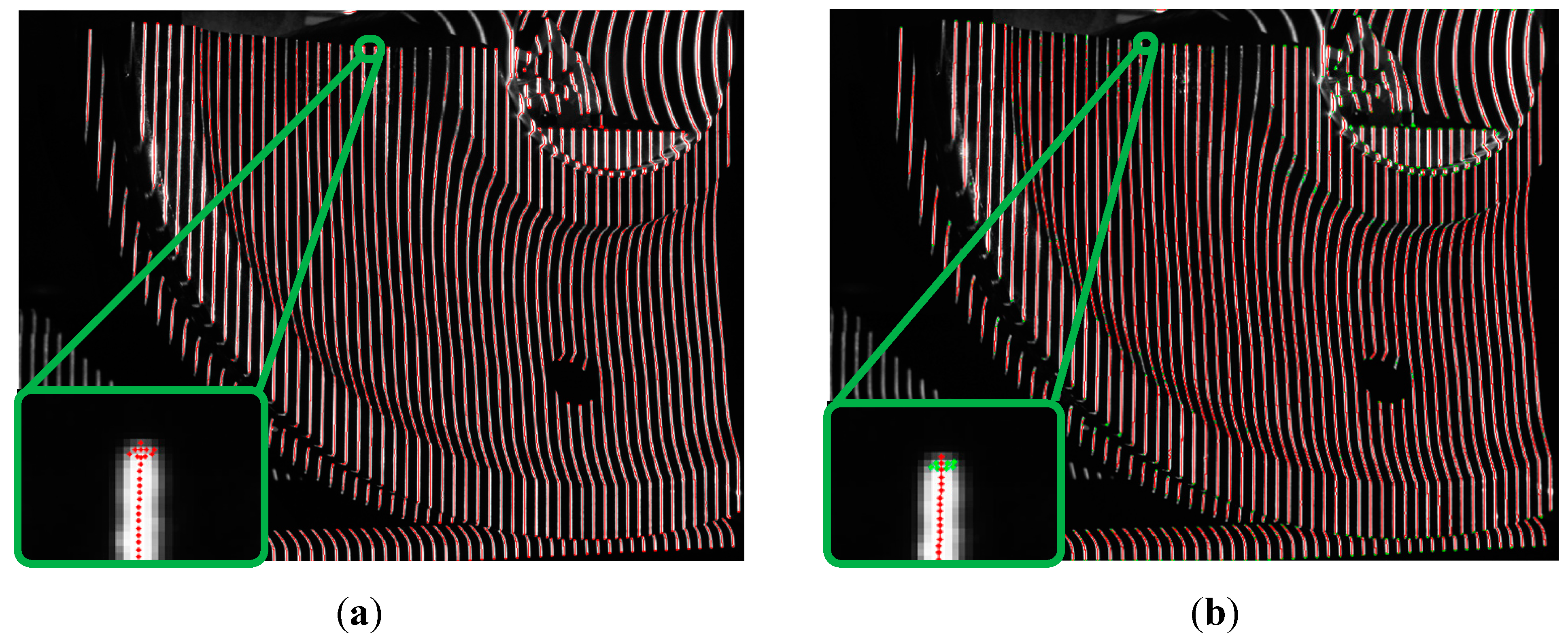

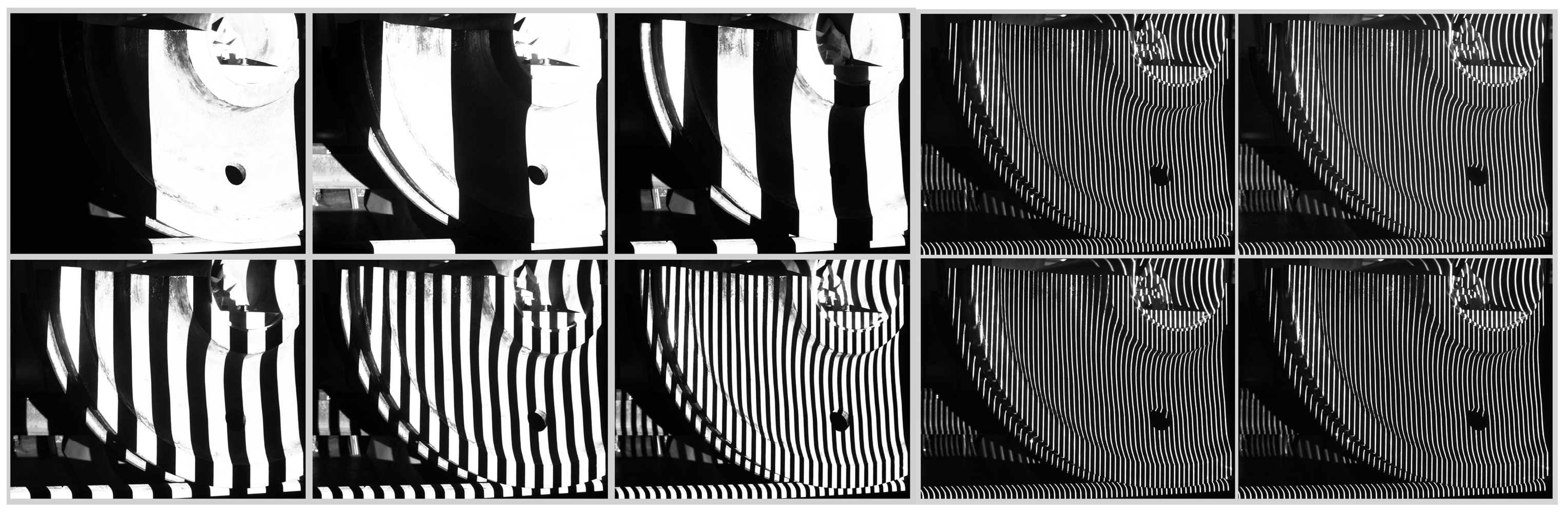

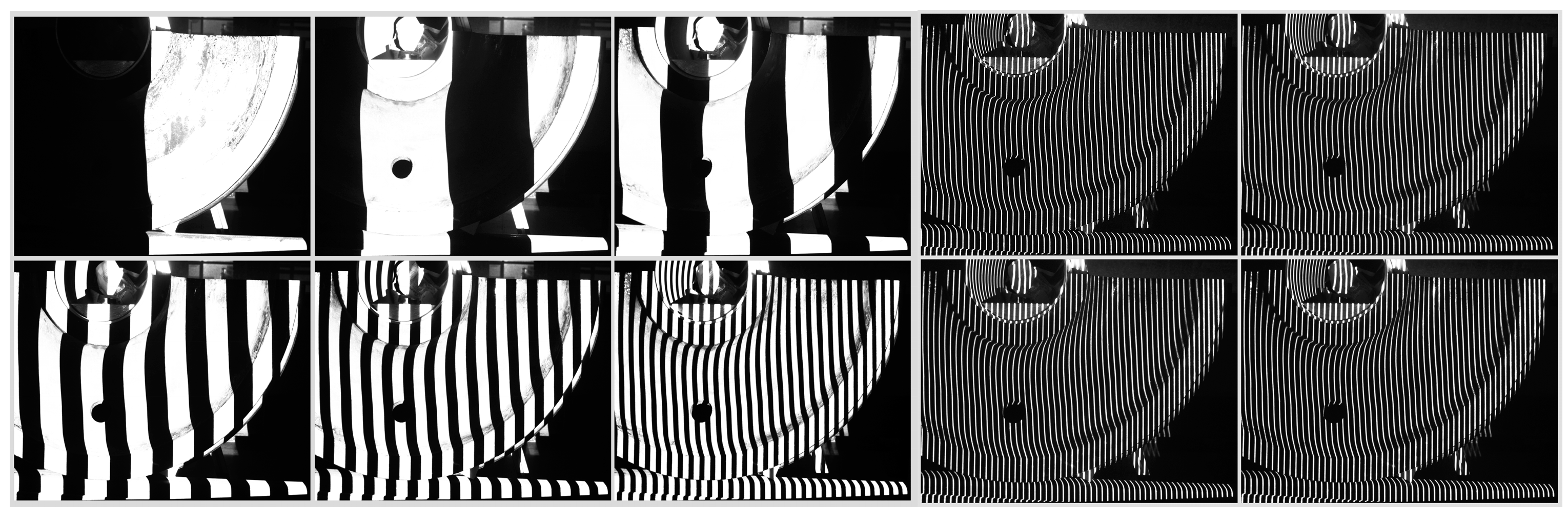

2.2. Light Strip Image Center Extraction and Coding

Light Strip Coding

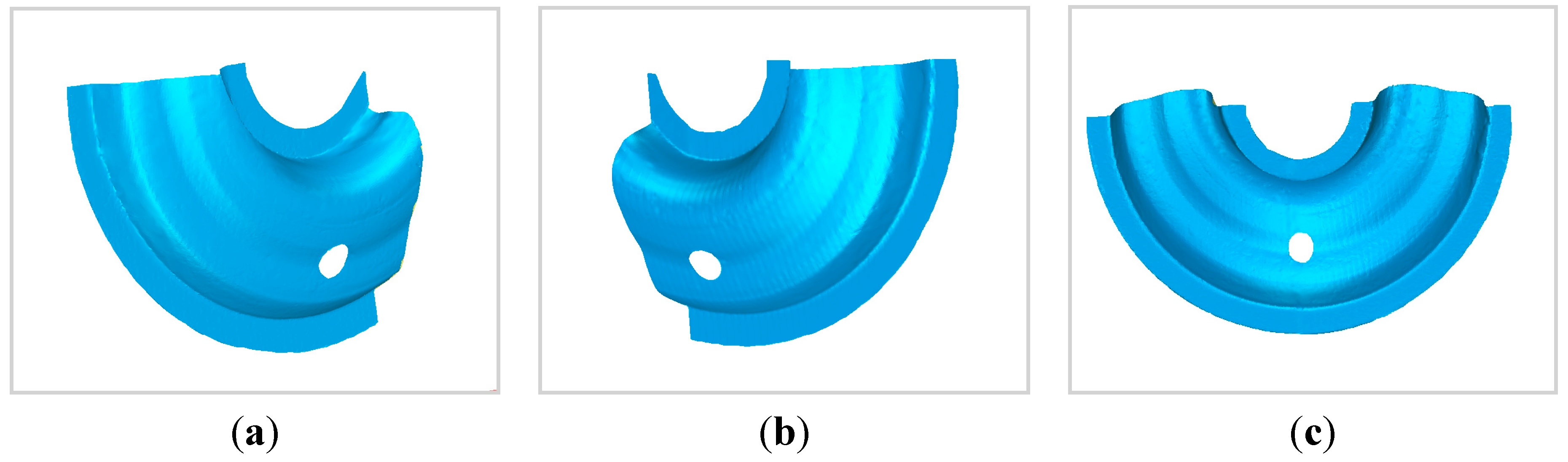

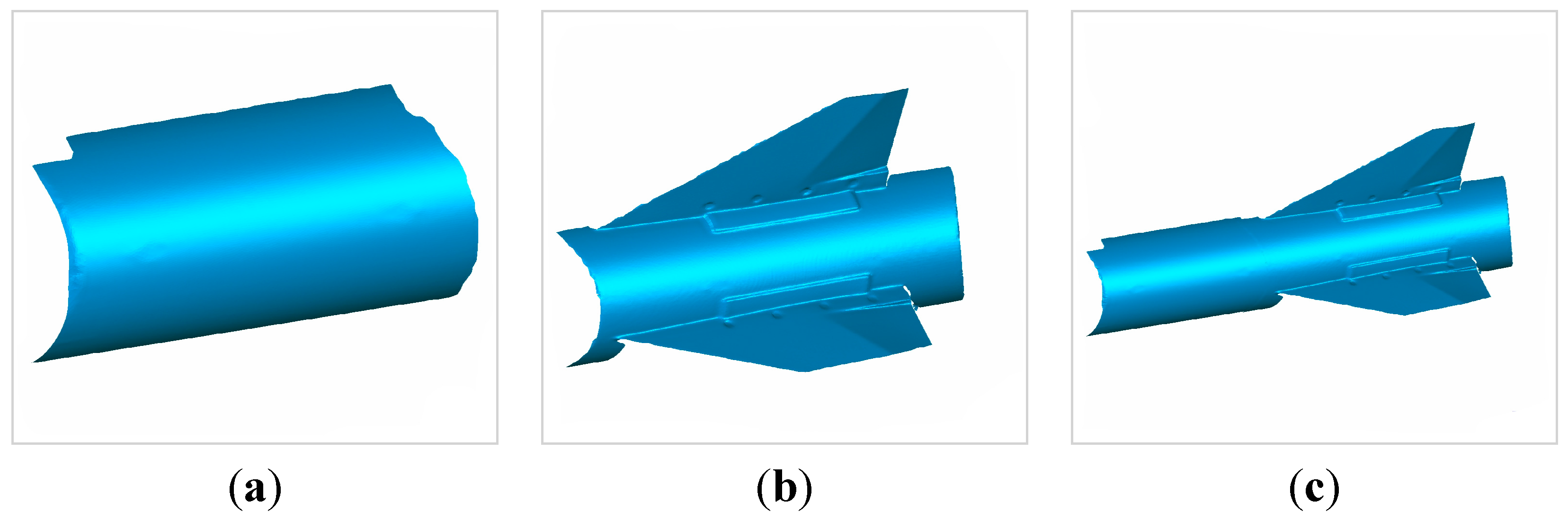

2.3. Partial 3D Reconstruction

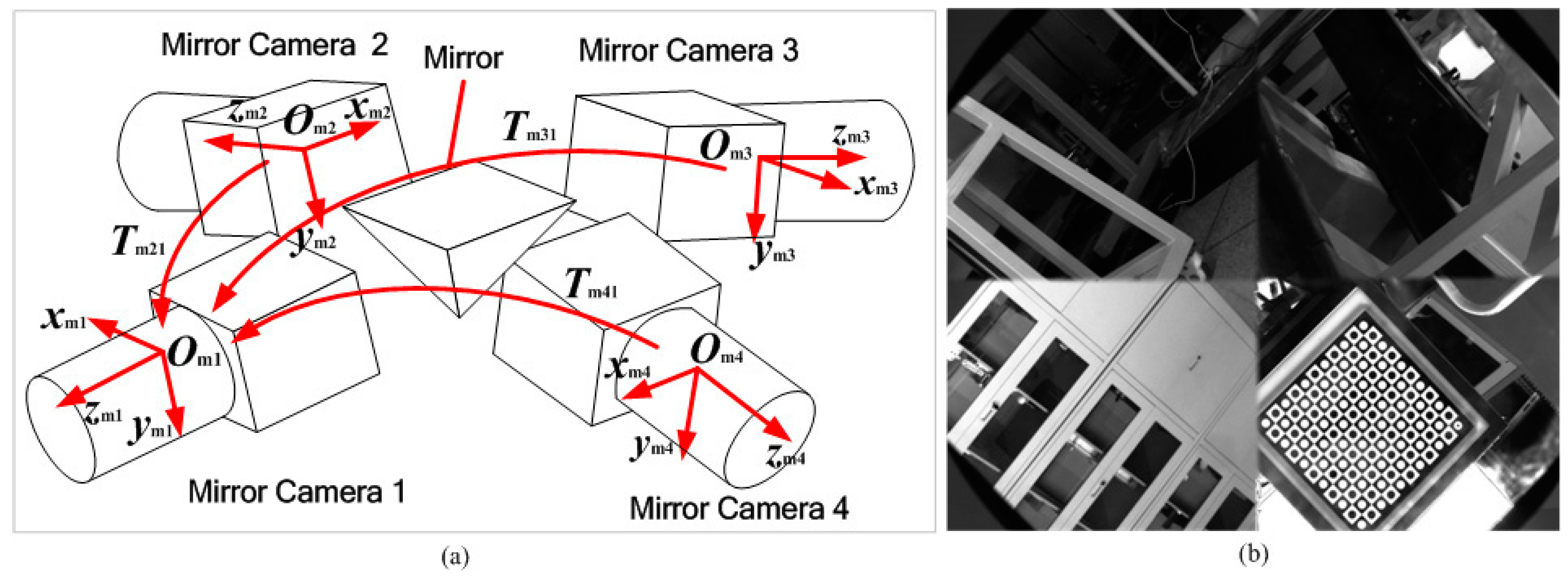

2.4. Global Unity of Partial 3D Data

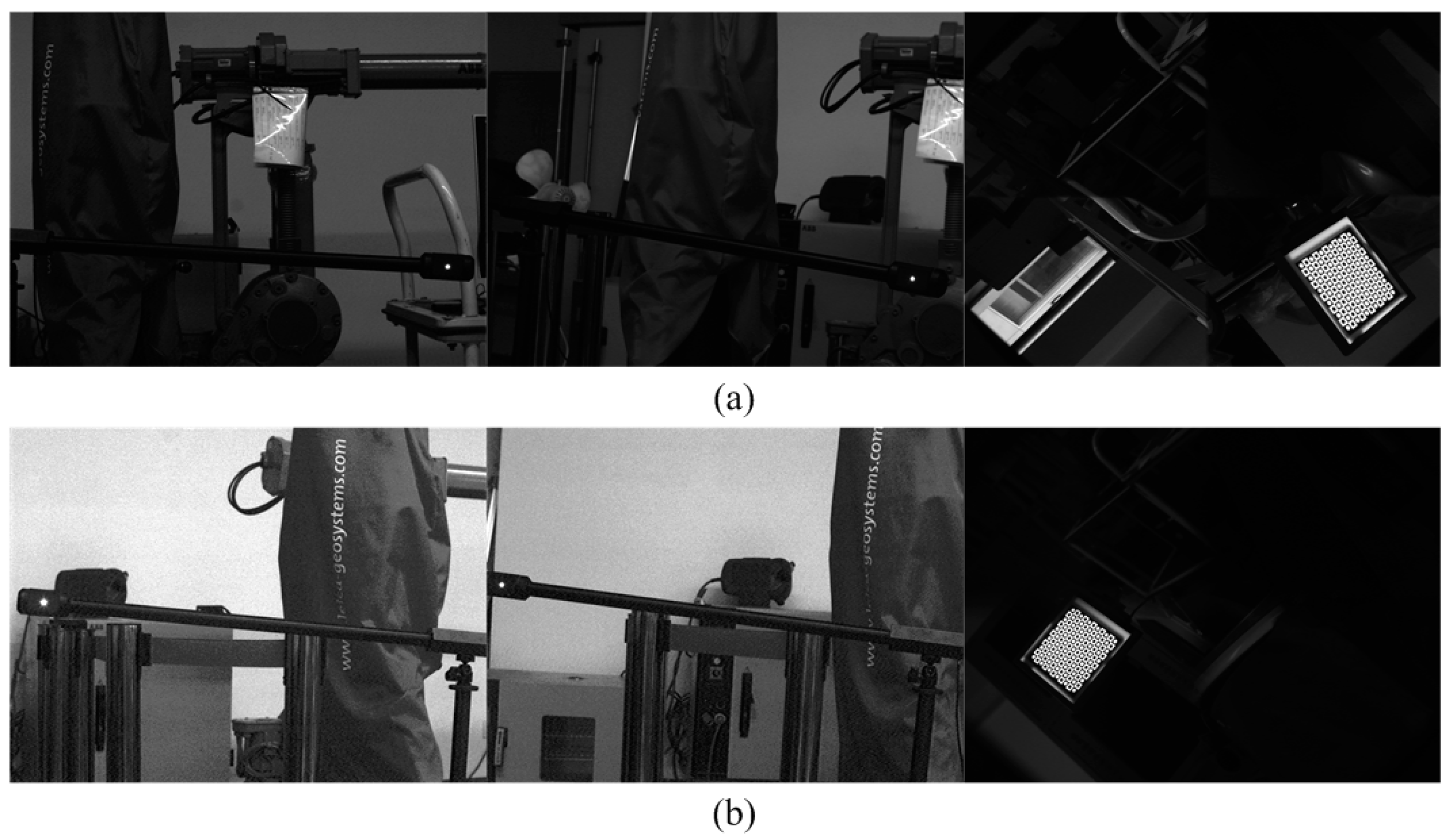

3. Physical Experiments

3.1. System Calibration Results

- (1) Binocular stereo vision sensor

- (2) Wide-field camera

| No. | Left point x (mm) | Left point y (mm) | Left point z (mm) | Right point x (mm) | Right point y (mm) | Right point z (mm) | dt (mm) | dm (mm) | Δd (mm) |
|---|---|---|---|---|---|---|---|---|---|
| 1 | −38.93 | 69.70 | 944.18 | 1136.60 | 10.56 | 1315.73 | 1234.15 | 1234.27 | −0.12 |
| 2 | −58.28 | −0.67 | 942.06 | 1145.09 | 34.81 | 1213.41 | 1234.15 | 1234.06 | 0.09 |
| 3 | −207.67 | 163.54 | 1177.13 | 1002.70 | 13.97 | 988.70 | 1234.15 | 1234.05 | 0.10 |
| 4 | −253.61 | 44.32 | 1147.33 | 915.61 | 294.11 | 1452.90 | 1234.15 | 1234.04 | 0.11 |
| 5 | 109.48 | 102.07 | 852.49 | 1265.76 | 31.61 | 1278.49 | 1234.15 | 1234.27 | −0.12 |
| 6 | −214.85 | 36.15 | 1012.34 | 1011.10 | 162.10 | 942.91 | 1234.15 | 1234.36 | −0.21 |
| 7 | 55.09 | 16.89 | 1160.02 | 1160.73 | 67.45 | 613.74 | 1234.15 | 1234.27 | −0.12 |
| 8 | 58.98 | 1.35 | 838.59 | 1191.43 | −109.66 | 1316.00 | 1234.15 | 1233.97 | 0.18 |
| RMS error | | | | | | | | | 0.14 |
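
The arithmetic behind the table can be checked with a short sketch. It assumes, from the numbers themselves rather than from any surviving text, that dm is the Euclidean distance between the reconstructed left and right points, that Δd = dt − dm, and that the reported RMS error is taken over the Δd column. The function and variable names are illustrative only.

```python
import math


def distance(p, q):
    """Euclidean distance between two 3D points given as (x, y, z) tuples."""
    return math.dist(p, q)


def rms(errors):
    """Root-mean-square of a sequence of signed errors."""
    return math.sqrt(sum(e * e for e in errors) / len(errors))


# Row 1 of the table: reconstructed left and right points, in mm.
left_1 = (-38.93, 69.70, 944.18)
right_1 = (1136.60, 10.56, 1315.73)
d_t = 1234.15  # reference distance listed in the table, in mm

d_m = distance(left_1, right_1)
delta_d = d_t - d_m  # sign convention inferred from the table (assumption)

# The Δd column of the table, in mm.
delta_d_column = [-0.12, 0.09, 0.10, 0.11, -0.12, -0.21, -0.12, 0.18]
rms_error = rms(delta_d_column)

print(f"d_m = {d_m:.2f} mm, delta_d = {delta_d:.2f} mm, RMS = {rms_error:.2f} mm")
```

For row 1 this gives a distance that rounds to 1234.27 mm and a deviation of −0.12 mm, and the Δd column yields an RMS that rounds to 0.14 mm, in agreement with the tabulated values.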

3.2. Real Data Measurement Experiment

4. Conclusions

Acknowledgments

Author Contributions

Conflicts of Interest


© 2015 by the authors; licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution license (http://creativecommons.org/licenses/by/4.0/).

Liu, Z.; Li, X.; Li, F.; Wei, X.; Zhang, G. Fast and Flexible Movable Vision Measurement for the Surface of a Large-Sized Object. Sensors 2015, 15, 4643-4657. https://doi.org/10.3390/s150304643