Reciprocal Estimation of Pedestrian Location and Motion State toward a Smartphone Geo-Context Computing Solution

Abstract

1. Introduction

- Where are you (location)?

- How can you travel from point A to B (route navigation)?

- What are you doing?

- What is the environment around you?

- What is your current situation?

- What can be done for your benefit?

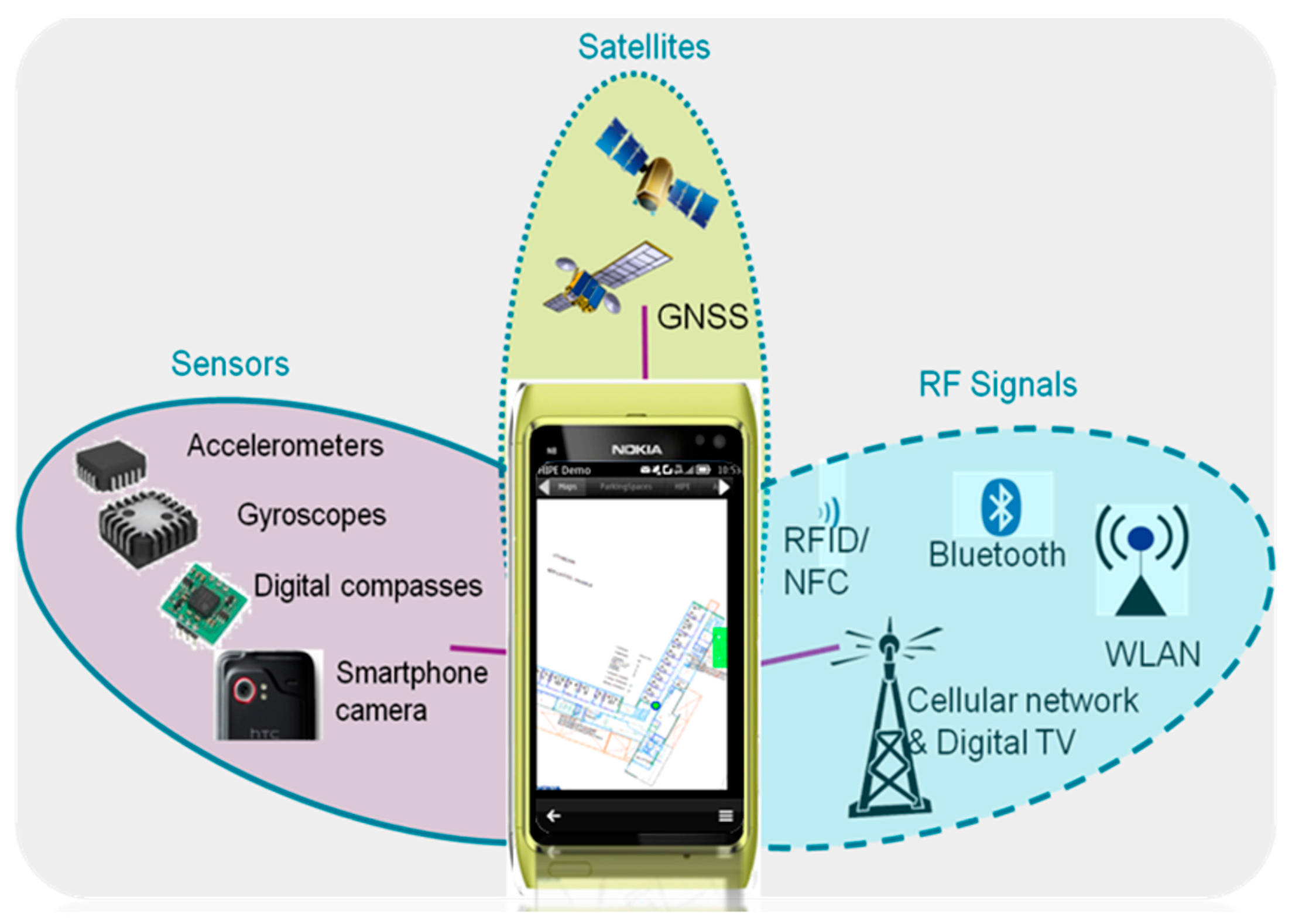

2. Background of Smartphone Mobility Sensing

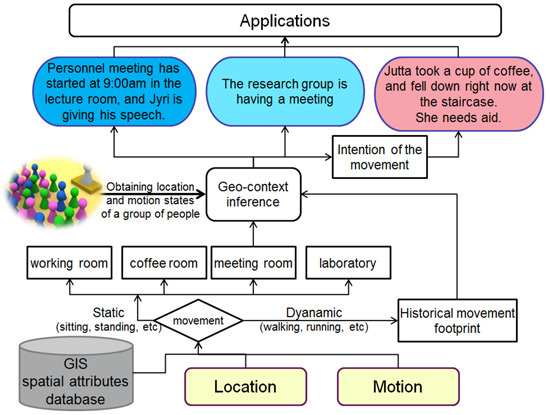

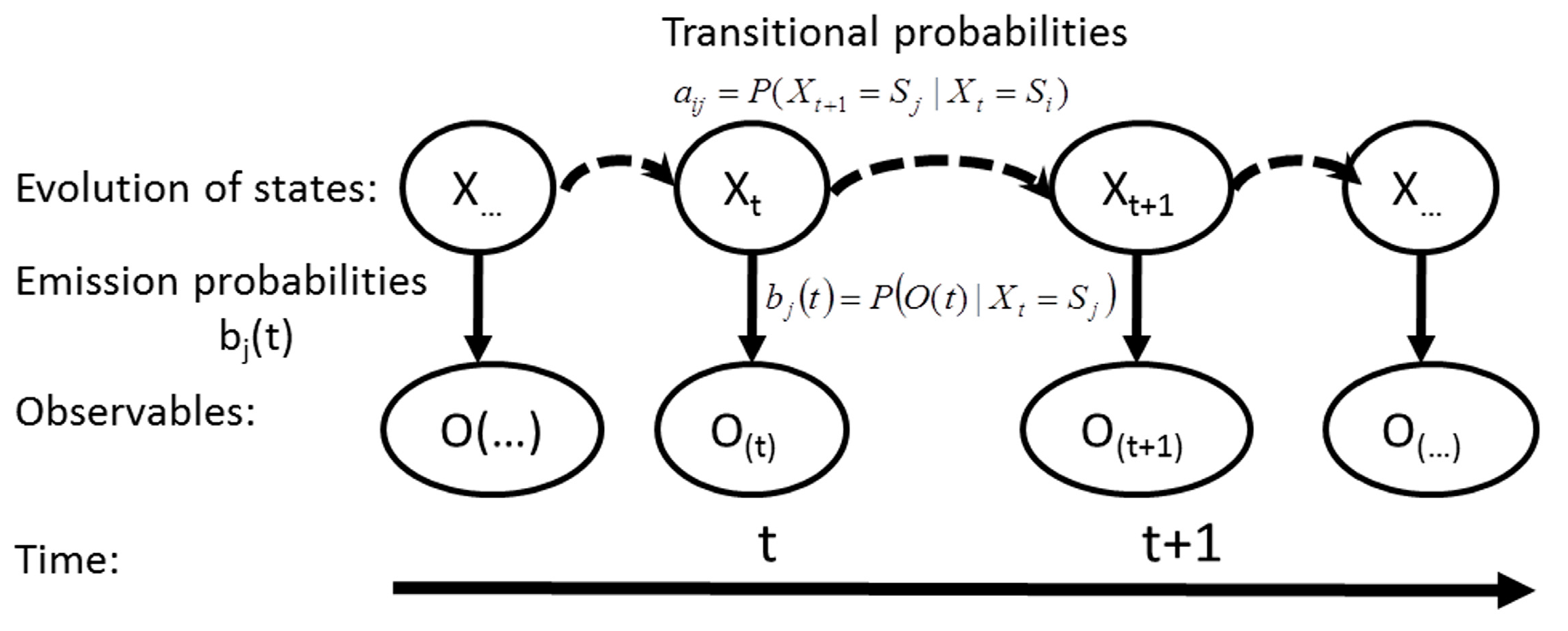

3. Geo-Context Computing Based on Hidden Markov Models

3.1. Problem Formulation and Solutions of Hidden Markov Models

3.2. Radio Signals and MEMS Sensors Integration for Smartphone Positioning

| No. | Combination of MDI | Distance: sensor | Distance: method | Heading: sensor | Heading: method |
|---|---|---|---|---|---|
| 1 | Measured distance & heading | Accelerometers | Accumulated step lengths | Compass | Directly measured |
| 2 | Measured distance | Accelerometers | Accumulated step lengths | None | Unknown |
| 3 | Measured heading & assumed speed | None | A constant speed model of 1 m/s | Compass | Directly measured |
| 4 | Assumed speed | None | A constant speed model of 1 m/s | None | Unknown |
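The table pairs each MDI combination with the way distance and heading are obtained. The following is a minimal sketch, not the paper's formulation, of how the four combinations could drive a dead-reckoning motion model. It assumes a planar east/north frame in metres and a compass heading measured clockwise from north. When the heading is unknown (combinations 2 and 4), the sketch keeps the previous position and treats the travelled distance as the radius of reachable positions. That interpretation, and the names `propagate`, `travelled_distance` and `ASSUMED_SPEED_MPS`, are hypothetical.

```python
import math
from typing import Optional, Sequence, Tuple

# Constant speed model used when no step lengths are available (MDI 3 and 4).
ASSUMED_SPEED_MPS = 1.0


def travelled_distance(dt_s: float, step_lengths_m: Optional[Sequence[float]]) -> float:
    """Distance over dt_s seconds: accumulated step lengths if the
    accelerometer-based step detector supplied them (MDI 1 and 2),
    otherwise the assumed constant speed times the elapsed time (MDI 3 and 4)."""
    if step_lengths_m is not None:
        return float(sum(step_lengths_m))
    return ASSUMED_SPEED_MPS * dt_s


def propagate(x: float, y: float, dt_s: float,
              step_lengths_m: Optional[Sequence[float]] = None,
              heading_rad: Optional[float] = None) -> Tuple[float, float, float]:
    """Return (east, north, radius) in metres.

    heading_rad is a compass heading, clockwise from north. With a heading
    (MDI 1 and 3), the position is dead-reckoned and the radius is 0, because
    this sketch ignores step-length and heading errors. Without a heading
    (MDI 2 and 4), the position is unchanged and the travelled distance is
    returned as the radius of the disc of reachable positions.
    """
    d = travelled_distance(dt_s, step_lengths_m)
    if heading_rad is None:
        return x, y, d
    return x + d * math.sin(heading_rad), y + d * math.cos(heading_rad), 0.0


# MDI 1: three 0.7 m steps heading due east.
print(propagate(0.0, 0.0, 2.0, [0.7, 0.7, 0.7], math.pi / 2))
# MDI 4: no usable sensors, 2 s at the assumed 1 m/s.
print(propagate(0.0, 0.0, 2.0))  # (0.0, 0.0, 2.0)
```

In a particle or grid filter, the zero-radius case would normally be replaced by noise on step length and heading. It is kept at zero here only to make the four cases easy to compare.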

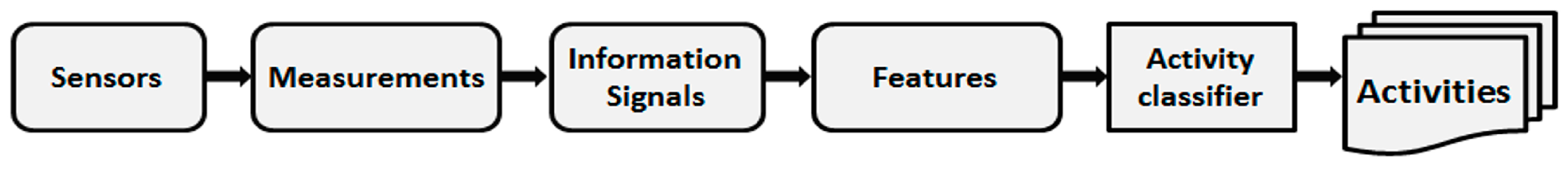

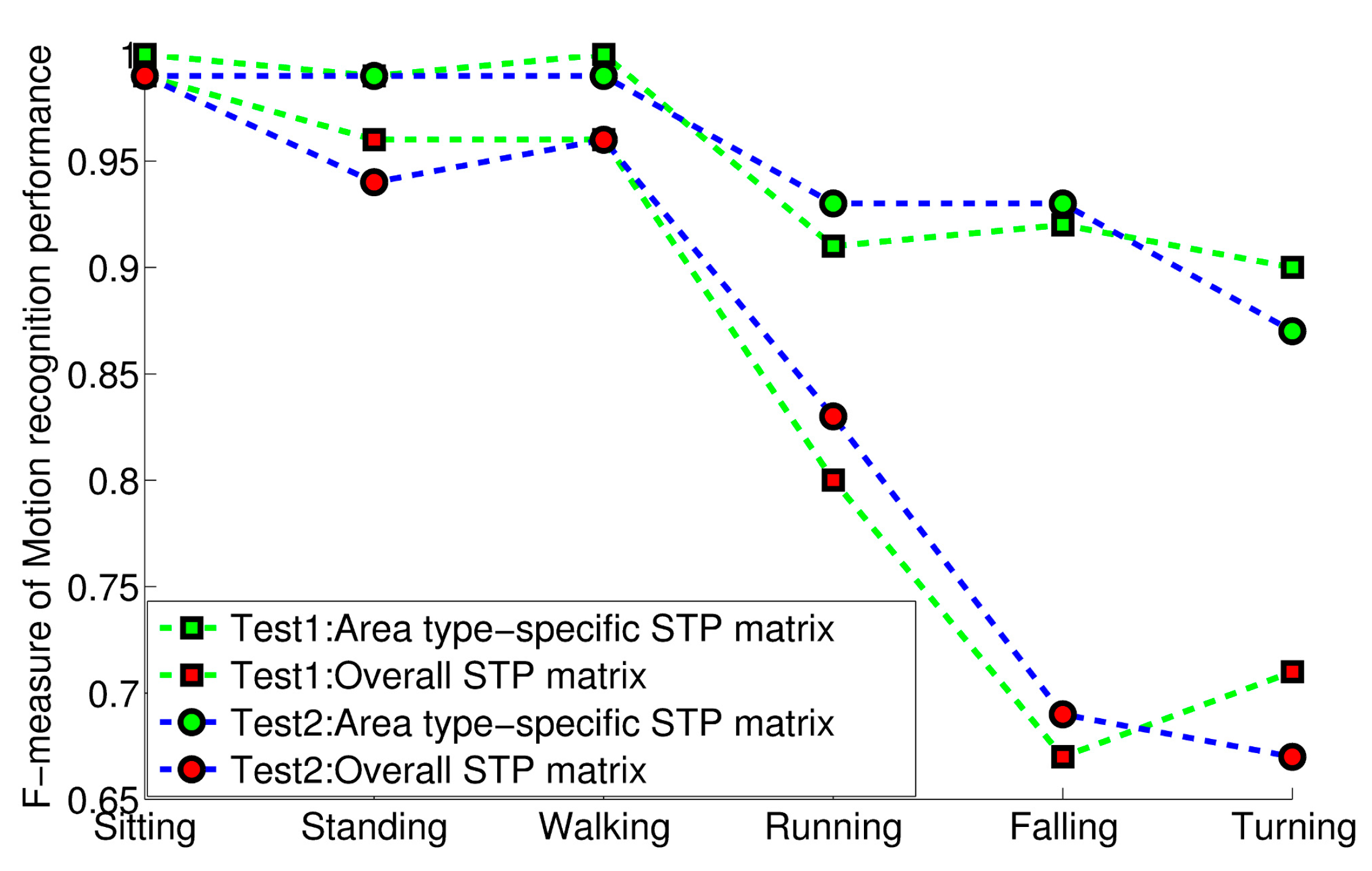

3.3. Human Motion State Recognition

| Area type | Motion t / Motion t + 1 | Sitting | Standing | Walking | Running | Falling | Turning |
|---|---|---|---|---|---|---|---|
| Working room | Sitting | 0.9913 | 0.0087 | 0.000002 | 0.000002 | 0.000002 | 0.000002 |
| | Standing | 0.3103 | 0.1524 | 0.4202 | 0.000002 | 0.000002 | 0.1172 |
| | Walking | 0.0008 | 0.0615 | 0.8451 | 0.000017 | 0.000007 | 0.0925 |
| | Running | 0.000002 | 0.0256 | 0.1039 | 0.8063 | 0.000007 | 0.0642 |
| | Falling | 0.9925 | 0.0075 | 0.000002 | 0.000002 | 0.000002 | 0.000002 |
| | Turning | 0.000002 | 0.2140 | 0.7705 | 0.000004 | 0.000006 | 0.0155 |
| Coffee room | Sitting | 0.8664 | 0.1336 | 0.000002 | 0.000002 | 0.000002 | 0.000002 |
| | Standing | 0.3258 | 0.2631 | 0.3938 | 0.000002 | 0.000002 | 0.0173 |
| | Walking | 0.0008 | 0.0821 | 0.8522 | 0.000022 | 0.000008 | 0.0648 |
| | Running | 0.000002 | 0.0369 | 0.1497 | 0.791438 | 0.000009 | 0.0219 |
| | Falling | 0.9942 | 0.0058 | 0.000002 | 0.000002 | 0.000002 | 0.000002 |
| | Turning | 0.000002 | 0.1180 | 0.8750 | 0.000004 | 0.000006 | 0.0069 |
| Intersection | Sitting | 0.6419 | 0.3581 | 0.000002 | 0.000002 | 0.000002 | 0.000002 |
| | Standing | 0.000002 | 0.1246 | 0.4010 | 0.0048 | 0.000002 | 0.4696 |
| | Walking | 0.000002 | 0.1741 | 0.3454 | 0.0117 | 0.000002 | 0.4687 |
| | Running | 0.000002 | 0.0677 | 0.2287 | 0.3070 | 0.000002 | 0.3966 |
| | Falling | 0.9900 | 0.0100 | 0.000002 | 0.000002 | 0.000002 | 0.000002 |
| | Turning | 0.000002 | 0.0033 | 0.8736 | 0.1195 | 0.000002 | 0.0035 |
| Staircase | Sitting | 0.6154 | 0.3846 | 0.000002 | 0.000002 | 0.000002 | 0.000002 |
| | Standing | 0.000002 | 0.0812 | 0.5412 | 0.2916 | 0.000002 | 0.0859 |
| | Walking | 0.000002 | 0.0846 | 0.6725 | 0.0725 | 0.0862 | 0.0843 |
| | Running | 0.000002 | 0.0405 | 0.2657 | 0.4470 | 0.1681 | 0.0785 |
| | Falling | 0.9900 | 0.0100 | 0.000002 | 0.000002 | 0.000002 | 0.000002 |
| | Turning | 0.000002 | 0.0073 | 0.6433 | 0.1345 | 0.1772 | 0.0377 |
| Generic area | Sitting | 0.7712 | 0.2288 | 0.000002 | 0.000002 | 0.000002 | 0.000002 |
| | Standing | 0.1079 | 0.3682 | 0.3194 | 0.1136 | 0.000002 | 0.0909 |
| | Walking | 0.0682 | 0.1775 | 0.5934 | 0.1359 | 0.000002 | 0.0250 |
| | Running | 0.000002 | 0.1863 | 0.3590 | 0.4489 | 0.000002 | 0.0058 |
| | Falling | 0.9900 | 0.0100 | 0.000002 | 0.000002 | 0.000002 | 0.000002 |
| | Turning | 0.0888 | 0.2166 | 0.6289 | 0.0657 | 0.000002 | 0.000002 |
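The table reads as one transition matrix per area type. Rows give the motion state at epoch t, and columns give the state at t + 1. Below is a minimal sketch of how such area-conditioned matrices might feed the prediction step of an HMM. It assumes that the current area type is already known from the positioning solution. To keep the block short, only two of the five areas are transcribed. Rows are renormalized because the published values are rounded and sum only approximately to 1. The names `TRANSITIONS`, `normalise_rows` and `predict` are hypothetical.

```python
from typing import Dict, List

STATES = ["Sitting", "Standing", "Walking", "Running", "Falling", "Turning"]

# Rows: motion at t; columns: motion at t + 1 (same order as STATES).
TRANSITIONS: Dict[str, List[List[float]]] = {
    "Working room": [
        [0.9913, 0.0087, 0.000002, 0.000002, 0.000002, 0.000002],
        [0.3103, 0.1524, 0.4202, 0.000002, 0.000002, 0.1172],
        [0.0008, 0.0615, 0.8451, 0.000017, 0.000007, 0.0925],
        [0.000002, 0.0256, 0.1039, 0.8063, 0.000007, 0.0642],
        [0.9925, 0.0075, 0.000002, 0.000002, 0.000002, 0.000002],
        [0.000002, 0.2140, 0.7705, 0.000004, 0.000006, 0.0155],
    ],
    "Intersection": [
        [0.6419, 0.3581, 0.000002, 0.000002, 0.000002, 0.000002],
        [0.000002, 0.1246, 0.4010, 0.0048, 0.000002, 0.4696],
        [0.000002, 0.1741, 0.3454, 0.0117, 0.000002, 0.4687],
        [0.000002, 0.0677, 0.2287, 0.3070, 0.000002, 0.3966],
        [0.9900, 0.0100, 0.000002, 0.000002, 0.000002, 0.000002],
        [0.000002, 0.0033, 0.8736, 0.1195, 0.000002, 0.0035],
    ],
}


def normalise_rows(matrix: List[List[float]]) -> List[List[float]]:
    """Rescale each row to sum to 1 (the published values are rounded)."""
    out = []
    for row in matrix:
        total = sum(row)
        out.append([p / total for p in row])
    return out


def predict(prior: List[float], area: str) -> List[float]:
    """One HMM prediction step: p_{t+1}(j) = sum_i p_t(i) * A_area[i][j]."""
    a = normalise_rows(TRANSITIONS[area])
    n = len(STATES)
    return [sum(prior[i] * a[i][j] for i in range(n)) for j in range(n)]


# A pedestrian known to be walking at epoch t.
walking = [0.0, 0.0, 1.0, 0.0, 0.0, 0.0]
for area in TRANSITIONS:
    nxt = predict(walking, area)
    best = max(range(len(STATES)), key=nxt.__getitem__)
    print(area, STATES[best], round(nxt[best], 3))
```

With these values, the most likely next state for a walking pedestrian is Walking in the working room but Turning at an intersection. This shows how knowing the area type can sharpen the motion-state prediction.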

| Motion t / Motion t + 1 | Sitting | Standing | Walking | Running | Falling | Turning |
|---|---|---|---|---|---|---|
| Sitting | 0.8664 | 0.1336 | 0.000002 | 0.000002 | 0.000002 | 0.000002 |
| Standing | 0.3259 | 0.2637 | 0.3932 | 0.000002 | 0.000002 | 0.0172 |
| Walking | 0.0008 | 0.0821 | 0.8522 | 0.000022 | 0.000008 | 0.0648 |
| Running | 0.000002 | 0.0369 | 0.1497 | 0.7914 | 0.000009 | 0.0219 |
| Falling | 0.9942 | 0.0058 | 0.000002 | 0.000002 | 0.000002 | 0.000002 |
| Turning | 0.000002 | 0.1180 | 0.8750 | 0.000004 | 0.000006 | 0.0070 |
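A transition matrix such as the one above is typically combined with per-epoch observation likelihoods to decode a motion-state sequence. The following is a minimal sketch of the standard Viterbi algorithm working in log space. It uses this six-state matrix. The observation likelihoods stand in for a motion classifier's output and are hypothetical, as are the names `A` and `viterbi`. The sketch does not claim to be the paper's decoder. All likelihoods and initial probabilities must be strictly positive, since their logarithms are taken.

```python
import math
from typing import List, Sequence

STATES = ["Sitting", "Standing", "Walking", "Running", "Falling", "Turning"]

# Rows: motion at t; columns: motion at t + 1 (values from the table above).
A = [
    [0.8664, 0.1336, 0.000002, 0.000002, 0.000002, 0.000002],
    [0.3259, 0.2637, 0.3932, 0.000002, 0.000002, 0.0172],
    [0.0008, 0.0821, 0.8522, 0.000022, 0.000008, 0.0648],
    [0.000002, 0.0369, 0.1497, 0.7914, 0.000009, 0.0219],
    [0.9942, 0.0058, 0.000002, 0.000002, 0.000002, 0.000002],
    [0.000002, 0.1180, 0.8750, 0.000004, 0.000006, 0.0070],
]


def viterbi(likelihoods: Sequence[Sequence[float]],
            initial: Sequence[float]) -> List[str]:
    """Most probable state sequence given likelihoods[t][j] = p(obs_t | state j)."""
    n = len(STATES)
    log_a = [[math.log(p) for p in row] for row in A]
    # delta[j]: best log-score of any path ending in state j at the current epoch.
    delta = [math.log(initial[j]) + math.log(likelihoods[0][j]) for j in range(n)]
    back: List[List[int]] = []  # back[t][j]: best predecessor of state j
    for obs in likelihoods[1:]:
        ptr, new_delta = [], []
        for j in range(n):
            i_best = max(range(n), key=lambda i: delta[i] + log_a[i][j])
            ptr.append(i_best)
            new_delta.append(delta[i_best] + log_a[i_best][j] + math.log(obs[j]))
        back.append(ptr)
        delta = new_delta
    # Backtrack from the best final state.
    state = max(range(n), key=delta.__getitem__)
    path = [state]
    for ptr in reversed(back):
        state = ptr[state]
        path.append(state)
    return [STATES[s] for s in reversed(path)]


# Hypothetical classifier likelihoods: epoch 1 is ambiguous and slightly
# favours Running; epochs 0 and 2 clearly favour Walking.
obs = [
    [0.02, 0.08, 0.70, 0.10, 0.02, 0.08],
    [0.02, 0.08, 0.35, 0.45, 0.02, 0.08],
    [0.02, 0.08, 0.70, 0.10, 0.02, 0.08],
]
print(viterbi(obs, [1 / 6] * 6))  # ['Walking', 'Walking', 'Walking']
```

Picking the most likely state at each epoch independently would give Walking, Running, Walking. The very small Walking-to-Running probability (0.000022) lets the decoder smooth out the isolated Running epoch.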

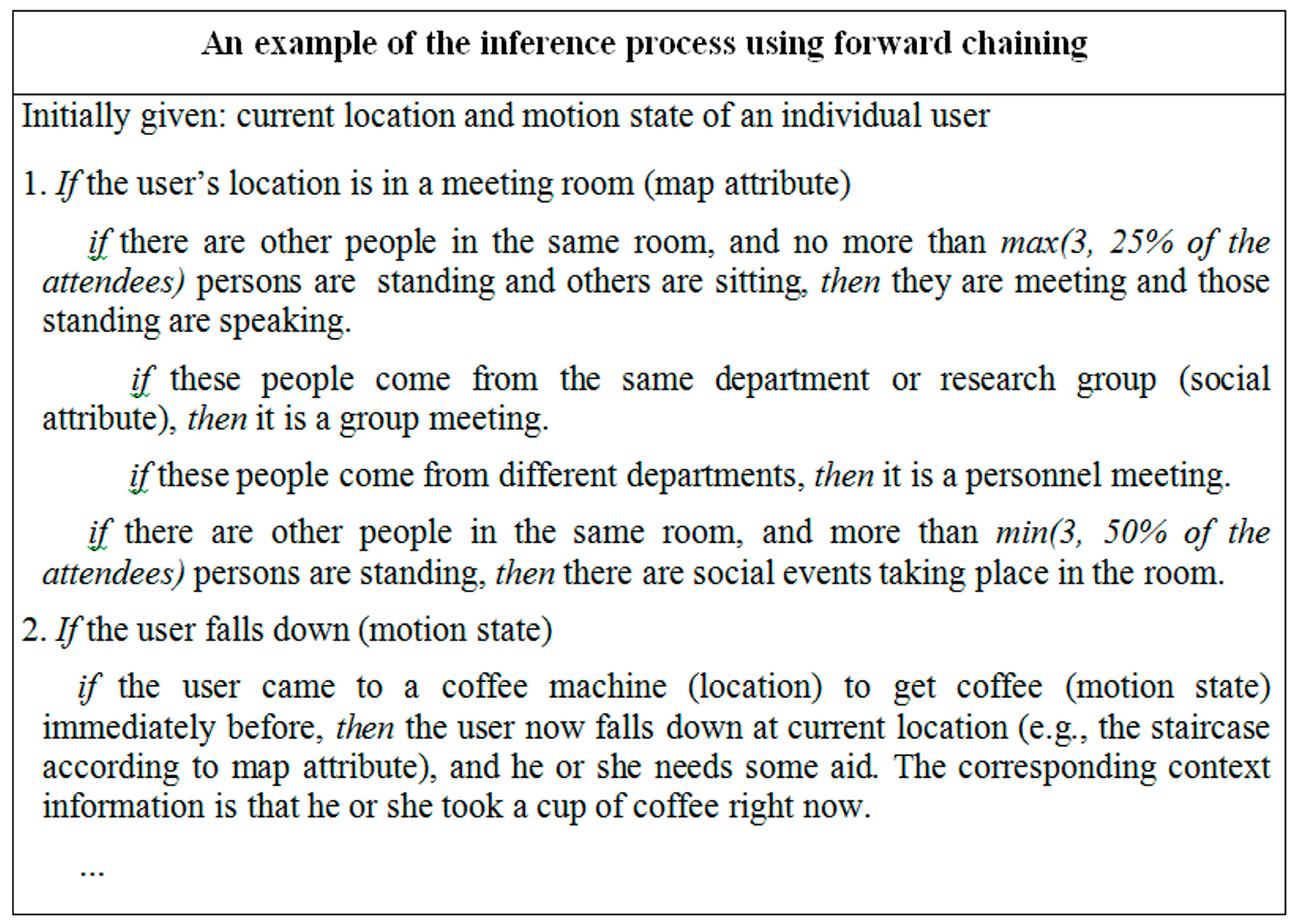

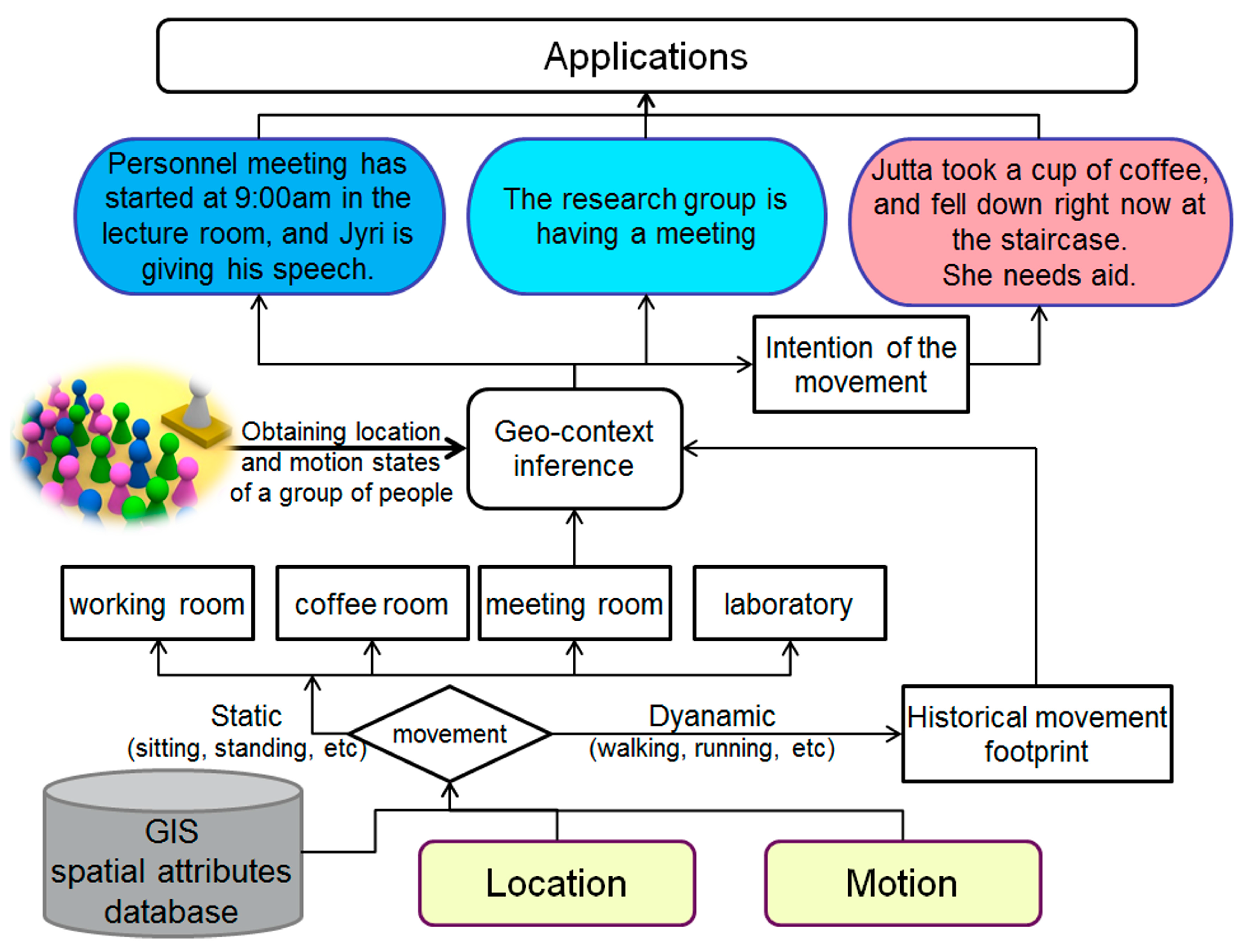

3.4. Geo-Context Inference and Interpretation

4. Conclusions and Outlook

Acknowledgments

Author Contributions

Conflicts of Interest


© 2015 by the authors; licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution license (http://creativecommons.org/licenses/by/4.0/).


Liu, J.; Zhu, L.; Wang, Y.; Liang, X.; Hyyppä, J.; Chu, T.; Liu, K.; Chen, R. Reciprocal Estimation of Pedestrian Location and Motion State toward a Smartphone Geo-Context Computing Solution. Micromachines 2015, 6, 699-717. https://doi.org/10.3390/mi6060699
