Comparing Machine and Deep Learning Methods for the Phenology-Based Classification of Land Cover Types in the Amazon Biome Using Sentinel-1 Time Series

Abstract

1. Introduction

The objectives of this study are to:

- Describe the phenological patterns of land-cover/land-use types;

- Characterize erosion/accretion changes in coastal and fluvial environments;

- Evaluate how well VV-only, VH-only, and combined VV and VH (VV&VH) datasets differentiate land-cover/land-use classes;

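- Compare the time-series classification performance of five traditional machine learning models, namely random forest (RF), XGBoost, support vector machine (SVM), k-nearest neighbors (k-NN), and multilayer perceptron (MLP), with that of four recurrent neural network (RNN) models, namely long short-term memory (LSTM), bidirectional LSTM (Bi-LSTM), gated recurrent unit (GRU), and bidirectional GRU (Bi-GRU);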

- Produce a land-cover/land-use map for the Amapá region.
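Comparing the VV-only, VH-only, and VV&VH datasets named in the objectives above comes down to feeding three different feature vectors to the same classifier. The Python sketch below illustrates that idea under stated assumptions. It assumes that each pixel is stored as one backscatter value (in dB) per acquisition date for VV and for VH. It also assumes that the VV&VH vector is the simple concatenation of the two series. Finally, it implements k-NN (one of the five models listed) from scratch with Euclidean distance. The function names (`build_features`, `knn_predict`) and all pixel values are hypothetical illustrations. They are not the study's data, preprocessing, or classifier settings.

```python
import math
from collections import Counter

# Hypothetical toy samples: backscatter (dB) at three acquisition dates per
# polarization. Values are illustrative only, not measurements from the study.
TRAIN = [
    ({"VV": [-20.1, -19.8, -20.5], "VH": [-26.0, -25.4, -26.3]}, "water"),
    ({"VV": [-19.5, -20.2, -19.9], "VH": [-25.1, -25.9, -25.6]}, "water"),
    ({"VV": [-7.2, -6.9, -7.5], "VH": [-12.3, -12.0, -12.6]}, "forest"),
    ({"VV": [-6.8, -7.1, -7.0], "VH": [-11.9, -12.4, -12.2]}, "forest"),
]
QUERY = {"VV": [-7.0, -7.3, -6.9], "VH": [-12.1, -12.5, -12.0]}


def build_features(pixel, config):
    """Return one pixel's feature vector for a polarization configuration.

    "VV" and "VH" use a single series; "VV&VH" is assumed here to be the
    VV series followed by the VH series (simple concatenation).
    """
    if config == "VV":
        return list(pixel["VV"])
    if config == "VH":
        return list(pixel["VH"])
    if config == "VV&VH":
        return list(pixel["VV"]) + list(pixel["VH"])
    raise ValueError(f"unknown configuration: {config}")


def knn_predict(train_x, train_y, query, k=3):
    """Label the query by majority vote among its k nearest training samples."""
    # Sort (distance, label) pairs by Euclidean distance and keep the k closest.
    neighbours = sorted(
        (math.dist(x, query), y) for x, y in zip(train_x, train_y)
    )[:k]
    votes = Counter(label for _, label in neighbours)
    return votes.most_common(1)[0][0]


if __name__ == "__main__":
    labels = [label for _, label in TRAIN]
    # Same classifier, three input datasets: only the feature vector changes.
    for config in ("VV", "VH", "VV&VH"):
        xs = [build_features(pixel, config) for pixel, _ in TRAIN]
        q = build_features(QUERY, config)
        print(config, knn_predict(xs, labels, q))
```

Holding the classifier fixed and varying only `build_features` isolates the effect of the polarization choice. Concatenation doubles the feature dimension relative to a single polarization. A library implementation such as scikit-learn's `KNeighborsClassifier` would normally replace the hand-written `knn_predict`.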

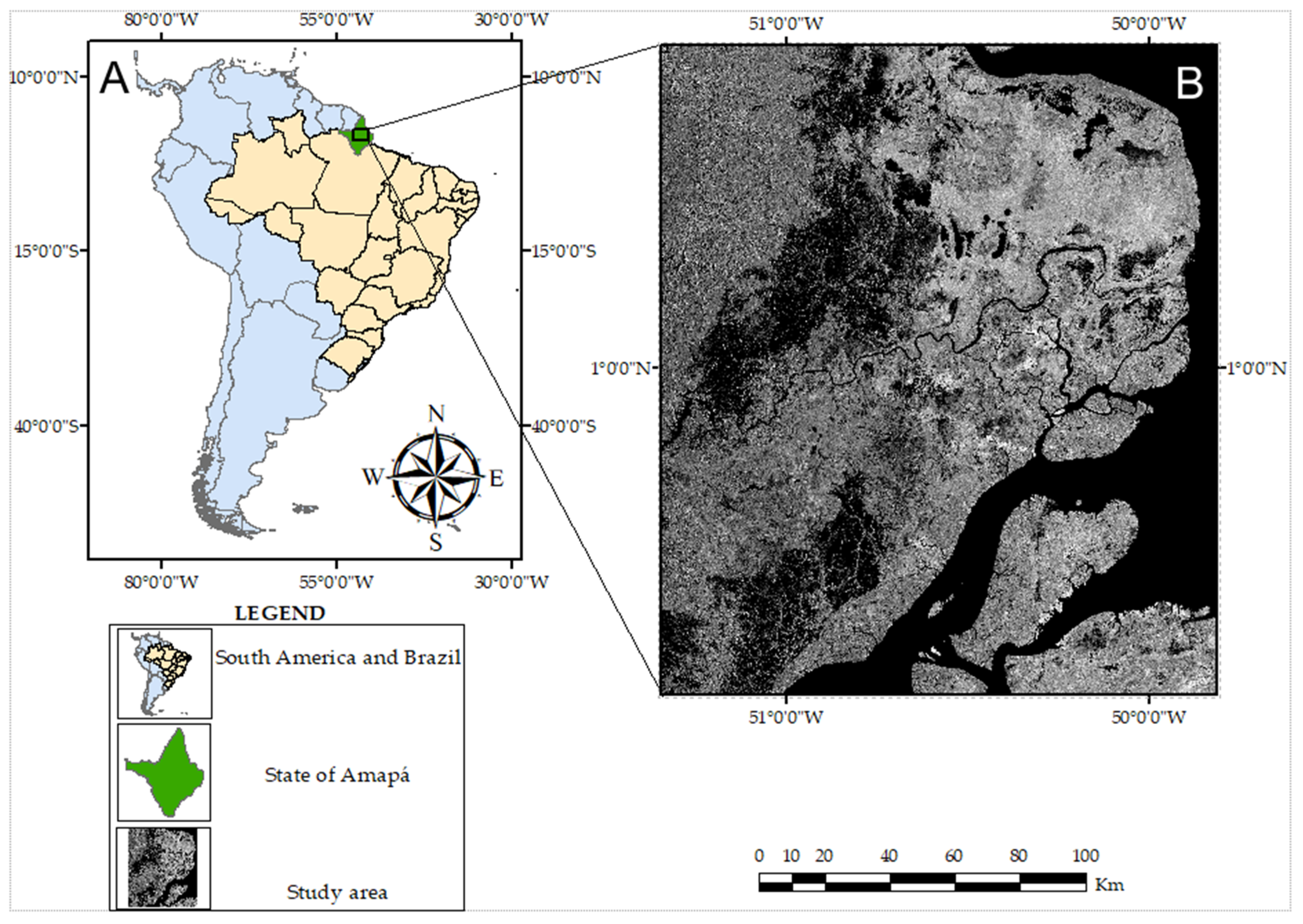

2. Study Area

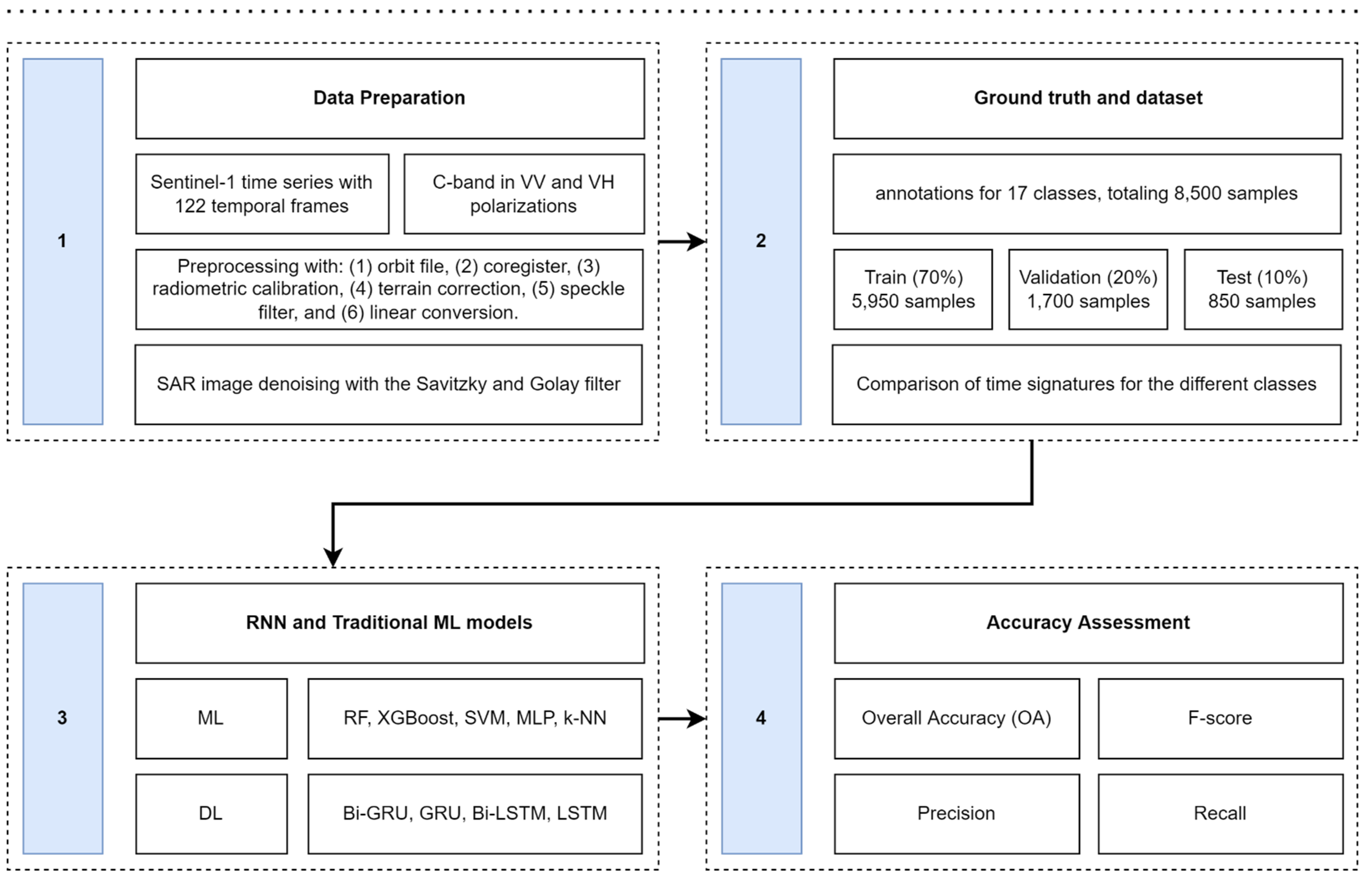

3. Materials and Methods

3.1. Data Preparation

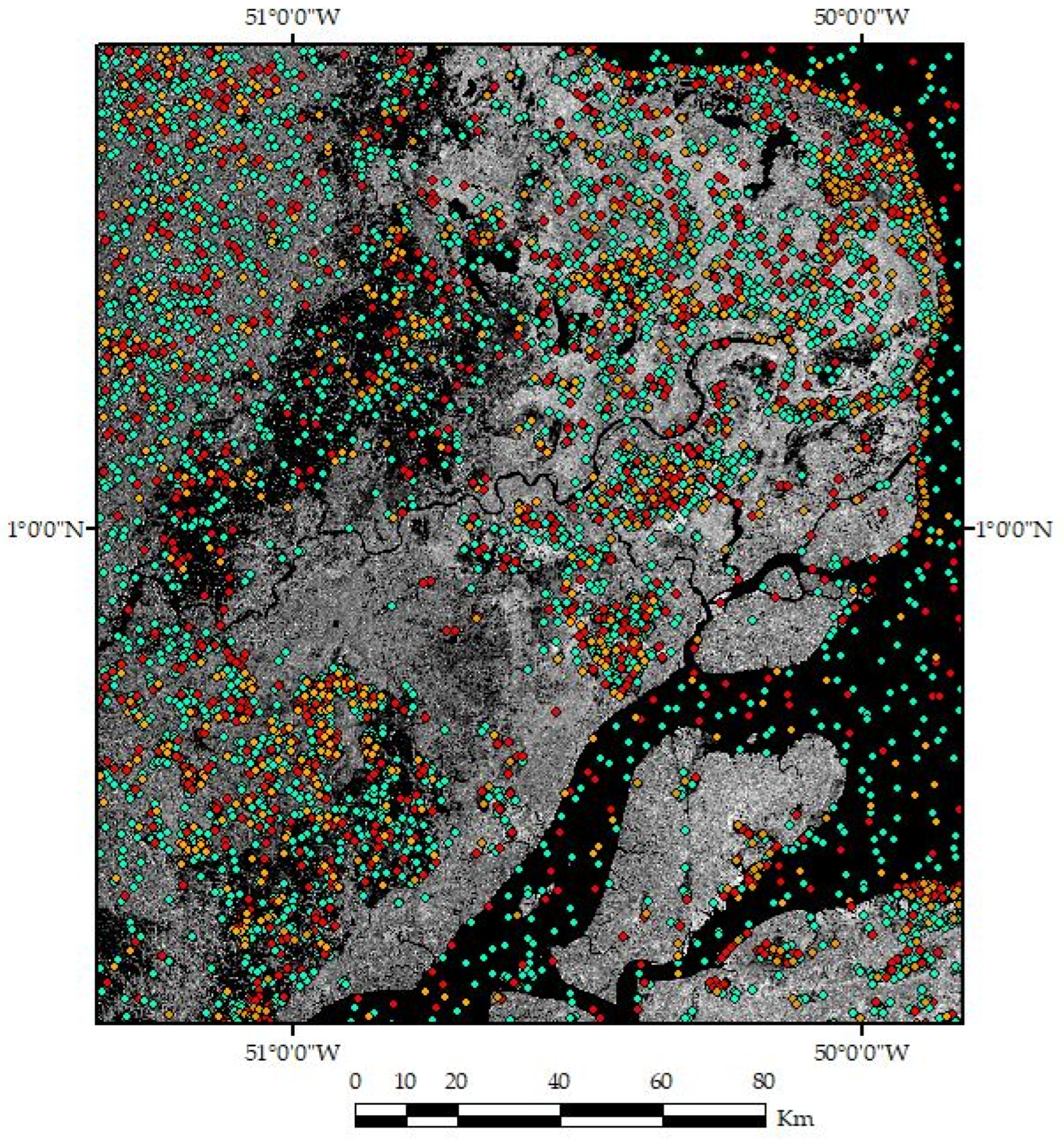

3.2. Ground Truth and Sample Dataset

3.3. Image Classification

3.3.1. Traditional Machine Learning Methods

3.3.2. Recurrent Neural Network Architectures

3.4. Accuracy Assessment

4. Results

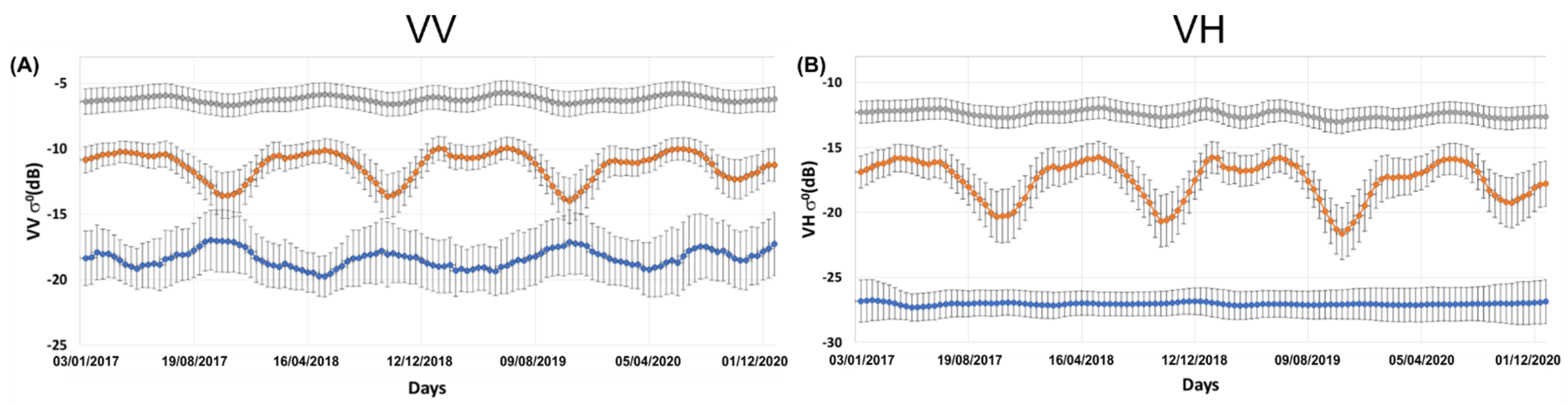

4.1. Temporal Backscattering Signatures

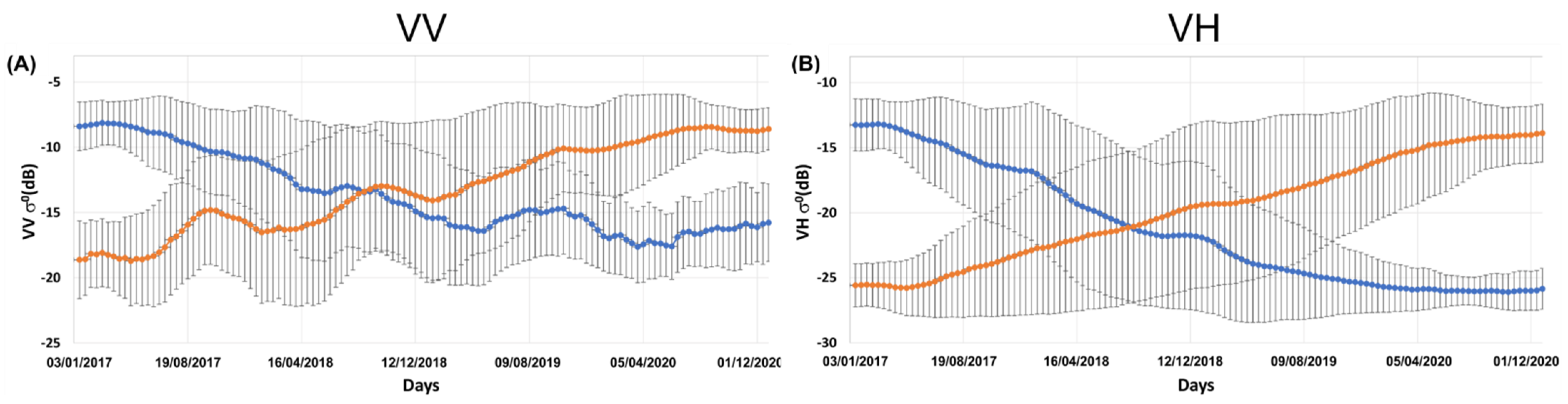

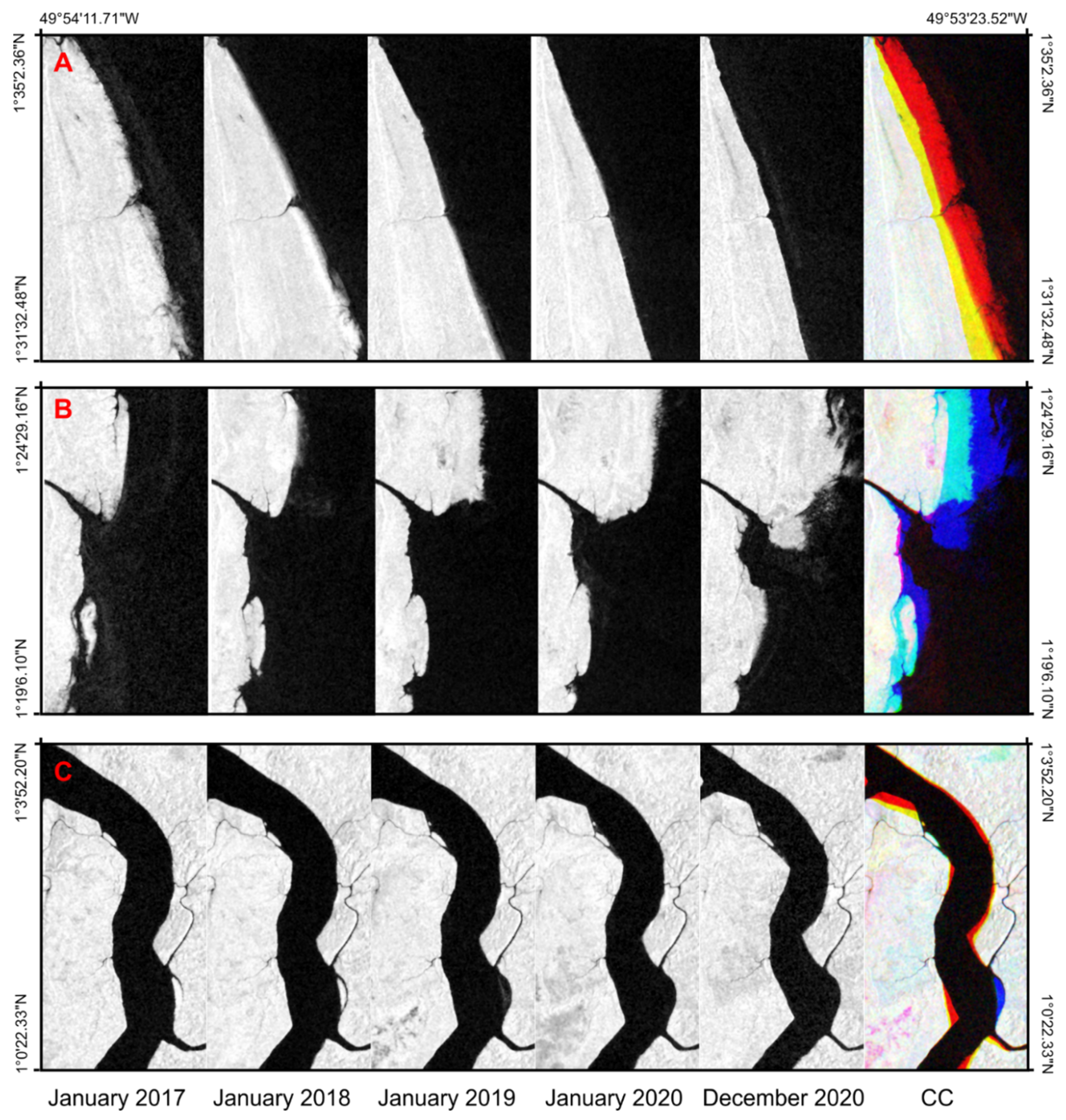

4.1.1. Water Bodies and Accretion/Erosion Changes due to Coastal and River Dynamics

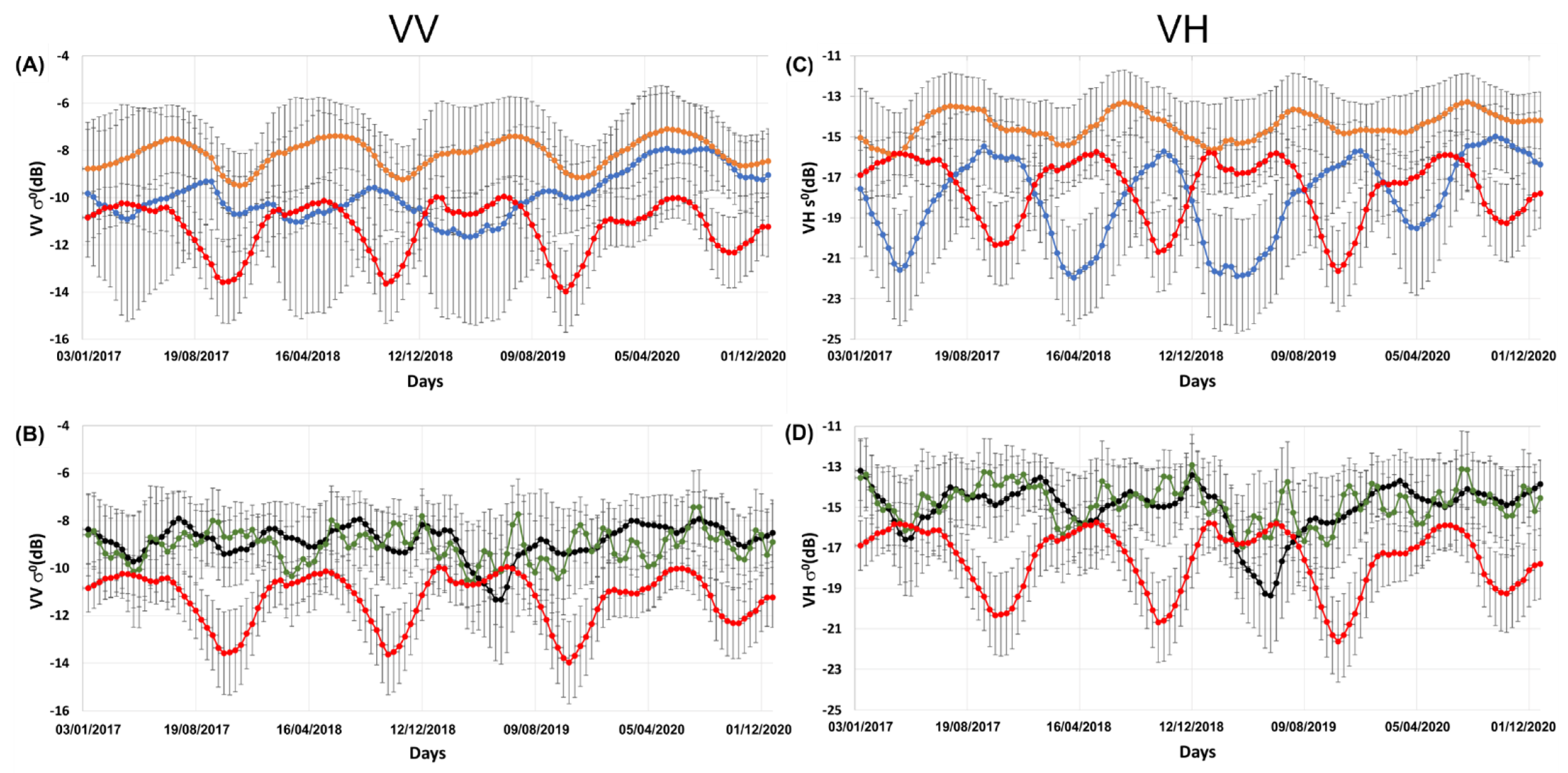

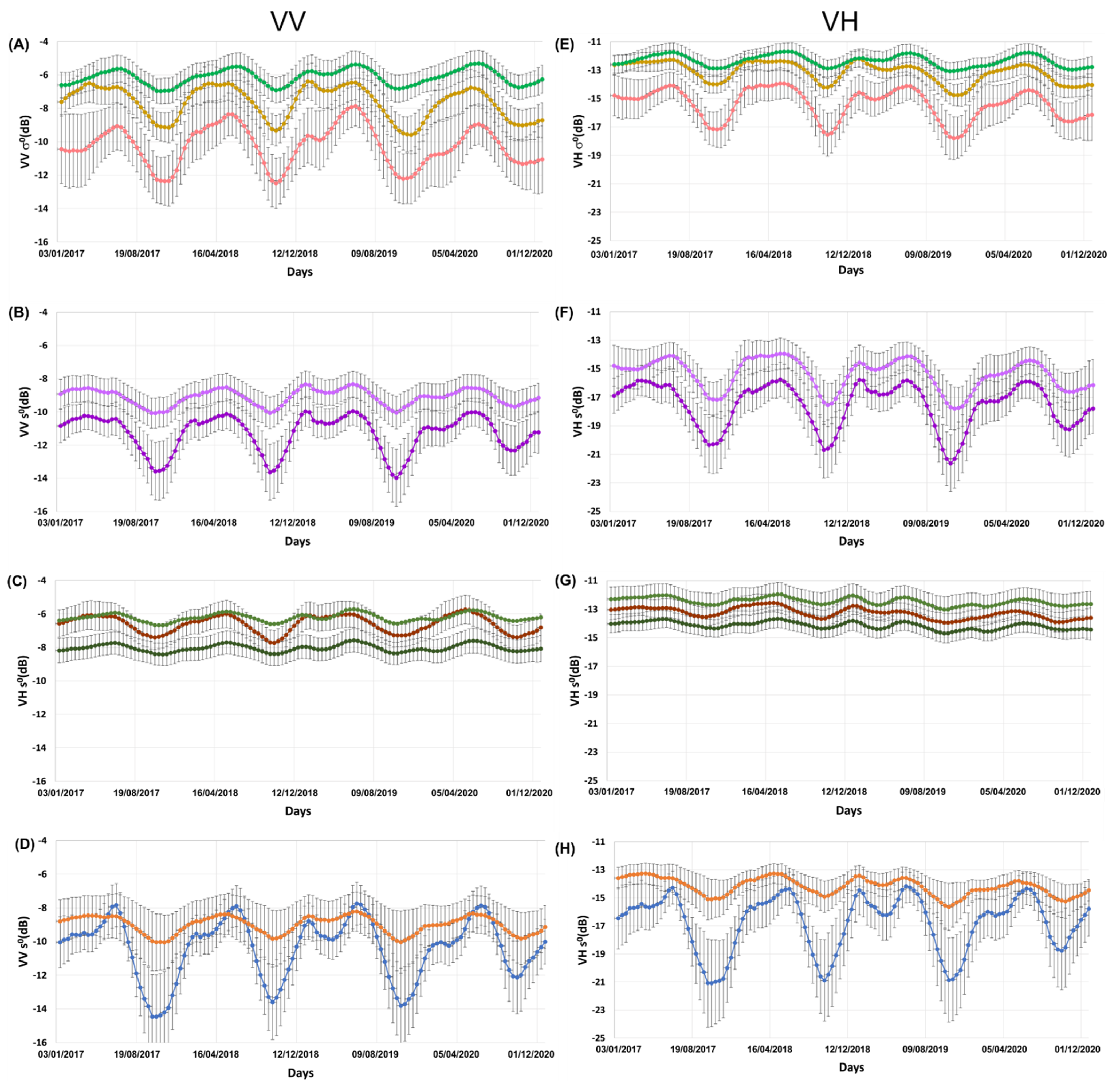

4.1.2. Phenological Patterns

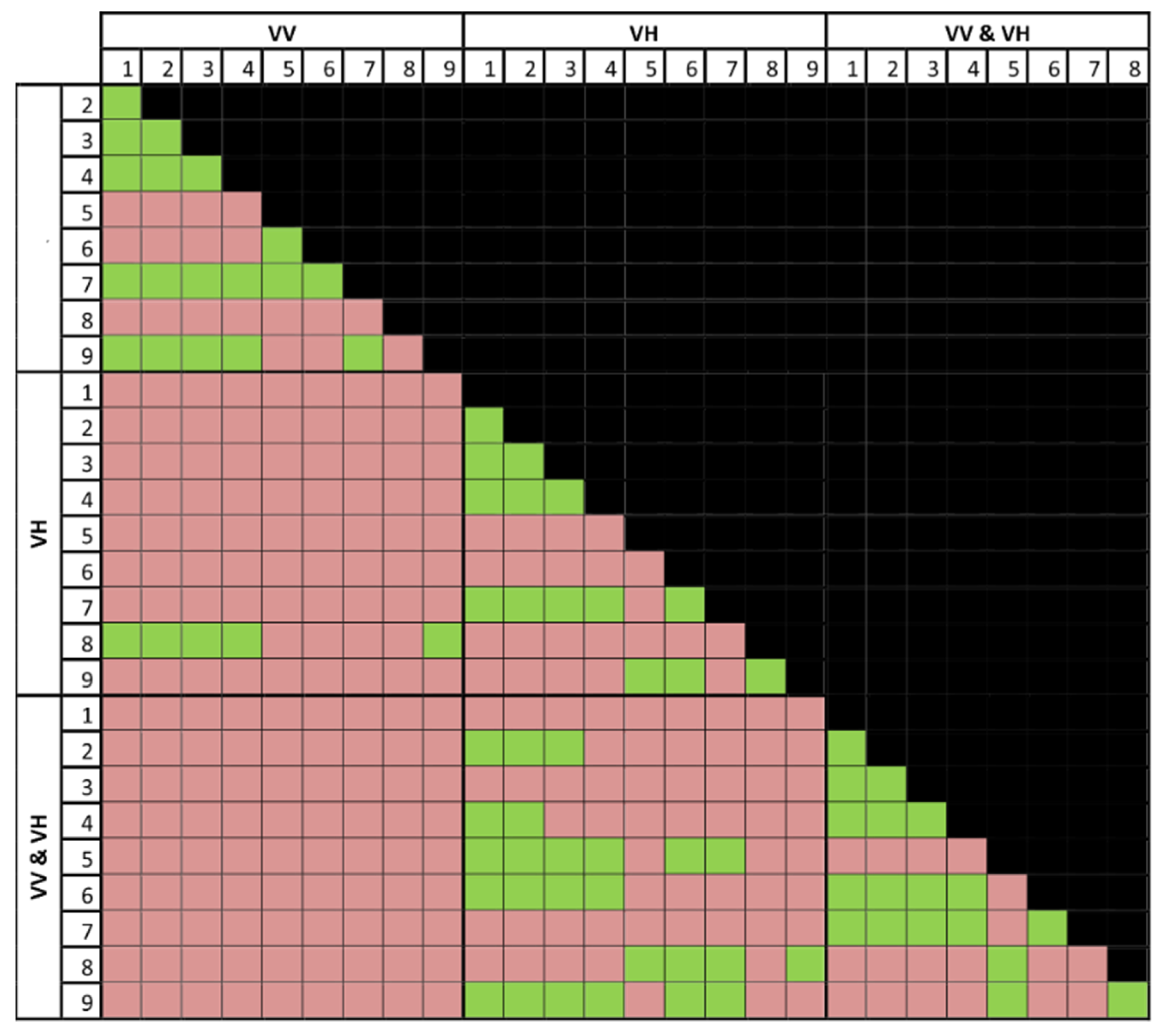

4.2. Comparison between RNN and Machine Learning Methods

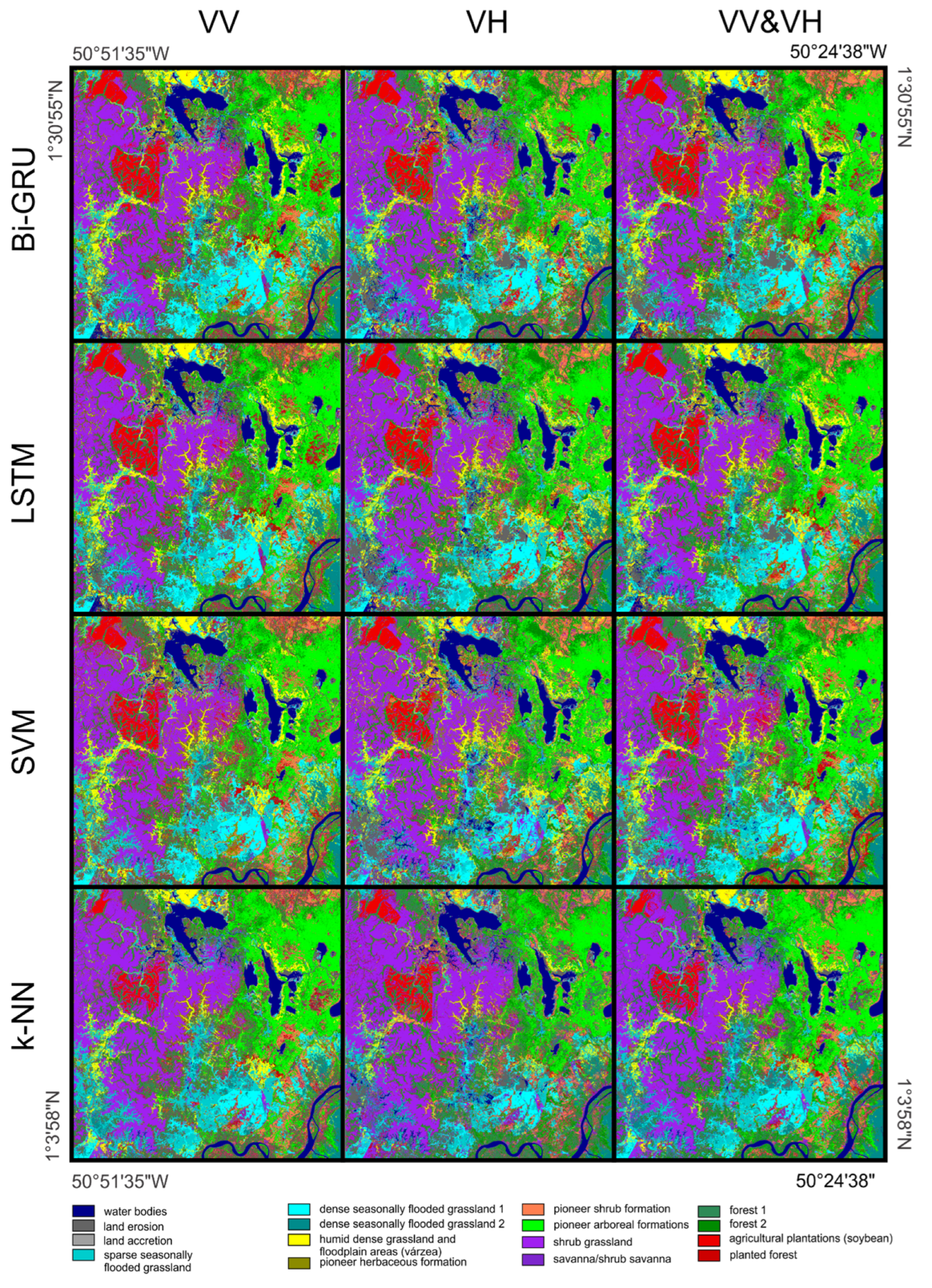

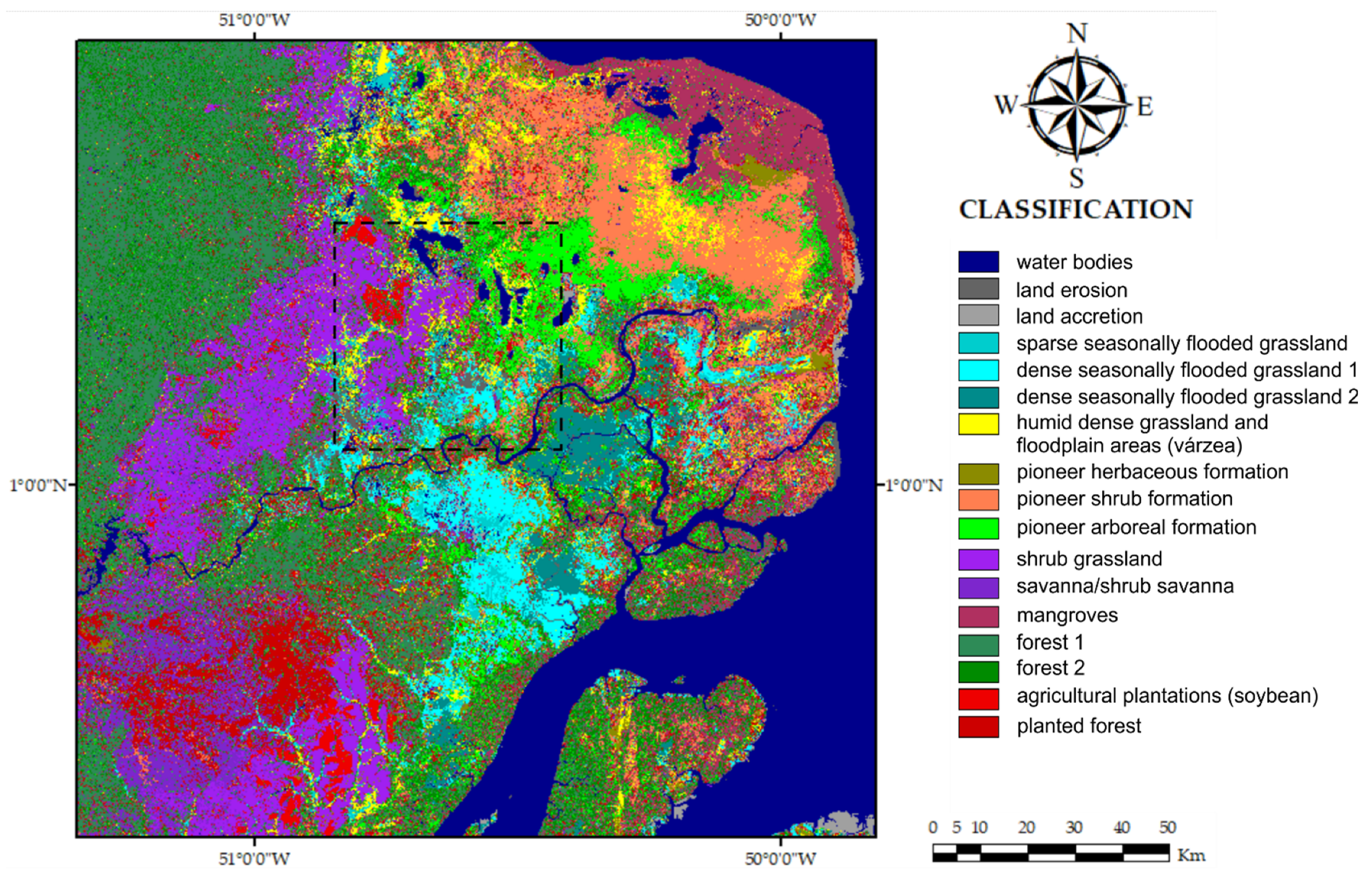

4.3. Land-Cover/Land-Use Map

5. Discussion

5.1. Temporal Signatures of Water Bodies and Alterations by Land Accretion and Erosion Processes in Coastal and River Environments

5.2. Temporal Signatures of Vegetation

5.3. Classifier Comparison

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Devecchi, M.F.; Lovo, J.; Moro, M.F.; Andrino, C.O.; Barbosa-Silva, R.G.; Viana, P.L.; Giulietti, A.M.; Antar, G.; Watanabe, M.T.C.; Zappi, D.C. Beyond forests in the Amazon: Biogeography and floristic relationships of the Amazonian savannas. Bot. J. Linn. Soc. 2021, 193, 478–503. [Google Scholar] [CrossRef]

- Antonelli, A.; Zizka, A.; Carvalho, F.A.; Scharn, R.; Bacon, C.D.; Silvestro, D.; Condamine, F.L. Amazonia is the primary source of Neotropical biodiversity. Proc. Natl. Acad. Sci. USA 2018, 115, 6034–6039. [Google Scholar] [CrossRef] [PubMed]

- Xie, Y.; Sha, Z.; Yu, M. Remote sensing imagery in vegetation mapping: A review. J. Plant Ecol. 2008, 1, 9–23. [Google Scholar] [CrossRef]

- Lechner, A.M.; Foody, G.M.; Boyd, D.S. Applications in Remote Sensing to Forest Ecology and Management. One Earth 2020, 2, 405–412. [Google Scholar] [CrossRef]

- De Carvalho, W.D.; Mustin, K. The highly threatened and little known Amazonian savannahs. Nat. Ecol. Evol. 2017, 1, 1–3. [Google Scholar] [CrossRef]

- Pires, J.M.; Prance, G.T. The vegetation types of the Brazilian Amazon. In Amazonia: Key Environments; Prance, G.T., Lovejoy, T.E., Eds.; Pergamon Press: New York, NY, USA, 1985; pp. 109–145. ISBN 9780080307763. [Google Scholar]

- de Castro Dias TC, A.; da Cunha, A.C.; da Silva JM, C. Return on investment of the ecological infrastructure in a new forest frontier in Brazilian Amazonia. Biol. Conserv. 2016, 194, 184–193. [Google Scholar] [CrossRef]

- Misra, G.; Cawkwell, F.; Wingler, A. Status of phenological research using sentinel-2 data: A review. Remote Sens. 2020, 12, 2760. [Google Scholar] [CrossRef]

- Caparros-Santiago, J.A.; Rodriguez-Galiano, V.; Dash, J. Land surface phenology as indicator of global terrestrial ecosystem dynamics: A systematic review. ISPRS J. Photogramm. Remote Sens. 2021, 171, 330–347. [Google Scholar] [CrossRef]

- Gao, F.; Zhang, X. Mapping Crop Phenology in Near Real-Time Using Satellite Remote Sensing: Challenges and Opportunities. J. Remote Sens. 2021, 2021, 1–14. [Google Scholar] [CrossRef]

- Bajocco, S.; Raparelli, E.; Teofili, T.; Bascietto, M.; Ricotta, C. Text Mining in Remotely Sensed Phenology Studies: A Review on Research Development, Main Topics, and Emerging Issues. Remote Sens. 2019, 11, 2751. [Google Scholar] [CrossRef] [Green Version]

- Broich, M.; Huete, A.; Paget, M.; Ma, X.; Tulbure, M.; Coupe, N.R.; Evans, B.; Beringer, J.; Devadas, R.; Davies, K.; et al. A spatially explicit land surface phenology data product for science, monitoring and natural resources management applications. Environ. Model. Softw. 2015, 64, 191–204. [Google Scholar] [CrossRef]

- D’Odorico, P.; Gonsamo, A.; Gough, C.M.; Bohrer, G.; Morison, J.; Wilkinson, M.; Hanson, P.J.; Gianelle, D.; Fuentes, J.D.; Buchmann, N. The match and mismatch between photosynthesis and land surface phenology of deciduous forests. Agric. For. Meteorol. 2015, 214–215, 25–38. [Google Scholar] [CrossRef]

- Richardson, A.D.; Keenan, T.F.; Migliavacca, M.; Ryu, Y.; Sonnentag, O.; Toomey, M. Climate change, phenology, and phenological control of vegetation feedbacks to the climate system. Agric. For. Meteorol. 2013, 169, 156–173. [Google Scholar] [CrossRef]

- Workie, T.G.; Debella, H.J. Climate change and its effects on vegetation phenology across ecoregions of Ethiopia. Glob. Ecol. Conserv. 2018, 13, e00366. [Google Scholar] [CrossRef]

- Piao, S.; Liu, Q.; Chen, A.; Janssens, I.A.; Fu, Y.; Dai, J.; Liu, L.; Lian, X.; Shen, M.; Zhu, X. Plant phenology and global climate change: Current progresses and challenges. Glob. Chang. Biol. 2019, 25, 1922–1940. [Google Scholar] [CrossRef]

- Morellato, L.P.C.; Alberton, B.; Alvarado, S.T.; Borges, B.; Buisson, E.; Camargo, M.G.G.; Cancian, L.F.; Carstensen, D.W.; Escobar, D.F.E.; Leite, P.T.P.; et al. Linking plant phenology to conservation biology. Biol. Conserv. 2016, 195, 60–72. [Google Scholar] [CrossRef]

- Rocchini, D.; Andreo, V.; Förster, M.; Garzon-Lopez, C.X.; Gutierrez, A.P.; Gillespie, T.W.; Hauffe, H.C.; He, K.S.; Kleinschmit, B.; Mairota, P.; et al. Potential of remote sensing to predict species invasions. Prog. Phys. Geogr. Earth Environ. 2015, 39, 283–309. [Google Scholar] [CrossRef]

- Evangelista, P.; Stohlgren, T.; Morisette, J.; Kumar, S. Mapping Invasive Tamarisk (Tamarix): A Comparison of Single-Scene and Time-Series Analyses of Remotely Sensed Data. Remote Sens. 2009, 1, 519–533. [Google Scholar] [CrossRef]

- Nguyen, L.H.; Henebry, G.M. Characterizing Land Use/Land Cover Using Multi-Sensor Time Series from the Perspective of Land Surface Phenology. Remote Sens. 2019, 11, 1677. [Google Scholar] [CrossRef]

- Potgieter, A.B.; Zhao, Y.; Zarco-Tejada, P.J.; Chenu, K.; Zhang, Y.; Porker, K.; Biddulph, B.; Dang, Y.P.; Neale, T.; Roosta, F.; et al. Evolution and application of digital technologies to predict crop type and crop phenology in agriculture. In Silico Plants 2021, 3, diab017. [Google Scholar] [CrossRef]

- Wolkovich, E.M.; Cook, B.I.; Davies, T.J. Progress towards an interdisciplinary science of plant phenology: Building predictions across space, time and species diversity. New Phytol. 2014, 201, 1156–1162. [Google Scholar] [CrossRef] [PubMed]

- Park, D.S.; Newman, E.A.; Breckheimer, I.K. Scale gaps in landscape phenology: Challenges and opportunities. Trends Ecol. Evol. 2021, 36, 709–721. [Google Scholar] [CrossRef] [PubMed]

- Asner, G.P. Cloud cover in Landsat observations of the Brazilian Amazon. Int. J. Remote Sens. 2001, 22, 3855–3862. [Google Scholar] [CrossRef]

- Martins, V.S.; Novo, E.M.L.M.; Lyapustin, A.; Aragão, L.E.O.C.; Freitas, S.R.; Barbosa, C.C.F. Seasonal and interannual assessment of cloud cover and atmospheric constituents across the Amazon (2000–2015): Insights for remote sensing and climate analysis. ISPRS J. Photogramm. Remote Sens. 2018, 145, 309–327. [Google Scholar] [CrossRef]

- Batista Salgado, C.; Abílio de Carvalho, O.; Trancoso Gomes, R.A.; Fontes Guimarães, R. Cloud interference analysis in the classification of MODIS-NDVI temporal series in the Amazon region, municipality of Capixaba, Acre-Brazil. Soc. Nat. 2019, 31, e47062. [Google Scholar]

- Liu, C.-a.; Chen, Z.-x.; Shao, Y.; Chen, J.-s.; Hasi, T.; Pan, H.-z. Research advances of SAR remote sensing for agriculture applications: A review. J. Integr. Agric. 2019, 18, 506–525. [Google Scholar] [CrossRef]

- Jin, X.; Kumar, L.; Li, Z.; Feng, H.; Xu, X.; Yang, G.; Wang, J. A review of data assimilation of remote sensing and crop models. Eur. J. Agron. 2018, 92, 141–152. [Google Scholar] [CrossRef]

- David, R.M.; Rosser, N.J.; Donoghue, D.N.M. Remote sensing for monitoring tropical dryland forests: A review of current research, knowledge gaps and future directions for Southern Africa. Environ. Res. Commun. 2022, 4, 042001. [Google Scholar] [CrossRef]

- Tsyganskaya, V.; Martinis, S.; Marzahn, P.; Ludwig, R. SAR-based detection of flooded vegetation–a review of characteristics and approaches. Int. J. Remote Sens. 2018, 39, 2255–2293. [Google Scholar] [CrossRef]

- Dostálová, A.; Lang, M.; Ivanovs, J.; Waser, L.T.; Wagner, W. European wide forest classification based on sentinel-1 data. Remote Sens. 2021, 13, 337. [Google Scholar] [CrossRef]

- Dostálová, A.; Wagner, W.; Milenković, M.; Hollaus, M. Annual seasonality in Sentinel-1 signal for forest mapping and forest type classification. Int. J. Remote Sens. 2018, 39, 7738–7760. [Google Scholar] [CrossRef]

- Ling, Y.; Teng, S.; Liu, C.; Dash, J.; Morris, H.; Pastor-Guzman, J. Assessing the Accuracy of Forest Phenological Extraction from Sentinel-1 C-Band Backscatter Measurements in Deciduous and Coniferous Forests. Remote Sens. 2022, 14, 674. [Google Scholar] [CrossRef]

- Rüetschi, M.; Schaepman, M.E.; Small, D. Using multitemporal Sentinel-1 C-band backscatter to monitor phenology and classify deciduous and coniferous forests in Northern Switzerland. Remote Sens. 2018, 10, 55. [Google Scholar] [CrossRef]

- Tsyganskaya, V.; Martinis, S.; Marzahn, P. Flood Monitoring in Vegetated Areas Using Multitemporal Sentinel-1 Data: Impact of Time Series Features. Water 2019, 11, 1938. [Google Scholar] [CrossRef]

- Tsyganskaya, V.; Martinis, S.; Marzahn, P.; Ludwig, R. Detection of temporary flooded vegetation using Sentinel-1 time series data. Remote Sens. 2018, 10, 1286. [Google Scholar] [CrossRef]

- Hu, Y.; Tian, B.; Yuan, L.; Li, X.; Huang, Y.; Shi, R.; Jiang, X.; Wang, L.; Sun, C. Mapping coastal salt marshes in China using time series of Sentinel-1 SAR. ISPRS J. Photogramm. Remote Sens. 2021, 173, 122–134. [Google Scholar] [CrossRef]

- Gašparović, M.; Dobrinić, D. Comparative assessment of machine learning methods for urban vegetation mapping using multitemporal Sentinel-1 imagery. Remote Sens. 2020, 12, 1952. [Google Scholar] [CrossRef]

- Arias, M.; Campo-Bescós, M.Á.; Álvarez-Mozos, J. Crop classification based on temporal signatures of Sentinel-1 observations over Navarre province, Spain. Remote Sens. 2020, 12, 278. [Google Scholar] [CrossRef]

- Bargiel, D. A new method for crop classification combining time series of radar images and crop phenology information. Remote Sens. Environ. 2017, 198, 369–383. [Google Scholar] [CrossRef]

- Reuß, F.; Greimeister-Pfeil, I.; Vreugdenhil, M.; Wagner, W. Comparison of long short-term memory networks and random forest for sentinel-1 time series based large scale crop classification. Remote Sens. 2021, 13, 5000. [Google Scholar] [CrossRef]

- Ndikumana, E.; Minh, D.H.T.; Baghdadi, N.; Courault, D.; Hossard, L. Deep recurrent neural network for agricultural classification using multitemporal SAR Sentinel-1 for Camargue, France. Remote Sens. 2018, 10, 1217. [Google Scholar] [CrossRef] [Green Version]

- Xu, L.; Zhang, H.; Wang, C.; Zhang, B.; Liu, M. Crop classification based on temporal information using Sentinel-1 SAR time-series data. Remote Sens. 2019, 11, 53. [Google Scholar] [CrossRef]

- Planque, C.; Lucas, R.; Punalekar, S.; Chognard, S.; Hurford, C.; Owers, C.; Horton, C.; Guest, P.; King, S.; Williams, S.; et al. National Crop Mapping Using Sentinel-1 Time Series: A Knowledge-Based Descriptive Algorithm. Remote Sens. 2021, 13, 846. [Google Scholar] [CrossRef]

- Nikaein, T.; Iannini, L.; Molijn, R.A.; Lopez-Dekker, P. On the value of sentinel-1 insar coherence time-series for vegetation classification. Remote Sens. 2021, 13, 3300. [Google Scholar] [CrossRef]

- Zhao, H.; Chen, Z.; Jiang, H.; Jing, W.; Sun, L.; Feng, M. Evaluation of Three Deep Learning Models for Early Crop Classification Using Sentinel-1A Imagery Time Series—A Case Study in Zhanjiang, China. Remote Sens. 2019, 11, 2673. [Google Scholar] [CrossRef]

- Crisóstomo de Castro Filho, H.; Abílio de Carvalho Júnior, O.; Ferreira de Carvalho, O.L.; Pozzobon de Bem, P.; dos Santos de Moura, R.; Olino de Albuquerque, A.; Rosa Silva, C.; Guimarães Ferreira, P.H.; Fontes Guimarães, R.; Trancoso Gomes, R.A.; et al. Rice Crop Detection Using LSTM, Bi-LSTM, and Machine Learning Models from Sentinel-1 Time Series. Remote Sens. 2020, 12, 2655. [Google Scholar] [CrossRef]

- Torbick, N.; Chowdhury, D.; Salas, W.; Qi, J. Monitoring Rice Agriculture across Myanmar Using Time Series Sentinel-1 Assisted by Landsat-8 and PALSAR-2. Remote Sens. 2017, 9, 119. [Google Scholar] [CrossRef]

- Chang, L.; Chen, Y.; Wang, J.; Chang, Y. Rice-Field Mapping with Sentinel-1A SAR Time-Series Data. Remote Sens. 2020, 13, 103. [Google Scholar] [CrossRef]

- Song, Y.; Wang, J. Mapping winter wheat planting area and monitoring its phenology using Sentinel-1 backscatter time series. Remote Sens. 2019, 11, 449. [Google Scholar] [CrossRef]

- Nasrallah, A.; Baghdadi, N.; El Hajj, M.; Darwish, T.; Belhouchette, H.; Faour, G.; Darwich, S.; Mhawej, M. Sentinel-1 data for winter wheat phenology monitoring and mapping. Remote Sens. 2019, 11, 2228. [Google Scholar] [CrossRef]

- Li, N.; Li, H.; Zhao, J.; Guo, Z.; Yang, H. Mapping winter wheat in Kaifeng, China using Sentinel-1A time-series images. Remote Sens. Lett. 2022, 13, 503–510. [Google Scholar] [CrossRef]

- Orynbaikyzy, A.; Gessner, U.; Conrad, C. Crop type classification using a combination of optical and radar remote sensing data: A review. Int. J. Remote Sens. 2019, 40, 6553–6595. [Google Scholar] [CrossRef]

- Denize, J.; Hubert-Moy, L.; Betbeder, J.; Corgne, S.; Baudry, J.; Pottier, E. Evaluation of Using Sentinel-1 and -2 Time-Series to Identify Winter Land Use in Agricultural Landscapes. Remote Sens. 2018, 11, 37. [Google Scholar] [CrossRef]

- Dobrinić, D.; Gašparović, M.; Medak, D. Sentinel-1 and 2 time-series for vegetation mapping using random forest classification: A case study of northern croatia. Remote Sens. 2021, 13, 2321. [Google Scholar] [CrossRef]

- Mercier, A.; Betbeder, J.; Rumiano, F.; Baudry, J.; Gond, V.; Blanc, L.; Bourgoin, C.; Cornu, G.; Ciudad, C.; Marchamalo, M.; et al. Evaluation of Sentinel-1 and 2 Time Series for Land Cover Classification of Forest–Agriculture Mosaics in Temperate and Tropical Landscapes. Remote Sens. 2019, 11, 979. [Google Scholar] [CrossRef]

- Arjasakusuma, S.; Kusuma, S.S.; Rafif, R.; Saringatin, S.; Wicaksono, P. Combination of Landsat 8 OLI and Sentinel-1 SAR time-series data for mapping paddy fields in parts of west and Central Java Provinces, Indonesia. ISPRS Int. J. Geo-Inf. 2020, 9, 663. [Google Scholar] [CrossRef]

- Demarez, V.; Helen, F.; Marais-Sicre, C.; Baup, F. In-season mapping of irrigated crops using Landsat 8 and Sentinel-1 time series. Remote Sens. 2019, 11, 118. [Google Scholar] [CrossRef]

- Wang, J.; Xiao, X.; Liu, L.; Wu, X.; Qin, Y.; Steiner, J.L.; Dong, J. Mapping sugarcane plantation dynamics in Guangxi, China, by time series Sentinel-1, Sentinel-2 and Landsat images. Remote Sens. Environ. 2020, 247, 111951. [Google Scholar] [CrossRef]

- Whelen, T.; Siqueira, P. Time-series classification of Sentinel-1 agricultural data over North Dakota. Remote Sens. Lett. 2018, 9, 411–420. [Google Scholar] [CrossRef]

- Mestre-Quereda, A.; Lopez-Sanchez, J.M.; Vicente-Guijalba, F.; Jacob, A.W.; Engdahl, M.E. Time-Series of Sentinel-1 Interferometric Coherence and Backscatter for Crop-Type Mapping. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2020, 13, 4070–4084. [Google Scholar] [CrossRef]

- Amherdt, S.; Di Leo, N.C.; Balbarani, S.; Pereira, A.; Cornero, C.; Pacino, M.C. Exploiting Sentinel-1 data time-series for crop classification and harvest date detection. Int. J. Remote Sens. 2021, 42, 7313–7331. [Google Scholar] [CrossRef]

- Ma, L.; Liu, Y.; Zhang, X.; Ye, Y.; Yin, G.; Johnson, B.A. Deep learning in remote sensing applications: A meta-analysis and review. ISPRS J. Photogramm. Remote Sens. 2019, 152, 166–177. [Google Scholar] [CrossRef]

- Zhu, X.X.; Tuia, D.; Mou, L.; Xia, G.; Zhang, L.; Xu, F.; Fraundorfer, F. Deep Learning in Remote Sensing: A Comprehensive Review and List of Resources. IEEE Geosci. Remote Sens. Mag. 2017, 5, 8–36. [Google Scholar] [CrossRef]

- Wu, H.; Prasad, S. Convolutional recurrent neural networks for hyperspectral data classification. Remote Sens. 2017, 9, 298. [Google Scholar] [CrossRef]

- Paoletti, M.E.; Haut, J.M.; Plaza, J.; Plaza, A. Scalable recurrent neural network for hyperspectral image classification. J. Supercomput. 2020, 76, 8866–8882. [Google Scholar] [CrossRef]

- Ma, A.; Filippi, A.M.; Wang, Z.; Yin, Z. Hyperspectral image classification using similarity measurements-based deep recurrent neural networks. Remote Sens. 2019, 11, 194. [Google Scholar] [CrossRef]

- Hochreiter, S.; Schmidhuber, J. Long Short-Term Memory. Neural Comput. 1997, 9, 1735–1780. [Google Scholar] [CrossRef]

- Cho, K.; Van Merriënboer, B.; Gulcehre, C.; Bahdanau, D.; Bougares, F.; Schwenk, H.; Bengio, Y. Learning phrase representations using RNN encoder-decoder for statistical machine translation. In Proceedings of the EMNLP 2014 Conference on Empirical Methods in Natural Language Processing; Association for Computational Linguistics: Doha, Qatar, 2014; pp. 1724–1734. [Google Scholar] [CrossRef]

- He, T.; Xie, C.; Liu, Q.; Guan, S.; Liu, G. Evaluation and comparison of random forest and A-LSTM networks for large-scale winter wheat identification. Remote Sens. 2019, 11, 1665. [Google Scholar] [CrossRef]

- Reddy, D.S.; Prasad, P.R.C. Prediction of vegetation dynamics using NDVI time series data and LSTM. Model. Earth Syst. Environ. 2018, 4, 409–419. [Google Scholar] [CrossRef]

- Rußwurm, M.; Körner, M. Multi-temporal land cover classification with sequential recurrent encoders. ISPRS Int. J. Geo-Inf. 2018, 7, 129. [Google Scholar] [CrossRef] [Green Version]

- Sun, Z.; Di, L.; Fang, H. Using long short-term memory recurrent neural network in land cover classification on Landsat and Cropland data layer time series. Int. J. Remote Sens. 2019, 40, 593–614. [Google Scholar] [CrossRef]

- Zhong, L.; Hu, L.; Zhou, H. Deep learning based multi-temporal crop classification. Remote Sens. Environ. 2019, 221, 430–443. [Google Scholar] [CrossRef]

- Ienco, D.; Gaetano, R.; Dupaquier, C.; Maurel, P. Land Cover Classification via Multitemporal Spatial Data by Deep Recurrent Neural Networks. IEEE Geosci. Remote Sens. Lett. 2017, 14, 1685–1689. [Google Scholar] [CrossRef]

- Minh, D.H.T.; Ienco, D.; Gaetano, R.; Lalande, N.; Ndikumana, E.; Osman, F.; Maurel, P. Deep Recurrent Neural Networks for Winter Vegetation Quality Mapping via Multitemporal SAR Sentinel-1. IEEE Geosci. Remote Sens. Lett. 2018, 15, 464–468. [Google Scholar] [CrossRef]

- Dubreuil, V.; Fante, K.P.; Planchon, O.; Neto, J.L.S. Os tipos de climas anuais no Brasil: Uma aplicação da classificação de Köppen de 1961 a 2015. Confins 2018, 37. [Google Scholar] [CrossRef]

- Rabelo, B.V.; do Carmo Pinto, A.; do Socorro Cavalcante Simas, A.P.; Tardin, A.T.; Fernandes, A.V.; de Souza, C.B.; Monteiro, E.M.P.B.; da Silva Facundes, F.; de Souza Ávila, J.E.; de Souza, J.S.A.; et al. Macrodiagnóstico do Estado do Amapá: Primeira aproximação do ZEE; Instituto de Pesquisas Científicas e Tecnológicas do Estado do Amapá (IPEA): Macapá, Brazil, 2008; Volume 1. [Google Scholar]

- De Menezes, M.P.M.; Berger, U.; Mehlig, U. Mangrove vegetation in Amazonia: A review of studies from the coast of Pará and Maranhão States, north Brazil. Acta Amaz. 2008, 38, 403–419. [Google Scholar] [CrossRef]

- De Almeida, P.M.M.; Madureira Cruz, C.B.; Amaral, F.G.; Almeida Furtado, L.F.; Dos Santos Duarte, G.; Da Silva, G.F.; Silva De Barros, R.; Pereira Abrantes Marques, J.V.F.; Cupertino Bastos, R.M.; Dos Santos Rosario, E.; et al. Mangrove Typology: A Proposal for Mapping based on High Spatial Resolution Orbital Remote Sensing. J. Coast. Res. 2020, 95, 1–5. [Google Scholar] [CrossRef]

- Cohen, M.C.L.; Lara, R.J.; Smith, C.B.; Angélica, R.S.; Dias, B.S.; Pequeno, T. Wetland dynamics of Marajó Island, northern Brazil, during the last 1000 years. CATENA 2008, 76, 70–77. [Google Scholar] [CrossRef]

- de Oliveira Santana, L. Uso de Sensoriamento Remoto Para Identificação e Mapeamento do Paleodelta do Macarry, Amapá. Master’s Thesis, Federal University of Pará, Belém, Brazil, 2011. [Google Scholar]

- Silveira, O.F.M.d. A Planície Costeira do Amapá: Dinâmica de Ambiente Costeiro Influenciado Por Grandes Fontes Fluviais Quaternárias. Ph.D. Thesis, Federal University of Pará, Belém, Brazil, 1998. [Google Scholar]

- Jardim, K.A.; dos Santos, V.F.; de Oliveira, U.R. Paleodrainage Systems and Connections to the Southern Lacustrine Belt applying Remote Sansing Data, Amazon Coast, Brazil. J. Coast. Res. 2018, 85, 671–675. [Google Scholar] [CrossRef]

- da Costa Neto, S.V. Fitofisionomia e Florística de Savanas do Amapá. Federal Rural University of the Amazon. Ph.D. Thesis, Federal Rural University of the Amazon, Belém, Brazil, 2014. [Google Scholar]

- Azevedo, L.G. Tipos eco-fisionomicos de vegetação do Território Federal do Amapá. Rev. Bras. Geogr. 1967, 2, 25–51. [Google Scholar]

- Veloso, H.P.; Rangel-Filho, A.L.R.; Lima, J.C.A. Classificação da Vegetação Brasileira, Adaptada a um Sistema Universal; IBGE—Departamento de Recursos Naturais e Estudos Ambientais: Rio de Janeiro, Brazil, 1991; ISBN 8524003847. [Google Scholar]

- Brasil. Departamento Nacional da Produção Mineral. Projeto RADAM. In Folha NA/NB.22-Macapá; Geologia, Geomorfologia, Solos, Vegetação e Uso Potencial da Terra; Departamento Nacional da Produção Mineral: Rio de Janeiro, Brazil, 1974. [Google Scholar]

- Aguiar, A.; Barbosa, R.I.; Barbosa, J.B.F.; Mourão, M. Invasion of Acacia mangium in Amazonian savannas following planting for forestry. Plant Ecol. Divers. 2014, 7, 359–369. [Google Scholar] [CrossRef]

- Rauber, A.L. A Dinâmica da Paisagem No Estado do Amapá: Análise Socioambiental Para o Eixo de Influência das Rodovias BR-156 e BR-210. Ph.D. Thesis, Federal University of Goiás, Goiânia, Brazil, 2019. [Google Scholar]

- Hilário, R.R.; de Toledo, J.J.; Mustin, K.; Castro, I.J.; Costa-Neto, S.V.; Kauano, É.E.; Eilers, V.; Vasconcelos, I.M.; Mendes-Junior, R.N.; Funi, C.; et al. The Fate of an Amazonian Savanna: Government Land-Use Planning Endangers Sustainable Development in Amapá, the Most Protected Brazilian State. Trop. Conserv. Sci. 2017, 10, 1940082917735416. [Google Scholar] [CrossRef]

- Mustin, K.; Carvalho, W.D.; Hilário, R.R.; Costa-Neto, S.V.; Silva, C.R.; Vasconcelos, I.M.; Castro, I.J.; Eilers, V.; Kauano, É.E.; Mendes, R.N.G.; et al. Biodiversity, threats and conservation challenges in the Cerrado of Amapá, an Amazonian savanna. Nat. Conserv. 2017, 22, 107–127. [Google Scholar] [CrossRef]

- Torres, R.; Snoeij, P.; Geudtner, D.; Bibby, D.; Davidson, M.; Attema, E.; Potin, P.; Rommen, B.Ö.; Floury, N.; Brown, M.; et al. GMES Sentinel-1 mission. Remote Sens. Environ. 2012, 120, 9–24. [Google Scholar] [CrossRef]

- Hüttich, C.; Gessner, U.; Herold, M.; Strohbach, B.J.; Schmidt, M.; Keil, M.; Dech, S. On the suitability of MODIS time series metrics to map vegetation types in dry savanna ecosystems: A case study in the Kalahari of NE Namibia. Remote Sens. 2009, 1, 620–643. [Google Scholar] [CrossRef]

- Filipponi, F. Sentinel-1 GRD Preprocessing Workflow. Proceedings 2019, 18, 11. [Google Scholar] [CrossRef]

- Savitzky, A.; Golay, M.J.E. Smoothing and Differentiation of Data by Simplified Least Squares Procedures. Anal. Chem. 1964, 36, 1627–1639. [Google Scholar] [CrossRef]

- Chen, J.; Jönsson, P.; Tamura, M.; Gu, Z.; Matsushita, B.; Eklundh, L. A simple method for reconstructing a high-quality NDVI time-series data set based on the Savitzky-Golay filter. Remote Sens. Environ. 2004, 91, 332–344. [Google Scholar] [CrossRef]

- Singh, R.; Sinha, V.; Joshi, P.; Kumar, M. Use of Savitzky-Golay Filters to Minimize Multi-temporal Data Anomaly in Land use Land cover mapping. Indian J. For. 2019, 42, 362–368. [Google Scholar] [CrossRef]

- Soudani, K.; Delpierre, N.; Berveiller, D.; Hmimina, G.; Vincent, G.; Morfin, A.; Dufrêne, É. Potential of C-band Synthetic Aperture Radar Sentinel-1 time-series for the monitoring of phenological cycles in a deciduous forest. Int. J. Appl. Earth Obs. Geoinf. 2021, 104, 102505. [Google Scholar] [CrossRef]

- Pang, J.; Zhang, R.; Yu, B.; Liao, M.; Lv, J.; Xie, L.; Li, S.; Zhan, J. Pixel-level rice planting information monitoring in Fujin City based on time-series SAR imagery. Int. J. Appl. Earth Obs. Geoinf. 2021, 104, 102551. [Google Scholar] [CrossRef]

- Abade, N.A.; Júnior, O.; Guimarães, R.F.; de Oliveira, S.N.; De Carvalho, O.A.; Guimarães, R.F.; de Oliveira, S.N. Comparative Analysis of MODIS Time-Series Classification Using Support Vector Machines and Methods Based upon Distance and Similarity Measures in the Brazilian Cerrado-Caatinga Boundary. Remote Sens. 2015, 7, 12160–12191. [Google Scholar] [CrossRef]

- Ren, J.; Chen, Z.; Zhou, Q.; Tang, H. Regional yield estimation for winter wheat with MODIS-NDVI data in Shandong, China. Int. J. Appl. Earth Obs. Geoinf. 2008, 10, 403–413. [Google Scholar] [CrossRef]

- Geng, L.; Ma, M.; Wang, X.; Yu, W.; Jia, S.; Wang, H. Comparison of Eight Techniques for Reconstructing Multi-Satellite Sensor Time-Series NDVI Data Sets in the Heihe River Basin, China. Remote Sens. 2014, 6, 2024–2049. [Google Scholar] [CrossRef]

- IBGE Instituto Brasileiro de Geografia e Estatística. Vegetação 1:250.000. Available online: https://www.ibge.gov.br/geociencias/informacoes-ambientais/vegetacao/22453-cartas-1-250-000.html?=&t=downloads (accessed on 1 May 2022).

- IBGE Instituto Brasileiro de Geografia e Estatística. Cobertura e Uso da Terra do Brasil na escala 1:250 000. Available online: https://www.ibge.gov.br/geociencias/informacoes-ambientais/cobertura-e-uso-da-terra/15833-uso-da-terra.html?=&t=downloads (accessed on 1 May 2022).

- Foody, G.M. Status of land cover classification accuracy assessment. Remote Sens. Environ. 2002, 80, 185–201. [Google Scholar] [CrossRef]

- Estabrooks, A.; Jo, T.; Japkowicz, N. A multiple resampling method for learning from imbalanced data sets. Comput. Intell. 2004, 20, 18–36. [Google Scholar] [CrossRef]

- Kuhn, M.; Johnson, K. Applied Predictive Modeling; Springer: New York, NY, USA, 2013; ISBN 978-1-4614-6848-6. [Google Scholar]

- Larose, D.T.; Larose, C.D. Discovering Knowledge in Data; John Wiley & Sons, Inc.: Hoboken, NJ, USA, 2014; ISBN 9781118874059. [Google Scholar]

- Breiman, L. Random Forests. Mach. Learn. 2001, 45, 5–32. [Google Scholar] [CrossRef]

- Friedman, J.H. Greedy function approximation: A gradient boosting machine. Ann. Stat. 2001, 29, 1189–1232. [Google Scholar] [CrossRef]

- Vapnik, V. The Nature of Statistical Learning Theory; Springer: New York, NY, USA, 1995; Volume 148, ISBN 9781475724424. [Google Scholar]

- Bishop, C.M. Neural Networks for Pattern Recognition, 2nd ed.; Springer: Berlin/Heidelberg, Germany, 2011; ISBN 0387310738. [Google Scholar]

- Meng, Q.; Cieszewski, C.J.; Madden, M.; Borders, B.E. K Nearest Neighbor Method for Forest Inventory Using Remote Sensing Data. GIScience Remote Sens. 2007, 44, 149–165. [Google Scholar] [CrossRef]

- Zaremba, W.; Sutskever, I.; Vinyals, O. Recurrent Neural Network Regularization. arXiv 2014, arXiv:1409.2329. [Google Scholar]

- Gao, L.; Guo, Z.; Zhang, H.; Xu, X.; Shen, H.T. Video Captioning with Attention-Based LSTM and Semantic Consistency. IEEE Trans. Multimed. 2017, 19, 2045–2055. [Google Scholar] [CrossRef]

- Deng, J.; Schuller, B.; Eyben, F.; Schuller, D.; Zhang, Z.; Francois, H.; Oh, E. Exploiting time-frequency patterns with LSTM-RNNs for low-bitrate audio restoration. Neural Comput. Appl. 2020, 32, 1095–1107. [Google Scholar] [CrossRef]

- Greff, K.; Srivastava, R.K.; Koutnik, J.; Steunebrink, B.R.; Schmidhuber, J. LSTM: A Search Space Odyssey. IEEE Trans. Neural Netw. Learn. Syst. 2017, 28, 2222–2232. [Google Scholar] [CrossRef] [PubMed]

- Graves, A.; Schmidhuber, J. Framewise phoneme classification with bidirectional LSTM and other neural network architectures. Neural Netw. 2005, 18, 602–610. [Google Scholar] [CrossRef] [PubMed]

- Ma, X.; Hovy, E. End-to-end Sequence Labeling via Bi-directional LSTM-CNNs-CRF. In Proceedings of the 54th Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers); Association for Computational Linguistics: Stroudsburg, PA, USA, 2016; Volume 2, pp. 1064–1074. [Google Scholar]

- Siam, M.; Valipour, S.; Jagersand, M.; Ray, N. Convolutional gated recurrent networks for video segmentation. In Proceedings of the 2017 IEEE International Conference on Image Processing (ICIP), Beijing, China, 17–20 September 2017; pp. 3090–3094. [Google Scholar] [CrossRef]

- McNemar, Q. Note on the sampling error of the difference between correlated proportions or percentages. Psychometrika 1947, 12, 153–157. [Google Scholar] [CrossRef] [PubMed]

- Dietterich, T.G. Approximate Statistical Tests for Comparing Supervised Classification Learning Algorithms. Neural Comput. 1998, 10, 1895–1923. [Google Scholar] [CrossRef] [PubMed]

- Clement, M.A.; Kilsby, C.G.; Moore, P. Multi-temporal synthetic aperture radar flood mapping using change detection. J. Flood Risk Manag. 2018, 11, 152–168. [Google Scholar] [CrossRef]

- Manjusree, P.; Prasanna Kumar, L.; Bhatt, C.M.; Rao, G.S.; Bhanumurthy, V. Optimization of threshold ranges for rapid flood inundation mapping by evaluating backscatter profiles of high incidence angle SAR images. Int. J. Disaster Risk Sci. 2012, 3, 113–122.

- Bangira, T.; Alfieri, S.M.; Menenti, M.; van Niekerk, A. Comparing Thresholding with Machine Learning Classifiers for Mapping Complex Water. Remote Sens. 2019, 11, 1351.

- Twele, A.; Cao, W.; Plank, S.; Martinis, S. Sentinel-1-based flood mapping: A fully automated processing chain. Int. J. Remote Sens. 2016, 37, 2990–3004.

- de Moura, N.V.A.; de Carvalho, O.L.F.; Gomes, R.A.T.; Guimarães, R.F.; de Carvalho Júnior, O.A. Deep-water oil-spill monitoring and recurrence analysis in the Brazilian territory using Sentinel-1 time series and deep learning. Int. J. Appl. Earth Obs. Geoinf. 2022, 107, 102695.

- Fingas, M.; Brown, C. Review of oil spill remote sensing. Mar. Pollut. Bull. 2014, 83, 9–23.

- Anusha, N.; Bharathi, B. Flood detection and flood mapping using multi-temporal synthetic aperture radar and optical data. Egypt. J. Remote Sens. Sp. Sci. 2020, 23, 207–219.

- Kasischke, E.S.; Smith, K.B.; Bourgeau-Chavez, L.L.; Romanowicz, E.A.; Brunzell, S.; Richardson, C.J. Effects of seasonal hydrologic patterns in south Florida wetlands on radar backscatter measured from ERS-2 SAR imagery. Remote Sens. Environ. 2003, 88, 423–441.

- Kasischke, E.S.; Bourgeau-Chavez, L.L.; Rober, A.R.; Wyatt, K.H.; Waddington, J.M.; Turetsky, M.R. Effects of soil moisture and water depth on ERS SAR backscatter measurements from an Alaskan wetland complex. Remote Sens. Environ. 2009, 113, 1868–1873.

- Lang, M.W.; Kasischke, E.S. Using C-Band Synthetic Aperture Radar Data to Monitor Forested Wetland Hydrology in Maryland’s Coastal Plain, USA. IEEE Trans. Geosci. Remote Sens. 2008, 46, 535–546.

- Liao, H.; Wdowinski, S.; Li, S. Regional-scale hydrological monitoring of wetlands with Sentinel-1 InSAR observations: Case study of the South Florida Everglades. Remote Sens. Environ. 2020, 251, 112051.

- Hong, S.-H.; Wdowinski, S.; Kim, S.-W. Evaluation of TerraSAR-X Observations for Wetland InSAR Application. IEEE Trans. Geosci. Remote Sens. 2010, 48, 864–873.

- Brisco, B. Early Applications of Remote Sensing for Mapping Wetlands. In Remote Sensing of Wetlands; Tiner, R.W., Lang, M.W., Klemas, V.V., Eds.; CRC Press: Boca Raton, FL, USA, 2015; pp. 86–97.

- Zhang, B.; Wdowinski, S.; Oliver-Cabrera, T.; Koirala, R.; Jo, M.J.; Osmanoglu, B. Mapping the extent and magnitude of severe flooding induced by Hurricane Irma with multi-temporal Sentinel-1 SAR and InSAR observations. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2018, XLII–3, 2237–2244.

- Lasko, K.; Vadrevu, K.P.; Tran, V.T.; Justice, C. Mapping Double and Single Crop Paddy Rice with Sentinel-1A at Varying Spatial Scales and Polarizations in Hanoi, Vietnam. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2018, 11, 498–512.

- de Bem, P.P.; de Carvalho Júnior, O.A.; de Carvalho, O.L.F.; Gomes, R.A.T.; Guimarães, R.F.; Pimentel, C.M.M.M. Irrigated rice crop identification in Southern Brazil using convolutional neural networks and Sentinel-1 time series. Remote Sens. Appl. Soc. Environ. 2021, 24, 100627.

- Lin, T.-Y.; Maire, M.; Belongie, S.; Hays, J.; Perona, P.; Ramanan, D.; Dollár, P.; Zitnick, C.L. Microsoft COCO: Common Objects in Context. In Computer Vision—ECCV 2014. Lecture Notes in Computer Science; Fleet, D., Pajdla, T., Schiele, B., Tuytelaars, T., Eds.; Springer: Cham, Switzerland, 2014; Volume 8693, pp. 740–755. ISBN 978-3-319-10601-4.

- Huang, Z.; Huang, L.; Gong, Y.; Huang, C.; Wang, X. Mask Scoring R-CNN. In Proceedings of the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–20 June 2019; IEEE: Long Beach, CA, USA, 2019; pp. 6402–6411.

| Type | Model | Parameter | Values |
|---|---|---|---|
| ML | RF | bootstrap | True, False |
| | | oob_score | True, False |
| | | max_depth | 3, 5, 7 |
| | | n_estimators | 50, 100, 200, 400 |
| | | min_samples_split | 2, 3, 5 |
| | | max_leaf_nodes | None, 2, 4 |
| | XGBoost | learning_rate | 0.01, 0.05, 0.1 |
| | | min_child_weight | 1, 3, 5, 7 |
| | | gamma | 1, 3, 5, 7 |
| | | colsample_bytree | 0.4, 0.5, 0.6 |
| | | max_depth | 3, 5, 7 |
| | | reg_alpha | 0, 0.2, 0.3 |
| | | subsample | 0.6, 0.8 |
| | SVM | C | 0.5, 1, 2, 3, 5 |
| | | degree | 2, 3, 4 |
| | | kernel | linear, rbf, poly |
| | MLP | hidden_layer_sizes | (100, 50), (200, 100), (300, 150) |
| | | activation | logistic, relu, tanh |
| | | learning_rate | 0.01, 0.001 |
| | | max_iter | 500, 1000 |
| | k-NN | n_neighbors | 5, 10, 15, 20 |
| | | weights | uniform, distance |
| DL | RNN models | Epochs | 5000 |
| | | Dropout | 0.5 |
| | | Optimizer | Adam |
| | | Learning rate | 0.001 |
| | | Loss function | Categorical cross-entropy |
| | | Batch size | 1024 |
| | | Hidden layers | 2 |
| | | Hidden layer sizes | (366, 122) |
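The grids above can be enumerated to show how many candidate configurations each traditional model's search covers. The following is a minimal, stdlib-only sketch that assumes an exhaustive (Cartesian) grid search. `GRIDS`, `grid_size`, `expand` and `is_valid_rf` are hypothetical names. The RF filter assumes scikit-learn semantics, in which out-of-bag scoring requires `bootstrap=True`. The sketch fits no models and is not the authors' tuning code.

```python
from itertools import product
from math import prod

# Search spaces transcribed from the table above (values only; nothing is trained here).
GRIDS = {
    "RF": {
        "bootstrap": [True, False],
        "oob_score": [True, False],
        "max_depth": [3, 5, 7],
        "n_estimators": [50, 100, 200, 400],
        "min_samples_split": [2, 3, 5],
        "max_leaf_nodes": [None, 2, 4],
    },
    "XGBoost": {
        "learning_rate": [0.01, 0.05, 0.1],
        "min_child_weight": [1, 3, 5, 7],
        "gamma": [1, 3, 5, 7],
        "colsample_bytree": [0.4, 0.5, 0.6],
        "max_depth": [3, 5, 7],
        "reg_alpha": [0, 0.2, 0.3],
        "subsample": [0.6, 0.8],
    },
    "SVM": {
        "C": [0.5, 1, 2, 3, 5],
        "degree": [2, 3, 4],
        "kernel": ["linear", "rbf", "poly"],
    },
    "MLP": {
        "hidden_layer_sizes": [(100, 50), (200, 100), (300, 150)],
        "activation": ["logistic", "relu", "tanh"],
        "learning_rate": [0.01, 0.001],
        "max_iter": [500, 1000],
    },
    "k-NN": {
        "n_neighbors": [5, 10, 15, 20],
        "weights": ["uniform", "distance"],
    },
}


def grid_size(grid):
    """Number of combinations in a full Cartesian grid search."""
    return prod(len(values) for values in grid.values())


def expand(grid):
    """Yield every parameter combination of a grid as a dict."""
    names = list(grid)
    for combo in product(*(grid[name] for name in names)):
        yield dict(zip(names, combo))


def is_valid_rf(params):
    # Assumption: scikit-learn semantics, where oob_score=True requires bootstrap=True.
    return params["bootstrap"] or not params["oob_score"]


sizes = {model: grid_size(grid) for model, grid in GRIDS.items()}
valid_rf = [p for p in expand(GRIDS["RF"]) if is_valid_rf(p)]
print(sizes)          # {'RF': 432, 'XGBoost': 2592, 'SVM': 45, 'MLP': 36, 'k-NN': 8}
print(len(valid_rf))  # 324
```

Two caveats apply if these grids were passed to scikit-learn directly. First, `SVC` ignores `degree` unless `kernel="poly"`, so the 45 SVM combinations reduce to 25 distinct models. Second, in `MLPClassifier` the numeric rates listed here would correspond to `learning_rate_init`, because `learning_rate` there selects the schedule ('constant', 'invscaling' or 'adaptive').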

| Type | Model | VV OA | VV P | VV R | VV F1 | VH OA | VH P | VH R | VH F1 | VV&VH OA | VV&VH P | VV&VH R | VV&VH F1 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| DL | Bi-GRU | 85.57 | 85.88 | 85.57 | 85.72 | 91.61 | 91.64 | 91.61 | 91.63 | 93.49 | 93.58 | 93.49 | 93.53 |
| | GRU | 85.1 | 85.21 | 85.1 | 85.15 | 90.51 | 90.73 | 90.51 | 90.62 | 93.18 | 93.34 | 93.18 | 93.3 |
| | Bi-LSTM | 85.57 | 85.79 | 85.57 | 85.68 | 90.98 | 91.19 | 90.98 | 91.08 | 93.26 | 93.33 | 93.26 | 93.29 |
| | LSTM | 85.02 | 85.23 | 85.02 | 85.12 | 90.59 | 90.63 | 90.59 | 90.61 | 93.1 | 93.19 | 93.1 | 93.15 |
| ML | RF | 81.33 | 82.26 | 81.33 | 81.79 | 87.53 | 87.79 | 87.53 | 87.66 | 90.67 | 90.94 | 90.67 | 90.8 |
| | XGBoost | 83.14 | 83.99 | 83.14 | 83.56 | 88.39 | 88.63 | 88.39 | 88.51 | 91.92 | 92.04 | 91.92 | 91.98 |
| | SVM | 82.59 | 83.21 | 82.59 | 82.9 | 90.19 | 90.31 | 90.2 | 90.25 | 92.16 | 92.21 | 92.16 | 92.18 |
| | k-NN | 78.75 | 80.43 | 78.75 | 79.58 | 85.33 | 86.22 | 85.33 | 85.77 | 88.94 | 88.25 | 88.94 | 89.37 |
| | MLP | 83.77 | 84.1 | 83.77 | 83.93 | 88.78 | 89.81 | 88.78 | 89.29 | 90.82 | 91.2 | 90.82 | 91.01 |

OA, overall accuracy; P, precision; R, recall; F1, F1-score. All values are in %.
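In the table above, the R column tracks the overall-accuracy column row by row. This is what happens when per-class recall is averaged with weights proportional to class support. The F1 values are also consistent with the harmonic mean of those weighted P and R values: for Bi-GRU on VV, 2 × 85.88 × 85.57 / (85.88 + 85.57) ≈ 85.72. The sketch below illustrates both relationships on a hypothetical two-class confusion matrix. The support weighting is an assumption inferred from the numbers, not a documented detail of the study, and `overall_metrics` is an illustrative helper.

```python
def overall_metrics(cm):
    """Overall accuracy, support-weighted precision/recall, and their harmonic-mean F1.

    cm[i][j] counts samples whose true class is i and whose predicted class is j.
    """
    k = len(cm)
    total = sum(sum(row) for row in cm)
    support = [sum(row) for row in cm]                                # true samples per class
    predicted = [sum(cm[i][j] for i in range(k)) for j in range(k)]  # predictions per class
    tp = [cm[c][c] for c in range(k)]                                 # correct predictions per class

    precision = [tp[c] / predicted[c] if predicted[c] else 0.0 for c in range(k)]
    recall = [tp[c] / support[c] if support[c] else 0.0 for c in range(k)]

    oa = sum(tp) / total
    weighted_p = sum(p * s for p, s in zip(precision, support)) / total
    # Weighting recall by support cancels the denominators, so this equals oa.
    weighted_r = sum(r * s for r, s in zip(recall, support)) / total
    f1 = 2 * weighted_p * weighted_r / (weighted_p + weighted_r)
    return oa, weighted_p, weighted_r, f1


# Hypothetical confusion matrix with 10 reference samples per class.
oa, p, r, f1 = overall_metrics([[8, 2], [1, 9]])
print(round(oa, 4), round(r, 4))                          # 0.85 0.85
print(round(2 * 85.88 * 85.57 / (85.88 + 85.57), 2))     # 85.72 (Bi-GRU, VV row)
```

This harmonic-mean F1 differs from scikit-learn's `f1_score(..., average="weighted")`, which averages the per-class F1 scores instead. The two usually differ slightly.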

| Class | VV P | VV R | VV F-score | VH P | VH R | VH F-score | VV&VH P | VV&VH R | VV&VH F-score |
|---|---|---|---|---|---|---|---|---|---|
| 1–Water bodies | 100* | 94.67 | 97.26 | 100* | 96 | 97.96 | 100* | 97.33 | 98.65 |
| 2–Land erosion | 91.14 | 96 | 93.51 | 92.41 | 97.33 | 94.81 | 92.50 | 98.67 | 95.48 |
| 3–Land accretion | 95.95 | 94.67 | 95.3 | 98.65 | 97.33 | 97.99 | 98.63 | 96.00 | 97.30 |
| 4–Sparse seasonally flooded grassland | 78.16 | 90.67 | 83.95 | 93.51 | 96.00* | 94.74 | 96.00 | 96.00* | 96.00 |
| 5–Dense seasonally flooded grassland 1 | 87.5 | 84.00 | 85.71 | 94.81 | 97.33* | 96.05 | 97.33 | 97.33* | 97.33 |
| 6–Dense seasonally flooded grassland 2 | 95.89 | 93.33 | 94.59 | 97.30* | 96.00* | 96.64* | 97.30* | 96.00* | 96.64* |
| 7–Dense humid grassland and floodplain areas | 86.44 | 68.00 | 76.12 | 93.33 | 93.33 | 93.33 | 93.59 | 97.33 | 95.42 |
| 8–Pioneer herbaceous formation | 85.9 | 89.33 | 87.58 | 97.22 | 93.33 | 95.24 | 96.00 | 96.00 | 96.00 |
| 9–Pioneer shrub formation | 84.42 | 86.67 | 85.53 | 97.26 | 94.67 | 95.95 | 94.44 | 90.67 | 92.52 |
| 10–Pioneer arboreal formation | 71.25 | 76.00 | 73.55 | 85.9 | 89.33 | 87.58 | 91.55 | 86.67 | 89.04 |
| 11–Shrub grassland | 75.68 | 74.67 | 75.17 | 83.75 | 89.33 | 86.45 | 87.32 | 82.67 | 84.93 |
| 12–Savanna/shrub savanna | 88.31 | 90.67 | 89.47 | 93.67 | 98.67 | 96.1 | 92.59 | 100 | 96.15 |
| 13–Mangroves | 92.00 | 92.00 | 92.00 | 76.71 | 74.67 | 75.68 | 91.03 | 94.67 | 92.81 |
| 14–Forest 1 | 78.48 | 82.67 | 80.52 | 88.31 | 90.67 | 89.47 | 95.65 | 88.00 | 91.67 |
| 15–Forest 2 | 72.41 | 84.00 | 77.78 | 83.56 | 81.33 | 82.43 | 84.81 | 89.33 | 87.01 |
| 16–Agriculture plantations (soybean) | 94.44 | 90.67 | 92.52 | 95.83 | 92.00 | 93.88 | 97.22 | 93.33 | 95.24 |
| 17–Planted forest | 81.97 | 66.67 | 73.53 | 85.71 | 80.00 | 82.76 | 84.81 | 89.33 | 87.01 |

P, precision; R, recall. All values are in %.
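The per-class F-scores above follow the usual harmonic mean of precision and recall, F = 2PR/(P + R). The stdlib-only check below uses a few rows transcribed from the table, with asterisks dropped. It reproduces the reported values to two decimals. `f_score` is a hypothetical helper, not part of any library.

```python
def f_score(p, r):
    """Harmonic mean of precision and recall (both in %); 0 when both are 0."""
    return 2 * p * r / (p + r) if p + r else 0.0


# (class, polarization, P, R, reported F-score) transcribed from the table above.
rows = [
    ("1-Water bodies", "VV", 100.0, 94.67, 97.26),
    ("2-Land erosion", "VV", 91.14, 96.0, 93.51),
    ("7-Dense humid grassland and floodplain areas", "VV", 86.44, 68.0, 76.12),
    ("13-Mangroves", "VH", 76.71, 74.67, 75.68),
    ("17-Planted forest", "VV", 81.97, 66.67, 73.53),
]

for name, mode, p, r, reported in rows:
    computed = f_score(p, r)
    # Reported values are rounded to two decimals, so allow half a unit of rounding error.
    assert abs(computed - reported) <= 0.005 + 1e-9, (name, mode, computed)
    print(f"{name} ({mode}): {computed:.2f}")
```

Because the F-score is a harmonic mean, it falls below the arithmetic mean whenever P and R differ. This is why classes with a weak R, such as planted forest under VV (R = 66.67), score low even with moderate P.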

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Magalhães, I.A.L.; de Carvalho Júnior, O.A.; de Carvalho, O.L.F.; de Albuquerque, A.O.; Hermuche, P.M.; Merino, É.R.; Gomes, R.A.T.; Guimarães, R.F. Comparing Machine and Deep Learning Methods for the Phenology-Based Classification of Land Cover Types in the Amazon Biome Using Sentinel-1 Time Series. Remote Sens. 2022, 14, 4858. https://doi.org/10.3390/rs14194858


Chicago/Turabian Style: Magalhães, Ivo Augusto Lopes, Osmar Abílio de Carvalho Júnior, Osmar Luiz Ferreira de Carvalho, Anesmar Olino de Albuquerque, Potira Meirelles Hermuche, Éder Renato Merino, Roberto Arnaldo Trancoso Gomes, and Renato Fontes Guimarães. 2022. "Comparing Machine and Deep Learning Methods for the Phenology-Based Classification of Land Cover Types in the Amazon Biome Using Sentinel-1 Time Series" Remote Sensing 14, no. 19: 4858. https://doi.org/10.3390/rs14194858