1. Introduction

Wheat yield is an important part of national food security [1], and the number of spikes per unit area is an important component of wheat yield. A rapid and accurate count of the number of spikes per unit area is therefore crucial for determining wheat yield.

With the continuous improvement in the mechanization and digitalization of agricultural production, the methods of predicting crop production have gradually diversified, and many methods are now available for small-area production forecasting. These methods include manual field prediction, capacitance measurement, climate analysis and prediction, remote sensing prediction, and prediction of the year's harvest [2]. However, these methods are highly subjective, incur high costs, and cannot provide accurate results for small areas. In contrast, image processing techniques provide satisfactory results for small-area production forecasting.

Compared with fruit and vegetable crop counting, wheat crop counting and yield estimation based on image processing techniques are still at a relatively early stage. Few studies have focused on counting wheat spikes, which are one of the most important components of wheat yield. An automated method for predicting the yield of cereals, especially wheat, is highly desirable because manual evaluation is excessively time consuming. To address this issue, we propose using image processing methods to count the number of wheat spikes per square meter, thereby simplifying the work of agricultural technicians.

Image processing based on a single data source cannot provide high spectral resolution and high spatial resolution simultaneously. Image fusion addresses this problem by preserving the spectral characteristics of low-resolution multispectral images while giving them high spatial resolution. Kong et al. proposed an infrared and visible image fusion method based on the non-subsampled shearlet transform and a spiking cortical model [3]. Li et al. [4] introduced a novel image fusion method based on a sparse feature matrix. Ma et al. [5] discussed an infrared and visible image fusion method based on a visual saliency map. Zhang et al. [6] proposed a fusion algorithm for hyperspectral remote sensing images that combines harmonic analysis with the Gram-Schmidt transform and performs well when fusing images of different resolutions. Because of this property, the Gram-Schmidt transform is used in our work.

The key to counting wheat spikes by image processing is image segmentation [7]. In recent years, the segmentation of RGB images, or more generally multispectral images, has attracted significant research attention. For example, Ghamisi et al. [8] proposed a heuristic-based segmentation technique for hyperspectral and color images, and Su and Hu discussed an image-quantization technique that uses a self-adaptive differential evolution algorithm and verified it on standard test images [9]. Furthermore, Sarkar and Das [10] proposed a segmentation procedure based on Tsallis entropy and differential evolution. In image segmentation based on multi-source data, three kinds of image features can be extracted, according to the characteristics of the target objects, as a basis for separating targets from the soil background: color, texture, and shape [11]. However, at the filling stage, wheat ears and leaves have similar texture features and cannot be distinguished accurately by texture [12]. Meanwhile, adhesion between wheat ears is so severe that accurate shape information cannot be obtained [13]. For these reasons, color features are used as the basis for image segmentation.

At present, there are many image segmentation methods based on color features. Chen et al. [14] introduced a medical image segmentation method that combines minimum cut with oriented active appearance models. Narkhede et al. [15] used an edge detection method for color image segmentation. Gong et al. [16] proposed an efficient fuzzy clustering method for image segmentation. Pahariya et al. [17] successfully combined a snake model with a noise-adaptive fuzzy switching median filter for image segmentation. Subudhi et al. [18] introduced a region-growing method for aerial color image segmentation. Tang et al. [19] proposed an improved Otsu method for image segmentation based on a gray-level and gradient mapping function. Zhao et al. [20] introduced a maximum entropy method for 2D image segmentation. Compared with other methods, the maximum entropy method is robust to the size of the region of interest and adapts better to complex backgrounds, so it is used for coarse segmentation in this paper [21].

In addition, observation methods are crucial for data acquisition, and they differ greatly in accuracy, stability, and duration. Establishing a rapid, accurate, high-throughput, non-invasive, multi-phenotype analysis capability for field crops is one of the great challenges of precision agriculture in the twenty-first century [22]. Modern agriculture demands a high-throughput platform for analyzing crop phenotypes [23].

Table 1 compares several current approaches for obtaining crop phenotypes.

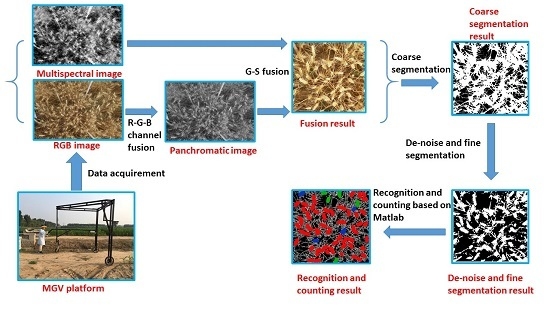

In view of the drawbacks of current segmentation algorithms and observation methods, we propose a new method for obtaining wheat spike number statistics. The method uses a field-based phenotype platform (FPP). First, high-definition digital images and the corresponding multispectral images of the plots are acquired with a home-built ground-based mobile phenotyping vehicle, which can overcome the limitations of bad weather and high cost. After the two images are orthorectified and registered, an algorithm is introduced to identify wheat ears at the filling stage. This paper also presents a new approach to counting wheat ears. The analysis shown in this paper can, however, be extended to other component phenotypes.

The paper makes the following novel research contributions: (a) it introduces a method for optimizing threshold selection in the maximum entropy segmentation method; (b) it presents an improved noise-reduction approach based on morphological filters, which provides more accurate coarse-segmentation results; and (c) it introduces a new approach to fine segmentation of adhering wheat ears based on morphological reconstruction.

3. Methodology

Figure 3 shows a flow chart of the proposed method. The basic steps are Gram–Schmidt (GS) fusion of the panchromatic and multispectral images, determination of the maximum entropy segmentation threshold by the firefly algorithm based on chaos theory (FACT), de-noising of the threshold segmentation results with morphological filters, and segmentation of the adhering wheat regions based on morphological reconstruction (MR). First, the GS algorithm is used to improve the information quality of the image; FACT then determines the segmentation threshold, and the maximum entropy method is applied for threshold segmentation. Next, the segmentation results are de-noised with morphological filters and, finally, the adhering parts of the targets are separated by MR. These components are described in detail in the following subsections.

3.1. Fusion of Panchromatic Images and Multispectral Images Based on Gram-Schmidt Spectral Sharpening

The spatial resolution of multispectral images is improved by the fusion of high spatial resolution panchromatic images and corresponding low resolution multispectral images. Here, the Gram-Schmidt spectral sharpening method is used to achieve this goal.

Using the gray value $B_i$ of band $i$ of the multispectral image, the gray value of the panchromatic band $P$ is simulated as a weighted sum with band weights $W_i$, that is:

$$P = \sum_{i=1}^{n} W_i\, B_i$$

where $n$ is the number of multispectral bands.

The fusion then proceeds as follows:

- (a) The simulated panchromatic image is used as the first component of the GS transform of the multispectral image.
- (b) The statistics (mean and standard deviation) of the high-resolution panchromatic image are adjusted to match those of the first GS component, GS1, producing a modified panchromatic image.
- (c) A new data set is generated by replacing the first GS component with the modified high-resolution panchromatic band.
- (d) The multispectral image with enhanced spatial resolution is obtained by applying the inverse GS transform to the new data set.
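The GS fusion steps can be sketched in NumPy as below. This is an illustrative sketch, not the authors' implementation: it assumes the multispectral bands have already been resampled to the panchromatic grid, and it uses equal band weights $W_i$ because the text does not state the weights. The function name `gs_pansharpen` is hypothetical.

```python
import numpy as np


def gs_pansharpen(ms, pan, weights=None):
    """Gram-Schmidt pan-sharpening sketch.

    ms      : (n_bands, H, W) multispectral image, assumed already resampled
              to the panchromatic grid.
    pan     : (H, W) high-resolution panchromatic image.
    weights : band weights W_i used to simulate P (equal weights by default,
              an illustrative assumption).
    """
    ms = np.asarray(ms, dtype=float)
    pan = np.asarray(pan, dtype=float)
    n, h, w = ms.shape
    if weights is None:
        weights = np.full(n, 1.0 / n)

    # Simulate the panchromatic band: P = sum_i W_i * B_i
    p_sim = np.tensordot(weights, ms, axes=1)

    # Forward GS transform with the simulated P as the first component
    vectors = [p_sim.ravel()] + [band.ravel() for band in ms]
    means = [v.mean() for v in vectors]
    comps, coeffs = [], {}
    for k, v in enumerate(vectors):
        centered = v - means[k]
        comp = centered.copy()
        for j, g in enumerate(comps):
            coeffs[k, j] = np.dot(centered, g) / np.dot(g, g)
            comp -= coeffs[k, j] * g
        comps.append(comp)

    # Match the real pan's mean and standard deviation to GS1, then substitute it
    pan_v = pan.ravel()
    comps[0] = (pan_v - pan_v.mean()) * (comps[0].std() / pan_v.std())

    # Inverse GS transform rebuilds each multispectral band
    fused = np.empty_like(ms)
    for k in range(1, n + 1):
        v = comps[k] + means[k]
        for j in range(k):
            v = v + coeffs[k, j] * comps[j]
        fused[k - 1] = v.reshape(h, w)
    return fused
```

A useful sanity check: if the real panchromatic image equals the simulated one, the substitution changes nothing and the original bands come back unchanged.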

3.2. Maximum Entropy Threshold Segmentation with Threshold Selection by a Firefly Algorithm Based on Chaos Theory

The traditional maximum entropy method is not sensitive to target size and can also be used to segment small targets. In addition, it provides good segmentation results for images with different target sizes and signal-to-noise ratios. The maximum-entropy method segments an image by choosing the threshold that maximizes the entropy of the segmented image, so the choice of the optimal threshold plays a decisive role in the segmentation result. The global ergodic method, a conventional threshold-determination method, traverses all gray levels to find the optimal threshold. However, this method is time consuming and computationally complex, especially for multi-threshold segmentation of complex images, which makes it unsuitable for real-time processing.
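To make the cost of the global ergodic search concrete, here is a small sketch. It assumes Kapur's two-class formulation of the maximum-entropy criterion (the text does not name the exact variant), and the function names are hypothetical.

```python
import numpy as np


def kapur_criterion(hist, t):
    """Kapur's maximum-entropy criterion for threshold t.

    Gray levels < t form the background class, levels >= t the foreground.
    Returns the sum of the two class entropies (-inf if a class is empty).
    """
    p = hist / hist.sum()
    pb, pf = p[:t].sum(), p[t:].sum()
    if pb <= 0 or pf <= 0:
        return -np.inf
    qb = p[:t][p[:t] > 0] / pb
    qf = p[t:][p[t:] > 0] / pf
    return float(-(qb * np.log(qb)).sum() - (qf * np.log(qf)).sum())


def exhaustive_max_entropy_threshold(image, levels=256):
    """Global ergodic search: evaluate every candidate threshold."""
    hist = np.bincount(image.ravel(), minlength=levels).astype(float)
    scores = [kapur_criterion(hist, t) for t in range(1, levels)]
    return 1 + int(np.argmax(scores))
```

Each candidate costs O(L) work, so one full scan is O(L²) for L gray levels. With several thresholds, the number of candidate combinations grows combinatorially, which is the cost a swarm-based search such as FACT is meant to avoid.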

To solve these problems, we improve the standard firefly algorithm (FA) and propose a new method called FACT. Here, the position of each firefly corresponds to a gray level of the image, that is, a candidate segmentation threshold. In FACT, the movement of the fireflies is crucial because it determines the optimization ability of the algorithm. An ordinary firefly moves as follows:

When fireflies $X_i$ and $X_j$ attract each other, $X_i$ moves toward $X_j$ if $I(X_j) > I(X_i)$, and its new location is determined by

$$X_i(t+1) = X_i(t) + \beta\,[X_j(t) - X_i(t)] + \alpha\,(r_1 - 0.5)\,X_m \tag{1}$$

where $t$ denotes the generation (iteration) number, $\beta$ indicates the degree of attraction, $\alpha \in [0, 1]$ is the step-length factor, and $r_1$ is a random number uniformly distributed over [0, 1]. When $r_1 < 0.5$ ($r_1 \ge 0.5$), $X_m$ is set to $UB - X_i(t)$ [$X_i(t) - LB$], where $UB$ and $LB$ denote the upper and lower bounds of the search domain, respectively.

Equation (1) consists of three terms. The first term, $X_i(t)$, is the current position of the firefly. The second term, $\beta[X_j(t) - X_i(t)]$, is the change in $X_i$ caused by the attraction between $X_i$ and $X_j$; it drives the global search. The third term, $\alpha(r_1 - 0.5)X_m$, is a local random fluctuation that provides the local search.

Because the brightest firefly $X_B$ cannot be attracted by other fireflies, its motion cannot be described by Equation (1), so we propose the following:

$$X_B(t+1) = X_B(t) + \alpha\,(r_2 - 0.5)\,X_m \tag{2}$$

where $t$ denotes the generation (iteration) number and $r_2$ is a random number uniformly distributed over [0, 1]. If $r_2 < 0.5$ ($r_2 \ge 0.5$), $X_m$ is set to $UB - X_B(t)$ [$X_B(t) - LB$].
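The two movement rules can be sketched for a scalar firefly position (a single gray-level threshold). This is a minimal illustration under stated assumptions: β is passed in as a plain number (in Yang's standard FA it is usually computed as β0·exp(−γr²), which the text does not specify here), and clipping the result to [LB, UB] is an added assumption, since the rules as written can step outside the domain. The function names are hypothetical.

```python
import numpy as np


def move_toward(x_i, x_j, beta, alpha, lb, ub, rng):
    """Move firefly x_i toward a brighter firefly x_j (Equation (1))."""
    r1 = rng.random()
    x_m = ub - x_i if r1 < 0.5 else x_i - lb       # scale of the random term
    x_new = x_i + beta * (x_j - x_i) + alpha * (r1 - 0.5) * x_m
    return float(np.clip(x_new, lb, ub))           # clipping is an added assumption


def move_brightest(x_b, alpha, lb, ub, rng):
    """Random local move of the brightest firefly, which has no brighter neighbour."""
    r2 = rng.random()
    x_m = ub - x_b if r2 < 0.5 else x_b - lb
    return float(np.clip(x_b + alpha * (r2 - 0.5) * x_m, lb, ub))
```

With α = 0 and β = 1, `move_toward` jumps exactly onto the brighter firefly, which isolates the attraction term from the random term.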

As the discussion of the firefly motion in Equation (1) shows, the local search term is only a random search, so the local exploitation capability of the algorithm is weak. To overcome this shortcoming, we use a local search operator based on chaotic sequences.

The basic idea of the new operator is to use the randomness, ergodicity, and regularity of chaotic sequences to perform a local search. This operator consists of five specific steps:

- (a) In the firefly population $\{X_i;\ i = 1, \dots, NP\}$, a firefly vector $X_B$ is selected at random from the $p$ best individuals, with $p$ set to 5% of the population.
- (b) The chaotic variable $ch_i$ is generated by the logistic map

  $$ch_{i+1} = \mu\, ch_i\,(1 - ch_i), \qquad i = 0, 1, \dots, K - 1 \tag{3}$$

  where $\mu = 4$, $K$ is the length of the chaotic sequence, and the initial value $ch_0$ is a random number uniformly distributed over (0, 1) with $ch_0 \neq 0.25, 0.5, 0.75$.
- (c) The chaotic variable $ch_i$ is mapped to the chaotic vector $CH_i$ on the domain $(LB, UB)$ by

  $$CH_i = LB + ch_i\,(UB - LB) \tag{4}$$

- (d) The chaotic vector $CH_i$ and $X_B$ are linearly combined to generate the candidate firefly vector $X_c$:

  $$X_c = (1 - \lambda)\,X_B + \lambda\, CH_i \tag{5}$$

  where $\lambda$ is the contraction factor, defined by

  $$\lambda = \frac{maxIter - t + 1}{maxIter} \tag{6}$$

  in which $maxIter$ is the maximum number of iterations of the algorithm and $t$ is the current iteration number.
- (e) The better of the candidate firefly vector $X_c$ and the current optimal firefly vector $X_b$ is retained. If $X_c$ replaces $X_b$, or the length of the chaotic sequence reaches $K$, the local search ends; otherwise, return to step (b) and begin a new iteration.
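Steps (b)–(e) can be sketched for a scalar position that maximizes a fitness function. The specific forms used here are assumptions reconstructed from the description rather than the authors' code: a logistic map with μ = 4, the mapping CH = LB + ch·(UB − LB), the candidate X_c = (1 − λ)·X_B + λ·CH with λ = (maxIter − t + 1)/maxIter, and greedy replacement. The function name is hypothetical.

```python
import numpy as np


def chaotic_local_search(x_b, fitness, lb, ub, t, max_iter, k_max, rng):
    """Chaotic local search around x_b (steps (b)-(e)); returns (x, fitness(x)).

    fitness : callable to maximize, e.g. a maximum-entropy criterion.
    k_max   : length K of the chaotic sequence.
    """
    lam = (max_iter - t + 1) / max_iter          # contraction factor, step (d)
    ch = rng.uniform(0.0, 1.0)
    while ch in (0.0, 0.25, 0.5, 0.75):          # avoid degenerate logistic-map seeds
        ch = rng.uniform(0.0, 1.0)
    f_b = fitness(x_b)
    for _ in range(k_max):
        ch = 4.0 * ch * (1.0 - ch)               # logistic map, step (b), mu = 4 assumed
        ch_vec = lb + ch * (ub - lb)             # map into (LB, UB), step (c)
        x_c = (1.0 - lam) * x_b + lam * ch_vec   # candidate vector, step (d)
        f_c = fitness(x_c)
        if f_c > f_b:                            # greedy replacement ends the search, step (e)
            return x_c, f_c
    return x_b, f_b
```

Early in the run λ is close to 1, so candidates are spread over the whole domain; as t approaches maxIter, λ shrinks and the search contracts around X_B.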

The pseudocode of the chaotic FA based on the basic FA is as follows:

| Pseudocode for chaotic firefly algorithm |
|---|
| (i) Randomly initialize the firefly population {Xi(0), i = 1, …, NP} |
| (ii) Calculate the brightness I of each firefly from the target function f |
| (iii) for t = 1:maxIter |
| (iv) for i = 1:NP |
| (v) for j = 1:NP |
| (vi) if I(Xj(t)) > I(Xi(t)) |
| (vii) Move firefly Xi(t) toward Xj(t) according to Equation (1) |
| (viii) end if |
| (ix) end for j |
| (x) end for i |
| (xi) Local search using the chaotic local search operator |
| (xii) Move the brightest firefly XB(t) according to its random-move equation |
| (xiii) end for t |
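Putting the pieces together, the pseudocode can be turned into a runnable single-threshold sketch. Several choices here are assumptions not stated in the text: Kapur's two-class entropy as the maximum-entropy objective; β = β0·exp(−γr²) as in Yang's original firefly algorithm; clipping positions to [1, 255]; re-evaluating brightness after every move; keeping a best-so-far record so the random move of the brightest firefly cannot lose the best threshold; and all parameter values. The names `kapur` and `fact_threshold` are hypothetical.

```python
import numpy as np


def kapur(hist, t):
    """Maximum-entropy (Kapur) criterion: sum of class entropies for threshold t."""
    t = int(round(t))
    p = hist / hist.sum()
    pb, pf = p[:t].sum(), p[t:].sum()
    if pb <= 0 or pf <= 0:
        return -np.inf
    qb = p[:t][p[:t] > 0] / pb
    qf = p[t:][p[t:] > 0] / pf
    return float(-(qb * np.log(qb)).sum() - (qf * np.log(qf)).sum())


def fact_threshold(image, n_pop=20, max_iter=30, alpha=0.2, beta0=1.0,
                   gamma=1e-4, k_max=10, seed=0):
    """Single-threshold search with a chaotic firefly algorithm (sketch)."""
    hist = np.bincount(image.ravel(), minlength=256).astype(float)
    lb, ub = 1.0, 255.0
    rng = np.random.default_rng(seed)

    def f(x):
        return kapur(hist, x)

    def x_m(x, r):                                   # scale term of the random moves
        return ub - x if r < 0.5 else x - lb

    xs = rng.uniform(lb, ub, n_pop)                  # (i) random population
    light = np.array([f(x) for x in xs])             # (ii) brightness I
    best_x, best_f = xs[np.argmax(light)], light.max()

    for t in range(1, max_iter + 1):                 # (iii)
        for i in range(n_pop):                       # (iv)
            for j in range(n_pop):                   # (v)
                if light[j] > light[i]:              # (vi)
                    r1 = rng.random()
                    beta = beta0 * np.exp(-gamma * (xs[i] - xs[j]) ** 2)
                    step = beta * (xs[j] - xs[i]) + alpha * (r1 - 0.5) * x_m(xs[i], r1)
                    xs[i] = np.clip(xs[i] + step, lb, ub)   # (vii) Equation (1)
                    light[i] = f(xs[i])              # brightness update (assumed)
        # (xi) chaotic local search around a random member of the best 5%
        top = np.argsort(light)[::-1][:max(1, int(0.05 * n_pop))]
        b = rng.choice(top)
        lam = (max_iter - t + 1) / max_iter          # contraction factor
        ch = rng.uniform(0.0, 1.0)
        while ch in (0.0, 0.25, 0.5, 0.75):
            ch = rng.uniform(0.0, 1.0)
        for _ in range(k_max):
            ch = 4.0 * ch * (1.0 - ch)               # logistic map, mu = 4
            x_c = (1 - lam) * xs[b] + lam * (lb + ch * (ub - lb))
            f_c = f(x_c)
            if f_c > light[b]:
                xs[b], light[b] = x_c, f_c
                break
        # record the best solution before the brightest firefly moves
        k = int(np.argmax(light))
        if light[k] > best_f:
            best_x, best_f = xs[k], light[k]
        # (xii) random move of the brightest firefly
        r2 = rng.random()
        xs[k] = np.clip(xs[k] + alpha * (r2 - 0.5) * x_m(xs[k], r2), lb, ub)
        light[k] = f(xs[k])
        if light[k] > best_f:
            best_x, best_f = xs[k], light[k]
    return int(round(best_x))
```

On a synthetic image with two well-separated gray-level modes, the returned threshold should fall between the modes.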

3.3. De-Noise Operation Based on Morphological Filters

After coarse segmentation, many unclassified noise points remain in the results, and they reduce the accuracy of computer identification. To remove these points without affecting the segmentation results, we use a de-noising method based on morphological filters. Compared with other de-noising methods, such as the spherical coordinate system method [25], noise standard deviation (SD) estimation [26], and the multi-wavelet transform [27], morphological filters perform better at preserving details [28]. The principle of the method is as follows.

Let $f$ be the result of threshold segmentation. The CB (contour-structuring-element-based) morphological dilation $D_B(f)$ and erosion $E_B(f)$ of $f$ with structural element $B$ are given as

$$D_B(f) = f \oplus B \tag{7}$$

$$E_B(f) = f \ominus B \tag{8}$$

where $B$ is the structural element, $\oplus$ is the dilation operator, and $\ominus$ is the erosion operator.

The CB morphological opening and closing operators of $f$ based on structural element $B$, $CBO_B(f)$ and $CBC_B(f)$, are expressed by Equations (9) and (10), respectively.

Compared with the classical morphological opening and closing operators, the CB opening and closing defined by Equations (9) and (10) have stronger filtering power, but they also filter out more details. Therefore, in this work, we propose the filters $FO_{ij}(f)$, $FC_{ij}(f)$, $NO_{ij}(f)$, and $NC_{ij}(f)$, where $B_i$ and $B_j$ denote different or identical structural elements.

The algorithm proceeds as follows:

- (a) Threshold segmentation of the original image yields two parts: the foreground target $O$ and the background $B$. The target $O$ is filtered with the filters $FO_{ij}(f)$ and $FC_{ij}(f)$, and the results are labeled $O_1$ and $O_2$, respectively. The background $B$ is filtered with the filters $NO_{ij}(f)$ and $NC_{ij}(f)$, and the results are labeled $B_1$ and $B_2$, respectively. $O_1$ and $O_2$ are then combined by a weighted sum into the merged foreground, and $B_1$ and $B_2$ into the merged background. Of the four weights, one pair controls the brightness of the target and the background (detail clarity), and the other pair controls how strongly the two are dimmed (smoothness).
- (b) Repeat step (a) for each new foreground target $O$ and background $B$, $N$ times in total.
- (c) Recombine the foreground and background obtained after the $N$ iterations into a new image.
- (d) Repeat steps (a)–(c) on this new image $M$ times to obtain the filtered image.

Because the time complexity of the algorithm grows with $M$, $M$ must be chosen carefully so that the filtered details remain prominent without excessive computation. The larger $N$ is, the more blurred the filtered image becomes; however, if $N$ is too small, the filter's ability to remove noise weakens. $N$ can therefore be increased for images with severe noise. Typically, $M$ takes a value from {1, 2, 3, 4} and $N$ from {1, 2, 3}. To give an intuitive sense of the de-noising effect, an area of 50 cm × 50 cm is used for the filtering experiments (Figure 4).

Figure 4 shows that, after the original image is filtered by the proposed morphological filter, the number of noise points in the selected region decreases and the object contours become more prominent.
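The CB operator definitions are not reproduced in this text, so the sketch below substitutes classical binary opening and closing from `scipy.ndimage` to illustrate how morphological filtering removes isolated noise points from a threshold mask. It is not the proposed CB filter; the square structuring element, its size, and the repeat count are assumptions, and `morph_denoise` is a hypothetical name.

```python
import numpy as np
from scipy import ndimage


def morph_denoise(mask, size=3, n_iter=1):
    """Classical open-then-close filtering of a binary segmentation mask.

    Opening removes foreground specks smaller than the structuring element;
    closing fills small gaps inside foreground objects.
    """
    se = np.ones((size, size), dtype=bool)      # square structuring element (assumed)
    out = np.asarray(mask).astype(bool)
    for _ in range(n_iter):
        out = ndimage.binary_opening(out, structure=se)
        out = ndimage.binary_closing(out, structure=se)
    return out
```

Larger structuring elements or more repetitions remove more noise but also erode more detail, which mirrors the trade-off described for N and M above.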

3.4. Fine-Segmentation of the Wheat Adhesion Region Based on Morphological Reconstruction

After threshold segmentation and de-noising, the objects in the foreground region still adhere to one another and must be segmented further. Each object has two kinds of boundary: the boundary between the foreground object and the background, and the boundary between adhering objects. Both lie within the foreground region produced by threshold segmentation, and the objects can be separated by determining these boundaries. Within this foreground region, the gray value of a boundary region adjacent to the background is greater than that of its surroundings [29]. Therefore, the boundaries of all objects to be segmented in the preprocessed image have locally higher gray values. A dome can be defined as a region whose gray values are higher than those of its local neighborhood, so the boundary regions above can be treated as domes [30] and extracted by grayscale morphological reconstruction. To avoid missing boundary points, domes are extracted separately in different directions; in this work, six equally spaced directions between 0° and 180° are used.
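Dome extraction by grayscale reconstruction can be illustrated with the standard h-dome transform, f − R_f(f − h), where R_f denotes reconstruction by dilation under f. The sketch below implements reconstruction by iterated geodesic dilation with `scipy.ndimage.grey_dilation`. It uses a single 8-connected neighbourhood; the paper's six-direction extraction is not reproduced, and the height h and the function names are hypothetical.

```python
import numpy as np
from scipy import ndimage


def reconstruct_by_dilation(seed, mask, footprint):
    """Grayscale morphological reconstruction by dilation of `seed` under `mask`."""
    cur = np.minimum(seed, mask)
    while True:
        nxt = np.minimum(ndimage.grey_dilation(cur, footprint=footprint), mask)
        if np.array_equal(nxt, cur):
            return nxt
        cur = nxt


def h_domes(image, h, footprint=None):
    """h-dome transform f - R_f(f - h).

    Bright structures rising more than h above their surroundings keep a dome
    of height h; lower peaks keep their full height; flat background becomes 0.
    """
    f = np.asarray(image, dtype=float)
    if footprint is None:
        footprint = np.ones((3, 3), dtype=bool)   # 8-connected neighbourhood (assumed)
    return f - reconstruct_by_dilation(f - h, f, footprint)
```

In this example a bright ridge 10 levels high yields a dome of height h = 5, while a small bump 3 levels high keeps its full height of 3.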

3.5. Wheat Ear Detection and Statistics

After the above operations, we obtain several separate, disconnected bright regions, each of which represents an individual wheat ear. We use the regionprops function in Matlab R2017b to count the independent regions in the image, and thus the number of wheat ears. In addition, ground truth was produced for each image by manually labeling the wheat ears, for comparison with the computer recognition results.
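The paper counts regions with Matlab's regionprops. As a rough Python analogue (not the authors' code), connected components can be labeled with `scipy.ndimage.label` and counted. The 8-connectivity and the optional minimum-area filter are assumptions, and `count_ears` is a hypothetical name.

```python
import numpy as np
from scipy import ndimage


def count_ears(mask, min_area=1):
    """Count connected foreground regions, ignoring those smaller than min_area pixels."""
    structure = np.ones((3, 3), dtype=bool)          # 8-connectivity (assumed)
    labels, n = ndimage.label(mask, structure=structure)
    if n == 0:
        return 0
    areas = np.bincount(labels.ravel())[1:]          # pixel count per region (skip background)
    return int((areas >= min_area).sum())
```

A minimum-area threshold is one simple way to ignore residual noise specks; its value would have to be tuned to the image resolution.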

5. Discussion

5.1. Analysis of Effect of Coarse-Segmentation Method on Recognition Accuracy

Image segmentation is a fundamental technique in image processing [42] and a prerequisite for object recognition and image interpretation. An image-segmentation problem typically involves extracting homogeneous regions and objects of interest from an image [43].

Based on the results presented in Table 4 and Figure 7, we conclude that the segmentation results obtained by the proposed algorithm are closer to the actual measured values across different regions and vegetation coverage conditions, which indicates that the method is accurate and robust across different areas.

After comparing numerical accuracy, we analyzed the segmentation results quantitatively using the inter-regional contrast (Table 5), the intra-regional uniformity measure (Table 6), and the segmentation accuracy (Table 7) [44,45]. The inter-regional contrast index measures segmentation quality based on the contrast between regions; in this study, a smaller value indicates a better segmentation. The uniformity measure evaluates the internal uniformity of the segmented regions; a larger value indicates a better segmentation. Finally, the segmentation results obtained by computer recognition were compared with manually annotated ground truth to obtain the segmentation accuracy. The results are summarized in Tables 5–7.
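The exact formulas for these measures come from [44,45] and are not given here. As an illustrative sketch only, an intra-region uniformity measure in the spirit of Levine and Nazif and a pixel-level accuracy against ground truth could be computed as follows; the normalization and the function names are assumptions.

```python
import numpy as np


def uniformity(image, labels):
    """Intra-region uniformity: 1 minus the normalized within-region squared deviation.

    Normalizing by N * range**2 / 4 (the largest possible value) keeps the
    result in [0, 1]; this normalization is an assumption for illustration.
    """
    f = np.asarray(image, dtype=float)
    labels = np.asarray(labels)
    f_range = f.max() - f.min()
    if f_range == 0:
        return 1.0
    within = sum(((f[labels == r] - f[labels == r].mean()) ** 2).sum()
                 for r in np.unique(labels))
    return float(1.0 - within / (f.size * f_range ** 2 / 4.0))


def pixel_accuracy(pred_mask, truth_mask):
    """Fraction of pixels whose foreground/background label matches the ground truth."""
    pred = np.asarray(pred_mask, dtype=bool)
    truth = np.asarray(truth_mask, dtype=bool)
    return float((pred == truth).mean())
```

Perfectly homogeneous regions give a uniformity of 1, and any within-region gray-level variation lowers it.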

Tables 5–7 show that, compared with the other segmentation methods, the proposed method achieves more accurate image segmentation and effectively limits both over-segmentation and under-segmentation.

5.2. Analysis of Effect of Fusion Method on Recognition Accuracy

Several fusion methods have previously been used to fuse images of different resolutions, and several indexes have been introduced to evaluate the fusion process and its results. However, it is not clear whether image fusion improves recognition accuracy, and the effect of different fusion methods on recognition accuracy has not been discussed. Here, the accuracy of wheat ear recognition is compared in five cases: without image fusion, and with image fusion based on the G-S method, the non-subsampled shearlet transform, the sparse feature matrix, and the visual saliency map.

Figure 8 shows that, compared with the other fusion algorithms, the G-S method yields the largest improvement in final recognition accuracy. Moreover, in the repeated experiments on three plots, image fusion improved recognition accuracy by 3–12% compared with no fusion, which shows that image fusion has a positive effect on recognition accuracy.

5.3. Analysis of the Effect of Illumination Intensity on Recognition Accuracy

In the previous comparative experiments, all images were captured under the same illumination conditions. The experimental results indicate that, when the light intensity is stable at 30,000–50,000 lx, the foreground and background objects differ greatly in gray value after the image is binarized [46], so satisfactory results can be obtained by computer segmentation and recognition. However, in actual field applications, the illumination intensity cannot be guaranteed to stay within this range [47]. To further analyze the effect of illumination intensity on the segmentation algorithm, we designed the following experiment; its control variables are listed in Table 8.

In clear weather, data were collected for each crop plot at different times of a single day: 8 a.m., 12 p.m. (noon), and 4 p.m. In addition, a shutter was used to control the light entering the sensor by partially blocking incident light from outside the sensor's field of view, and the illumination intensity at the center of the lens was measured precisely with a lux meter. In this experiment, two sensors were placed 1.5 m above the ground. The illumination intensity was kept within 5000–80,000 lx, with 11 levels selected at a spacing of 7500 lx between adjacent levels (Figure 9).
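As a quick arithmetic check on this design, using only the values stated above, equally spaced levels starting at 5000 lx with a 7500 lx step reach 80,000 lx at the eleventh level:

$$L_k = 5000 + 7500\,k\ \text{lx}, \quad k = 0, 1, \dots, 10, \qquad L_{10} = 5000 + 10 \times 7500 = 80\,000\ \text{lx}$$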

Figure 9 shows that, when the illumination intensity is within 35,000–55,000 lx, the precision of the segmentation method is satisfactory. In the intervals 5000–35,000 lx and 55,000–80,000 lx, the segmentation precision decreases significantly, which indicates that the proposed method is very sensitive to illumination intensity [48].

The fluctuation of segmentation accuracy with light intensity is caused mainly by shadows cast by the wheat and by reflection from it. These two factors can change the distribution of the R, G, and B components in the captured panchromatic image.

Figure 9 shows that, when the illumination intensity is below 35,000 lx, the segmentation precision fluctuates less. This suggests that wheat leaves cast few shadows and produce little reflection under low-light conditions. However, because little light is reflected, the soil background and the foreground crops cannot be distinguished accurately in the acquired images. When the illumination intensity is 35,000–50,000 lx, the segmentation accuracy improves along with the illumination conditions. When the light intensity exceeds 50,000 lx, the shadows cast by the wheat itself become very obvious and reflections from the targets begin to appear, which change the distribution of the RGB components in the resulting panchromatic image and increase the segmentation error.

5.4. Analysis of Sample Size

For this work, three experimental plots with three randomly selected quadrats were used for repeated tests, and the amount of replicate data essentially meets the requirement. However, because of the specific conditions of field experiments, these data cannot fully represent the segmentation results for all areas of the field. Moreover, the growth stage of wheat varies from place to place, and phenotypes differ significantly between plots. The lack of comparative experiments across growth stages is a shortcoming of the experimental design.

5.5. Analysis of Observation Range on Recognition Accuracy

To determine the area over which the algorithm is applicable, we extended the shooting range by raising the cantilever. After determining the central projection point, we took it as the center and divided the study area into circles of different radii, from 0.25 to 0.75 m. The proposed method was used to process the images of these regions, and the results were compared with manual counts. As before, in Figure 10, red dots indicate ears recognized by both manual and machine recognition, purple dots indicate ears recognized manually but missed by the machine, and orange dots indicate erroneous machine identifications.

Figure 10 shows that, up to an observation radius of 0.5 m, the proposed segmentation and recognition method gives good counting results. Extending the radius from 0.5 to 0.75 m causes the accuracy of the algorithm to decrease rapidly (Figure 11).

In view of these experimental results, we make the following analysis:

1. Influence of sensor resolution and pattern noise

For a small observation area, the camera is close to the target. In contrast, when a large area is viewed, the camera is higher above the target, which reduces the sharpness of the target. When the observation radius reaches 0.5–0.75 m, the edge details of the targets begin to blur in the image because of the resolution limit of the camera, which hinders the segmentation of adhering ears [49]. In addition, as the observation area increases, both the amount of noise and the amount of information in the image increase [50]. When the noise exceeds the tolerance of the filter, residual noise points begin to affect the statistical results [51]. Moreover, the change in image resolution with the new acquisition system may also affect the parameter values. At present, we have not obtained images of the same plot with the two different digital cameras, so we cannot determine which effect is dominant [52].

2. Influence of image-edge distortion

Because the height of the ground phenotyping platform is limited, the sensors can only capture images of a limited area [53]. The closer a wheat spike is to the edge of the image, the more obvious its morphological distortion [54], and excessive deformation degrades computer-vision target recognition. There are two main types of edge distortion: barrel distortion and pincushion distortion.

We used Matlab R2017b to correct barrel and pincushion distortion and then segmented the corrected images (Figure 12).
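The text does not state which Matlab routine or distortion model was used. As an assumption for illustration, a common one-parameter radial model maps an undistorted point at normalized radius r to radius r·(1 + k1·r²). In this sign convention, k1 < 0 gives barrel distortion and k1 > 0 gives pincushion distortion. Correction can then be done by inverse mapping with `scipy.ndimage.map_coordinates`; `undistort_radial` is a hypothetical name.

```python
import numpy as np
from scipy import ndimage


def undistort_radial(image, k1, center=None):
    """Correct one-parameter radial distortion by inverse mapping.

    For each pixel of the corrected image at normalized radius r, sample the
    distorted input at radius r * (1 + k1 * r**2) (assumed distortion model).
    """
    img = np.asarray(image, dtype=float)
    h, w = img.shape
    cy, cx = center if center is not None else ((h - 1) / 2.0, (w - 1) / 2.0)
    norm = max(cy, cx, 1.0)                    # normalize radius to roughly [0, 1]
    yy, xx = np.mgrid[0:h, 0:w].astype(float)
    y, x = (yy - cy) / norm, (xx - cx) / norm
    scale = 1.0 + k1 * (x ** 2 + y ** 2)       # radial scaling of each sample point
    src_y = cy + y * scale * norm
    src_x = cx + x * scale * norm
    return ndimage.map_coordinates(img, [src_y, src_x], order=1, mode="nearest")
```

The image center is never moved, and the correction grows with distance from the center, which is consistent with edge distortion mattering more as the observation radius increases.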

Figure 12 shows that barrel correction significantly improves segmentation accuracy as the observation radius increases [55]. For a small observation radius, pincushion correction improves segmentation accuracy only slightly [56], but a significant improvement occurs for a larger observation radius. We therefore conclude that barrel distortion at the image edge is the main factor behind the decrease in accuracy as the segmentation area increases [57].

5.6. Accuracy Analysis of Manual Statistics

The proposed method gives satisfactory results for images taken at maturity because the ears do not overlap much [58]. However, because our objective is not to determine the exact shape of each spike but only its location, a spike may be represented by only a few pixels, which can occasionally be erased when the images are cleaned. Errors in the counting results may therefore also come from such imperfections, and a pixel-level study is needed to resolve this problem. At the counting level, improvements should be possible through threshold segmentation with a new variant of the firefly algorithm. Above all, however, the present results should be compared with manual counting, which can only be done just before harvest.

5.7. Analysis of the Algorithm Efficiency

Speed is also critical for image segmentation applications [59]. In this paper, the average running time is used to evaluate algorithm efficiency [60]. The average running times of several methods are shown in Figure 13; in all cases, the G-S method is used for image fusion.

As Figure 13 shows, the average running times of the different coarse segmentation methods are 13.6 s, 15 s, 14 s, 14.7 s, 14 s, and 11 s, respectively, and the method proposed in this paper has the shortest running time. Because the other steps are identical, the shorter overall time comes from the more efficient coarse segmentation. The global search ability of the firefly algorithm greatly reduces the time needed to find the optimal threshold, which leads us to conclude that the algorithm is less computationally complex, speeds up coarse segmentation, and is better suited to real-time image segmentation.
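As a worked check on these figures, take 11 s as the proposed method's time (the shortest value reported) and 13.6 s as the next-fastest method. The relative saving is then about 19%:

$$\frac{13.6\ \text{s} - 11\ \text{s}}{13.6\ \text{s}} = \frac{2.6}{13.6} \approx 0.19$$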

6. Conclusions

We propose a new algorithm for counting wheat spikes based on a ground-based phenotyping platform. First, images are acquired with a home-built ground phenotype platform. The panchromatic and multispectral images are fused with the G-S fusion algorithm, which enhances image detail. Next, the objective function is defined by the maximum-entropy method, the optimal threshold of the image is found with FACT, and the image is segmented with this threshold. The experimental results show that the proposed method improves segmentation accuracy and speed, has good noise immunity, and is highly robust. By overcoming shortcomings of conventional methods such as long segmentation time and high computational complexity, it offers broad potential for yield estimation in precision agriculture.

The experimental results show that the improved algorithm is competitive with existing methods. They also show that the chaotic local search operator can significantly improve the performance of the basic evolutionary algorithm, whose performance on its own is limited. To further improve the algorithm, the next step is to combine other local search techniques with the chaotic local search operator.