1. Introduction

Since the turn of the 21st century, we have seen a surge of studies on the state of U.S. education addressing issues such as cost, graduation rates, retention, achievement, engagement, and curricular outcomes. There is an expectation that graduates should be able to enter the workplace equipped to take on complex and “messy” or ill-structured problems as part of their professional and everyday life. Nationally, there is a growing consensus that in order to be competitive in the global workforce, students need to gain from their education skills that address digital literacy, collaboration and communication, creativity and innovation, problem-solving, and responsible citizenship [1].

To meet these challenges, organizations such as the Association of American Colleges and Universities (AAC&U) and the Partnership for 21st Century Skills have identified a core set of skills and proposed guiding frameworks for educational institutions. The Framework for 21st Century Learning outlines student outcomes not only in core subjects and themes but also in skills such as life and career skills, learning and innovation skills, and information, media, and technology skills [1]. The report on College Learning for the New Global Century [2] also provides a framework that focuses on essential learning outcomes that can serve as a bridge between K-12 education and college learning. Much like the Framework for 21st Century Learning, the essential learning outcomes for college education emphasize deep knowledge of human cultures and the physical and natural world. The frameworks also emphasize the application of the learned knowledge through the development of students’ intellectual and practical skills, personal and social responsibility skills, and integrative learning skills [2]. Both frameworks for 21st century learning recommend a theory-to-practice approach to facilitate students’ connection of knowledge with judgments and action. For this to occur, deep learning should result from students’ educational experiences.

Deep learning involves competencies on “both knowledge in a domain and knowledge of how, why, and when to apply this knowledge to answer questions and solve problems” [3]. When students apply deep-level processing, learning outcomes improve [4]; however, research studies also indicate that learners in college often fail to apply refined reasoning when faced with ill-structured problems [5]. Ill-structured problems are not only complex but are enmeshed in uncertainty due to divergent perspectives and the lack of “right answer” solutions [6,7]. Real-world problems range in complexity. The more ill-structured and complex the situation or problem, the deeper the student has to engage in making sense of the issues that surround it. Therefore, the design of educational interventions should enable students to engage in deep-level processing of the domain knowledge and provide opportunities for students to apply this knowledge in real-world settings.

Digital learning interventions are being increasingly adopted in educational settings to take advantage of the collective intelligence that can be captured and harnessed into a “digital commons for learning” [8]. With the abundance of online resources and just-in-time approaches to information retrieval, higher education institutions are expanding their online course offerings [9]. One area that holds a great deal of potential for transforming online learning is learning analytics. Learning analytics refers to the collection, measurement, analysis, and reporting of data about learners in order to understand how learning outcomes and the environments in which learning occurs can be enhanced [10]. Over the last 10 years, across U.S. and international higher education institutions, there has been an increased focus on learning analytic interventions. The 2012 Horizon Report put learning analytics on a two to three year path for widespread adoption in higher education [11], and this has come to pass [12]. However, much of the effort in educational and learning analytics has concentrated on analyzing utilization data from learning management systems and/or other enterprise-level systems within an institution to model learner activity [10]. By modeling learner activity in such systems, institutions and educators have been able to identify “at risk” students, such as students who may be absent or not active within a virtual class environment. In doing so, institutions have been able to put in place appropriate mitigation measures to help the students stay on track. In other words, learning analytics has been implemented as a warning trigger for institutions and educators. The research done from this perspective has shown positive impacts on achievement and student retention [13].
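The warning-trigger use of learning analytics described above can be illustrated with a minimal sketch. It assumes a hypothetical record of each student's most recent login and an arbitrary seven-day inactivity threshold; neither the data layout nor the threshold is taken from the studies cited.

```python
from datetime import date, timedelta

def flag_inactive(last_logins: dict[str, date], today: date,
                  max_idle_days: int = 7) -> list[str]:
    """Return the students whose most recent login is older than the threshold.

    `last_logins` and `max_idle_days` are illustrative assumptions, not
    parameters of any particular learning management system.
    """
    cutoff = today - timedelta(days=max_idle_days)
    return sorted(s for s, last in last_logins.items() if last < cutoff)

# Hypothetical activity data for three students.
logins = {
    "s01": date(2012, 10, 1),
    "s02": date(2012, 10, 14),
    "s03": date(2012, 9, 20),
}
at_risk = flag_inactive(logins, today=date(2012, 10, 15))
print(at_risk)
```

A trigger of this kind only signals absence of activity; it says nothing about the depth of a learner's engagement with content.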

This application of learning analytics is a worthwhile but limited first step. The transformational opportunity is in utilizing learning analytics as a driver and a support tool for learner-managed systems of learning. In this paper, we describe a learning analytics model that is grounded in the social cognitive theoretical perspective. At the core of the socio-cognitive psychological perspective is the idea that learning occurs in a social environment and that interactions between behaviors, the individual, and the environment influence each other [14]. The proposed model is a combination of activity data in relation to interaction with an online system as well as the output of what learners have been working on as part of the online system. This combination of activity data and learning artifacts can then be used to drive the design of a learner-managed dashboard enabling the mapping of data to both the social and individual aspects of the learning process. In addition, it could serve to support learners in following a reflective sensemaking approach for integrating the multiple perspectives that arise as part of the social discourse around ill-structured problems.

This paper focuses on a learning analytics model that has the potential to support a reflective sensemaking approach in the context of ethical reasoning. A 2004 survey study examining student achievement in college with regard to the liberal education essential outcomes revealed that students had low engagement in outcomes for individual and social responsibility such as ethical reasoning and action [2]. When the survey was repeated in 2010, there was no statistically significant change [15]. People routinely face ethical, ill-structured problems without clear-cut answers in both their personal and professional lives. They tend to reason through these issues and justify the reasoning in different ways depending on their own personal philosophical orientation [16].

Developing deep learning within the context of ill-structured ethical dilemmas requires a reflective analytical approach that challenges students to integrate differing perspectives into their own value-based ethical judgments [17]. Within the context of digital learning, the dialogic interaction that is necessary for this deep-level processing is not easily attained in linearly designed online discussion-based environments, nor do such environments make it easy for instructors to support the generation of quality discourse. For this reason, we identified for testing an online tool, Cohere, that enabled learners to engage in online discourse through the creation of connections among differing conceptual perspectives. This online open-source tool generated concept and social networks based on learners’ dialogic interaction. It therefore provided us the opportunity to begin exploring what has been elusive thus far in online discussion-based environments: learning with ill-structured problems by making visible the interaction of social and individual learning.

2. Exploratory Research Project

We conducted our exploratory research project in Fall 2012, following a design-based research methodology. According to The Design-Based Research Collective [18], this methodology allows for research that is applied to practice and is intended for investigating the process of learning within a complex system. Since design-based research is used for refining theories of learning to inform practice, the result of such research informs or generates models rather than products or programs [19].

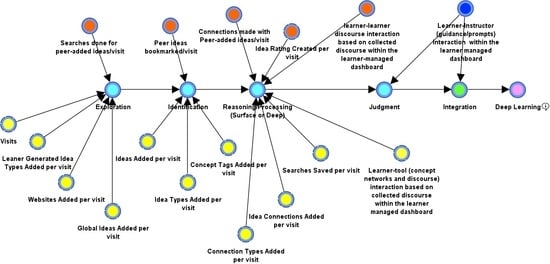

Our aim was to examine how learning analytics can be used to guide and support a learner in applying a reflective sensemaking approach. Since reflective sensemaking is an iterative process of exploration, identification, processing, and judgment of relevance of information, it has the potential to lead learners to deep learning (Figure 1).

Sensemaking is a process that results in understanding through various interactions and is therefore difficult to operationalize and analyze. From a psychological perspective, sensemaking is about a continuous effort to understand connections that arise through interaction, such as people-people interaction, in order to be able to take action [20,21]. Within the context of complex and ill-structured problems, a sensemaking approach manifests itself as one explores, identifies, processes, and judges the relevance of information [22]. A reflective sensemaking approach is an iteration of these steps allowing the learner to dig deeper into the subject matter. Langley [23] proposed seven strategies used for sensemaking of process data distinguished by: the depth of data or process detail and breadth of data or number of cases; the form of sensemaking each strategy is concerned with; and the characteristics of each strategy in terms of accuracy, generality, and simplicity. The approach we take is based on what Langley [23] refers to as the synthetic strategy describing a probabilistic interaction of the process events.

This approach assumes a longitudinal implementation in order to observe the evolving performance.

Figure 1 is the cognitive process model we used as the basis for the design of a learning activity that engages learners in guided discourse. This model is also used to drive the design of the proposed learner-managed dashboard to enable integration of multiple perspectives arising from the discourse. Our model draws its theoretical frame from a socio-cognitive agentic perspective especially as it relates to collective agency. According to Bandura [24], “group attainments are the product not only of the shared intentions, knowledge, and skills of its members, but also of the interactive, coordinated, and synergistic dynamics of their transactions” (p. 14).

In Figure 1, we represent the macro-level sensemaking process in the blue nodes operationalized in our model as a progression of a series of meso-level cognitive processes referring to exploration, identification, reasoning/processing, and judgment of relevant information. In this model, we hypothesize that the depth/level of interaction in each of the process events, within the context of solving ill-structured problems, relates to the next. We operationalize each of these process events by mapping learner interaction as it relates to the learning activity tasks. The green node represents the integration of concepts (dependent variable) and is the immediate learning outcome of the proposed sensemaking process model. The deep learning node (pink) represents the lasting learning outcome and can only be observed through a longitudinal intervention.

4. Mapping the Learner-Tool Activity to the Sensemaking Process

During this exploratory phase of our research, we focused on mapping the learner-tool interaction variables at each stage in the sensemaking process to provide us with an indication of the depth of learner-content interaction, based on the learning activity tasks described in Table 1.

Figure 2 shows the analytics model we propose for further testing following a single-subject, multiple baseline, across-subjects design. This design allows us to treat each individual as his/her own control to better understand individual differences. This permits us to capture data markers at multiple baselines and interventions for evidence of individual development.

To measure a learner’s depth of exploration via learner-tool interaction data, we would need to capture the following activity:

- total number of visits a learner has made
- the number of ideas that were generated per visit
- the websites added per visit
- global ideas added per visit (global ideas are ideas added to Cohere voluntarily and not required)
- searches done by learner to discover ideas added by peers (social learning aspect)

To show the depth of the identification construct, we propose to use the following learner-tool interaction variables:

- ideas added per visit
- idea types added per visit
- concept tags added per visit
- peer ideas bookmarked per visit (social learning aspect)

To determine the level of processing (surface or deep processing), we identify the following variables in relation to learner-tool interaction:

- idea connections added per visit
- idea connection types added per visit
- searches saved per visit
- peer ideas rated per visit
- idea connections with peer-generated ideas
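The three groups of variables listed above could be captured in a simple per-visit record and averaged for each construct. The sketch below is one possible arrangement under stated assumptions: the field names, the `Visit` record, and the use of a plain per-visit mean are all hypothetical and are not part of Cohere or of the proposed model's formal specification.

```python
from dataclasses import dataclass
from statistics import mean

@dataclass
class Visit:
    """Counts logged during one learner visit (field names are hypothetical)."""
    ideas: int = 0
    websites: int = 0
    global_ideas: int = 0
    peer_searches: int = 0
    idea_types: int = 0
    concept_tags: int = 0
    peer_bookmarks: int = 0
    connections: int = 0
    connection_types: int = 0
    searches_saved: int = 0
    peer_ratings: int = 0
    peer_connections: int = 0

# Which logged counts feed which construct, following the three lists above.
CONSTRUCTS = {
    "exploration": ["ideas", "websites", "global_ideas", "peer_searches"],
    "identification": ["ideas", "idea_types", "concept_tags", "peer_bookmarks"],
    "processing": ["connections", "connection_types", "searches_saved",
                   "peer_ratings", "peer_connections"],
}

def per_visit_profile(visits: list[Visit]) -> dict:
    """Average each variable over a learner's visits, grouped by construct."""
    profile: dict = {"total_visits": len(visits)}
    for construct, fields in CONSTRUCTS.items():
        profile[construct] = {
            f: (mean(getattr(v, f) for v in visits) if visits else 0.0)
            for f in fields
        }
    return profile

# Hypothetical log of two visits by one learner.
visits = [
    Visit(ideas=2, websites=1, connections=1),
    Visit(ideas=4, connections=3, peer_connections=1),
]
profile = per_visit_profile(visits)
print(profile["exploration"], profile["processing"])
```

Per-visit averages are only a starting point; how these indicators would be weighted or thresholded to distinguish surface from deep processing is left open here.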

In the learning analytics model shown in Figure 2, we have included two additional clusters of interaction data to be captured from learner interaction within the proposed learner-managed dashboard addressed in the discussion section of this paper. The learner-tool interaction data and learner-learner interaction data captured from the learner-managed dashboard would enhance this model in terms of modeling learners’ depth of processing.

In terms of judgment, within this reflective sensemaking process, we designate the states of this node as pre-reflective, quasi-reflective, and reflective. These states are based on King and Kitchener’s [25] reflective judgment model. The collected discourse within the learner-managed dashboard would be used to measure judgment.
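The three judgment states can be represented as a small enumeration. The sketch below assumes the commonly cited seven-stage form of the reflective judgment model, in which stages 1 to 3 are pre-reflective, 4 and 5 quasi-reflective, and 6 and 7 reflective. How a stage would be assigned to a piece of collected discourse is not specified in this paper, so the `stage` input is a hypothetical coding result.

```python
from enum import Enum

class JudgmentState(Enum):
    PRE_REFLECTIVE = "pre-reflective"
    QUASI_REFLECTIVE = "quasi-reflective"
    REFLECTIVE = "reflective"

def state_for_stage(stage: int) -> JudgmentState:
    """Map a coded stage (1-7, assumed to come from human or automated
    coding of discourse) to one of the three judgment states."""
    if not 1 <= stage <= 7:
        raise ValueError("stage must be between 1 and 7")
    if stage <= 3:
        return JudgmentState.PRE_REFLECTIVE
    if stage <= 5:
        return JudgmentState.QUASI_REFLECTIVE
    return JudgmentState.REFLECTIVE

print(state_for_stage(5).value)
```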

The integration node in the model shown in Figure 2 captures the temporal and iterative aspects of the learning process providing evidence of reflective sensemaking through integration of the conceptual perspectives that are generated in the discourse. The integration node is the immediate learning outcome from the learning process. We hypothesize that through an iterative process of integration, deep learning can occur. Further research is needed to determine how this model will fare in capturing the elusiveness we have been discussing so far. A research project is underway within the context of Massive Open Online Courses (MOOCs).

5. Results from Formative Evaluation

In a focus group with the students (N = 20) who used Cohere to complete the case-based assignment shown in Table 1 as part of their coursework in Bioethics, it became apparent that the interface and the complexity of the concept network visualization undermined the level of interaction with the tool and therefore the discourse itself. The Bioethics students were supportive of using a tool that enables discourse in a non-linear manner and offered specific recommendations for simplifying the tool.

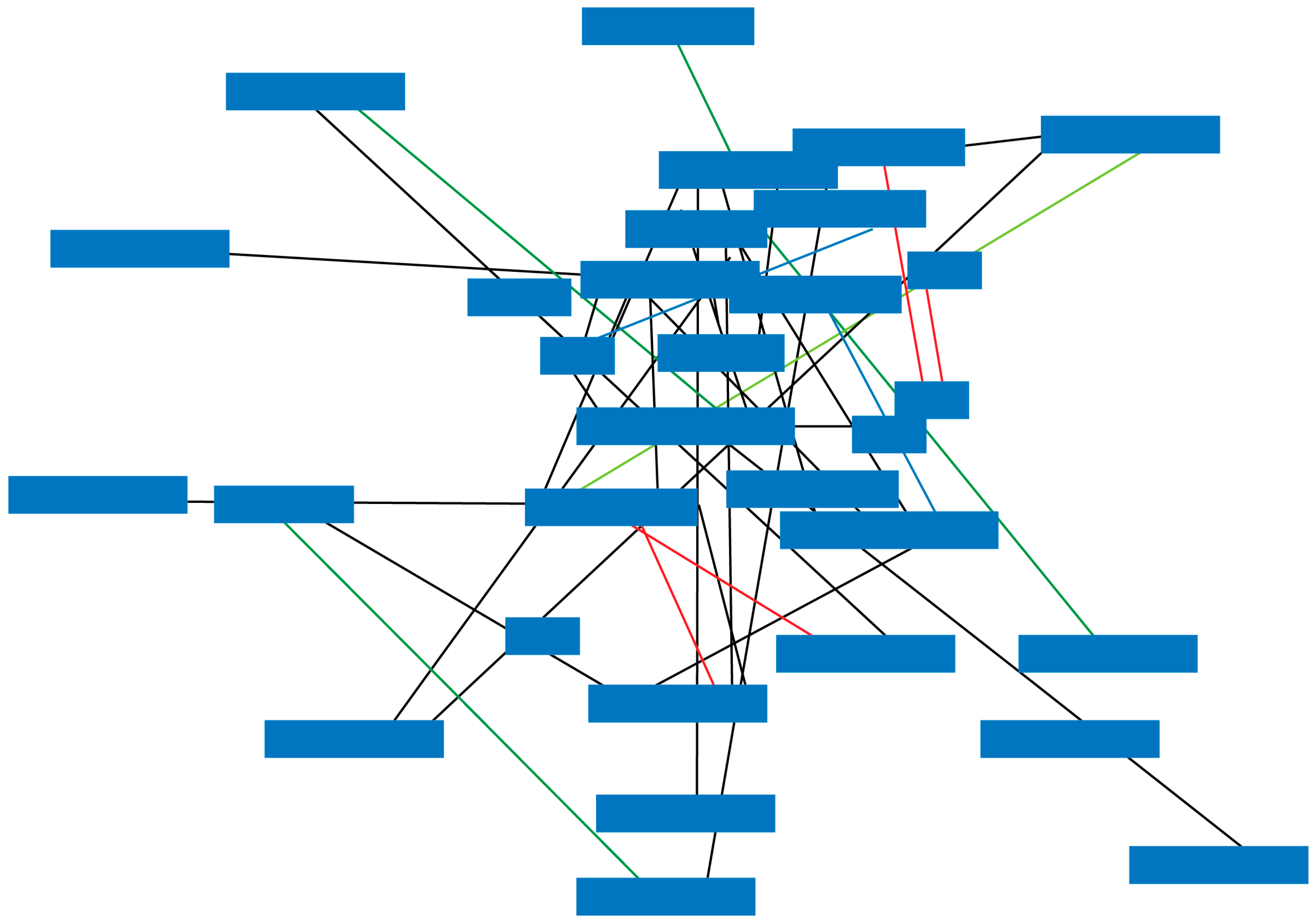

Figure 3 shows an example of the complexity of the concept network from the exploratory study we conducted with the undergraduate students in a Bioethics course.

We also tested Cohere in Fall 2012 with graduate students (N = 18). Even though the task the students engaged in was different from the Bioethics course, the graduate students voiced similar concerns regarding the complexity of the interface. However, they also felt that using an online tool that enables a more connected discourse would help them gain more from the group discussion.

Based on the testing conducted with the two courses, our review of the literature stemming from the science of learning, psychology, instructional systems design, and learning analytics, and the expertise we bring to this research effort as academics and practitioners, we propose a different approach to engaging with non-linear online discussion-based tools. We argue that after the initial interaction with a concept or knowledge-mapping tool, there is a need for a learner-managed dashboard that would take advantage of a learner analytics solution in order to enable further interaction with the knowledge or concept network.

6. Discussion

In this section, we discuss a possible design for a learner-managed dashboard specifically to address the iterative cycles needed in the sensemaking process that could lead learners to a truly reflective exploration of ill-structured ethical problems. Ill-structured ethical problems do not tend to have correct or incorrect answers; therefore, how one makes sense of the issues that surround the ethical problems influences the learner’s judgment as such problems require reasoned judgment [25]. Ethical reasoning entails an assessment of the learner’s own ethical values within a social context and occurs through an examination of how different ethical theoretical perspectives and concepts are applied to the issue [2,26]. It also involves consideration of the ramifications of the resolution or decision that require a depth of understanding [26].

The process of ethical reasoning can be described as a process of sensemaking through dialogue [27]. Ensuring deep understanding of the ethical perspectives and concepts when faced with ill-structured problems is a process that requires dialogue over time as well as repeated exposure to problem scenarios [28]. The study of dialogue, and the ways in which interactions with other learners form, is central to sensemaking in that it is an “issue of language, talk, and communication” focused on the “interplay of action and interpretation rather than the influence of evaluation on choice” [21]. This type of dialogic interaction yields co-constructed knowledge and can be examined from a social-cognitive dimension to explain how knowledge and people’s appraisals are related and influenced within a particular context. Since the social cognitive theory assumes an agentic perspective and not a reactive one (in other words, learners are proactive, self-reflecting, and self-regulating [14,29]), engaging learners in an exploration of multiple perspectives around an ill-structured ethical problem has the potential to deepen both their conceptual understanding and their reflective reasoning.

According to Jonassen [30], multiple external representations of a problem should begin to enable learners to have better internal representations of the problem space. Concept mapping, as an instructional strategy, has been applied across disciplines to allow learners to visualize and connect their ideas to prior knowledge, which Jonassen [30,31] argues should allow learners to re-conceptualize the problem, thus refining their conceptual thinking. The 2011 final report of the National Science Foundation Task Force on Cyberlearning and Workforce Development highlighted, among other aspects, the need for better interactive visualizations of learning data to support sensemaking [32]. It emphasized the need for frameworks that provide innovative ways for self-assessment and teaching tools that examine how visualizations can be used effectively to integrate knowledge and learning opportunities over time. Though the report was mostly making reference to visualizations of scientific phenomena and “big data”, the exploratory research presented in this paper investigates a model that has the potential to guide and support a learner in engaging with complex visualizations for deep learning.

From a socio-cognitive agentic perspective, learning involves exploration, manipulation, and influence over the environment [14]. Therefore, the design of a learner-managed dashboard that is driven by a learning analytics model that incorporates the social and individual aspects of the reflective sensemaking process is critical in the longitudinal iteration of sensemaking for deep learning.

7. Possible Dashboard Design

Following is a suggested design based on the exploratory research study described earlier in the paper that engaged learners in using Cohere, a social concept-mapping tool. The proposed design recommendations assume that learners have progressed through steps 1–4 of the learning activity (Table 1) and are now being asked to explore the visualizations of the concept networks that were generated from steps 1–4.

Figure 3 in the previous section of the paper shows an example of a concept network generated as a result of 20 students’ engagement with steps 1–4 of the learning activity. Given the complexity of the network and the lack of an interface within Cohere that makes it easy for students to delve deeper into the concepts, the proposed learner-managed dashboard would provide such an interface.

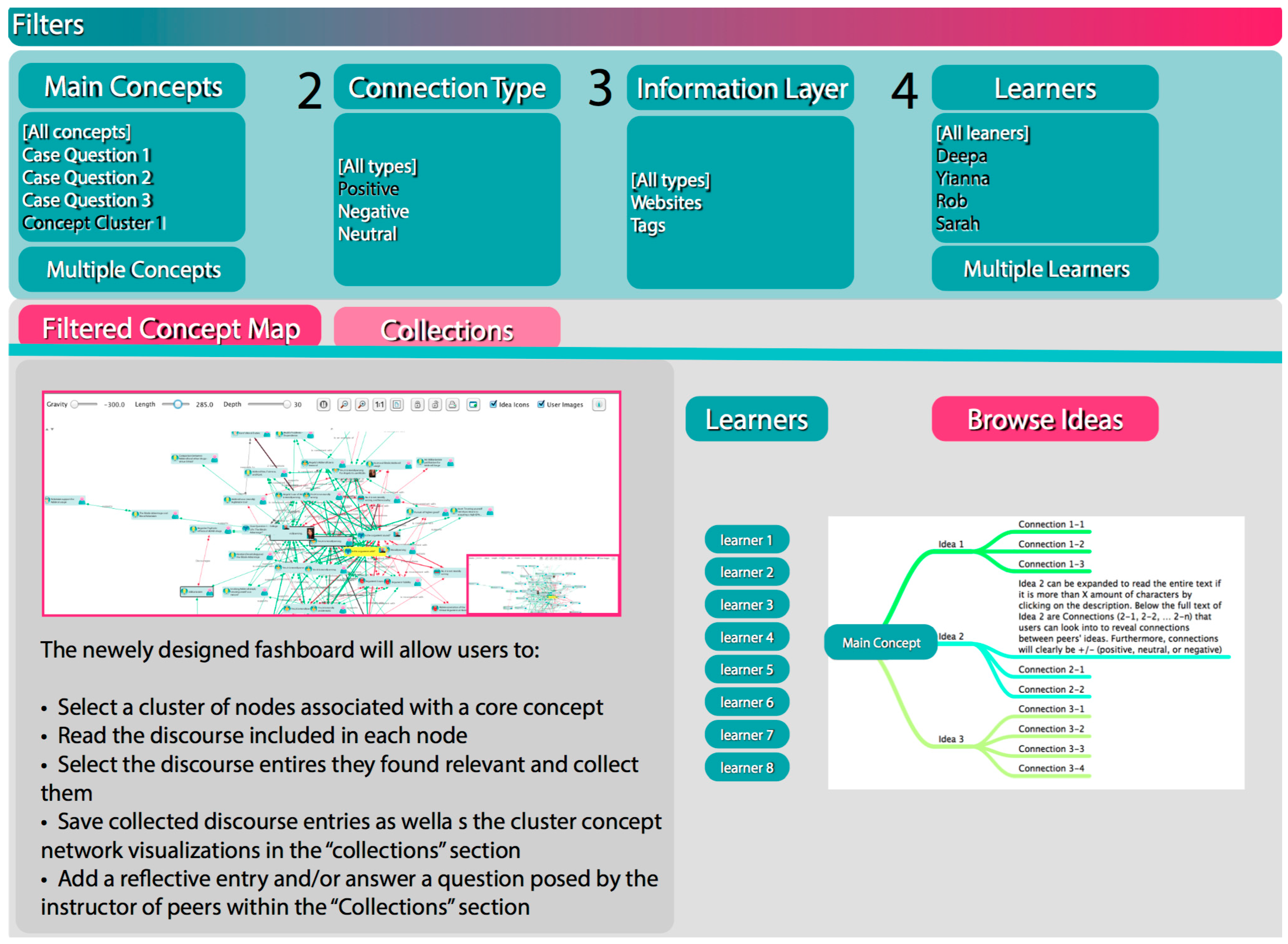

The initial brainstorming of the design of the learner-managed environment is shown in Figure 4 below. The learner-managed dashboard would keep the visual representation of the concepts in context with learners’ exploration of the discourse. The learner-managed dashboard would allow learners to select a cluster of nodes associated with core concepts (left side bottom section of schematic) and be able to read the discourse included in each node (right side bottom section of schematic). As they read the student contributions, they would be able to select the discourse entries they found relevant and collect them. These collected discourse entries as well as the cluster concept network visualization would be saved in the Collections section. Within the Collections section, the learners would also be able to add a reflective entry and/or answer a question posed by the instructor or peers. These new entries would form connections with the selected discourse entry and therefore the concept network would grow in depth.

For example, steps 5–10 in Table 1 could be implemented using a learner-managed dashboard. Learners would be able to explore the concept network by filtering the main concepts, the type of connections, the information type such as websites and/or tags related to the total learner contributions, and learners. This type of filtering will enable learners to zoom in and out to identify the cluster of connections that they find surprising and then be able to determine the validity and soundness of the arguments by viewing them in both the visual mapping and in a more easily readable format on the bottom right portion of Figure 4. The activity data from this type of learner engagement with the learner-managed dashboard would be data that is fed into the model shown in Figure 2.
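The filtering described above can be sketched as a query over a list of typed connections. This is an illustration only: the `Connection` record, its fields, and the sample concepts are hypothetical and do not reflect Cohere's actual data model or the dashboard's eventual implementation.

```python
from dataclasses import dataclass
from typing import Optional

@dataclass(frozen=True)
class Connection:
    """One link in the concept network (a hypothetical structure)."""
    source: str
    target: str
    link_type: str
    author: str

def filter_network(connections: list[Connection],
                   concept: Optional[str] = None,
                   link_type: Optional[str] = None,
                   author: Optional[str] = None) -> list[Connection]:
    """Keep only connections matching every filter that was supplied."""
    def keep(c: Connection) -> bool:
        return ((concept is None or concept in (c.source, c.target))
                and (link_type is None or c.link_type == link_type)
                and (author is None or c.author == author))
    return [c for c in connections if keep(c)]

# Illustrative network with invented concepts and learners.
network = [
    Connection("autonomy", "informed consent", "supports", "learner_a"),
    Connection("beneficence", "autonomy", "challenges", "learner_b"),
    Connection("justice", "resource allocation", "supports", "learner_a"),
]
autonomy_cluster = filter_network(network, concept="autonomy")
challenges = filter_network(network, link_type="challenges")
print(len(autonomy_cluster), challenges[0].author)
```

Combining filters narrows the view further, which corresponds to zooming in on a cluster; each filter action could also be logged as learner-tool interaction data.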

For the design of this learning environment, we pay close attention to principles derived from cognitive load theory and operationalized by Sweller [33] in three categories: intrinsic, extraneous, and germane. Intrinsic cognitive load varies depending on the complexity of the material to be learned and learners’ prior knowledge in relation to the new material to be learned [34]. Within the context of solving ill-structured problems, intrinsic cognitive load would tend to be high due to the complexity and novelty of the learning. Therefore extraneous cognitive load, meaning the organization and sequence of the instructional tasks, should be low through good instructional design implementation. If good instructional design frees up extraneous cognitive load, the freed-up working memory load should then be re-directed to what is germane to the learning. Germane cognitive load is therefore the use of the available working memory in relation to the construction of relevant knowledge for the expected learning outcomes. These three types of cognitive load are thought of as additive and therefore should not go beyond what one’s working memory can handle [34]. Within the context of ill-structured problems, we can assume that intrinsic load will be high and therefore, what we expect from a good instructional design intervention is for extraneous load to be reduced so that germane load can be increased.
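The additive relationship described above can be written compactly. The symbols are notation introduced here for illustration and do not appear in the cited sources: the three load terms correspond to intrinsic, extraneous, and germane load, and the right-hand side stands for the learner's working memory capacity.

```latex
L_{\text{intrinsic}} + L_{\text{extraneous}} + L_{\text{germane}} \le C_{\text{WM}}
```

With intrinsic load high and fixed by the problem, any reduction in extraneous load enlarges the room left for germane load under the same capacity.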

8. Conclusions

In the context of online learning, we have identified two key issues that are elusive (hard to capture and make visible): learning with ill-structured problems and the interaction of social and individual learning. We believe that the intersection between learning and analytics has the potential to minimize the elusiveness of deep learning.

The use of discourse mapping for supporting learning with ill-structured problems allowed for limited testing of the proposed analytics model due to the complex visualizations and extraneous cognitive load. Given the feedback from students during focus groups, we were able to document enhancements that should be made toward the design of a more user-friendly, learner-managed environment that utilizes the proposed reflective sensemaking learning analytics model. The exploratory project we implemented helped us to identify options for an enhanced interface design that would enable individual learners to make sense of the collective knowledge to further their own sensemaking. Given this research, we propose a hybrid learner-managed environment that includes both a dashboard of progress and engagement, as well as an application for further interactions.

In addition, it became clear that in order to support deeper judgment and conceptual integration of multiple theoretical perspectives, the development of an instructor-managed dashboard would be critical. An instructor-managed dashboard would provide opportunities for instructors to offer guidance and prompts to learners as part of the reflective sensemaking process.

In summary, this paper addressed two key issues: learning with ill-structured problems and the interaction of social and individual learning. We propose a learning analytics solution in order to capture, measure, analyze, and report learner data to support reflective sensemaking of ill-structured ethical problems in online learning environments. We also share the design of the learning activity that specifically addresses the interaction of individual and social learning noting that the reflective sensemaking process is one that can be intentionally supported with appropriate instructional strategies.

Through Georgetown’s partnership with edX, a program initiated by MIT and Harvard that offers online, open-access courses, the learning analytics approach proposed here has been partially integrated within the GeorgetownX learning design approach [35]. Further research is needed to investigate the potential of a learner- and an instructor-managed dashboard solution that supports reflective sensemaking and deep learning. This further research will build upon work by other researchers who are investigating learner profiles in online courses to better understand learning dispositions-in-action [36]. Learning dispositions-in-action are defined as the “mediating space for negotiation and meaning making which is formed by fluid socio-psychological processes” [36]. Determining learner intentions and how they manifest within an online space offers us an insight as to how purposeful learners can be in how they approach their learning and enable us to determine the types of learning interventions that are appropriate for deeper learning.