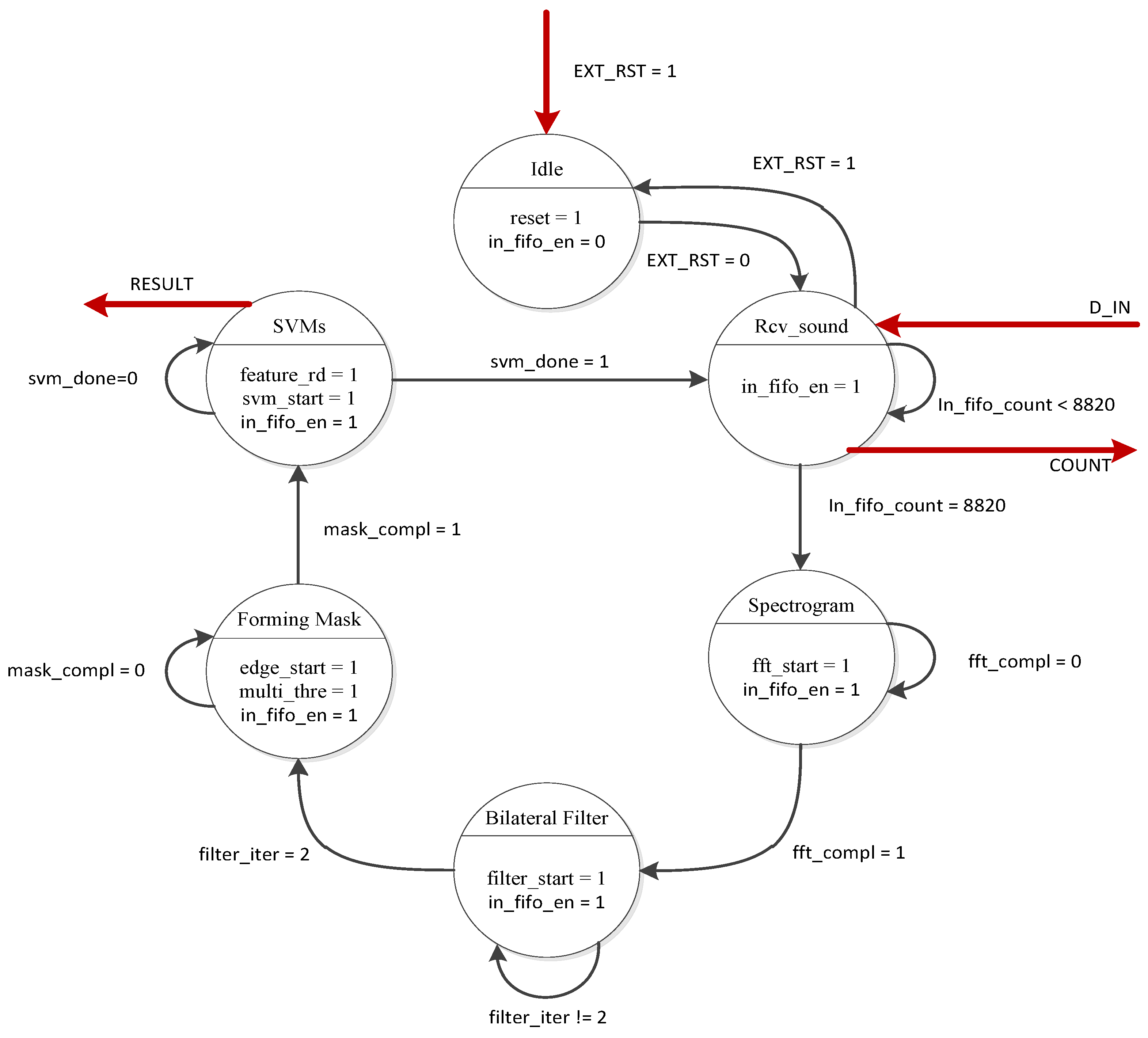

4.1. Wheezing Sound Detection Results

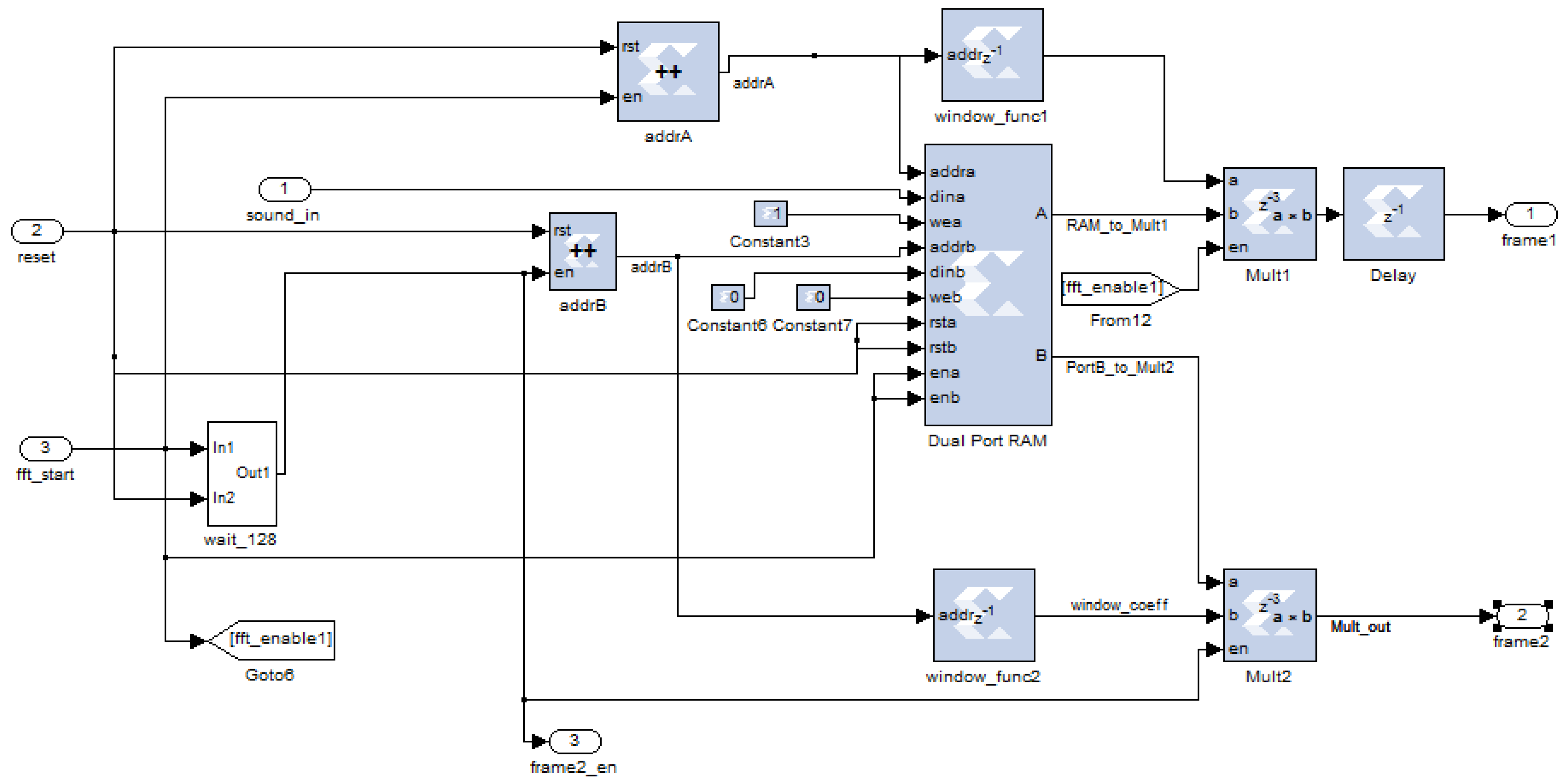

We applied the SVM classifier to predict whether sound data contained wheezes or normal sounds, based on the image properties of the spectrogram objects identified by the mask. As shown in Figure 14, we chose four parameters to extract the wheezing features:

PCY: Frequency located at the centroid of the wheezing episode.

PT: Time duration of the wheezing episode.

PS: Slope of the wheezing episode.

PAR: Area ratio of the wheezing episode/bounding box of the wheezing episode.

These parameters were used to represent the shapes of the objects, and reduce the complexity of the classifier.
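
The four parameters can be sketched for a single labeled object as follows. This is a minimal illustration, not the authors' implementation: the function name `wheeze_features`, the bin spacings `hz_per_row` and `sec_per_col`, the convention that the row index increases with frequency, and the use of a least-squares fit for the slope are all assumptions, since the text does not specify them.

```python
def wheeze_features(mask, hz_per_row, sec_per_col):
    """Return (PCY, PT, PS, PAR) for one object in a binary spectrogram mask.

    mask: list of rows of 0/1 values; row index = frequency bin (assumed to
    increase with frequency), column index = time frame.
    hz_per_row, sec_per_col: hypothetical bin spacings of the spectrogram.
    """
    pixels = [(r, c) for r, row in enumerate(mask)
              for c, v in enumerate(row) if v]
    if not pixels:
        raise ValueError("mask contains no object pixels")
    n = len(pixels)
    rows = [r for r, _ in pixels]
    cols = [c for _, c in pixels]
    mean_r = sum(rows) / n
    mean_c = sum(cols) / n
    width = max(cols) - min(cols) + 1    # bounding-box extent in time frames
    height = max(rows) - min(rows) + 1   # bounding-box extent in frequency bins

    pcy = mean_r * hz_per_row            # PCY: frequency at the centroid
    pt = width * sec_per_col             # PT: duration of the episode
    # PS: least-squares slope of frequency against time (assumed definition)
    var_c = sum((c - mean_c) ** 2 for c in cols)
    cov = sum((c - mean_c) * (r - mean_r) for r, c in pixels)
    ps = (cov / var_c) * (hz_per_row / sec_per_col) if var_c else 0.0
    par = n / (width * height)           # PAR: object area / bounding-box area
    return pcy, pt, ps, par


# Toy object: a rising diagonal line three pixels long.
diagonal = [[1, 0, 0],
            [0, 1, 0],
            [0, 0, 1]]
pcy, pt, ps, par = wheeze_features(diagonal, hz_per_row=10.0, sec_per_col=0.5)
```

A thin, sloped object such as this one fills only a third of its bounding box, which is the kind of shape information PAR is meant to capture.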

Figure 14.

Parameter for extracting wheezing features.

The SVM classifier must be trained for wheezing recognition before implementation. We used the RBF kernel function and grid search method to determine the corresponding σ which yielded the most satisfactory result.
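
For reference, the way a trained RBF-kernel SVM scores a feature vector can be sketched as below. The support vectors, dual coefficients, bias, and the value of σ are hypothetical placeholders rather than the trained model from this study; σ is the quantity the grid search would tune.

```python
import math


def rbf_kernel(x, z, sigma):
    """Gaussian RBF kernel: exp(-||x - z||^2 / (2 * sigma^2))."""
    squared_distance = sum((a - b) ** 2 for a, b in zip(x, z))
    return math.exp(-squared_distance / (2.0 * sigma ** 2))


def svm_decision(x, support_vectors, dual_coefs, bias, sigma):
    """Decision value f(x) = sum_i coef_i * K(sv_i, x) + bias."""
    return sum(coef * rbf_kernel(x, sv, sigma)
               for coef, sv in zip(dual_coefs, support_vectors)) + bias


# Hypothetical two-feature model, e.g. normalized (PT, PS) values.
support_vectors = [(0.0, 0.0), (1.0, 1.0)]
dual_coefs = [-1.0, 1.0]   # signed coefficients (alpha_i * y_i), made up
bias = 0.0
sigma = 0.5

score_near_positive = svm_decision((1.0, 1.0), support_vectors, dual_coefs, bias, sigma)
score_near_negative = svm_decision((0.0, 0.0), support_vectors, dual_coefs, bias, sigma)
```

The sign (or, in a scaled fixed-point design, a threshold on the output) of the decision value determines the predicted class.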

Figure 15 shows the wheezing recognition accuracy obtained for each setting of the SVM model coefficients, using various parameter sets. We analyzed the performance of the system with each parameter set to verify that the selected parameters adequately captured the wheezing features and to derive the most efficient parameter set. We analyzed breath sounds recorded at National Taiwan University Hospital [27], and divided these sounds into training samples and testing samples. The training samples consisted of sound samples recorded from 11 asthmatic patients and 10 healthy people. The testing samples consisted of sound samples recorded from 13 asthmatic patients and 12 healthy people. All sound files were segmented into 2-second units.

Figure 15.

(a) Grid searching (Features: PT, PCY); (b) Grid searching (Features: PT, PAR); (c) Grid searching (Features: PT, PS); (d) Grid searching (Features: PT, PCY, PAR); (e) Grid searching (Features: PT, PCY, PS); (f) Grid searching (Features: PT, PAR, PS); (g) Grid searching (Features: PT, PCY, PAR, PS).

The training sample results revealed that the proposed system achieved an accuracy of up to 96.63% using the parameter sets (PT, PS) and (PT, PAR, PS). After we trained the SVM, we used the trained models to classify the testing samples. The performance (PER) of the recognition system was estimated by calculating the sensitivity (SE) and specificity (SP), defined in the following equations:
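
In terms of the true-positive (TP), true-negative (TN), false-positive (FP), and false-negative (FN) counts, the definitions below are a reconstruction that reproduces every row of Table 3; in particular, taking PER as the geometric mean of SE and SP is inferred from the tabulated values rather than stated in the text.

$$
\mathrm{SE} = \frac{TP}{TP + FN}, \qquad
\mathrm{SP} = \frac{TN}{TN + FP}, \qquad
\mathrm{PER} = \sqrt{\mathrm{SE} \times \mathrm{SP}}
$$

For example, the (PT, PS) row gives SE = 128/141 ≈ 0.9078, SP = 215/230 ≈ 0.9348, and PER ≈ 0.9212.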

The testing samples were analyzed using various parameter sets. The recognition system exhibited superior performance using the parameter sets (PT, PS) and (PT, PAR, PS), as shown in Table 3. We implemented the SVM model using the parameters (PT, PS), because these parameters used fewer hardware resources. The results of the implementation of this SVM in hardware are shown in Figure 16 and Figure 17. The system recognizes wheezing episodes when the SVM output exceeds 26.

Identical testing samples were sent to the platform through the UART port to confirm the wheezing detection performance of the WDSIP after all functional blocks had been implemented in hardware. To estimate the reliability of the UART transmission from a PC to the platform, we used Tera Term to connect to the platform, with the baud rate of the serial port set to 115,200 bps. We wrote a data set (0–2^32) to a file and sent this file to the platform, where a program we had written compared the received data against an accumulator to obtain the error rate of the UART transmission. No errors were observed when these 4,294,967,296 testing samples were sent to the platform.
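
The accumulator comparison can be illustrated with a small host-side sketch. It assumes the data set is a stream of consecutive 32-bit little-endian words starting at 0; the word format, the function name `count_mismatches`, and the short demo stream are assumptions made for illustration, not details taken from the platform program.

```python
import struct


def count_mismatches(received: bytes, start: int = 0) -> int:
    """Count 32-bit words that differ from a running accumulator.

    Assumes little-endian unsigned words; the accumulator wraps at 2**32.
    """
    if len(received) % 4:
        raise ValueError("stream length must be a multiple of 4 bytes")
    expected = start
    errors = 0
    for (word,) in struct.iter_unpack("<I", received):
        if word != expected:
            errors += 1
        expected = (expected + 1) & 0xFFFFFFFF  # advance regardless of errors
    return errors


# Demo with a short stream instead of the full 2**32-word data set.
clean = b"".join(struct.pack("<I", i) for i in range(1000))
corrupted = bytearray(clean)
corrupted[40] ^= 0xFF  # flip the bits of one byte inside word number 10

clean_errors = count_mismatches(clean)
corrupted_errors = count_mismatches(bytes(corrupted))
```

Because the accumulator advances independently of the received data, a single corrupted word is counted once and does not cause the following words to be flagged.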

As mentioned, fixed-point operation in hardware allows the wheezing recognition error to be predicted. To estimate this error, we assumed that the wheezing recognition results of the software were correct, and compared them with the hardware results. The differences between the software and hardware results are listed in Table 4. The main cause of the discrepancy between these results is the depth of the LUT in which the weight coefficients of the photometric filter are stored. The weight coefficients are quantized: the number of coefficients stored in the LUT is set to 8192, and the precision of the quantized coefficients is limited to 0.01. This limited precision acts as quantization error, which decreases the SNR of the wheezing signal and impedes the performance of the wheezing recognition system. The performance of the recognition system in hardware and software is compared in Table 5.
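
The effect of storing quantized photometric weights can be sketched as follows. The LUT depth (8192) and coefficient precision (0.01) come from the text; the Gaussian form of the photometric weight is the usual bilateral-filter choice and is assumed here, as are the parameter values `sigma_r` and `max_diff`, the helper names, and the use of round-to-nearest.

```python
import math

LUT_DEPTH = 8192   # number of stored coefficients (from the text)
STEP = 0.01        # precision of each stored coefficient (from the text)


def exact_weight(index, sigma_r, max_diff):
    """Unquantized Gaussian weight for the intensity difference at this index."""
    d = max_diff * index / (LUT_DEPTH - 1)
    return math.exp(-(d * d) / (2.0 * sigma_r ** 2))


def build_lut(sigma_r, max_diff):
    """Quantize every weight to the nearest multiple of STEP."""
    return [round(exact_weight(i, sigma_r, max_diff) / STEP) * STEP
            for i in range(LUT_DEPTH)]


# Hypothetical filter settings, chosen only to exercise the sketch.
lut = build_lut(sigma_r=10.0, max_diff=50.0)
worst_error = max(abs(q - exact_weight(i, 10.0, 50.0))
                  for i, q in enumerate(lut))
```

With round-to-nearest, each stored weight deviates from the exact value by at most half a step (0.005); truncation would allow up to a full step. Small weights in the tail of the Gaussian are affected most in relative terms, because they collapse to 0 or 0.01.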

Table 3.

Recognition results for different features.

| Selected Features | TP | TN | FP | FN | Sensitivity | Specificity | Performance |
|---|---|---|---|---|---|---|---|
| (PT, PCY) | 128 | 209 | 21 | 13 | 0.907801 | 0.908696 | 0.908248 |
| (PT, PAR) | 128 | 209 | 21 | 13 | 0.907801 | 0.908696 | 0.908248 |
| (PT, PS) | 128 | 215 | 15 | 13 | 0.907801 | 0.934783 | 0.921193 |
| (PT, PCY, PAR) | 128 | 209 | 21 | 13 | 0.907801 | 0.908696 | 0.908248 |
| (PT, PCY, PS) | 124 | 221 | 9 | 17 | 0.879433 | 0.96087 | 0.91925 |
| (PT, PAR, PS) | 128 | 215 | 15 | 13 | 0.907801 | 0.934783 | 0.921193 |
| (PT, PCY, PAR, PS) | 124 | 221 | 9 | 17 | 0.879433 | 0.96087 | 0.91925 |
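
The tabulated metrics can be recomputed from the confusion counts. The sketch below assumes that performance is the geometric mean of sensitivity and specificity, which matches the tabulated figures; the function name `recognition_metrics` is illustrative.

```python
import math


def recognition_metrics(tp, tn, fp, fn):
    """Return (sensitivity, specificity, performance) from confusion counts."""
    sensitivity = tp / (tp + fn)
    specificity = tn / (tn + fp)
    # Assumed definition: geometric mean of sensitivity and specificity.
    performance = math.sqrt(sensitivity * specificity)
    return sensitivity, specificity, performance


# Counts taken from the (PT, PS) and (PT, PCY, PS) rows of Table 3.
se_a, sp_a, per_a = recognition_metrics(tp=128, tn=215, fp=15, fn=13)
se_b, sp_b, per_b = recognition_metrics(tp=124, tn=221, fp=9, fn=17)
```

Comparing the two rows shows the trade-off: adding PCY raises specificity but lowers sensitivity, leaving the combined performance slightly lower.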

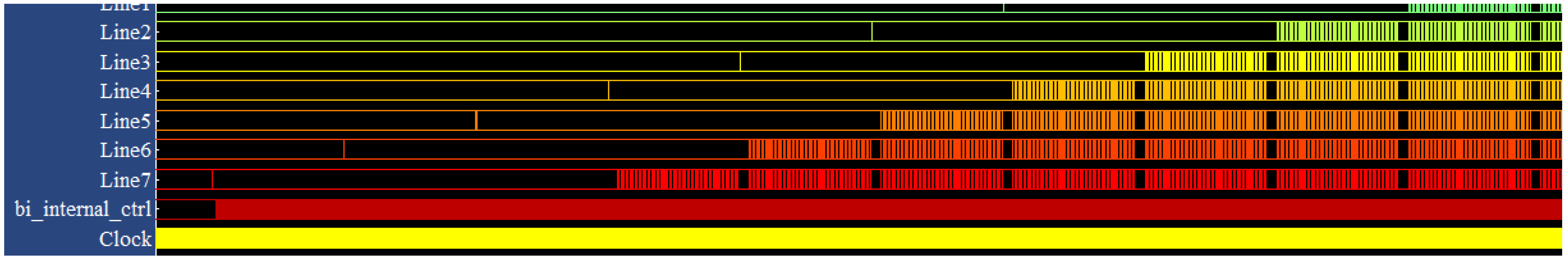

Figure 16.

Output of the wheeze detection system (wheezing case).

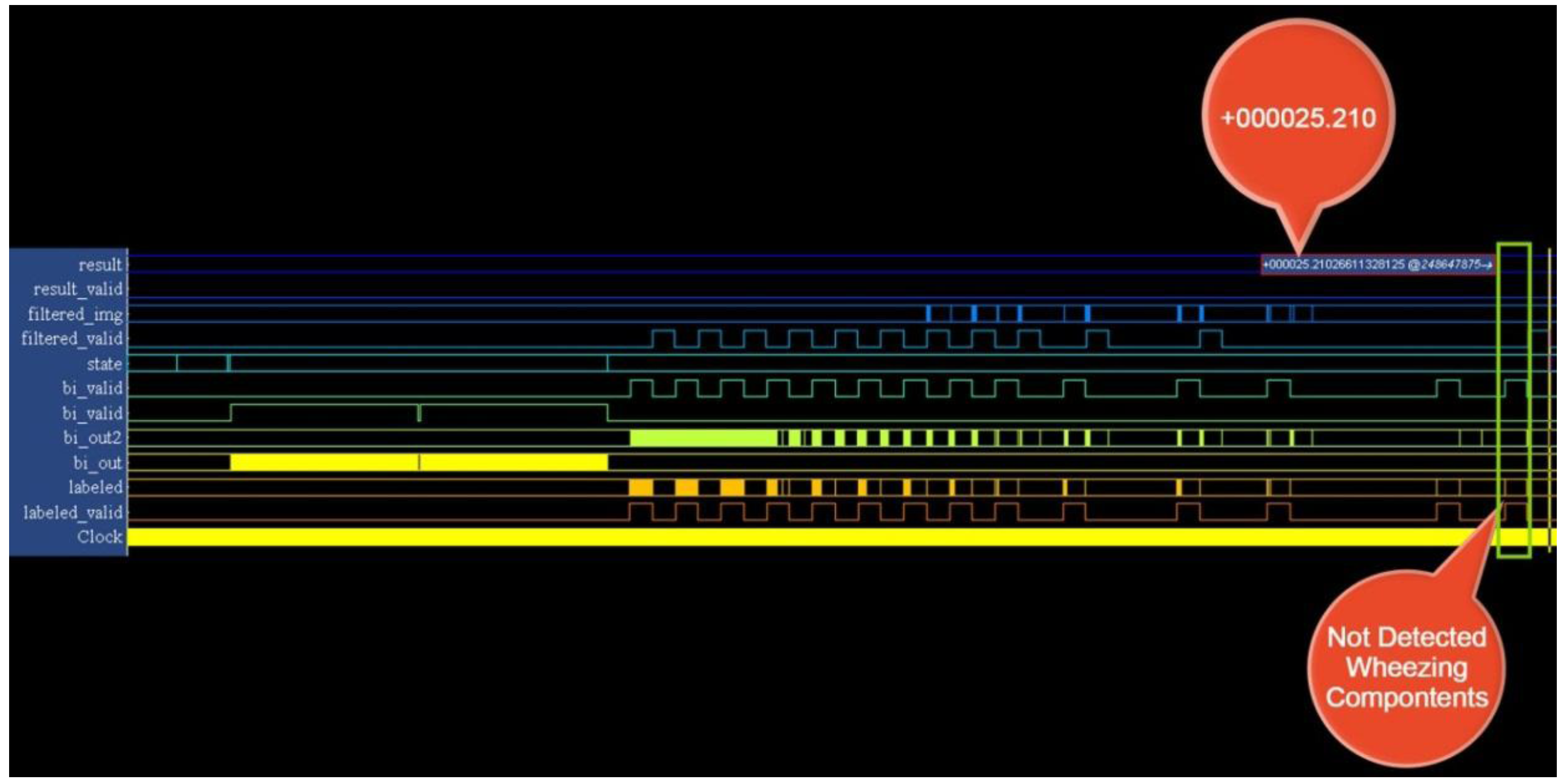

Figure 17.

Output of the wheeze detection system (normal case).

Table 4.

Cross validation.

| Cross Validation between Software and Hardware | Amount of Samples |
|---|---|
| Software classifies as normal and hardware classifies as wheeze | 6 samples |
| Software classifies as wheeze and hardware classifies as normal | 11 samples |
| Total amount of errors | 17 samples |
| Error rate (Total amount of testing samples = 371) | 0.0458 |

Table 5.

Recognition results from Matlab and hardware.

| | TP | TN | FP | FN | Sensitivity | Specificity | Performance |
|---|---|---|---|---|---|---|---|
| Matlab | 128 | 215 | 15 | 13 | 0.907801 | 0.934783 | 0.921193 |
| Hardware | 125 | 216 | 14 | 16 | 0.88652482 | 0.93913043 | 0.9124486 |

As shown in Table 5, the estimated performance of the wheezing recognition system in hardware is degraded by the quantization error. The wheezing detection procedure is considerably affected by the quantization error because it relies on estimating the gradient by calculating the first derivative of the center pixel. To reduce the effect of the quantization error, the size of the LUT of the photometric coefficients can be increased to improve precision; however, this substantially increases the demand on hardware resources. Therefore, we used a new SVM model based on features extracted directly from the hardware, thus allowing the SVM to yield correct weights.

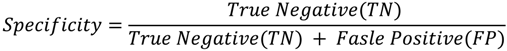

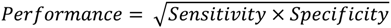

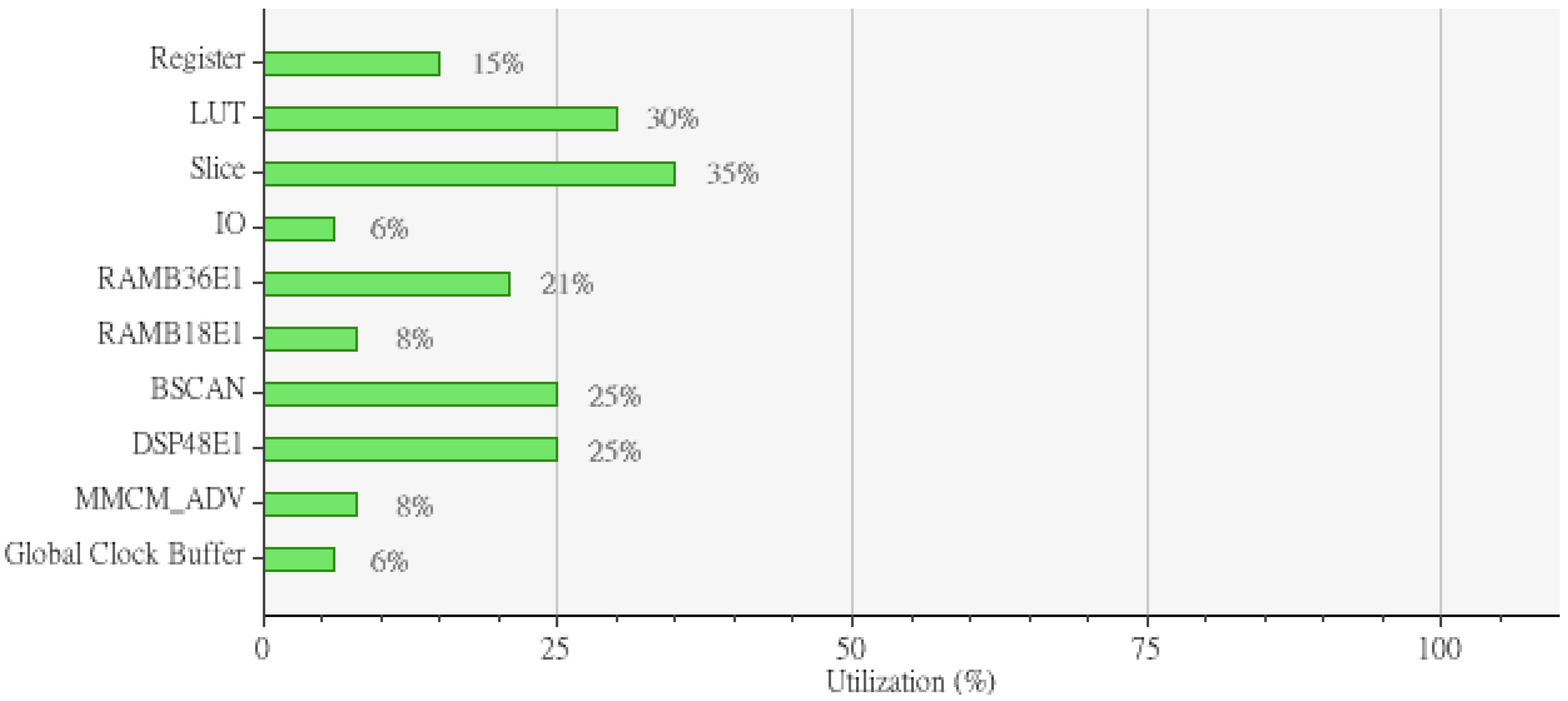

4.2. Implementation Results of the WDSIP

The WDSIP was implemented using a Xilinx Virtex-6 FPGA ML605 platform. The internal placement and routing of the FPGA is illustrated in Figure 18. The total hardware resources used by the WDSIP are listed in Table 6 and Figure 19.

Table 6.

A summary of the resource usage by WDSIP.

| | Spectrogram | Bilateral Filtering | Image Labeling System | Morphological Processing | Total Used |
|---|---|---|---|---|---|
| Slice Register | 17511 | 12881 | 9228 | 6449 | 46069 |
| Occupied Slices | 4095 | 3783 | 3436 | 3073 | 14387 |
| Block RAMs | 62 | 69 | 48 | 20 | 199 |
| DSP Slices | 57 | 66 | 55 | 14 | 192 |

Figure 18.

FPGA internal placement and routing.

Figure 19.

Implemented utilization.

As shown in Table 6, spectrogram conversion used the most hardware resources in the WDSIP implementation. This is because spectrogram conversion requires collecting 8,820 points, and the resulting substantial block RAM demands were not taken into consideration when the depth of the FIFO was set to 8,820. Thus, the FIFO was switched to dual-port RAM to reduce block RAM usage. Moreover, a divider must be used to obtain the power intensities of the spectrogram, and this requires a substantial number of DSP slices.

We applied a 7 × 7 window when processing the spectrogram by using bilateral filtering, but it is necessary to process more pixels than such a window allows at a time. Although we optimized the architecture of the bilateral filter by dividing the input pixels into eight subgroups, the internal stage of the bilateral filter (i.e., the photometric filter and spatial filter) still required considerable multipliers and adders. Thus, bilateral filtering was found to be responsible for the second-highest hardware resource demands in the system.

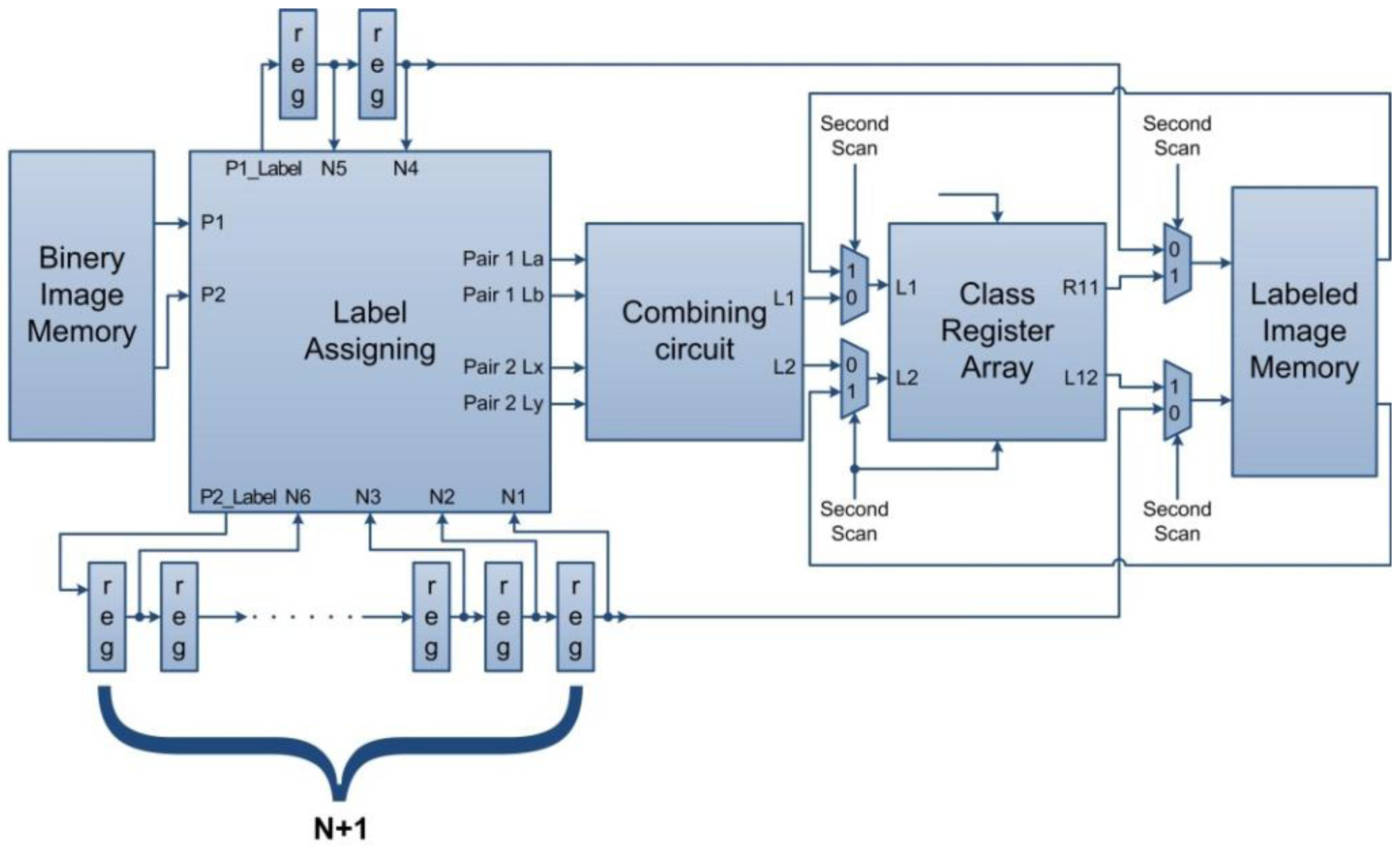

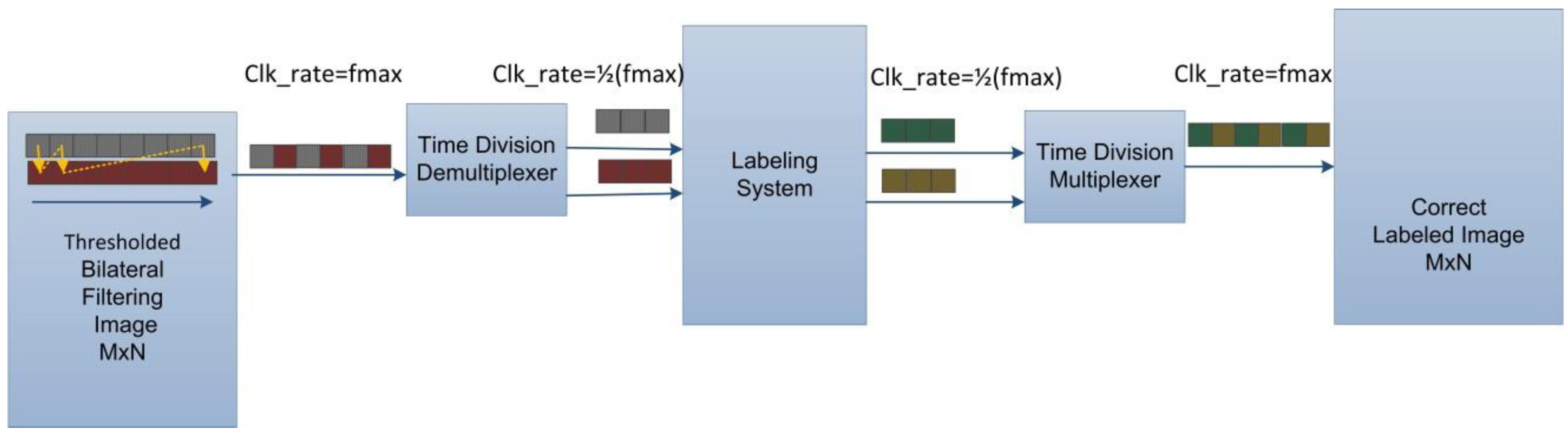

To form the image mask, the temporary image created by the image labeling system after the first raster scanning, as well as the output of the properties scanning system, must be stored in dual-port RAM. To streamline operation and reduce processing time, we implemented additional dual-port RAM, as shown in Figure 12. The system requires three dual-port RAM modules to store these images, requiring a substantial amount of block memory.

Implemented in hardware, the WDSIP reached a maximum operating frequency of 51.97 MHz; a 2-second breath recording can thus be analyzed for wheezing in 0.07956 seconds. This is adequate for high-speed wheezing detection. We analyzed the power consumption of the WDSIP, and the results are shown in Table 7; the low power consumption of the WDSIP is appropriate for portable device applications. The WDSIP was compared with other portable device applications proposed in previous studies, as shown in Table 8.
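
As a rough back-of-the-envelope check, the reported figures imply the cycle count, real-time margin, and energy per segment computed below. The constants are the values reported in this section; treating the total in Table 7 as the power drawn throughout the 0.07956-second analysis is an assumption.

```python
CLOCK_HZ = 51.97e6        # reported maximum operating frequency
PROCESS_S = 0.07956       # reported time to analyze one segment
SEGMENT_S = 2.0           # length of one breath-sound segment
TOTAL_POWER_W = 0.15673   # total power from Table 7 (assumed constant)

cycles_per_segment = CLOCK_HZ * PROCESS_S        # about 4.13 million cycles
real_time_factor = SEGMENT_S / PROCESS_S         # about 25x faster than real time
energy_per_segment_j = TOTAL_POWER_W * PROCESS_S # about 12.5 mJ per segment

print(f"cycles per segment: {cycles_per_segment:,.0f}")
print(f"real-time factor:   {real_time_factor:.1f}x")
print(f"energy per segment: {energy_per_segment_j * 1e3:.2f} mJ")
```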

Table 7.

A summary of power consumption of WDSIP.

| | Power (W) |
|---|---|
| Logic Power | 0.13203 |
| Signal Power | 0.02470 |
| Total | 0.15673 |

Table 8.

Comparison with previous studies.

| | Bahoura [15] | Lin et al. [27] | Zhang et al. [14] | Yu et al. [13] | This Study |
|---|---|---|---|---|---|
| Method | GMM + MFCC | MA + BPNN | Sampling entropy | Correlation coefficient | Bilateral filter + SVMs |
| Performance | SE = 0.946, SP = 0.919, PER = 0.932 | SE = 1.0, SP = 0.895, PER = 0.946 | Not mentioned | SE = 0.83, SP = 0.86, PER = 0.844 | SE = 0.887, SP = 0.939, PER = 0.912 |
| Platform | Laptop | Laptop | Laptop and PDA | Laptop and PDA | Standalone FPGA system |
| Speed | Slow | Slow | Fast | Fast | Very Fast |