Comparison of CBERS-04, GF-1, and GF-2 Satellite Panchromatic Images for Mapping Quasi-Circular Vegetation Patches in the Yellow River Delta, China

Abstract

1. Introduction

2. Materials and Methods

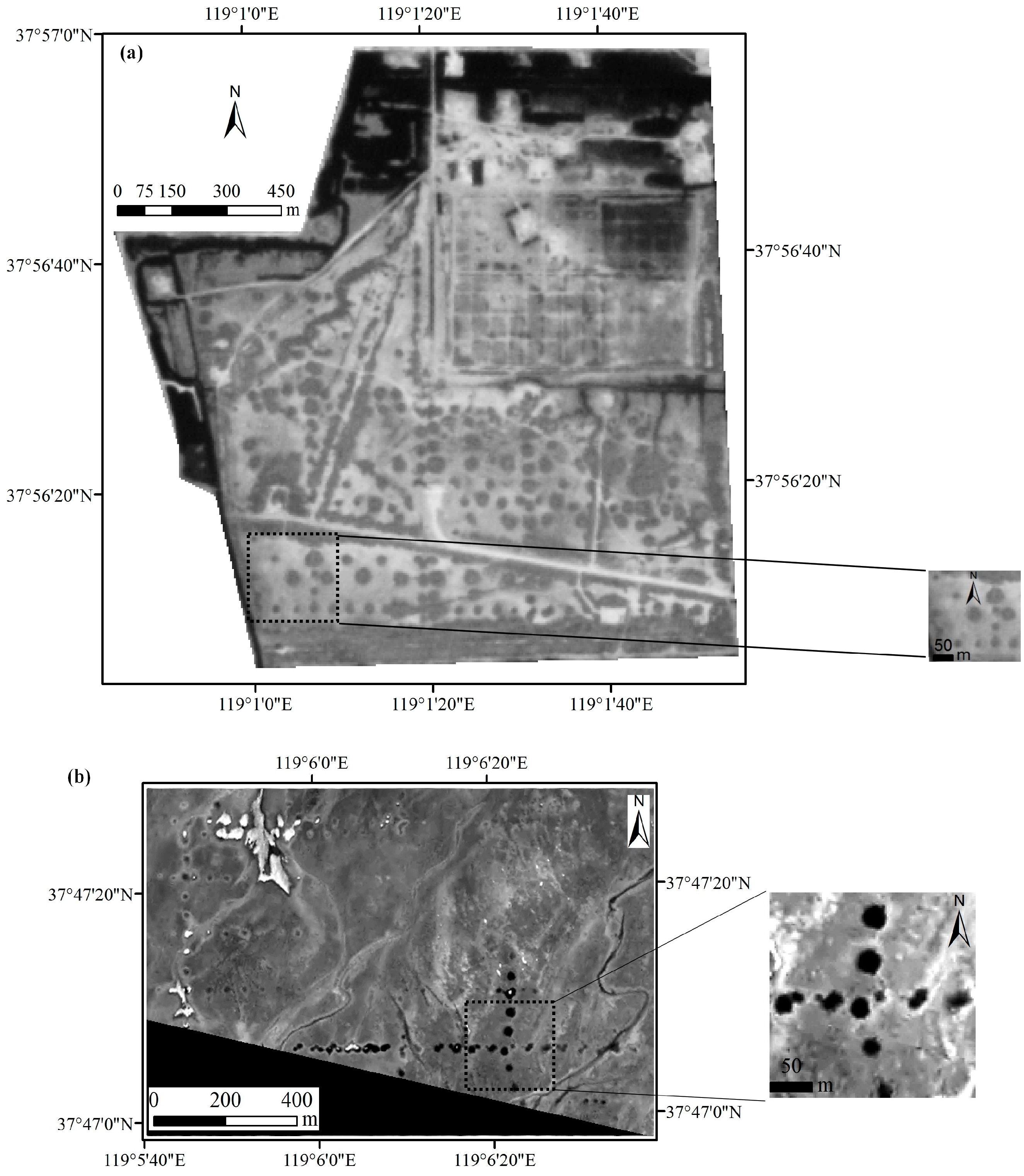

2.1. Study Area

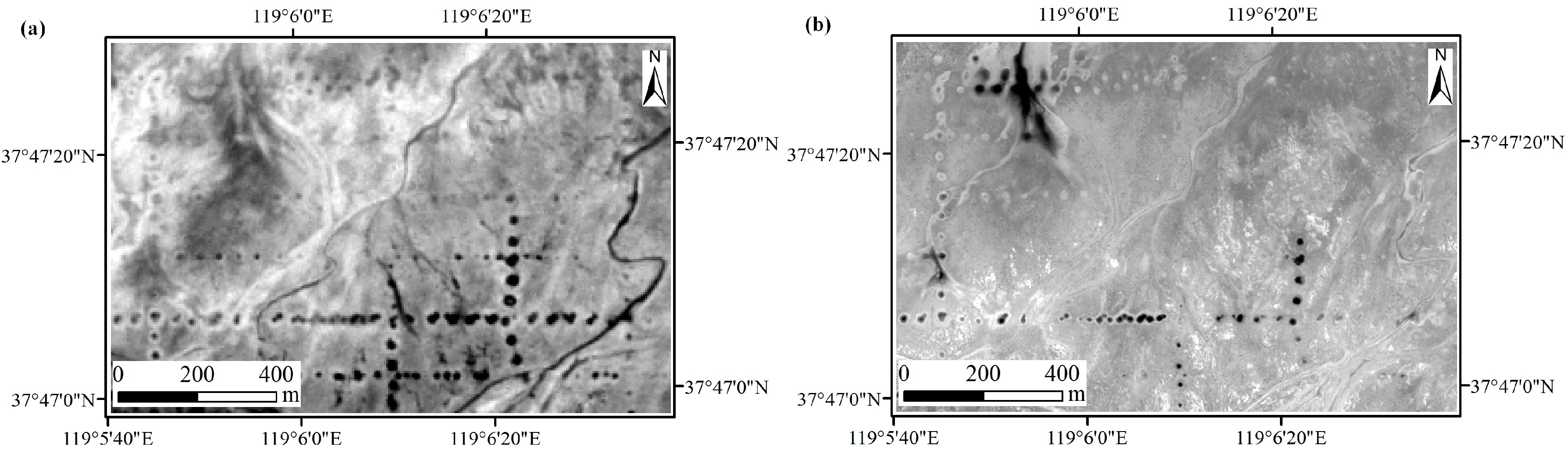

2.2. Remote Sensing Data and Pre-Processing

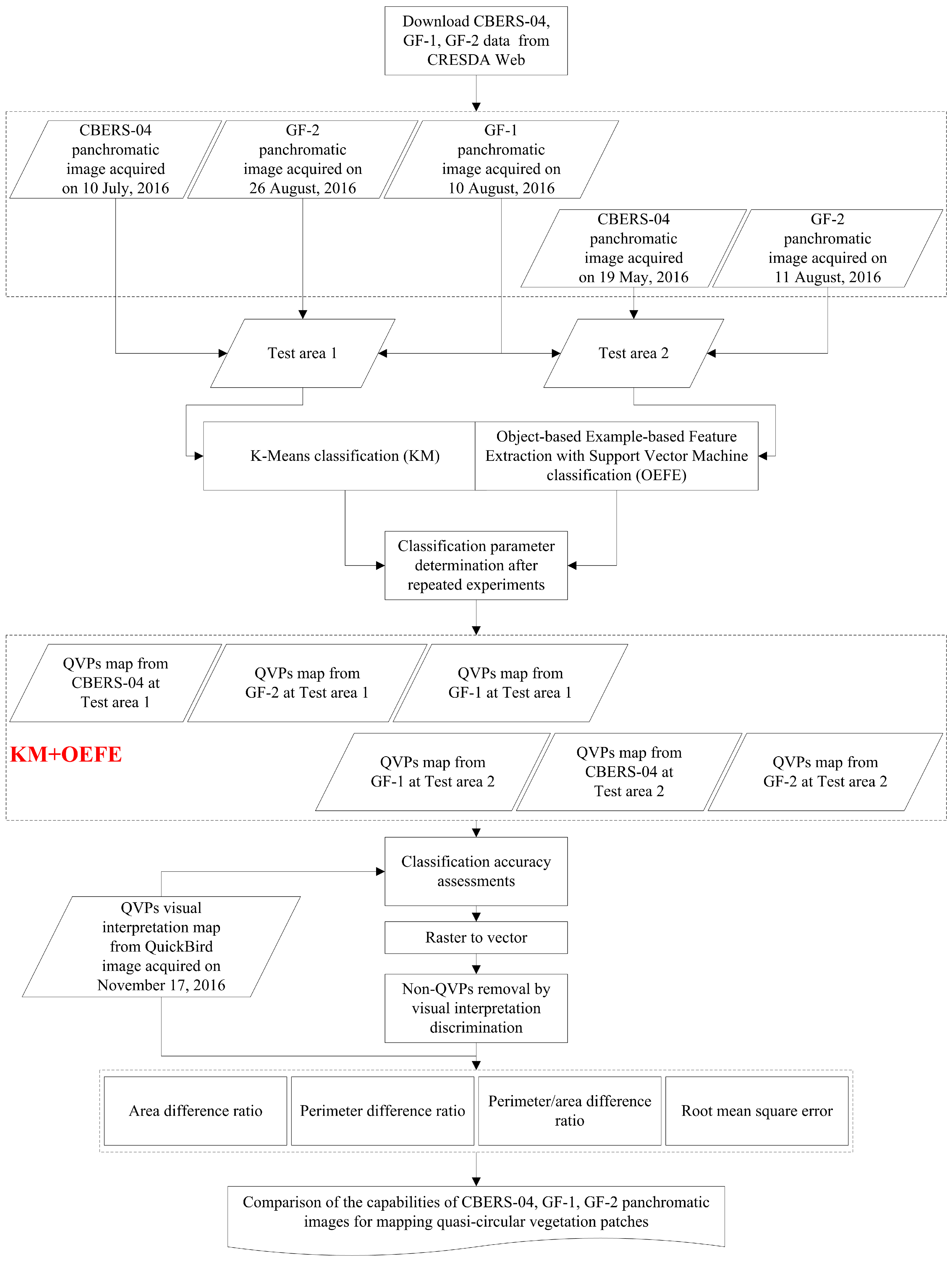

2.3. Vegetation Patch Classification

3. Results

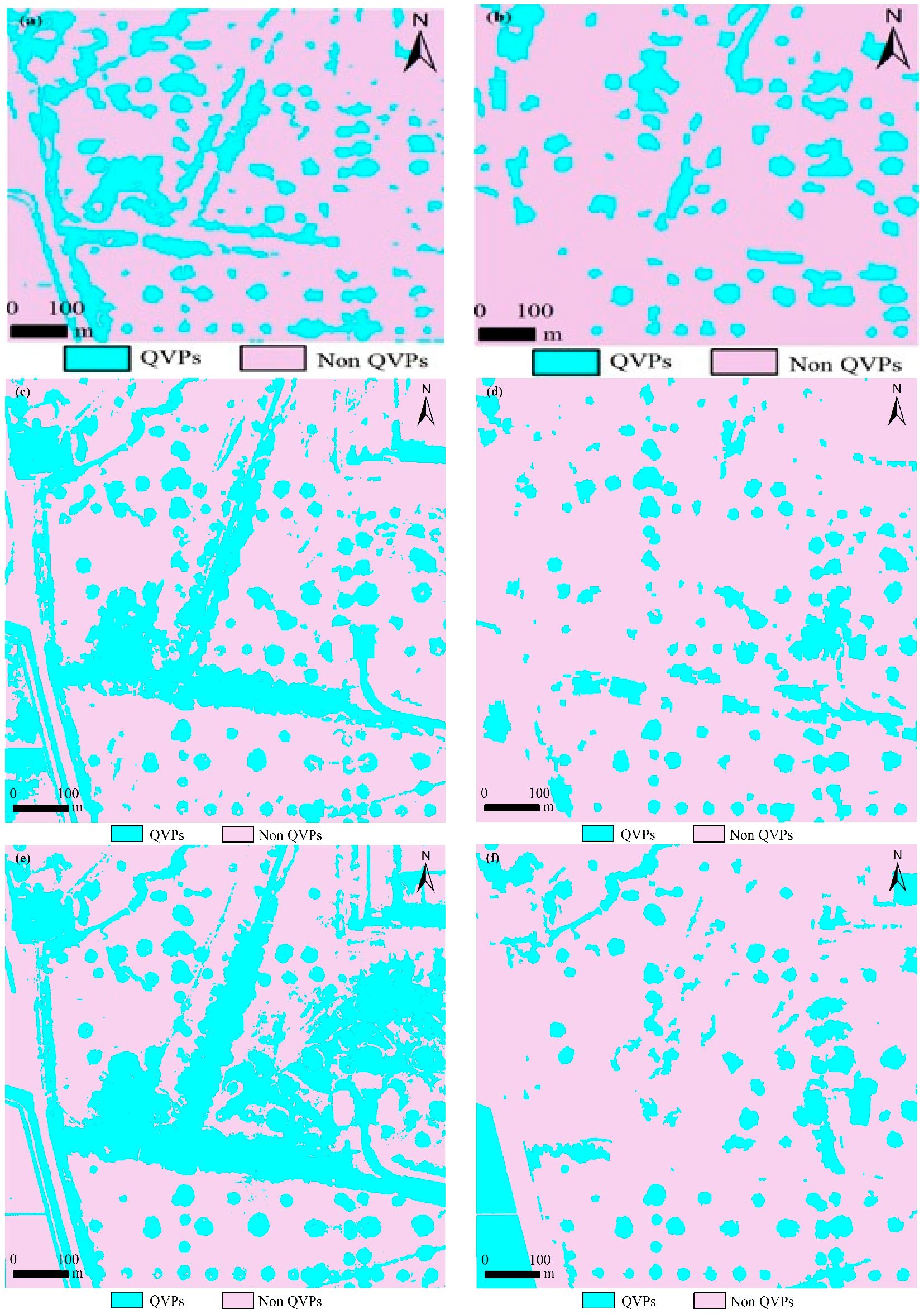

3.1. K-Means Unsupervised Classification
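As a minimal sketch of the k-means idea on a single panchromatic band, the code below assigns each pixel value to the nearest cluster centre and moves each centre to the mean of its members until the assignments stop changing. The function name `kmeans_1d`, the evenly spaced initialisation, and the default k = 2 are assumptions for illustration only. They are not the authors' software or parameter settings.

```python
def kmeans_1d(values, k=2, max_iter=100):
    """Cluster scalar pixel values (e.g. panchromatic DNs) with Lloyd's algorithm.

    Hypothetical helper for illustration. Returns (centroids, labels), where
    labels[i] is the index of the centroid assigned to values[i].
    """
    lo, hi = min(values), max(values)
    # Assumed initialisation: k centres evenly spaced across the grey-value range.
    centroids = [lo + (j + 0.5) * (hi - lo) / k for j in range(k)]
    labels = [0] * len(values)
    for _ in range(max_iter):
        # Assignment step: each pixel joins its nearest centre.
        labels = [min(range(k), key=lambda j: abs(v - centroids[j])) for v in values]
        # Update step: each centre moves to the mean of its members;
        # an empty cluster keeps its previous centre.
        updated = []
        for j in range(k):
            members = [v for v, lab in zip(values, labels) if lab == j]
            updated.append(sum(members) / len(members) if members else centroids[j])
        if updated == centroids:
            break  # assignments have stabilised
        centroids = updated
    return centroids, labels


if __name__ == "__main__":
    # Invented grey values: three dark pixels and three bright pixels.
    print(kmeans_1d([10, 12, 11, 200, 210, 205]))
```

The clusters carry no class meaning by themselves; deciding which cluster corresponds to vegetation patches has to be done by inspecting the image.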

3.2. Object-Based Example-Based Feature Extraction

4. Discussion

4.1. K-Means Unsupervised Classification and Object-Based Example-Based Feature Extraction Approaches

4.2. Quasi-Circular Vegetation Patch Identification with GF-1, GF-2, and CBERS-04

5. Conclusions

Author Contributions

Funding

Conflicts of Interest


| Satellite | Band No. | Spectral Range (nm) | Spatial Resolution (m) | Acquisition Date |
|---|---|---|---|---|
| CBERS-04 | 1 | 510–850 | 5 | 19 May and 10 July 2016 |
| GF-1 | 1 | 450–900 | 2 | 10 August 2016 |
| GF-2 | 1 | 450–900 | 0.8 | 11 and 26 August 2016 |
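To make the resolution gap in this table concrete, the sketch below estimates how many pixels fall on an idealised circular patch at each sensor's panchromatic pixel size. The 30 m diameter is a hypothetical value chosen only for illustration, not a measured patch size from the study.

```python
import math

# Panchromatic pixel sizes (m) as listed in the table above.
PIXEL_SIZE_M = {"CBERS-04": 5.0, "GF-1": 2.0, "GF-2": 0.8}


def pixels_across(diameter_m, pixel_size_m):
    """Number of pixels spanning the diameter of a circular patch."""
    return diameter_m / pixel_size_m


def pixels_covering(diameter_m, pixel_size_m):
    """Approximate pixel count of a circular patch (patch area / pixel area)."""
    area_m2 = math.pi * (diameter_m / 2) ** 2
    return area_m2 / pixel_size_m ** 2


if __name__ == "__main__":
    diameter = 30.0  # hypothetical patch diameter in metres
    for sensor, size in PIXEL_SIZE_M.items():
        print(f"{sensor}: {pixels_across(diameter, size):.1f} px across, "
              f"{pixels_covering(diameter, size):.0f} px in total")
```

Because pixel area scales with the square of pixel size, one GF-2 pixel covers (5/0.8)² ≈ 39 times less ground than one CBERS-04 pixel, so a patch of a given size is represented by about 39 times as many GF-2 pixels.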

| Test Area | Class | CBERS-04 PA (%) | CBERS-04 UA (%) | GF-1 PA (%) | GF-1 UA (%) | GF-2 PA (%) | GF-2 UA (%) |
|---|---|---|---|---|---|---|---|
| 1 | QVPs | 58.57 | 97.16 | 62.71 | 81.76 | 77.62 | 87.61 |
| 1 | Non-QVPs | 97.56 | 62.35 | 81.11 | 61.69 | 85.14 | 73.76 |
| 1 | OA (%) | 74.67 | | 70.54 | | 80.82 | |
| 1 | Kappa | 0.52 | | 0.42 | | 0.62 | |
| 2 | QVPs | 59.3 | 95.83 | 46.89 | 97.46 | 39.34 | 64.34 |
| 2 | Non-QVPs | 97.3 | 69.57 | 98.84 | 66.23 | 79.49 | 58.22 |
| 2 | OA (%) | 77.88 | | 73.55 | | 60.03 | |
| 2 | Kappa | 0.56 | | 0.46 | | 0.19 | |

PA: producer's accuracy; UA: user's accuracy; OA: overall accuracy; QVPs: quasi-circular vegetation patches. OA and Kappa are single values per sensor and test area and are listed in the PA column.
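PA, UA, OA, and kappa are standard confusion-matrix measures. As a minimal sketch, assuming a matrix with reference classes in rows and mapped classes in columns (an orientation chosen here, not stated in the source), they can be computed as follows. The function name is hypothetical and the 2 × 2 counts in the tests are invented for illustration; they are not the study's data.

```python
def accuracy_metrics(cm):
    """Accuracy measures for a square confusion matrix (hypothetical helper).

    cm[i][j] is the number of reference samples of class i that were mapped
    as class j. Returns per-class producer's (PA) and user's (UA) accuracy,
    overall accuracy (OA) and Cohen's kappa, all as fractions.
    """
    k = len(cm)
    n = sum(sum(row) for row in cm)
    row_tot = [sum(cm[i]) for i in range(k)]                       # reference totals
    col_tot = [sum(cm[i][j] for i in range(k)) for j in range(k)]  # mapped totals
    diag = [cm[i][i] for i in range(k)]
    pa = [diag[i] / row_tot[i] for i in range(k)]  # 1 - omission error
    ua = [diag[j] / col_tot[j] for j in range(k)]  # 1 - commission error
    oa = sum(diag) / n
    # Agreement expected by chance, from the marginal totals.
    pe = sum(row_tot[i] * col_tot[i] for i in range(k)) / n ** 2
    kappa = (oa - pe) / (1 - pe)
    return {"PA": pa, "UA": ua, "OA": oa, "kappa": kappa}


if __name__ == "__main__":
    # Invented counts: class 0 = QVPs, class 1 = non-QVPs.
    print(accuracy_metrics([[50, 10], [5, 35]]))
```

For the invented matrix `[[50, 10], [5, 35]]` this gives OA = 0.85 and kappa ≈ 0.69, which shows how kappa discounts the agreement expected from the class totals alone.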

| Item | Test Area | GF-2 | GF-1 | CBERS-04 |
|---|---|---|---|---|
| RMSE in area (m²) | 1 | 243.68 | 330.18 | 352.18 |
| RMSE in area (m²) | 2 | 165.43 | 255.83 | 169.16 |
| RMSE in perimeter (m) | 1 | 32.31 | 17.88 | 42.05 |
| RMSE in perimeter (m) | 2 | 52.16 | 45.37 | 32.88 |
| RMSE in perimeter/area (m⁻¹) | 1 | 0.15 | 0.19 | 0.14 |
| RMSE in perimeter/area (m⁻¹) | 2 | 0.36 | 0.57 | 0.13 |
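RMSE summarises the differences between the mapped and reference measurements of the same patches. The sketch below assumes that each mapped patch has already been matched to one reference patch; the matching step is not shown, and the function names and values are hypothetical.

```python
import math


def rmse(mapped, reference):
    """Root-mean-square error between paired measurements of the same patches."""
    if len(mapped) != len(reference) or not mapped:
        raise ValueError("need two non-empty lists of equal length")
    squared = [(m - r) ** 2 for m, r in zip(mapped, reference)]
    return math.sqrt(sum(squared) / len(squared))


def perimeter_area_ratios(areas_m2, perimeters_m):
    """Per-patch perimeter/area ratio (m^-1), a simple shape descriptor."""
    return [p / a for a, p in zip(areas_m2, perimeters_m)]


if __name__ == "__main__":
    # Hypothetical matched patch areas (m^2): mapped vs reference.
    print(rmse([110.0, 90.0], [100.0, 100.0]))  # 10.0
```

For a circle of radius r the perimeter/area ratio is 2πr / πr² = 2/r, so the ratio is larger for smaller patches and increases when a mapped outline is more jagged than the true boundary.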

| Test Area | Class | CBERS-04 PA (%) | CBERS-04 UA (%) | GF-1 PA (%) | GF-1 UA (%) | GF-2 PA (%) | GF-2 UA (%) |
|---|---|---|---|---|---|---|---|
| 1 | QVPs | 58.03 | 99.69 | 63.62 | 93.14 | 69.59 | 85.3 |
| 1 | Non-QVPs | 99.74 | 62.56 | 93.67 | 65.59 | 83.77 | 67.06 |
| 1 | OA (%) | 75.25 | | 76.4 | | 75.62 | |
| 1 | Kappa | 0.53 | | 0.54 | | 0.52 | |
| 2 | QVPs | 38.12 | 93.41 | 51.99 | 96.34 | 54.5 | 96.64 |
| 2 | Non-QVPs | 97.19 | 60.03 | 98.13 | 68.3 | 98.22 | 69.65 |
| 2 | OA (%) | 66.99 | | 75.67 | | 77.03 | |
| 2 | Kappa | 0.35 | | 0.51 | | 0.53 | |

| Item | Test Area | GF-2 | GF-1 | CBERS-04 |
|---|---|---|---|---|
| RMSE in area (m²) | 1 | 265.60 | 357.01 | 556.16 |
| RMSE in area (m²) | 2 | 183.13 | 182.52 | 224.87 |
| RMSE in perimeter (m) | 1 | 37.55 | 27.90 | 50.38 |
| RMSE in perimeter (m) | 2 | 40.23 | 24.28 | 32.92 |
| RMSE in perimeter/area (m⁻¹) | 1 | 0.21 | 0.10 | 0.04 |
| RMSE in perimeter/area (m⁻¹) | 2 | 0.22 | 0.21 | 0.06 |
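Patch areas and perimeters like those compared in these tables can be derived from a classified raster. The sketch below shows one simple way to do this, assuming a binary QVP mask, 4-connected patches, and a perimeter counted as the number of pixel edges that face background or the image border. The function name is hypothetical, and this is not necessarily how the authors measured their patches (they may have worked from vectorised polygons).

```python
from collections import deque


def patch_geometry(mask, pixel_size_m):
    """Area (m^2) and perimeter (m) of each 4-connected patch in a binary mask.

    Hypothetical helper: mask is a list of rows of 0/1 values. Patches are
    returned in row-major order of their first pixel.
    """
    rows, cols = len(mask), len(mask[0])
    seen = [[False] * cols for _ in range(rows)]
    patches = []
    for r0 in range(rows):
        for c0 in range(cols):
            if not mask[r0][c0] or seen[r0][c0]:
                continue
            # Breadth-first flood fill of one patch.
            seen[r0][c0] = True
            queue = deque([(r0, c0)])
            n_pixels, n_edges = 0, 0
            while queue:
                r, c = queue.popleft()
                n_pixels += 1
                for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
                    nr, nc = r + dr, c + dc
                    inside = 0 <= nr < rows and 0 <= nc < cols
                    if not inside or not mask[nr][nc]:
                        n_edges += 1  # this pixel side lies on the patch boundary
                    elif not seen[nr][nc]:
                        seen[nr][nc] = True
                        queue.append((nr, nc))
            patches.append((n_pixels * pixel_size_m ** 2, n_edges * pixel_size_m))
    return patches


if __name__ == "__main__":
    demo = [[1, 1, 0, 0],
            [1, 1, 0, 1],
            [0, 0, 0, 1]]
    print(patch_geometry(demo, 2.0))  # GF-1-sized pixels
```

Edge counting is a deliberately crude perimeter estimator: for a digitised disc of radius r it approaches the perimeter of the enclosing square (8r) rather than 2πr, overestimating round outlines by roughly 27%.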

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Liu, Q.; Huang, C.; Liu, G.; Yu, B. Comparison of CBERS-04, GF-1, and GF-2 Satellite Panchromatic Images for Mapping Quasi-Circular Vegetation Patches in the Yellow River Delta, China. Sensors 2018, 18, 2733. https://doi.org/10.3390/s18082733
