Exploring 3D Human Action Recognition: from Offline to Online

Abstract

1. Introduction

2. Related Studies

2.1. Offline Action Recognition

2.2. Online Action Recognition

3. Proposed Methods

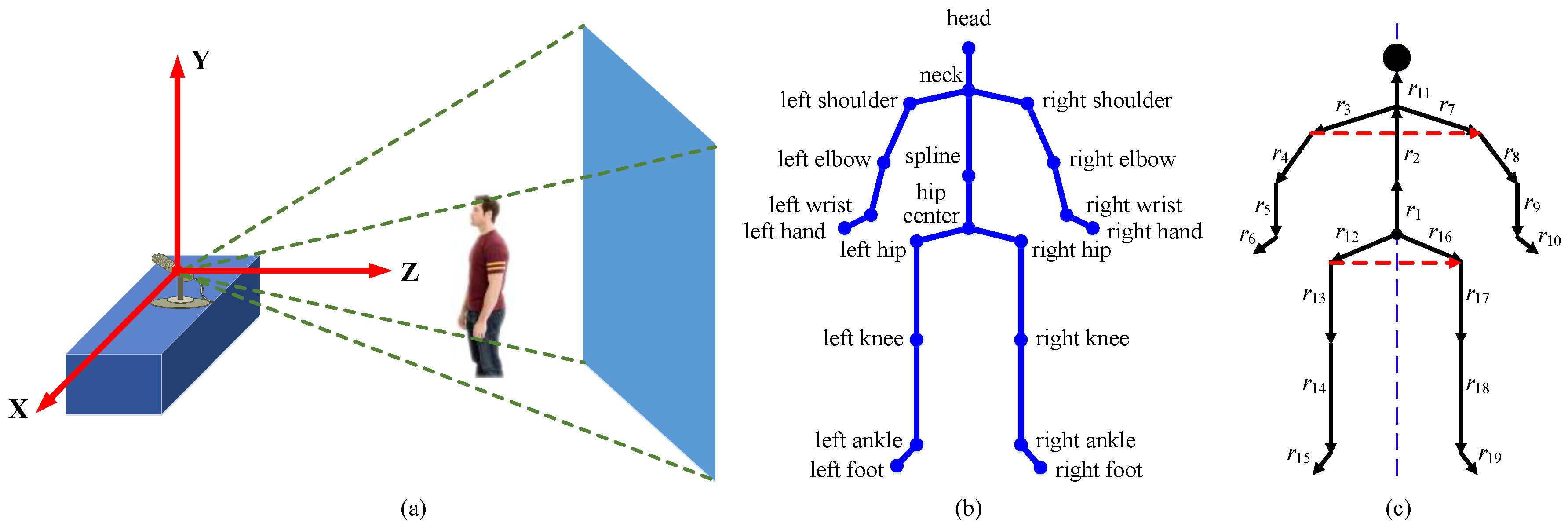

3.1. Skeletal Sequence Preprocessing

3.1.1. Denoising

3.1.2. Translation Invariance

3.1.3. Human Body Size Invariance

3.1.4. Viewpoint Invariance

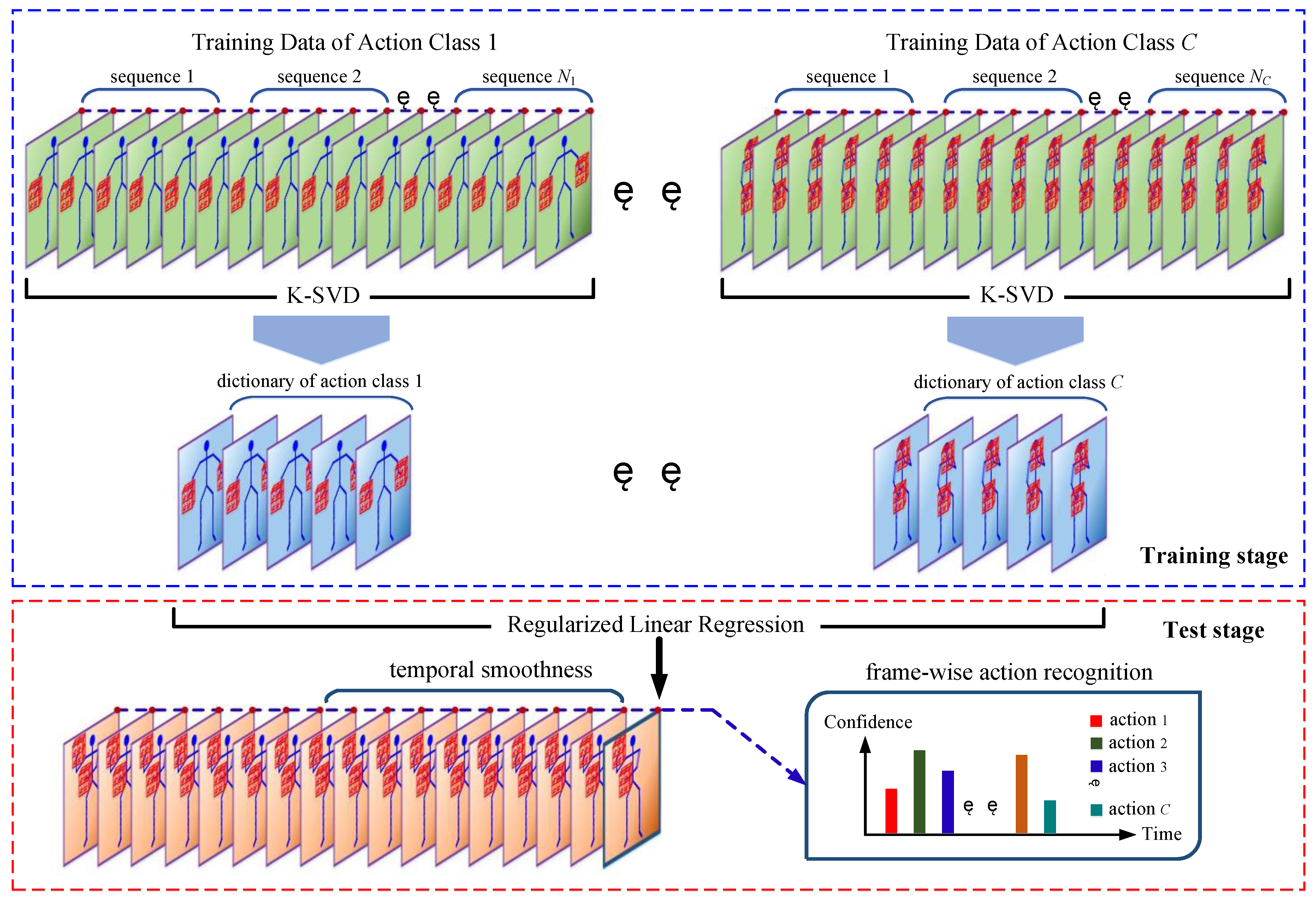

3.2. K-SVD- and RLR-Based Methods

3.2.1. Pairwise Relative Joint Positions

3.2.2. Local Occupancy Patterns

3.2.3. Dictionary Learning

3.2.4. Frame-Wise Action Recognition

3.2.5. Temporal Smoothness

- Step 1. Let i = 1, 2, …, C-1 denote the labels of the actions of interest, and let C denote the label of meaningless or undefined actions. confThresh is a preset threshold with a reference value of 2/C. seq-1 and seq-2 denote the sub-sequences within two adjacent sliding windows, where seq-2 immediately follows seq-1 in time. Both windows have the same size, which equals the sliding step.

- Step 2. Compute the average confidence of the ith action over seq-k (k = 1, 2 and i = 1, 2, …, C).

- Step 3. class{seq-k} ← the action label with the highest average confidence in seq-k (k = 1, 2).

- Step 4. If the highest average confidence of seq-k < confThresh, class{seq-k} ← C (k = 1, 2).

- Step 5. If class{seq-1} = class{seq-2} || class{seq-1} = C || class{seq-2} = C, return class{seq-k} (k = 1, 2). Otherwise, class{seq-1} ← C, and then return class{seq-k} (k = 1, 2).
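The five steps above can be sketched in a few lines of Python. This is only an illustrative sketch under stated assumptions, not the authors' implementation: the function name `smooth_labels` and the input layout (one row of C frame-wise confidences per frame) are hypothetical, and Step 3 is assumed to pick the label with the largest average confidence.

```python
import numpy as np


def smooth_labels(conf_seq1, conf_seq2, conf_thresh=None):
    """Return (class{seq-1}, class{seq-2}) with labels in 1..C.

    conf_seq1, conf_seq2: arrays of shape (frames, C) holding frame-wise
    confidences for two adjacent sliding windows (assumed layout).
    Label C stands for meaningless or undefined actions.
    """
    windows = [np.asarray(c, dtype=float) for c in (conf_seq1, conf_seq2)]
    num_classes = windows[0].shape[1]
    if conf_thresh is None:
        conf_thresh = 2.0 / num_classes  # reference value from Step 1
    background = num_classes  # label C

    labels = []
    for conf in windows:
        avg = conf.mean(axis=0)          # Step 2: average confidence per action
        label = int(np.argmax(avg)) + 1  # Step 3: assumed argmax, 1-based label
        if avg.max() < conf_thresh:      # Step 4: too uncertain, mark as C
            label = background
        labels.append(label)

    # Step 5: keep both labels if they agree or if either is C;
    # otherwise relabel seq-1 as C.
    if not (labels[0] == labels[1] or background in labels):
        labels[0] = background
    return tuple(labels)
```

With C = 4 the default threshold is 0.5. Two confident windows that disagree cause the earlier window to be relabeled as C, while agreeing windows keep their labels.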

3.3. Depth Motion Map-Based Methods

3.3.1. Depth Motion Map

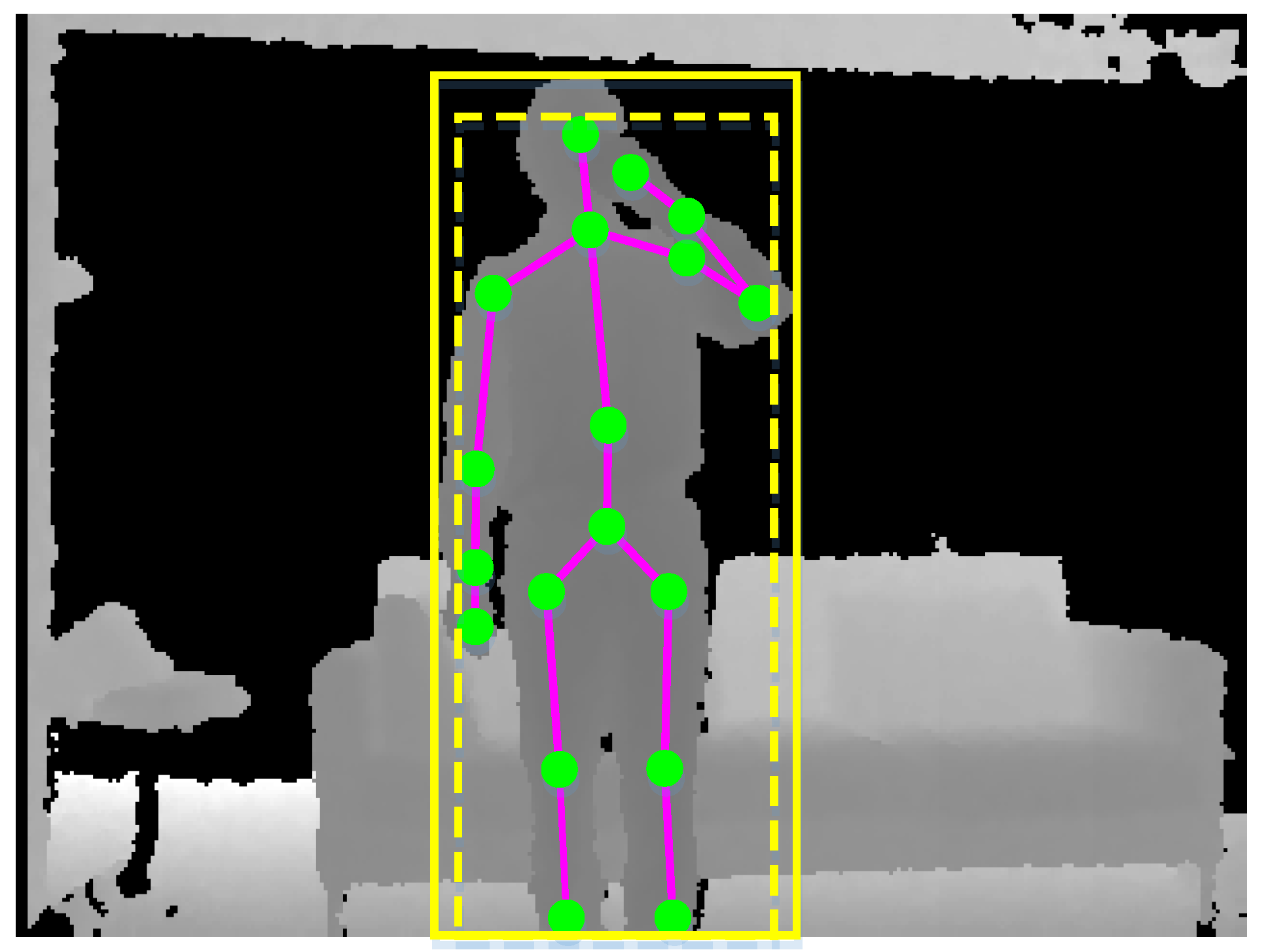

3.3.2. Human Foreground Extraction

3.3.3. Offline and Online Segmentation

| Algorithm 1 Offline segmentation |


| Algorithm 2 Online segmentation |


3.3.4. Feature Fusion

3.3.5. Feature Classification

4. Experiments

4.1. MSR 3D Online Action Dataset

4.1.1. Quantitative Comparison

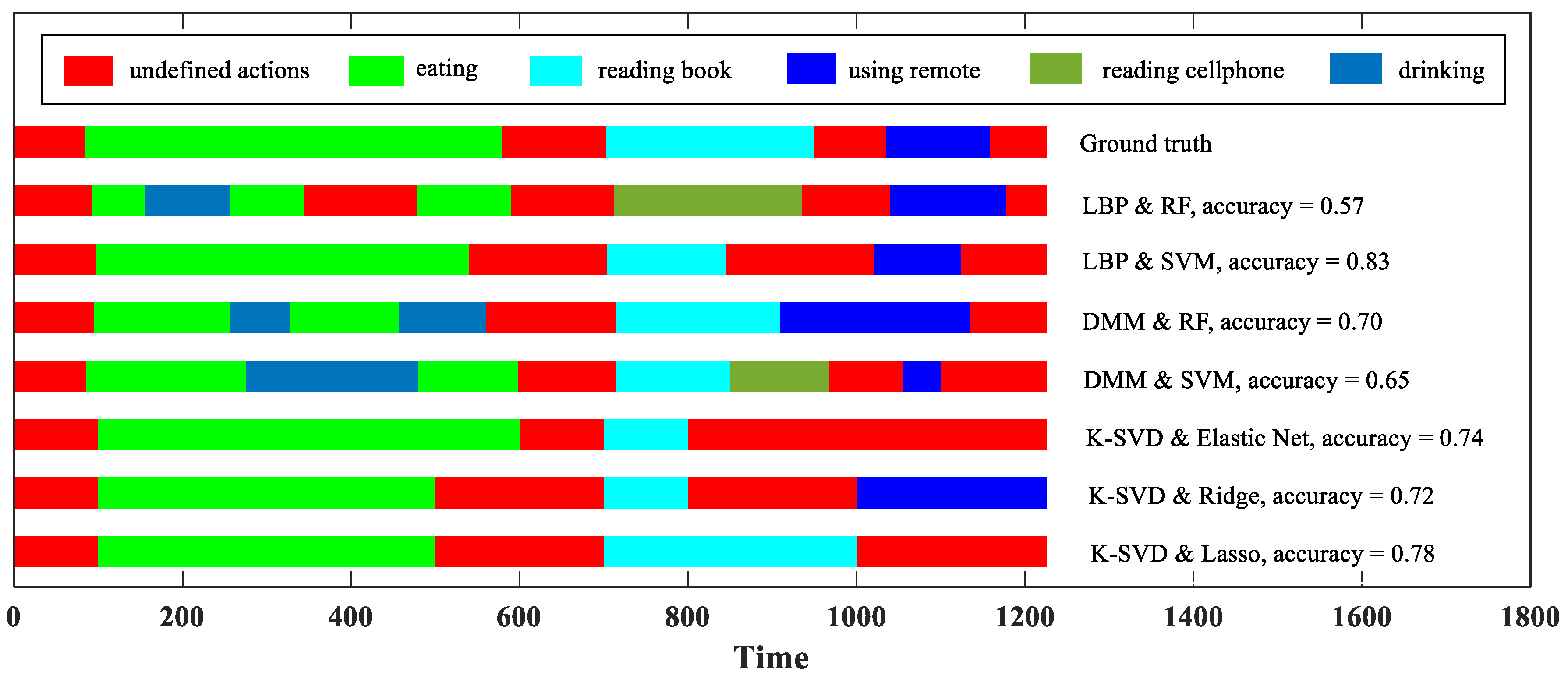

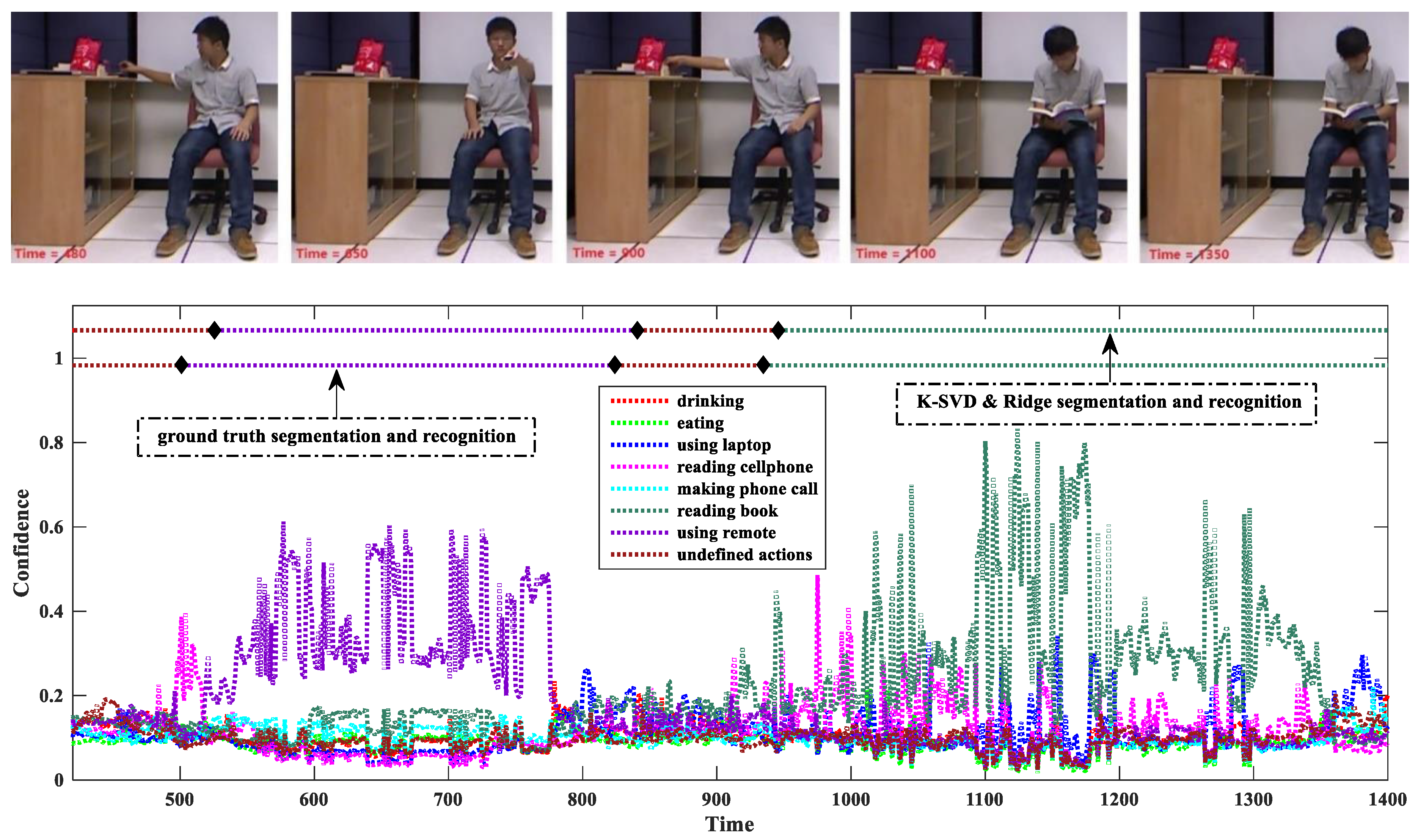

4.1.2. Action Recognition Visualization

4.1.3. Temporal Smoothness

4.2. MSR Daily Activity 3D Dataset

4.2.1. Quantitative Comparison

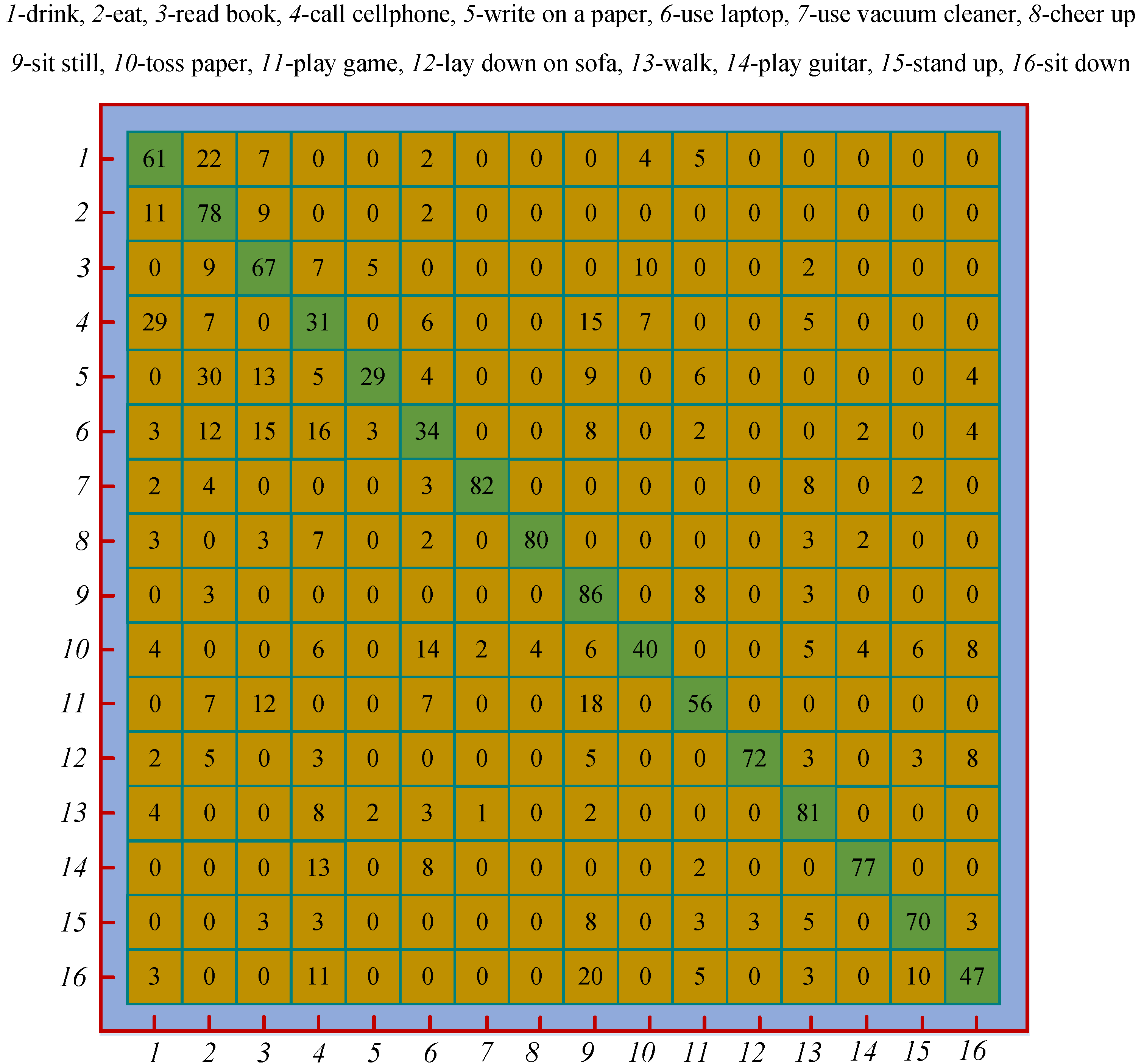

4.2.2. Confusion Matrix

4.3. Real-Time Performance

5. Conclusions

Acknowledgments

Author Contributions

Conflicts of Interest

References

1. Ye, M.; Zhang, Q.; Wang, L.; Zhu, J.; Yang, R.; Gall, G. A survey on human motion analysis from depth data. In Time-of-Flight and Depth Imaging. Sensors, Algorithms, and Applications; Grzegorzek, M., Theobalt, C., Koch, R., Kolb, A., Eds.; Springer: Berlin/Heidelberg, Germany, 2013; Volume 8200, pp. 149–187.
2. Bux, A.; Angelov, P.; Habib, Z. Vision based human activity recognition: A review. In Advances in Computational Intelligence Systems; Angelov, P., Gegov, A., Jayne, C., Shen, Q., Eds.; Springer: Cham, Switzerland, 2017; Volume 513, pp. 341–371.
3. Herath, S.; Harandi, M.; Porikli, F. Going deeper into action recognition: A survey. Image Vision Comput. 2017, 60, 4–21.
4. Shotton, J.; Fitzgibbon, A.; Cook, M.; Sharp, T.; Finocchio, M.; Moore, R.; Kipman, A.; Blake, A. Real-time human pose recognition in parts from a single depth image. IEEE Comput. Vis. Pattern Recognit. 2011, 56, 1297–1304.
5. Luo, J.; Wang, W.; Qi, H. Group sparsity and geometry constrained dictionary learning for action recognition from depth maps. In Proceedings of the IEEE International Conference on Computer Vision, Sydney, NSW, Australia, 1–8 December 2013; pp. 1809–1816.
6. Vemulapalli, R.; Arrate, F.; Chellappa, R. Human action recognition by representing 3D human skeletons as points in a Lie group. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 23–28 June 2014; pp. 588–595.
7. Ellis, C.; Masood, S.; Tappen, M.J.L., Jr.; Sukthankar, R. Exploring the trade-off between accuracy and observational latency in action recognition. Int. J. Comput. Vis. 2013, 101, 420–436.
8. Devanne, M.; Wannous, H.; Berretti, S.; Pala, P.; Daoudi, M.; Bimbo, A.D. 3-D human action recognition by shape analysis of motion trajectories on Riemannian manifold. IEEE Trans. Syst. Man Cybernetics 2014, 45, 1023–1029.
9. Amor, B.B.; Su, J.; Srivastava, A. Action recognition using rate-invariant analysis of skeletal shape trajectories. IEEE Trans. Pattern Anal. Mach. Intell. 2016, 38, 1–13.
10. Yang, X.; Tian, Y. EigenJoints-based action recognition using naïve-bayes-nearest-neighbor. In Proceedings of the IEEE Computer Vision and Pattern Recognition Workshops, Providence, RI, USA, 16–21 June 2012; pp. 14–19.
11. Zanfir, M.; Leordeanu, M.; Sminchisescu, C. The moving pose: An efficient 3D kinematics descriptor for low-latency action recognition and detection. In Proceedings of the IEEE International Conference on Computer Vision, Sydney, NSW, Australia, 1–8 December 2013; pp. 2752–2759.
12. Zhu, G.; Zhang, L.; Shen, P.; Song, J. An online continuous human action recognition algorithm based on the Kinect sensor. Sensors 2016, 16, 161.
13. Gong, D.; Medioni, G.; Zhao, X. Structured time series analysis for human action segmentation and recognition. IEEE Trans. Pattern Anal. Mach. Intell. 2014, 36, 1414–1427.
14. Evangelidis, G.D.; Singh, G.; Horaud, R. Continuous gesture recognition from articulated poses. In Computer Vision-ECCV 2014 Workshops. ECCV 2014; Lecture Notes in Computer Science; Agapito, L., Bronstein, M., Rother, C., Eds.; Springer: Cham, Switzerland, 2014; Volume 8925, pp. 595–607.
15. Li, W.; Zhang, Z.; Liu, Z. Action recognition based on a bag of 3D points. In Proceedings of the IEEE Computer Vision and Pattern Recognition Workshops, San Francisco, CA, USA, 13–18 June 2010; pp. 9–14.
16. Yang, X.; Zhang, C.; Tian, Y. Recognizing actions using depth motion maps-based histograms of oriented gradients. In Proceedings of the ACM International Conference on Multimedia, Nara, Japan, 29 October–2 November 2012; pp. 1057–1060.
17. Chen, C.; Liu, K.; Kehtarnavaz, N. Real-time human action recognition based on depth motion maps. J. Real-Time Image Process. 2013, 12, 155–163.
18. Chen, C.; Jafari, R.; Kehtarnavaz, N. Action recognition from depth sequences using depth motion maps-based local binary patterns. In Proceedings of the IEEE WACV, Waikoloa, HI, USA, 5–9 January 2015; pp. 1092–1099.
19. Wang, P.; Li, W.; Gao, Z.; Zhang, J.; Tang, C.; Ogunbona, P.O. Action recognition from depth maps using deep convolutional neural networks. IEEE Trans. Human-Machine Syst. 2016, 46, 498–509.
20. Xia, L.; Aggarwal, J.K. Spatio-temporal depth cuboid similarity feature for activity recognition using depth camera. In Proceedings of the IEEE Computer Vision and Pattern Recognition, Portland, OR, USA, 23–28 June 2013; pp. 2834–2841.
21. Eum, H.; Yoon, C.; Lee, H.; Park, M. Continuous human action recognition using depth-MHI-HOG and a spotter model. Sensors 2015, 15, 5197–5227.
22. Wang, J.; Liu, Z.; Wu, Y.; Yuan, J. Learning actionlet ensemble for 3D human action recognition. IEEE Trans. Pattern Anal. Mach. Intell. 2014, 36, 914–927.
23. Ohn-Bar, E.; Trivedi, M.M. Joint angles similarities and HOG2 for action recognition. In Proceedings of the IEEE Computer Vision and Pattern Recognition Workshops, Portland, OR, USA, 23–28 June 2013; pp. 465–470.
24. Yu, G.; Liu, Z.; Yuan, J. Discriminative orderlet mining for real-time recognition of human-object interaction. In Computer Vision-ACCV 2014. ACCV 2014; Lecture Notes in Computer Science; Cremers, D., Reid, I., Saito, H., Yang, M.H., Eds.; Springer: Cham, Switzerland, 2014; pp. 50–65.
25. Fanello, S.R.; Gori, I.; Metta, G.; Odone, F. Keep it simple and sparse: real-time action recognition. J. Mach. Learn. Res. 2013, 14, 2617–2640.
26. Wu, C.; Zhang, J.; Savarese, S.; Saxena, A. Watch-n-patch: Unsupervised understanding of actions and relations. In Proceedings of the IEEE Computer Vision and Pattern Recognition, Boston, MA, USA, 7 June 2015; pp. 4362–4370.
27. Zhou, F.; de la Torre, F.; Hodgins, J.K. Hierarchical aligned cluster analysis for temporal clustering of human motion. IEEE Trans. Pattern Anal. Mach. Intell. 2013, 35, 582–596.
28. Li, R.; Liu, Z.; Tan, J. Human motion segmentation using collaborative representations of 3D skeletal sequences. IET Comput. Vision 2007.
29. Gong, D.; Medioni, G.; Zhu, S.; Zhao, X. Kernelized temporal cut for online temporal segmentation and recognition. In Computer Vision-ECCV 2012. ECCV 2012. Lecture Notes in Computer Science; Fitzgibbon, A., Lazebnik, S., Perona, P., Sato, Y., Schmid, C., Eds.; Springer: Berlin/Heidelberg, Germany, 2012; Volume 7574.
30. Aharon, M.; Elad, M.; Bruckstein, A.M. K-SVD: An algorithm for designing of overcomplete dictionaries for sparse representation. IEEE Trans. Signal Process. 2006, 54, 4311–4322.
31. Tibshirani, R. Regression shrinkage and selection via the lasso. J. Royal Statistical Soc. B 1996, 58, 267–288.
32. Hoerl, A.; Kennard, R. Ridge regression. In Encyclopedia of Statistical Sciences; Wiley: New York, NY, USA, 1988; pp. 129–136.
33. Zou, H.; Hastie, T. Regularization and variable selection via the elastic net. J. Royal Stat. Soc. 2005, 67, 301–320.
34. Yao, B.; Jiang, X.; Khosla, A.; Lin, A.L.; Guibas, L.; Li, F. Human action recognition by learning bases of action attributes and parts. Int. J. Comput. Vis. 2011, 23, 1331–1338.
35. Gong, D.; Medioni, G. Dynamic manifold warping for view invariant action recognition. In Proceedings of the IEEE Computer Vision, Barcelona, Spain, 6–13 November 2011; pp. 571–578.
36. Devanne, M.; Berretti, S.; Pala, P.; Wannous, H.; Daoudi, M.; del Bimbo, A. Motion segment decomposition of RGB-D sequences for human behavior understanding. Pattern Recogn. 2017, 61, 222–233.
37. Sparse Models, Algorithms and Learning for Large-scale data, SMALLBox. Available online: http://small-project.eu (accessed on 21 May 2017).
38. Efron, B.; Hastie, T.; Johnstone, I.; Tibshirani, R. Least angle regression. Ann. Stat. 2004, 32, 407–451.
39. Fan, J.; Li, R. Variable selection via nonconcave penalized likelihood and its oracle properties. J. Am. Stat. Assoc. 2001, 96, 1348–1360.
40. Ojala, T.; Pietikainen, M.; Maenpaa, T. Multiresolution gray-scale and rotation invariant texture classification with local binary patterns. IEEE Trans. Pattern Analy. Mach. Intell. 2002, 24, 971–987.
41. Chang, C.C.; Lin, C.J. LIBSVM: A library for support vector machines. ACM Trans. Intell. Syst. Technol. 2011, 2, 1–27. Available online: http://www.csie.ntu.edu.tw/cjlin/libsvm (accessed on 21 May 2017).
42. Zhang, J.; Li, W.; Ogunbona, P.O.; Wang, P.; Tang, C. RGB-D-based action recognition datasets: A survey. Pattern Recogn. 2016, 60, 86–105.
43. Zhang, L.; Yang, M.; Feng, X. Sparse representation or collaborative representation: Which helps face recognition? In Proceedings of the IEEE International Conference on Computer Vision, Barcelona, Spain, 6–13 November 2011; pp. 471–478.

| Action Subset | Description (#i is the action category index) |
|---|---|
| S0 | This subset contains background actions (#10, or undefined actions), which are used only for continuous action recognition. There are 14 sequences in total. |
| S1 | This subset contains seven meaningful actions: drinking (#0), eating (#1), using laptop (#2), reading cellphone (#3), making phone call (#4), reading book (#5), and using remote (#6). There are 112 sequences in total, and each sequence contains only one action. |
| S2 | The actions in this subset are the same as in S1, but the human subjects change. There are 112 sequences in total, and each sequence contains only one action. |
| S3 | The actions in this subset are the same as in S1 and S2, but both the action execution environment and the human subjects change. There are 112 sequences in total, and each sequence contains only one action. |
| S4 | There are 36 unsegmented action sequences (#8), and each one contains multiple actions. The meaningful actions (#0-#6), as well as the background actions (#10), are recorded continuously. These sequences last from 30 s to 2 min. For evaluation purposes, each frame is manually labeled, but the boundary between two consecutive actions may not be very accurate because it is difficult to determine. |

| Method | Accuracy |
|---|---|
| K-SVD & Lasso (ours) | 0.433 |
| K-SVD & Ridge (ours) | 0.506 |
| K-SVD & Elastic Net (ours) | 0.528 |
| DMM & SVM (ours) | 0.571 |
| DMM & RF (ours) | 0.532 |
| LBP & SVM (ours) | 0.582 |
| LBP & RF (ours) | 0.543 |
| Orderlet [24] | 0.564 |
| DSTIP + DCSF [20] | 0.321 |
| Moving Pose [11] | 0.236 |
| EigenJoints [10] | 0.500 |
| DNBC [36] | 0.609 |

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Li, R.; Liu, Z.; Tan, J. Exploring 3D Human Action Recognition: from Offline to Online. Sensors 2018, 18, 633. https://doi.org/10.3390/s18020633
