Temporal and Fine-Grained Pedestrian Action Recognition on Driving Recorder Database

Abstract

1. Introduction

2. Related Work

2.1. Pedestrian Detection

2.2. Space-Time Representation

2.3. Traffic Database

3. Self-Collected Databases

3.1. NTSEL Database (NTSEL)

3.2. Near-Miss Driving Recorder Database (NDRDB)

3.3. Difficulties of Self-Collected Databases

- Small changes in pedestrian actions must be noticed: a meaningful transition, such as a change from walking straight to turning, should be recognized. Such a change often happens within a moment, so a sophisticated descriptor is needed to capture subtle differences.

- An in-vehicle driving recorder moves with the vehicle's ego-motion, so fine-grained pedestrian action recognition must be performed in cluttered scenes with complicated, moving backgrounds. (The NTSEL database does not include moving backgrounds; however, it still poses difficulties arising from cluttered backgrounds and fine-grained pedestrian actions.)

- Collecting fine-grained pedestrian action data is difficult. A large amount of such data would substantially improve our understanding of fine-grained pedestrian actions as seen from a vehicle-mounted driving recorder, but action changes such as turning are rare in actual driving, so this data is very hard to collect. With the aim of better avoiding accident situations, feature extraction and learning must therefore be performed on a small-scale walking-action database, which raises the question of how to learn a strong model from limited data.

4. Proposed Approach

5. Experiment

5.1. Implementation

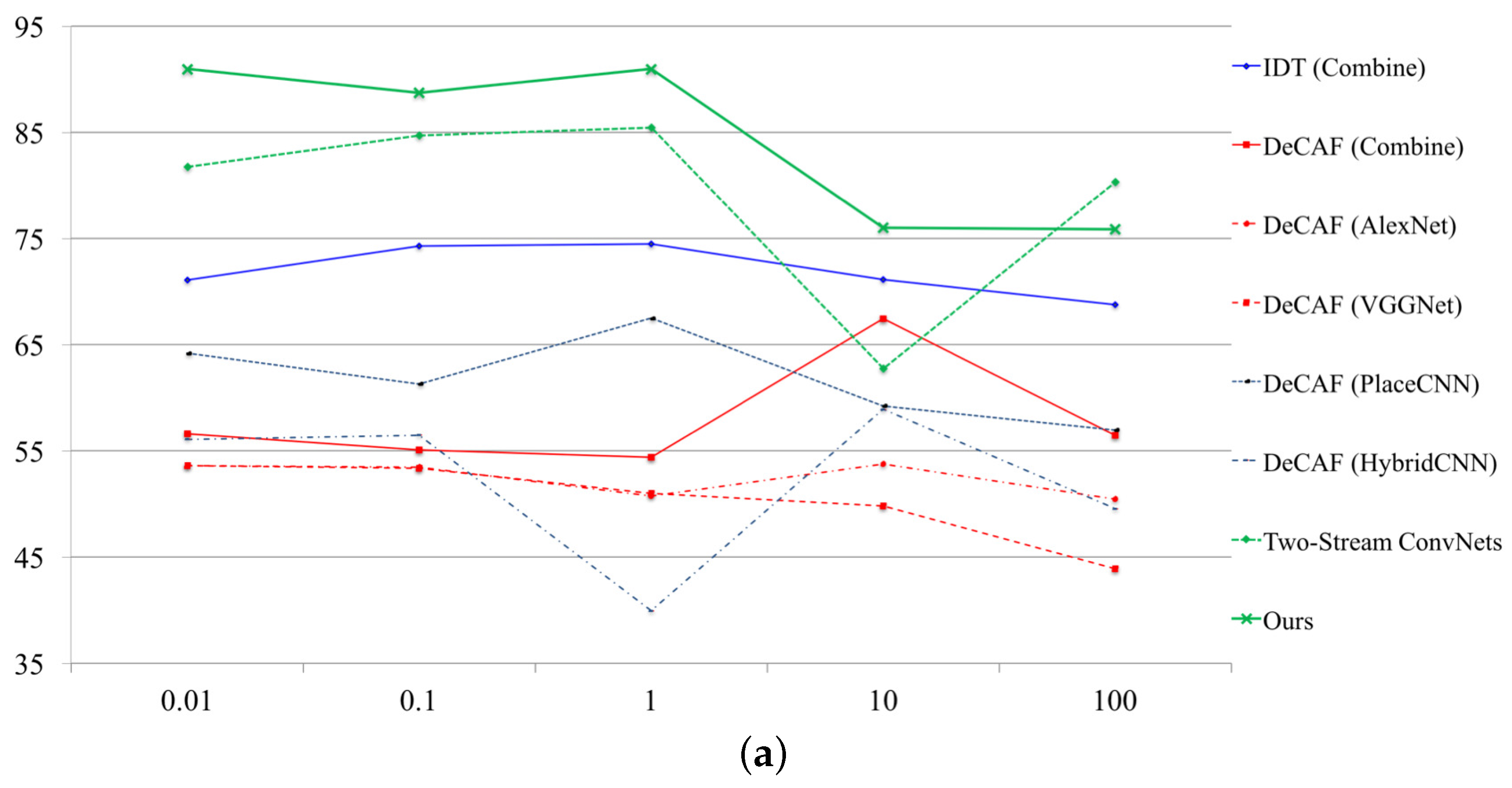

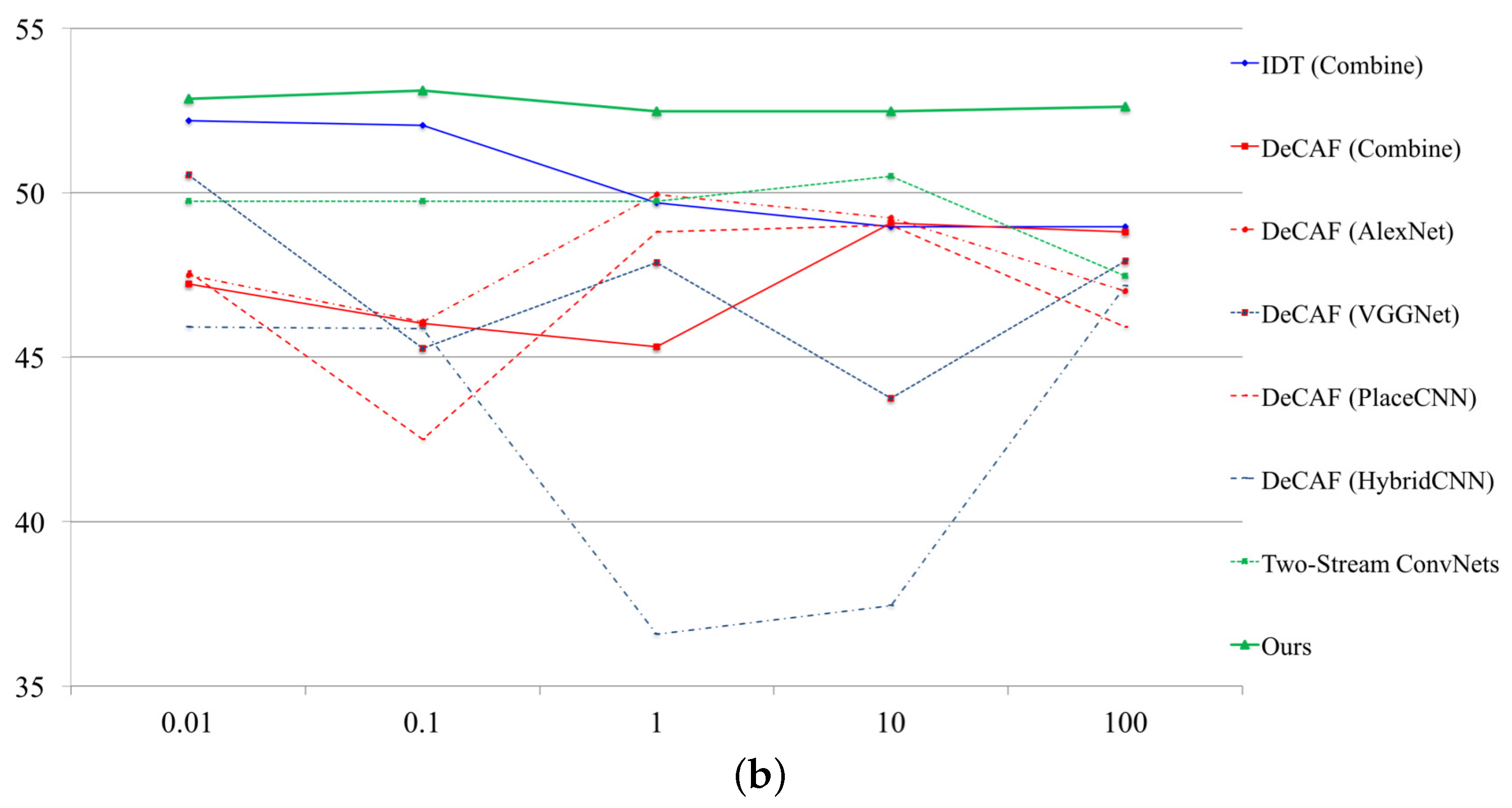

5.2. Exploration Study

5.3. Comparison of Representative Approaches

5.4. Visual Results

6. Conclusions

Acknowledgments

Author Contributions

Conflicts of Interest

Abbreviations

| Important Acronyms | Definition |
|---|---|
| DNN | Deep Neural Networks |
| CNN, convnet(s) | Convolutional Neural Networks |
| NTSEL | National Traffic Science and Environment Laboratory Database |
| NDRDB | Near-Miss Driving Recorder Database |
| Conv | Convolutional Layer |
| Pool | Pooling Layer |
| Fusion | Fusion Layer |
| FC | Fully-Connected Layer |
| DeCAF | Deep Convolutional Activation Features |
| SVM | Support Vector Machines |

References

1. Geronimo, D.; Lopez, A.M.; Sappa, A.D.; Graf, T. Survey of Pedestrian Detection for Advanced Driver Assistance Systems. IEEE Trans. Pattern Anal. Mach. Intell. 2010, 32, 1239–1258.

2. Benenson, R.; Omran, M.; Hosang, J.; Schiele, B. Ten years of pedestrian detection, what have we learned? In Proceedings of the European Conference on Computer Vision Workshop (ECCVW), Zurich, Switzerland, 6–12 September 2014.

3. Dalal, N.; Triggs, B. Histograms of Oriented Gradients for Human Detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), San Diego, CA, USA, 20–25 June 2005; pp. 886–893.

4. Viola, P.; Jones, M. Rapid Object Detection using a Boosted Cascade of Simple Features. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Kauai, HI, USA, 8–14 December 2001; pp. 511–518.

5. Felzenszwalb, P.; Girshick, R.; McAllester, D.; Ramanan, D. Object Detection with Discriminatively Trained Part-Based Models. IEEE Trans. Pattern Anal. Mach. Intell. 2010, 32, 1627–1645.

6. Simonyan, K.; Zisserman, A. Very Deep Convolutional Networks for Large-Scale Image Recognition. In Proceedings of the International Conference on Learning Representations (ICLR), San Diego, CA, USA, 7–9 May 2015.

7. He, K.; Zhang, X.; Ren, S.; Sun, J. Deep Residual Learning for Image Recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016.

8. Zhang, S.; Benenson, R.; Omran, M.; Hosang, J.; Schiele, B. How Far are We from Solving Pedestrian Detection? In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 26 June–1 July 2016.

9. Zhang, L.; Lin, L.; Liang, X.; He, K. Is Faster R-CNN Doing Well for Pedestrian Detection? In Proceedings of the European Conference on Computer Vision (ECCV), Amsterdam, The Netherlands, 11–14 October 2016.

10. Watanabe, T.; Ito, S.; Yokoi, K. Co-occurrence Histograms of Oriented Gradients for Pedestrian Detection. In Proceedings of the 3rd Pacific-Rim Symposium on Image and Video Technology (PSIVT), Tokyo, Japan, 13–16 January 2009.

11. Kataoka, H.; Tamura, K.; Iwata, K.; Satoh, Y.; Matsui, Y.; Aoki, Y. Extended Feature Descriptor and Vehicle Motion Model with Tracking-by-detection for Pedestrian Active Safety. IEICE Trans. Inf. Syst. 2014, 296–304.

12. Dollar, P.; Tu, Z.; Perona, P.; Belongie, S. Integral Channel Features. In Proceedings of the British Machine Vision Conference (BMVC), London, UK, 7–10 September 2009.

13. Csurka, G.; Dance, C.R.; Fan, L.; Willamowski, J.; Bray, C. Visual Categorization with Bags of Keypoints. In Proceedings of the Workshop on Statistical Learning in Computer Vision (ECCVW), Prague, Czech Republic, 11–14 May 2004.

14. Jegou, H.; Douze, M.; Schmid, C.; Perez, P. Aggregating local descriptors into a compact image representation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), San Francisco, CA, USA, 13–18 June 2010.

15. Perronnin, F.; Sanchez, J.; Mensink, T. Improving the Fisher kernel for large-scale image classification. In Proceedings of the European Conference on Computer Vision (ECCV), Heraklion, Greece, 5–11 September 2010.

16. Krizhevsky, A.; Sutskever, I.; Hinton, G.E. ImageNet Classification with Deep Convolutional Neural Networks. In Proceedings of the 25th International Conference on Neural Information Processing Systems (NIPS), Lake Tahoe, NV, USA, 3–6 December 2012.

17. Szegedy, C.; Liu, W.; Jia, Y.; Sermanet, P.; Reed, S. Going Deeper with Convolutions. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Boston, MA, USA, 7–12 June 2015.

18. Girshick, R.; Donahue, J.; Darrell, T.; Malik, J. Rich feature hierarchies for accurate object detection and semantic segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Columbus, OH, USA, 23–28 June 2014.

19. Girshick, R. Fast R-CNN. In Proceedings of the International Conference on Computer Vision (ICCV), Santiago, Chile, 7–13 December 2015.

20. Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards real-time object detection with region proposal networks. In Proceedings of the Neural Information Processing Systems (NIPS), Montreal, QC, Canada, 7–12 December 2015.

21. Liu, W.; Anguelov, D.; Erhan, D.; Szegedy, C.; Reed, S.; Fu, C.Y.; Berg, A.C. SSD: Single Shot MultiBox Detector. In Proceedings of the European Conference on Computer Vision (ECCV), Amsterdam, The Netherlands, 11–14 October 2016.

22. Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You only look once: Unified, real-time object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016.

23. Redmon, J.; Farhadi, A. YOLO9000: Better, Faster, Stronger. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017.

24. Du, X.; El-Khamy, M.; Lee, J.; Davis, L.S. Fused DNN: A deep neural network fusion approach to fast and robust pedestrian detection. In Proceedings of the IEEE Winter Conference on Applications of Computer Vision (WACV), Santa Rosa, CA, USA, 24–31 March 2017.

25. Wang, X.; Xiao, T.; Jiang, Y.; Shao, S.; Sun, J.; Shen, C. Repulsion Loss: Detecting Pedestrians in a Crowd. arXiv, 2017; arXiv:1711.07752.

26. Dalal, N.; Triggs, B.; Schmid, C. Human Detection using Oriented Histograms of Flow and Appearance. In Proceedings of the European Conference on Computer Vision (ECCV), Graz, Austria, 7–13 May 2006.

27. González, A.; Vázquez, D.; Ramos, S.; López, A.; Amores, J. Spatiotemporal Stacked Sequential Learning for Pedestrian Detection. In Proceedings of the Iberian Conference on Pattern Recognition and Image Analysis, Santiago de Compostela, Spain, 17–19 June 2015.

28. Laptev, I. On Space-Time Interest Points. Int. J. Comput. Vis. 2005, 64, 107–123.

29. Wang, H.; Klaser, A.; Schmid, C. Dense Trajectories and Motion Boundary Descriptors for Action Recognition. Int. J. Comput. Vis. 2013, 103, 60–79.

30. Wang, H.; Schmid, C. Action Recognition with Improved Trajectories. In Proceedings of the International Conference on Computer Vision (ICCV), Sydney, Australia, 1–8 December 2013.

31. Tran, D.; Bourdev, L.; Fergus, R.; Torresani, L.; Paluri, M. Learning Spatiotemporal Features with 3D Convolutional Networks. In Proceedings of the International Conference on Computer Vision (ICCV), Santiago, Chile, 7–13 December 2015.

32. Simonyan, K.; Zisserman, A. Two-stream convolutional networks for action recognition. In Proceedings of the Neural Information Processing Systems (NIPS), Montreal, QC, Canada, 8–13 December 2014.

33. Wang, L.; Qiao, Y.; Tang, X. Action Recognition with Trajectory-Pooled Deep-Convolutional Descriptors. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Boston, MA, USA, 7–12 June 2015.

34. Dollar, P.; Wojek, C.; Schiele, B.; Perona, P. Pedestrian Detection: An Evaluation of the State of the Art. IEEE Trans. Pattern Anal. Mach. Intell. 2012, 34, 743–761.

35. Geiger, A.; Lenz, P.; Urtasun, R. Are we ready for Autonomous Driving? The KITTI Vision Benchmark Suite. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Providence, RI, USA, 16–21 June 2012.

36. Dollar, P.; Wojek, C.; Schiele, B.; Perona, P. Pedestrian Detection: A Benchmark. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Miami, FL, USA, 20–25 June 2009.

37. Menze, M.; Geiger, A. Object Scene Flow for Autonomous Vehicles. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Boston, MA, USA, 7–12 June 2015.

38. Long, J.; Shelhamer, E.; Darrell, T. Fully Convolutional Networks for Semantic Segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Boston, MA, USA, 7–12 June 2015.

39. Chen, X.; Kundu, K.; Zhang, Z.; Ma, H.; Fidler, S.; Urtasun, R. Monocular 3D Object Detection for Autonomous Driving. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016.

40. Bai, M.; Luo, W.; Kundu, K.; Urtasun, R. Exploiting Semantic Information and Deep Matching for Optical Flow. In Proceedings of the European Conference on Computer Vision (ECCV), Amsterdam, The Netherlands, 8–16 October 2016.

41. Luo, W.; Schwing, A.; Urtasun, R. Efficient Deep Learning for Stereo Matching. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016.

42. Kundu, A.; Vineet, V.; Koltun, V. Feature Space Optimization for Semantic Video Segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016.

43. First Workshop on Fine-Grained Visual Categorization. Available online: https://sites.google.com/site/cvprfgvc/ (accessed on 9 February 2018).

44. Hiyari-Hatto Database. Available online: http://web.tuat.ac.jp/~smrc/drcenter.html (accessed on 9 February 2018).

45. Feichtenhofer, C.; Pinz, A.; Zisserman, A. Convolutional Two-Stream Network Fusion for Video Action Recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016.

46. Donahue, J.; Jia, Y.; Hoffman, J.; Zhang, N.; Tzeng, E.; Darrell, T. DeCAF: A deep convolutional activation feature for generic visual recognition. In Proceedings of the International Conference on Machine Learning (ICML), Beijing, China, 21–26 June 2014.

47. Wang, L.; Xiong, Y.; Wang, Z.; Qiao, Y. Towards Good Practices for Very Deep Two-Stream ConvNets. arXiv, 2015; arXiv:1507.02159.

48. Soomro, K.; Zamir, A.R.; Shah, M. UCF101: A Dataset of 101 Human Action Classes From Videos in the Wild. arXiv, 2012; arXiv:1212.0402.

49. Zhou, B.; Lapedriza, A.; Xiao, J.; Torralba, A.; Oliva, A. Learning Deep Features for Scene Recognition using Places Database. In Proceedings of the Advances in Neural Information Processing Systems (NIPS), Montreal, QC, Canada, 8–13 December 2014.

50. Hwang, S.; Park, J.; Kim, N.; Choi, Y.; Kweon, I.S. Multispectral Pedestrian Detection: Benchmark Dataset and Baseline. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Boston, MA, USA, 7–12 June 2015.

51. González, A.; Fang, Z.; Socarras, Y.; Serrat, J.; Vázquez, D.; Xu, J.; López, A.M. Pedestrian Detection at Day/Night Time with Visible and FIR Cameras: A Comparison. Sensors 2016, 16, 820.

52. Fang, Z.; Vázquez, D.; López, A.M. On-Board Detection of Pedestrian Intentions. Sensors 2017, 17, 2193.

| Class | NTSEL: #Video (#Frame) | NDRDB: #Video (#Frame) |
|---|---|---|
| Walking | 25 (2648) | 15 (515) |
| Crossing | 25 (2726) | 43 (1773) |
| Turning | 25 (923) | 13 (593) |
| Riding a Bicycle | 25 (1632) | 11 (457) |
| Total | 100 (7929) | 82 (3338) |
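The totals row can be checked, and the class balance made explicit, with a few lines of arithmetic. All counts below are copied from the database table; nothing else is assumed.

```python
# (videos, frames) per class, copied from the database table
NTSEL = {"Walking": (25, 2648), "Crossing": (25, 2726),
         "Turning": (25, 923), "Riding a Bicycle": (25, 1632)}
NDRDB = {"Walking": (15, 515), "Crossing": (43, 1773),
         "Turning": (13, 593), "Riding a Bicycle": (11, 457)}


def summarize(db):
    """Return total videos, total frames, per-class video share and mean frames per video."""
    total_videos = sum(v for v, _ in db.values())
    total_frames = sum(f for _, f in db.values())
    video_share = {c: v / total_videos for c, (v, _) in db.items()}
    frames_per_video = {c: f / v for c, (v, f) in db.items()}
    return total_videos, total_frames, video_share, frames_per_video


for name, db in (("NTSEL", NTSEL), ("NDRDB", NDRDB)):
    videos, frames, share, fpv = summarize(db)
    print(f"{name}: {videos} videos, {frames} frames, {frames / videos:.1f} frames/video")
```

It prints 100 videos, 7929 frames and 79.3 frames/video for NTSEL, and 82 videos, 3338 frames and 40.7 frames/video for NDRDB. NTSEL is balanced at 25 videos per class, whereas Crossing accounts for 43 of the 82 NDRDB videos (about 52%).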

| Approach | NTSEL (%) | NDRDB (%) |
|---|---|---|
| End-to-End | N/A | N/A |
| Without fine-tuning (DeCAF) | 82.73 | 46.70 |
| With fine-tuning (DeCAF) | 88.73 | 51.77 |
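DeCAF [46] uses activations from the fully connected layers of a pretrained CNN as fixed features and trains a simple classifier on top; SVMs also appear in the abbreviation list. The sketch below covers only that classifier stage, under stated assumptions: random clustered vectors stand in for real CNN activations, the four classes follow the database table, and the solver is a Pegasos-style stochastic subgradient method chosen for brevity (the SVM solver and hyperparameters used in the paper are not given in this section). Fine-tuning, which would first adapt the CNN that produces the features, is omitted.

```python
import random


def add_bias(x):
    # Append a constant 1 so the bias is learned as an ordinary weight
    return list(x) + [1.0]


def dot(a, b):
    return sum(ai * bi for ai, bi in zip(a, b))


def train_binary_svm(xs, ys, lam=0.01, epochs=200, seed=0):
    """Pegasos-style stochastic subgradient descent on the L2-regularized hinge loss.
    xs: feature vectors (bias already appended); ys: labels in {-1, +1}."""
    rng = random.Random(seed)
    w = [0.0] * len(xs[0])
    order = list(range(len(xs)))
    t = 0
    for _ in range(epochs):
        rng.shuffle(order)
        for i in order:
            t += 1
            eta = 1.0 / (lam * t)
            violated = ys[i] * dot(w, xs[i]) < 1  # hinge loss active for this sample
            w = [(1.0 - eta * lam) * wj for wj in w]  # regularizer step
            if violated:
                w = [wj + eta * ys[i] * xj for wj, xj in zip(w, xs[i])]
    return w


def train_one_vs_rest(features, labels, num_classes, **kwargs):
    # One binary SVM per class: that class (+1) against all others (-1)
    xs = [add_bias(f) for f in features]
    return [train_binary_svm(xs, [1 if y == k else -1 for y in labels], **kwargs)
            for k in range(num_classes)]


def predict(models, feature):
    # Pick the class whose SVM gives the highest score
    x = add_bias(feature)
    scores = [dot(w, x) for w in models]
    return max(range(len(scores)), key=scores.__getitem__)


# Hypothetical stand-in for DeCAF activations: 4 well-separated clusters in 8-D
rng = random.Random(1)
features, labels = [], []
for k in range(4):
    for _ in range(10):
        features.append([(5.0 if j == k else 0.0) + rng.gauss(0.0, 0.3) for j in range(8)])
        labels.append(k)

models = train_one_vs_rest(features, labels, num_classes=4)
accuracy = sum(predict(models, f) == y for f, y in zip(features, labels)) / len(labels)
print(f"training accuracy: {accuracy:.2f}")
```

Keeping the feature extractor frozen leaves only a few weights per class to learn, which is one reason such pipelines are attractive for small databases like NTSEL and NDRDB.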

| #FC units | NTSEL (%) | NDRDB (%) |
|---|---|---|
| 128 | 88.58 | 51.01 |
| 256 | 88.73 | 48.47 |
| 512 | 89.30 | 51.49 |
| 1024 | 86.30 | 53.23 |
| 2048 | 89.01 | 49.87 |
| 4096 | 91.01 | 53.23 |
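To put the widths in the FC-unit table in perspective, the number of trainable parameters in a fully connected head grows linearly with the number of units. This is a sketch under hypothetical assumptions: a 4096-dimensional input feature, one hidden FC layer of the given width, and a 4-way output layer for the four action classes. The actual input dimension and layer layout are not stated in this section.

```python
def fc_head_params(in_dim, units, num_classes=4):
    """Count weights + biases of a hidden FC layer followed by a class-score layer."""
    hidden = in_dim * units + units            # hidden FC layer
    output = units * num_classes + num_classes  # class-score layer
    return hidden + output


IN_DIM = 4096  # hypothetical input feature size, not taken from the paper
for units in (128, 256, 512, 1024, 2048, 4096):
    print(units, fc_head_params(IN_DIM, units))
```

Under these assumptions the head grows from about 0.5 million parameters at 128 units to about 16.8 million at 4096 units, while the table shows that accuracy does not increase monotonically with width on either database.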

| Approach | NTSEL (%) | NDRDB (%) |
|---|---|---|
| IDT (HOG) | 70.18 | 50.43 |
| IDT (HOF) | 64.76 | 52.05 |
| IDT (MBH) | 65.38 | 49.12 |
| IDT [30] | 74.52 | 52.19 |
| DeCAF (ImageNet) [16] | 53.78 | 49.94 |
| DeCAF (ImageNet with VGG-16) [6] | 53.63 | 50.54 |
| DeCAF (Places205) [49] | 67.48 | 49.02 |
| DeCAF (Hybrid) [49] | 58.91 | 47.17 |
| DeCAF (Combined) [46] | 67.44 | 49.07 |
| Two-stream ConvNets (Spatial) | 69.04 | 48.47 |
| Two-stream ConvNets (Temporal) | 64.05 | 45.93 |
| Two-stream ConvNets [32] | 85.44 | 50.50 |
| TDD [33] | 68.39 | 54.66 |
| Ours | 91.01 | 53.23 |
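Two-stream ConvNets [32] combine a spatial stream (RGB frames) and a temporal stream (stacked optical flow); in the comparison table, fusing the two raises NTSEL accuracy from 69.04% and 64.05% to 85.44%. One fusion scheme described in [32] averages the per-class softmax scores of the two streams. The minimal sketch below assumes hypothetical logits in place of real network outputs and an equal weight of 0.5; it illustrates that baseline's late fusion, not the approach proposed in this paper.

```python
import math

CLASSES = ["Walking", "Crossing", "Turning", "Riding a Bicycle"]


def softmax(logits):
    # Subtract the max before exponentiating for numerical stability
    m = max(logits)
    exps = [math.exp(v - m) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]


def late_fusion(spatial_logits, temporal_logits, w_spatial=0.5):
    """Weighted average of the two streams' class posteriors."""
    p_spatial = softmax(spatial_logits)
    p_temporal = softmax(temporal_logits)
    return [w_spatial * a + (1.0 - w_spatial) * b
            for a, b in zip(p_spatial, p_temporal)]


def argmax_class(probs):
    return CLASSES[max(range(len(probs)), key=probs.__getitem__)]


# Hypothetical outputs for one clip: appearance weakly suggests "Crossing",
# motion strongly suggests "Turning".
spatial = [0.0, 1.0, 0.8, 0.0]
temporal = [0.0, 0.0, 3.0, 0.0]
print("spatial only: ", argmax_class(softmax(spatial)))
print("temporal only:", argmax_class(softmax(temporal)))
print("fused:        ", argmax_class(late_fusion(spatial, temporal)))
```

Here the spatial stream alone would answer Crossing, but the fused decision is Turning because the temporal posterior is far more confident; this is the kind of complementarity that lets the fused model outperform either stream alone.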

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Kataoka, H.; Satoh, Y.; Aoki, Y.; Oikawa, S.; Matsui, Y. Temporal and Fine-Grained Pedestrian Action Recognition on Driving Recorder Database. Sensors 2018, 18, 627. https://doi.org/10.3390/s18020627
