In this section, we discuss the evaluations conducted in terms of performance and effectiveness. First, we validate the architectural design and algorithms for the proposed MOFT by comparing it with intra-varied MOFT. Then, we compare it with state-of-the-art trackers on tracking benchmarks.

7.1. Dataset and Evaluation Metric

Dataset: We evaluated our proposed method on the Karlsruhe Institute of Technology and Toyota Technological Institute (KITTI) tracking dataset [42] and the MOT benchmark datasets [43]. For the training data, we constructed a pair dataset consisting of cropped objects in frame k and in the consecutive frame, taken from both RGB and depth frames.
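
The pair construction can be sketched as follows. This is only a minimal illustration, assuming that annotations are available as one dictionary per frame mapping a track ID to a bounding box, and that the partner of frame k is frame k + 1. The function name `build_pairs` and the data layout are hypothetical and are not taken from the benchmark toolkits; cropping the image regions is omitted.

```python
from typing import Dict, List, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2) in pixels
Frame = Dict[int, Box]                   # track ID -> box for one frame


def build_pairs(frames: List[Frame]) -> List[Tuple[int, int, Box, Box]]:
    """Return (frame index k, track ID, box in k, box in k + 1) tuples.

    Only objects annotated in both frames form a pair. Using k + 1 as the
    partner frame is an assumption made for this sketch.
    """
    pairs = []
    for k in range(len(frames) - 1):
        current, following = frames[k], frames[k + 1]
        # A pair needs the same identity to be present in both frames.
        for track_id in sorted(current.keys() & following.keys()):
            pairs.append((k, track_id, current[track_id], following[track_id]))
    return pairs
```

The same routine would be run once on the RGB annotations and once on the depth frames, since both modalities share the same boxes in this sketch.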

The KITTI tracking dataset contains data captured in driving environments. It consists of 21 training sequences with annotations and also provides various sensor modalities, such as single image, stereo image and 3D point clouds. In our experiments, we used two kinds of paired modalities: (1) RGB frames with depth frames extracted from a stereo camera and (2) RGB frames with depth frames extracted from 3D point clouds. For training, we used 40,000 pairs from 18 training sequences; the remainder was used for evaluations.

We used both the 2015 and 2016 MOT benchmarks (2015: 11 sequences; 2016: seven sequences). Because the MOT benchmark does not provide the depth sequences, we only generated MOFT on RGB frames when evaluations were conducted on the MOT benchmark. For training, we used 32,000 pairs from 15 training sequences; the remainder was used for evaluations.

Evaluation metrics: The following tracking evaluation metrics [44,45] from both benchmarks were utilized: multiple object tracking accuracy (MOTA), multiple object tracking precision (MOTP), mostly tracked targets (MT), mostly lost targets (ML) and the number of ID switches (IDS).
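
For reference, the metrics can be computed roughly as in the sketch below. It assumes the commonly used definitions: MOTA as one minus the ratio of summed errors to ground-truth objects, MOTP as the mean localization score over matched pairs, and the 80% and 20% coverage thresholds for MT and ML. The function names are hypothetical, and the official benchmark toolkits remain the authority on the exact matching procedure.

```python
from typing import Sequence


def mota(false_negatives: int, false_positives: int,
         id_switches: int, num_gt: int) -> float:
    """MOTA = 1 - (FN + FP + IDS) / GT, with counts summed over all frames."""
    if num_gt <= 0:
        raise ValueError("num_gt must be positive")
    return 1.0 - (false_negatives + false_positives + id_switches) / num_gt


def motp(match_scores: Sequence[float]) -> float:
    """Mean localization score (for example, box overlap) over all matches."""
    if not match_scores:
        raise ValueError("at least one matched pair is required")
    return sum(match_scores) / len(match_scores)


def classify_trajectory(tracked_frames: int, total_frames: int) -> str:
    """Label a ground-truth trajectory as MT, ML or partially tracked (PT)."""
    ratio = tracked_frames / total_frames
    if ratio >= 0.8:
        return "MT"   # covered for at least 80% of its life span
    if ratio <= 0.2:
        return "ML"   # covered for at most 20% of its life span
    return "PT"
```

Note that MOTA can become negative when the number of errors exceeds the number of ground-truth objects.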

7.2. Experimental Setup

Environments: We implemented the proposed method using Caffe [39] and MATLAB on an Intel Core i7-6700 quad-core 4.0-GHz processor with 64 GB of RAM and an NVIDIA GeForce Titan X graphics card with 12 GB of memory for CUDA computations.

Tracking candidates: In this work, the object detector in [36] was used to sample the target candidates. Furthermore, the candidate sampling methods in [11] were used as a comparison model.

Depth frame extraction: We used the adaptive random walk method proposed by Lee et al. [46] to extract the depth frames from the stereo camera of the KITTI tracking benchmark. To track objects in 3D point clouds from the KITTI benchmark, we mapped the 3D point clouds into a 2D dense depth map using up-sampling [33].
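
The first step of mapping a point cloud to a depth map, projecting the 3D points onto the image plane, can be sketched as below. This is an illustration under stated assumptions: the points are already expressed in the camera coordinate frame, `P` is a 3 x 4 pinhole projection matrix, and the function name `project_to_sparse_depth` is hypothetical. The result is a sparse map; the up-sampling step of [33] that produces the dense map is not reproduced here.

```python
from typing import List, Sequence

Matrix = Sequence[Sequence[float]]


def project_to_sparse_depth(points: Sequence[Sequence[float]], P: Matrix,
                            height: int, width: int) -> List[List[float]]:
    """Project camera-frame 3D points with a 3 x 4 matrix P.

    Returns a height x width grid with the nearest depth per pixel and 0.0
    where no point lands. Densification (up-sampling) is not shown here.
    """
    depth = [[0.0] * width for _ in range(height)]
    for x, y, z in points:
        if z <= 0:                      # skip points behind the camera
            continue
        homogeneous = (x, y, z, 1.0)
        u_h, v_h, w_h = (sum(p * c for p, c in zip(row, homogeneous))
                         for row in P)
        if w_h <= 0:
            continue
        u, v = round(u_h / w_h), round(v_h / w_h)
        if 0 <= u < width and 0 <= v < height:
            if depth[v][u] == 0.0 or z < depth[v][u]:
                depth[v][u] = z         # keep the closest surface
    return depth
```

A pure-Python loop keeps the sketch dependency-free; for full point clouds a vectorized implementation would be preferable.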

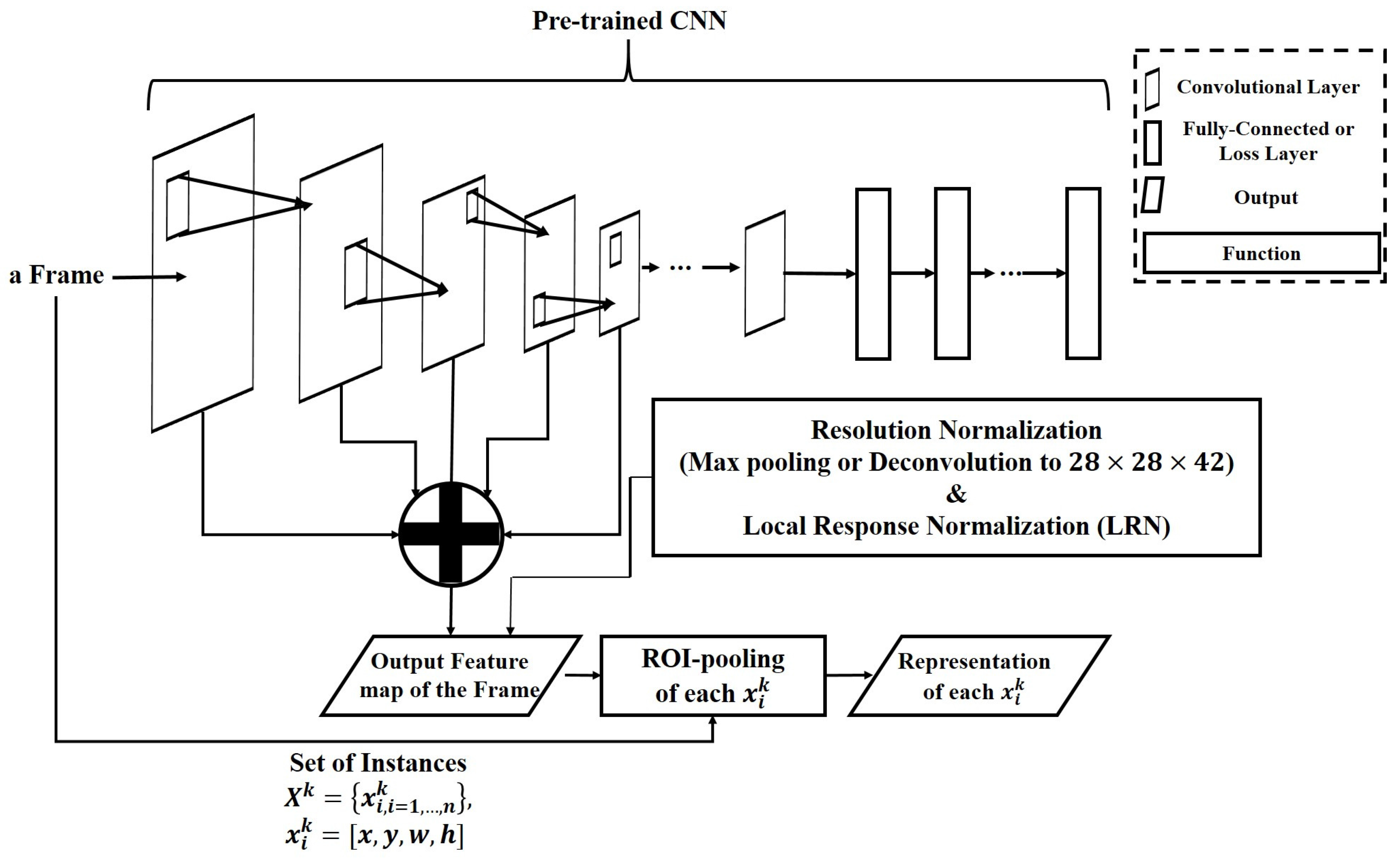

Data representation: In this work, we used the VGG-16 network [34] pre-trained on the ILSVRC2012 dataset [47] to represent RGB image data. With the same layered architecture, supervision transfer was applied to the depth map for data representation. The scale-level range was set to five levels because the VGG-16 network has five groups of convolutional layers. To classify the scale level of instances, we used scale-dependent pooling (SDP) [35].
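
As a rough illustration of assigning an instance to one of five scale levels, the sketch below maps the instance height to a level. It assumes that the level is decided from the box height alone; the threshold values and the name `scale_level` are hypothetical and are not the values used in this work or in [35].

```python
from bisect import bisect_right

# Hypothetical height thresholds in pixels separating the five scale levels.
LEVEL_BOUNDS = (32, 64, 128, 256)


def scale_level(box_height: float, bounds=LEVEL_BOUNDS) -> int:
    """Return a scale level in 1..len(bounds) + 1 for an instance height."""
    # bisect_right counts how many thresholds the height has reached.
    return bisect_right(bounds, box_height) + 1
```

Under this rule, smaller instances receive lower levels and would be represented by earlier convolutional groups, so that they pass through fewer layers.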

Bounding box regression: Whenever a candidate sampling method or detection strategy was used, we refined the bounding boxes extracted from each frame using the method presented by Girshick et al. [37] to localize the bounding boxes of target objects precisely. Bounding box regression improves the accuracy of the tracked target boxes by training four ridge regressors, two for the center coordinates of the box and two for its width and height. In our work, the regressors are not updated during testing because of noise.
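
The box parameterization commonly used with this kind of regression can be sketched as follows. The sketch assumes the scale-invariant center offsets and log-space size ratios of the R-CNN formulation; the function names are hypothetical, and the ridge regressors that predict the offsets from CNN features are not shown.

```python
import math
from typing import Tuple

Box = Tuple[float, float, float, float]  # (center x, center y, width, height)


def encode_targets(proposal: Box, ground_truth: Box) -> Box:
    """Regression targets (t_x, t_y, t_w, t_h) for one proposal and its match."""
    px, py, pw, ph = proposal
    gx, gy, gw, gh = ground_truth
    return ((gx - px) / pw, (gy - py) / ph,
            math.log(gw / pw), math.log(gh / ph))


def apply_offsets(proposal: Box, offsets: Box) -> Box:
    """Refine a proposal with predicted offsets (inverse of encode_targets)."""
    px, py, pw, ph = proposal
    tx, ty, tw, th = offsets
    return (px + pw * tx, py + ph * ty,
            pw * math.exp(tw), ph * math.exp(th))
```

During training, each regressor is fit to one of the four targets produced by `encode_targets`; at test time, `apply_offsets` turns the four predictions back into a refined box.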

7.3. Evaluation

We evaluated the proposed method by comparing it with intra-varied MOFTs to validate our architecture choices for MOFT. The matching target experiment was conducted to validate the suitability of matching target objects in frame k with candidates in the consecutive frame for MOT tasks. In the representation architecture experiment, we observed which pre-trained CNNs represent instances precisely and whether adaptively representing instances in accordance with their scale levels is more suitable than representing them in a fixed form. The data modality experiment was conducted to show the effectiveness of the proposed fusion tracking method. To show whether the structured fine-tuning update improves the performance of MOFT, we compared MOFT with and without fine-tuning in the update experiment. The proposed matching function was designed for application to any unseen target, and the generality experiment was conducted to verify this claim. Finally, we compared the proposed MOFT with state-of-the-art trackers.

The basic setting for the proposed method (the row labeled ours in Table 1) was as follows: representation using the VGG-16 architecture, adaptive representation according to the scale level of each instance, supervision transfer to represent depth data, matching of targets in frame k with candidates in the consecutive frame, and result fusion using BBMs. All of the models, including the proposed model, were evaluated as RGB and depth fusion trackers (except in the data modality and generality experiments). The depth data were extracted from the 3D point cloud sequences of the KITTI tracking benchmark dataset. The tracking results reported on the KITTI benchmark are averages over all object classes.

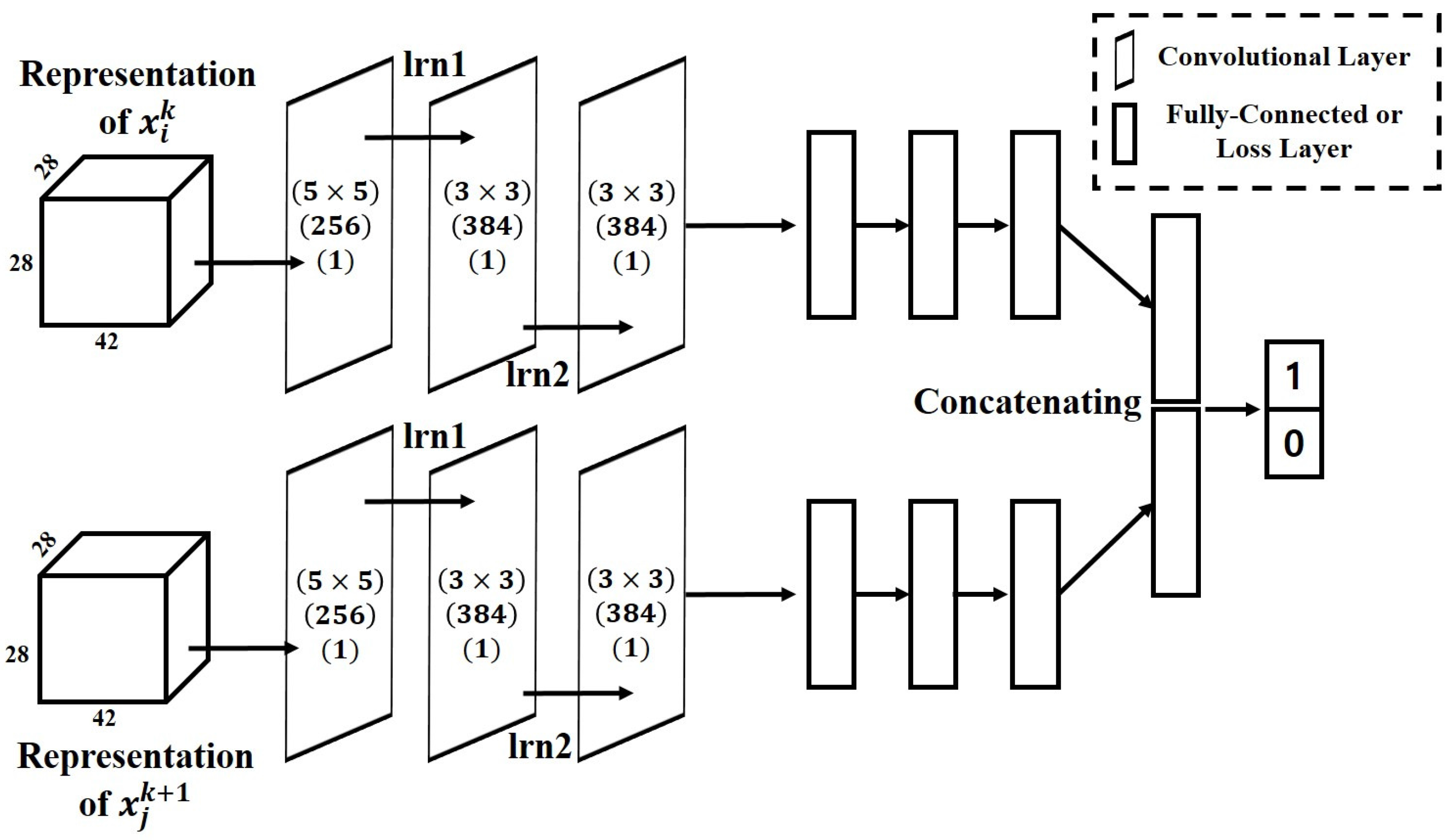

Matching target: To show which target models are suitable for matching with target candidates, we compared ours to a method that matches the initialized target objects in the first frame with candidates in the current frame. To train the matching function for this first-frame model, we constructed a dataset in which each pair consisted of a cropped object from the first frame and the corresponding object from one of the remaining frames in the sequence. As shown in the corresponding rows of Table 2, matching between consecutive frames (ours) tracks multiple objects more accurately than first-frame matching in most metrics. Because the target state in the current frame is strongly influenced by the state in the previous frame, noise introduced by states that change over time can have a negative effect on a matching function anchored to the first frame.

Representation architecture: First, we compared pre-trained network architectures on their ability to represent instances. As the comparison target, we used AlexNet [48] pre-trained on ILSVRC2012 because it is a popular network architecture that comprises fewer layers than VGG-16. As in our proposed model, we divided the scale into five levels to represent instances adaptively on AlexNet. The corresponding rows of Table 2 show that representing instances with the larger network (VGG-16) yields better tracking performance on all metrics.

Next, we compared the performance of trackers according to which layers were used for representation. Four comparison models were used, each representing instances with the outputs of fixed layers from VGG-16. To feed layered representations of different sizes uniformly into our matching network, we applied the sampling scheme described in Section 4.1 to each layered representation. From the corresponding rows of Table 2, it is clear that adaptively representing instances according to their scale levels results in more accurate tracking than representing instances of all scale levels with fixed layers, because information loss is prevented.

Data modality: To observe how accuracy differs with the data modality used, we measured the performance when the tracker was generated on each sensor separately. One tracker was generated on RGB sequences, and two others were generated on depth sequences extracted from the stereo camera and from 3D point clouds, respectively. As shown in the corresponding rows, among the single-modality trackers MOTA was highest when tracking was generated on only RGB sequences, whereas the MOTPs of the two depth trackers were higher than that of the RGB tracker. The fused tracker gave the best performance on all of the metrics compared with the single-modality trackers. Thus, information conflicts arising from individual modalities can be compensated for by combining the tracking results of the modalities.

Update: To show that the proposed structured fine-tuning makes MOFT more robust, we compared it with MOFT without the fine-tuning procedure. All of the metrics are higher when MOFT tracks objects with the structured fine-tuning. As the corresponding rows show, however, the performance of MOFT without the structured fine-tuning is not low.

Generality: This experiment was used to verify that the proposed matching function can be generally applied without reference to test sequences. To this end, we evaluated the trackers in two ways: (1) training and testing the matching function on the same dataset and (2) training and testing the matching function on different datasets. This evaluation was conducted on both the MOT [43] and KITTI [42] benchmark datasets. Because the MOT benchmark does not include depth data, the trackers only tracked objects on RGB sequences in this experiment.

Table 3 shows the datasets used for training and testing and the resulting performance. The first row in Table 3 is the same as the corresponding row in Table 1 and Table 2. Although MOFTs for which training and testing are performed on the same dataset track objects more accurately than in the other cases, there was no significant performance degradation when comparing the first and third rows or the second and fourth rows.

State-of-the-art comparisons: One of the advantages of MOFT stated above is that it is robust to distortion factors because its matching function is trained offline on external video sequences. Further, information conflicts can be compensated for by employing a modality fusion scheme. Table 4 shows the tracking performance on the MOT16 dataset. Table 5 and Table 6 show the tracking performance on the KITTI tracking benchmark. On the MOT benchmark, MOFT provides the best performance in terms of MOTA and MT. On the KITTI benchmark, MCMOT-CPT, which uses a general CNN detector, performs better on the car category, while MOFT performs better on the pedestrian category. Generally, pedestrian instances have a low resolution, whereas car instances have an adequate resolution, which limits the information loss caused by passing through many CNN layers. In other words, if a pedestrian instance is passed through many CNN layers, information loss is easily introduced. Because MOFT adaptively represents instances according to their sizes, it can track multiple objects without category dependence. Further, MOFT achieved state-of-the-art performance on the other metrics.

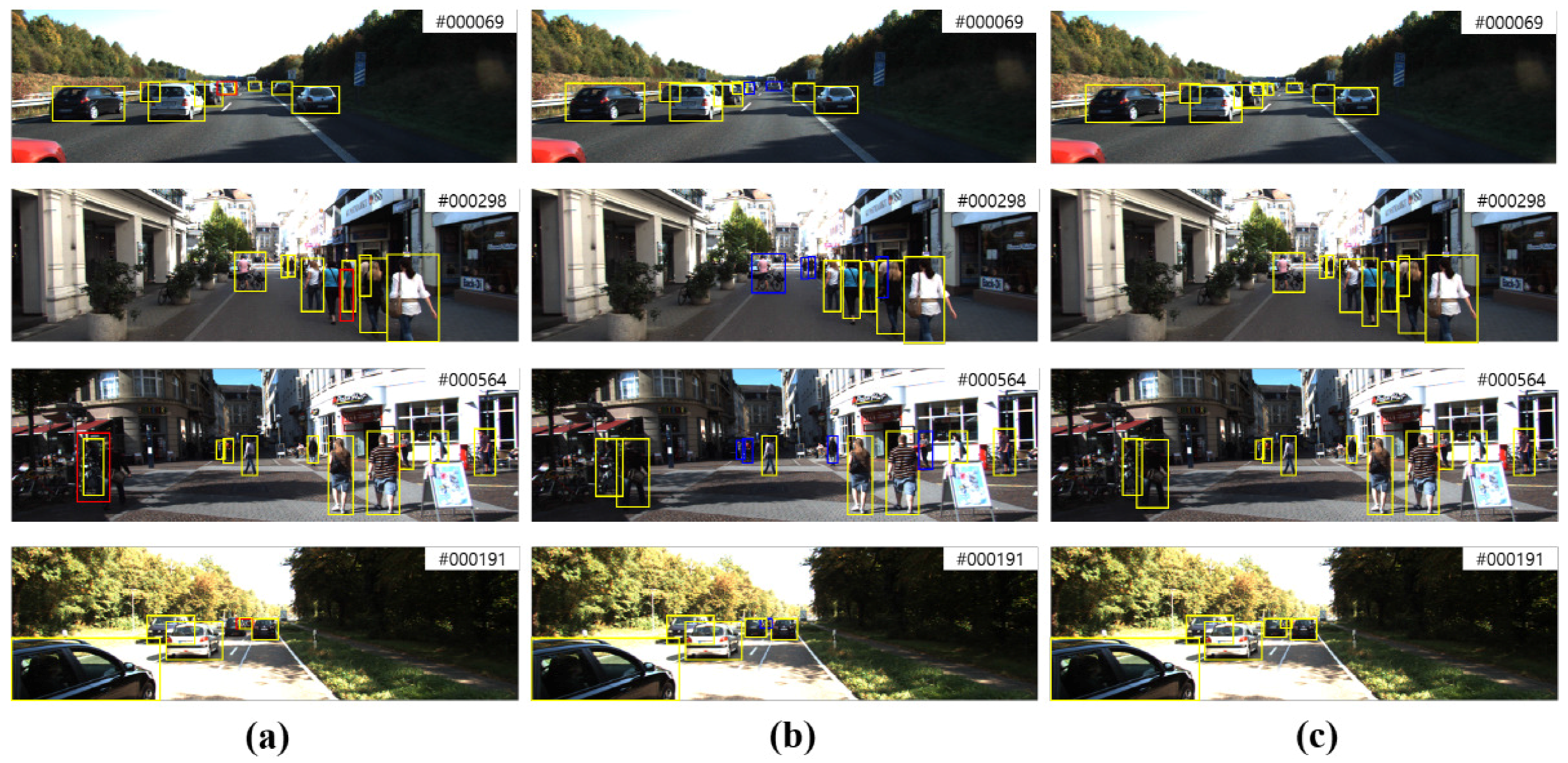

Qualitative results: For the qualitative evaluation of the proposed tracker, MOFT was generated only on the KITTI benchmark dataset because the MOT benchmark does not include depth data.

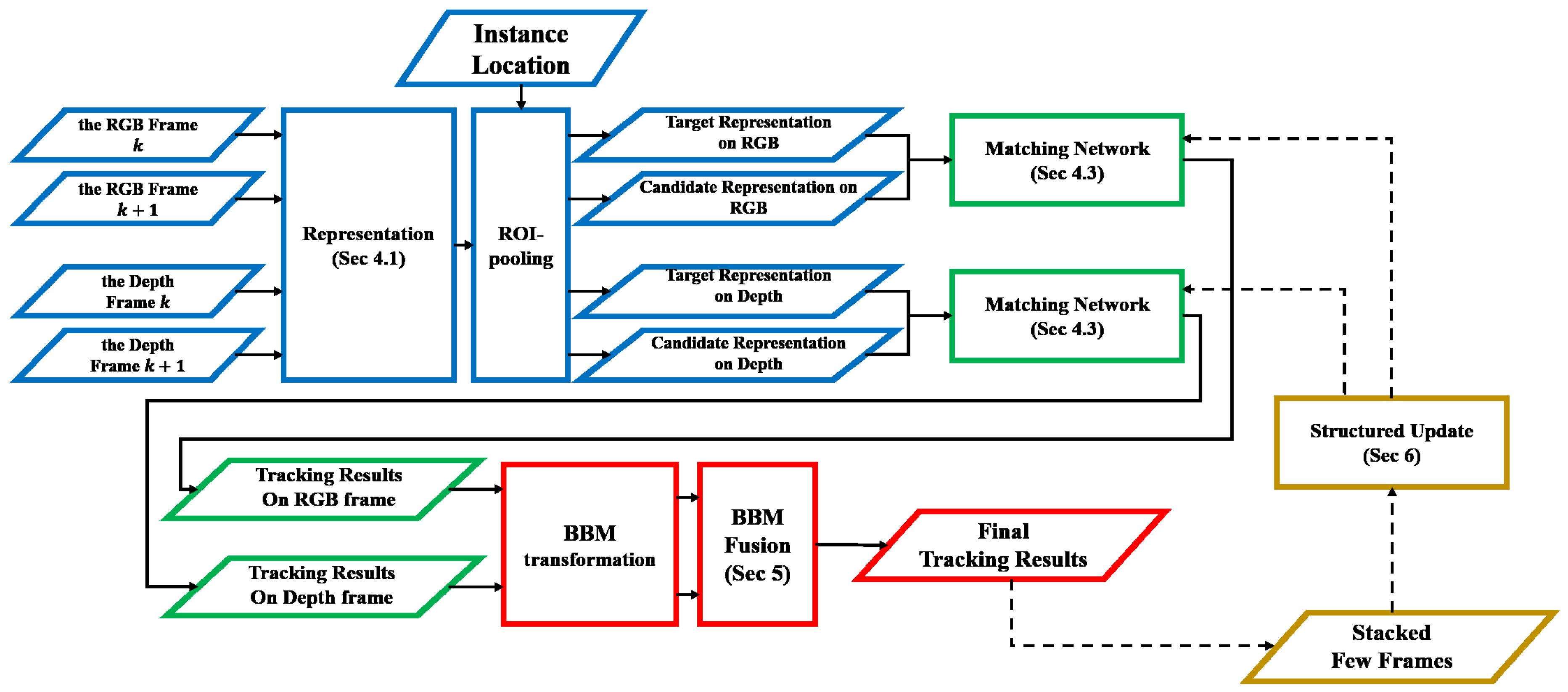

Figure 5a,b depicts the tracked targets of each tracker on RGB and depth (from 3D point clouds) frames, respectively. Figure 5c shows the tracked targets of MOFT. It can be seen that the bounding boxes are shifted to similar objects in the RGB tracker, whereas the depth tracker cannot precisely track distant targets. MOFT compensates for the limitations of each modality. As shown in Figure 5, the tracking failures are overcome.

Although a modality fusion procedure is applied with discounting factors in MOFT, tracking failures caused by the failures of individual modalities still occurred. At the top of Figure 6, bounding box shifting and missed targets between similar targets can be observed. This may be a result of the influence of the RGB tracker. At the bottom of Figure 6, distant objects are treated as disappearing objects. Even though they retain sizes that are trackable for the RGB tracker, they may be overlooked by the depth tracker.