1. Introduction

A road sign recognition system can be developed as part of an intelligent transportation system that continuously monitors the driver, the vehicle, and the road in order, for example, to inform the driver in time about upcoming navigation decision points and potentially risky traffic situations. Road sign detection and recognition [1] is an essential part of Autonomous Intelligence Vehicle Design (AIVD) [2]. It is widely used for intelligent driving assistance [3], self-directed vehicles, traffic rules and regulation awareness, disabled (blind) pedestrian awareness, and so on. Road sign detection and recognition can also be part of self-driving car [4] technology to determine the road-traffic environment in real time.

Detection and recognition is one of the most challenging tasks in computer vision [5] and digital image processing, particularly when a specific object must be detected in a real-time environment [6]. Researchers are paying increasing attention to intelligent transportation systems [7]. Some have successfully implemented road sign recognition methods that detect and recognize only red-colored road signs [8] or single classes of road signs [9,10,11,12,13], and some have used the road signs of a specific country [7,14,15,16]. In this field, a group of researchers has already shown distinguished performance on annotated road signs [14,16,17]. Overall, further improvements are needed to arrive at a standard road sign recognition approach.

The aim of this research was to overcome the current limitations of road sign recognition, such as restriction to a single color, a single class, or the road signs of a specific country. It is quite challenging to perform road sign recognition in a changing real-time environment. Among the many issues that must be addressed are low light conditions, fading of signs, and, most importantly, non-standard signs, all of which degrade the performance of a road sign recognition system.

Road signs which are designed for human eyes, are easily detectable and recognizable [

16], even with significant variations, but for a computer vision system, small variations cannot be adjusted automatically, so it needs proper guidance. Standard color and shape are the main properties of standard road signs [

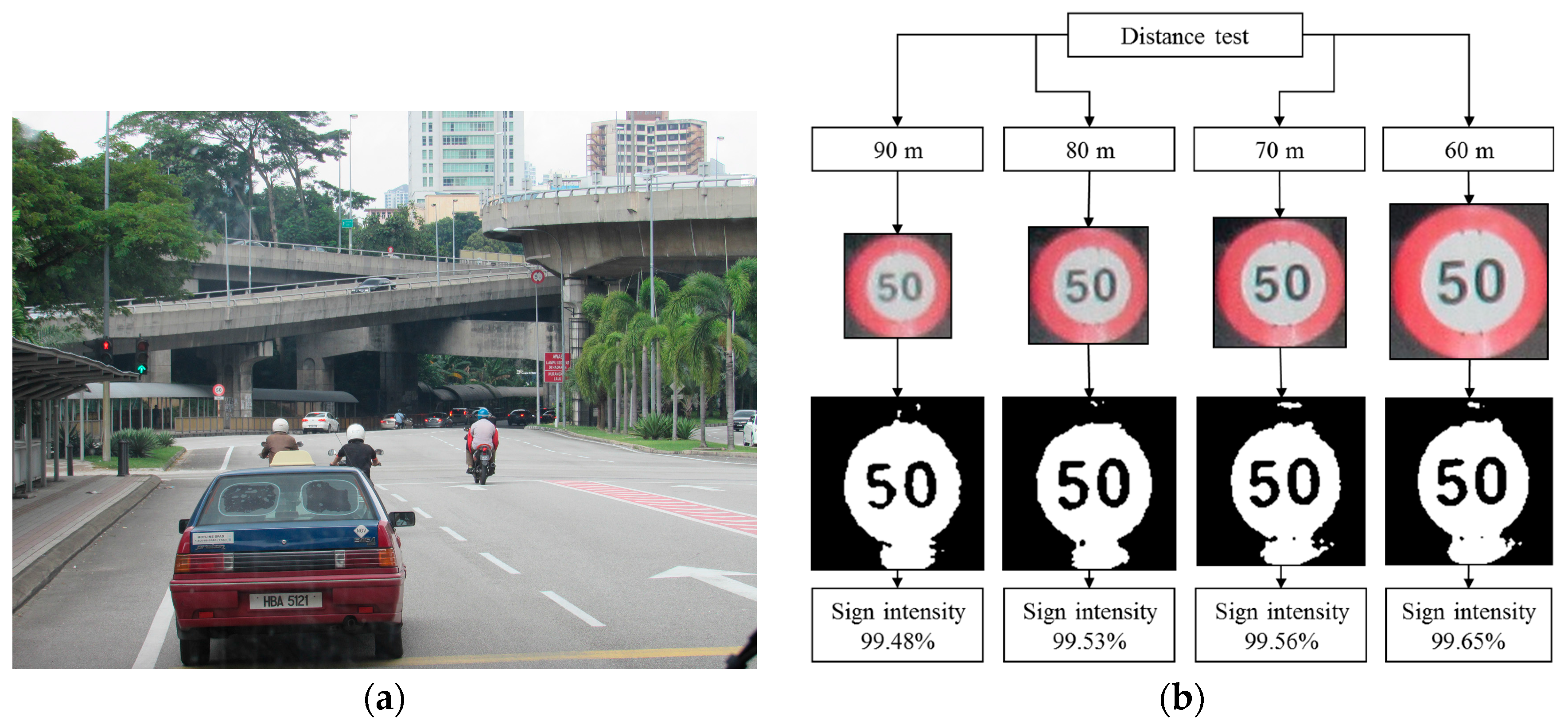

18]. Though the road sign has state of the art, various natural issues and human errors cause variations in color, shape, or both. For instance, multiple non-standard road signs may be found on Malaysian highways, as seen in

Figure 1b.

This paper focuses on standard and non-standard road sign detection and recognition. Accuracy is a key consideration, because one misclassified or undetected sign could have an adverse impact on the driver. The main objectives of this research are to develop a robust hybrid algorithm that is widely applicable, to compare the system performance with other existing methods, and eventually to evaluate the classification algorithm performance. The proposed method consists of two stages: (1) detection and (2) recognition.

Detection is performed using video frame segmentation and a hybrid color segmentation algorithm. The hybrid color segmentation algorithm comprises RGB histogram equalization, RGB color segmentation, modified grayscale segmentation, binary image segmentation, and a shape matching algorithm. The RGB color segmentation algorithm subtracts the red (R), green (G), and blue (B) components of the input image. Next, an RGB-to-grayscale converter converts the subtracted image into a grayscale image, and a two-dimensional 3-by-3 median filter removes noise from it. The grayscale image is then converted into a binary image: every pixel whose luminance is greater than 0.18 is set to 1 (white), and all other pixels are set to 0 (black). A threshold level of 0.18 is used because it gives the best performance for this system.

After this conversion, all small objects containing fewer than 300 pixels are removed from the binary image, and the remaining connected components are labeled using 8-connectivity. The region properties of the image are then measured to find how many candidates are present in the binary image; these are the target candidates to be identified as road signs. For each candidate, the algorithm determines the position (X, Y coordinates), height (H), and width (W). Candidates whose height-to-width ratio is close to 1 are selected as target candidates. Based on the properties of the selected candidates, the sign image is cropped from the original RGB input frame, which is a high-resolution RGB image. The detected road sign therefore contains enough pixel information, because it is extracted from the original RGB input frame.

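
The candidate selection steps described above (thresholding at 0.18, removal of objects smaller than 300 pixels, 8-connected labeling, and the height-to-width test) can be illustrated with a minimal sketch. It is written in plain Python rather than the MATLAB toolboxes used for the prototype, and it is not the authors' implementation. The function names, the `ratio_tolerance` value of 0.2 (the text only says "close to 1"), and the synthetic test frame are assumptions made for illustration.

```python
from collections import deque


def binarize(gray, level=0.18):
    """Set pixels with luminance above `level` to 1 (white), all others to 0."""
    return [[1 if v > level else 0 for v in row] for row in gray]


def connected_components(binary):
    """Group white pixels into 8-connected components (lists of (row, col))."""
    rows, cols = len(binary), len(binary[0])
    seen = [[False] * cols for _ in range(rows)]
    components = []
    for r in range(rows):
        for c in range(cols):
            if binary[r][c] != 1 or seen[r][c]:
                continue
            seen[r][c] = True
            queue, pixels = deque([(r, c)]), []
            while queue:
                y, x = queue.popleft()
                pixels.append((y, x))
                # Visit all 8 neighbours (the centre pixel is already seen).
                for dy in (-1, 0, 1):
                    for dx in (-1, 0, 1):
                        ny, nx = y + dy, x + dx
                        if (0 <= ny < rows and 0 <= nx < cols
                                and binary[ny][nx] == 1 and not seen[ny][nx]):
                            seen[ny][nx] = True
                            queue.append((ny, nx))
            components.append(pixels)
    return components


def select_candidates(gray, level=0.18, min_pixels=300, ratio_tolerance=0.2):
    """Return bounding boxes (x, y, width, height) of sign-like regions."""
    boxes = []
    for pixels in connected_components(binarize(gray, level)):
        if len(pixels) < min_pixels:  # remove small objects
            continue
        ys = [p[0] for p in pixels]
        xs = [p[1] for p in pixels]
        height = max(ys) - min(ys) + 1
        width = max(xs) - min(xs) + 1
        # Keep regions whose height-to-width ratio is close to 1.
        # ratio_tolerance is an assumed value, not taken from the paper.
        if abs(height / width - 1.0) <= ratio_tolerance:
            boxes.append((min(xs), min(ys), width, height))
    return boxes


# Hypothetical 60-by-80 grayscale frame with a dark background.
frame = [[0.05] * 80 for _ in range(60)]


def paint(top, left, height, width, value):
    for r in range(top, top + height):
        for c in range(left, left + width):
            frame[r][c] = value


paint(5, 5, 20, 20, 0.9)    # square blob, 400 pixels: kept
paint(40, 10, 10, 50, 0.8)  # elongated bar, 500 pixels: rejected by ratio
paint(5, 50, 5, 5, 0.7)     # speck, 25 pixels: rejected by size
candidates = select_candidates(frame)
```

In this sketch each surviving bounding box would then be used to crop the sign from the original RGB frame, so that no color information is lost to the intermediate grayscale and binary images.
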
The detected road sign image is first resized to 128-by-128 pixels. The RGB image is then converted into a grayscale image, and noise is removed using a two-dimensional 3-by-3 median filter. Next, the grayscale image is converted into a binary image using the average grayscale histogram level as the threshold. All these algorithms were tested using thousands of images. The hybrid color segmentation algorithm was eventually chosen for the proposed system because it showed the best performance for road sign detection. Finally, a robust custom feature extraction method is introduced for the first time in a road sign recognition system to extract multiple features from a single image.
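
As an illustration of the noise removal and binarization steps for the cropped sign, the following sketch applies a 3-by-3 median filter and then thresholds at the mean gray level. Two assumptions are made here: the "average grayscale histogram level" is interpreted as the mean pixel value, and border pixels use only the neighbors that exist (the prototype's border handling is not stated). The function names and the small test patch are hypothetical.

```python
from statistics import median


def median_filter_3x3(gray):
    """Replace each pixel by the median of its 3-by-3 neighbourhood.

    Border pixels use only the neighbours inside the image (an assumption).
    """
    rows, cols = len(gray), len(gray[0])
    filtered = []
    for r in range(rows):
        out_row = []
        for c in range(cols):
            window = [gray[y][x]
                      for y in range(max(0, r - 1), min(rows, r + 2))
                      for x in range(max(0, c - 1), min(cols, c + 2))]
            out_row.append(median(window))
        filtered.append(out_row)
    return filtered


def binarize_at_mean(gray):
    """Threshold at the mean gray level: brighter pixels become 1, others 0."""
    level = sum(map(sum, gray)) / (len(gray) * len(gray[0]))
    return [[1 if v > level else 0 for v in row] for row in gray]


# Hypothetical 6-by-6 patch: dark left half, bright right half,
# plus one isolated bright noise pixel in the dark region.
patch = [[0.1, 0.1, 0.1, 0.9, 0.9, 0.9] for _ in range(6)]
patch[2][1] = 1.0

smoothed = median_filter_3x3(patch)
binary = binarize_at_mean(smoothed)
```

The isolated bright pixel is removed by the median filter before thresholding, so it does not appear as a spurious white pixel in the binary image.
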

Training images were collected by acquiring appropriate frames from video sequences captured on different roads and highways in Malaysia in a real-time environment. For recognition, an artificial neural network is implemented using the Neural Network Pattern Recognition Tool in MATLAB. The standard network used for pattern recognition is a two-layer feedforward network with a sigmoid transfer function in the hidden layer and a softmax transfer function in the output layer. Real-time video frames go through this network. The system recognizes and interprets various standard and non-standard road signs using vision-only information, although signs that are completely obscured by other vehicles or trees may not be recognized. It achieved a 99.90% correct recognition rate in the experiments reported in Section 4.
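The forward pass of such a two-layer feedforward network can be sketched as follows. This is only an illustration in plain Python, not the MATLAB tool's implementation: it assumes a logistic sigmoid in the hidden layer, and the tiny layer sizes and weight values are hypothetical (the network in this paper takes a 278-element feature vector and produces one output per sign class).

```python
import math


def sigmoid(x):
    """Logistic sigmoid (assumed form of the hidden-layer transfer function)."""
    return 1.0 / (1.0 + math.exp(-x))


def softmax(values):
    """Turn raw output scores into class probabilities that sum to 1."""
    peak = max(values)  # subtract the maximum for numerical stability
    exps = [math.exp(v - peak) for v in values]
    total = sum(exps)
    return [e / total for e in exps]


def forward(features, w_hidden, b_hidden, w_out, b_out):
    """Two-layer feedforward pass: sigmoid hidden layer, softmax output layer."""
    hidden = [sigmoid(sum(w * x for w, x in zip(row, features)) + b)
              for row, b in zip(w_hidden, b_hidden)]
    scores = [sum(w * h for w, h in zip(row, hidden)) + b
              for row, b in zip(w_out, b_out)]
    return softmax(scores)


# Hypothetical toy network: 3 input features, 2 hidden units, 3 classes.
features = [1.0, 0.0, 1.0]
w_hidden = [[2.0, -1.0, 2.0], [-2.0, 1.0, -2.0]]
b_hidden = [0.0, 0.0]
w_out = [[3.0, -3.0], [-3.0, 3.0], [0.0, 0.0]]
b_out = [0.0, 0.0, 0.0]

probabilities = forward(features, w_hidden, b_hidden, w_out, b_out)
predicted_class = probabilities.index(max(probabilities))
```

The predicted class is the index of the largest softmax output, which is how a feature vector would be mapped to a road sign class.
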

In this work, a hybrid color segmentation algorithm with a robust custom feature extraction method is proposed to achieve robust, fast detection and recognition of road signs. This feature extraction method achieves robustness while improving computational efficiency. Further, to reduce the classification time, ANN-based classification is implemented; it was selected by comparing its classification performance with that of other classifiers. Experimental results show that this work achieves robust road sign recognition in comparison with other existing methods, and achieves high recognition accuracy with a low false positive rate (FPR). This low FPR can increase system stability and reliability for real-time applications. Multiple robustness tests indicate that the proposed system achieves promising performance even in adverse conditions.

2. Related Work

Various road sign detection and recognition methods and algorithms have been developed [19,20,21,22,23,24]. All researchers are implementing their methods to achieve a common goal [25]. Some researchers have addressed the detection part [26,27,28], some track the detected signs, and a few have described effective recognition [29,30]. According to Paclík et al. [31], an automated traffic sign detection system was introduced for the first time in Japan in 1984. In the field of road sign recognition, the most common approach has two main stages: detection followed by recognition. The detection stage identifies the proper region of interest (ROI), mostly using color segmentation algorithms, and is followed by some form of shape detection and recognition. In the recognition stage, detected candidates are either rejected or identified using a recognition method such as shape matching [32] or a classifier such as an ANN [15], a support vector machine (SVM) [7,33,34], clustering [35], or fuzzy logic [9].

Color information is mostly used for image segmentation, which makes up the majority of a system’s detection part [36,37,38,39,40,41,42,43]. A color matching method was introduced by De La Escalera et al. [44], who used it to look for patterns in a specific correspondence relationship to rectangular, triangular, and some circular signs. However, their method had difficulty with different road signs of the same shape. For sign recognition, a physics-based method [45] was used, but it needed to keep changes in the model parameters in memory to accommodate the natural variation in illumination [46]. A neural network [47] was used to recognize road and traffic signs for an intelligent driving assistance system, but this system showed some contradictory road and traffic sign pattern results with complex image backgrounds. A real-time road and traffic sign detection and recognition system was developed by Ruta et al. [48] to perform recognition from video using class-specific discriminative features. An automatic colored traffic sign detection system [49] was developed using optoelectronic correlation architectures. A real-time road and traffic sign recognition system based on color image segmentation was introduced by Deshmukh et al. [50]. Their segmentation technique was more difficult to implement because the system was developed in the C language, which is less well suited to this task than MATLAB or OpenCV. Therefore, it can be concluded that the main difficulties of color-based road sign detection and recognition systems are illumination changes, adverse weather conditions, and poor lighting conditions.

The Optical Character Recognition (OCR) [51] tool “Tesseract” was used to detect text in road and traffic signs. The results showed a higher accuracy compared to the HOG-SVM system. A novel system for the automated detection and recognition of text in road traffic signs was proposed by Greenhalgh et al. [52]. Scene structure was used to define search regions inside the image, within which traffic sign candidates were found. Maximally stable extremal regions (MSERs) and hue, saturation, and color thresholding were used to find a large number of candidates, which were then reduced by applying constraints based on temporal and structural information. The method was comparatively evaluated and achieved an overall F-measure of 0.87.

Visual sign information extraction and recognition remain challenging due to uncontrolled lighting conditions, occlusion, and variations in shape, size, and color [53]. Gil-Jimenez et al. [54] introduced a novel algorithm for shape classification based on the support vector machine (SVM) and the FFT of the signature of the blob. Khan et al. [32] investigated image segmentation and joint transform correlation (JTC) with the integration of shape analysis for a road sign recognition method. Their experimental results on real-life images showed a high success rate and a very low false hit rate. For traffic sign detection, a hybrid active contour (HAC) algorithm was proposed by Ai et al. [55]. It dealt with a location probability distribution function (PDF), a statistical color model (SCM), and global curve length, and it was further improved by a new geometry-preserving active polygon (GPAP) model [56]. Video-based detection and classification of traffic signs based on a color and shape benchmark was investigated by Balali et al. [57]. They also introduced a roadway inventory management system based on the detection and classification of traffic signs from Google Street View images [58]. Chen et al. [59] presented a new traffic sign detection method that combines the AdaBoost algorithm and support vector regression (SVR) to achieve fast and accurate detection.

From the related work, road sign colors represent the key information for drivers. Color is a significant source of information for the detection and recognition of road and traffic signs. As their colors are characteristic hallmarks of road and traffic signs, color can simplify this process. An important part of any color-based detection system is “color space conversion”, which converts the RGB image into other forms that simplify the detection process. That is, color space conversion separates the brightness from the color information by converting the RGB color space to another color space. This gives good color-based detection capability. Many color spaces appear in the related works, namely HSI [60], HSB, HSV [61], IHLS [62], the L*a*b* color system [40], YIQ [60], and YUV [63]. Saturation-based color systems are mostly used in the detection of road signs, but the other color systems are also used for this task.
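To illustrate how a color space conversion separates brightness from color information, the sketch below converts RGB values to HSV with Python's standard `colorsys` module. A brightly lit and a shaded red pixel end up with the same hue and saturation and differ only in value. The `looks_red` helper and its thresholds are hypothetical and are not taken from any of the cited methods.

```python
import colorsys


def looks_red(r, g, b, hue_window=0.05, min_saturation=0.5, min_value=0.2):
    """Rough red test in HSV space; all thresholds are illustrative assumptions.

    Inputs are RGB components in the range 0..1. Hue is circular, so red
    lies near both 0 and 1.
    """
    h, s, v = colorsys.rgb_to_hsv(r, g, b)
    near_red = h <= hue_window or h >= 1.0 - hue_window
    return near_red and s >= min_saturation and v >= min_value


bright_red = (0.9, 0.1, 0.1)    # well-lit sign pixel
shaded_red = (0.45, 0.05, 0.05)  # same paint at half the brightness
grey = (0.5, 0.5, 0.5)           # road surface or sky

h1, s1, v1 = colorsys.rgb_to_hsv(*bright_red)
h2, s2, v2 = colorsys.rgb_to_hsv(*shaded_red)
```

Because hue and saturation stay the same when the illumination is halved, a test on those two channels is less sensitive to lighting than a test on raw RGB values.
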

Various techniques are commonly used in the recognition of road signs, as presented above. Some of these techniques may be combined with others to produce a hybrid recognition system. In comparison to recognition based on color and shape, this approach may have several limitations. Gaps in color-based recognition, such as those caused by weather conditions and faded road signs, can be offset by shape-based recognition, which may give better performance. Among color-based approaches, color indexing is comparatively fast. Although color indexing can segment an image when the road sign is slightly inclined or partially occluded, its computation time increases sharply with a complex background. Therefore, color indexing is not ideal for real-time applications. Color thresholding, on the other hand, may not be robust when the weather conditions are bad or a sign is faded.

For a Malaysian road and traffic sign recognition system, Wali et al. [8] developed a color segmentation algorithm with SVMs, and their system performance was 95.71%. This is not sufficient for a completely stable system, so further research is needed to implement a stable version of their road and traffic sign recognition system.

After surveying different research works, the objective of the proposed system is to present a fast and robust real-time vision-based system for road sign recognition. For the first time in a road sign recognition system, a robust custom feature extraction method is introduced to extract multiple features from a single input image. In the proposed approach, to reduce the processing time, a hybrid segmentation algorithm with shape measurement-based detection and an ANN with custom feature extraction are used to recognize road signs while accounting for multiple variations in road signs. The hybrid segmentation algorithm makes detection and recognition more robust to changes in illumination.

4. Experimental Results

The experiment was carried out in a number of steps. A computer with an Intel Core i5 2.50 GHz CPU and 4 GB of RAM is used to run the program that recognizes road signs. The prototype is developed in the MATLAB environment. The Image Processing Toolbox, Computer Vision Toolbox, and Neural Network Toolbox are used to implement this system.

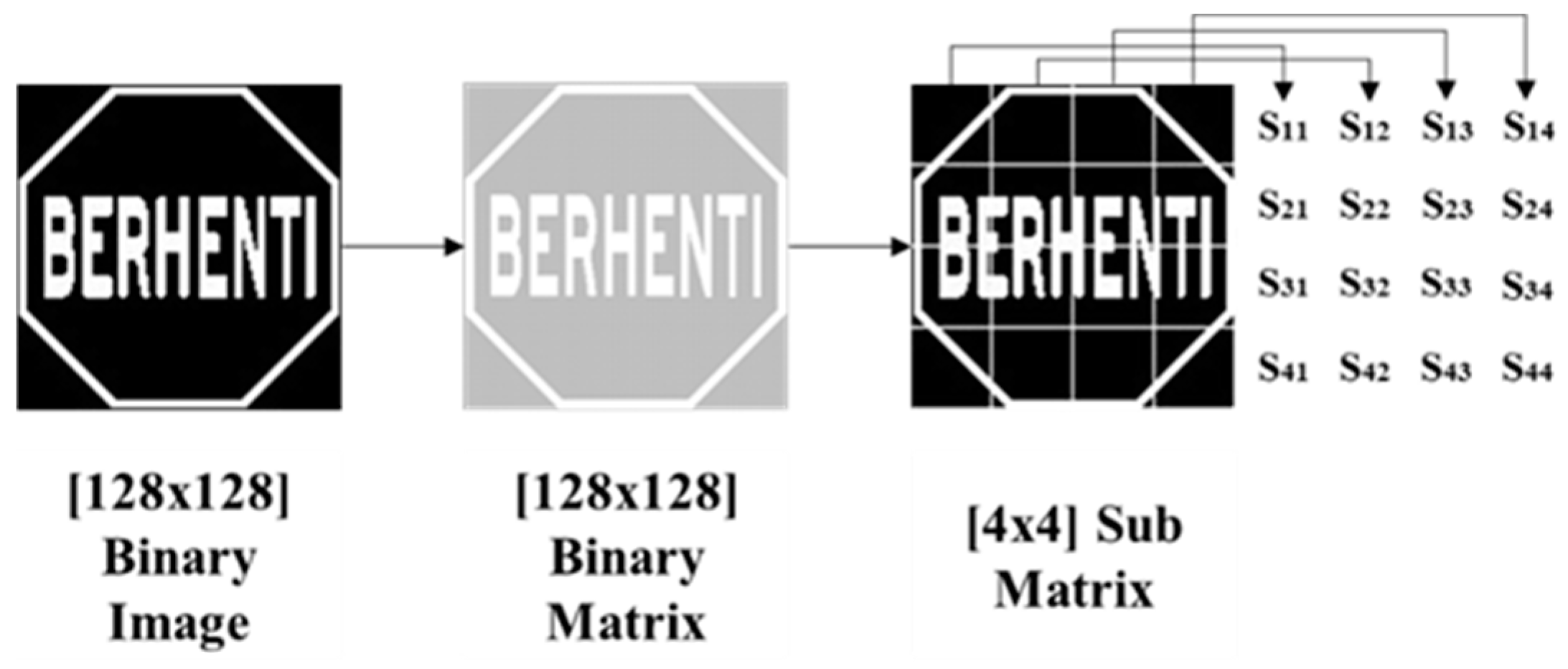

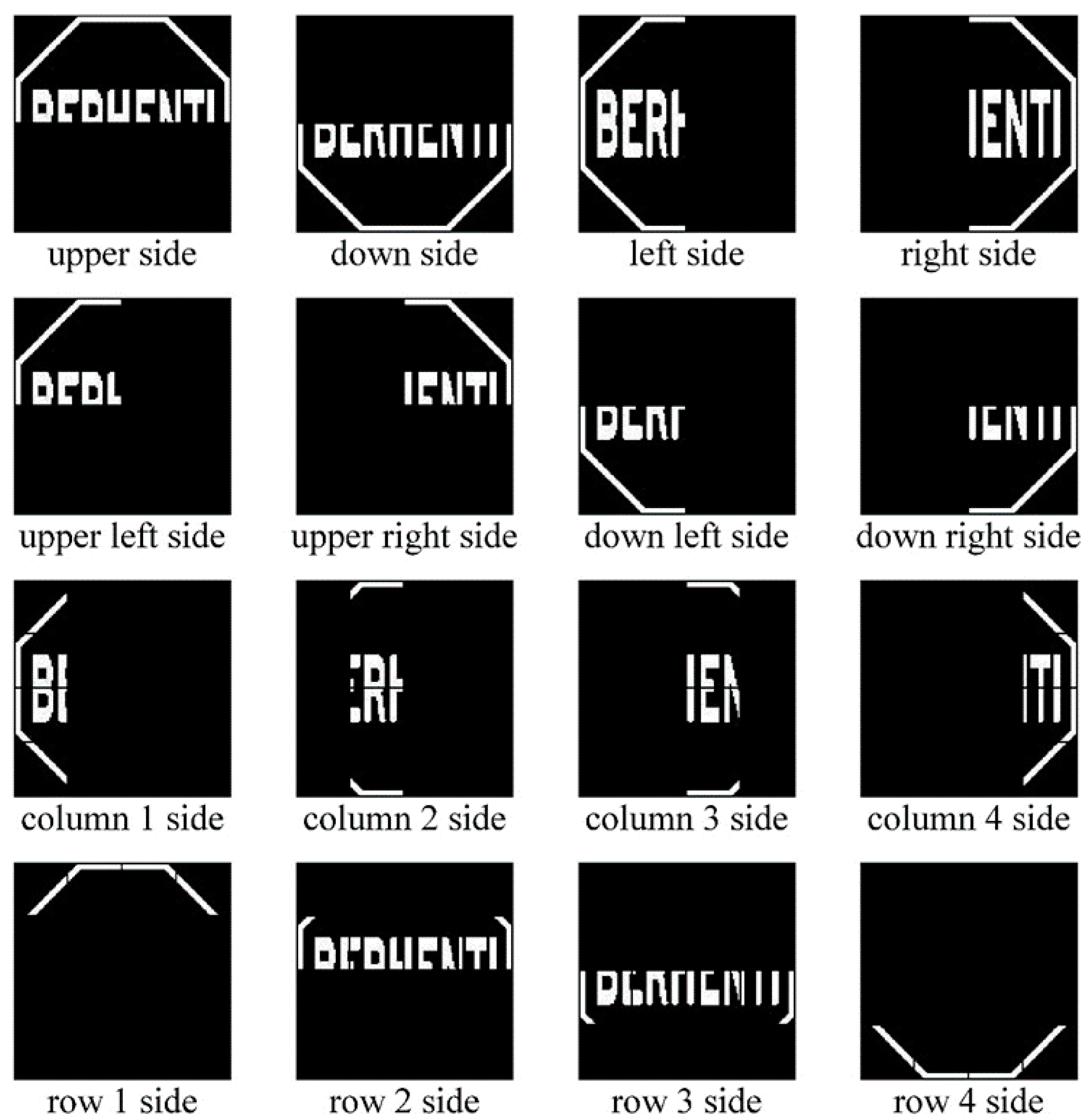

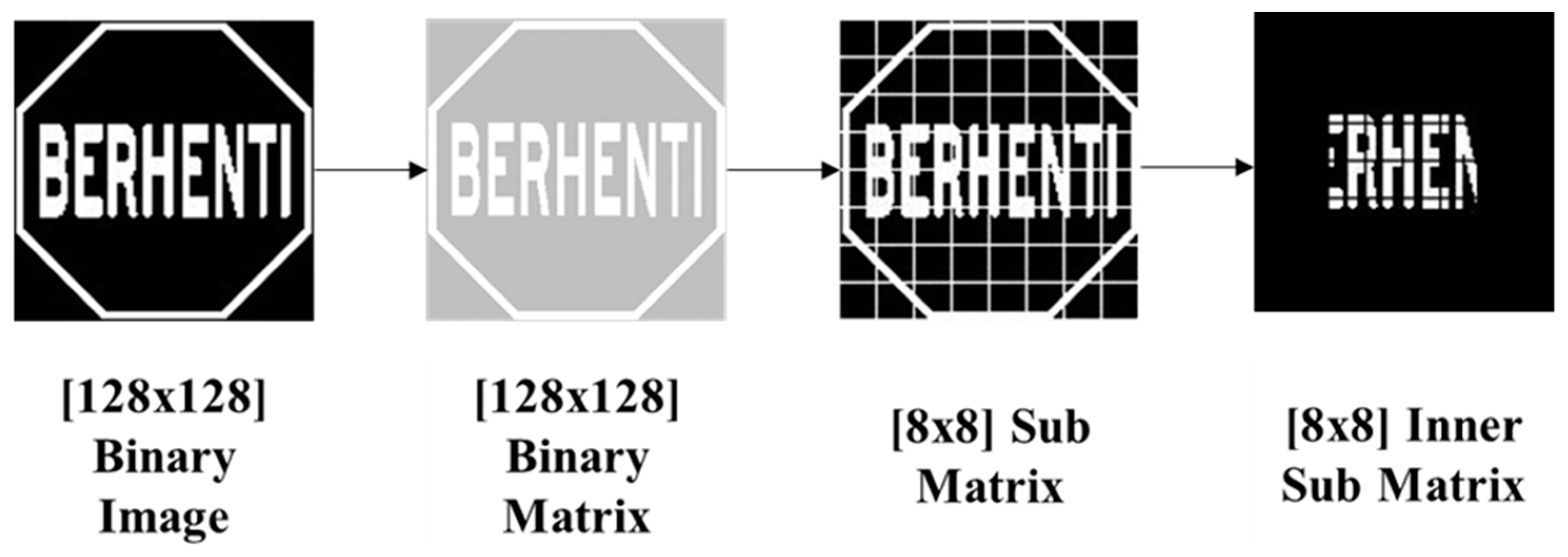

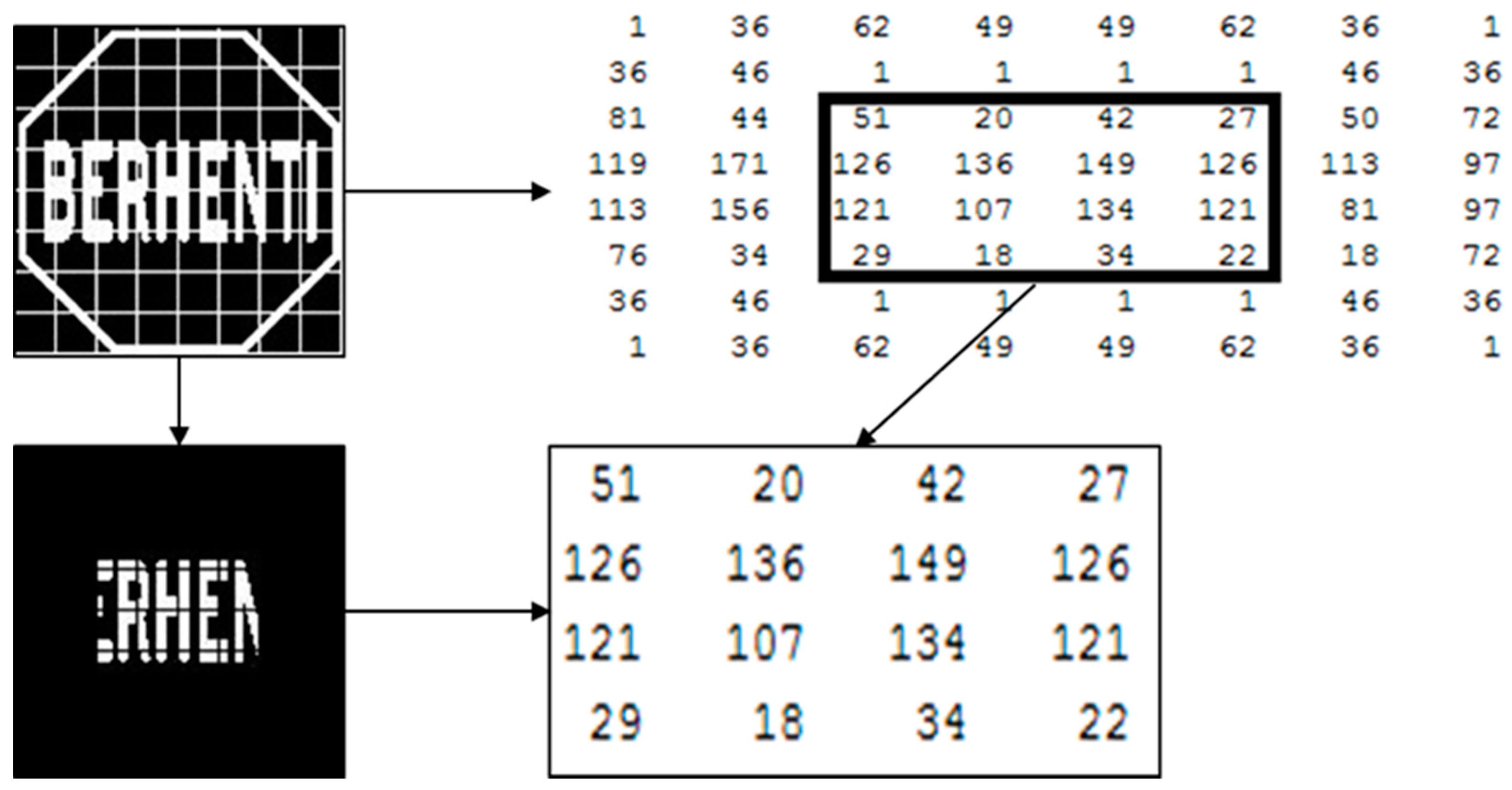

A digital camera was mounted on the dashboard of a moving vehicle to capture video from a real-time environment. This video is segmented frame by frame at 1 s intervals and passed through a hybrid color segmentation algorithm to identify whether road sign candidates are available. This hybrid color segmentation algorithm comprises RGB histogram equalization, RGB color segmentation, modified grayscale segmentation, binary image segmentation, and a shape matching algorithm. It determines the exact position and properties of the target road sign. Then, according to that position and those properties, the target road sign is extracted. At this point, no valuable information is lost because the target road sign is extracted from the original image frame. The extracted image is converted into a grayscale image and normalized to 128-by-128 pixels. The normalized image is smoothed by a noise removal algorithm and converted into a binary image. This candidate image passes through the feature extraction process to extract a 278-element feature vector. This feature vector is used to train the artificial neural network for recognition of the road sign.

4.1. Training, Testing, and Validation Data

To get an efficient response from the network, it is necessary to have a reasonable number of training samples. For this proposed system, a set of 100 samples is used for each class of road sign, giving 1000 samples for the 10 classes of road sign. The extracted 1000-by-278 feature matrix is used as the ANN input data set for training, testing, and validating the network.

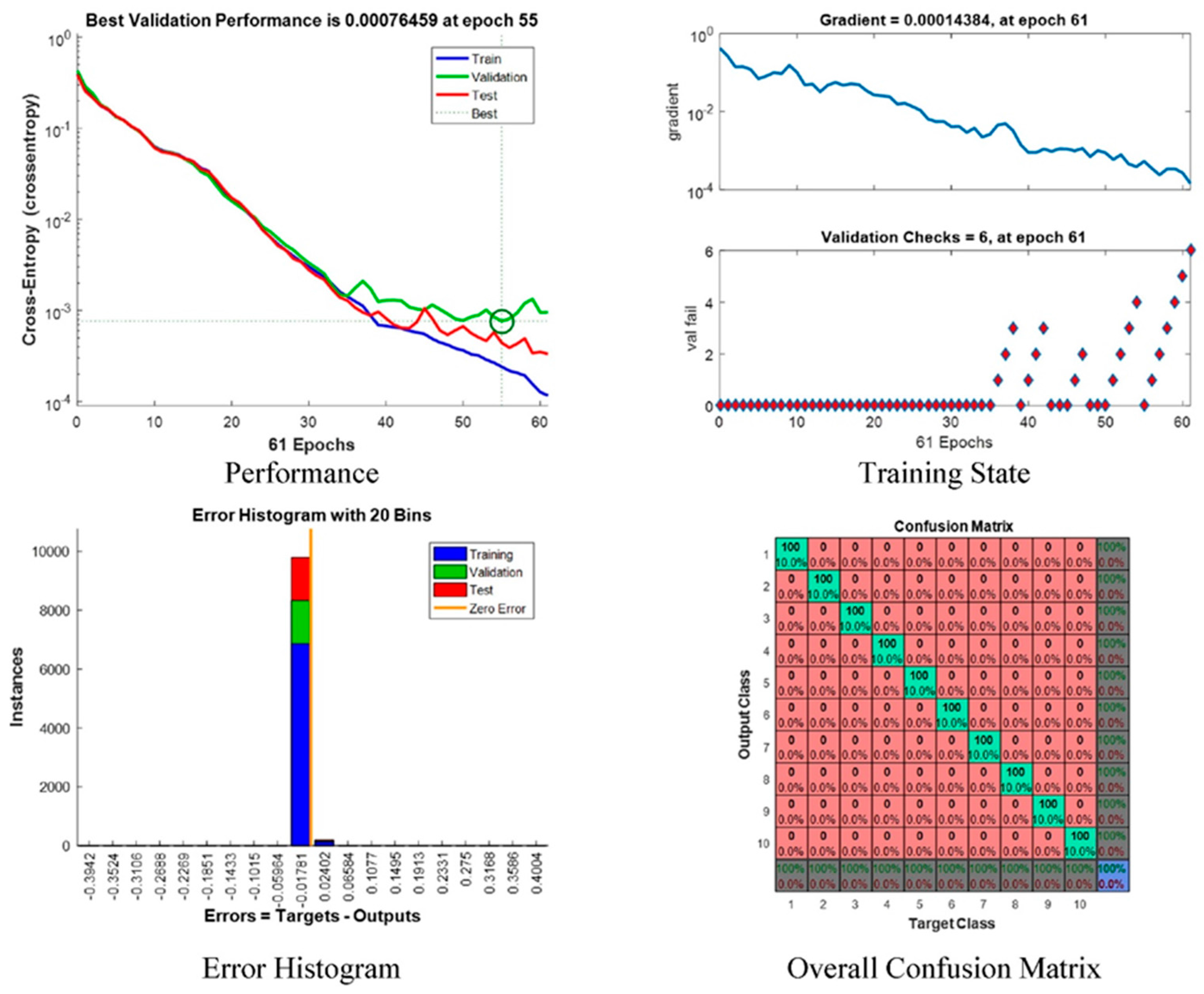

Figure 11 shows the neural network’s performance, training state, error histogram, and overall confusion matrix. In the performance plot, the cross-entropy error is at its maximum at the beginning of training. For this proposed system, the best validation performance is at epoch 55, where the cross-entropy error is very close to zero. In the training state plot, the validation check count reaches its maximum of 6 at epoch 61, at which point the neural network halts the training process and keeps the best-performing network. The error histogram plot shows that the error of this proposed system is very close to zero. The overall confusion matrix combines three confusion matrices: the training confusion matrix, the validation confusion matrix, and the testing confusion matrix. This overall confusion matrix plot shows 100% correct classification for this proposed system.
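The relationship between the best epoch (55) and the stopping epoch (61) follows from validation-based early stopping with a limit of 6 validation checks. The sketch below illustrates that rule under stated assumptions: the validation error curve is synthetic (not the paper's data), and the function and parameter names are this sketch's own.

```python
def early_stopping(validation_errors, max_fail=6):
    """Return (best_epoch, stop_epoch) for validation-based early stopping.

    Training stops once the validation error has failed to improve on its
    best value for `max_fail` consecutive epochs. Epochs are numbered from 0.
    If the limit is never reached, stop_epoch is the last epoch.
    """
    best_error = float("inf")
    best_epoch = 0
    fails = 0
    for epoch, error in enumerate(validation_errors):
        if error < best_error:
            best_error, best_epoch, fails = error, epoch, 0
        else:
            fails += 1
            if fails >= max_fail:
                return best_epoch, epoch
    return best_epoch, len(validation_errors) - 1


# Synthetic validation curve: improves up to epoch 55, then slowly worsens.
improving = [1.0 / (epoch + 1) for epoch in range(56)]
worsening = [improving[-1] + 0.001 * k for k in range(1, 21)]
best_epoch, stop_epoch = early_stopping(improving + worsening)
```

With this synthetic curve the rule reports the best epoch as 55 and halts at epoch 61, six epochs after the last improvement, which mirrors the behavior described for the training state plot.
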

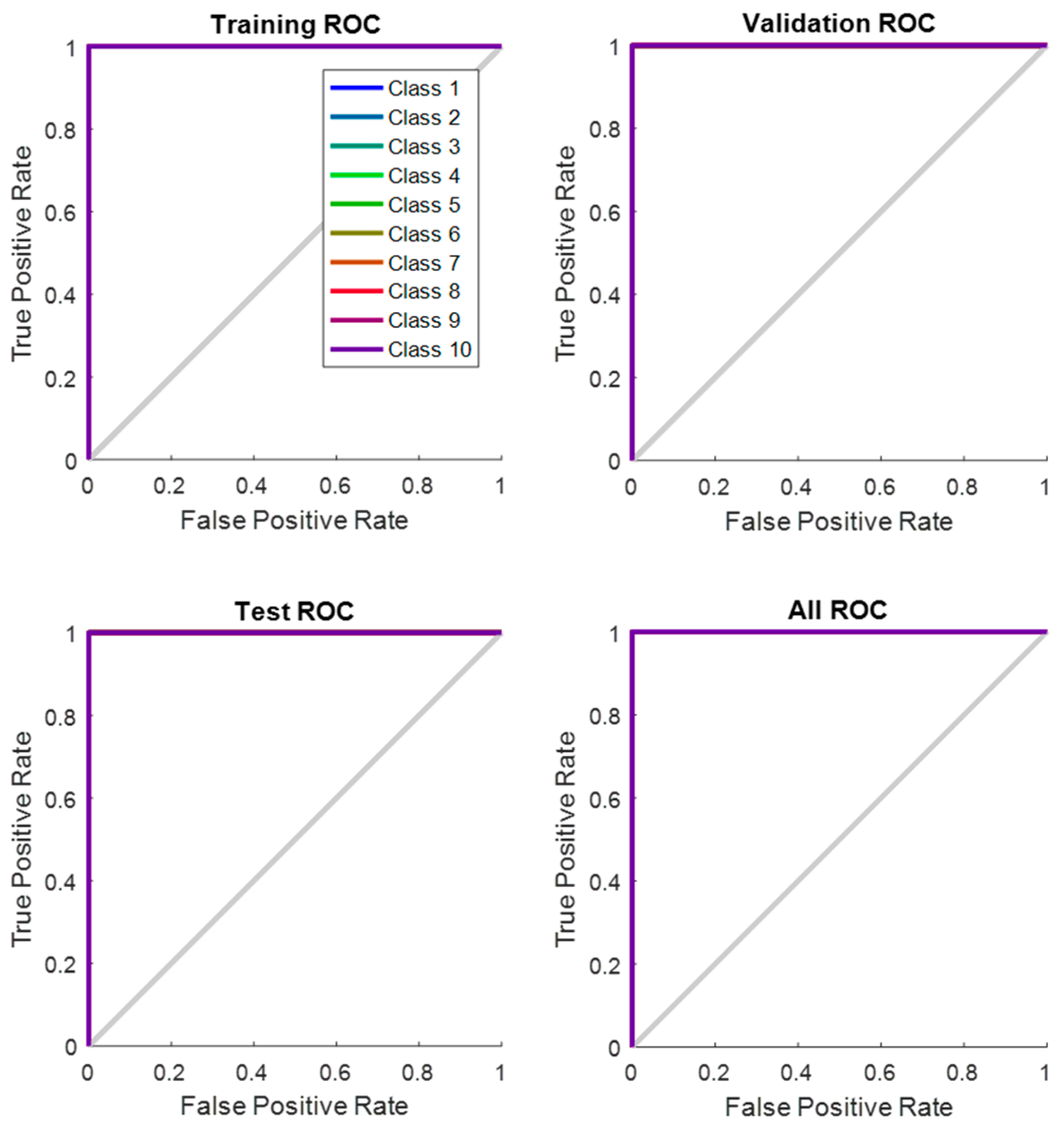

The receiver operating characteristic (ROC) curve of the network, which plots the true positive rate versus the false positive rate at various threshold settings, is shown in Figure 12. The area under the curve (AUC) reaches its maximum value of 1 for this proposed system. At the conclusion of training, testing, and validation, the network correctly classifies 100% of the samples from the 10 classes of road sign.
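For readers unfamiliar with how an ROC curve and its AUC are obtained, the following sketch computes both for a single class treated as positive against all others. It is a generic illustration in plain Python, not the MATLAB plotting routine; the scores and labels are hypothetical.

```python
def roc_points(scores, labels):
    """Return ROC points (false positive rate, true positive rate).

    `scores` are classifier outputs for the positive class and `labels`
    are 1 for positive samples and 0 for negative samples. The threshold
    is swept from high to low; tied scores produce a single point.
    """
    positives = sum(labels)
    negatives = len(labels) - positives
    ranked = sorted(zip(scores, labels), key=lambda pair: -pair[0])
    points = [(0.0, 0.0)]
    tp = fp = 0
    for i, (score, label) in enumerate(ranked):
        if label == 1:
            tp += 1
        else:
            fp += 1
        last_of_tie = i + 1 == len(ranked) or ranked[i + 1][0] != score
        if last_of_tie:
            points.append((fp / negatives, tp / positives))
    return points


def area_under_curve(points):
    """Trapezoidal area under a curve given as (x, y) points sorted by x."""
    area = 0.0
    for (x0, y0), (x1, y1) in zip(points, points[1:]):
        area += (x1 - x0) * (y0 + y1) / 2.0
    return area


# Hypothetical scores: one negative sample outranks one positive sample.
labels = [1, 1, 0, 1, 0, 0]
scores = [0.9, 0.8, 0.7, 0.6, 0.3, 0.1]
auc = area_under_curve(roc_points(scores, labels))

# Perfectly separated scores give the maximum AUC of 1.
perfect_auc = area_under_curve(roc_points([0.9, 0.8, 0.2, 0.1], [1, 1, 0, 0]))
```

An AUC of 1 means every positive sample is scored above every negative sample, which is the situation reported for all 10 classes here.
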

4.2. Experiment with Malaysian Traffic Sign Database

Traffic signs are set up along the roadside to instruct drivers to obey traffic regulations. Some traffic signs are used to indicate a potential danger. There are two different groups of traffic signs in Malaysia: ideogram-based and text-based signs. Ideogram-based traffic signs use simple ideographs to express their meaning, while text-based traffic signs contain text with other symbols such as arrows.

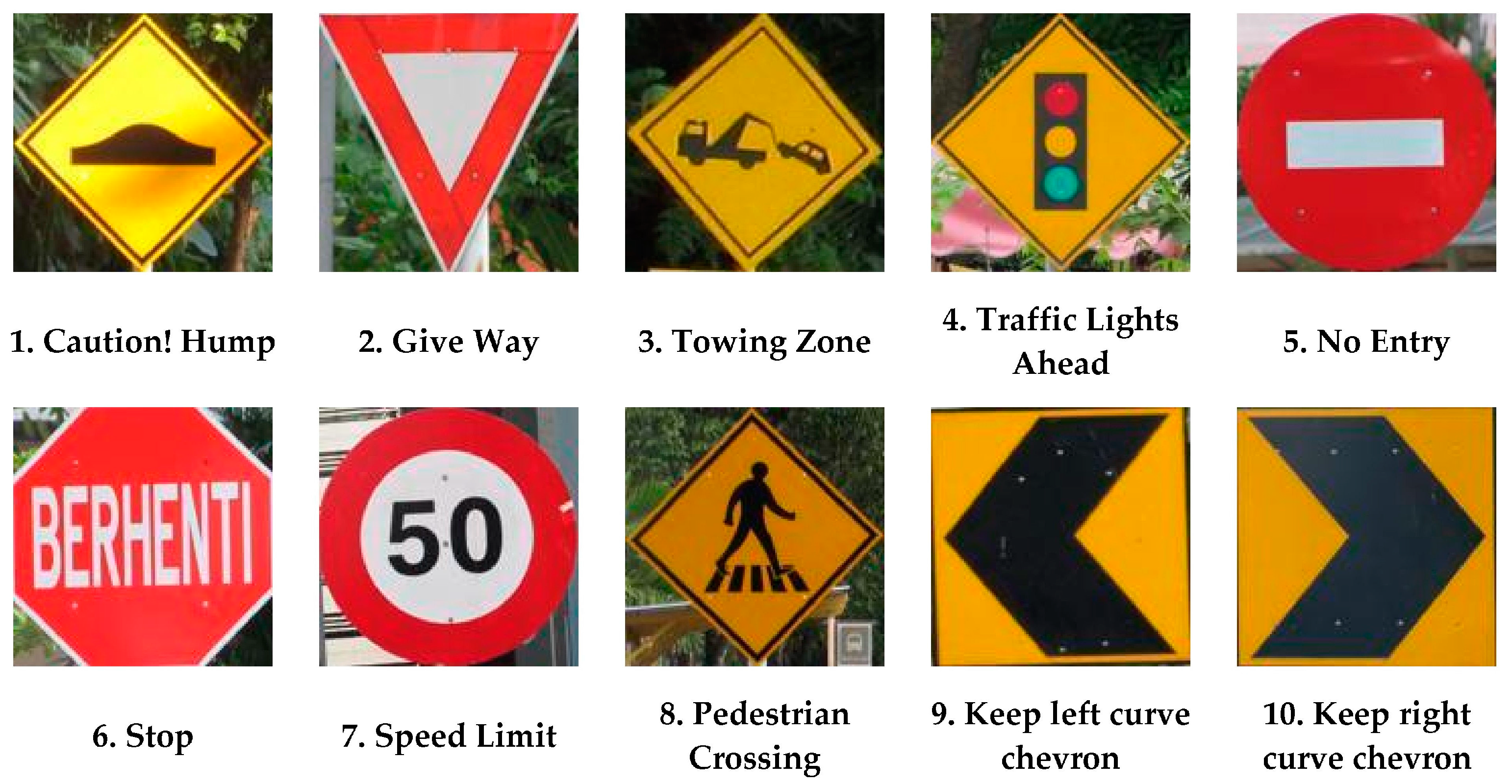

The Malaysian traffic sign database [64,65] consists of 100 classes of traffic signs used in Malaysia. Some examples are shown in Figure 13. The 10 classes of traffic signs extracted from this database for the proposed system are shown in Figure 14.

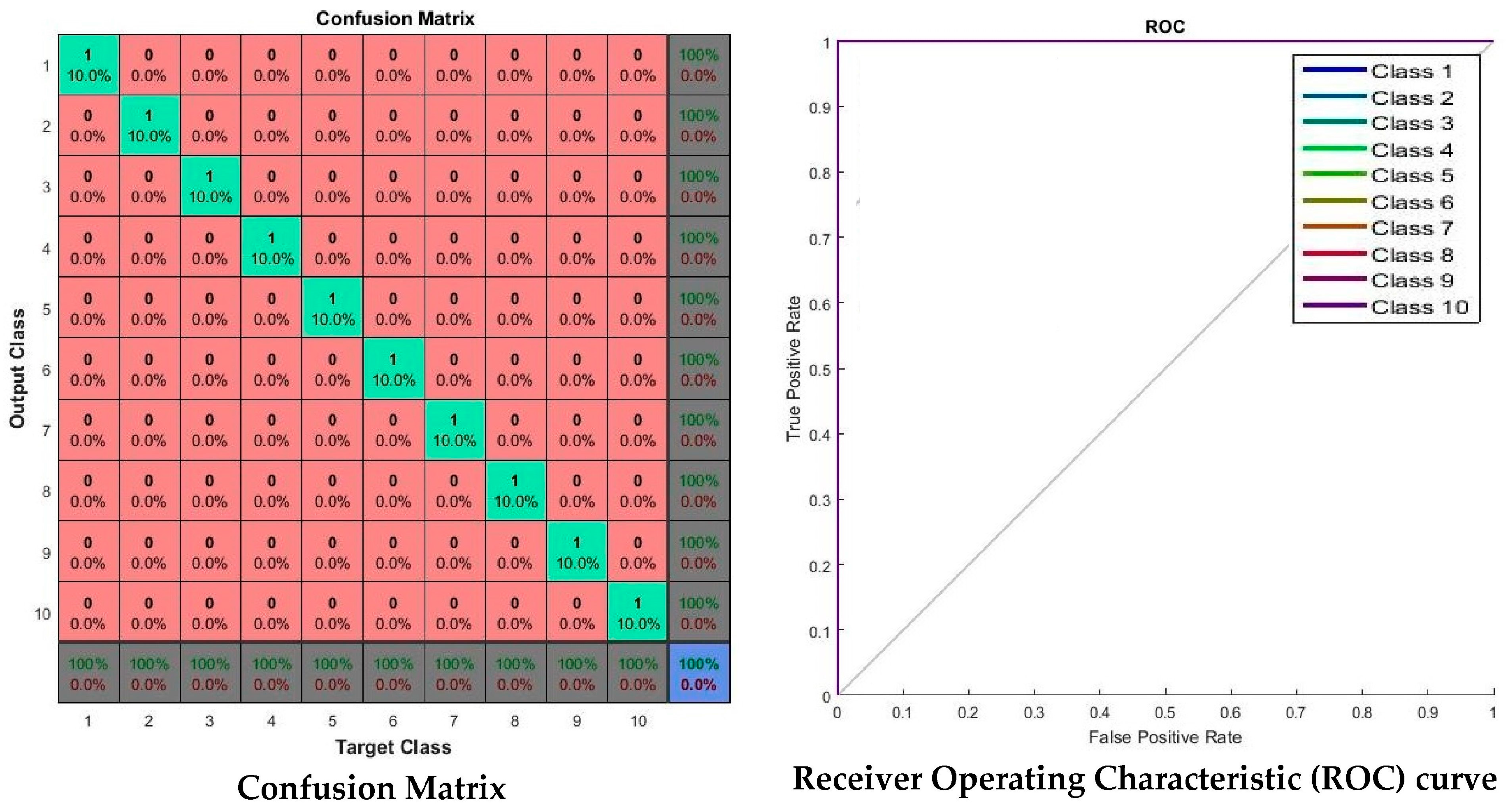

Figure 15 shows the experimental result with the Malaysian traffic sign database: all 10 classes of road signs are correctly classified, with no misclassifications. The receiver operating characteristic (ROC) curve shows that all classes of road signs achieved the maximum area under the curve (AUC) for the Malaysian traffic sign database.

4.3. Experiment with LISA Dataset

The LISA dataset [66] contains a large number of United States (US) traffic signs. It covers 47 types of US traffic signs, with a total of 7855 annotations on 6610 frames. The sign image sizes vary from 6-by-6 to 167-by-168 pixels, and the full image frame sizes vary from 640-by-480 to 1024-by-522 pixels. To conduct the experiment with the LISA dataset, 20 classes of US traffic sign image samples are taken into consideration because they are also commonly used in Malaysia. The extracted sign types and the number of extracted signs are listed in Table 4.

A total of 1371 US traffic signs are used to evaluate the proposed methodology. The performance results are shown in Table 5.

Table 5 shows that training number 5 gives the best result because it has a lower error percentage (8.75273e−1) than the other four trainings. This training is therefore used for evaluating the performance, which is assessed using the confusion matrix and the receiver operating characteristic (ROC) curve. Figure 16 shows some examples of LISA traffic signs.

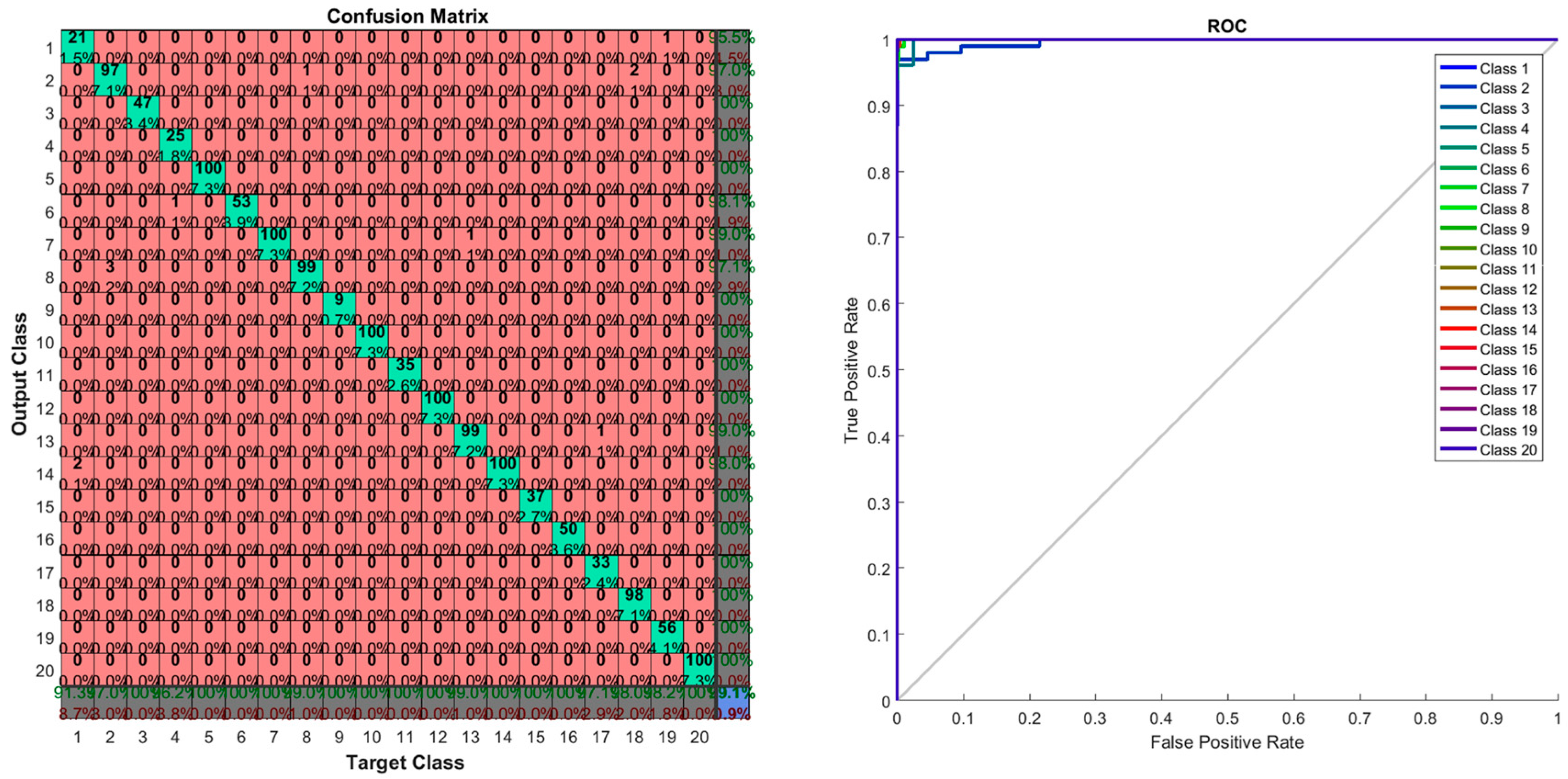

The confusion matrix and ROC curve are shown in Figure 17. In the confusion matrix, the red squares represent incorrect classifications and the green squares represent correct classifications according to the output class and target class. In the bottom right corner, the blue square shows the overall percentage of correct classification, which in this case is 99.10%. The ROC curve shows the area under the curve (AUC) for each of the 20 classes of testing samples. All testing classes achieved the maximum AUC except for a few whose samples are misclassified as other classes. In the top left corner of the ROC curve, it can be seen that a small number of classes do not achieve the maximum AUC. In conclusion, the proposed method gives the desired classification performance on the LISA dataset.
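As a reading aid for the confusion matrix, the sketch below shows how the overall percentage in the corner square and the per-class counts can be derived from the matrix entries. The layout assumed here is `matrix[target][output]`, and the 3-class matrix is hypothetical; it is not the LISA result from Figure 17.

```python
def overall_accuracy(matrix):
    """Fraction of samples on the diagonal (correctly classified)."""
    correct = sum(matrix[i][i] for i in range(len(matrix)))
    total = sum(sum(row) for row in matrix)
    return correct / total


def one_vs_rest_counts(matrix, k):
    """Return (TP, FP, TN, FN) for class k, treating all other classes as negative.

    Assumes matrix[t][p] counts samples of target class t assigned to output class p.
    """
    total = sum(sum(row) for row in matrix)
    tp = matrix[k][k]
    fn = sum(matrix[k]) - tp                  # class k samples sent elsewhere
    fp = sum(row[k] for row in matrix) - tp   # other samples sent to class k
    tn = total - tp - fn - fp
    return tp, fp, tn, fn


# Hypothetical 3-class confusion matrix with 50 samples per class.
confusion = [
    [50, 0, 0],
    [2, 47, 1],
    [0, 0, 50],
]

accuracy = overall_accuracy(confusion)
counts_class_1 = one_vs_rest_counts(confusion, 1)
```

Off-diagonal entries correspond to the red squares, diagonal entries to the green squares, and `accuracy` to the blue corner square.
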

4.4. Experiment with Convolutional Neural Network (CNN)

The proposed method has been tested with a CNN that has two hidden layers to classify traffic signs. First, the hidden layers are trained individually in an unsupervised fashion using autoencoders. Then a final softmax layer is trained and joined with the two hidden layers to form a deep network, which is trained one final time in a supervised fashion. A total of 1000 real-time traffic sign samples are used to obtain the experimental results with the CNN. Figure 18a shows the architecture of the CNN and Figure 18b shows the CNN confusion matrix. The number in the bottom right-hand square of the confusion matrix gives the overall accuracy, which is 94.40%.

4.5. Experiment with Real-Time

Real-time test images are collected by acquiring targeted frames from a video sequence recorded in a real-time environment as per Table 1. For this real-time experiment, 10 classes of sign are selected. Every class of sign contains 100 sample frames extracted from the video sequence, so 1000 sample frames in total are used to obtain the real-time experimental results. These selected frames are passed through the detection process, and the output image is a 128-by-128 binary image. This binary image is converted into a 128-by-128 binary matrix for the feature extraction process, and a 278-element feature vector is extracted from each binary image. This feature vector is the input of the ANN, which recognizes the class of the road sign.

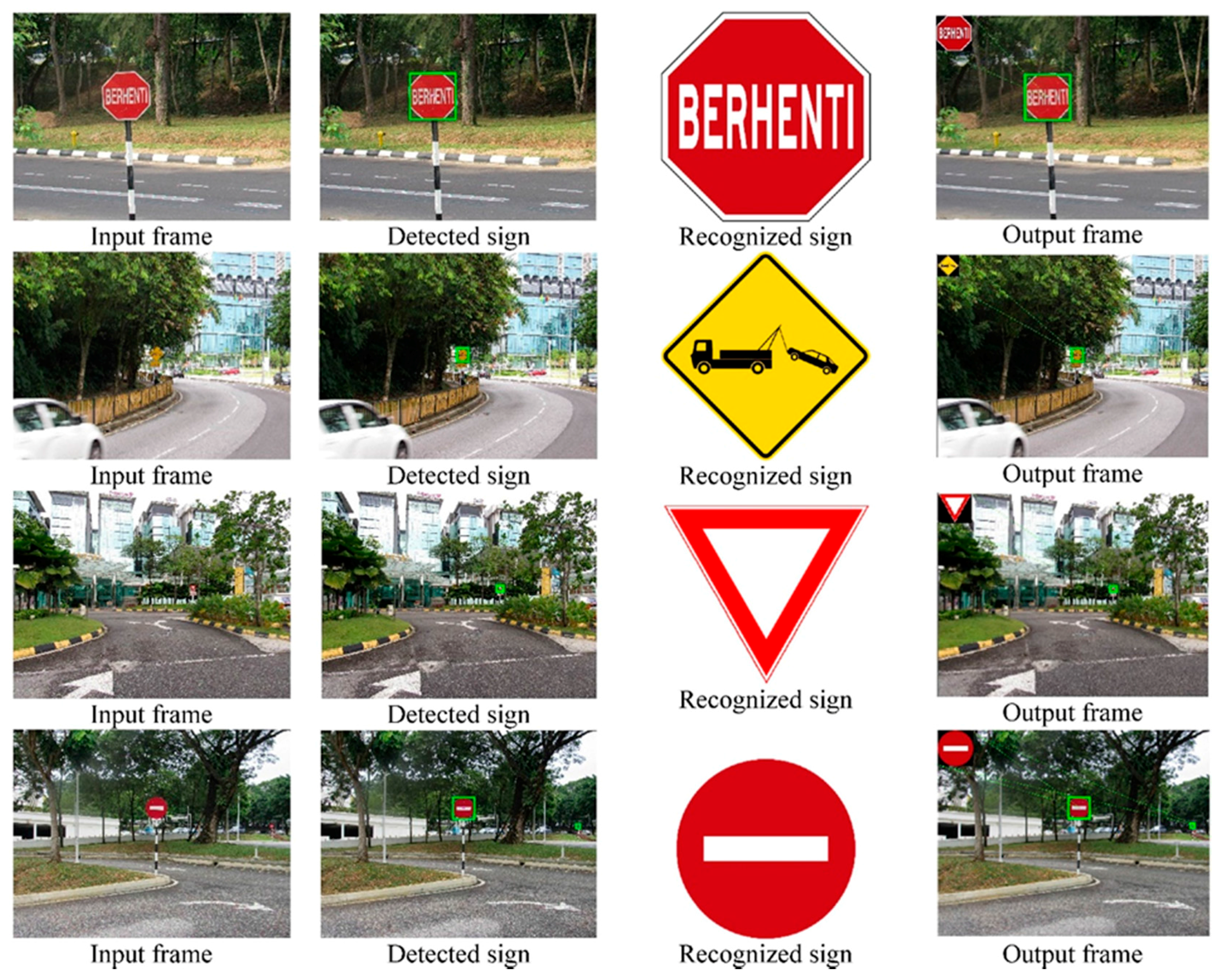

Figure 19 shows some examples of real-time input signs.

Figure 20 shows real-time experimental results for the “Stop”, “Towing zone”, “Yield”, and “No entry” signs. The first column shows the input frames, the second column the detected signs, the third column the recognized signs, and the fourth column the corresponding output frames.

The real-time experiment confusion matrix and receiver operating characteristic (ROC) curve are shown in Figure 21. In this confusion matrix, the high numbers of correct responses are shown in the green squares. The low numbers of incorrect responses are shown in the red squares. The lower right blue square shows the overall accuracy. A class 8 sign, “Pedestrian Crossing”, was misclassified as a class 4 sign, “Traffic Lights Ahead”. The remaining sign classes are correctly classified. The ROC curve shows that all classes of sign achieved the maximum area under the curve (AUC) except class 8. The single misclassification of class 8 occurred because of the numerous (more than 10) non-standard “Pedestrian Crossing” sign formats that exist on actual Malaysian roadsides.

From the confusion matrix of the real-time experiment data, the evaluation parameters are precision, sensitivity, specificity, F-measure, false positive rate (FPR), and accuracy rate (AR), which are based on the numbers of true positive (TP), false positive (FP), true negative (TN), and false negative (FN) values as indicated in Table 6. A true positive (TP) is a traffic sign that is correctly recognized as its own class, and a true negative (TN) is a traffic sign of another class that is correctly recognized as another class. A false positive (FP) is a traffic sign that is not recognized correctly. A false negative (FN) is a traffic sign of one class that is incorrectly recognized as another class.
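The evaluation parameters can be computed from the four counts as in the sketch below. It uses the standard textbook definitions, which are assumed (not confirmed from the text shown here) to match those given in Table 6, and the example counts are hypothetical, chosen to resemble a class in which one of 100 samples is missed among 1000 samples in total.

```python
def evaluation_parameters(tp, fp, tn, fn):
    """Standard binary evaluation measures computed from TP, FP, TN, FN."""
    precision = tp / (tp + fp)
    sensitivity = tp / (tp + fn)   # also called recall or true positive rate
    specificity = tn / (tn + fp)
    f_measure = 2 * precision * sensitivity / (precision + sensitivity)
    false_positive_rate = fp / (fp + tn)
    accuracy_rate = (tp + tn) / (tp + fp + tn + fn)
    return {
        "precision": precision,
        "sensitivity": sensitivity,
        "specificity": specificity,
        "f_measure": f_measure,
        "fpr": false_positive_rate,
        "ar": accuracy_rate,
    }


# Hypothetical counts for one class: 99 of 100 samples recognized,
# no samples of the other 900 assigned to this class.
results = evaluation_parameters(tp=99, fp=0, tn=900, fn=1)
```

Note that specificity and FPR always sum to 1, so a low FPR and a high specificity express the same property of the classifier.
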

Table 7 compares the proposed method with other existing methods based on the evaluation parameters.

Table 8 presents the evaluated performance of the proposed system against other methods based on neural networks (NN). The IDSIA team [71] used a committee of CNNs to achieve a 99.46% correct recognition rate, whereas the proposed system achieved a 99.90% correct recognition rate.

4.6. Classification Algorithm Performance

To evaluate the classification algorithm performance, the proposed input vector set is applied to 23 different classification algorithms, which are shown in Table 9. Among these 23 classification algorithms, model 1.23, the proposed classifier for this system, gives the best accuracy of 99.90%. Model 1.12, a Fine KNN classifier, gives the next-best accuracy of 95.0%. Models 1.18 and 1.22, the Boosted Trees and RUSBoosted Trees classifiers, respectively, give the worst accuracy of 25%.

4.7. Robustness Testing

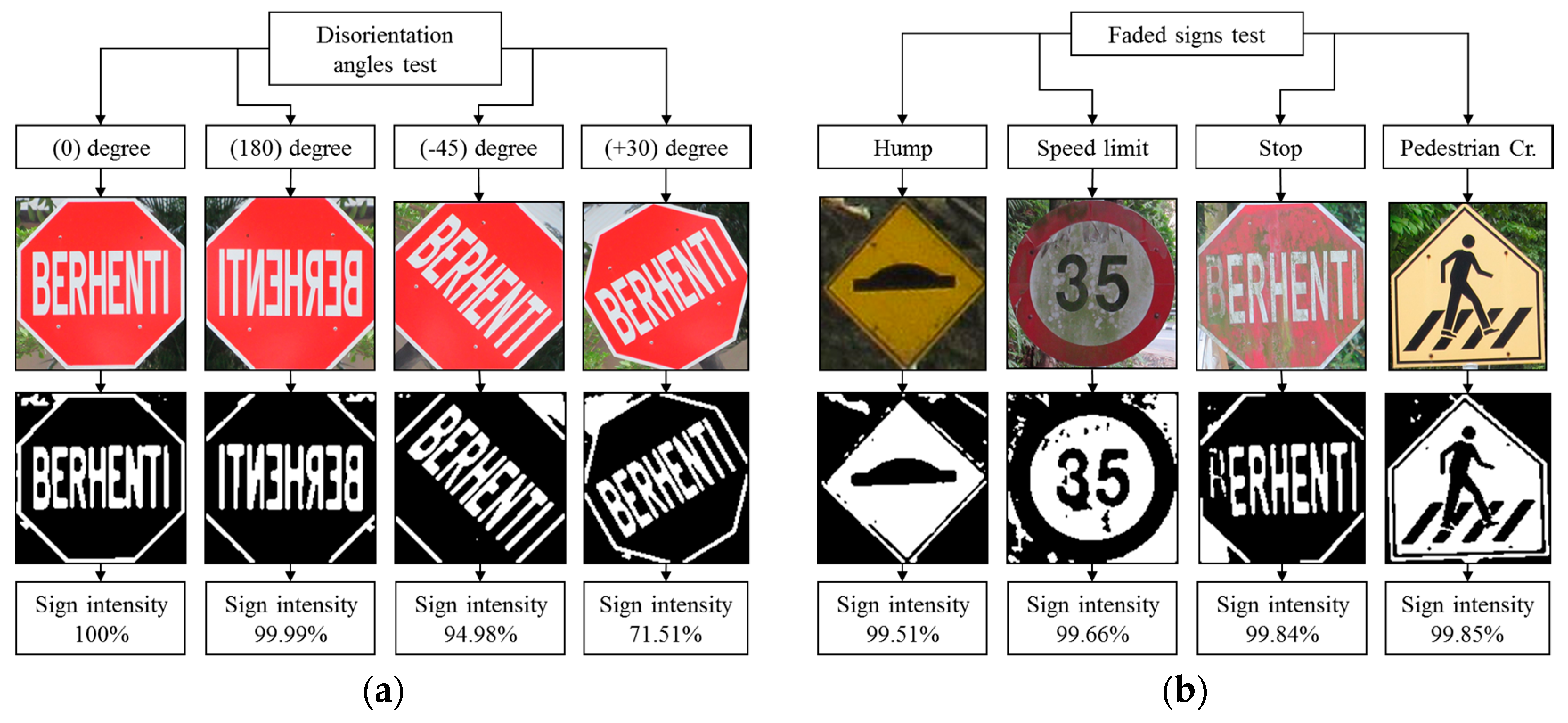

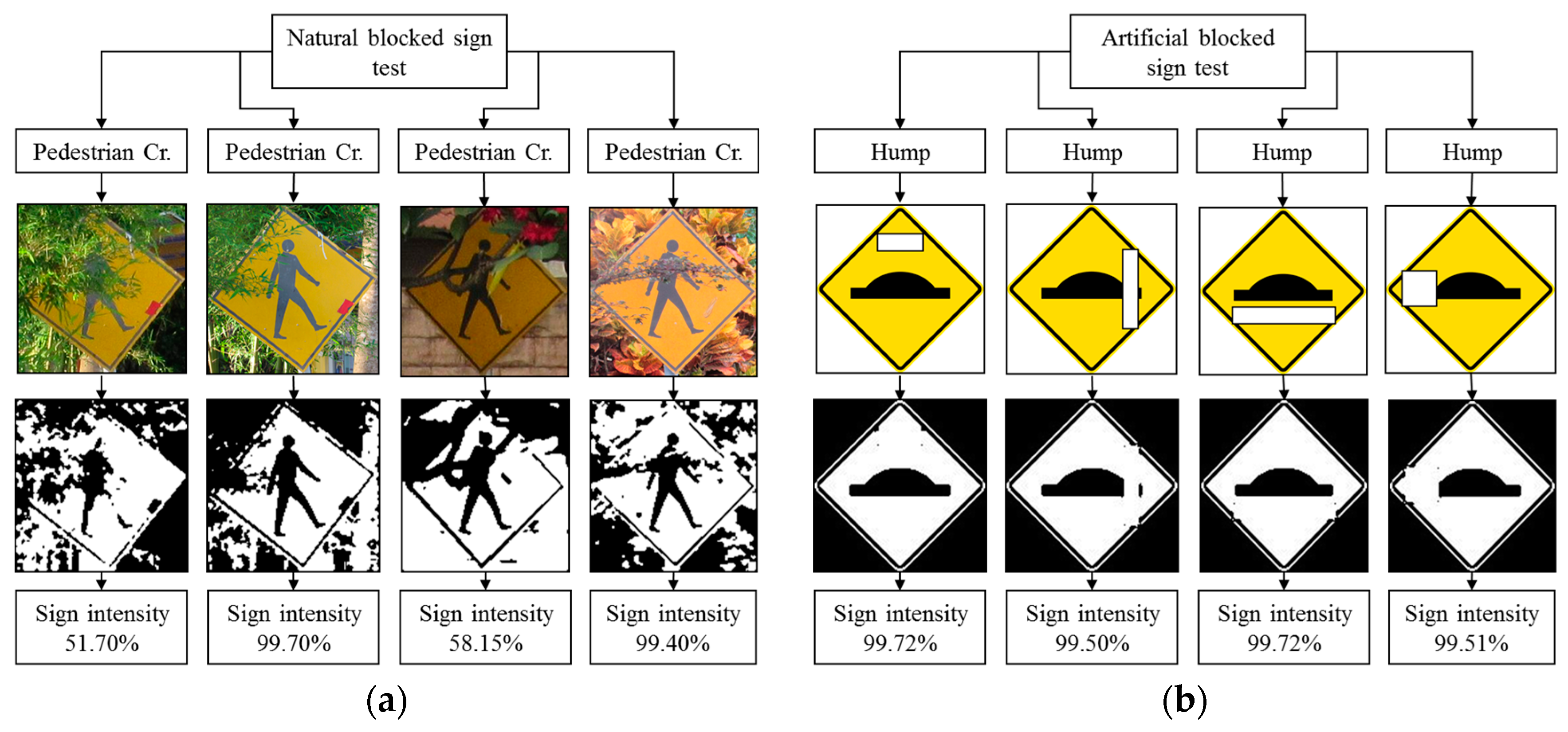

Robustness testing is a testing methodology used to detect the vulnerabilities of a component under unexpected inputs or in a stressful environment. For this proposed system, some of the robustness testing was carried out with natural images and some with synthetic images. Figure 22, Figure 23, Figure 24, Figure 25, and Figure 26 illustrate the robustness testing of the proposed system.

4.8. Summary of Experiment Results

As a summary of our experimental results, the proposed method has been tested with a Malaysian traffic sign database [64,65], a real-time Malaysian database (with CNN and with ANN), and the LISA dataset [66]. Confusion matrices and ROC curves are used to evaluate the classification performance. Different feature extraction methods and their results have also been discussed earlier.

Table 10 shows the summary of the experimental results.