A High Spatial Resolution Depth Sensing Method Based on Binocular Structured Light

Abstract

1. Introduction

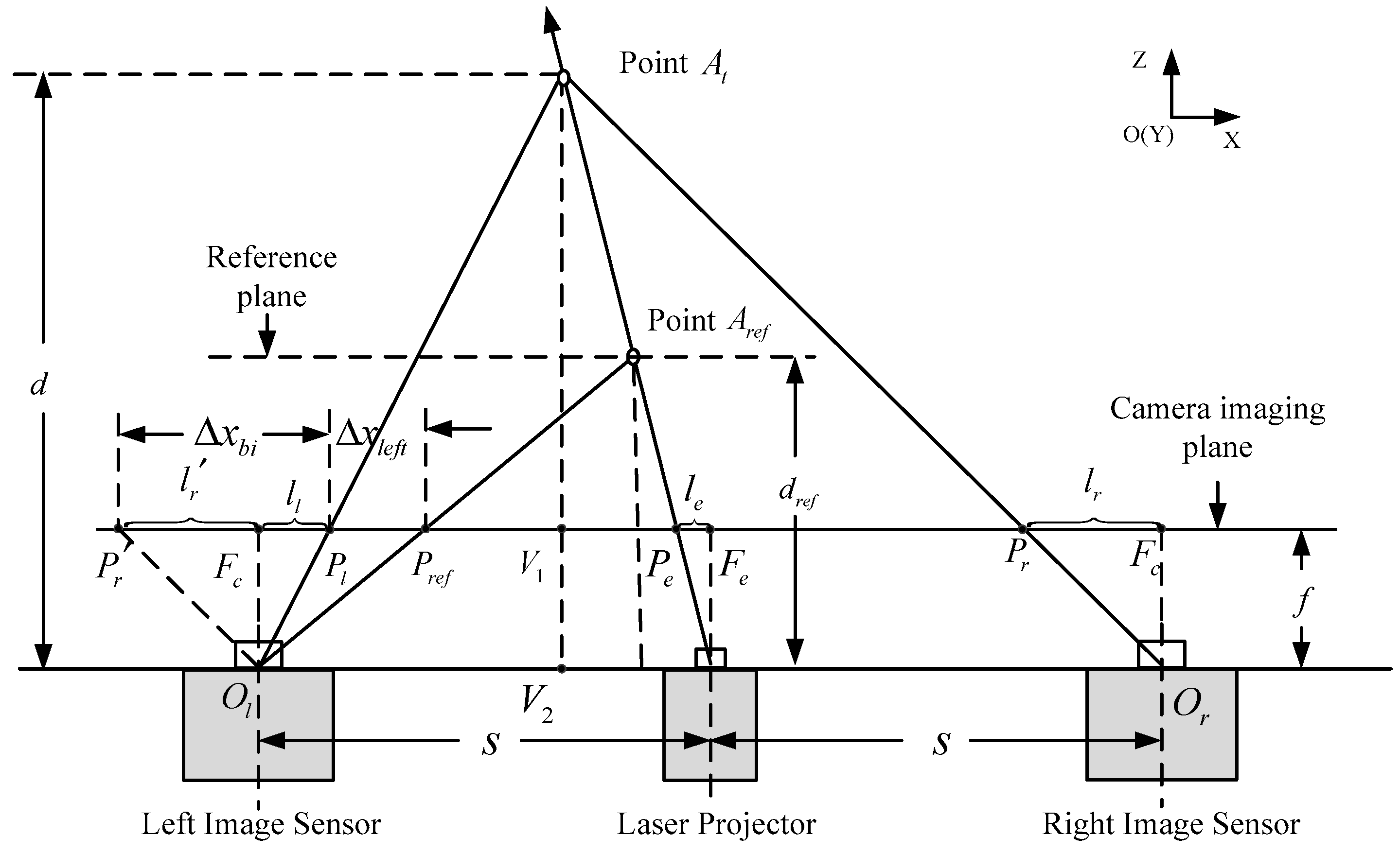

2. Related Ranging Principles

2.1. Triangulation Principle

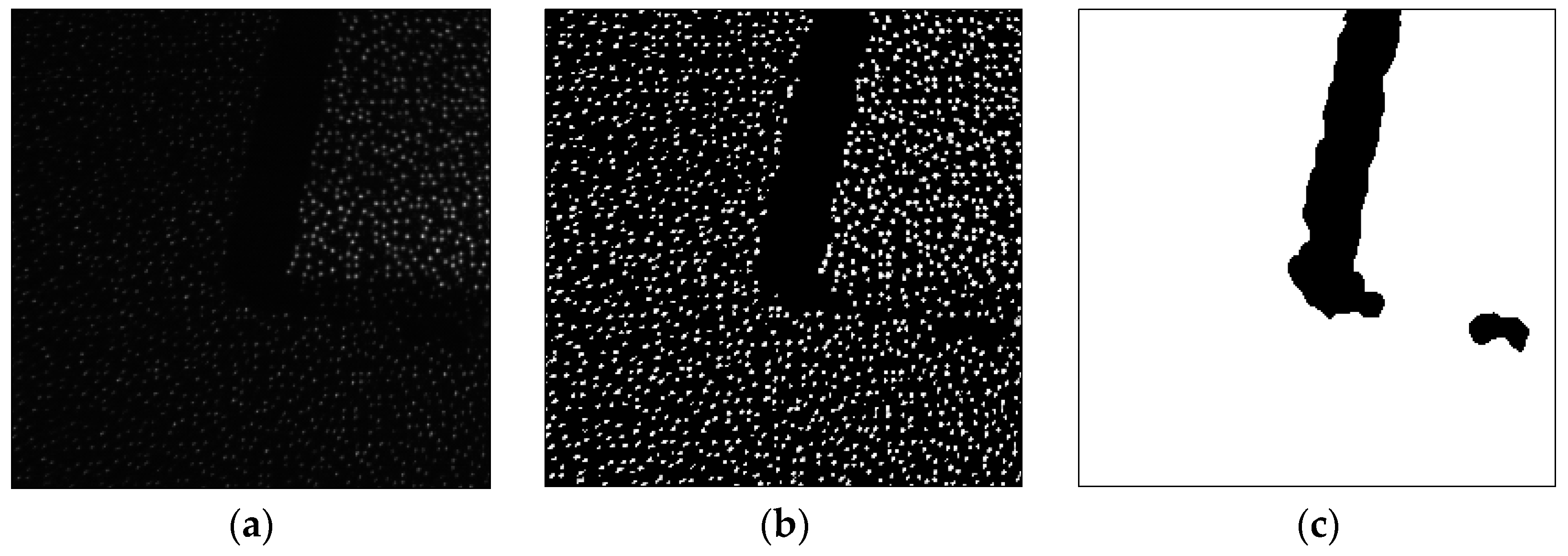

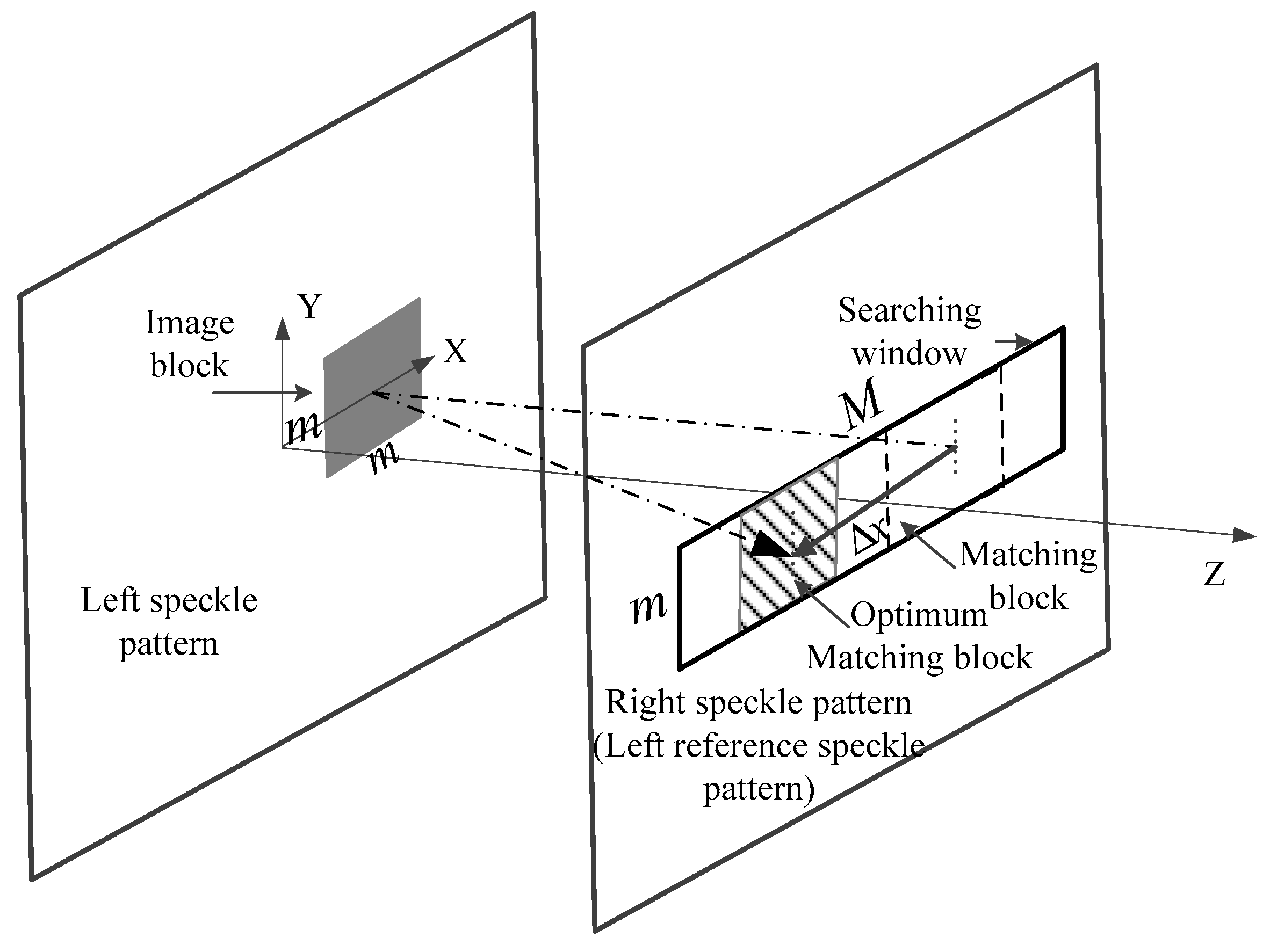

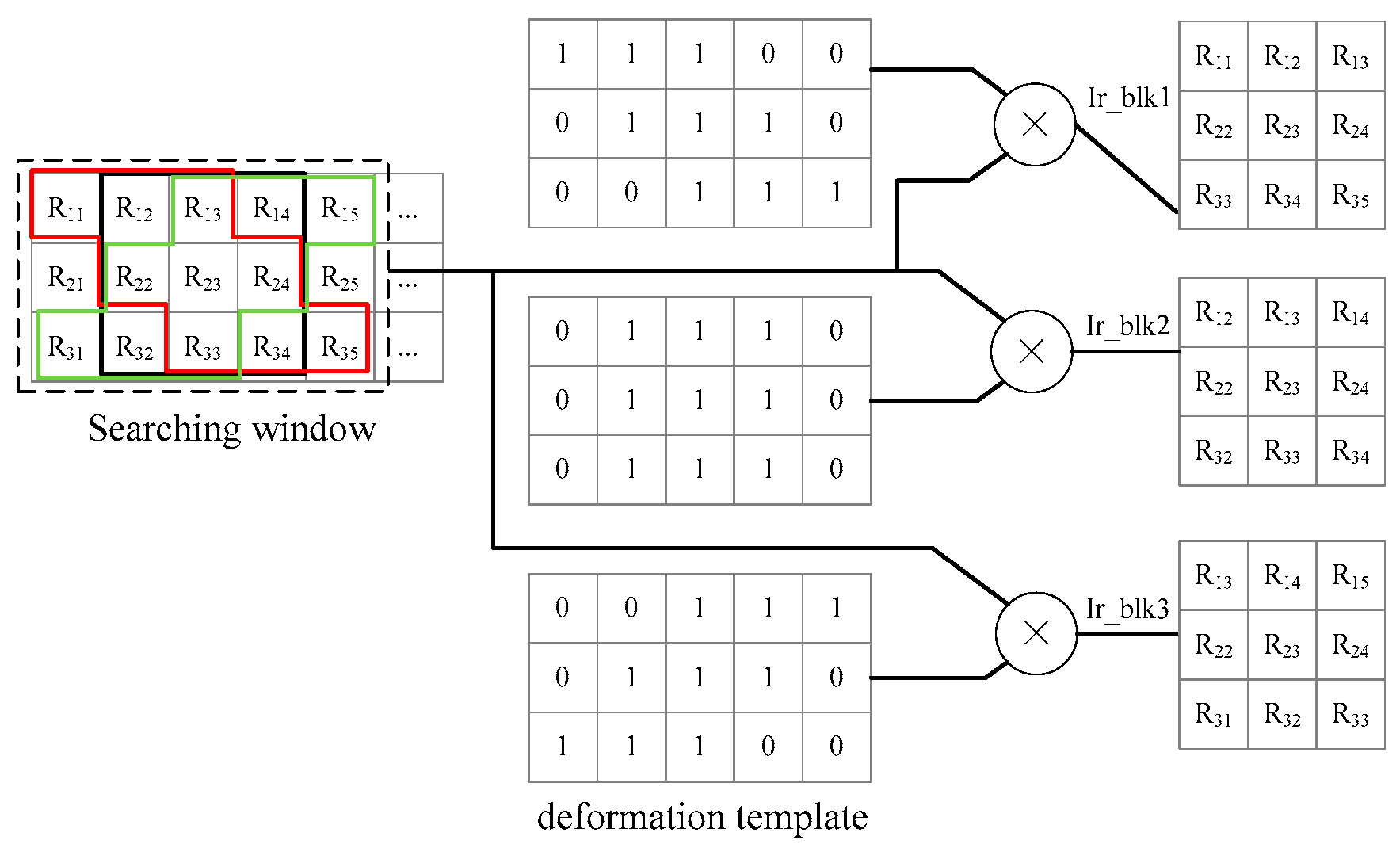

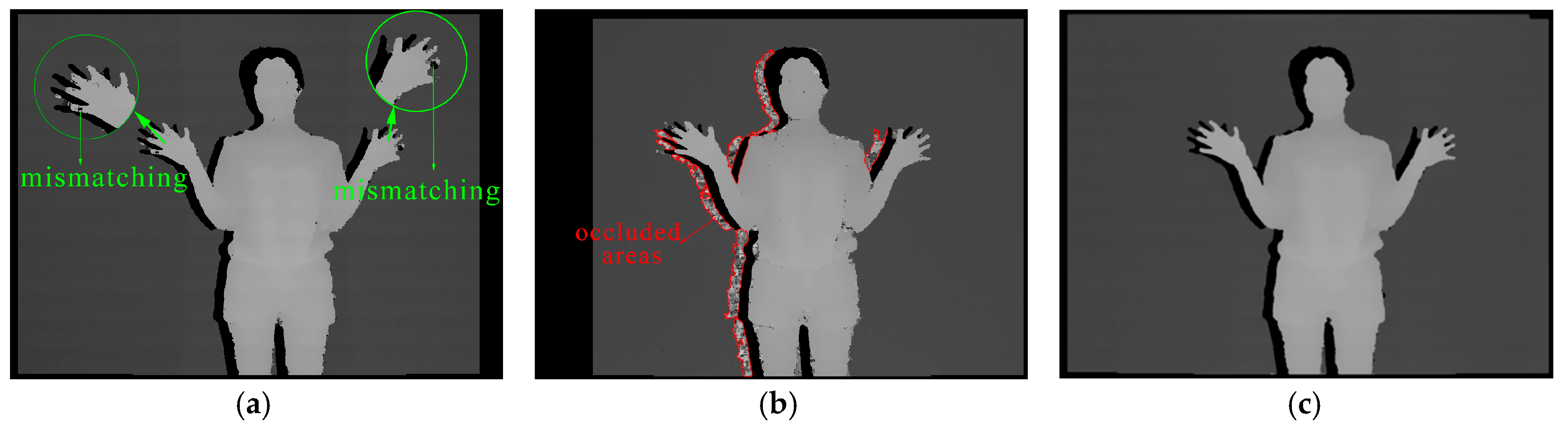
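The triangulation principle can be sketched as follows. This assumes an ideal, rectified pinhole pair with focal length f (in pixels) and baseline B (in metres). Under that assumption, depth follows Z = f·B/d from the disparity d, and a disparity step Δd changes the depth by roughly Z²/(f·B)·Δd. The helper names and the demo numbers are hypothetical; they are not the parameters of the authors' device.

```python
def depth_from_disparity(disparity_px: float, focal_px: float, baseline_m: float) -> float:
    """Triangulated depth Z = f * B / d for a rectified pinhole pair (hypothetical helper)."""
    if disparity_px <= 0:
        raise ValueError("disparity must be positive for a point in front of the cameras")
    return focal_px * baseline_m / disparity_px


def depth_step(depth_m: float, focal_px: float, baseline_m: float,
               disparity_step_px: float = 1.0) -> float:
    """First-order depth change for a disparity change: |dZ| ~ Z**2 / (f * B) * dd."""
    return depth_m ** 2 / (focal_px * baseline_m) * disparity_step_px


if __name__ == "__main__":
    f_px, b_m = 580.0, 0.075  # hypothetical focal length (pixels) and baseline (metres)
    for d in (20.0, 40.0, 80.0):
        z = depth_from_disparity(d, f_px, b_m)
        # Depth step for a hypothetical 1/8-pixel sub-pixel disparity granularity.
        step_mm = depth_step(z, f_px, b_m, 0.125) * 1000.0
        print(f"d = {d:5.1f} px -> Z = {z:.3f} m, dZ(1/8 px) = {step_mm:.1f} mm")
```

Because the depth step grows with Z², a fixed disparity precision gives coarser depth resolution at longer range.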

2.2. Digital Image Correlation

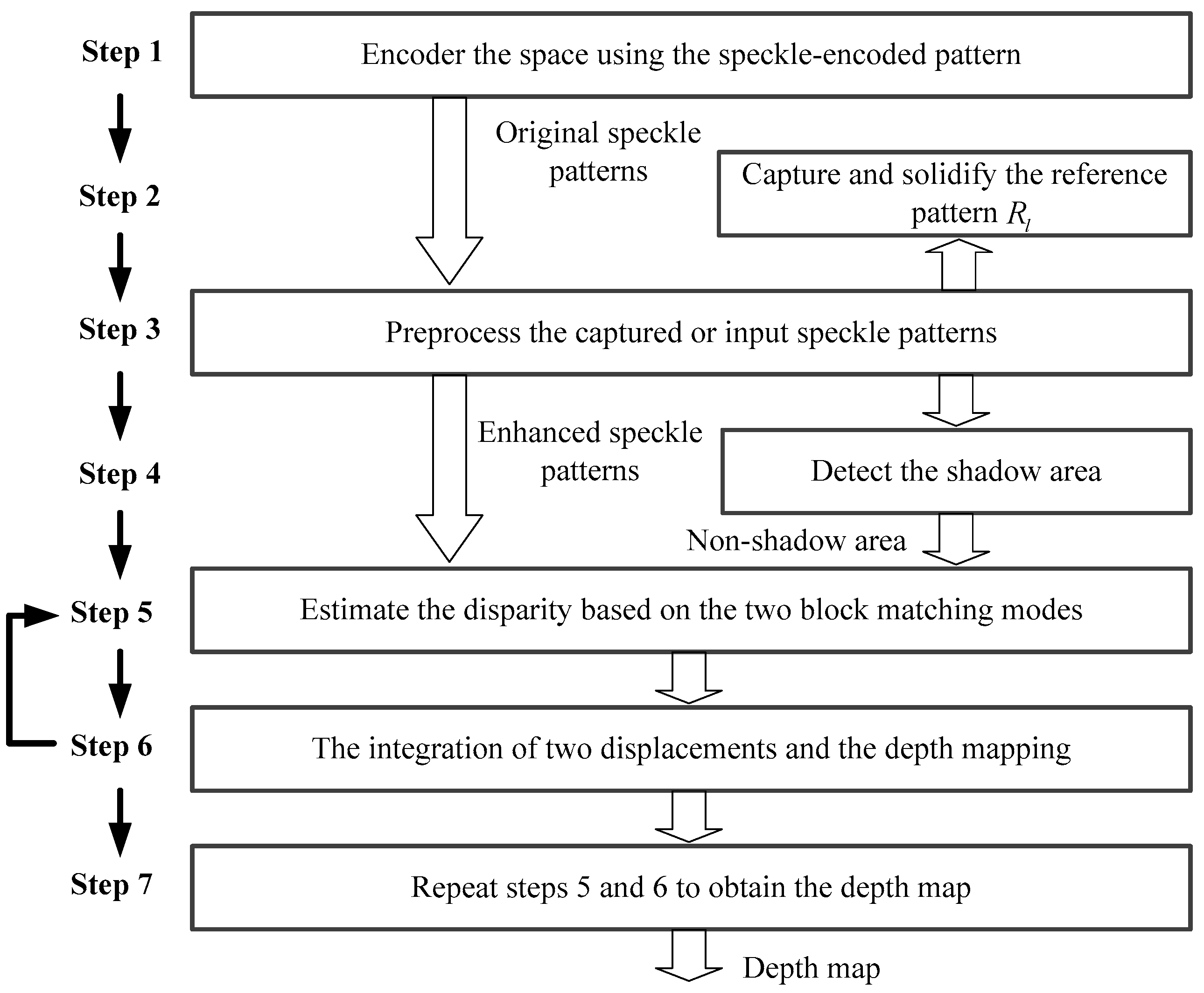

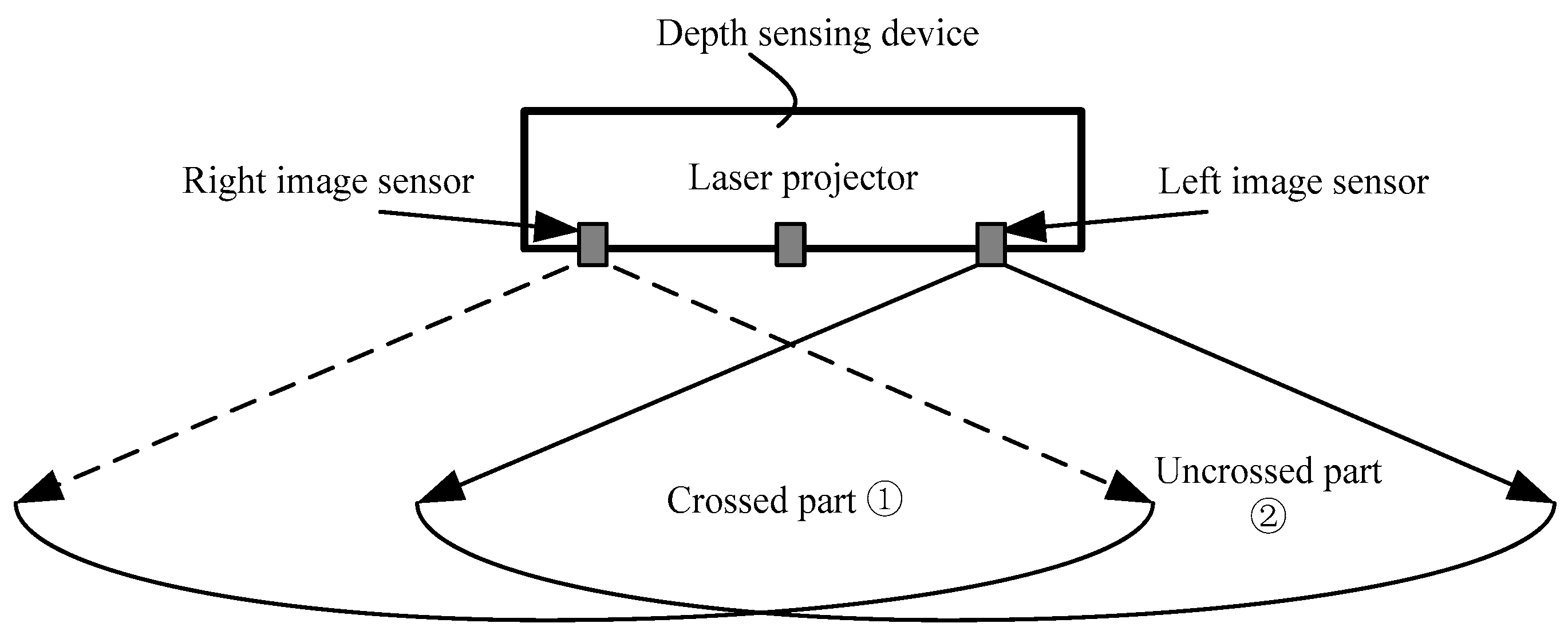
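Digital image correlation finds a small subset of the reference speckle image in the target image by maximizing a correlation score. The sketch below makes three assumptions. First, the images are rectified, so the search runs along a single row. Second, zero-mean normalized cross-correlation (ZNCC) is used as the score. Third, the integer peak is refined with a three-point parabolic fit. ZNCC and parabolic refinement are common choices but are not necessarily the authors' matching criterion. `match_subset`, `make_speckle_row`, and their parameters are hypothetical.

```python
import random


def zncc(a, b):
    """Zero-mean normalized cross-correlation of two equal-length sequences (range -1..1)."""
    n = len(a)
    mean_a = sum(a) / n
    mean_b = sum(b) / n
    da = [x - mean_a for x in a]
    db = [y - mean_b for y in b]
    num = sum(x * y for x, y in zip(da, db))
    den = (sum(x * x for x in da) * sum(y * y for y in db)) ** 0.5
    return num / den if den > 0 else 0.0


def make_speckle_row(n, seed=0):
    """Hypothetical test helper: one row of uniform random speckle intensities."""
    rng = random.Random(seed)
    return [rng.random() for _ in range(n)]


def match_subset(ref_row, tgt_row, x0, half, max_shift):
    """Displacement of the subset centred at x0 (width 2*half+1) from ref_row to tgt_row.

    Searches integer shifts 0..max_shift to the right (requires x0 >= half),
    then refines the best shift with a parabola through its two neighbours.
    Returns (sub-pixel displacement, peak ZNCC score).
    """
    size = 2 * half + 1
    subset = ref_row[x0 - half : x0 - half + size]
    scores = []
    for shift in range(max_shift + 1):
        start = x0 - half + shift
        window = tgt_row[start : start + size]
        if len(window) < size:
            break  # window ran off the end of the row
        scores.append(zncc(subset, window))
    best = max(range(len(scores)), key=scores.__getitem__)
    offset = 0.0
    if 0 < best < len(scores) - 1:
        left, peak, right = scores[best - 1], scores[best], scores[best + 1]
        denom = left - 2.0 * peak + right
        if denom != 0:
            offset = 0.5 * (left - right) / denom  # vertex of the fitted parabola
    return best + offset, scores[best]


if __name__ == "__main__":
    ref = make_speckle_row(200, seed=1)
    tgt = [0.0] * 7 + ref[:-7]  # target row: reference shifted right by 7 pixels
    print(match_subset(ref, tgt, x0=60, half=7, max_shift=20))
```

ZNCC does not change under a gain or offset in intensity. This helps when the two cameras see the projected pattern at different brightness.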

3. The Depth-Sensing Method from Two Infrared Cameras Based on Structured Light

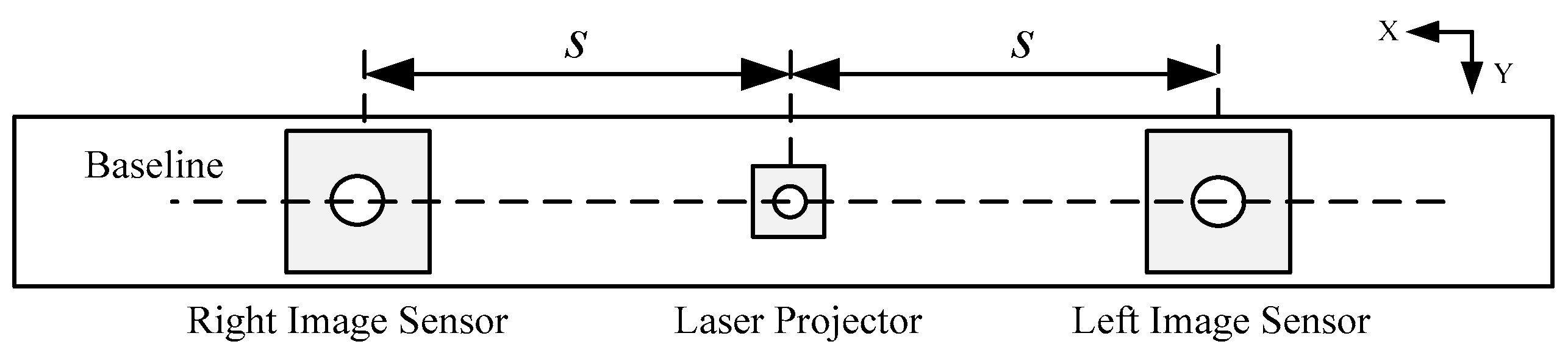

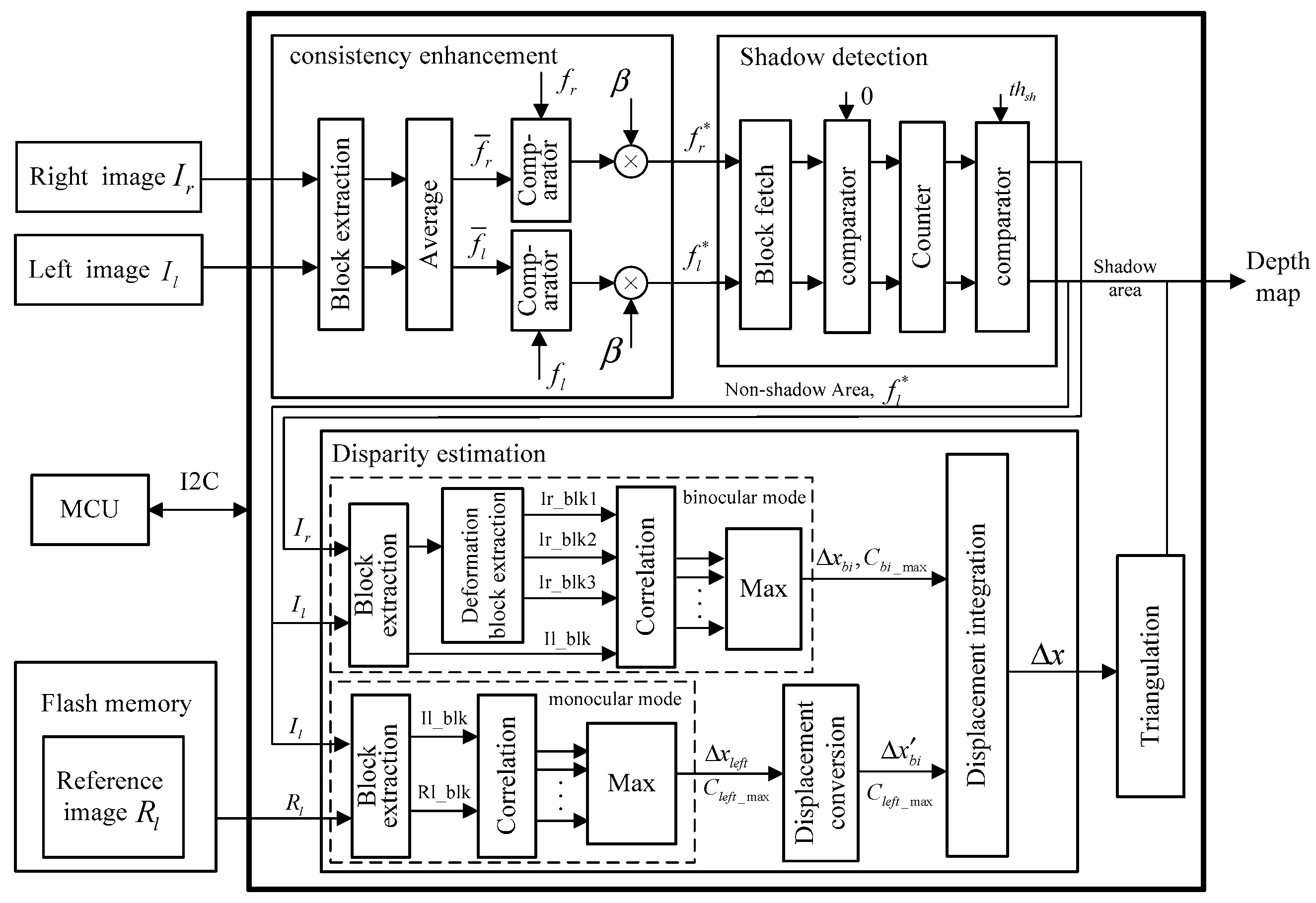

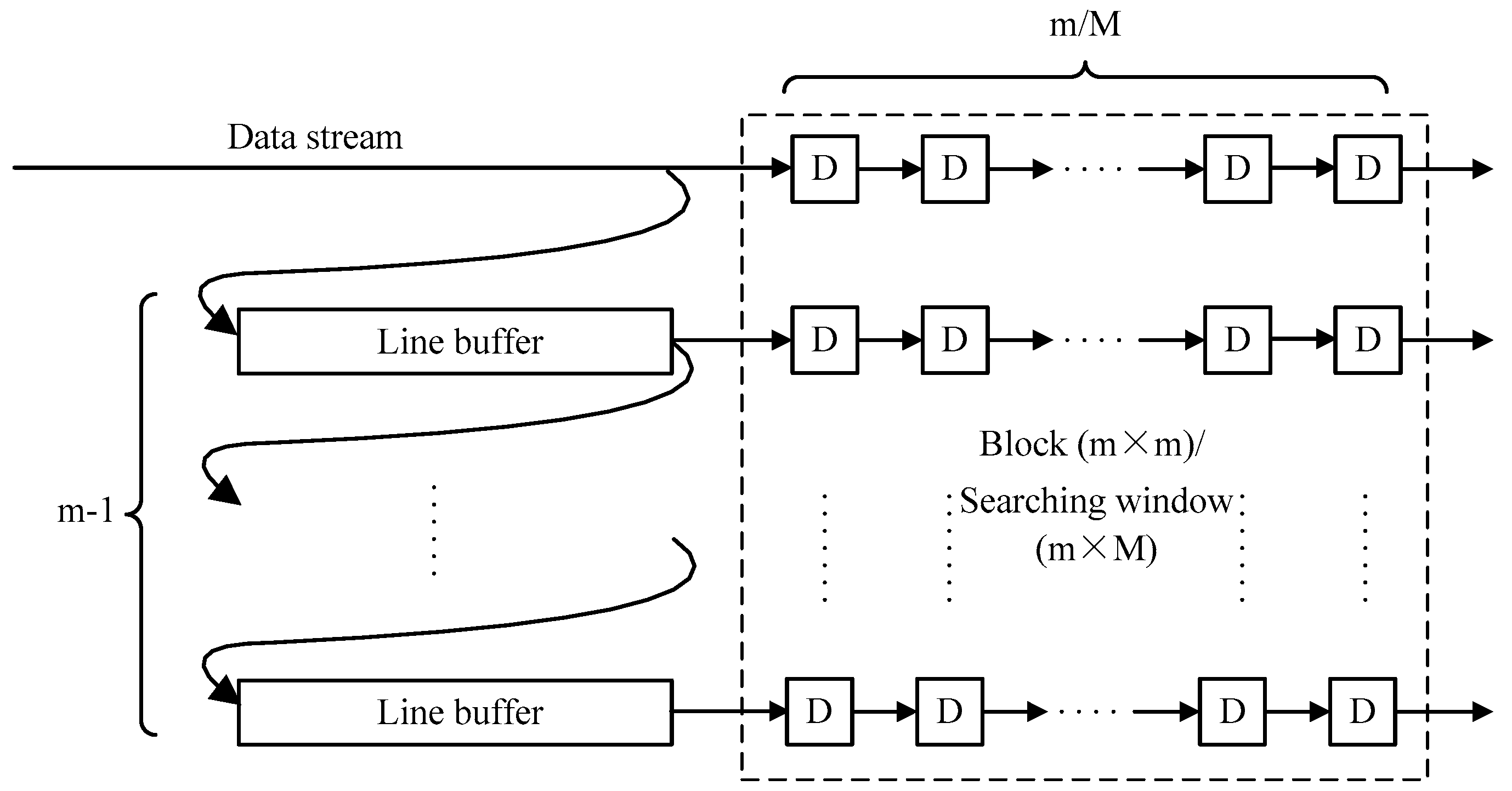

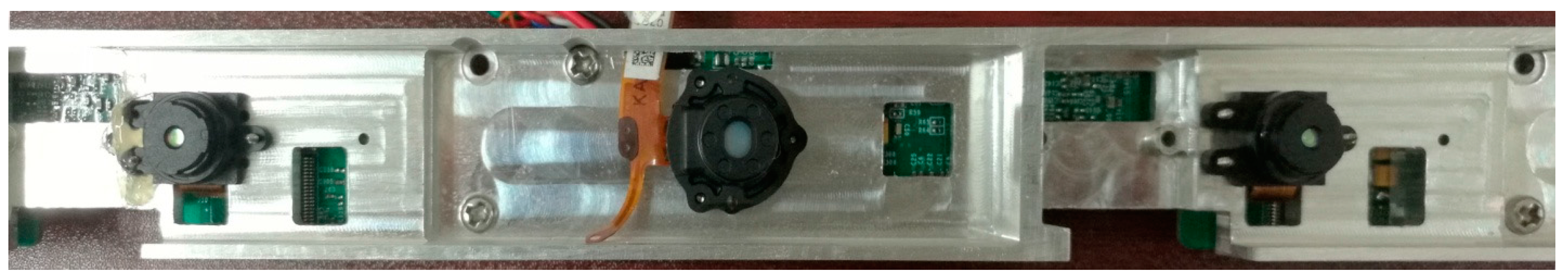
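Reference-image (Kinect-style) structured light often converts a speckle displacement into depth as follows. The displacement Δ of each subset is measured relative to a pre-recorded reference plane at distance Z0. This gives 1/Z = 1/Z0 + Δ/(f·b), where f is the focal length in pixels and b is the projector-camera baseline. The sketch below implements that model and its inverse. It is background under that assumption, not the exact formulation of this section. The sign convention (positive Δ for points nearer than Z0) and the parameter values are hypothetical.

```python
def displacement_from_depth(z_m: float, z0_m: float, fb_px_m: float) -> float:
    """Displacement (pixels) of a point at depth z relative to a reference plane at z0.

    fb_px_m is the product f * b (focal length in pixels times projector-camera
    baseline in metres). Points nearer than z0 get a positive displacement.
    """
    return fb_px_m * (1.0 / z_m - 1.0 / z0_m)


def depth_from_displacement(delta_px: float, z0_m: float, fb_px_m: float) -> float:
    """Invert the reference-plane model: 1/Z = 1/Z0 + delta / (f * b)."""
    inv_z = 1.0 / z0_m + delta_px / fb_px_m
    if inv_z <= 0:
        raise ValueError("displacement corresponds to a point at or beyond infinity")
    return 1.0 / inv_z


if __name__ == "__main__":
    z0, fb = 2.0, 87.5  # hypothetical reference distance (m) and f*b (pixel*m)
    for z in (0.8, 2.0, 4.0):
        delta = displacement_from_depth(z, z0, fb)
        back = depth_from_displacement(delta, z0, fb)
        print(f"Z = {z:.1f} m -> delta = {delta:+.2f} px -> Z = {back:.3f} m")
```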

4. Hardware Architecture and Implementation

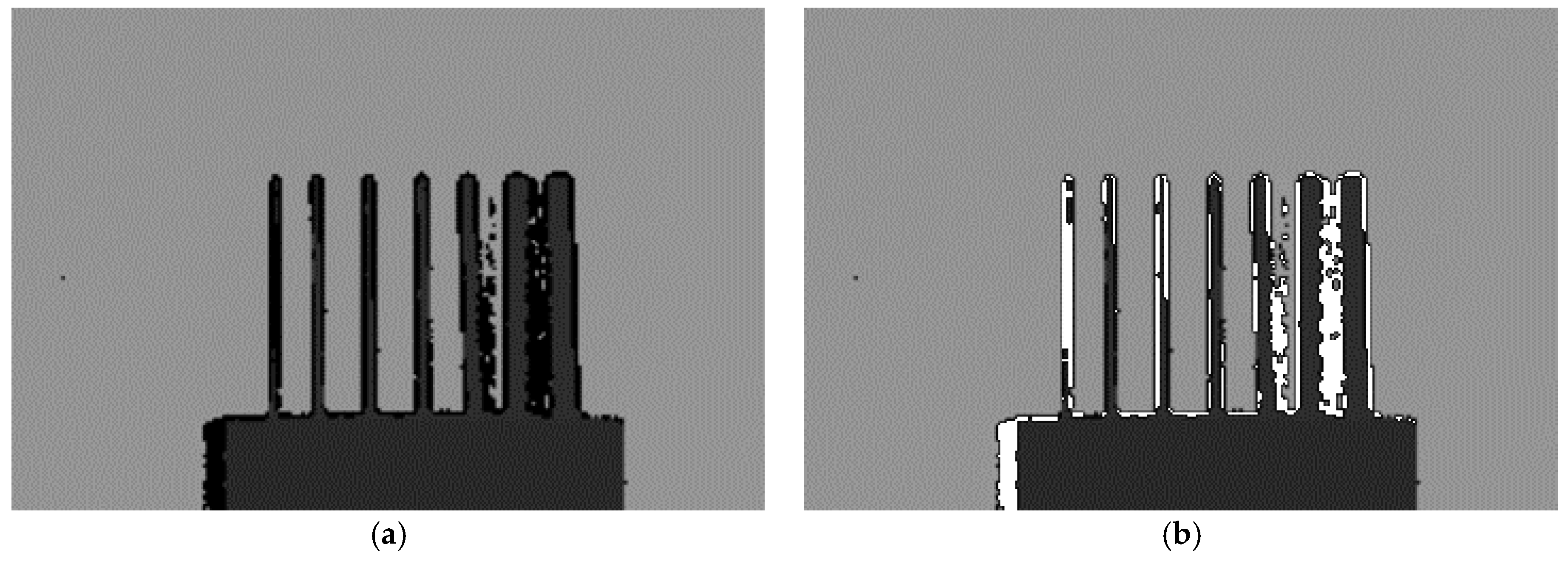

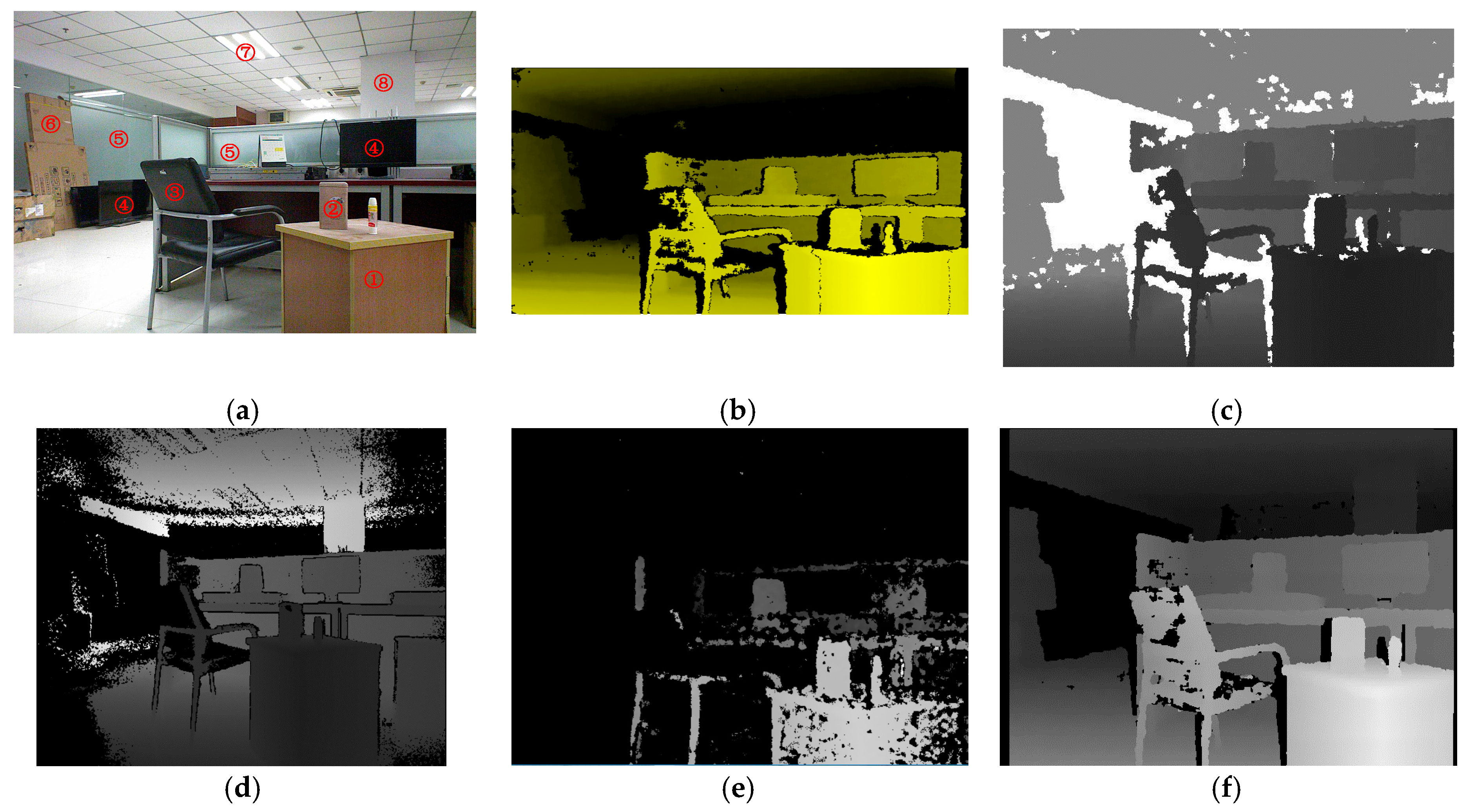

5. Experimental Results and Discussion

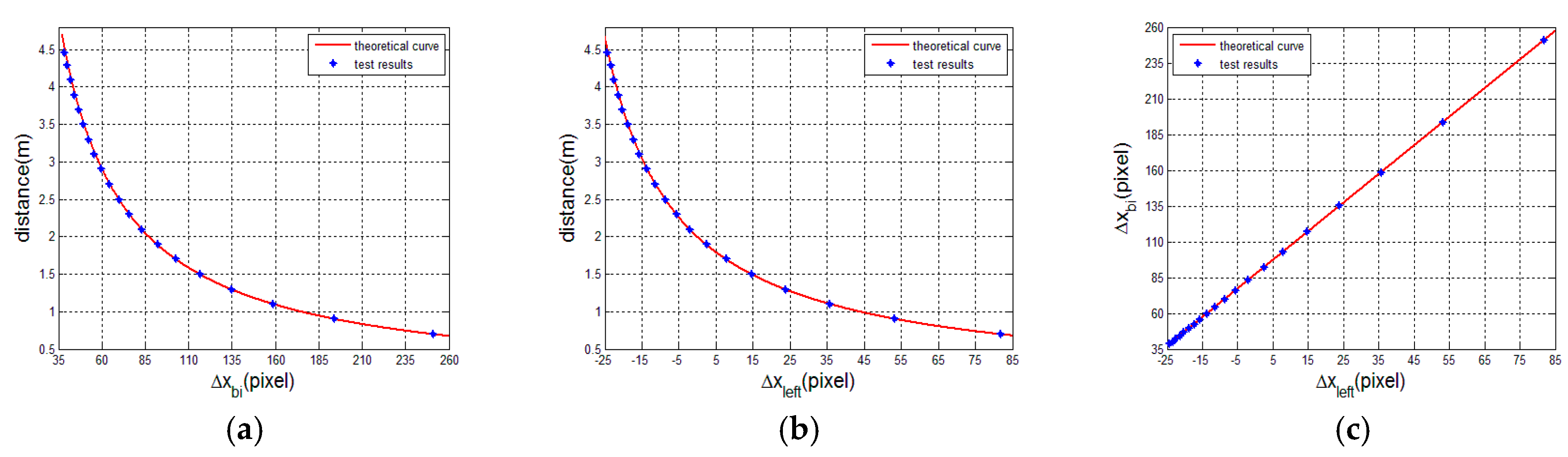

5.1. The Validation of the Transforming Relationship between Two Displacements

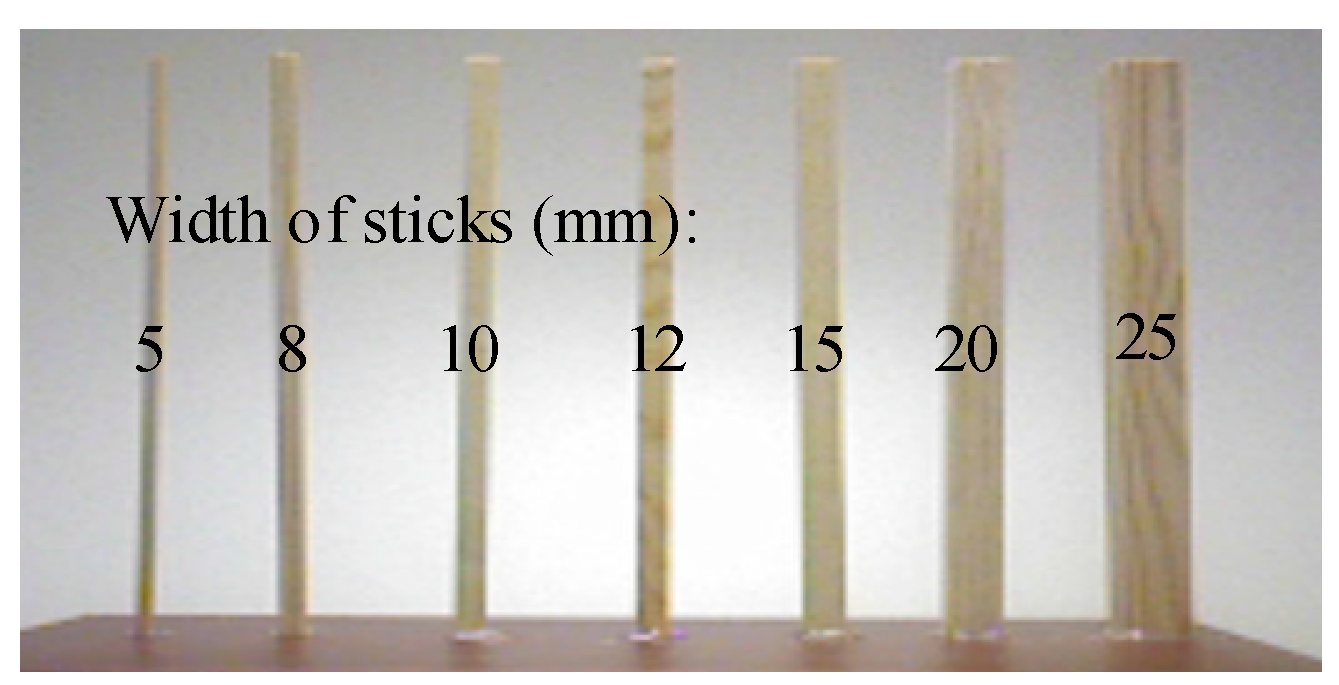

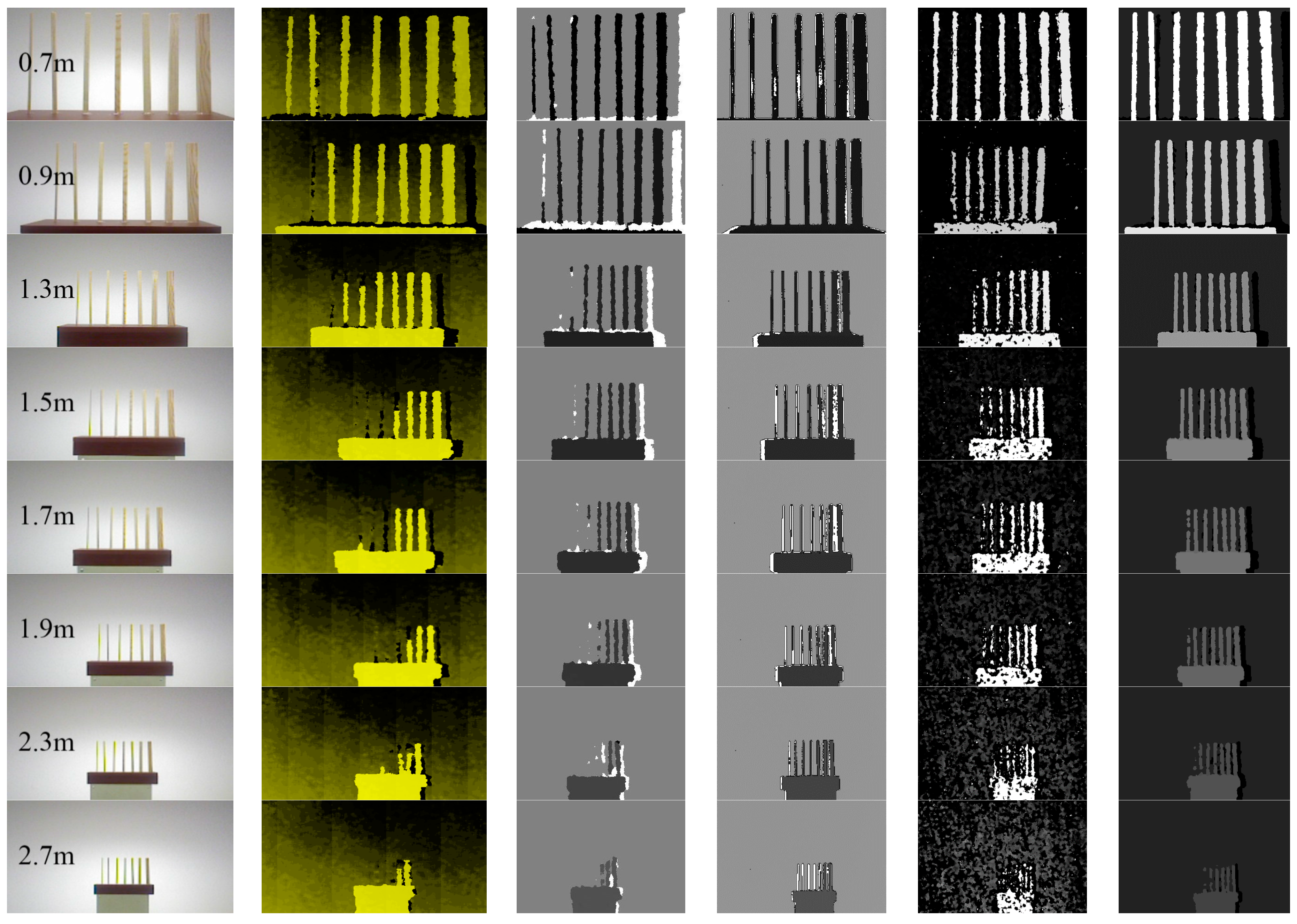

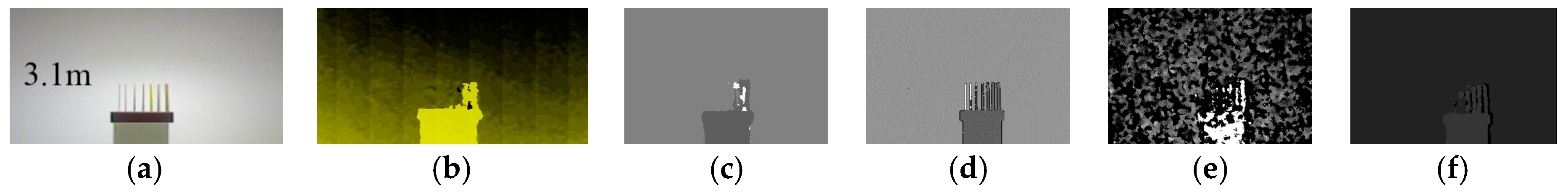

5.2. The Spatial Resolution in X-Y Direction
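A first-order measure of x-y resolution is the lateral width that one pixel covers at a given distance. For an undistorted pinhole camera whose horizontal field of view spans the full image width, that width is 2·Z·tan(FOV/2)/W. The sketch below evaluates this formula for the Kinect and for the proposed method. It uses the horizontal field of view and image width from the device comparison table, taking the "Up to" resolution as given. It ignores the correlation window, which can also limit effective lateral resolution in a matching-based sensor.

```python
import math


def pixel_footprint_mm(distance_m: float, hfov_deg: float, width_px: int) -> float:
    """Lateral width (mm) covered by one pixel at distance_m, pinhole model, no distortion."""
    span_m = 2.0 * distance_m * math.tan(math.radians(hfov_deg) / 2.0)
    return span_m / width_px * 1000.0


if __name__ == "__main__":
    # Horizontal field of view (degrees) and image width (pixels) from the comparison table.
    devices = {"Kinect": (57.0, 640), "Our Method": (58.0, 1280)}
    for name, (hfov, width) in devices.items():
        sizes = [round(pixel_footprint_mm(z, hfov, width), 2) for z in (1.0, 2.0, 4.0)]
        print(name, sizes)
```

At 1 m this gives about 1.70 mm per pixel for the Kinect and about 0.87 mm for the proposed configuration. Both figures grow linearly with distance.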

5.3. The Analysis and Comparison of Results

6. Conclusions

Acknowledgments

Author Contributions

Conflicts of Interest

Appendix A

| Distance (m) | Theory Value | Test Value | Distance (m) | Theory Value | Test Value |
|---|---|---|---|---|---|
| 0.7 | 250.06 | 250.79 ± 0.94 | 2.7 | 64.67 | 64.21 ± 0.51 |
| 0.9 | 193.08 | 193.66 ± 0.75 | 2.9 | 60.08 | 59.60 ± 0.59 |
| 1.1 | 157.96 | 158.58 ± 0.71 | 3.1 | 56.21 | 55.71 ± 0.57 |
| 1.3 | 134.26 | 135.05 ± 0.74 | 3.3 | 52.88 | 52.35 ± 0.63 |
| 1.5 | 116.36 | 116.83 ± 0.65 | 3.5 | 49.91 | 49.33 ± 0.58 |
| 1.7 | 102.55 | 102.73 ± 0.61 | 3.7 | 47.19 | 46.62 ± 0.58 |
| 1.9 | 92.06 | 92.06 ± 0.53 | 3.9 | 44.80 | 44.24 ± 0.61 |
| 2.1 | 83.12 | 83.15 ± 0.56 | 4.1 | 42.61 | 42.02 ± 0.47 |
| 2.3 | 75.76 | 75.70 ± 0.53 | 4.3 | 40.64 | 40.04 ± 0.50 |
| 2.5 | 70.07 | 69.99 ± 0.50 | 4.46 | 39.11 | 38.45 ± 0.58 |
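If the Theory Value column in the table above is a triangulation disparity in pixels, it should follow d ≈ k/Z with k = f·B. This is an assumption, since the table does not state its units. The sketch below fits k by least squares to the tabulated theory values. It then reports the largest fit residual and the largest test-versus-theory deviation. All numbers are copied from the table.

```python
# Columns copied from the table above, rows ordered by distance.
DIST_M = [0.7, 0.9, 1.1, 1.3, 1.5, 1.7, 1.9, 2.1, 2.3, 2.5,
          2.7, 2.9, 3.1, 3.3, 3.5, 3.7, 3.9, 4.1, 4.3, 4.46]
THEORY = [250.06, 193.08, 157.96, 134.26, 116.36, 102.55, 92.06, 83.12, 75.76, 70.07,
          64.67, 60.08, 56.21, 52.88, 49.91, 47.19, 44.80, 42.61, 40.64, 39.11]
TEST_MEAN = [250.79, 193.66, 158.58, 135.05, 116.83, 102.73, 92.06, 83.15, 75.70, 69.99,
             64.21, 59.60, 55.71, 52.35, 49.33, 46.62, 44.24, 42.02, 40.04, 38.45]


def fit_inverse_law(z, d):
    """Least-squares k for the model d = k / z (no intercept)."""
    x = [1.0 / zi for zi in z]
    return sum(xi * di for xi, di in zip(x, d)) / sum(xi * xi for xi in x)


k = fit_inverse_law(DIST_M, THEORY)
fit_residuals = [d - k / z for z, d in zip(DIST_M, THEORY)]
test_deviation = [t - d for t, d in zip(TEST_MEAN, THEORY)]

if __name__ == "__main__":
    print(f"k = {k:.2f}")
    print(f"max |fit residual| = {max(map(abs, fit_residuals)):.2f}")
    print(f"max |test - theory| = {max(map(abs, test_deviation)):.2f}")
```

On these values k comes out at roughly 174.5, and every fit residual stays below 1 in the table's units. The largest test-minus-theory deviation is about 0.8, at 1.3 m.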

| Distance (m) | Theory Value | Test Value | Distance (m) | Theory Value | Test Value |
|---|---|---|---|---|---|
| 0.7 | 81.40 | 81.79 ± 0.67 | 2.7 | −11.30 | −11.37 ± 0.80 |
| 0.9 | 52.90 | 53.20 ± 0.59 | 2.9 | −13.59 | −13.75 ± 0.58 |
| 1.1 | 35.34 | 35.64 ± 0.58 | 3.1 | −15.53 | −15.67 ± 0.43 |
| 1.3 | 23.50 | 23.87 ± 0.58 | 3.3 | −17.20 | −17.36 ± 0.43 |
| 1.5 | 14.55 | 14.75 ± 0.47 | 3.5 | −18.68 | −18.89 ± 0.42 |
| 1.7 | 7.64 | 7.73 ± 0.32 | 3.7 | −20.04 | −20.22 ± 0.37 |
| 1.9 | 2.39 | 2.41 ± 0.28 | 3.9 | −21.24 | −21.37 ± 0.44 |
| 2.1 | −2.08 | −2.09 ± 0.30 | 4.1 | −22.33 | −22.49 ± 0.50 |
| 2.3 | −5.76 | −5.69 ± 0.51 | 4.3 | −23.32 | −23.45 ± 0.45 |
| 2.5 | −8.60 | −8.72 ± 0.68 | 4.46 | −24.08 | −24.28 ± 0.50 |
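Section 5.1 validates a transforming relationship between two displacements. Assume the two theory-value tables report those two displacements at the same distances (they share the same distance column). A straight-line fit then shows how closely one maps onto the other. The sketch below applies ordinary least squares to the two Theory Value columns, copied verbatim. It checks linearity only and does not presume which physical quantity each table holds.

```python
# "Theory Value" columns of the two displacement tables, rows paired by distance
# (0.7, 0.9, ..., 2.5 m, then 2.7, ..., 4.46 m).
FIRST_TABLE_THEORY = [250.06, 193.08, 157.96, 134.26, 116.36, 102.55, 92.06, 83.12, 75.76, 70.07,
                      64.67, 60.08, 56.21, 52.88, 49.91, 47.19, 44.80, 42.61, 40.64, 39.11]
SECOND_TABLE_THEORY = [81.40, 52.90, 35.34, 23.50, 14.55, 7.64, 2.39, -2.08, -5.76, -8.60,
                       -11.30, -13.59, -15.53, -17.20, -18.68, -20.04, -21.24, -22.33, -23.32, -24.08]


def linear_fit(x, y):
    """Ordinary least-squares slope and intercept for y = slope * x + intercept."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


slope, intercept = linear_fit(FIRST_TABLE_THEORY, SECOND_TABLE_THEORY)
max_residual = max(abs(y - (slope * x + intercept))
                   for x, y in zip(FIRST_TABLE_THEORY, SECOND_TABLE_THEORY))

if __name__ == "__main__":
    print(f"slope = {slope:.4f}, intercept = {intercept:.3f}, max |residual| = {max_residual:.3f}")
```

On the tabulated values the slope is very close to 0.5 and the intercept is about -43.6. No residual exceeds a few hundredths, so the two theory columns are linearly related to within rounding.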

| Distance (m) | Binocular Mode | Monocular Mode | Distance (m) | Binocular Mode | Monocular Mode |
|---|---|---|---|---|---|
| 0.7 | 0 | 0.48 | 2.7 | 0 | 0.03 |
| 0.9 | 0 | 0.22 | 2.9 | 0 | 0.03 |
| 1.1 | 0 | 0.18 | 3.1 | 0 | 0.04 |
| 1.3 | 0 | 0.06 | 3.3 | 0 | 0.03 |
| 1.5 | 0 | 0 | 3.5 | 0 | 0.08 |
| 1.7 | 0 | 0 | 3.7 | 0.01 | 0.07 |
| 1.9 | 0 | 0 | 3.9 | 0.01 | 0.06 |
| 2.1 | 0 | 0 | 4.1 | 0.03 | 0.06 |
| 2.3 | 0 | 0 | 4.3 | 0.09 | 0.06 |
| 2.5 | 0 | 0 | 4.46 | 0.20 | 0.09 |

| Item | Kinect | Kinect 2 | RealSense R200 | Our Method |
|---|---|---|---|---|
| Working mode | Structured light | Time of flight (ToF) | Structured light | Structured light |
| Range limit | 0.8~3.5 m | 0.5~4.5 m | 0.4~2.8 m | 0.8~4.5 m |
| Frame rate | 30 fps | 30 fps | Up to 60 fps | Up to 60 fps |
| Image resolution | 640 × 480 | 512 × 424 | Up to 640 × 480 | Up to 1280 × 960 |
| Depth image bit depth | 10 bits | Unpublished | 12 bits | 12 bits |
| Vertical field of view | 43° | 60° | 46° ± 5° | 43° |
| Horizontal field of view | 57° | 70° | 59° ± 5° | 58° |
| Output interface | USB 2.0 | USB 3.0 | USB 3.0 | USB 3.0 |
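The resolution, frame rate, and bit-depth rows of the comparison table can be turned into a raw depth-pixel rate. For a rough interface check, they also give an uncompressed bit rate. The sketch below assumes the listed "Up to" maxima apply at the same time. It ignores packing, protocol overhead, and any other streams, so it is only arithmetic. For reference, USB 2.0 high speed signals at 480 Mbit/s and USB 3.0 SuperSpeed at 5 Gbit/s.

```python
# (width, height, fps, bits per depth sample) from the comparison table; None = unpublished.
DEVICES = {
    "Kinect": (640, 480, 30, 10),
    "Kinect 2": (512, 424, 30, None),
    "RealSense R200": (640, 480, 60, 12),
    "Our Method": (1280, 960, 60, 12),
}


def depth_pixel_rate(width: int, height: int, fps: int) -> float:
    """Depth samples per second, in megapixels per second."""
    return width * height * fps / 1e6


def raw_bit_rate(width: int, height: int, fps: int, bits: int) -> float:
    """Uncompressed depth stream rate in Mbit/s (no packing or protocol overhead)."""
    return depth_pixel_rate(width, height, fps) * bits


if __name__ == "__main__":
    for name, (w, h, fps, bits) in DEVICES.items():
        rate = depth_pixel_rate(w, h, fps)
        mbit = "n/a" if bits is None else f"{raw_bit_rate(w, h, fps, bits):.1f} Mbit/s"
        print(f"{name:15s} {rate:6.2f} Mpixel/s  {mbit}")
```

At the upper bounds, the proposed configuration produces about 73.7 Mpixel/s, eight times the Kinect's 9.2 Mpixel/s. That amounts to roughly 885 Mbit/s of raw 12-bit depth, which exceeds the USB 2.0 signalling rate. This is consistent with the USB 3.0 interface listed in the table.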

© 2017 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Yao, H.; Ge, C.; Xue, J.; Zheng, N. A High Spatial Resolution Depth Sensing Method Based on Binocular Structured Light. Sensors 2017, 17, 805. https://doi.org/10.3390/s17040805

Chicago/Turabian Style: Yao, Huimin, Chenyang Ge, Jianru Xue, and Nanning Zheng. 2017. "A High Spatial Resolution Depth Sensing Method Based on Binocular Structured Light." Sensors 17, no. 4: 805. https://doi.org/10.3390/s17040805