1. Introduction

With the rapid development of the robotic manufacturing industry and the continuous decrease in robot production costs, industrial robots are being used extensively as economical and flexible orienting devices in modern industries [1,2]. According to the statistics, about 80% of industrial robots are used in the automobile manufacturing industry, where they perform all kinds of jobs, including spot welding, picking and placing, drilling and cutting. As robot performance has improved, the application level of industrial robots has risen steadily, and industrial robots are now expected to undertake even more advanced tasks such as precision machining, high-accuracy autonomous inspection and intelligent control [3,4]. In addition, Industrie 4.0 [5] and the European Commission Horizon 2020 Framework Programme [6] have clearly pointed to intelligent applications as the direction for industrial robots.

One of the most typical intelligent robot applications is robotic intelligent grasping in automotive manufacturing. During the car assembly process, different components and parts are installed in an orderly way at different workstations of the production line, and eventually assembled into a complete car. In conventional automobile workshops, a couple of employees are assigned to each workstation, and they are responsible for taking parts out of the work bins and placing them onto a high-accuracy centering device. The robots then grasp the parts from the centering device and install them onto the car. Industrial robots are quite competent at these jobs due to their high repeatability. However, as labor shortages, high labor costs, harsh working environments and inconsistent manual operation become increasingly prominent, manufacturing enterprises have been eagerly awaiting the time when robots can grasp parts from the work bins directly, without manual operation, so robotic intelligent grasping has inevitably become a trend in modern automobile manufacturing.

In automobile manufacturing, car body parts are placed in different ways, for example, roofs are often stacked in the work bins, doors are usually placed on shelves, side-walls are hung on an electric monorail system (EMS), and some smaller parts are placed directly on the conveyor belt. In these circumstances, the position and orientation of these parts are uncertain, and may have a ±50 mm position deviation or a ±5° angle offset in some extreme cases. Generally, the accuracy requirement for operations such as grasping will be of the order of 0.5 mm. In order to grasp these imprecisely-placed parts, the robot should adjust its trajectory according to the pose of the part and align its gripper to it. In other words, the robot should be able to recognize the pose of a part before grasping it.

Some real-time position and orientation measurement devices can be used for robot pose tracking and guiding, such as the indoor global positioning system (iGPS) [7,8] and the workspace measuring and positioning system (wMPS) [9]. However, such equipment can measure the position of a spatial point only when optical information from two or more measuring stations is acquired, and this requirement cannot be satisfied in most cases because of occlusion caused by many factors, including the complex structure of the moving objects, obstacles and physical obstructions in the working volume, and the limited field of view of the optical devices.

In recent years, vision measurement technology, which features non-contact, high-accuracy, real-time and on-line measurement, has developed quickly and become widely used [10]. The robotic visual inspection system, which combines vision measurement technology and robot technology, has been applied extensively in the automotive and aircraft manufacturing industries [11,12,13]. Determining the part pose with vision measurement technology before the robot grasps the part may therefore be a feasible means of realizing intelligent robot grasping. Several robotic vision solutions, such as monocular vision [14], binocular vision [15], the structured light method [16] and the multiple-sensor method [17], have proven their suitability for robotic visual inspection tasks, but in general three-dimensional (3D) information about parts cannot be obtained with a single camera [18]. The structured light method needs laser assistance, and its measuring range is limited by the laser triangulation measurement principle [19]. Binocular vision systems have a complex configuration that requires calibration of the relative transform relationship (also called extrinsic parameter calibration) and time-consuming algorithms for feature extraction and stereo matching, and they are also not suitable for parts with complex shapes and large surface curvature changes [20,21,22]. As most automobile parts are produced by the punch forming method, featured points on the parts have great consistency and small dimensional deviations. A low-cost and highly adaptable 6D pose (three translational and three rotational degrees of freedom) estimation method can therefore be developed by combining the geometric information of the parts with visual measurement technology [23,24].

In this paper, we introduce a monocular-based 6D pose estimation method assisted by part geometry information, which aims to enable industrial robots to grasp imprecisely placed automobile parts, especially large ones. An industrial camera is mounted on the end of the robot together with the gripper, and the robot uses the camera to measure several featured points on the part from different poses. Because the robot has high repeatability but low absolute accuracy, the measuring pose of the camera at each robot position is consistent and can be calibrated with high-accuracy measuring equipment such as a laser tracker. The positions of the featured points on the part can also be calibrated beforehand. A nonlinear optimization model for the camera object-space collinearity error at different poses is established, the initial iteration value is estimated based on differential transformation, and the 6-DOF pose of the part is thus determined with at least four featured points. In addition, based on uncertainty analysis theory, the measuring poses of the camera are optimized to improve the estimation accuracy. Finally, based on the principle of the robotic intelligent grasping system developed in this paper, the robot can adjust its learned path and grasp the part.

The rest of the paper is organized as follows: Section 2 introduces the principle of the robotic intelligent grasping system and the system calibration method. The mathematical model of our monocular 6-DOF pose estimation technology is presented in Section 3. The uncertainty analysis and camera pose optimization method are given in Section 4. Experimental tests and results are given in Section 5. The paper finishes in Section 6 with a short conclusion and summary.

2. Robotic Intelligent Grasping Systems

2.1. Principle of the Robotic Intelligent Grasping System

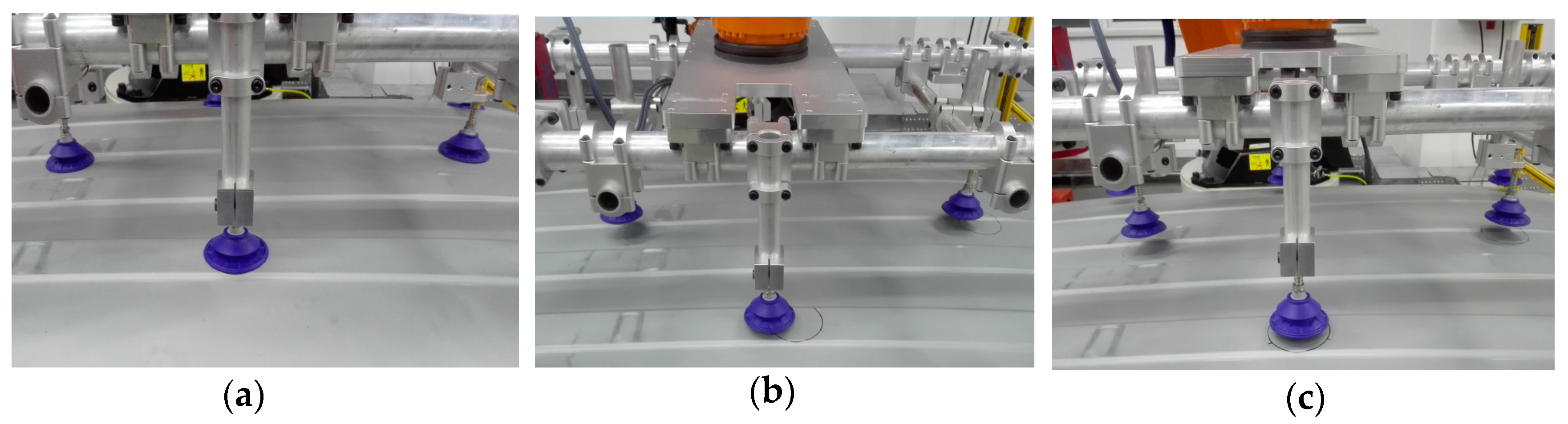

In a robotic loading workstation, the robot grasps components or parts from work bins or shelves and then assembles them onto the car body. Components and parts vary in size and shape: small-sized parts such as a top rail or a wheel fender may be smaller than 0.5 m × 0.5 m, while large-sized parts such as a roof or a side-wall may be larger than 2 m × 1 m. As there are no precise positioning devices for work bins or shelves, the poses of the parts are usually uncertain, and the position deviation between different parts can be as large as ±50 mm. In order to determine the pose of each part and guide the robot to grasp it precisely, a camera is mounted on the robot end-flange to measure the pose of the part, and the robot then adjusts the pose of the gripper according to the measured part pose.

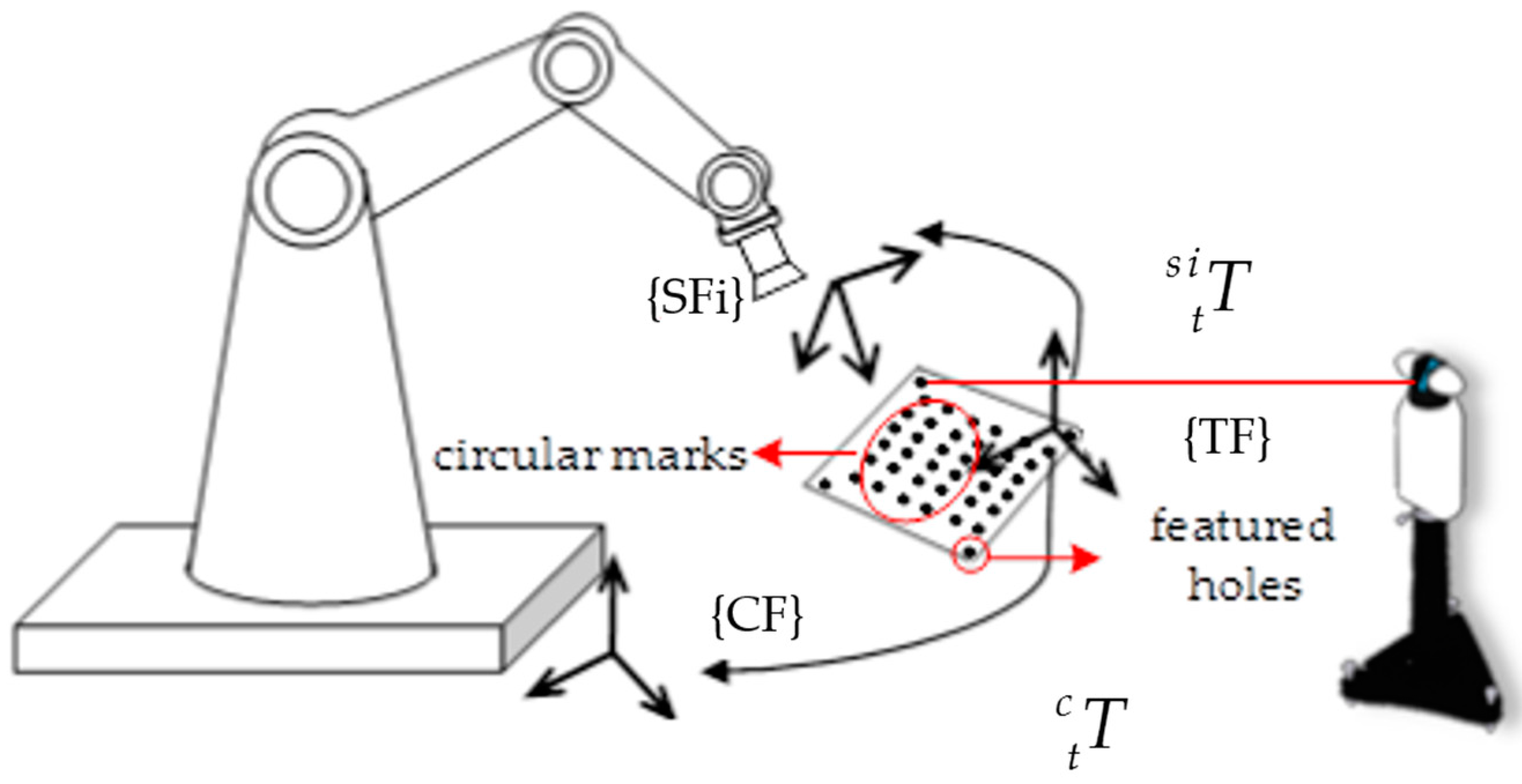

As shown in Figure 1, before the robot moves to grasp a part, it positions the camera to measure several featured points on the part and then determines the part pose based on the measurement model. To enlarge the camera’s field of view, a short focal length lens is mounted on it. For a small-size part, the featured points can be included in one field of view of the camera, and the robot only needs to move to one position; for a large-size part, the featured points are more widely distributed, and the robot needs to move to several positions. As the former is a special case of the latter, the large-size part is discussed in this paper. The coordinate systems of the robotic intelligent grasping system consist of the cell frame (CF), the robot base frame (BF), the part frame (WF) and the camera pose frame (SF). As the camera is moved to several poses to measure the featured points on a large-size part, a frame SFi is defined at each measuring pose.

The first camera frame (SF1) is taken as the reference frame in this method; that is, the pose of the part is determined relative to SF1. The part frame (WF) is defined based on the CAD model or established with several featured points on the part. The principle of the robotic intelligent grasping system is described in detail as follows:

- (1) A robot measuring path is taught, along which the robot carries the camera to measure the featured points on the large-size part, so that the part pose relative to SF1 can be determined.

- (2) For an initial part, an initial grasping path is taught, along which the robot can grasp the initial part precisely, and the pose of the initial part relative to SF1 (${}^{SF1}T_{WF_0}$) is measured and recorded. As shown in Figure 1, the part pose relative to SF1 can be given as follows:

$$ {}^{SF1}T_{WF_0} = {}^{SF1}T_{BF}\;{}^{BF}T_{CF}\;{}^{CF}T_{WF_0} \qquad (1) $$

where ${}^{SF1}T_{BF}$ is the transform relationship between SF1 and BF; ${}^{BF}T_{CF}$ is the transformation between BF and CF; and ${}^{CF}T_{WF_0}$ is the transformation between CF and the initial part frame (WF). Then, for a practical part whose pose deviates from that of the initial part, the robot carries the camera along the measuring trajectory and determines the pose of the part relative to SF1 (${}^{SF1}T_{WF_p}$), which can be given as follows:

$$ {}^{SF1}T_{WF_p} = {}^{SF1}T_{BF}\;{}^{BF}T_{CF}\;{}^{CF}T_{WF_p} \qquad (2) $$

As the industrial robot is fixed in the station, the transform relationship between BF and CF (${}^{BF}T_{CF}$) is invariable. In addition, as the camera system adopted in this method has a wide field of view, it can capture the featured points on the deviated practical parts at the same robot pose. Because of the high repeatability of the industrial robot, the pose of SF1 relative to BF (${}^{SF1}T_{BF}$) can be regarded as fixed.

- (3) Finally, in order to grasp a practical part, the robot pose should be adjusted so that the gripper pose aligns with the part pose; that is, the pose of the practical part relative to the gripper should be the same as that of the initial part. As the camera is mounted on the robot end-flange, the relative position between the camera and the gripper is fixed, so the robot pose adjustment can also be regarded as aligning the camera pose with the part pose. After the robot pose adjustment, the pose of the practical part relative to SF1 is the same as that of the initial part, that is:

$$ {}^{SF1'}T_{BF}\;{}^{BF}T_{CF}\;{}^{CF}T_{WF_p} = {}^{SF1}T_{WF_0} \qquad (3) $$

where ${}^{SF1'}T_{BF}$ is the transform relationship between the adjusted SF1 and BF, ${}^{CF}T_{WF_p}$ is the transformation between CF and the practical part frame, and ${}^{SF1}T_{WF_0}$ is the recorded pose of the initial part relative to SF1.

In the robot control system, the pose adjustment can be realized by base frame offset, changing the transform relationship between the BF and the CF with an offset transformation matrix:

where $T_{\mathrm{offset}}$ is the base frame offset transformation matrix.

Combining Equations (2)–(4), we can obtain the base frame offset transformation matrix as:

As ${}^{SF1}T_{WF_0}$ and ${}^{SF1}T_{WF_p}$ are the determined poses of the initial part and the practical part relative to SF1, we can calculate the base frame offset transformation matrix $T_{\mathrm{offset}}$, with which the robot can adjust its pose based on the coordinate transformation principle and robot inverse kinematics. The trajectory adjustment is performed in the robot controller. The base frame offset also acts on the taught grasping path, so the robot can move to grasp the practical part along the adjusted grasping path, thus achieving intelligent grasping.
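The pose correction described above can be illustrated numerically. The sketch below is not the controller's base frame offset itself: it assumes 4 × 4 homogeneous matrices in which a transform named `T_a_b` maps coordinates from frame b to frame a, and it computes the rigid motion `D` of the camera frame (expressed in the taught SF1) that makes the practical part appear at the recorded initial pose. The helper names and all numeric values are hypothetical, and how `D` is entered as a base frame offset depends on the convention of the robot controller.

```python
import numpy as np

def pose(rz_deg, t):
    """4x4 homogeneous transform: rotation about z by rz_deg, translation t (mm)."""
    a = np.deg2rad(rz_deg)
    T = np.eye(4)
    T[:3, :3] = [[np.cos(a), -np.sin(a), 0.0],
                 [np.sin(a),  np.cos(a), 0.0],
                 [0.0,        0.0,       1.0]]
    T[:3, 3] = t
    return T

def camera_correction(T_sf1_wf0, T_sf1_wfp):
    """Motion D of the camera frame, expressed in the taught SF1, such that
    inv(D) @ T_sf1_wfp equals the recorded initial pose T_sf1_wf0."""
    return T_sf1_wfp @ np.linalg.inv(T_sf1_wf0)

def express_in_base(D, T_bf_sf1):
    """The same rigid motion expressed in the robot base frame BF (assumed convention)."""
    return T_bf_sf1 @ D @ np.linalg.inv(T_bf_sf1)

# Hypothetical numbers, for illustration only.
T_sf1_wf0 = pose(0.0, [0.0, 0.0, 600.0])        # recorded pose of the initial part
T_sf1_wfp = pose(4.0, [30.0, -20.0, 610.0])     # measured pose of a deviated part
T_bf_sf1 = pose(90.0, [1500.0, 200.0, 800.0])   # calibrated pose of SF1 in BF

D = camera_correction(T_sf1_wf0, T_sf1_wfp)
T_seen_after = np.linalg.inv(D) @ T_sf1_wfp     # part pose seen from the moved camera
T_bf_sf1_new = express_in_base(D, T_bf_sf1) @ T_bf_sf1  # moved camera pose in BF
```

After the correction, the moved camera sees the practical part exactly where the taught camera saw the initial part, which is the alignment condition stated in step (3).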

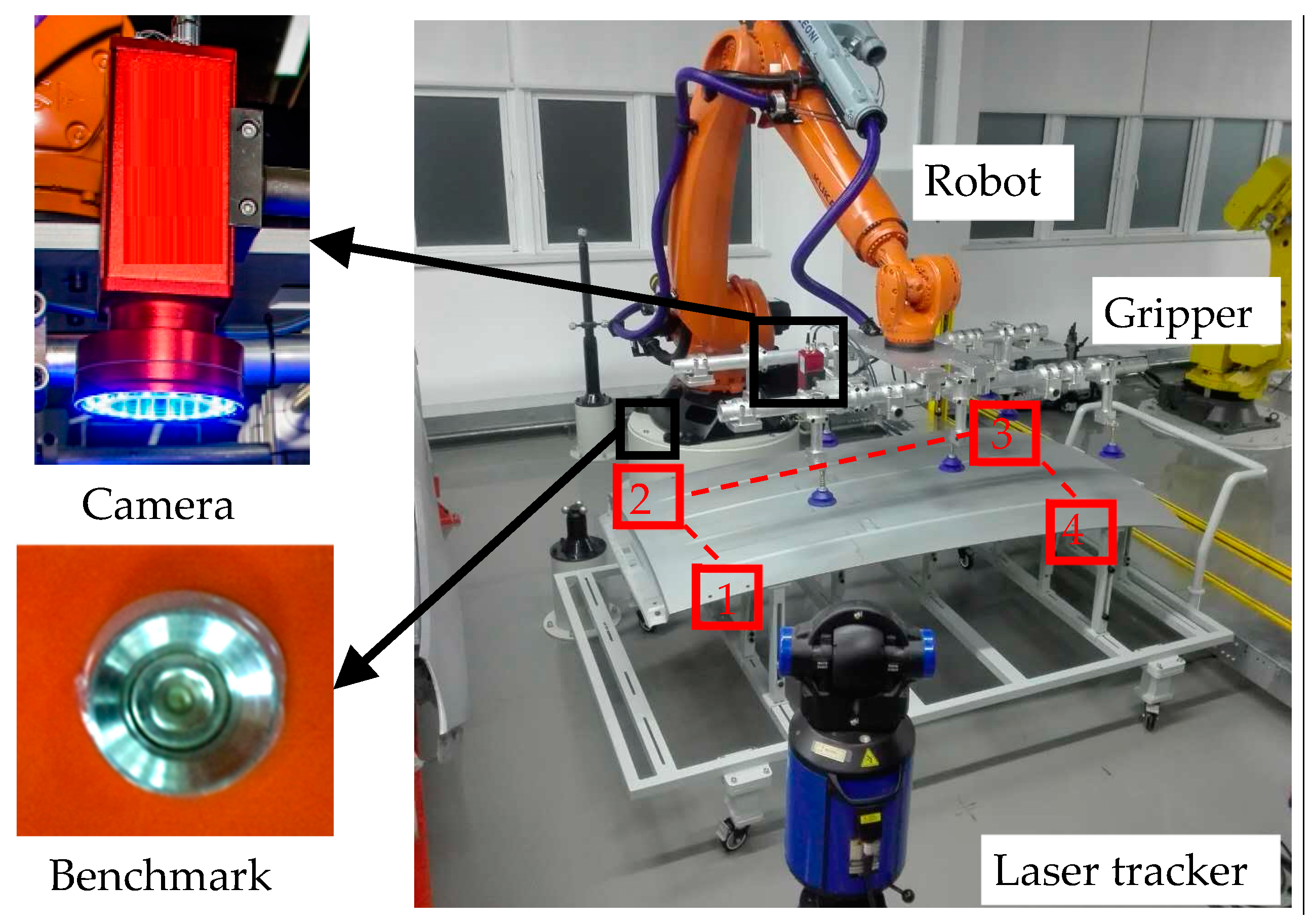

2.2. System Calibration Method

The calibration of the robotic intelligent grasping system determines the transform relationships between the different frames, including the cell frame (CF), the robot base frame (BF), the part frame (WF) and the i-th camera pose frame (SFi). In this paper, a laser tracker is used as the measuring equipment, and CF is set up by measuring several benchmarks on the workstation. Although CF is not strictly necessary, it is usually defined in a practical workshop to relate different robots, so we keep it in this paper.

As BF is defined based on the robot’s mechanical structure, it cannot be measured directly. In this paper, a sophisticated tool is mounted on the robot flange to hold a 1.5 inch spherically mounted reflector (SMR) of a laser tracker, and the center of the SMR is defined as the tool center point (TCP). The robot is controlled to move to several different positions with different postures, and the positions of the SMR are measured by the laser tracker. Then the transformation between the BF and the laser tracker is calculated based on the measured SMR positions and robot kinematics.

The part calibration process includes measuring the coordinates of the featured points in WF and calibrating the transform relationship between WF and CF. An initial pose is defined for the initial part; the laser tracker measures several featured points on the part, and WF is established based on these points. As CF has been measured and established beforehand, the transform relationship between WF and CF can be calculated based on the principle of rigid body transformation.

After the robot’s measuring trajectory has been taught, the poses of the camera measuring pose frames (SFi) need to be calibrated. The coordinate system of a camera is defined based on its optical structure, so it cannot be measured with the laser tracker directly. In this paper, the camera pose frames are calibrated based on homography and the principle of rigid body transformation, as illustrated in Figure 2. A calibration target with circular marks and several featured holes is adopted; the target frame (TF) is defined by the featured holes, and the circular marks have been calibrated in the target frame. When the camera stops at a measuring pose, it captures an image of the calibration target, and the transform relationship between SFi and the target frame is calculated from the homography, while the target frame is measured by the laser tracker. The transform relationship between SFi and CF can then be calculated as follows:
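The chain of measurements just described can be written compactly. The notation is an assumption made for illustration: ${}^{A}T_{B}$ denotes the 4 × 4 homogeneous transform that maps coordinates in frame B to frame A, and LT denotes the laser tracker frame.

```latex
{}^{SF_i}T_{CF} \;=\; {}^{SF_i}T_{TF}\;\left({}^{LT}T_{TF}\right)^{-1}\;{}^{LT}T_{CF}
```

Here ${}^{SF_i}T_{TF}$ comes from the homography of the target image, while ${}^{LT}T_{TF}$ and ${}^{LT}T_{CF}$ come from the laser tracker measurements of the featured holes on the target and of the workstation benchmarks, respectively.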

3. The Mathematical Models for Monocular 6-DOF Pose Estimation Technology

The monocular-based 6-DOF pose estimation technology is mainly based on the pinhole camera model and the principle of rigid body transformation. Unlike similar single-camera pose estimation techniques, the featured points in this method are few in number and widely distributed; the camera is mounted on the robot end-flange, and the robot has to move to several different poses to capture pictures of these featured points. The principle of 6-DOF pose estimation based on a single camera and a few widely distributed featured points is discussed in detail here.

3.1. Pinhole Camera Model

As shown in Figure 3, the ideal pinhole camera model is used to represent the relationship between the object space and the image space. The relationship between the coordinate of the object point $P_w$ and the image point $P_c$ is given as follows:

$$ z\,P_c = A\,T\,P_w \qquad (7) $$

where z is the third row of T × Pw; $P_w = [x_w\ y_w\ z_w\ 1]^T$ is the coordinate of a spatial point in the object space and $P_c = [u\ v\ 1]^T$ is its corresponding coordinate in image space. $T = [R\ \ t]$ is the camera extrinsic parameter matrix, which represents the relationship between the camera coordinate system and the object coordinate system, and

$$ A = \begin{bmatrix} a_x & \gamma & u_0 \\ 0 & a_y & v_0 \\ 0 & 0 & 1 \end{bmatrix} $$

is the camera intrinsic matrix, which represents the relationship between the camera coordinate system and its image space. $a_x$ and $a_y$ are the effective focal lengths of the camera, in pixels, along the x and y directions, $(u_0, v_0)$ is the coordinate of the principal point, and $\gamma$ is the skew factor, which is usually set to zero.

The ideal pinhole camera model does not consider lens distortion, but for a real imaging system, lens distortion in the radial and tangential directions is unavoidable due to mechanical imperfections and lens misalignment. Considering the impact of lens distortion, the relationship between the ideal image point $[u\ v]^T$ and the distorted point $[u'\ v']^T$ is:

where $x_p = (u' - u_0)/a_x$, $y_p = (v' - v_0)/a_y$, $x_d = (u - u_0)/a_x$, $y_d = (v - v_0)/a_y$, and $[k_1\ k_2\ p_1\ p_2]$ are the lens distortion coefficients. The coordinates of the image point $P_c$ in the remainder of this paper are the pixel coordinates after distortion correction.
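As a sketch of how the ideal projection and the lens distortion fit together, the following Python function projects an object point to pixel coordinates. It assumes the widely used Brown-Conrady form with two radial (k1, k2) and two tangential (p1, p2) coefficients, applied to the ideal normalized coordinates (xd, yd) to obtain the distorted ones (xp, yp). This form, the function name and the numeric values are assumptions for illustration and are not taken from the calibration in this paper.

```python
import numpy as np

def project(A, R, t, Pw, dist=(0.0, 0.0, 0.0, 0.0)):
    """Project a 3D object point to (possibly distorted) pixel coordinates.

    A: 3x3 intrinsic matrix; R, t: extrinsics (object frame -> camera frame);
    dist: (k1, k2, p1, p2), assumed Brown-Conrady coefficients.
    """
    k1, k2, p1, p2 = dist
    Xc = R @ np.asarray(Pw, float) + t
    xd, yd = Xc[0] / Xc[2], Xc[1] / Xc[2]        # ideal normalized coordinates
    r2 = xd * xd + yd * yd
    radial = 1.0 + k1 * r2 + k2 * r2 * r2
    xp = xd * radial + 2.0 * p1 * xd * yd + p2 * (r2 + 2.0 * xd * xd)
    yp = yd * radial + p1 * (r2 + 2.0 * yd * yd) + 2.0 * p2 * xd * yd
    u = A[0, 0] * xp + A[0, 1] * yp + A[0, 2]    # back to pixels
    v = A[1, 1] * yp + A[1, 2]
    return np.array([u, v])

# Hypothetical camera: focal length 2000 px, principal point at the image center.
A = np.array([[2000.0, 0.0, 1228.0],
              [0.0, 2000.0, 1029.0],
              [0.0, 0.0, 1.0]])
R, t = np.eye(3), np.zeros(3)
ideal = project(A, R, t, [100.0, 50.0, 1000.0])
distorted = project(A, R, t, [100.0, 50.0, 1000.0], dist=(-0.1, 0.0, 0.0, 0.0))
```

With a negative k1 (barrel distortion) the distorted point moves slightly toward the principal point, which is why image coordinates are corrected before they are used in the pose estimation.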

3.2. Camera Multi-Pose Measurement Model

Determining the rigid transformation that relates images to known geometry, i.e., the pose estimation problem, is one of the central problems in photogrammetry, robot vision, computer graphics and computer vision. However, the pose estimation problem discussed in this paper differs from the common ones: the camera needs to take pictures of widely distributed featured points at multiple poses instead of only one pose. The most significant advantage of the method presented in this paper is that it can be adopted for pose estimation of large-size parts, much larger than the camera’s field of view. The measurement model is presented in detail as follows.

In order to relate the measured results of different featured points, the coordinate transform relationship among different camera measuring poses should be calibrated in advance. Taking the first camera pose (SF1) as reference, the pinhole model of the camera at the i-th measuring pose is:

$$ z_i\,P_{ci} = A\,[\,I_3\ \ 0\,]\;{}^{SF_i}T_{SF1}\;{}^{SF1}T_{WF}\;P_{wi} $$

where $P_{ci} = [u_i\ v_i\ 1]^T$ is the pixel image coordinate of a featured point and $P_{wi} = [x_{wi}\ y_{wi}\ z_{wi}\ 1]^T$ is its corresponding coordinate in the part frame, which is calibrated with the method in Section 2.2. ${}^{SF_i}T_{SF1}$ is the transform relationship between the first camera pose (SF1) and the i-th camera pose (SFi), which is also calibrated with the method presented in Section 2.2. ${}^{SF1}T_{WF}$ is the relationship between SF1 and WF, which is the part pose to be estimated in this method. $[\,I_3\ \ 0\,]$ keeps the first three rows of the 4 × 4 homogeneous product, and $z_i$ is the depth of the point in SFi.

The image point $P_{ci}$ is usually normalized by the internal parameter matrix A of the camera to obtain the normalized image point. Let $v_i$ be the direction vector of the line linking the principal point and the image point:

$$ v_i = A^{-1} P_{ci} $$

Let $M_i$ denote the featured point expressed in the camera frame SFi. The normalized image point is the projection of $M_i$ on the normalized image plane. According to the collinearity equation, $M_i$, the normalized image point and the principal point should be collinear under the idealized pinhole camera model, which can be expressed as follows:

$$ M_i = z_i\,v_i $$

Another way of considering the collinearity property is that the orthogonal projection of the spatial point $M_i$ on the direction vector $v_i$ should be $M_i$ itself, that is:

$$ M_i = V_i\,M_i $$

where

$$ V_i = \frac{v_i v_i^T}{v_i^T v_i} $$

is the line-of-sight projection matrix, which projects a scene point orthogonally onto the line of sight constructed by the image point and the principal point.

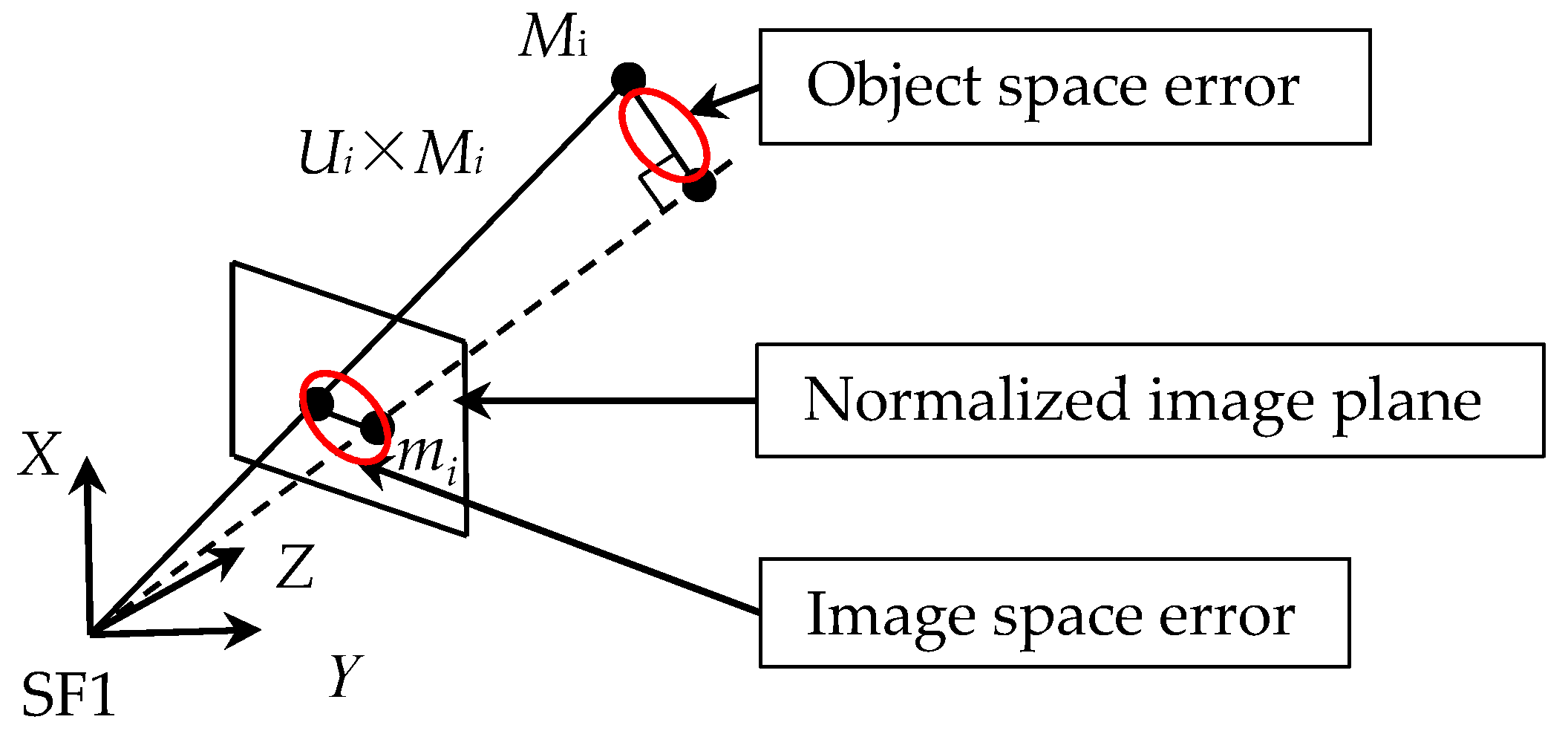

As shown in Figure 4, the collinearity error can be considered in the image space or in the object space. In this paper we penalize the collinearity error in the object space:

$$ e_i = (I - V_i)\,[\,I_3\ \ 0\,]\;{}^{SF_i}T_{SF1}\;{}^{SF1}T_{WF}\;P_{wi} = 0 \qquad (14) $$

where I is the 3 × 3 identity matrix and $V_i$ is the line-of-sight projection matrix of the i-th featured point. Although Equation (14) can be decomposed into three linear equations, the rank of its coefficient matrix is 2, which means we can only obtain two independent equations for a featured point. In practical measurement, with n featured points on the part, we can formulate a system of 2n equations in the elements of the matrix ${}^{SF1}T_{WF}$.
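The rank statement can be checked numerically. The sketch below assumes the usual definition of the line-of-sight projection matrix, V = v vᵀ / (vᵀ v), with v the normalized image point; the intrinsic values and the pixel coordinates are hypothetical.

```python
import numpy as np

def line_of_sight_matrix(v):
    """Orthogonal projector onto the line spanned by the direction vector v."""
    v = np.asarray(v, float)
    return np.outer(v, v) / (v @ v)

# Hypothetical intrinsics and a corrected pixel coordinate [u, v, 1].
A = np.array([[2000.0, 0.0, 1228.0],
              [0.0, 2000.0, 1029.0],
              [0.0, 0.0, 1.0]])
P_c = np.array([1428.0, 1129.0, 1.0])

v = np.linalg.solve(A, P_c)             # normalized image point A^-1 P_c
V = line_of_sight_matrix(v)
E = np.eye(3) - V                       # coefficient of the object-space error
rank_E = np.linalg.matrix_rank(E)       # only two independent equations per point

on_ray = 750.0 * v                      # a point lying on the line of sight
off_ray = on_ray + np.array([1.0, 0.0, 0.0])   # the same point shifted by 1 mm in x
err_on = E @ on_ray                     # zero: the point is collinear
err_off = np.linalg.norm(E @ off_ray)   # distance of the shifted point from the line
```

Because I − V removes the component along the line of sight, the error vector measures only the perpendicular offset of a point from that line, which is why depth along a single ray is not constrained by one featured point.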

Because of the coefficient error in Equation (14), which is caused by measurement error, the rotation matrix R of ${}^{SF1}T_{WF}$ does not in general satisfy the orthogonality constraint. In this paper we therefore adopt a nonlinear method to determine ${}^{SF1}T_{WF}$, which takes the orthogonality constraint into account as follows:

$$ R^T R = I \qquad (15) $$

In principle, the unknown pose can be determined from the equations provided by only three featured points; in consideration of measurement error and efficiency, we choose at least four featured points to improve the accuracy.

Based on Equations (14) and (15), the pose ${}^{SF1}T_{WF}$ can be obtained by minimizing the following function:

where M is the penalty factor. The Levenberg-Marquardt algorithm [25,26,27] is used to solve the nonlinear minimization problem in Equation (16).
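A minimal sketch of this minimization on synthetic, noise-free data is given below. It assumes that the objective is the sum of squared object-space collinearity errors plus a penalty factor times the squared entries of RᵀR − I, and it uses a small hand-written Levenberg-Marquardt loop with a finite-difference Jacobian rather than any particular library routine. The scene geometry, the function names and the penalty value are hypothetical.

```python
import numpy as np

def rodrigues(w):
    """Rotation matrix from a rotation vector w (axis times angle, rad)."""
    th = np.linalg.norm(w)
    if th < 1e-12:
        return np.eye(3)
    k = w / th
    K = np.array([[0, -k[2], k[1]], [k[2], 0, -k[0]], [-k[1], k[0], 0]])
    return np.eye(3) + np.sin(th) * K + (1 - np.cos(th)) * (K @ K)

def los(v):
    """Line-of-sight projection matrix of a normalized image point v."""
    return np.outer(v, v) / (v @ v)

def residuals(x, V, cams, pts, penalty):
    """x holds the 12 entries of [R | t] (part pose in SF1), row-major."""
    Rt = x.reshape(3, 4)
    R, t = Rt[:, :3], Rt[:, 3]
    res = [(np.eye(3) - Vi) @ (Ri @ (R @ p + t) + ti)     # collinearity errors
           for Vi, (Ri, ti), p in zip(V, cams, pts)]
    G = R.T @ R - np.eye(3)                               # orthogonality violation
    res.append(np.sqrt(penalty) * G[np.triu_indices(3)])
    return np.concatenate(res)

def levenberg_marquardt(f, x0, iters=200, lam=1e-3, eps=1e-6):
    x = np.array(x0, float)
    r = f(x)
    for _ in range(iters):
        J = np.empty((r.size, x.size))
        for k in range(x.size):                           # forward differences
            dx = np.zeros(x.size)
            dx[k] = eps
            J[:, k] = (f(x + dx) - r) / eps
        H = J.T @ J
        step = np.linalg.solve(H + lam * np.diag(np.diag(H)), -J.T @ r)
        r_new = f(x + step)
        if r_new @ r_new < r @ r:                         # accept, relax damping
            x, r, lam = x + step, r_new, lam / 3.0
        else:                                             # reject, damp harder
            lam *= 5.0
    return x

# Synthetic scene (mm): four featured points, one camera pose per point.
pts = [np.array(p, float) for p in
       [(0, 0, 0), (2000, 0, 0), (2000, 1000, 30), (0, 1000, 0)]]
centers = [np.array(c, float) for c in
           [(0, 0, 0), (2400, 0, 0), (2400, 1300, 0), (0, 1300, 0)]]
cam_rots = [rodrigues(np.array([0.0, 0.0, a])) for a in (0.0, 0.1, -0.1, 0.05)]
cams = [(Ri, -Ri @ c) for Ri, c in zip(cam_rots, centers)]   # SF1 -> SFi

t0 = np.array([200.0, 150.0, 600.0])                 # initial (taught) part pose
R_true = rodrigues(np.array([0.02, -0.015, 0.05]))   # deviated part pose
t_true = R_true @ t0 + np.array([12.0, -8.0, 15.0])

V = []
for (Ri, ti), p in zip(cams, pts):
    M = Ri @ (R_true @ p + t_true) + ti              # point in camera frame SFi
    V.append(los(M / M[2]))                          # noise-free normalized image point

x0 = np.hstack([np.eye(3), t0[:, None]]).ravel()     # start from the initial pose
x = levenberg_marquardt(lambda y: residuals(y, V, cams, pts, 1e6), x0)
R_est, t_est = x.reshape(3, 4)[:, :3], x.reshape(3, 4)[:, 3]
```

The camera centers are deliberately offset from the featured points so that the four lines of sight are not parallel; with parallel lines of sight the translation along them would be unobservable.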

3.3. Estimation of the Initial Iteration Value

For most iterative optimization algorithms, a relatively accurate estimation of the initial iteration value is generally necessary. This is not only for an accurate result, but also to reduce the number of iterations. Consequently, we adopt an estimation method to obtain a relatively accurate initial value. The method is expounded as follows.

In robotic intelligent grasping applications, the deviation between the poses of the initial part and the practical part is relatively small compared with the part size. The poses of the initial part and the practical part relative to SF1 can therefore be associated through the differential transformation:

where $\Delta$ is the differential transformation matrix. The differential transformation is based on Taylor expansion and is usually used to deal with non-linear problems.

Then Equation (11) can be rewritten as:

and Equation (14) can be expanded as:

where $V_i$ is the line-of-sight projection matrix; $P_{wi}$ is the corresponding coordinate in WF; and ${}^{SF1}T_{WF_0}$ is the transformation matrix of the initial part, which can also be calibrated beforehand. Equation (19) can be rewritten as:

Also, the rank of the coefficient matrix in Equation (20) is 2, so we can only acquire two equations for one featured point $P_{wi}$. With n featured points on the part, we can formulate a system of 2n linear equations, and a matrix equation can then be obtained in the form $Ax = B$ with $x = [\delta_x\ \delta_y\ \delta_z\ d_x\ d_y\ d_z]^T$. x can be solved by means of the least-squares method as follows:

$$ x = (A^T A)^{-1} A^T B \qquad (21) $$

Note that Equation (17) is based on the approximation condition that the rotation angle between the practical part and the initial part is small, and we make the following assumption:

The approximation condition in Equation (22) means that the deviation between the pose of the practical part and the pose of the initial part should be small. When the rotation angle is large, the differential transformation introduces significant error, so the solution of Equation (20) can only offer an estimate of the initial iteration value for Equation (16).
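The linear initial estimate can be sketched as follows. The code assumes that the differential transformation is applied on the left of the initial pose and is expressed in SF1, so that a featured point q at the initial pose moves to q + δ × q + d. This convention, the function names and the synthetic scene are assumptions for illustration.

```python
import numpy as np

def skew(q):
    """Cross-product matrix: skew(q) @ a equals np.cross(q, a)."""
    return np.array([[0.0, -q[2], q[1]],
                     [q[2], 0.0, -q[0]],
                     [-q[1], q[0], 0.0]])

def rodrigues(w):
    """Exact rotation matrix for a non-zero rotation vector w (rad)."""
    th = np.linalg.norm(w)
    K = skew(w / th)
    return np.eye(3) + np.sin(th) * K + (1.0 - np.cos(th)) * (K @ K)

def los(v):
    """Line-of-sight projection matrix of a normalized image point v."""
    return np.outer(v, v) / (v @ v)

def initial_estimate(V, cams, pts_wf, R0, t0):
    """Least-squares solution x = [delta (rad), d (mm)] of the linearized system."""
    rows, rhs = [], []
    for Vi, (Ri, ti), p in zip(V, cams, pts_wf):
        q = R0 @ p + t0                          # point at the initial pose, in SF1
        C = (np.eye(3) - Vi) @ Ri
        rows.append(np.hstack([-C @ skew(q), C]))        # delta x q = -skew(q) delta
        rhs.append(-(np.eye(3) - Vi) @ (Ri @ q + ti))
    x, *_ = np.linalg.lstsq(np.vstack(rows), np.concatenate(rhs), rcond=None)
    return x

# Synthetic scene (mm): four featured points, cameras translated but not rotated.
pts = [np.array(p, float) for p in
       [(0, 0, 0), (2000, 0, 0), (2000, 1000, 30), (0, 1000, 0)]]
centers = [np.array(c, float) for c in
           [(0, 0, 0), (2400, 0, 0), (2400, 1300, 0), (0, 1300, 0)]]
cams = [(np.eye(3), -c) for c in centers]        # SF1 -> SFi

R0, t0 = np.eye(3), np.array([200.0, 150.0, 600.0])   # initial part pose in SF1
delta_true = np.array([0.001, -0.0008, 0.0012])       # small rotation (rad)
d_true = np.array([5.0, -3.0, 2.0])                   # small translation (mm)
R_dev = rodrigues(delta_true)                         # exact, not linearized

V = []
for (Ri, ti), p in zip(cams, pts):
    M = Ri @ (R_dev @ (R0 @ p + t0) + d_true) + ti    # practical point in SFi
    V.append(los(M / M[2]))

x = initial_estimate(V, cams, pts, R0, t0)
delta_est, d_est = x[:3], x[3:]
```

Because the synthetic deviation uses an exact rotation while the solver uses its first-order approximation, the estimate is close to, but not identical with, the true deviation, which is consistent with using it only as a starting value.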

4. Camera Pose Optimization

As described above, the camera is mounted on the robot end-flange and oriented to measure the featured points on the part from different robot poses. The measuring poses of the camera therefore affect the final accuracy of the part pose estimation. In order to optimize the camera poses and obtain higher accuracy, the uncertainty propagation model of the objective function is analyzed here.

Mathematically, the model for a multivariate system takes the form of an implicit relationship:

where P denotes the measured or influence quantities (the input quantities) and X denotes the measurand (the output quantities).

For the system described in this paper, X comprises the parameters of the transform relationship between SF1 and WF (${}^{SF1}T_{WF}$) and P comprises the image coordinates of the featured points extracted from the captured images.

and Equation (16) can be rewritten as:

In order to meet the minimization condition $E(X, P) = \min$, the derivative of $E$ with respect to $X$ in Equation (25) should equal zero, that is:

In this paper, the covariance matrices $Q_X$ and $Q_P$ are used to express the measuring uncertainty of X and P as follows:

where $\sigma_u$ and $\sigma_v$ are the uncertainties of the extracted pixel coordinates of the featured points in the image x and y directions.

Based on implicit differentiation, $Q_P$ and $Q_X$ satisfy the following relationship:

and $Q_X$ can be expressed as follows:

with

.

Suppose the uncertainties of the extracted pixel coordinates conform to a two-dimensional normal distribution with zero mean:

We have:

where $\sigma$ is the uncertainty of the featured points introduced by the image processing error.

Equation (31) reveals how the measuring uncertainty of P influences the uncertainty of X. To decrease the uncertainty of X, we should not only decrease the uncertainty of P (the extracted image coordinates) but also decrease the influence of the uncertainty of P. The uncertainty of P can be decreased by using a high-resolution camera or improving the accuracy of the image processing algorithm, and the influence of the uncertainty of P can be decreased by reducing the trace of $HH^T$ through optimization of the camera measuring pose.
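The propagation rule can be tried on a toy problem. The sketch below assumes the standard implicit-function result H = −(∂F/∂X)⁻¹ ∂F/∂P with F = ∂E/∂X, and Q_X = σ² H Hᵀ when all input coordinates share the same independent uncertainty σ. The linear model used here is hypothetical and only serves to make the result checkable against the pseudo-inverse.

```python
import numpy as np

def sensitivity(dF_dX, dF_dP):
    """H = -(dF/dX)^-1 dF/dP for the implicit relation F(X, P) = 0."""
    return -np.linalg.solve(dF_dX, dF_dP)

def covariance_of_X(H, sigma):
    """Q_X = H Q_P H^T with Q_P = sigma^2 I."""
    return sigma ** 2 * (H @ H.T)

# Toy model: E(X, P) = ||B X - P||^2, so F = dE/dX = 2 B^T (B X - P).
B = np.array([[1.0, 0.0],
              [1.0, 1.0],
              [1.0, 2.0],
              [1.0, 3.0]])
dF_dX = 2.0 * B.T @ B
dF_dP = -2.0 * B.T

H = sensitivity(dF_dX, dF_dP)
Q_X = covariance_of_X(H, sigma=0.1)
influence = np.sqrt(np.trace(H @ H.T))   # quantity to reduce when choosing poses
```

For this linear model H reduces to the pseudo-inverse of B, so a better-conditioned B (in the paper's setting, a better choice of camera measuring poses) gives a smaller trace of H Hᵀ for the same pixel uncertainty.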

In this paper, we take roof intelligent grasping as an example: the camera has to move to four different poses and measure four different featured points on the roof. In order to obtain a clear picture of the featured points, the camera usually stops right above them. That is, the measuring orientations of the camera are constrained by the featured points, but the positions of the camera can be optimized. In the simulation experiment, we adjust the camera positions so that the pixel image coordinates of the featured points move from the image center to the image boundaries. The square root of the trace of $HH^T$ changes as shown in Figure 5, where X and Y denote the pixel distances from the image center along the two image directions.

As we can see in Figure 5, when the featured point moves away from the principal point, the influence of the uncertainty of the image points becomes smaller, but the decrease slows down when the distance exceeds 400 pixels (the simulated camera has a resolution of 2456 × 2058). For practical engineering applications, in consideration of lighting conditions and other factors, we suggest that the featured points be imaged at about 1/4 of the image length away from the edge of the image plane.