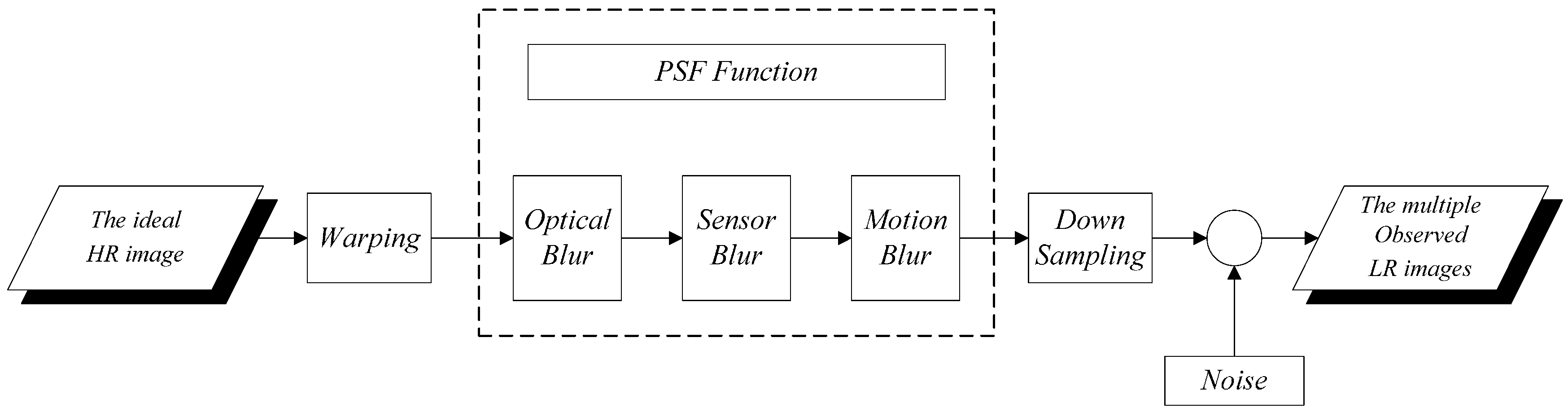

2.1. Observation Model

Generally, SR image reconstruction is an inverse problem in which the HR image is recovered from degraded LR images. The HR image is obtained under certain conditions, such as satisfying the Nyquist sampling theorem, and is affected in the acquisition process by some of the inherent resolution limitations of sensors [24], including warping, blurring, subsampling, and additive noise. Therefore, an observation model (1) can be formulated, which relates the ideal HR image $x$ to the corresponding $k$-th observed LR image $y_k$. The model can overcome the inherent resolution limitation of the LR imaging systems. The LR images display different subpixel shifts from each other because the spatial resolution is too low to capture all the details of the original scene. Finally, a goal image with denser pixel values and richer image information, called the HR image, will be achieved:

$$y_k = D B_k M_k x + n_k \qquad (1)$$

where $x$ is the ideal undegraded image required to be calculated, $y_k$ is the observed LR image, and $B_k$ represents the blur matrix, including relative camera-scene motion blur, sensor blur, atmospheric turbulence, and optical blur. Generally, the blur matrix is modeled as convolution with an unknown PSF to estimate blurs [25]. $M_k$ is the warp matrix (e.g., rotation, translation, scaling, and so on). The relative movement parameters can be estimated using the subpixel shifts of the multiple LR images. $D$ represents a subsampling matrix, and $n_k$ is the lexicographically ordered noise vector. The observation model is illustrated in Figure 1.
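The chain of degradations described above (warp, blur, subsample, add noise) can be sketched as a small forward simulation. This is only a minimal illustration under stated assumptions, not the implementation used in this work: the warp is restricted to an integer translation, the blur is an isotropic Gaussian PSF, and the function names `gaussian_kernel`, `gaussian_blur`, and `observe` are hypothetical.

```python
import numpy as np

def gaussian_kernel(sigma):
    """Normalized 1-D Gaussian kernel truncated at +/- 4 sigma."""
    radius = int(np.ceil(4 * sigma))
    x = np.arange(-radius, radius + 1)
    kernel = np.exp(-x**2 / (2.0 * sigma**2))
    return kernel / kernel.sum()

def gaussian_blur(image, sigma):
    """Separable Gaussian blur with edge-replicated borders."""
    kernel = gaussian_kernel(sigma)
    radius = kernel.size // 2
    padded = np.pad(image, radius, mode="edge")
    rows = np.apply_along_axis(np.convolve, 1, padded, kernel, mode="valid")
    return np.apply_along_axis(np.convolve, 0, rows, kernel, mode="valid")

def observe(hr, shift, sigma, factor, noise_std, rng):
    """Simulate one LR observation of the HR image `hr`."""
    warped = np.roll(hr, shift, axis=(0, 1))    # warp (assumed: integer translation only)
    blurred = gaussian_blur(warped, sigma)      # blur (assumed: Gaussian PSF)
    subsampled = blurred[::factor, ::factor]    # subsampling
    noise = rng.normal(0.0, noise_std, subsampled.shape)  # additive noise
    return subsampled + noise

# Example: four LR observations of one HR image with different shifts.
rng = np.random.default_rng(0)
hr_image = rng.random((64, 64))
lr_images = [observe(hr_image, s, 1.0, 2, 0.01, rng)
             for s in [(0, 0), (0, 1), (1, 0), (1, 1)]]
```

Each simulated LR image samples the scene at a different offset, which is the property that SR reconstruction exploits when it combines several LR images.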

Image degradation occurs when the acquired image is corrupted by many factors. The image degradation process can be viewed as a linear invariant system, in which the noise can be ignored. The degradation model is described by Equation (2):

$$g = f * h + n \qquad (2)$$

The model is composed of four main attributes: the original image without degradation $f$, the degraded image $g$, a PSF $h$, and some noise $n$; $*$ is the convolution operator. To restore the quality of the image, $h$ can be estimated using PSF estimation methods, such as the knife-edge method. When the original image is processed by downsampling, the PSF of the downsampled image is obtained. However, this PSF does not apply to model (2); hence, the relationship between the PSF of the original image and the PSF of the downsampled image is derived in Section 2.3. The derived formula will be applied to the SR reconstruction model.

2.3. Relationship between the PSFs of the HR and LR Images

In this section, the relationship between the PSF of the HR image and the PSF of the LR image is deduced, and a simulation experiment is designed to verify the correctness of the formula. The derived formula is shown to be suitable for SR reconstruction: in the process of SR reconstruction, the PSF of the HR image can be estimated from the PSF of the LR image and the downsampling ratio.

When knife-edge areas are extracted from a remote sensing image with gray values from 0 to 1, the original signal along the gradient direction is represented by the unit step signal. Given the PSF and the degrading and downsampling operator, the signal finally observed in the image can be expressed. Equation (8) can be determined in the first downsampling model:

where the downsampling operator can be taken as a one-dimensional downsampling by a given multiple, which is equivalent to compressing the function by that multiple. The formula is shown in (9):

Therefore, when the variable is less than 0, the value of the unit step signal is 0; when the variable is greater than 0, the value is 1. With general downsampling using the convolution operation, the knife-edge areas can be mathematically formulated as follows:

According to the first downsampling model, Equation (11) can be deduced:

Based on the principle of the knife-edge method, the PSF can be obtained as the derivative of the edge spread function (ESF). The PSF is calculated by the knife-edge method as shown in Equation (12):

We assume that the original signal can be restored effectively using the PSF calculated by the knife-edge method. First, the downsampled image is deconvolved with this PSF. The deconvolved image is then upsampled so that the original image can be obtained, as shown in Equation (13):

where

is the deconvolution operation. Upsampling operator

can be taken as the calculation of one-dimensional upsampling multiples of

, which is the equivalent of the

compression from the two-dimensional function of two coordinate axes, as shown in (14):

If Equation (14) is correct, Equation (15) must hold:

Hypothesis (13) is tenable because Equations (11) and (16) yield the same results; hence, Equation (15) is correct.

This proves that the original signal can be restored effectively using the PSF of the existing degraded image. The result can be applied in the process of image SR reconstruction: the PSF of the LR image calculated by the knife-edge method can be used in the SR reconstruction process.

The PSF is approximated by Gaussian functions with appropriate parameters because the PSF follows a Gaussian distribution [27,28,29]. The PSF of the HR image can be written as follows:

The PSF of the LR image can be expressed using Equation (18):

Therefore, the relationship between the Gaussian function parameters of the PSFs of the HR and LR images can be deduced from Equation (12), as shown in Equation (20):
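As a hedged illustration of why a linear relationship between the two Gaussian parameters is to be expected, consider a one-dimensional Gaussian line spread function. The symbols $\sigma_H$, $\sigma_L$, and $s$ (the downsampling ratio) are notation assumed for this sketch and are not taken from the original equations. In HR pixel units,

$$h_H(x) = \frac{1}{\sqrt{2\pi}\,\sigma_H}\exp\!\left(-\frac{x^2}{2\sigma_H^2}\right).$$

Downsampling by a ratio $s$ means that one LR pixel spans $s$ HR pixels, so the LR coordinate is $x' = x/s$. Expressing the same profile in LR pixel units and renormalizing gives

$$h_L(x') = s\,h_H(s x') = \frac{1}{\sqrt{2\pi}\,(\sigma_H/s)}\exp\!\left(-\frac{x'^2}{2(\sigma_H/s)^2}\right),$$

which is again a Gaussian with parameter

$$\sigma_L = \frac{\sigma_H}{s}.$$

For $s = 2$, this gives $\sigma_L = \sigma_H/2$, which agrees with the halving of the Gaussian parameter expected in the real-image experiment later in this section.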

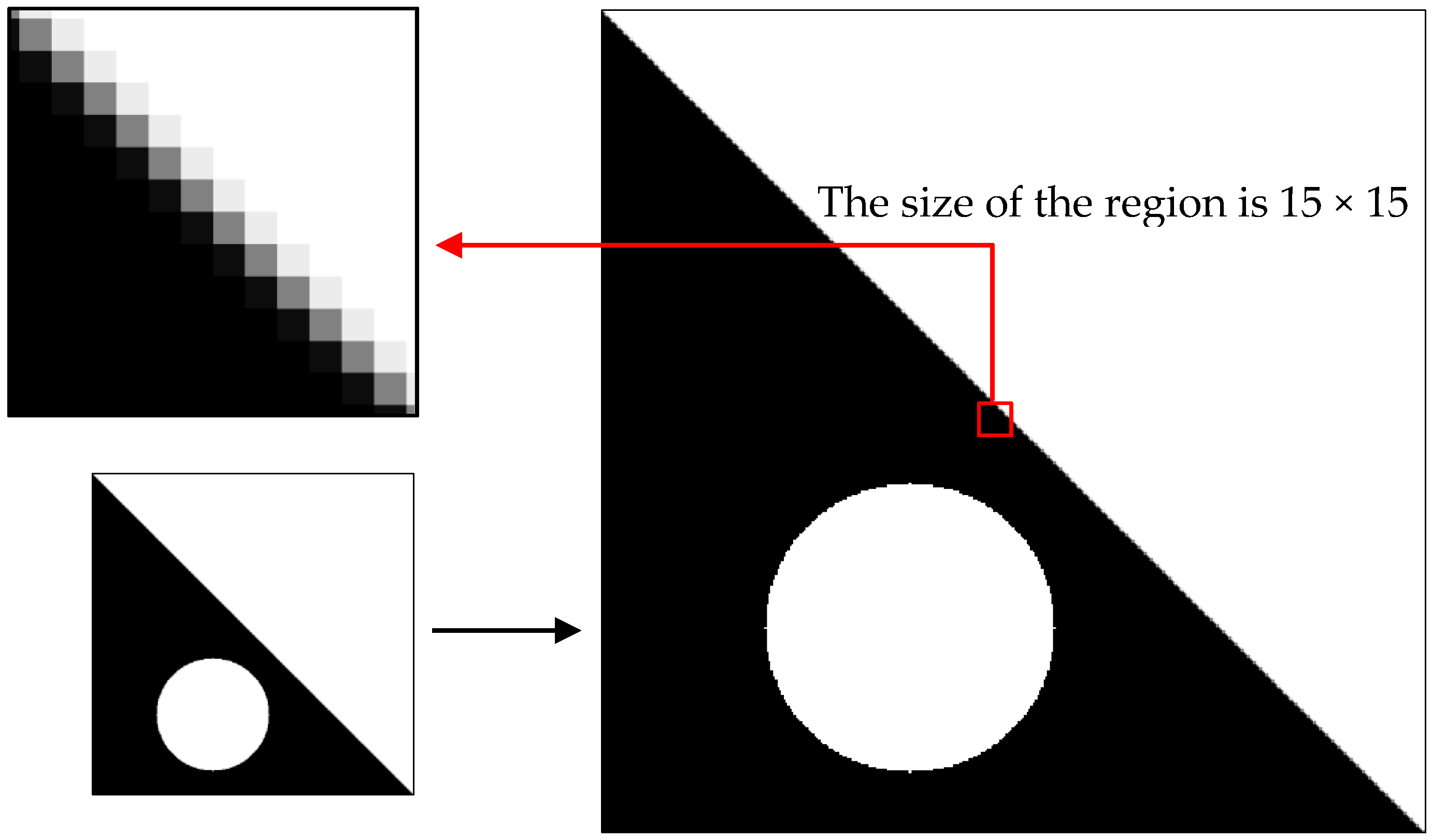

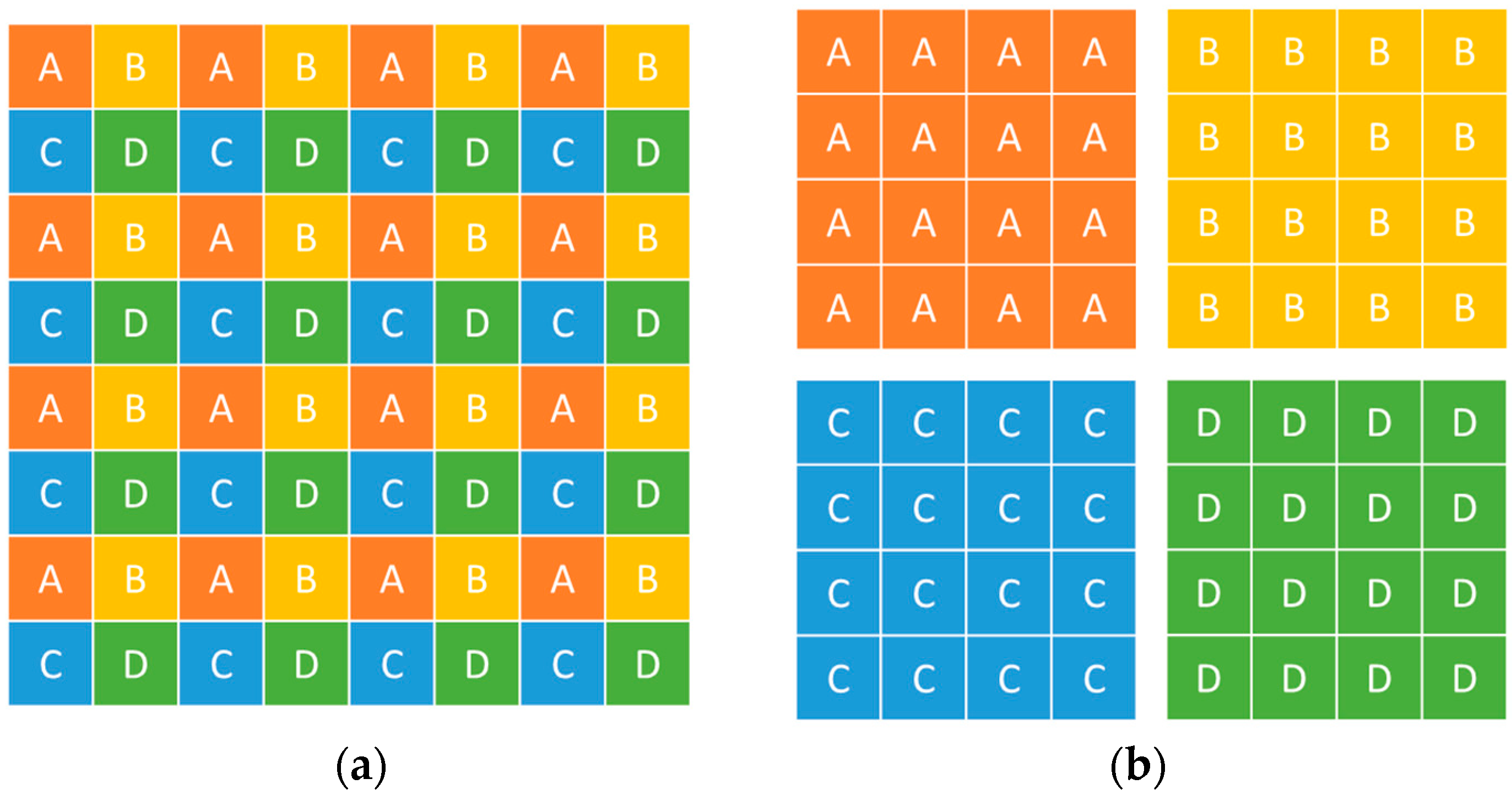

The simulation experiment is conducted to verify the proposed computation formula. A man-made knife-edge figure is drawn using computer language, and the figure is degraded by convolution by using the PSF, which is estimated by Gaussian functions. The selection process of the knife-edge area is shown in

Figure 2. The seven Gaussian function parameters were set at 0.5, 0.75, 1.0, 1.5, 1.75, 2.0, and 2.5. The resized image by resampling is presented with different multiples of

set at 0.5, 1.5, 2.0, 2.5, and 3.0. The knife-edge area must be selected to obtain the Gaussian function parameter of the sampled image; the size of the region is 15 × 15 pixels. According to the formula derived, the result is in correspondence with the original parameter. The results are summarized in

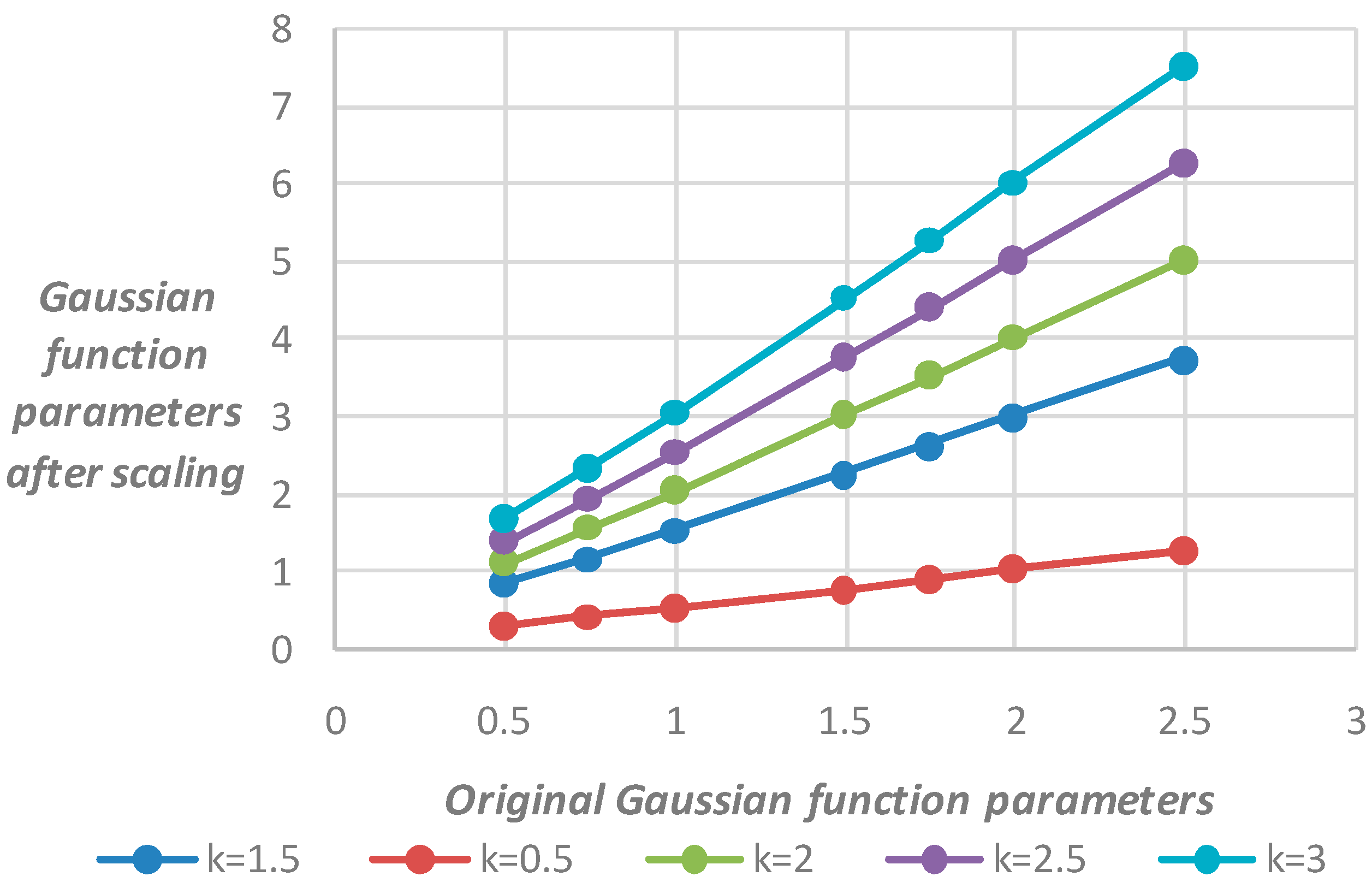

Table 1, and

Figure 3 shows that the Gaussian function parameters between the original and scaled image basically meet the linear relationship.

The results in Figure 3 and Table 1 show that the Gaussian function parameters of the PSF before and after resampling satisfy a linear relationship at every tested scale factor. The ratio of the parameter of the scaled image to the parameter of the original image is close to the ratio predicted by Equation (20).
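A simplified one-dimensional version of this kind of simulation can be sketched as follows. It is an illustration under stated assumptions, not the experiment reported here: the edge is a 1-D step, downsampling is plain decimation by an integer factor, the Gaussian parameter is estimated from the second moment of the differentiated ESF rather than by a fit, and the values σ = 4 and factors 2 and 3 are chosen only for the example. All function names are hypothetical.

```python
import numpy as np

def gaussian_kernel(sigma):
    """Normalized 1-D Gaussian PSF truncated at +/- 4 sigma."""
    radius = int(np.ceil(4 * sigma))
    x = np.arange(-radius, radius + 1)
    kernel = np.exp(-x**2 / (2.0 * sigma**2))
    return kernel / kernel.sum()

def blurred_knife_edge(sigma, length=256):
    """Ideal 0/1 step edge convolved with a Gaussian PSF (this is the ESF)."""
    kernel = gaussian_kernel(sigma)
    radius = kernel.size // 2
    step = (np.arange(length) >= length // 2).astype(float)
    padded = np.pad(step, radius, mode="edge")  # avoid border artifacts
    return np.convolve(padded, kernel, mode="valid")

def estimate_sigma(esf):
    """Differentiate the ESF to get the LSF, then return the square
    root of the LSF's second central moment (in pixels of that ESF)."""
    lsf = np.diff(esf)
    lsf = lsf / lsf.sum()
    x = np.arange(lsf.size)
    mean = np.sum(x * lsf)
    return float(np.sqrt(np.sum((x - mean) ** 2 * lsf)))

def sigma_ratio(sigma_hr, factor):
    """Ratio of the estimated LR sigma to the estimated HR sigma."""
    esf_hr = blurred_knife_edge(sigma_hr)
    esf_lr = esf_hr[::factor]  # assumed downsampling: plain decimation
    return estimate_sigma(esf_lr) / estimate_sigma(esf_hr)

for factor in (2, 3):
    print(factor, sigma_ratio(4.0, factor))
```

Under these assumptions the printed ratios come out close to 1/factor. They are slightly larger because differencing the decimated ESF bins the LSF, which adds a small amount of variance to the estimate.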

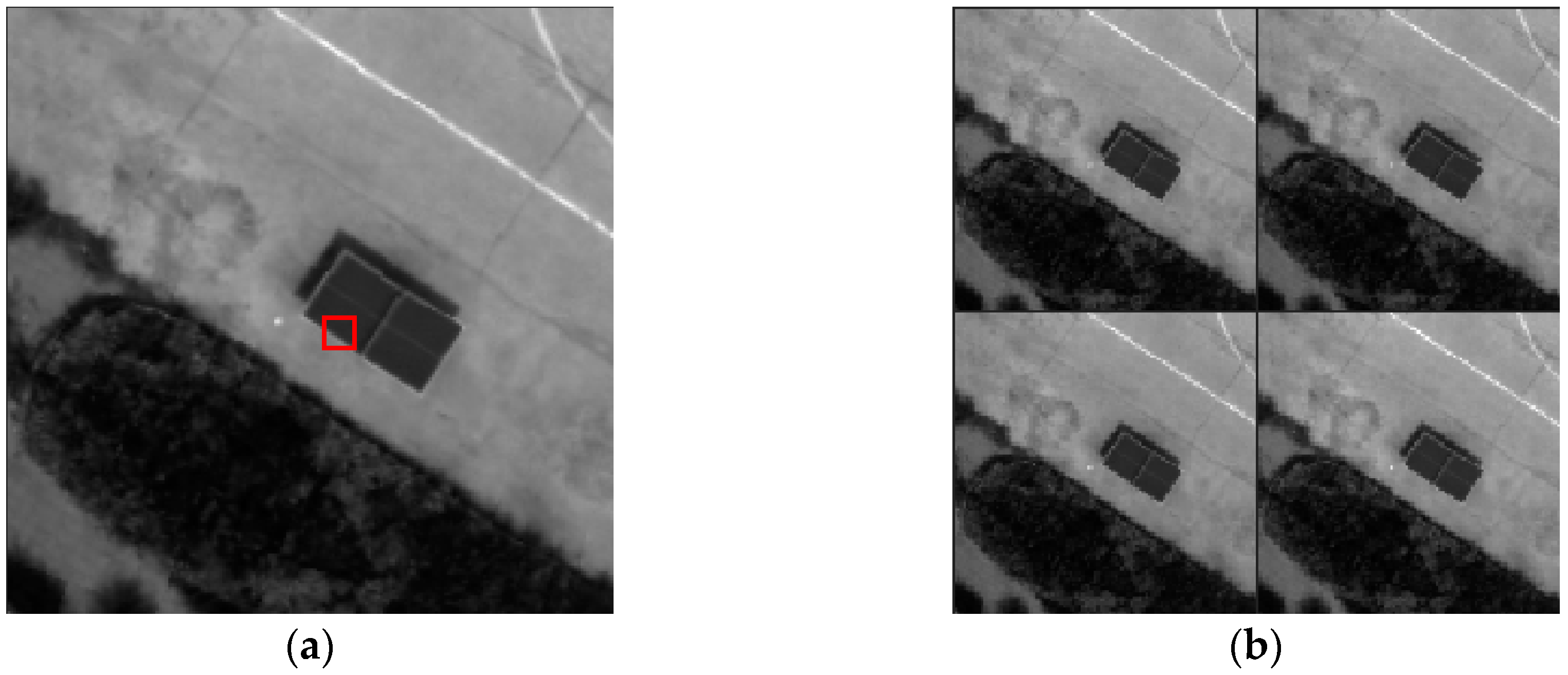

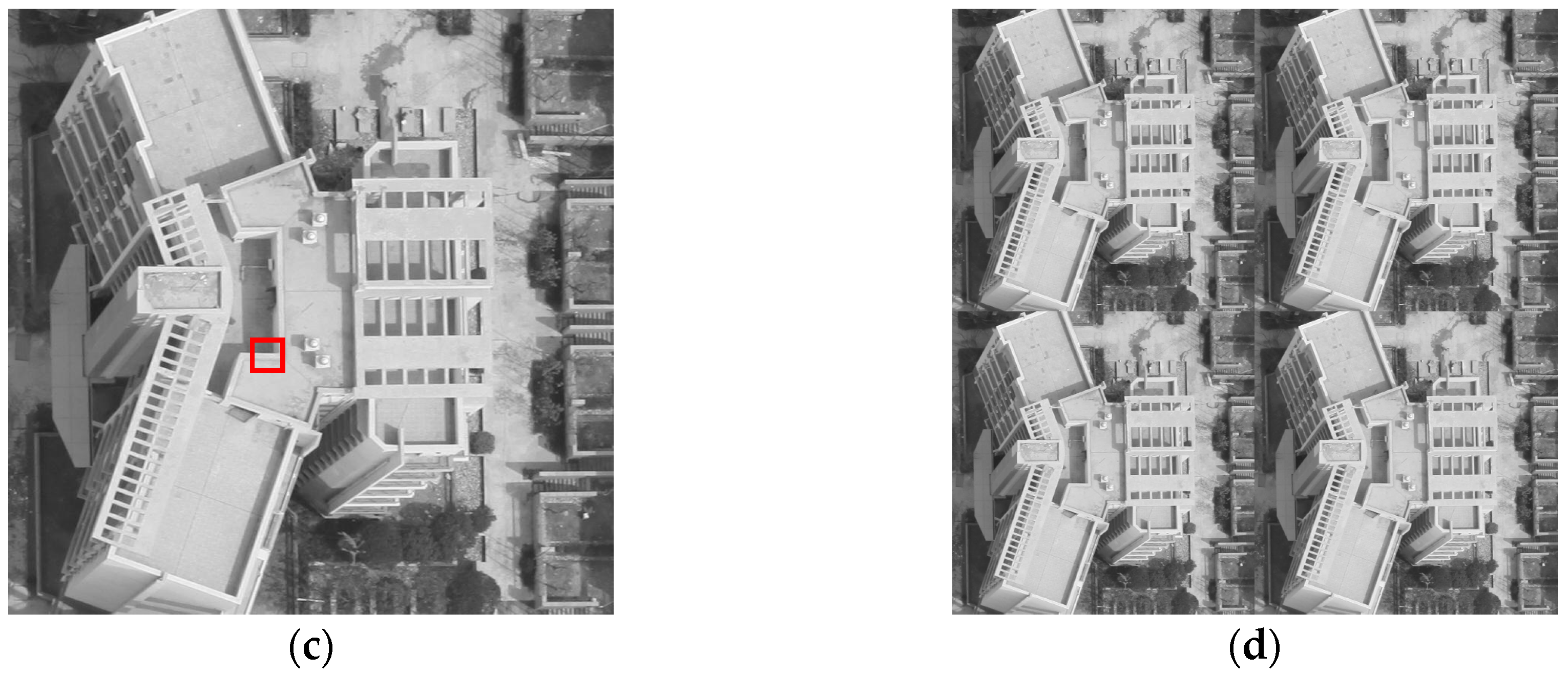

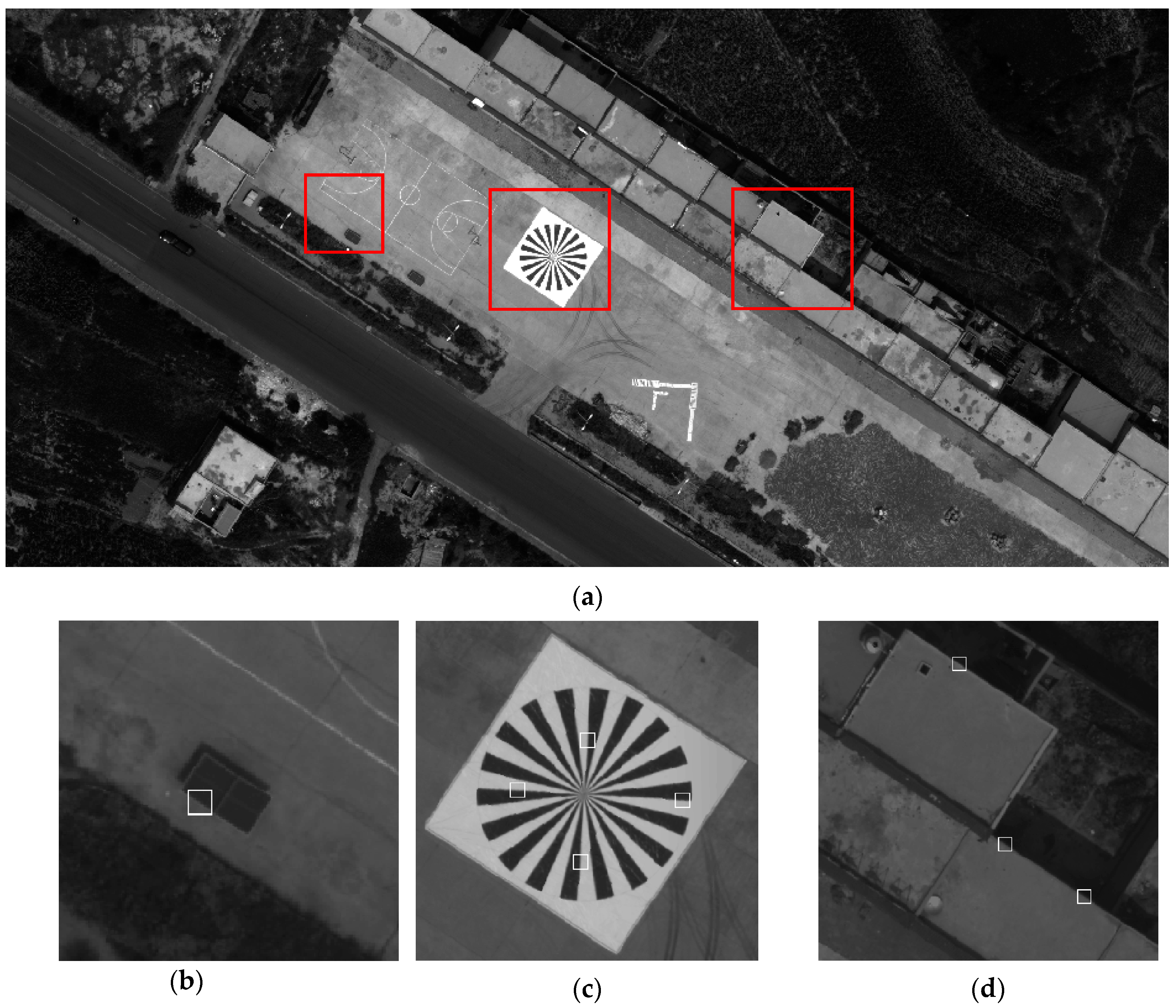

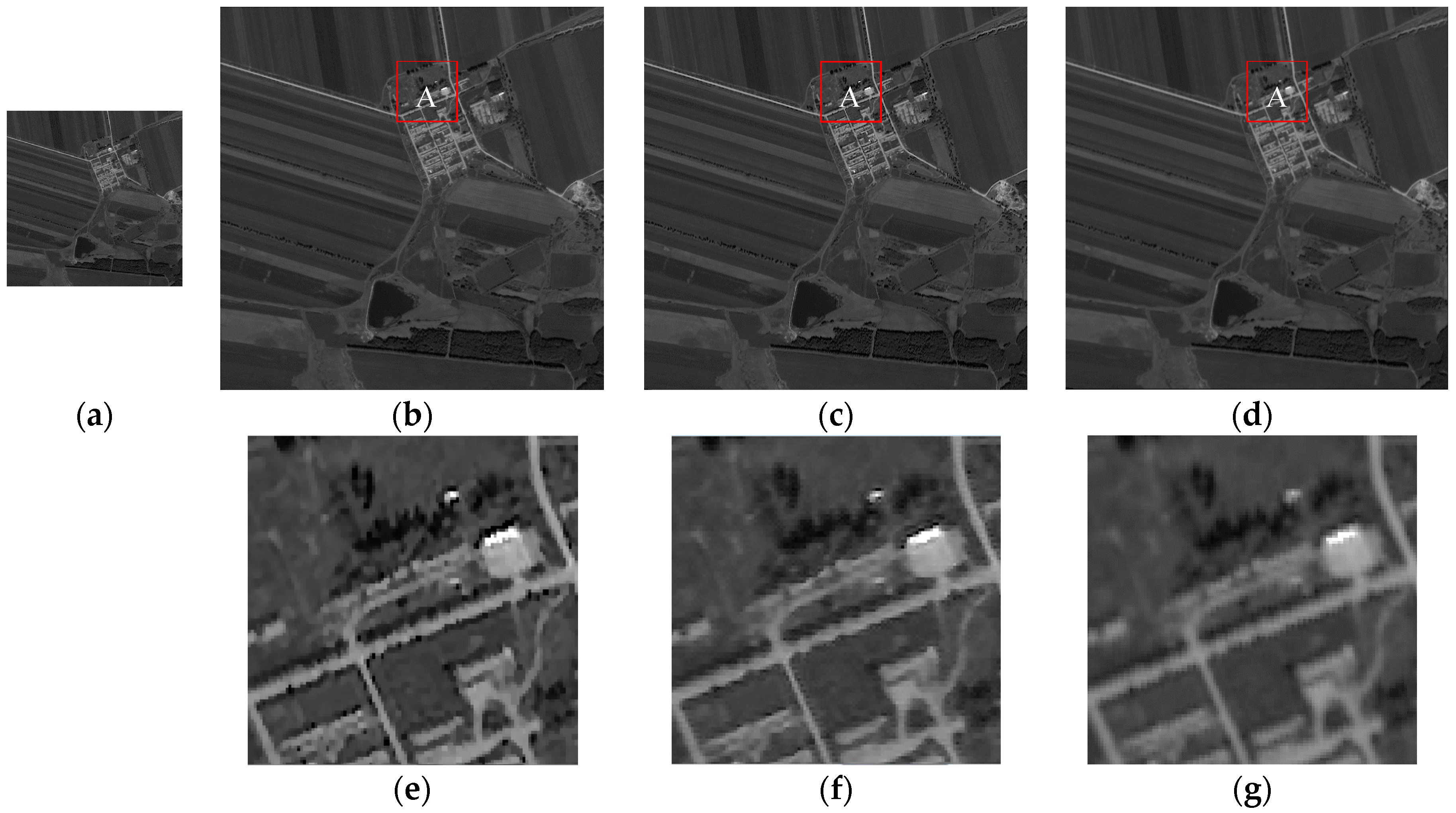

The correctness of Equation (20) for real images is verified by the following experiments. The experimental images, which contain knife-edge areas, are an ADS 40 remote sensing image with a size of 200 × 200 (Example 1) and an unmanned aerial vehicle (UAV) image with a size of 800 × 800 (Example 2). The experimental data are shown in Figure 4.

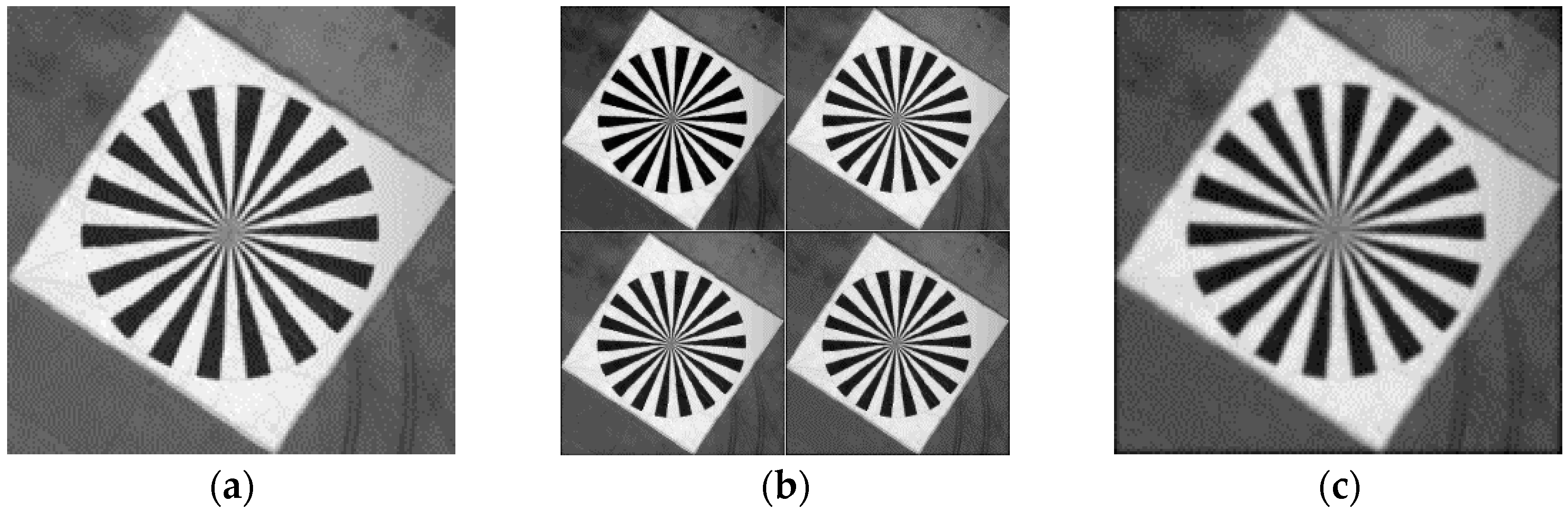

First, four LR images are acquired from the downsampling model, as shown in Figure 5, in which the original image becomes a sequence of LR images with a size of 100 × 100.
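One simple way to obtain four shifted LR images from a single image is polyphase decimation, sketched below. Whether the downsampling model used here takes exactly this form is an assumption; the function name `polyphase_lr_images` is hypothetical, and any blur applied before decimation is omitted.

```python
import numpy as np

def polyphase_lr_images(hr, factor=2):
    """Split an HR image into factor**2 LR images, each starting at a
    different pixel offset, so the LR images are mutually shifted."""
    return [hr[dy::factor, dx::factor]
            for dy in range(factor)
            for dx in range(factor)]

# A 200 x 200 image yields four 100 x 100 LR images for factor 2.
hr_image = np.arange(200 * 200, dtype=float).reshape(200, 200)
lr_images = polyphase_lr_images(hr_image)
print(len(lr_images), lr_images[0].shape)
```

Each LR image then samples the scene on a grid shifted by one HR pixel, that is, by half an LR pixel for a factor of 2.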

Second, the PSFs of the knife-edge areas in the four LR images and in the experimental image are estimated separately using the slant knife-edge PSF estimation method.

Finally, the oversampling rate is set at 2, indicating that the original image is reduced to half its size in the experiment. Based on the deduced relationship between the images before and after downsampling, the Gaussian function parameter σ after downsampling must be half of the original. The results are shown in Table 2. The values coincide with the equation, which shows that SR image reconstruction based on the PSFs of LR images is possible.

2.4. PSF Estimation of Low-Resolution Remote Sensing Images

The optical information of a remote sensing image is blurred during image capture because of relative motion between the photographed object and the satellite, the CCD, or atmospheric turbulence. The PSF of an imaging platform represents the response of the imaging system to a point source. Therefore, calculating the PSF of the acquired image is a significant step in restoring the ideal remote sensing image. The blurring process [30], modeled as a convolution of the image, is shown in Equation (21):

where

is the convolution operator,

represents the PSF,

is the original image,

is the degraded image, and

are the blurring filters.

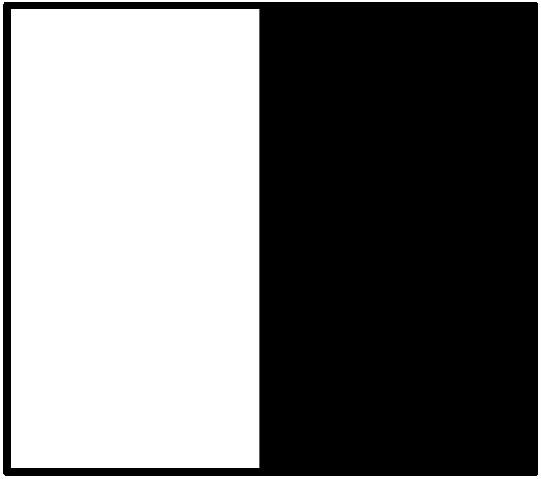

However, even when the PSF [31] is measurable, it is influenced by unpredictable conditions; hence, many methods have been proposed to estimate it. The most common PSF estimation methods are the knife-edge [32], spotlight [33], and pulse [34] methods, of which the knife-edge method is the most commonly employed. The principles of the typical knife-edge method [35,36] and the ideal knife-edge area are shown in Figure 6. The knife-edge area is assumed to be a square area, and the knife edge goes through the center of the area. Each row of the knife-edge area satisfies formula (22), in which the image value is 1 on the side greater than the edge boundary line and 0 on the side less than it [36,37]. However, the typical knife-edge method for PSF estimation is limited by the edge slant angle: the knife edge in the image must be parallel to the sampling direction, and the slant angle should be within 8° [32]. Under most circumstances, slant knife edges have a certain slope relative to the direction of the ideal ones; thus, the ideal knife edge cannot be determined in all situations. Qin et al. [38] estimated the PSF by a robust method to solve this problem in the typical knife-edge method; they built a mathematical model of the relationship between the line spread function (LSF) estimated by the typical knife-edge method and the real PSF. Although a more accurate PSF is obtained, the computed PSF still contains an error because the algorithm uses a discrete function to derive the subpixel error:

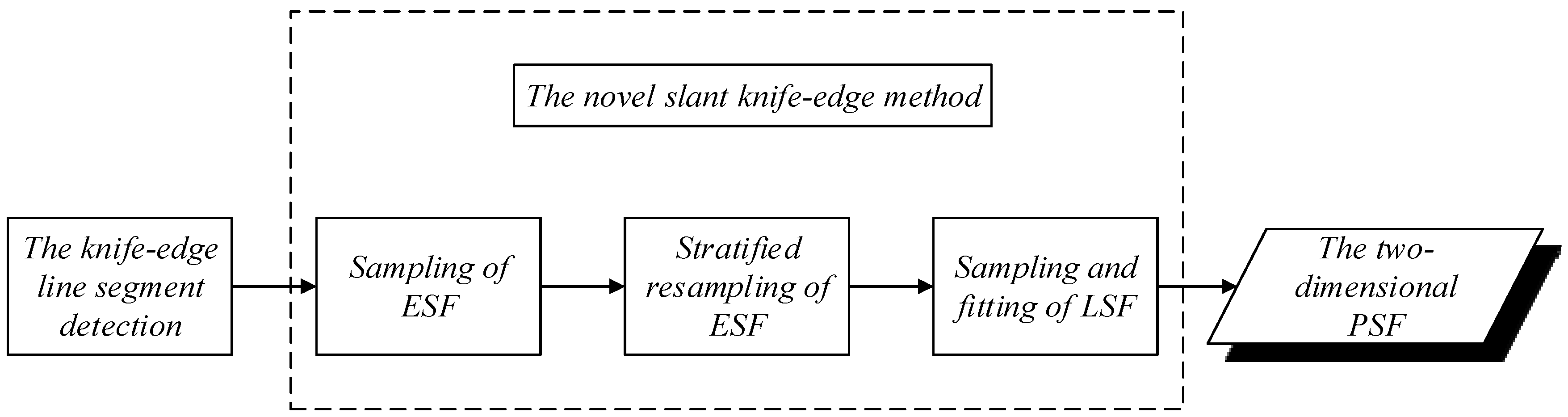

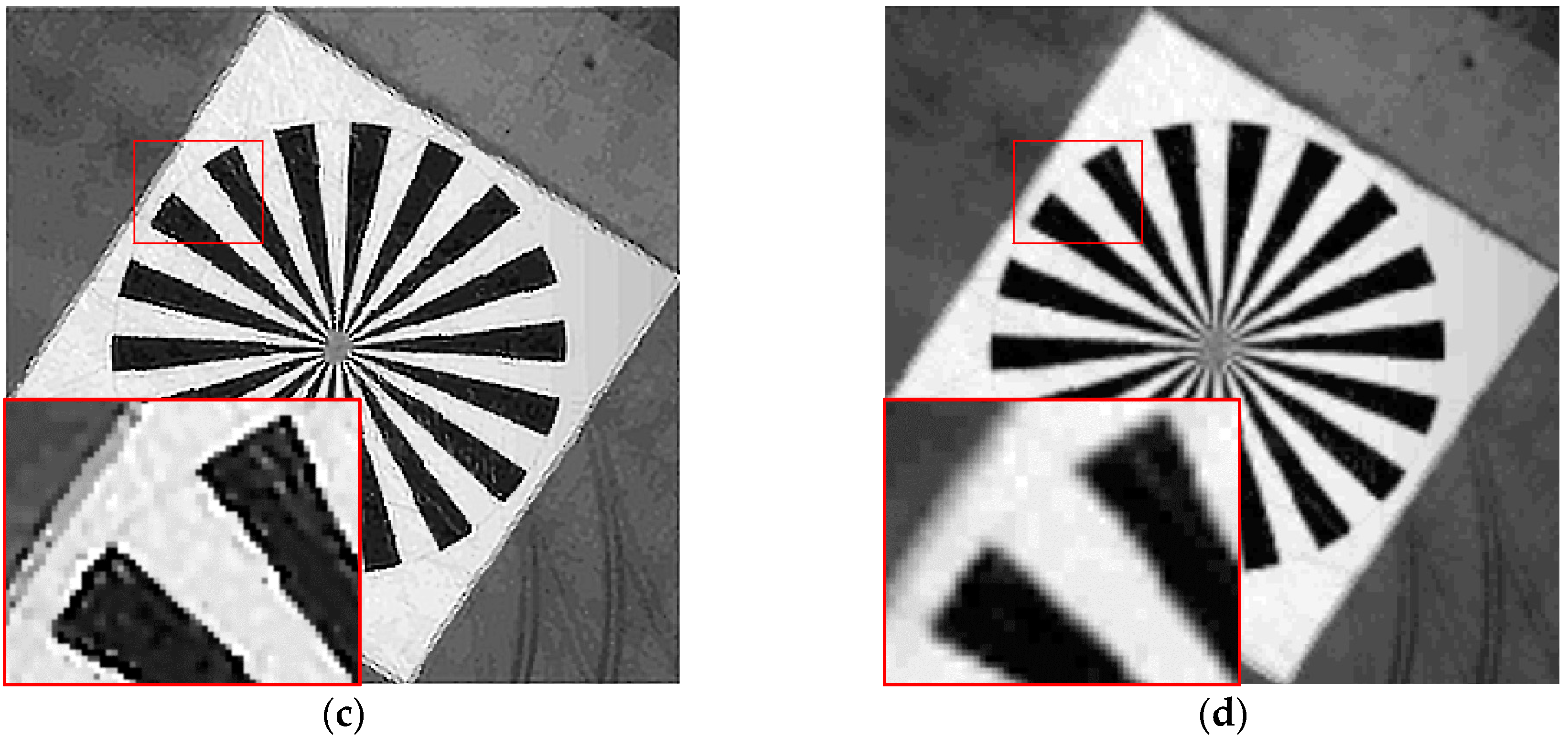

In this study, a novel slant knife-edge method is used because this method fits the LSF directly and ensures the evenness of the edge spread function (ESF) samples to improve the accuracy of the PSF estimation. Figure 7 illustrates the process of the novel slant knife-edge method in a simplified sequence flow diagram. The knife-edge area can be searched using several methods, but the following three requirements must be satisfied:

The area cannot be extracted from the borders of the image, to avoid the noise around the borders.

The edge in the area must be highly linear to ensure the accuracy of the PSF estimation.

The gray values on the two sides of the edge must differ evidently to reduce the influence of noise.
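The three requirements above can be turned into a simple screening test for a candidate square area, sketched below. This is only an illustration: the function name `is_candidate_edge_area`, the per-row edge localization by largest gray-value jump, and all thresholds (`margin`, `max_residual`, `min_contrast`) are assumptions made for the example, and the edge is assumed to be closer to vertical than horizontal within the area.

```python
import numpy as np

def is_candidate_edge_area(image, top, left, size=15, margin=5,
                           max_residual=0.5, min_contrast=0.3):
    """Screen a size x size area of a 2-D grayscale image (values in [0, 1])."""
    height, width = image.shape
    # Requirement 1: stay away from the image borders.
    if (top < margin or left < margin
            or top + size > height - margin
            or left + size > width - margin):
        return False
    patch = image[top:top + size, left:left + size].astype(float)

    # Locate the edge in each row as the largest horizontal gray-value jump.
    jumps = np.abs(np.diff(patch, axis=1))
    edge_cols = np.argmax(jumps, axis=1).astype(float)
    rows = np.arange(size, dtype=float)

    # Requirement 2: the edge positions must lie close to a straight line.
    slope, intercept = np.polyfit(rows, edge_cols, 1)
    fitted = slope * rows + intercept
    if np.sqrt(np.mean((edge_cols - fitted) ** 2)) > max_residual:
        return False

    # Requirement 3: clear gray-value difference between the two sides,
    # measured on pixels at least one column away from the fitted edge.
    cols = np.arange(size)[None, :]
    left_side = cols <= fitted[:, None] - 1
    right_side = cols >= fitted[:, None] + 2
    if not left_side.any() or not right_side.any():
        return False
    contrast = abs(patch[right_side].mean() - patch[left_side].mean())
    return bool(contrast >= min_contrast)
```

In practice the thresholds would need tuning to the noise level and bit depth of the imagery; the values here only make the example concrete.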

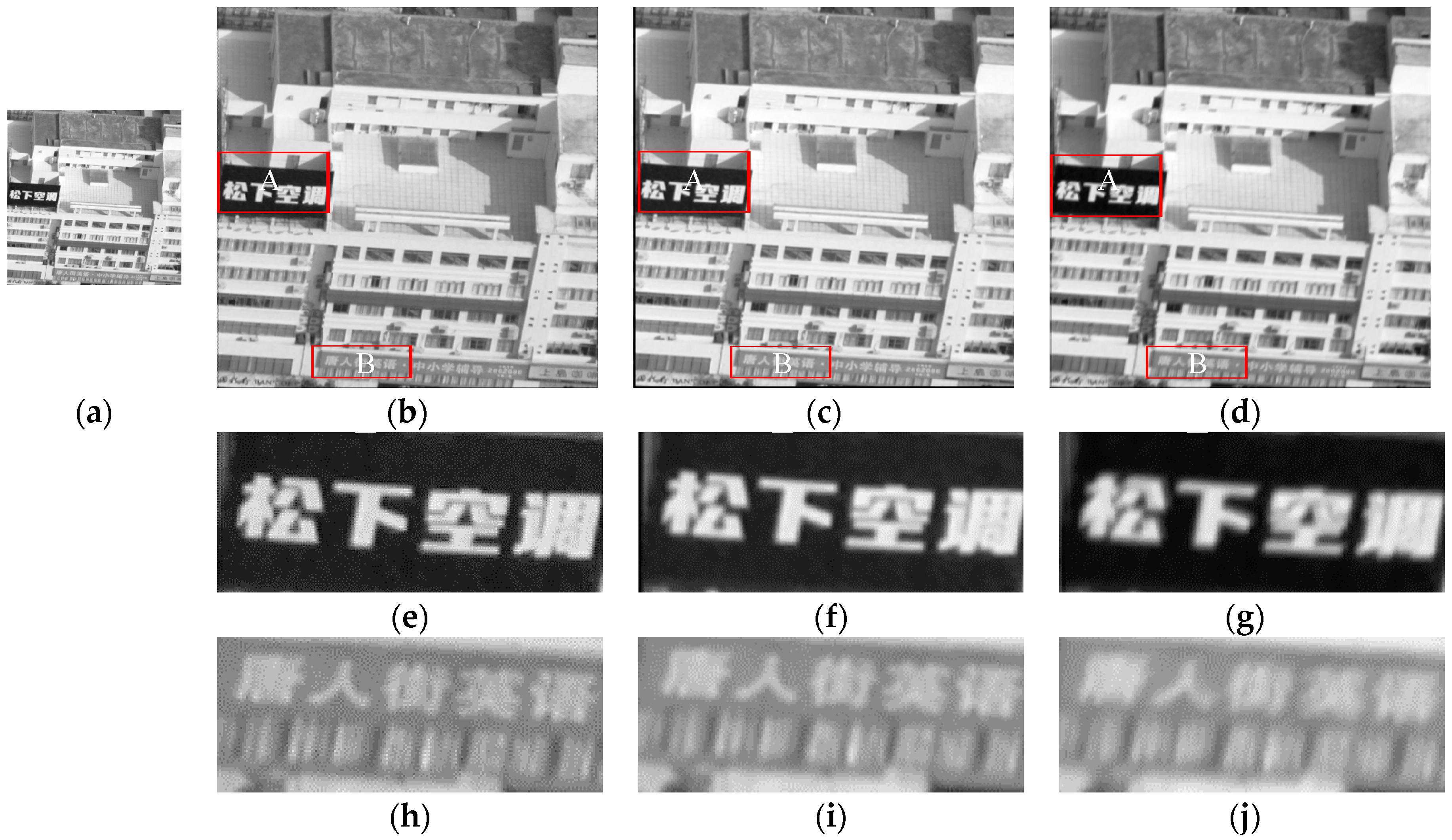

The original data and results of the experiment are displayed in Figure 8, which also shows the measurement area and three examples of extracted knife-edge areas. Most of the knife-edge areas were extracted successfully, and the process of removing the weakly related areas is also displayed. The follow-up steps are described in detail. The ESF sampling, ESF denoising, ESF resampling, and LSF sampling results are shown in Figure 9.