Assessment of Pansharpening Methods Applied to WorldView-2 Imagery Fusion

Abstract

1. Introduction

2. Methodology

2.1. Algorithms

2.1.1. GS and GSA

2.1.2. HR

2.1.3. HCS

- (a)

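- The squares of the multispectral intensity (I²) and of the PAN image (P²) are calculated using Equations (7) and (8), respectively:

$$I^2 = \sum_{i=1}^{n} X_i^2 \quad (7)$$

$$P^2 = \mathrm{PAN}^2 \quad (8)$$

where X_i is the ith MS band and n is the number of MS bands.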

- (b)

- Calculate the mean (μ_P) and standard deviation (σ_P) of P², as well as the mean (μ_I) and standard deviation (σ_I) of I².

- (c)

- P² is adjusted to the mean and standard deviation of I² using Equation (9):

$$P^2_{adj} = \frac{\sigma_I}{\sigma_P}\left(P^2 - \mu_P\right) + \mu_I \quad (9)$$

- (d)

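- The square root of the adjusted P² is assigned to I_adj (i.e., I_adj = √(P²_adj)), and I_adj is used in the reverse transform from the HCS color space back to the original color space using Equation (10):

$$
\begin{aligned}
\hat{X}_1 &= I_{adj}\cos\varphi_1 \\
\hat{X}_i &= I_{adj}\Big(\prod_{k=1}^{i-1}\sin\varphi_k\Big)\cos\varphi_i, \quad i = 2, \ldots, n-1 \\
\hat{X}_n &= I_{adj}\prod_{k=1}^{n-1}\sin\varphi_k
\end{aligned}
\quad (10)
$$

in which \hat{X}_i is the ith fused band and the angular variable φ_i is defined from the MS bands X_i using Equation (11):

$$\varphi_i = \tan^{-1}\left(\frac{\sqrt{X_n^2 + X_{n-1}^2 + \cdots + X_{i+1}^2}}{X_i}\right), \quad i = 1, \ldots, n-1 \quad (11)$$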
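The HCS steps above can be sketched in NumPy. This is a minimal illustration under stated assumptions, not the authors' code: the MS stack is assumed to be already resampled to the PAN grid and non-negative, stored as an array of shape (n, H, W); the helper names `hcs_forward`, `hcs_inverse`, and `hcs_pansharpen` are hypothetical; and the angles follow the standard hyperspherical parameterization written above as Equations (10) and (11). The mean and standard deviation are computed globally over the scene.

```python
import numpy as np


def hcs_forward(ms):
    """Hyperspherical transform of an (n, H, W) stack: returns I and phi_1..phi_{n-1}."""
    ms = np.asarray(ms, dtype=np.float64)
    n = ms.shape[0]
    intensity = np.sqrt(np.sum(ms ** 2, axis=0))
    phis = np.empty((n - 1,) + ms.shape[1:])
    for i in range(n - 1):
        # Eq. (11): numerator is sqrt(X_{i+1}^2 + ... + X_n^2) (bands after band i)
        tail = np.sqrt(np.sum(ms[i + 1:] ** 2, axis=0))
        phis[i] = np.arctan2(tail, ms[i])
    return intensity, phis


def hcs_inverse(intensity, phis):
    """Eq. (10): map (I, phi) back to n Cartesian bands."""
    n = phis.shape[0] + 1
    out = np.empty((n,) + intensity.shape)
    sin_prod = np.ones_like(intensity)
    for i in range(n - 1):
        out[i] = intensity * sin_prod * np.cos(phis[i])
        sin_prod = sin_prod * np.sin(phis[i])
    out[n - 1] = intensity * sin_prod
    return out


def hcs_pansharpen(ms_up, pan):
    """Steps (a)-(d): replace the HCS intensity with the moment-matched PAN."""
    intensity, phis = hcs_forward(ms_up)
    i2 = intensity ** 2                                  # Eq. (7)
    p2 = np.asarray(pan, dtype=np.float64) ** 2          # Eq. (8)
    mu_p, sigma_p = p2.mean(), p2.std()                  # step (b)
    mu_i, sigma_i = i2.mean(), i2.std()
    p2_adj = sigma_i / sigma_p * (p2 - mu_p) + mu_i      # Eq. (9)
    i_adj = np.sqrt(np.clip(p2_adj, 0.0, None))          # step (d); clip guards negatives
    return hcs_inverse(i_adj, phis)


# Usage on synthetic data: 8 bands, 16 x 16 pixels.
rng = np.random.default_rng(0)
ms = rng.uniform(0.1, 1.0, size=(8, 16, 16))
pan = rng.uniform(0.1, 1.0, size=(16, 16))
fused = hcs_pansharpen(ms, pan)
```

Because HCS replaces only the intensity and keeps every angle φ_i, all bands of a pixel are rescaled by the same factor and band ratios are unchanged; this is consistent with the HCS and EXP rows having identical CNDVI and CNDWI values in the information-index table.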

2.1.4. ATWT

- (1)

- Use the à trous wavelet transform to decompose the PAN image into n wavelet planes; n is usually 2 or 3.

- (2)

- Add the wavelet planes (i.e., the spatial details) of the decomposed PAN image to each spectral band of the MS image to produce the fused MS bands.
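Steps (1) and (2) can be sketched as follows. This is an illustrative additive scheme, assuming the cubic B3-spline kernel [1, 4, 6, 4, 1]/16 commonly paired with the à trous algorithm, mirror (reflect) borders, and MS bands already up-sampled to the PAN grid; variants that first histogram-match the PAN to each MS band are not shown. The function names `atrous_planes` and `atwt_fuse` are hypothetical.

```python
import numpy as np

B3 = np.array([1.0, 4.0, 6.0, 4.0, 1.0]) / 16.0  # assumed B3-spline scaling kernel


def _smooth_1d(img, axis, step):
    """Convolve along one axis with the B3 kernel dilated by `step` (the 'holes')."""
    pad = 2 * step
    widths = [(0, 0)] * img.ndim
    widths[axis] = (pad, pad)
    padded = np.pad(img, widths, mode="reflect")
    out = np.zeros(img.shape, dtype=np.float64)
    length = img.shape[axis]
    for k, w in enumerate(B3):
        sl = [slice(None)] * img.ndim
        # taps sit at -2*step, -step, 0, +step, +2*step around each pixel
        sl[axis] = slice(k * step, k * step + length)
        out += w * padded[tuple(sl)]
    return out


def atrous_planes(pan, levels=2):
    """Step (1): decompose PAN into `levels` wavelet planes plus a smooth residual."""
    c_prev = np.asarray(pan, dtype=np.float64)
    planes = []
    for j in range(levels):
        step = 2 ** j
        c_next = _smooth_1d(_smooth_1d(c_prev, 0, step), 1, step)
        planes.append(c_prev - c_next)  # w_{j+1} = c_j - c_{j+1}
        c_prev = c_next
    return planes, c_prev


def atwt_fuse(ms_up, pan, levels=2):
    """Step (2): add the summed PAN wavelet planes to every up-sampled MS band."""
    planes, _ = atrous_planes(pan, levels)
    detail = np.sum(planes, axis=0)
    return np.asarray(ms_up, dtype=np.float64) + detail[None, :, :]


rng = np.random.default_rng(1)
pan = rng.uniform(0.0, 1.0, size=(32, 32))
ms_up = rng.uniform(0.0, 1.0, size=(8, 32, 32))
fused = atwt_fuse(ms_up, pan, levels=2)
```

Since the planes telescope, their sum plus the residual reproduces the PAN image exactly, so the injected detail is the PAN minus its low-pass approximation at level n.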

2.1.5. GLP

2.1.6. NSCT

- (a)

- Each original MS band is decomposed using a 1-level NSCT to obtain one coarse level and one fine level;

- (b)

- The PAN band is decomposed using a 3-level NSCT into one coarse level and three fine levels (fine levels 1, 2, and 3).

- (c)

- The coarse-level and fine-level coefficients of each MS band are up-sampled to the scale of the PAN band using bilinear interpolation.

- (d)

- The coarse level of the ith fused MS band is the up-sampled coarse level of the ith MS band, whereas fine levels 2 and 3 of the ith fused MS band are taken from fine levels 2 and 3 of the PAN band.

- (e)

- The fused fine level 1 is obtained by fusing the coefficients of the same level obtained from both the ith MS band and the PAN band. For each pixel (x, y), the coefficient of the fused fine level 1 is determined according to Equation (18), where LE_MSi(x, y) and LE_PAN(x, y) are the local energies of pixel (x, y) for the ith MS band and the PAN band, respectively, calculated within a (2M + 1) × (2P + 1) window using Equation (19). The inverse NSCT is then applied to the fused coefficients to produce the ith fused MS band. This improved version was demonstrated to provide pansharpened images with good spectral quality.
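The local-energy comparison in step (e) can be sketched in NumPy. Assumptions: Equation (19) is taken to be the unweighted sum of squared coefficients in the (2M + 1) × (2P + 1) window, with zero padding at the borders; and because Equation (18) is not reproduced here, the hypothetical `fuse_fine_level1` uses a choose-max rule (keep the coefficient whose local energy is larger) as a stand-in, which may differ from the rule actually used. The NSCT decomposition itself is not implemented; `d_ms` and `d_pan` stand for already-computed fine-level-1 coefficients.

```python
import numpy as np


def local_energy(coeffs, m=1, p=1):
    """Sum of squared coefficients in a (2M+1) x (2P+1) window (zero-padded borders)."""
    sq = np.asarray(coeffs, dtype=np.float64) ** 2
    padded = np.pad(sq, ((m, m), (p, p)), mode="constant")
    h, w = sq.shape
    energy = np.zeros((h, w))
    for dx in range(2 * m + 1):
        for dy in range(2 * p + 1):
            energy += padded[dx:dx + h, dy:dy + w]
    return energy


def fuse_fine_level1(d_ms, d_pan, m=1, p=1):
    """Hypothetical choose-max rule standing in for Eq. (18)."""
    le_ms = local_energy(d_ms, m, p)
    le_pan = local_energy(d_pan, m, p)
    return np.where(le_ms >= le_pan, d_ms, d_pan)


# Toy coefficients: one strong MS coefficient in a corner, uniform PAN detail.
d_ms = np.array([[1.0, 0.0, 0.0],
                 [0.0, 0.0, 0.0],
                 [0.0, 0.0, 0.0]])
d_pan = np.full((3, 3), 0.5)
fused = fuse_fine_level1(d_ms, d_pan)
```

With M = P = 1, the MS coefficient wins only at the corner where its energy is not exceeded by the PAN neighbourhood; elsewhere the PAN detail is kept.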

2.2. Quality Indexes

2.2.1. ERGAS

2.2.2. SAM

2.2.3. Q2n

2.2.4. SCC

2.3. Information Indexes

2.3.1. NDVI

2.3.2. NDWI

2.3.3. MBI

- (a)

- A brightness image b is generated by setting the value of each pixel p to the maximum digital number of the visible bands. Only the visible channels are considered because they contribute most significantly to the spectral properties of buildings.

- (b)

- The directional white top-hat (WTH) by reconstruction is employed to highlight bright structures whose size is equal to or smaller than that of the structuring element (SE), while suppressing other dark structures in the image. WTH with a linear SE is defined as Equation (34):

$$\mathrm{WTH}(d, s) = b - \gamma_{re}^{b}(d, s) \quad (34)$$

in which γ_re^b(d, s) is an opening-by-reconstruction operator using a linear SE with a size of s and a direction of d.

- (c)

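- The differential morphological profiles (DMP) of the white top-hat transforms are employed to model building structures in a multi-scale manner:

$$\mathrm{DMP}_{\mathrm{WTH}}(d, s) = \left|\mathrm{WTH}(d, s + \Delta s) - \mathrm{WTH}(d, s)\right| \quad (35)$$

where Δs is the interval between consecutive SE sizes.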

- (d)

- Finally, MBI is defined based on the DMPs using Equation (36):

$$\mathrm{MBI} = \frac{\sum_{d,s} \mathrm{DMP}_{\mathrm{WTH}}(d, s)}{N_D \times N_S} \quad (36)$$

where N_D and N_S are the numbers of directions and scales of the DMPs, respectively. The definition of MBI is based on the fact that building structures have high local contrast and hence have larger feature values in most directions of the profiles. Accordingly, buildings have large MBI values.
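Steps (a) to (d) can be sketched in plain NumPy. All of the following are hypothetical choices for illustration: the band order C, B, G, Y, R, RE, NIR1, NIR2 (so the first five bands are the visible ones); four directions (0°, 45°, 90°, 135°); odd SE lengths of 3, 5, 7, and 9 pixels (Δs = 2); edge-replicated borders; and reconstruction by dilation with a 3 × 3 neighbourhood. It is a slow, readable sketch rather than an efficient implementation.

```python
import numpy as np

VISIBLE = slice(0, 5)                            # hypothetical: C, B, G, Y, R
DIRECTIONS = [(0, 1), (-1, 1), (1, 0), (1, 1)]   # 0, 45, 90, 135 degrees as (row, col) steps


def _shift_stack(img, offsets, pad):
    """Stack copies of img shifted by each (dr, dc); borders are edge-replicated."""
    padded = np.pad(img, pad, mode="edge")
    h, w = img.shape
    return np.stack([padded[pad + dr:pad + dr + h, pad + dc:pad + dc + w]
                     for dr, dc in offsets])


def erode_line(img, direction, length):
    """Grey erosion with a linear SE of odd `length` along `direction`."""
    half = length // 2
    dr, dc = direction
    offsets = [(t * dr, t * dc) for t in range(-half, half + 1)]
    return _shift_stack(img, offsets, half).min(axis=0)


def reconstruct_by_dilation(marker, mask):
    """Geodesic reconstruction: dilate marker (3 x 3) under mask until stable."""
    nbrs = [(dr, dc) for dr in (-1, 0, 1) for dc in (-1, 0, 1)]
    current = np.minimum(marker, mask)
    while True:
        grown = np.minimum(_shift_stack(current, nbrs, 1).max(axis=0), mask)
        if np.array_equal(grown, current):
            return current
        current = grown


def wth(b, direction, length):
    """Eq. (34): b minus its opening by reconstruction with a linear SE."""
    return b - reconstruct_by_dilation(erode_line(b, direction, length), b)


def mbi(ms, lengths=(3, 5, 7, 9)):
    b = np.asarray(ms, dtype=np.float64)[VISIBLE].max(axis=0)   # step (a)
    dmps = []
    for d in DIRECTIONS:
        profile = [wth(b, d, s) for s in lengths]                # step (b)
        dmps += [np.abs(profile[k + 1] - profile[k])             # step (c), Eq. (35)
                 for k in range(len(lengths) - 1)]
    return np.mean(dmps, axis=0)                                 # Eq. (36)


ms = np.zeros((8, 32, 32))
ms[:, 13:18, 13:18] = 1.0   # a bright 5 x 5 "building" on a dark background
building_index = mbi(ms)
```

For this synthetic square, the white top-hat is zero for SE lengths 3 and 5 and equals b from length 7 onward in every direction, so each direction contributes exactly one non-zero DMP: the square receives an MBI of 1/3 (four non-zero maps out of twelve) and the background stays at 0.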

3. Experimental Results

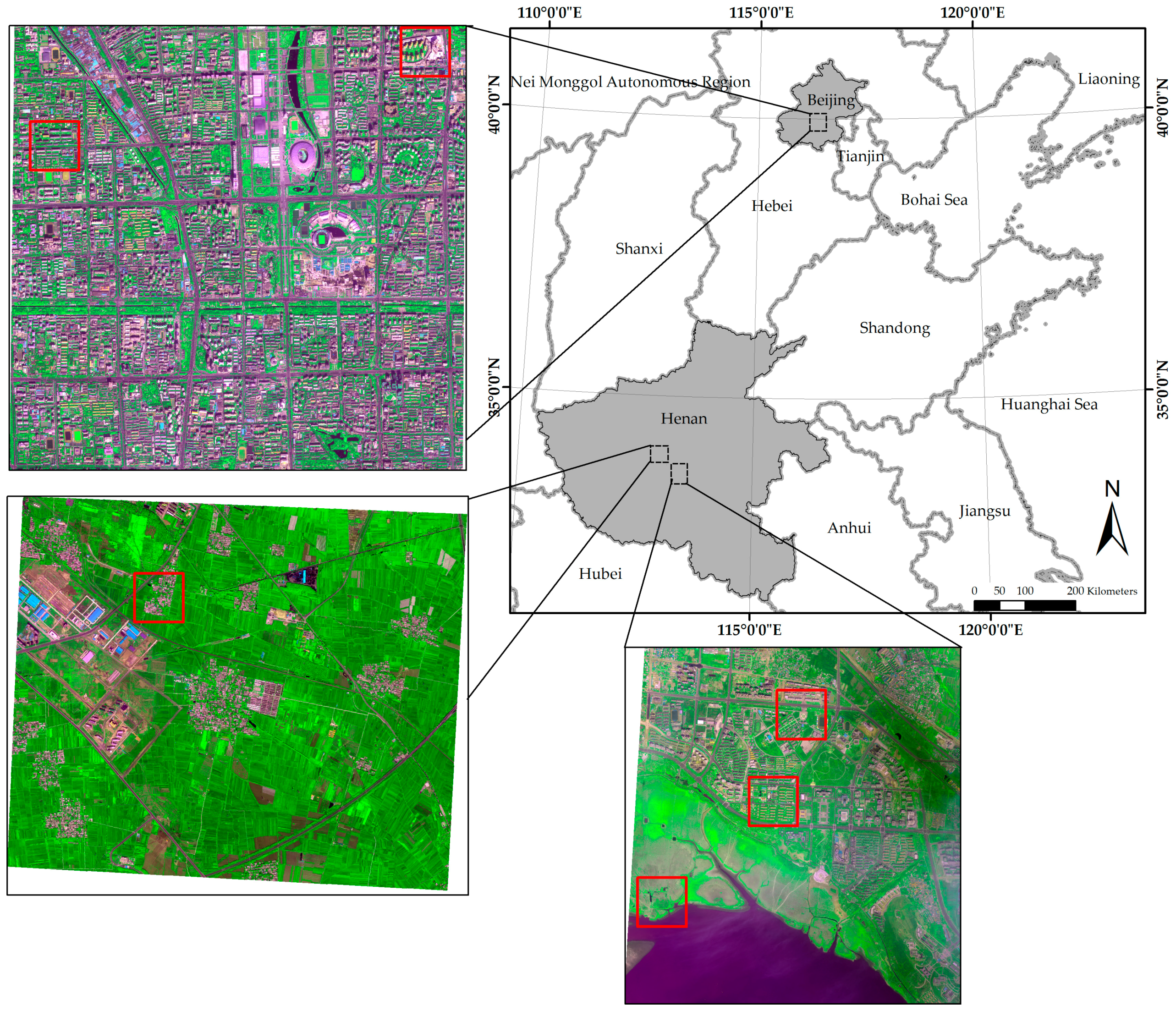

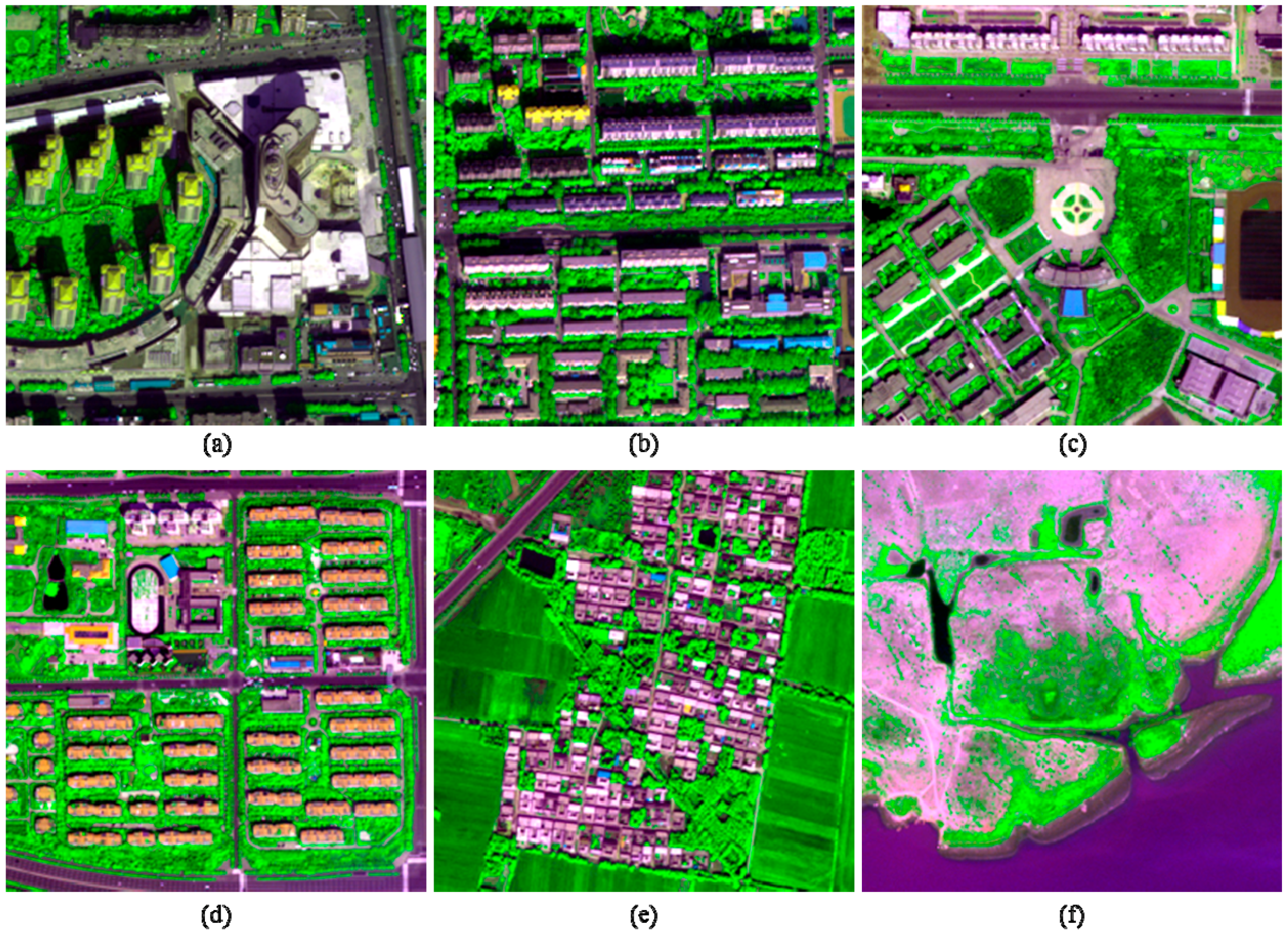

3.1. Datasets

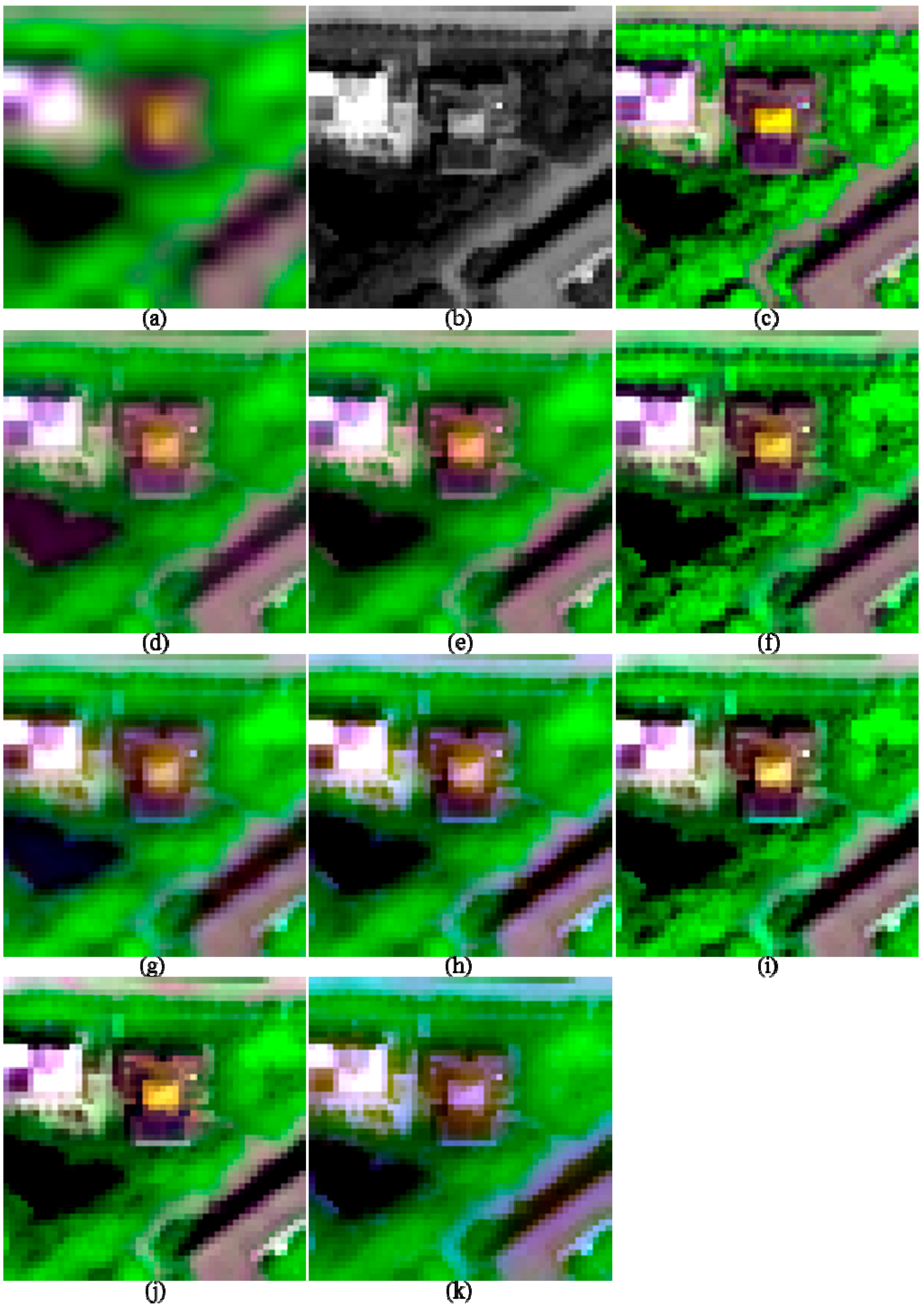

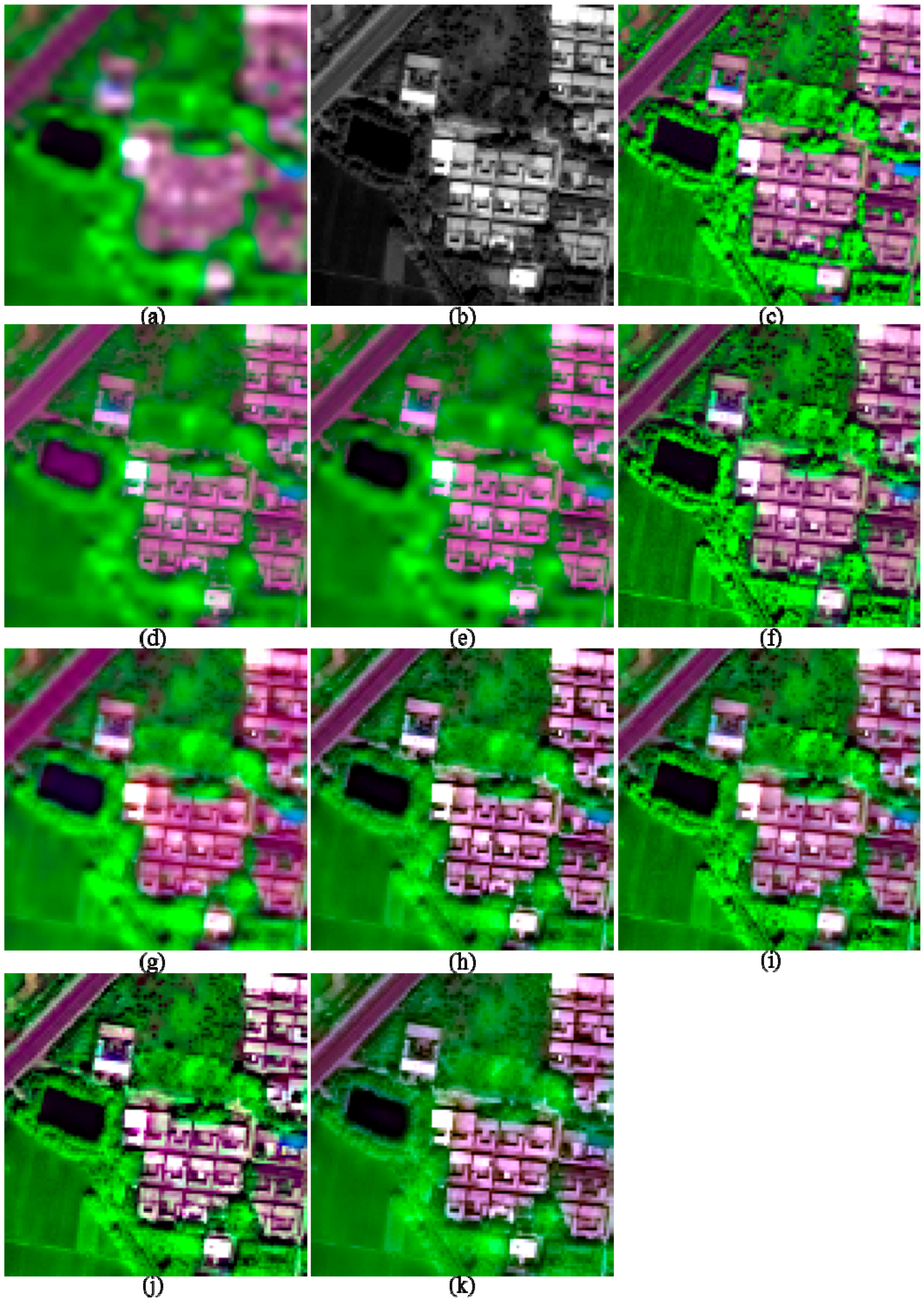

3.2. Fusing Using the Selected Algorithms

3.3. Quality Indexes

3.3.1. Assessment for the Two Urban Images

3.3.2. Assessment for the Two Suburban Images

3.3.3. Assessment for the Two Rural Images

3.4. Information Preservation

3.4.1. CMBI

3.4.2. CNDVI and CNDWI

4. Discussion

4.1. General Performances of the Selected Pansharpening Methods

4.2. Effects for Different Spectral Ranges between the PAN and MS Bands

4.3. How to Extend the Selected Pansharpening Methods to Other HSR Satellite Images

5. Conclusions

- (1)

- Generally, the HR, GSA, GLP_ESDM, and GLP_ECBD methods perform better than the other methods, whereas the NSCT and HCS methods perform worst for most of the test images, in terms of both quality indexes and visual inspection. Some of the fusion products generated by the GS and ATWT methods show significant spectral distortions. In addition, the ranking of the eight methods in terms of CMBI is consistent with their rankings in terms of Q8 and SCC. Consequently, the HR, GSA, GLP_ESDM, and GLP_ECBD methods are good choices if the fused WV-2 images will be used for image interpretation and applications related to urban buildings. These four methods can also be expected to perform well on other WV-2 scenes when the fused images are intended for image interpretation.

- (2)

- The ranking of the pansharpening methods in terms of CNDVI is consistent with that in terms of CNDWI. This is because both indexes measure the differences between the inter-band relationships of the fused image and those of the reference MS image, and both depend on the quality of the fused NIR1 band. The GLP_ESDM method yields higher CNDVI and CNDWI values for I1, I2, and I5, whereas the GLP_ECBD method yields higher CNDVI and CNDWI values for I3, I4, and I6, while also performing well in terms of quality indexes and visual inspection. Consequently, the GLP_ESDM and GLP_ECBD methods are preferable to the other methods if the fused WV-2 images will be used for applications related to vegetation and water bodies. In that case, however, it is better to select the best method by comparing CNDVI and CNDWI together with the quality indexes and visual inspection, since the GLP_ESDM and GLP_ECBD methods may perform differently on images with different land-cover types.

- (3)

- Based on the experimental results of this work and the analyses of the algorithms of the selected pansharpening methods, we offer two suggestions for fusing images acquired by sensors similar to WV-2, such as GeoEye-1 and WorldView-3/4. First, for the spectral bands that are relatively highly correlated with the PAN band, the synthesized PAN band should be obtained using the original PAN band, and the injection gains should take into account the relationship between each MS band and the PAN band. The HR, GSA, GLP_ESDM, and GLP_ECBD methods can also be expected to perform well on GeoEye-1 and WorldView-3/4 scenes when the fused images are intended for interpretation and applications related to urban buildings. Second, for the spectral bands with relatively low correlations with the PAN band, i.e., the NIR bands, the local dissimilarity between the MS and PAN bands should be considered during fusion, especially when the fused images will be used in applications related to vegetation and water bodies.
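The argument in item (2), that CNDVI and CNDWI both hinge on the fused NIR1 band, can be illustrated with a small synthetic check. Assumptions: the "C" in CNDVI and CNDWI is taken to be the Pearson correlation coefficient between index maps computed from the fused and the reference images; NDVI uses the red and NIR1 bands, and NDWI is taken in the McFeeters form using the green and NIR1 bands; the band order C, B, G, Y, R, RE, NIR1, NIR2 and the helper names are hypothetical.

```python
import numpy as np

GREEN, RED, NIR1 = 2, 4, 6   # hypothetical band indices (C, B, G, Y, R, RE, NIR1, NIR2)


def ndvi(img, eps=1e-12):
    return (img[NIR1] - img[RED]) / (img[NIR1] + img[RED] + eps)


def ndwi(img, eps=1e-12):
    # McFeeters-style NDWI with green and NIR1 (an assumed variant)
    return (img[GREEN] - img[NIR1]) / (img[GREEN] + img[NIR1] + eps)


def index_cc(fused, reference, index_fn):
    """Pearson correlation between index maps of the fused and reference images."""
    a = index_fn(fused).ravel()
    b = index_fn(reference).ravel()
    return float(np.corrcoef(a, b)[0, 1])


rng = np.random.default_rng(2)
reference = rng.uniform(0.05, 1.0, size=(8, 64, 64))

fused_bad_nir = reference.copy()
fused_bad_nir[NIR1] *= rng.uniform(0.7, 1.3, size=(64, 64))   # distort NIR1 only
fused_bad_blue = reference.copy()
fused_bad_blue[1] *= rng.uniform(0.7, 1.3, size=(64, 64))     # distort blue only

for name, fused in [("NIR1 distorted", fused_bad_nir), ("blue distorted", fused_bad_blue)]:
    print(name, index_cc(fused, reference, ndvi), index_cc(fused, reference, ndwi))
```

Distorting only NIR1 lowers both correlations together, whereas a distortion confined to a band used by neither index leaves both at 1, which mirrors why the two rankings move together.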

Acknowledgments

Author Contributions

Conflicts of Interest


| Image | Location | Type | Objects | Information Indices |
|---|---|---|---|---|
| I1 | Beijing | Urban | High buildings, squares, roads, vegetation, shadows | MBI, NDVI |
| I2 | Beijing | Urban | Moderate buildings, squares, roads, vegetation, shadows | MBI, NDVI |
| I3 | Pingdingshan | Suburban | Low buildings, squares, roads, vegetation, shadows, water bodies | MBI, NDVI, NDWI |
| I4 | Pingdingshan | Suburban | Low buildings, squares, roads, vegetation, shadows, water bodies | MBI, NDVI, NDWI |
| I5 | Pingdingshan | Rural | Buildings, roads, farms, water bodies | NDVI, NDWI |
| I6 | Pingdingshan | Rural | Vegetation, water bodies, bare soils | NDVI, NDWI |

| Image | Method | ERGAS | SAM | Q8 | SCC |
|---|---|---|---|---|---|
| I1 | GS | 1.64 | 2.19 | 0.957 | 0.911 |
| | GSA | 1.28 | 1.92 | 0.974 | 0.908 |
| | HR | 1.26 | 1.76 | 0.977 | 0.911 |
| | HCS | 1.95 | 2.58 | 0.897 | 0.881 |
| | ATWT | 2.09 | 2.72 | 0.879 | 0.863 |
| | GLP_ESDM | 1.56 | 2.05 | 0.956 | 0.898 |
| | GLP_ECBD | 1.93 | 2.36 | 0.956 | 0.888 |
| | NSCT_M2 | 2.22 | 3.66 | 0.849 | 0.861 |
| | EXP | 1.64 | 2.19 | 0.857 | 0.582 |
| I2 | GS | 2.70 | 3.79 | 0.909 | 0.844 |
| | GSA | 2.22 | 3.45 | 0.946 | 0.839 |
| | HR | 2.20 | 3.14 | 0.951 | 0.849 |
| | HCS | 2.87 | 3.99 | 0.850 | 0.809 |
| | ATWT | 2.76 | 4.02 | 0.861 | 0.799 |
| | GLP_ESDM | 2.51 | 3.47 | 0.916 | 0.824 |
| | GLP_ECBD | 3.14 | 4.26 | 0.905 | 0.785 |
| | NSCT_M2 | 3.04 | 5.03 | 0.831 | 0.795 |
| | EXP | 3.84 | 3.99 | 0.797 | 0.499 |

| Image | Method | ERGAS | SAM | Q8 | SCC |
|---|---|---|---|---|---|
| I3 | GS | 1.31 | 1.90 | 0.908 | 0.885 |
| | GSA | 1.03 | 1.76 | 0.942 | 0.883 |
| | HR | 1.38 | 2.23 | 0.927 | 0.849 |
| | HCS | 1.28 | 1.98 | 0.881 | 0.871 |
| | ATWT | 1.21 | 1.93 | 0.907 | 0.872 |
| | GLP_ESDM | 1.39 | 1.91 | 0.902 | 0.835 |
| | GLP_ECBD | 1.33 | 1.91 | 0.921 | 0.856 |
| | NSCT_M2 | 1.39 | 2.41 | 0.887 | 0.869 |
| | EXP | 1.62 | 1.98 | 0.837 | 0.796 |
| I4 | GS | 1.74 | 2.85 | 0.871 | 0.841 |
| | GSA | 1.43 | 2.74 | 0.919 | 0.831 |
| | HR | 1.84 | 3.25 | 0.893 | 0.779 |
| | HCS | 1.74 | 3.01 | 0.850 | 0.817 |
| | ATWT | 1.66 | 2.98 | 0.868 | 0.821 |
| | GLP_ESDM | 1.82 | 2.82 | 0.876 | 0.796 |
| | GLP_ECBD | 1.79 | 2.88 | 0.892 | 0.805 |
| | NSCT_M2 | 1.88 | 3.64 | 0.843 | 0.818 |
| | EXP | 2.21 | 3.01 | 0.759 | 0.669 |

| Image | Method | ERGAS | SAM | Q8 | SCC |
|---|---|---|---|---|---|
| I5 | GS | 1.66 | 2.41 | 0.822 | 0.838 |
| | GSA | 1.56 | 2.66 | 0.887 | 0.799 |
| | HR | 1.32 | 2.06 | 0.914 | 0.882 |
| | HCS | 1.61 | 2.23 | 0.837 | 0.861 |
| | ATWT | 1.59 | 2.37 | 0.853 | 0.857 |
| | GLP_ESDM | 1.49 | 1.99 | 0.875 | 0.874 |
| | GLP_ECBD | 1.90 | 2.45 | 0.874 | 0.845 |
| | NSCT_M2 | 1.71 | 2.83 | 0.829 | 0.855 |
| | EXP | 2.30 | 2.23 | 0.748 | 0.665 |
| I6 | GS | 1.88 | 2.95 | 0.762 | 0.756 |
| | GSA | 1.16 | 1.75 | 0.857 | 0.785 |
| | HR | 1.08 | 1.60 | 0.873 | 0.812 |
| | HCS | 1.05 | 1.51 | 0.735 | 0.848 |
| | ATWT | 0.97 | 1.49 | 0.858 | 0.868 |
| | GLP_ESDM | 1.12 | 1.52 | 0.857 | 0.809 |
| | GLP_ECBD | 0.99 | 1.44 | 0.868 | 0.854 |
| | NSCT_M2 | 1.10 | 1.77 | 0.820 | 0.865 |
| | EXP | 1.09 | 1.51 | 0.818 | 0.849 |

| Method | CMBI I1 | CMBI I2 | CMBI I3 | CMBI I4 | CNDVI I1 | CNDVI I2 | CNDVI I3 | CNDVI I4 | CNDVI I5 | CNDVI I6 | CNDWI I3 | CNDWI I4 | CNDWI I5 | CNDWI I6 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| GS | 0.973 | 0.972 | 0.969 | 0.934 | 0.922 | 0.877 | 0.929 | 0.891 | 0.912 | 0.946 | 0.915 | 0.858 | 0.876 | 0.950 |
| GSA | 0.975 | 0.979 | 0.981 | 0.959 | 0.915 | 0.855 | 0.924 | 0.879 | 0.884 | 0.966 | 0.908 | 0.853 | 0.830 | 0.977 |
| HR | 0.976 | 0.978 | 0.977 | 0.950 | 0.923 | 0.885 | 0.899 | 0.816 | 0.927 | 0.969 | 0.876 | 0.780 | 0.895 | 0.981 |
| HCS | 0.962 | 0.949 | 0.923 | 0.848 | 0.926 | 0.883 | 0.917 | 0.880 | 0.940 | 0.974 | 0.911 | 0.859 | 0.917 | 0.986 |
| ATWT | 0.965 | 0.956 | 0.934 | 0.882 | 0.911 | 0.849 | 0.928 | 0.890 | 0.928 | 0.975 | 0.912 | 0.851 | 0.915 | 0.986 |
| GLP_ESDM | 0.965 | 0.960 | 0.945 | 0.899 | 0.928 | 0.887 | 0.915 | 0.871 | 0.942 | 0.972 | 0.901 | 0.841 | 0.922 | 0.984 |
| GLP_ECBD | 0.964 | 0.957 | 0.961 | 0.926 | 0.892 | 0.811 | 0.925 | 0.876 | 0.906 | 0.977 | 0.909 | 0.851 | 0.860 | 0.988 |
| NSCT_M2 | 0.965 | 0.948 | 0.905 | 0.842 | 0.860 | 0.778 | 0.898 | 0.862 | 0.896 | 0.968 | 0.873 | 0.800 | 0.871 | 0.982 |
| EXP | 0.825 | 0.798 | 0.829 | 0.783 | 0.926 | 0.883 | 0.917 | 0.880 | 0.940 | 0.974 | 0.911 | 0.859 | 0.917 | 0.986 |

| Image | Method | CC (C) | CC (B) | CC (G) | CC (Y) | CC (R) | CC (RE) | CC (NIR1) | CC (NIR2) | CC (Avg) |
|---|---|---|---|---|---|---|---|---|---|---|
| I1 | GS | 0.985 | 0.985 | 0.988 | 0.992 | 0.990 | 0.990 | 0.978 | 0.978 | 0.986 |
| | GSA | 0.987 | 0.987 | 0.991 | 0.995 | 0.993 | 0.992 | 0.978 | 0.977 | 0.988 |
| | HR | 0.990 | 0.990 | 0.993 | 0.996 | 0.994 | 0.993 | 0.977 | 0.977 | 0.989 |
| | HCS | 0.819 | 0.945 | 0.982 | 0.989 | 0.987 | 0.984 | 0.970 | 0.969 | 0.956 |
| | ATWT | 0.849 | 0.931 | 0.985 | 0.991 | 0.986 | 0.987 | 0.974 | 0.971 | 0.959 |
| | GLP_ESDM | 0.959 | 0.979 | 0.986 | 0.990 | 0.990 | 0.988 | 0.975 | 0.973 | 0.980 |
| | GLP_ECBD | 0.982 | 0.982 | 0.986 | 0.989 | 0.986 | 0.985 | 0.967 | 0.959 | 0.980 |
| | NSCT_M2 | 0.805 | 0.906 | 0.985 | 0.993 | 0.987 | 0.988 | 0.971 | 0.965 | 0.950 |
| | EXP | 0.952 | 0.948 | 0.944 | 0.942 | 0.939 | 0.928 | 0.917 | 0.916 | 0.936 |
| I3 | GS | 0.958 | 0.957 | 0.962 | 0.967 | 0.962 | 0.952 | 0.899 | 0.903 | 0.945 |
| | GSA | 0.977 | 0.977 | 0.986 | 0.990 | 0.984 | 0.977 | 0.906 | 0.906 | 0.963 |
| | HR | 0.976 | 0.975 | 0.983 | 0.986 | 0.980 | 0.969 | 0.830 | 0.823 | 0.940 |
| | HCS | 0.836 | 0.946 | 0.977 | 0.979 | 0.969 | 0.968 | 0.892 | 0.893 | 0.933 |
| | ATWT | 0.918 | 0.932 | 0.980 | 0.982 | 0.975 | 0.971 | 0.900 | 0.898 | 0.945 |
| | GLP_ESDM | 0.919 | 0.964 | 0.978 | 0.985 | 0.980 | 0.966 | 0.842 | 0.844 | 0.935 |
| | GLP_ECBD | 0.962 | 0.963 | 0.975 | 0.982 | 0.975 | 0.965 | 0.876 | 0.861 | 0.945 |
| | NSCT_M2 | 0.886 | 0.906 | 0.980 | 0.978 | 0.969 | 0.968 | 0.872 | 0.863 | 0.928 |
| | EXP | 0.922 | 0.921 | 0.921 | 0.923 | 0.920 | 0.910 | 0.886 | 0.885 | 0.911 |
| I5 | GS | 0.958 | 0.951 | 0.945 | 0.947 | 0.953 | 0.908 | 0.868 | 0.875 | 0.926 |
| | GSA | 0.969 | 0.964 | 0.963 | 0.962 | 0.967 | 0.981 | 0.949 | 0.957 | 0.964 |
| | HR | 0.968 | 0.966 | 0.974 | 0.968 | 0.967 | 0.984 | 0.954 | 0.965 | 0.968 |
| | HCS | 0.739 | 0.892 | 0.954 | 0.966 | 0.967 | 0.984 | 0.962 | 0.973 | 0.930 |
| | ATWT | 0.901 | 0.924 | 0.976 | 0.967 | 0.965 | 0.987 | 0.966 | 0.975 | 0.958 |
| | GLP_ESDM | 0.913 | 0.962 | 0.975 | 0.970 | 0.970 | 0.984 | 0.949 | 0.963 | 0.961 |
| | GLP_ECBD | 0.942 | 0.935 | 0.959 | 0.967 | 0.968 | 0.985 | 0.964 | 0.974 | 0.962 |
| | NSCT_M2 | 0.858 | 0.888 | 0.970 | 0.960 | 0.957 | 0.981 | 0.957 | 0.968 | 0.943 |
| | EXP | 0.940 | 0.932 | 0.923 | 0.925 | 0.931 | 0.976 | 0.966 | 0.974 | 0.946 |
| I6 | GS | 0.957 | 0.959 | 0.967 | 0.967 | 0.966 | 0.948 | 0.802 | 0.800 | 0.921 |
| | GSA | 0.970 | 0.974 | 0.985 | 0.982 | 0.980 | 0.963 | 0.750 | 0.747 | 0.919 |
| | HR | 0.979 | 0.980 | 0.987 | 0.987 | 0.985 | 0.969 | 0.858 | 0.854 | 0.950 |
| | HCS | 0.879 | 0.966 | 0.978 | 0.971 | 0.964 | 0.936 | 0.848 | 0.846 | 0.924 |
| | ATWT | 0.896 | 0.924 | 0.980 | 0.979 | 0.971 | 0.950 | 0.858 | 0.862 | 0.928 |
| | GLP_ESDM | 0.905 | 0.962 | 0.976 | 0.978 | 0.979 | 0.955 | 0.869 | 0.848 | 0.934 |
| | GLP_ECBD | 0.956 | 0.960 | 0.972 | 0.971 | 0.967 | 0.945 | 0.790 | 0.829 | 0.924 |
| | NSCT_M2 | 0.873 | 0.905 | 0.982 | 0.978 | 0.968 | 0.953 | 0.801 | 0.829 | 0.911 |
| | EXP | 0.904 | 0.899 | 0.893 | 0.899 | 0.900 | 0.788 | 0.747 | 0.748 | 0.848 |

© 2017 by the authors; licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC-BY) license (http://creativecommons.org/licenses/by/4.0/).


Li, H.; Jing, L.; Tang, Y. Assessment of Pansharpening Methods Applied to WorldView-2 Imagery Fusion. Sensors 2017, 17, 89. https://doi.org/10.3390/s17010089
