1. Introduction

Object change detection in Very-High-Resolution (VHR) remotely sensed imagery has become an active topic in remote sensing image analysis. Object-oriented image analysis has become the primary way to address the “salt and pepper” problem [1] that commonly occurs in pixel-based image analysis. In object-based change detection (OBCD), VHR imagery is usually segmented into objects, and these image objects are treated as the basic processing units. The main difference between pixel-based and object-based change detection is that image objects carry more feature information. Multi-feature image analysis can therefore identify more change information in VHR remotely sensed imagery [2,3].

Feature selection chooses a subset of relevant features from the available features, which improves change detection performance compared with using single features. Existing feature selection algorithms fall broadly into two categories: filter approaches and wrapper approaches [4]. Wrappers generally achieve better results than filters because they include a learning algorithm as part of the evaluation function. A feature selection algorithm has two components: the search algorithm and the evaluation function [5]. Common feature selection methods include principal component analysis [6], Sequential Forward Selection (SFS) [7], Sequential Floating Forward Selection (SFFS) [8] and the Backtracking Search Optimization Algorithm (BSO) [9]. These algorithms have difficulty performing a global search in a high-dimensional feature space. In addition, their search process is separate from the evaluation process, so they easily become trapped in local optima. Evolutionary Computation (EC) algorithms were later applied to the feature selection problem. Examples include simulated annealing [10], Genetic Algorithms (GA) [11,12], the Differential Evolution algorithm (DE) [13] and swarm intelligence algorithms, in particular Cuckoo Search (CS) [14,15], Ant Colony Optimization (ACO) [16] and Particle Swarm Optimization (PSO) [17]. These algorithms are efficient, fast and inexpensive. However, they also contain random components, so some results are hard to reproduce experimentally.

PSO is a typical EC algorithm. It has strong search ability and is computationally less expensive than some other EC algorithms, so it has been used as an effective feature selection technique. Starting from random solutions, PSO searches the feature space for the optimum by iteratively updating particle velocities and positions. The search can be extended to the whole problem space without incurring additional cost. PSO is an adaptive probabilistic search algorithm: it needs only the objective function, not gradient information, to evaluate the fitness of solutions. Moreover, PSO is easy to implement, has few adjustable parameters and is inexpensive in both computation time and memory [18].

The genetic particle swarm optimization (GPSO) algorithm integrates PSO with GA to improve solution speed and global search ability. It has been widely used in optimal selection problems [18,19,20,21,22]. GPSO's advantages include fast convergence toward the optimal solution, simple parameter setup and a strong global search ability that helps avoid local optima. Its main drawbacks are that it can still become trapped in a local optimum in late iterations, and that parameter settings such as the swarm size affect the efficiency and cost of the algorithm [18].

Several studies have built on these ideas. Nazir proposed an efficient gender classification technique for real-world facial images that uses PSO and GA to select the most important feature set for representing gender [19]. Inbarani proposed a supervised feature selection method based on a hybridization of PSO and applied it to disease diagnosis [20]. Yang proposed a feature selection and classification method for hyperspectral images that combines PSO with SVM; the results indicate that this hybrid method achieves higher classification accuracy and effectively extracts the optimal bands [21]. Chuang et al. [22] applied the so-called catfish effect to PSO for feature selection. They claimed that the catfish particles help PSO avoid premature convergence and lead to better results than sequential GA, SFS, BSO and other methods.

GPSO has also been applied in remotely sensed imagery analysis, for example to image segmentation [23], image classification [24,25] and hyperspectral band selection [26]. Several PSO-based feature selection methods have been proposed in the literature [4,18,25,27,28]. However, GPSO-based feature selection has seldom been used for change detection in remotely sensed images.

Fitness functions are an important part of GPSO-based feature selection algorithms. During the search, they evaluate the selected feature subset by comparing the current solution against established evaluation criteria, and these comparisons drive the particle updates and the termination of the procedure. Fitness functions are commonly built on correlation, distance metrics or information gain. In wrapper feature selection methods, the classification error rate of a classifier has also been used to build the fitness function. Notably, the fitness function used for GPSO should be tied to the aim of the research.

Existing studies on OBCD for VHR remotely sensed imagery base feature selection primarily on expert knowledge or experimental data, both of which are inefficient and imprecise [29]. This paper proposes a GPSO feature selection algorithm for OBCD with the object-based hybrid multivariate alternative detection (OB-HMAD) model. We analyze the fitness convergence and OBCD accuracy of the algorithm using the ratio of mean to variance (RMV) as the fitness function. We also discuss how sensitive the algorithm's precision and efficiency are to the number of features to be selected and to the size of the particle swarm. Finally, we assess the reliability of RMV by comparing it with other fitness functions. The remainder of the paper is organized as follows.

Section 2 presents the theoretical foundations of the proposed GPSO-based feature selection approach with an RMV fitness function, and illustrates how the technique can be used for multi-feature OBCD. Section 3 reports the results obtained for two experimental cases. Section 4 discusses the sensitivity of the GPSO-based feature selection algorithm, and Section 5 outlines our conclusions.

2. Methodology

2.1. Genetic Particle Swarm Optimization

PSO uses an information-sharing mechanism that allows individuals to learn from each other, which promotes the development of the entire swarm. It has excellent global search ability even in high-dimensional solution spaces. In PSO, possible solutions are called particles, and each particle $i$ comprises three parts:

- $x_i$, the current position of the particle;
- $v_i$, the current velocity of the particle, which also denotes the recursive solution update;
- $pbest_i$, the personal best position of the particle, i.e., its best local solution.

The search starts from particles randomly distributed over the solution space.

Assume that $M$ features are to be selected. Let a particle $x_i$ (of size $M \times 1$) denote the selected feature indices, and let $v_i$ denote the update of those indices. The particle swarm is composed of $N$ particles, where $N$ is the swarm size. Every particle has a position vector $x_i$ that indicates its position and a velocity vector $v_i$ that indicates its flying direction and speed. As the particles fly through the solution space, each particle stores its best local solution $pbest_i$, and the swarm stores the best global solution $gbest_i$. The particles are initially distributed at random and are then updated according to $pbest_i$ and $gbest_i$. The algorithm searches for the optimal solution by updating the position and velocity of each particle according to the following equations:
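The update equations did not survive in this version of the text. Assuming they take the standard inertia-weight PSO form described by the symbols defined below, Equations (1) and (2) would read as follows. This is a reconstruction rather than the authors' typeset equations, and the order of the two equations is an assumption.

$$v_i(t+1) = \omega\, v_i(t) + c_1 r_1 \bigl(pbest_i - x_i(t)\bigr) + c_2 r_2 \bigl(gbest_i - x_i(t)\bigr) \tag{1}$$

$$x_i(t+1) = x_i(t) + v_i(t+1) \tag{2}$$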

where:

- $i = 1, 2, \ldots, N$, and $N$ is the total number of particles in the swarm;
- $r_1$ and $r_2$ are random numbers drawn uniformly from (0, 1);
- $c_1$ and $c_2$ are learning factors ($c_1$ weights the particle's own experience, and $c_2$ weights the experience of the swarm);
- $t$ is the iteration number;
- $\omega$ is the inertia weight, which controls the influence of the previous velocity $v_i$ and has been shown to improve convergence in various applications [30].

Because feature indices are discrete, the continuous PSO solutions must be rounded to integers to obtain a discrete form of the algorithm.
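A single discrete update step can be sketched as follows. The sketch assumes that Equations (1) and (2) have the standard form given above. Two further choices are our own assumptions and do not come from the source: the helper name `pso_step` is hypothetical, and the rounded indices are clipped to the valid range $[0, L-1]$. The sketch also does not prevent a particle from selecting the same feature index twice.

```python
import numpy as np


def pso_step(x, v, pbest, gbest, n_features, w=0.7, c1=2.0, c2=2.0, rng=None):
    """One inertia-weight PSO update on integer feature indices (hypothetical helper).

    x, v, pbest: arrays of shape (N, M); gbest: array of shape (M,).
    Parameter defaults are illustrative, not values from the paper.
    """
    rng = np.random.default_rng() if rng is None else rng
    r1 = rng.random(x.shape)  # uniform random factors, one per coordinate
    r2 = rng.random(x.shape)
    # Velocity update: inertia + pull toward personal best + pull toward global best.
    v_new = w * v + c1 * r1 * (pbest - x) + c2 * r2 * (gbest - x)
    # Position update, rounded to the nearest integer index and clipped
    # into the valid index range [0, n_features - 1] (clipping is an assumption).
    x_new = np.clip(np.rint(x + v_new), 0, n_features - 1).astype(int)
    return x_new, v_new
```

A particle that already sits at both its personal best and the global best, and has zero velocity, stays where it is. All other particles are pulled toward those bests.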

A crossover operator adapted from the GA is added to PSO to improve its global search capability and to avoid becoming trapped in local optima. After the position vector $x_i$ and the velocity vector $v_i$ of each particle have been updated using Equations (1) and (2), the algorithm performs a crossover between two particles, as follows:

Compared with PSO, this GPSO algorithm adds a crossover operation after the position and velocity updates. It then uses the generated offspring particles, rather than the parent particles, in the next iteration. The crossover operation lets the offspring particles inherit the advantages of their parents while maintaining population diversity. The crossover mechanism works as follows:

1. Particles are selected into the crossover pool with a crossover probability that is fixed beforehand and remains unchanged throughout the crossover process.
2. Pairs of particles in the pool are matched at random.
3. The crossover point is determined by the crossover weight $w_i$, which is computed from the particle's fitness value.
4. The offspring particles are generated by the crossover operation.
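The crossover equations (3)–(6) are not reproduced here, so the sketch below substitutes a common GA operator: a fitness-weighted arithmetic crossover. Several details are our own assumptions rather than the authors' method:

- the helper name `crossover` is hypothetical;
- the pool probability `pc` is illustrative;
- the weight of parent $a$ is taken as $w = f_a / (f_a + f_b)$, with fitness values assumed positive;
- offspring replace their parents.

```python
import numpy as np


def crossover(x, v, fitness, n_features, pc=0.6, rng=None):
    """Fitness-weighted arithmetic crossover on a swarm (illustrative sketch).

    Assumptions (not from the source): fitness values are positive, the weight
    of parent a is f_a / (f_a + f_b), and offspring replace their parents.
    """
    rng = np.random.default_rng() if rng is None else rng
    x, v = x.copy(), v.copy()
    # Each particle enters the crossover pool with the fixed probability pc.
    pool = np.flatnonzero(rng.random(len(x)) < pc)
    rng.shuffle(pool)
    # Pool members are paired at random; an odd particle out is left unchanged.
    for a, b in zip(pool[0::2], pool[1::2]):
        w = fitness[a] / (fitness[a] + fitness[b])
        xa, xb = x[a].copy(), x[b].copy()
        va, vb = v[a].copy(), v[b].copy()
        # Offspring positions are rounded blends of the parents' feature indices.
        x[a] = np.rint(w * xa + (1 - w) * xb)
        x[b] = np.rint((1 - w) * xa + w * xb)
        # Offspring velocities are blended with the same weights.
        v[a] = w * va + (1 - w) * vb
        v[b] = (1 - w) * va + w * vb
    return np.clip(x, 0, n_features - 1).astype(int), v
```

Because each offspring is a convex combination of its parents, the fitter parent contributes more to the first child. The total velocity of each pair is also preserved.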

2.2. Ratio of Mean to Variance Fitness Function

Given the purpose of change detection, we choose RMV as the fitness function for evaluating particles in the GPSO algorithm. RMV measures how useful the candidate features in the image-object feature dataset are.

In general, the mean and variance of a dataset carry important feature information, so comparing these statistics between samples of different classes indicates how well a feature separates those classes [31]. Separability of multi-class samples is measured by normalizing the mean of the feature dataset by its variance and comparing the result among the classes.

Assume that $A$ and $B$ are feature datasets belonging to different classes: $A$ is the dataset of changed samples with feature $f$, and $B$ is the dataset of unchanged samples with feature $f$. The importance of feature $f$ can then be expressed by Equations (7) and (8):

where:

- $S_f$ is the significance of feature $f$ and represents its potential to separate datasets $A$ and $B$;
- $mean_f(A)$ and $mean_f(B)$ are the means of datasets $A$ and $B$;
- $Var_f(A)$ and $Var_f(B)$ are the variances of $A$ and $B$;
- $n_A$ and $n_B$ are the numbers of samples in $A$ and $B$, respectively.
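Equations (7) and (8) are not shown. The quantities they use (the two class means, the two variances and the two sample sizes) fit a Welch-type separability statistic:

$$S_f = \frac{|mean_f(A) - mean_f(B)|}{\sqrt{Var_f(A)/n_A + Var_f(B)/n_B}}$$

The sketch below computes that statistic for every feature column at once. Both the exact formula and the use of the sample variance (`ddof=1`) are assumptions, and `feature_significance` is a hypothetical name.

```python
import numpy as np


def feature_significance(A, B):
    """Per-feature separability S_f between changed (A) and unchanged (B) samples.

    A: (n_A, L) array and B: (n_B, L) array, one column per object feature.
    Assumed form (hypothetical; the paper's Eqs. (7)-(8) are not reproduced):
        S_f = |mean_f(A) - mean_f(B)| / sqrt(Var_f(A)/n_A + Var_f(B)/n_B)
    """
    n_a, n_b = len(A), len(B)
    diff = np.abs(A.mean(axis=0) - B.mean(axis=0))
    # Sample variances (ddof=1) scaled by the class sizes.
    se = np.sqrt(A.var(axis=0, ddof=1) / n_a + B.var(axis=0, ddof=1) / n_b)
    return diff / se
```

Sorting features by this index in descending order, e.g. with `np.argsort(-S)`, gives the ranking used in the next step.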

The optimum features are selected from the feature dataset after the features have been sorted by the feature importance index $S_f$. Assume that $M$ features are to be selected. The importance matrix $S$ can then be constructed from the importance indices of the $M$ features for each class. The mean feature importance $S_{AVG}$ is calculated from $S$, which contains $M$ feature importance indices:
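The equation for $S_{AVG}$ is also missing here. Taking the sentence above literally, $S_{AVG}$ is the arithmetic mean of the $M$ importance indices in $S$ (an assumption about its exact form), which can be written as:

$$S_{AVG} = \frac{1}{M}\sum_{k=1}^{M} S_{f_k}$$

where $f_1, \ldots, f_M$ denote the selected features (notation introduced here for illustration).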

The objective function $J$ is given as follows:

Larger values of $S_{AVG}$ and $J$ indicate a stronger classification capability of the feature subset selected from the feature dataset, so the RMV fitness function is:

2.3. GPSO-Based Feature Selection for Object-Based Change Detection

When the proposed GPSO-based feature selection algorithm is used for multi-feature object-based change detection, the essential step is selecting features from the feature set. First, the features are extracted from the image objects and the feature set is built. Each feature is then assigned an index, and the GPSO-based feature selection algorithm selects among these feature indices.

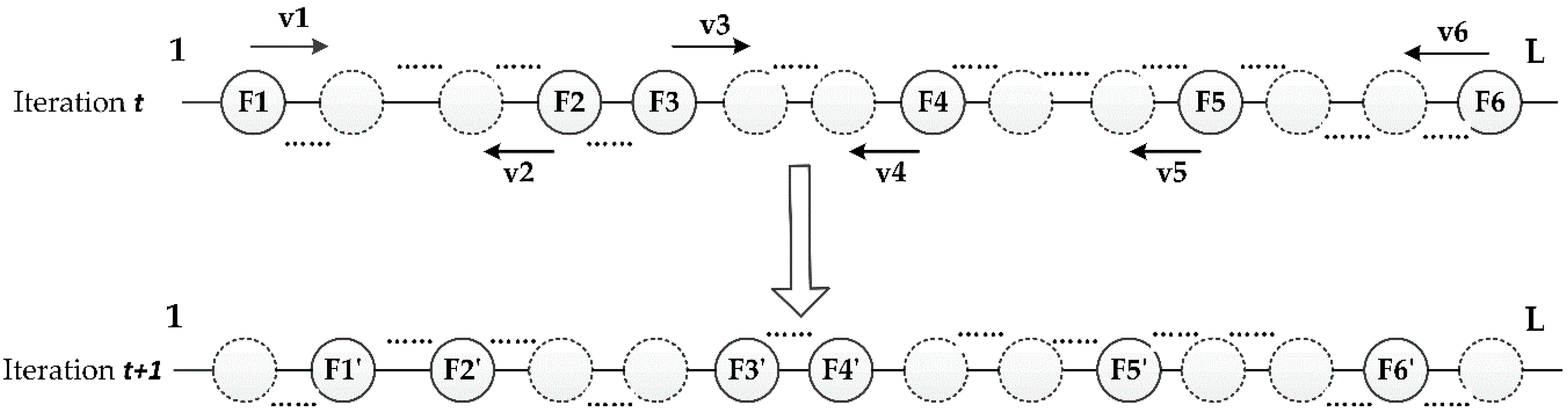

Figure 1 illustrates how the feature indices are updated during a GPSO iteration. It shows one particle before and after a single iteration step when six features are selected from an image-object feature set containing $L$ features.

- At the $t$-th iteration, the particle selects six features, $x(t) = (F_1, F_2, \ldots, F_6)^T$, and GPSO determines the update $v(t) = (v_1, v_2, \ldots, v_6)^T$.
- At the $(t + 1)$-th iteration, the selected feature indices become $x(t + 1) = (F_1', F_2', \ldots, F_6')^T$.

As Figure 1 shows, each particle $x_i$ denotes one feature combination and can be regarded as a potential solution to the feature selection problem. At the end of the iterations, the feature indices contained in the global best solution $gbest_i$ of the particle swarm form the optimal feature selection result.

The procedure of the proposed GPSO-based feature selection algorithm is as follows (Figure 2) [26].

Step 1: Normalize and set the parameters, including the particle swarm size $N$, the learning factors $c_1$ and $c_2$, the inertia weight $\omega$ and the maximum number of iterations $iter_{max}$;

Step 2: Assuming that $M$ features are to be selected from the feature set, randomly initialize $N$ particles $x_i$, each containing $M$ indices of the features to be selected;

Step 3: Evaluate the fitness of each particle using Equation (12), and determine $pbest_i$ and $gbest_i$;

Step 4: Update the position and velocity vectors of each particle using Equations (1)–(6);

Step 5: If the algorithm has converged or $iter_{max}$ has been reached, stop; otherwise, return to Step 3;

Step 6: The global optimum solution $gbest_i$ is the final solution and contains the selected feature subset.
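Steps 1–6 can be combined into one compact loop. This is a sketch under several assumptions, not the authors' implementation:

- `gpso_select` and `repair` are hypothetical names;
- the update uses the standard inertia-weight form, and the crossover is a fitness-weighted arithmetic crossover, since Equations (3)–(6) are not shown;
- duplicate indices within a particle are replaced by random unused ones;
- "convergence" in Step 5 is read as no improvement of $gbest_i$ for `patience` iterations;
- `w`, `c1`, `c2` and `pc` are illustrative values;
- the defaults `M=6` and `N=60` simply echo Figure 1 and the swarm size reported in the conclusions;
- the caller supplies the fitness function, for example a mean of per-feature scores, because $J$ and Equation (12) are not reproduced.

```python
import numpy as np


def repair(p, n_features, rng):
    """Replace duplicate indices with random unused ones (assumption, not in the source)."""
    p = p.copy()
    seen = set()
    for k in range(len(p)):
        if int(p[k]) in seen:
            p[k] = rng.choice(np.setdiff1d(np.arange(n_features), p))
        seen.add(int(p[k]))
    return p


def gpso_select(fitness, n_features, M=6, N=60, c1=2.0, c2=2.0, w=0.7,
                pc=0.6, iter_max=100, patience=20, seed=0):
    """Sketch of Steps 1-6 (maximization); returns (sorted gbest indices, gbest fitness)."""
    rng = np.random.default_rng(seed)
    # Step 2: N particles, each holding M distinct feature indices.
    x = np.array([rng.choice(n_features, size=M, replace=False) for _ in range(N)])
    v = np.zeros((N, M))
    # Step 3: evaluate fitness and initialize personal and global bests.
    f = np.array([fitness(p) for p in x])
    pbest, pbest_f = x.copy(), f.copy()
    g = int(np.argmax(f))
    gbest, gbest_f = x[g].copy(), f[g]
    stall = 0
    for _ in range(iter_max):
        # Step 4a: inertia-weight PSO update, rounded to integer indices.
        r1, r2 = rng.random((N, M)), rng.random((N, M))
        v = w * v + c1 * r1 * (pbest - x) + c2 * r2 * (gbest - x)
        x = np.clip(np.rint(x + v), 0, n_features - 1).astype(int)
        x = np.array([repair(p, n_features, rng) for p in x])
        f = np.array([fitness(p) for p in x])
        # Step 4b: pair pool members at random and blend parents by fitness weight.
        pool = np.flatnonzero(rng.random(N) < pc)
        rng.shuffle(pool)
        for a, b in zip(pool[0::2], pool[1::2]):
            wa = f[a] / (f[a] + f[b] + 1e-12)
            xa, xb, va, vb = x[a].copy(), x[b].copy(), v[a].copy(), v[b].copy()
            x[a] = repair(np.rint(wa * xa + (1 - wa) * xb).astype(int), n_features, rng)
            x[b] = repair(np.rint((1 - wa) * xa + wa * xb).astype(int), n_features, rng)
            v[a], v[b] = wa * va + (1 - wa) * vb, (1 - wa) * va + wa * vb
            f[a], f[b] = fitness(x[a]), fitness(x[b])
        # Step 3 (repeated): update personal and global bests.
        better = f > pbest_f
        pbest[better], pbest_f[better] = x[better], f[better]
        g = int(np.argmax(pbest_f))
        if pbest_f[g] > gbest_f:
            gbest, gbest_f, stall = pbest[g].copy(), pbest_f[g], 0
        else:
            stall += 1
        # Step 5: stop once gbest has not improved for `patience` iterations.
        if stall >= patience:
            break
    # Step 6: the global best particle holds the selected feature subset.
    return np.sort(gbest), gbest_f
```

For instance, given a hypothetical vector `S` of per-feature significance scores, `gpso_select(lambda p: float(S[p].mean()), n_features=len(S))` searches for six features with a high mean score.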

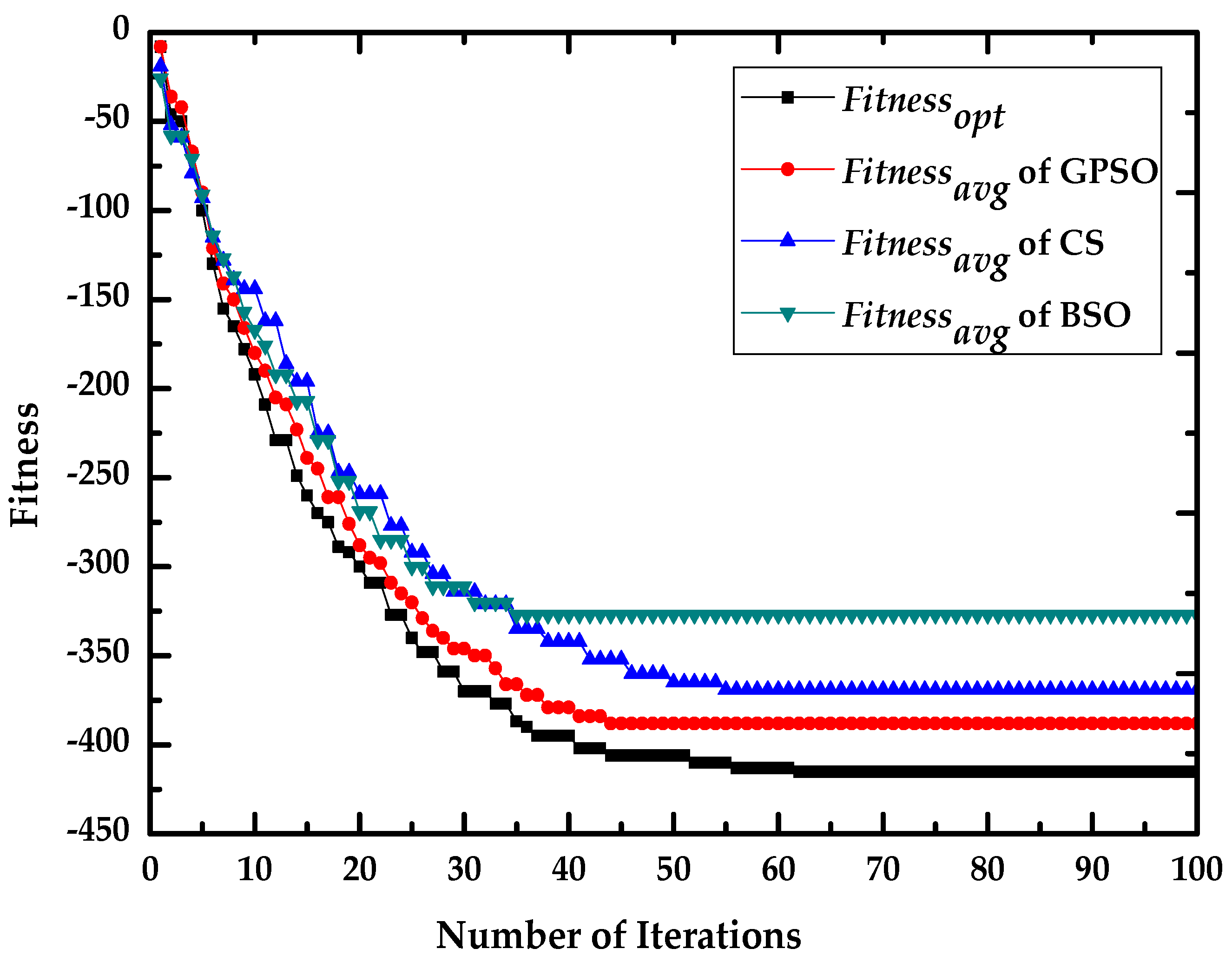

To validate the fitness convergence and OBCD accuracy of the proposed GPSO-based feature selection algorithm, we compare it with the Backtracking Search Optimization algorithm (BSO) and the Cuckoo Search (CS) algorithm.

BSO is also a bionic algorithm: a population-based iterative evolutionary algorithm designed as a global minimizer. Like GPSO, BSO uses a crossover strategy to improve its global search ability. Unlike GPSO, BSO has a boundary control mechanism that is effective in maintaining population diversity and ensuring efficient searches even in advanced generations [9], which may give it an advantage over GPSO.

CS, like GPSO, is a swarm intelligence algorithm in which the optimal solution is selected by comparing fitness values. Unlike GPSO, CS updates locations and search paths with a random-walk mechanism. This gives it better global search ability than GPSO and keeps a good balance between local search and exploration of the entire search space. Nevertheless, GPSO improves its own global search ability by introducing the crossover operator into the iteration procedure.

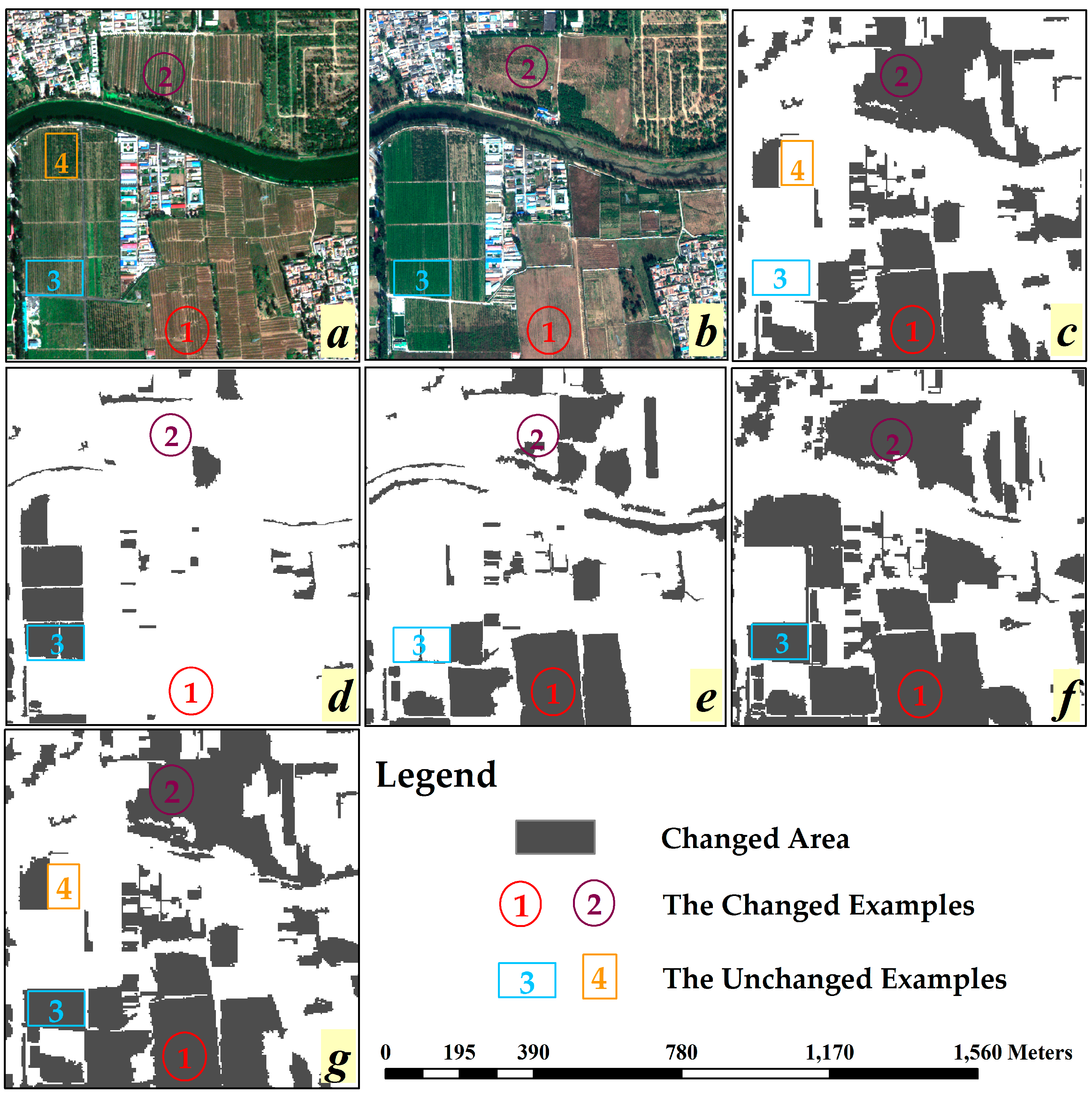

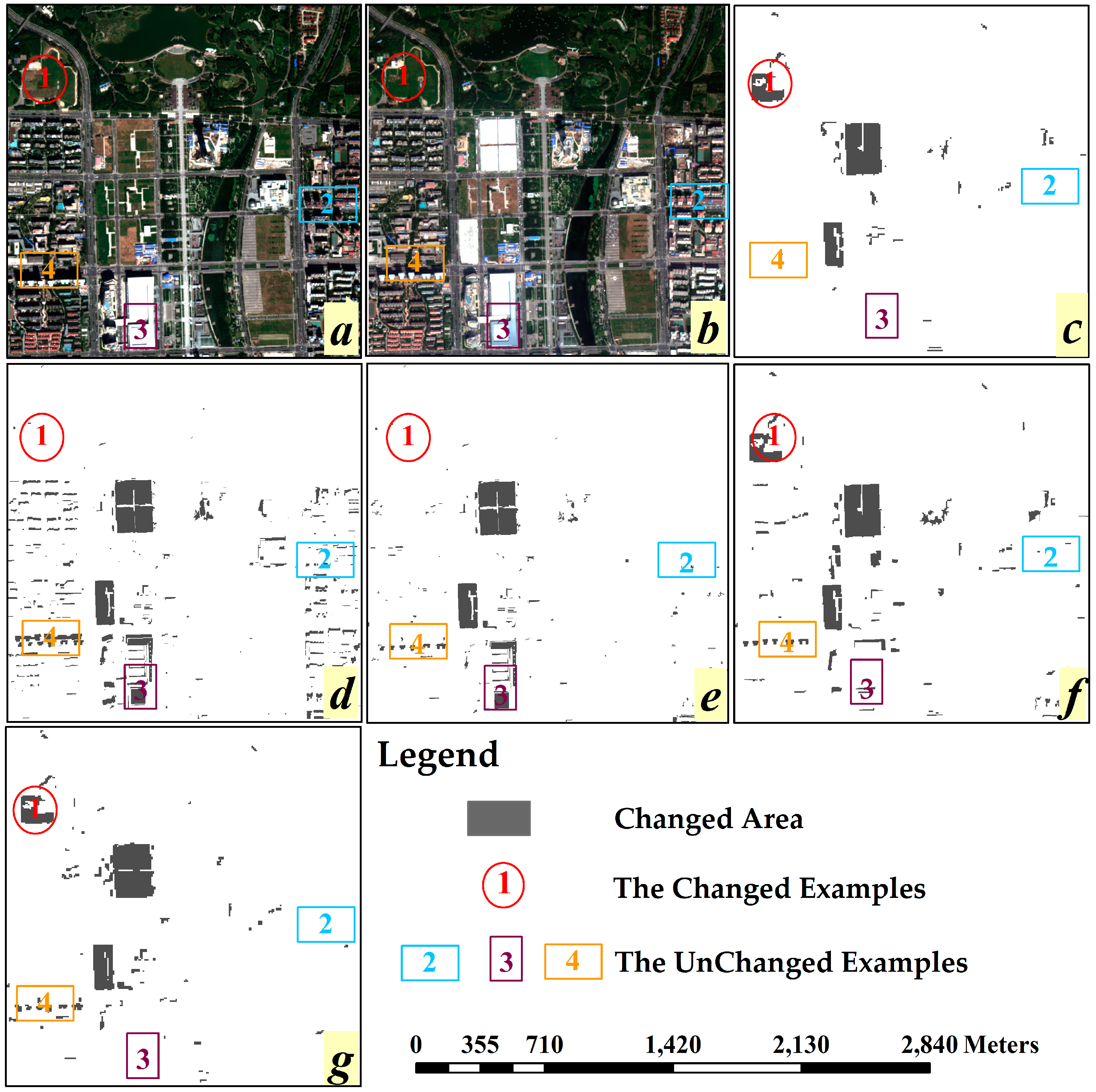

5. Conclusions

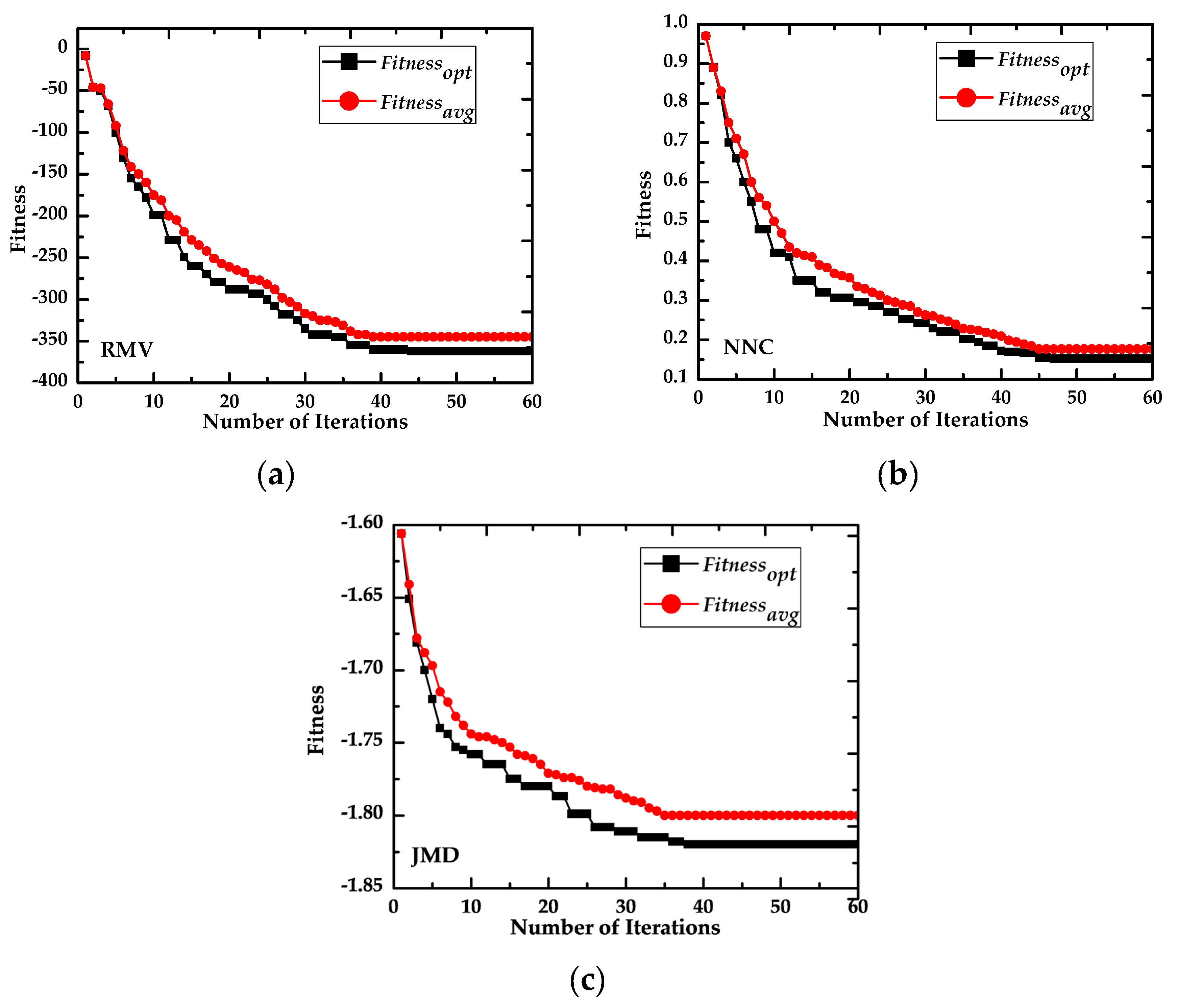

This study applied GPSO to select the optimum image object features for OBCD of VHR remotely sensed images, using RMV as the fitness function. We analyzed the fitness convergence and OBCD accuracy of the GPSO-based feature selection algorithm. We also discussed how the number of features to be selected and the size of the particle swarm influence the precision and efficiency of the algorithm. Finally, we analyzed the suitability of the RMV fitness function by comparing it with two other fitness functions, JMD and NNC.

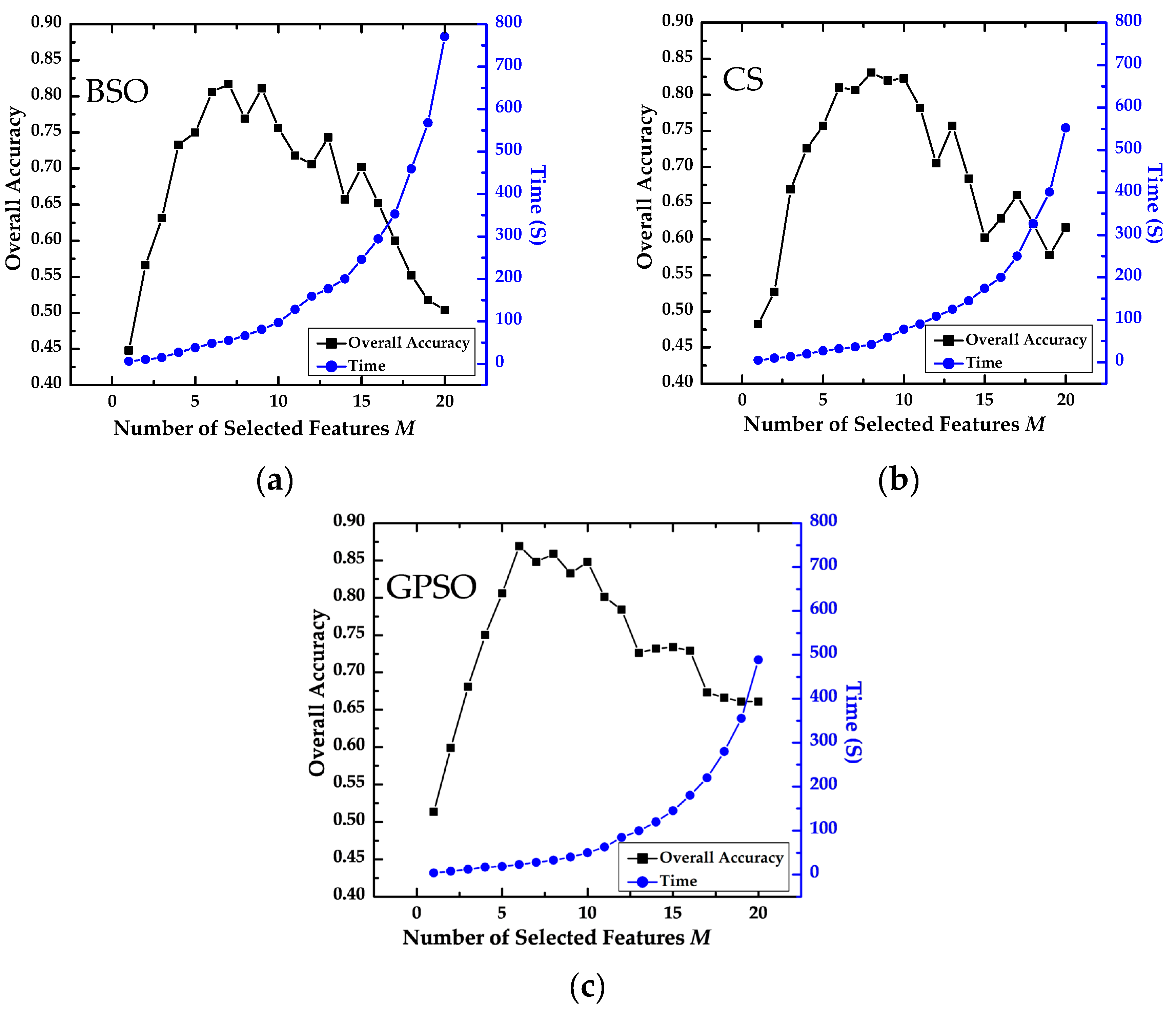

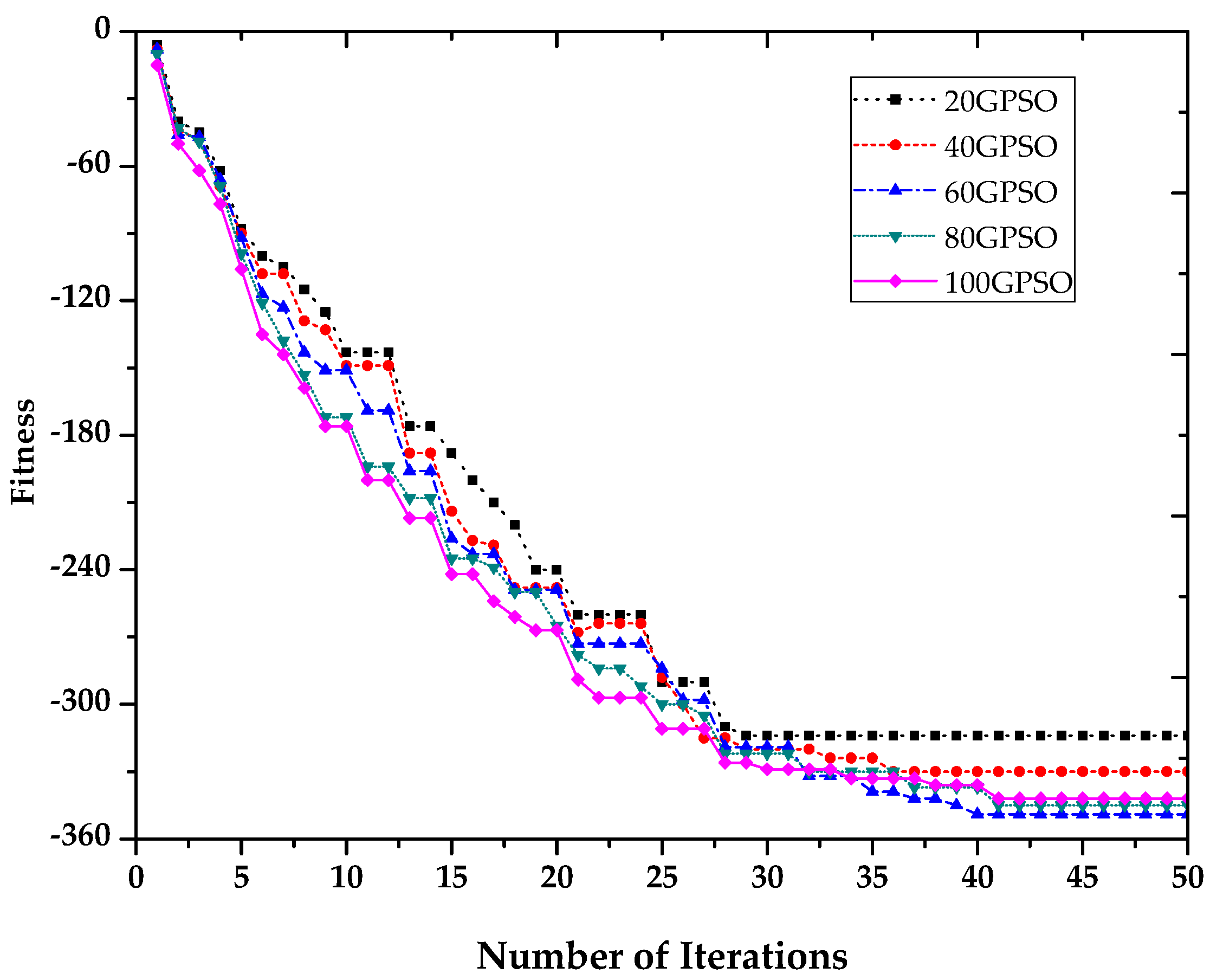

GPSO has strong global search ability, high efficiency and stability, and it can effectively avoid premature convergence. The experiments show that the GPSO-based feature selection algorithm outperforms the compared algorithms in OBCD of VHR remotely sensed images.

The sensitivity analysis gave the following results:

- When fewer than 10 features are selected, increasing the number of features to be selected increases both the precision and the computational cost of the algorithm.
- The algorithm is both precise and fast when six to eight features are selected.
- GPSO is less affected by the number of features to be selected than the two algorithms it was compared with.
- The size of the particle swarm affects the convergence speed of the algorithms, and the optimum number of initial particles was determined to be 60.

As the discriminatory criterion of the GPSO-based feature selection algorithm, the RMV fitness function was analyzed and compared with the JMD and NNC functions. The experiments show that the three fitness functions converge at similar speeds, and that their final fitness values are all close to the optimum. Overall, RMV is more suitable than the other two as the fitness function of the GPSO-based feature selection algorithm, given the resulting convergence speed and precision.

The OBCD experiment based on OB-HMAD also showed that multi-feature change detection is more precise than single-feature change detection. Multi-feature change detection can also identify areas where texture or shape has changed, which single-feature change detection cannot. However, the experiment also exposed problems caused by mutual interference between features. This means that the GPSO-based feature selection algorithm still requires manual visual interpretation to assist OBCD.