1. Introduction

In recent years, many researchers have addressed the issue of making Unmanned Aerial Vehicles (UAVs) more autonomous. In this context, the state estimation of the six degrees of freedom (6-DoF) of a vehicle (i.e., its attitude and position) is a fundamental necessity for any application involving autonomy.

Outdoors, this problem is seemingly solved with on-board Global Positioning System (GPS) receivers and Inertial Measurement Units (IMU), or their integrated version, Inertial Navigation Systems (INS). However, unknown, cluttered, and GPS-denied environments still pose a considerable challenge. While attitude estimation is well handled by available systems [1], GPS-based position estimation has some drawbacks. Specifically, GPS is not a reliable service: its availability can be limited in urban canyons, and it is completely unavailable in indoor environments.

Moreover, even when the GPS signal is available, the problem of position estimation cannot be considered solved in some scenarios. For instance, aerial inspection of industrial plants is an application that requires performing precision manoeuvres in a complex environment. In this case, owing to several sources of error, the position obtained with a GPS can vary by several meters in just a few seconds for a static location [2]. In such a scenario, the use of GPS readings, smoothed or not, as the feedback signal of a control system can be unreliable because the control system cannot distinguish between sensor noise and actual small movements of the vehicle. Therefore, some additional sensory information (e.g., visual information) should be integrated into the system in order to improve accuracy.

The aforementioned issues have motivated recent works to move towards the use of cameras to perform visual-based navigation in periods or circumstances when the position sensor is not available, when it is only partially available, or when a local navigation application requires high precision. Cameras are well suited to embedded systems because they are cheap, lightweight, and power-efficient. For this reason, a combination of vision and inertial measurements is often chosen as a means to estimate the vehicle attitude and position. This combination can be performed with different approaches, as in [3], where the vision measurement is provided by an external trajectometry system that directly yields the position and orientation of the robot. In [4], an external CCD camera provides the measurements. Other on-board techniques were proposed in [5,6], where an embedded camera uses different markers to provide a good estimate of position and orientation as well. This estimate was obtained using the specific geometry of the markers and assuming that their positions were known. The same idea was exploited in [7], implemented with the low-cost Wii remote visual sensor. Finally, visual sensing is sometimes provided by optical flow sensors to estimate the attitude, position, and velocity, as in [8]. In these different approaches, position estimation is obtained by computer vision, and the attitude is obtained either by vision (see [3,6]) or by IMU sensors. In [9], even a single angular measurement could significantly improve attitude and position estimation.

Another family of approaches (for instance [10,11]) relies on visual SLAM (Simultaneous Localization and Mapping) methods. In this case, the mobile robot operates in an a priori unknown environment using only on-board sensors to simultaneously build a map of its surroundings and locate itself inside this map.

Robot sensors have a large impact on the algorithm used in SLAM. Early SLAM approaches focused on the use of range sensors, such as sonar rings and lasers; see [12,13,14,15]. Nevertheless, using range sensors in SLAM has some disadvantages: correspondence or data association becomes difficult, the sensors are expensive and have a limited working range, and some of them are limited to 2D maps. Moreover, small unmanned aerial vehicles face limitations in platform design, mobility, and payload capacity that impose considerable restrictions on the sensors they can carry. Once again, cameras appear as a good option for SLAM systems applied to UAVs.

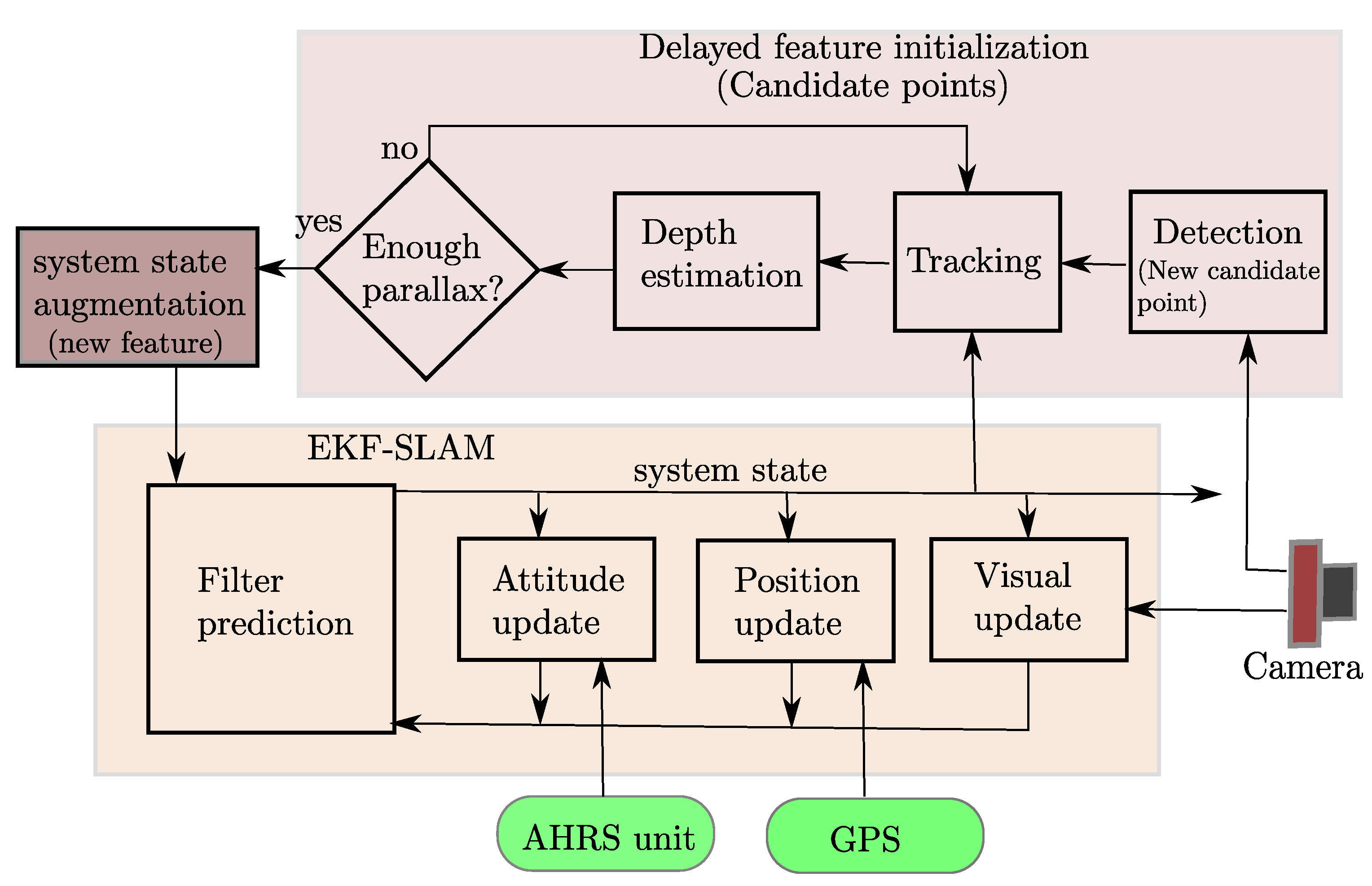

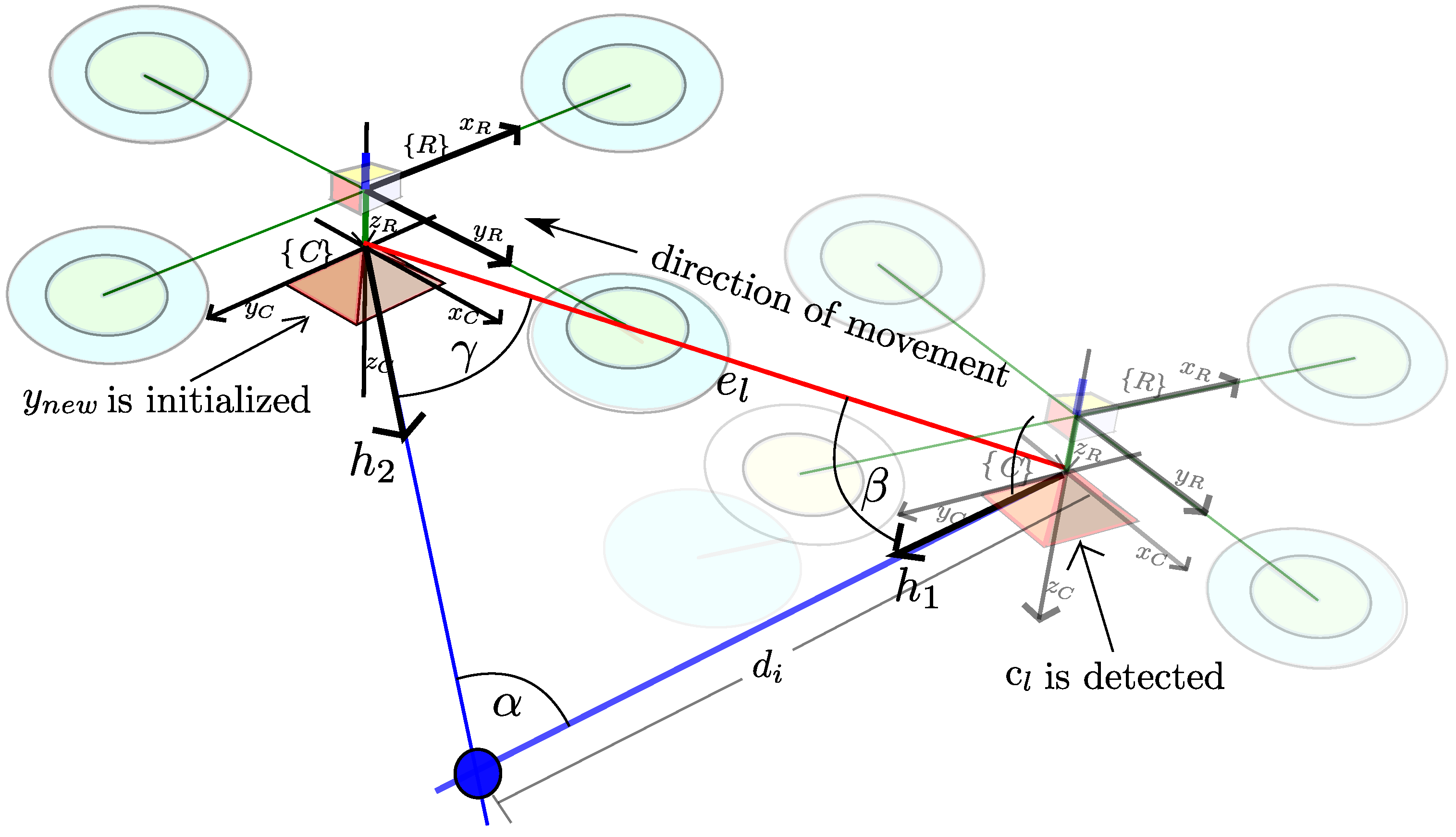

In this work, the authors propose the use of a downward-looking monocular camera, integrated into the aerial vehicle, in order to provide visual information of the ground. With such information, the proposed visual-based SLAM system uses visual information together with attitude and position measurements in order to accurately estimate the full state of the vehicle as well as the positions of the landmarks observed by the camera.

Compared with other visual configurations (e.g., stereo vision), monocular vision has some advantages in terms of weight, space, power consumption, and scalability. For example, in stereo rigs, the fixed baseline between the cameras can limit the operating range. On the other hand, monocular vision introduces some technical challenges. First, depth information cannot be retrieved from a single frame, and hence robust techniques to recover feature depth are required. In this work, a novel method is developed following the research initiated in [16]. The proposed approach is based on a stochastic triangulation technique to estimate feature depth.

In this research, a second difficulty appears: the metric scale of the world cannot be retrieved if monocular vision is used as the sole sensory input to the system. For example, in the experiments presented in [17], the first ten measurements are aligned with the ground truth in order to obtain the scale of the environment. In [18], the monocular scale factor is retrieved from a feature pattern with known dimensions. On the other hand, in many real scenarios the GPS signal is available, at least for some periods. For this reason, in this work it is assumed that the GPS signal is available during a short period (a few seconds) at the beginning of the trajectory. Those GPS readings are integrated into the system in order to recover the metric scale of the world. This period of time is what the authors consider the initialization period. After this period, the system can rely only on visual information to estimate the position of the aerial vehicle.
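The authors recover the metric scale by fusing GPS readings into the filter during initialization. As a much simpler illustration of why a short window of noisy GPS data carries enough metric information, the sketch below assumes that visual displacements are known only up to an unknown scale factor s and fits s in closed form by least squares against the corresponding GPS displacements. This is not the authors' EKF-based method; the function name, the noise level, and the synthetic data are hypothetical.

```python
import random


def estimate_scale(visual_disp, gps_disp):
    """Least-squares scale s minimising sum_k ||g_k - s * v_k||^2.

    visual_disp: up-to-scale 3D displacements from monocular vision (hypothetical input).
    gps_disp:    metric 3D displacements from GPS over the same intervals.
    """
    num = sum(gc * vc for v, g in zip(visual_disp, gps_disp) for vc, gc in zip(v, g))
    den = sum(vc * vc for v in visual_disp for vc in v)
    if den == 0.0:
        raise ValueError("visual displacements are all zero; scale is unobservable")
    return num / den


if __name__ == "__main__":
    rng = random.Random(0)
    true_scale = 2.5  # hypothetical metric-to-visual scale ratio
    visual = [(rng.uniform(-1, 1), rng.uniform(-1, 1), rng.uniform(-0.2, 0.2))
              for _ in range(200)]
    # Metric GPS displacements with zero-mean noise (sigma = 0.4 m, assumed).
    gps = [tuple(true_scale * c + rng.gauss(0.0, 0.4) for c in v) for v in visual]
    print(f"estimated scale: {estimate_scale(visual, gps):.2f}")
```

Because the noise averages out over many displacements, even individually poor GPS readings can pin down s; note that the vehicle must actually move during the window, otherwise the denominator vanishes and the scale is unobservable.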

The integration of GPS readings with visual information is not new in the literature; see [19]. In this sense, one of the contributions of this work is to demonstrate that integrating very noisy GPS measurements into the system for a short initial period is enough to recover the metric scale of the world. Furthermore, the experiments demonstrate that for flight trajectories performed near the origin of the navigation reference frame, it is better to avoid the integration of such GPS measurements after the initialization period.

This paper is organized as follows: Section 2 states the problem description and assumptions; Section 3 describes the proposed method in detail; Section 4 shows the experimental results; and finally, Section 5 presents the conclusions of this work.

2. System Specification

2.1. Assumptions

The platform that the authors consider in this work is a quadrotor freely moving in any direction in

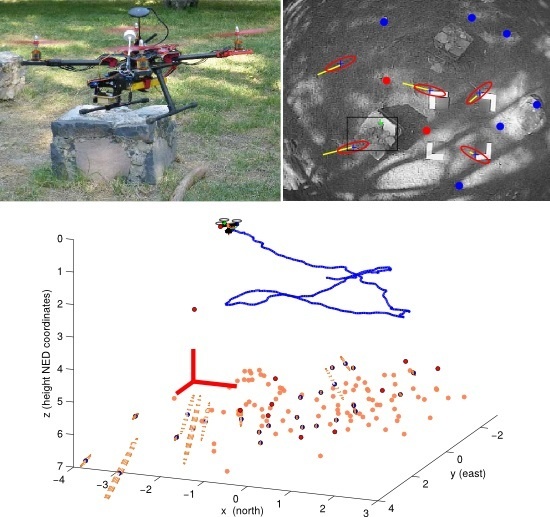

, as shown in

Figure 1. The quadrotor is equipped with a monocular camera, an attitude and heading reference system (AHRS) and a position sensor (GPS). It is important to remark that the proposed visual-based SLAM approach can be applied to another kind of platforms.

The proposed system is mainly intended for local autonomous vehicle navigation. Hence, the local tangent frame is used as the navigation reference frame. The initial position of the vehicle defines the origin of the navigation coordinate frame. The navigation system follows the NED (North, East, Down) convention. In this work, magnitudes expressed in the navigation, vehicle (robot), and camera frames are denoted by the superscripts $N$, $R$, and $C$, respectively. All coordinate systems are right-handed.

The sensors considered in this work are described and modelled in the following subsections.

2.2. Monocular Camera

A standard monocular camera is considered as the vision system, and a central-projection camera model is assumed. The image plane is located in front of the camera's origin, where a non-inverted image is formed. The camera frame is right-handed, with the z-axis pointing towards the field of view.

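The projection of a 3D point located at $\mathbf{p}^N = [x^N, y^N, z^N]^T$ to the image plane $\mathbf{p} = [u, v]^T$ is defined by:

$$\begin{bmatrix} u \\ v \end{bmatrix} = \begin{bmatrix} c_u + f\,\dfrac{x^C}{z^C} \\[6pt] c_v + f\,\dfrac{y^C}{z^C} \end{bmatrix} \tag{1}$$

where $u$ and $v$ are the coordinates of the image point $\mathbf{p}$ expressed in pixel units, and:

$$\mathbf{p}^C = [x^C, y^C, z^C]^T = \mathbf{R}^C_N\left(\mathbf{p}^N - \mathbf{t}^N_c\right) \tag{2}$$

is the same 3D point $\mathbf{p}^N$, but expressed in the camera frame $C$. In this case, it is assumed that the intrinsic parameters of the camera are already known: (i) focal length $f$ (in pixels); (ii) principal point $(c_u, c_v)$; and (iii) radial lens distortion coefficients $(k_1, \ldots, k_n)$.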

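Let $\mathbf{R}^C_N$ be the rotation matrix that transforms the navigation frame $N$ to the camera frame $C$, with $\mathbf{R}^C_N = \mathbf{R}^C_R\,\mathbf{R}^R_N$. The robot-to-camera rotation $\mathbf{R}^C_R$ is a known value, and $\mathbf{R}^R_N$ is computed from the current robot quaternion $\mathbf{q}^{NR}$. Let $\mathbf{t}^N_c$ be the position of the camera's optical center expressed in the navigation frame.

Inversely, a directional vector $\mathbf{h}^C = [h^C_x, h^C_y, h^C_z]^T$ can be computed from the image point coordinates $u$ and $v$:

$$\mathbf{h}^C = \begin{bmatrix} (u - c_u)/f \\ (v - c_v)/f \\ 1 \end{bmatrix} \tag{3}$$

The vector $\mathbf{h}^C$ points from the camera optical center position to the 3D point location. It can be expressed in the navigation frame by $\mathbf{h}^N = \mathbf{R}^N_C\,\mathbf{h}^C$, where $\mathbf{R}^N_C = (\mathbf{R}^C_N)^T$ is the camera-to-navigation rotation matrix. Note that for the mapping case, defined in Equation (3), depth information is lost.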
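The projection of Equations (1)–(2) and the back-projection of Equation (3) can be sketched as below, assuming an ideal pinhole model with undistorted pixels, focal length `f` in pixels, principal point (`cu`, `cv`), a 3×3 rotation `R_cn` from the navigation to the camera frame, and camera position `t_c` in the navigation frame. All names are illustrative. The back-projected ray has unit depth along the camera z-axis, which makes it explicit that the true depth is lost.

```python
def mat_vec(R, x):
    """Multiply a 3x3 matrix (list of rows) by a 3-vector."""
    return [sum(R[i][j] * x[j] for j in range(3)) for i in range(3)]


def transpose(R):
    return [[R[j][i] for j in range(3)] for i in range(3)]


def project(p_n, R_cn, t_c, f, cu, cv):
    """Pinhole projection of a navigation-frame point to undistorted pixels (u, v)."""
    d = [p_n[i] - t_c[i] for i in range(3)]
    x, y, z = mat_vec(R_cn, d)  # the same point expressed in the camera frame
    if z <= 0:
        raise ValueError("point is behind the camera")
    return cu + f * x / z, cv + f * y / z


def back_project(u, v, R_cn, f, cu, cv):
    """Navigation-frame direction of the ray through pixel (u, v); depth is unknown."""
    h_c = [(u - cu) / f, (v - cv) / f, 1.0]
    return mat_vec(transpose(R_cn), h_c)  # R_nc = R_cn^T
```

For example, with `R_cn` equal to the identity (camera axes aligned with NED, so the camera looks straight down) and the camera 10 m above the origin, a ground point at (2, 1, 0) projects to a pixel whose back-projected ray is (0.2, 0.1, 1), i.e., the original direction scaled by the unknown depth of 10 m.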

The distortion caused by the camera lens is considered through the model described in [20]. Using this model (and its inverse form), undistorted pixel coordinates $(u, v)$ can be obtained from the distorted pixel coordinates $(u_d, v_d)$, and conversely.
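To make the "model and its inverse form" concrete, the sketch below assumes a common two-coefficient polynomial radial model applied in normalized image coordinates; the exact formulation of [20] may differ. The forward direction has a closed form, while the inverse is computed here by fixed-point iteration, which converges quickly for moderate distortion. The function names and coefficient values are hypothetical.

```python
def distort(u, v, f, cu, cv, k1, k2):
    """Apply radial distortion to undistorted pixel coordinates (u, v)."""
    x, y = (u - cu) / f, (v - cv) / f          # normalised image coordinates
    r2 = x * x + y * y
    s = 1.0 + k1 * r2 + k2 * r2 * r2           # radial scaling factor
    return cu + f * x * s, cv + f * y * s


def undistort(ud, vd, f, cu, cv, k1, k2, iters=20):
    """Invert distort() by fixed-point iteration (no closed form in general)."""
    xd, yd = (ud - cu) / f, (vd - cv) / f
    x, y = xd, yd                              # initial guess: no distortion
    for _ in range(iters):
        r2 = x * x + y * y
        s = 1.0 + k1 * r2 + k2 * r2 * r2
        x, y = xd / s, yd / s
    return cu + f * x, cv + f * y
```

A round trip through `distort` and `undistort` should return the original pixel to within numerical precision; for strongly distorting wide-angle lenses, more iterations or a Newton step may be needed.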

2.3. Attitude and Heading Reference System

An attitude and heading reference system (AHRS) is a combination of instruments capable of maintaining an accurate estimate of the vehicle attitude while it is manoeuvring. Recent advances in the manufacturing of solid-state or MEMS gyroscopes, accelerometers, and magnetometers, together with powerful microcontrollers, have made possible the development of small, low-cost, and reliable AHRS devices (e.g., [1,21,22]). For these reasons, a loosely-coupled approach is considered in this work: the orientation information provided by the AHRS is explicitly fused into the system. Hence, the availability of high-rate (typically 50–100 Hz) attitude measurements provided by a decoupled AHRS device is assumed.

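Attitude measurements $\mathbf{y}_a$ are modelled by:

$$\mathbf{y}_a = \mathbf{q}^{NR} + \mathbf{v}_a \tag{4}$$

where $\mathbf{q}^{NR} = \mathbf{q}([\phi, \theta, \psi]^T)$, with $\phi$, $\theta$, and $\psi$ the Euler angles denoting, respectively, the roll, pitch, and yaw of the vehicle, and $\mathbf{v}_a$ is a Gaussian white noise with power spectral density (PSD) $\sigma_a^2$.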
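In simulation, attitude measurements of this kind can be synthesized as sketched below. The sketch assumes the standard aerospace Z-Y-X (yaw, pitch, roll) Euler convention and a Hamilton quaternion ordered (w, x, y, z); the noise level `sigma` is an arbitrary placeholder, not a value taken from [1] or from the AHRS used in the experiments.

```python
import math
import random


def euler_to_quat(roll, pitch, yaw):
    """Unit quaternion (w, x, y, z) for Z-Y-X (yaw, pitch, roll) Euler angles in radians."""
    cr, sr = math.cos(roll / 2), math.sin(roll / 2)
    cp, sp = math.cos(pitch / 2), math.sin(pitch / 2)
    cy, sy = math.cos(yaw / 2), math.sin(yaw / 2)
    return (cr * cp * cy + sr * sp * sy,
            sr * cp * cy - cr * sp * sy,
            cr * sp * cy + sr * cp * sy,
            cr * cp * sy - sr * sp * cy)


def simulate_ahrs(roll, pitch, yaw, sigma=0.01, rng=random):
    """Quaternion measurement with additive white Gaussian noise (sigma is assumed)."""
    q = euler_to_quat(roll, pitch, yaw)
    return tuple(c + rng.gauss(0.0, sigma) for c in q)
```

With additive noise the measured quaternion is no longer exactly unit-norm; a filter consuming it would typically renormalize its own quaternion estimate after each update.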

2.4. GPS

The Global Positioning System (GPS) is a satellite-based navigation system that provides 3D position information for objects on or near the Earth’s surface. The GPS system and global navigation satellite systems have been described in detail in numerous studies (e.g., [

2,

23]). Several sources of error affect the accuracy of GPS position measurements. The cumulative effect of each of these error sources is called the user-equivalent range error (UERE). In [

2], these errors are characterized as a combination of slowly varying biases and random noise. In [

24] it is stated that the total UERE is approximately 4.0 m (

σ), from which 0.4 m (

σ) correspond to random noise. In

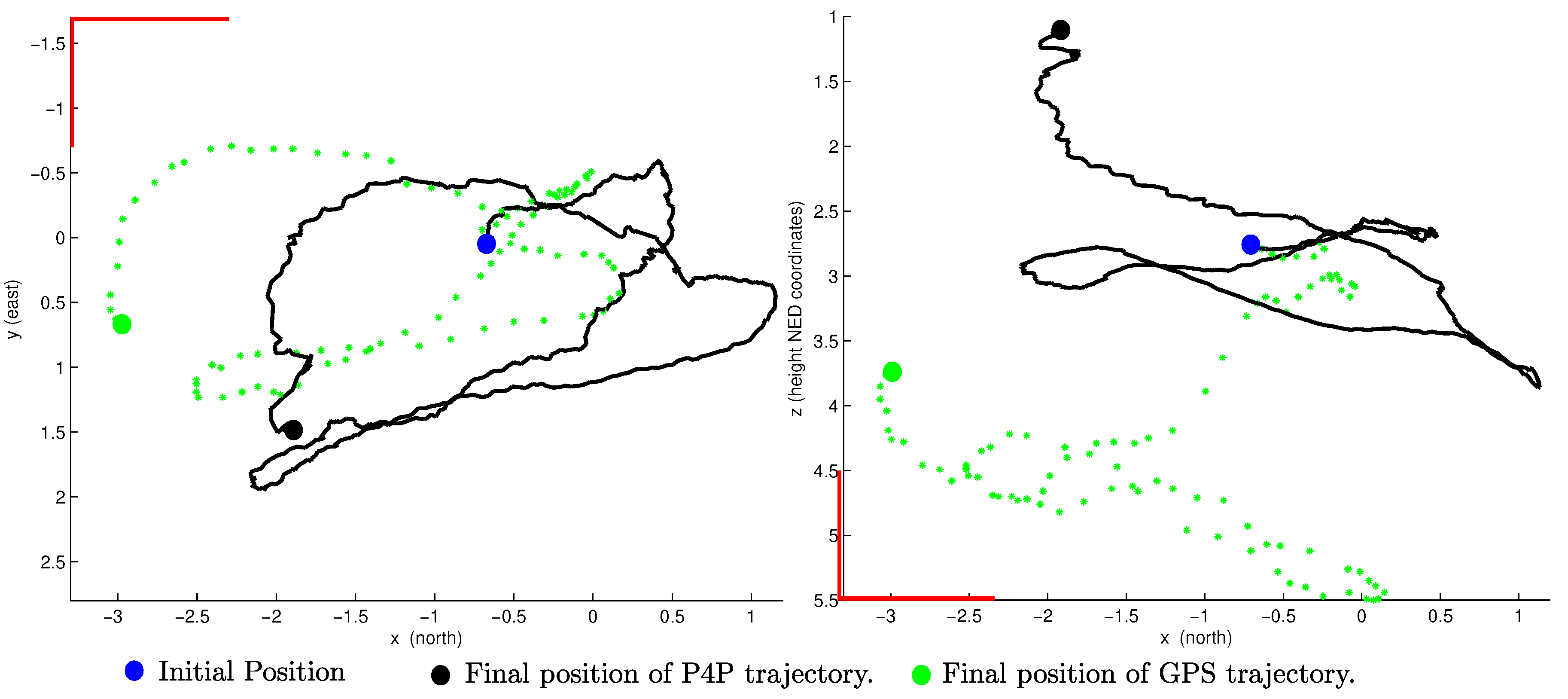

Figure 2, a comparison between the trajectory obtained with GPS and the actual one, flying in a small area, is shown.
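The error structure described above (a slowly varying bias plus random noise) can be emulated per axis with a first-order Gauss–Markov bias and additive white noise, as sketched below. This is a common modelling choice and not necessarily the approach of [36]. The sketch treats the quoted UERE figures directly as per-axis position errors, ignoring dilution of precision, which is a simplification; the correlation time `tau` and sample period `dt` are assumptions.

```python
import math
import random


def simulate_gps_error(n_steps, dt=0.2, tau=300.0, sigma_total=4.0,
                       sigma_noise=0.4, seed=None):
    """Per-axis GPS error: Gauss-Markov bias plus white noise.

    sigma_total and sigma_noise follow the UERE figures quoted in the text;
    tau (bias correlation time, s) and dt (sample period, s) are assumptions.
    """
    rng = random.Random(seed)
    sigma_bias = math.sqrt(sigma_total ** 2 - sigma_noise ** 2)  # about 3.98 m
    phi = math.exp(-dt / tau)                     # bias decay per step
    q = sigma_bias * math.sqrt(1.0 - phi * phi)   # keeps the bias variance stationary
    bias = rng.gauss(0.0, sigma_bias)
    errors = []
    for _ in range(n_steps):
        bias = phi * bias + rng.gauss(0.0, q)
        errors.append(bias + rng.gauss(0.0, sigma_noise))
    return errors
```

Because `tau` is much longer than `dt`, consecutive errors differ by roughly the white-noise level only, while the bias drifts over minutes; this is why such an error can look like a plausible, smooth trajectory while being several meters off.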

In this work, it is assumed that position measurements $\mathbf{y}_r$ can be obtained from the GPS unit, at least at the beginning of the trajectory, and they are modelled by:

$$\mathbf{y}_r = \mathbf{r}^N + \mathbf{v}_r \tag{5}$$

where $\mathbf{v}_r$ is a Gaussian white noise with PSD $\sigma_r^2$, and $\mathbf{r}^N$ is the position of the vehicle.

Commonly, position measurements are obtained from GPS devices in geodetic coordinates (latitude, longitude, and height). Therefore, in Equation (5) it is assumed that GPS position measurements have been previously transformed to their corresponding local tangent frame coordinates. It is also assumed that the offset between the GPS antenna and the vehicle frame has been taken into account in the previous transformation.
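The transformation from geodetic coordinates to the local tangent (NED) frame assumed in Equation (5) can be sketched as follows, assuming the WGS-84 ellipsoid and going through Earth-centred, Earth-fixed (ECEF) coordinates. The origin is the vehicle's initial geodetic position; the GPS antenna lever-arm offset is not handled in this sketch.

```python
import math

WGS84_A = 6378137.0                    # semi-major axis [m]
WGS84_F = 1.0 / 298.257223563          # flattening
WGS84_E2 = WGS84_F * (2.0 - WGS84_F)   # first eccentricity squared


def geodetic_to_ecef(lat_deg, lon_deg, h):
    lat, lon = math.radians(lat_deg), math.radians(lon_deg)
    n = WGS84_A / math.sqrt(1.0 - WGS84_E2 * math.sin(lat) ** 2)  # prime vertical radius
    x = (n + h) * math.cos(lat) * math.cos(lon)
    y = (n + h) * math.cos(lat) * math.sin(lon)
    z = (n * (1.0 - WGS84_E2) + h) * math.sin(lat)
    return x, y, z


def geodetic_to_ned(lat_deg, lon_deg, h, lat0_deg, lon0_deg, h0):
    """Local tangent-plane NED coordinates relative to the origin (lat0, lon0, h0)."""
    x, y, z = geodetic_to_ecef(lat_deg, lon_deg, h)
    x0, y0, z0 = geodetic_to_ecef(lat0_deg, lon0_deg, h0)
    dx, dy, dz = x - x0, y - y0, z - z0
    lat0, lon0 = math.radians(lat0_deg), math.radians(lon0_deg)
    sl, cl = math.sin(lat0), math.cos(lat0)
    so, co = math.sin(lon0), math.cos(lon0)
    north = -sl * co * dx - sl * so * dy + cl * dz
    east = -so * dx + co * dy
    down = -cl * co * dx - cl * so * dy - sl * dz
    return north, east, down
```

A point 10 m above the origin maps to a down coordinate of -10 m, and a small northward change in latitude yields a positive north coordinate with (numerically) zero east component.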

2.5. Sensor Fusion Approach

The estimator proposed in this work is designed to estimate the full state of the vehicle, which contains the position and orientation of the vehicle and their first derivatives, as well as the locations of the landmarks observed by the camera.

As mentioned in the above subsections, attitude estimation can be well handled by the systems available in the vehicle. Typically, the output of the AHRS is directly used as feedback to the control system for stabilizing the flying vehicle. On the other hand, the proposed method requires the orientation of the camera–vehicle to be known in order to estimate its position, as will be discussed later in the paper. In order to account for the uncertainties associated with the estimate provided by the AHRS, the orientation is included in the state vector (see Section 3.1) and is explicitly fused into the system (see Section 3.4).

Position estimation, in contrast, cannot be considered solved for applications that require performing precise manoeuvres, even when the GPS signal is available, as can be inferred from the example presented in Section 2.4. Therefore, some additional sensory information (e.g., monocular vision) should be integrated into the system in order to improve its accuracy. On the other hand, one of the most challenging aspects of working with monocular sensors is the impossibility of directly recovering the metric scale of the world. If no additional information is used, and a single camera is the sole source of data for the system, the map and trajectory can only be recovered without metric information [25].

In some scenarios, neither monocular vision nor GPS is suitable for navigation when used alone. For this reason, the noisy GPS data are added during the initialization period in order to incorporate metric information into the system. Hence, after an initial period of convergence, during which the system is considered to be in initialization mode, the system can operate relying only on visual information to estimate the vehicle position.

4. Experimental Results

This section presents the results obtained using synthetic data from simulations, as well as the results of experiments with real data. The experiments were performed in order to validate the performance of the proposed method. A MATLAB® implementation was used for this purpose.

4.1. Experiments with Simulations

In simulations, the model used to implement the vehicle dynamics was taken from [35]. To model the transient behaviour of the GPS error, the approach of [36] was followed. The monocular camera was simulated using the same parameter values as the camera used in the experiments with real data. The parameter values used to emulate the AHRS were taken from [1].

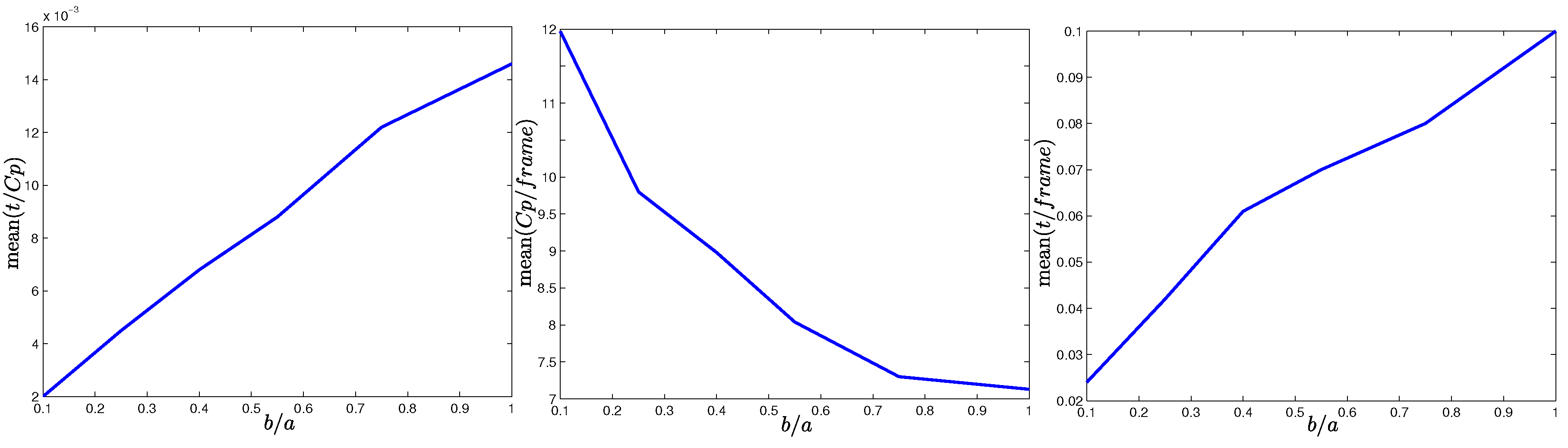

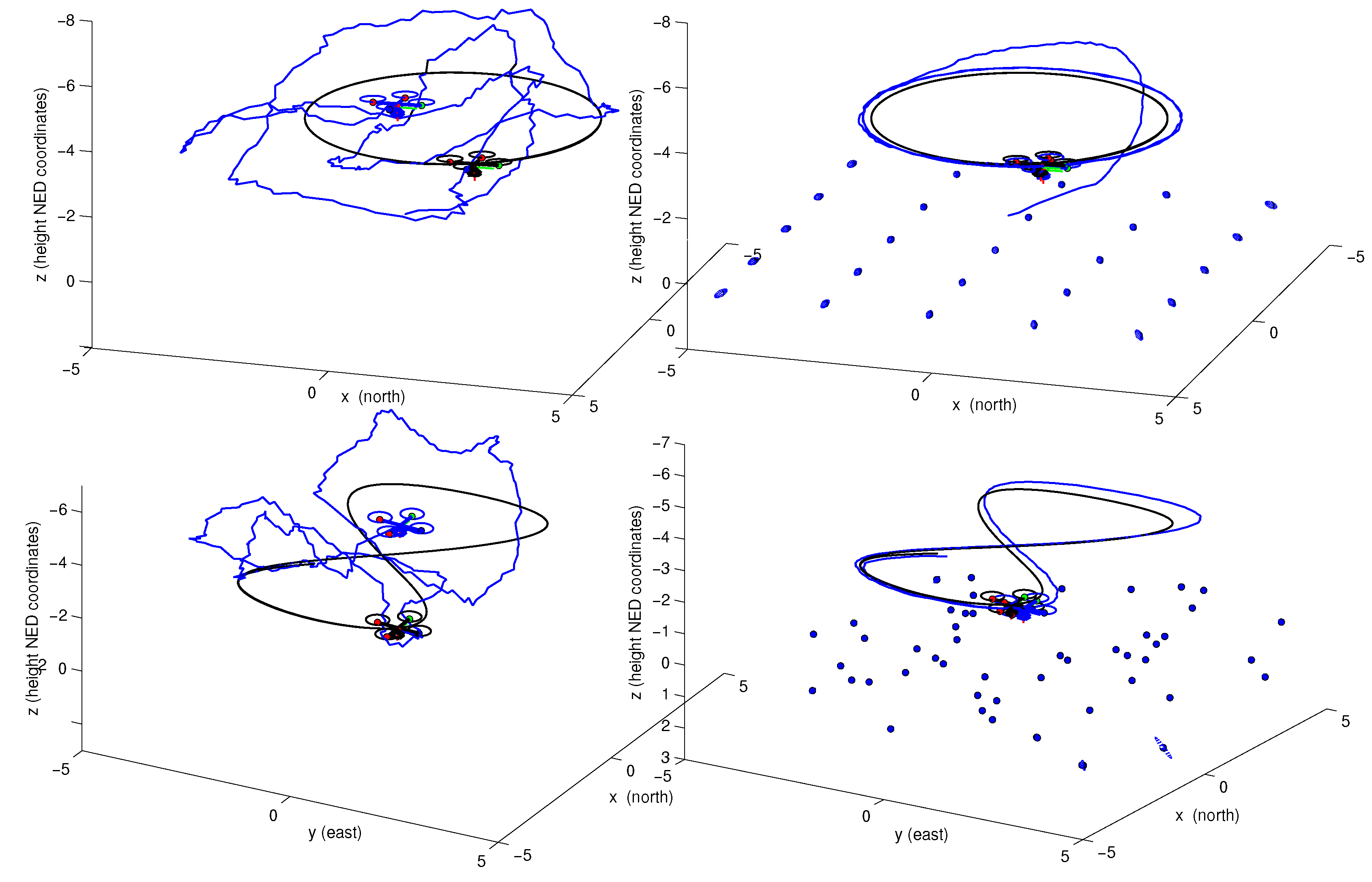

Figure 9 illustrates two simulation cases: (a) the quadrotor was commanded to take off from the ground and then to follow a circular trajectory at constant altitude, in an environment composed of 3D points uniformly distributed over the ground, which emulate visual landmarks; (b) the quadrotor was commanded to take off from the ground and then to follow a figure-eight-like trajectory at constant altitude, in an environment composed of 3D points randomly distributed over the ground.

In simulations, it is assumed that the camera can detect and track visual features, thereby avoiding the data association problem. Furthermore, the influence of the estimates on the control system was not considered; in other words, almost perfect control over the vehicle is assumed.

Figure 10 shows the average mean absolute error (MAE) in position, obtained after 20 Monte Carlo simulation runs. The MAE was computed for three scenarios: (i) using only GPS to estimate position; (ii) using GPS together with the camera along the entire trajectory to estimate position and map; (iii) using GPS only during the initialization period, and then performing visual-based navigation and mapping.
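One way to compute such a time-resolved, run-averaged error curve is sketched below. It assumes that the error at each time step is the Euclidean norm of the position error and that it is averaged over Monte Carlo runs; the paper may instead report the error per axis. The function name and data layout are hypothetical.

```python
import math


def mae_over_runs(estimates, truth):
    """Mean absolute position error at each time step, averaged over Monte Carlo runs.

    estimates: list of runs, each a list of (n, e, d) positions per time step.
    truth:     list of (n, e, d) ground-truth positions per time step.
    """
    n_runs = len(estimates)
    mae = []
    for k, p_true in enumerate(truth):
        total = 0.0
        for run in estimates:
            total += math.dist(run[k], p_true)  # Euclidean error magnitude
        mae.append(total / n_runs)
    return mae
```

Averaging over runs at each time step, rather than over the whole trajectory, is what makes effects such as the error dropping when the vehicle returns near its starting point visible in a plot.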

Figure 9 and Figure 10 clearly show the benefits of incorporating visual information into the system. It is important to note that the trajectory obtained relying only on GPS was computed by incorporating GPS readings into the filter and does not represent raw measurements. In Figure 10, it is interesting to note that the MAE values computed for the trajectories obtained through visual-based navigation exhibit the classical SLAM behaviour when the quadrotor returns near its initial position: the error drops close to zero. On the other hand, when GPS is used all the time, the MAE remains more constant. In this case, even when the vehicle is close to its trusted position, GPS errors still affect the estimate. This behaviour suggests that for trajectories performed near a local frame of reference, and even when the GPS signal is available, it is better to navigate with more confidence in visual information than in GPS data. Conversely, for trajectories moving far away from the initial frame of reference, the absolute position data obtained from GPS impose an upper bound on the otherwise ever-growing error, which is not the case for a pure vision-based SLAM approach.

In these experiments, the most relevant source of error is the slowly time-varying bias component of the GPS error. Some of the effects of this bias can be absorbed by the model in Equation (5) by increasing the measurement noise covariance matrix. However, increasing this covariance too much was found to affect the convergence of the initial feature depths. Future work could, for instance, develop an adaptive criterion to fuse GPS data, or extend the method to explicitly estimate the slowly varying bias of the GPS.
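The trade-off of inflating the measurement noise covariance can be seen in the simplest possible filter. The sketch below uses a scalar Kalman measurement update with a directly observed state (H = 1), which is only an illustration and not the multivariate filter of this work: as the assumed GPS variance `R` grows, the gain shrinks, so each GPS reading moves the estimate less. That reduces the bias's influence, but it also slows the rate at which metric information enters the filter. The variances used (0.16 m² and 16 m², i.e., 0.4 m and 4 m standard deviations, plus a larger value) are illustrative.

```python
def kalman_update(x, P, z, R):
    """Scalar Kalman measurement update for a directly observed state (H = 1)."""
    K = P / (P + R)          # Kalman gain
    x_new = x + K * (z - x)  # state correction towards the measurement
    P_new = (1.0 - K) * P    # posterior variance
    return x_new, P_new, K


for R in (0.16, 16.0, 400.0):   # measurement variances in m^2 (illustrative)
    _, _, K = kalman_update(0.0, 4.0, 1.0, R)
    print(f"R = {R:6.2f} m^2 -> gain K = {K:.3f}")
```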

4.2. Experiments with Real Data

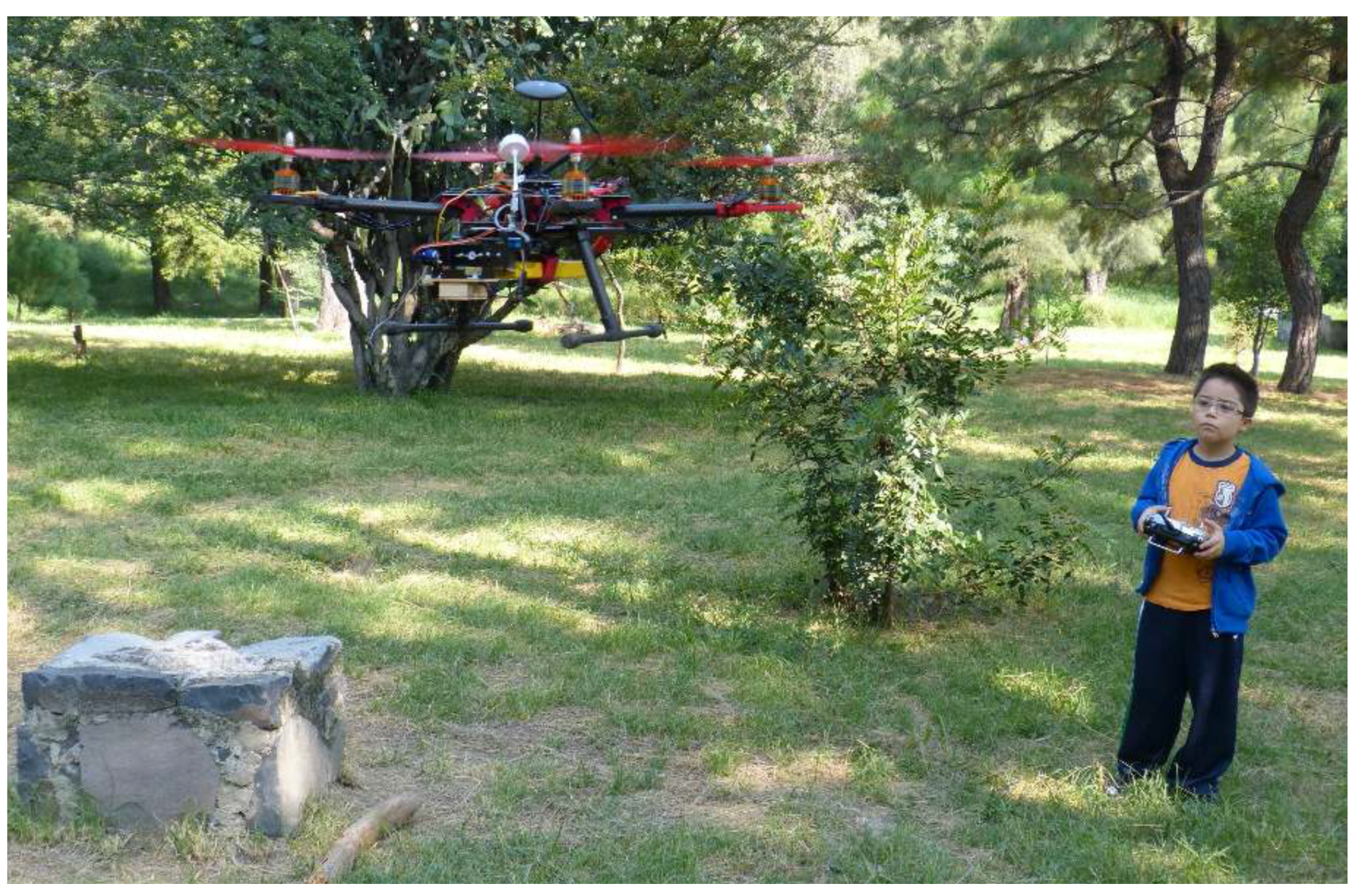

A custom-built quadrotor is used to perform experiments with real data. The vehicle is equipped with an Ardupilot unit as flight controller [37], a NEO-M8N GPS unit, a 3DR 915 MHz radio telemetry unit, a DX201 DPS camera with a wide-angle lens, and a 5.8 GHz video transmitter. During the experiments, the quadrotor was manually radio-controlled (see Figure 11).

A custom-built C++ application running on a laptop was used to capture the data from the vehicle, received via the MAVLINK protocol [38], as well as the digitized video signal transmitted from the vehicle. The data captured from the GPS, the AHRS, and the camera frames were synchronized and stored in a dataset. The frames, with a resolution of 320 × 240 pixels in grayscale, were captured at 26 fps. The flights of the quadrotor were conducted in an open area of a park surrounded by trees; see Figure 11. The surface of the field is mainly flat and composed of grass and dirt, but the experimental environment also included some small structures and plants. On average, 8–9 GPS satellites were visible at the same time.
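The text does not specify how the GPS, AHRS, and camera streams were synchronized. A simple possibility, sketched below under that assumption, is to associate each camera frame with the sensor sample whose timestamp is closest, using binary search over the sorted sensor timestamps. Interpolating between neighbouring samples would be a natural refinement for the higher-rate AHRS data. The function name is hypothetical.

```python
import bisect


def nearest_samples(frame_times, sensor_times, sensor_values):
    """For each frame timestamp, pick the sensor sample with the closest timestamp.

    sensor_times must be sorted in ascending order.
    """
    out = []
    for t in frame_times:
        i = bisect.bisect_left(sensor_times, t)
        if i == 0:
            j = 0
        elif i == len(sensor_times):
            j = len(sensor_times) - 1
        else:
            # choose the closer of the two neighbouring samples
            j = i if sensor_times[i] - t < t - sensor_times[i - 1] else i - 1
        out.append(sensor_values[j])
    return out
```

For instance, frames at roughly 26 fps (every ~38 ms) matched against a 50 Hz AHRS stream pick, for each frame, the AHRS sample within at most 10 ms of it.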

In the experiments, in order to have an external reference of the flight trajectory to evaluate the performance of the proposed method, four marks were placed on the ground, forming a square of known dimensions (see Figure 4). Then, a Perspective-4-Point (P4P) technique [39] was applied to each frame in order to compute the position of the camera relative to this known reference. It is important to note that the trajectory obtained by this technique should not be considered a perfect ground-truth reference. However, this approach was very helpful in providing a fully independent flight reference for evaluation purposes. Finally, the MATLAB implementation of the proposed method was executed offline over the whole dataset in order to estimate the flight trajectory and the map of the environment.
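Because the four marks are coplanar, one common way to solve this P4P problem is through a plane-to-image homography, sketched below; this is not necessarily the technique of [39]. The sketch estimates the homography with the direct linear transform (fixing its last entry to 1), removes the known intrinsics (`f`, `cu`, `cv`), and reads off the rotation columns and translation, returning the camera position in the target frame. It assumes undistorted pixels and noise-free correspondences; with real detections, the recovered rotation should be re-orthonormalized.

```python
import math


def solve(A, b):
    """Solve A x = b by Gaussian elimination with partial pivoting."""
    n = len(A)
    M = [row[:] + [bi] for row, bi in zip(A, b)]
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[piv] = M[piv], M[col]
        for r in range(col + 1, n):
            fct = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= fct * M[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (M[r][n] - sum(M[r][c] * x[c] for c in range(r + 1, n))) / M[r][r]
    return x


def homography(world_xy, pixels):
    """DLT homography (h33 = 1) mapping planar target points (X, Y) to pixels (u, v)."""
    A, b = [], []
    for (X, Y), (u, v) in zip(world_xy, pixels):
        A.append([X, Y, 1, 0, 0, 0, -u * X, -u * Y]); b.append(u)
        A.append([0, 0, 0, X, Y, 1, -v * X, -v * Y]); b.append(v)
    h = solve(A, b) + [1.0]
    return [h[0:3], h[3:6], h[6:9]]


def pose_from_square(world_xy, pixels, f, cu, cv):
    """Camera position in the target frame from four coplanar points (Z = 0)."""
    H = homography(world_xy, pixels)
    cols = []  # columns of K^-1 H, with K = [[f, 0, cu], [0, f, cv], [0, 0, 1]]
    for j in range(3):
        a, bb, c = H[0][j], H[1][j], H[2][j]
        cols.append([(a - cu * c) / f, (bb - cv * c) / f, c])
    lam = 1.0 / math.sqrt(sum(x * x for x in cols[0]))
    if cols[2][2] < 0:      # keep the target in front of the camera (t_z > 0)
        lam = -lam
    r1 = [lam * x for x in cols[0]]
    r2 = [lam * x for x in cols[1]]
    t = [lam * x for x in cols[2]]
    r3 = [r1[1] * r2[2] - r1[2] * r2[1],
          r1[2] * r2[0] - r1[0] * r2[2],
          r1[0] * r2[1] - r1[1] * r2[0]]
    R = [[r1[i], r2[i], r3[i]] for i in range(3)]  # columns r1, r2, r3
    # camera centre C = -R^T t
    return [-sum(R[i][k] * t[i] for i in range(3)) for k in range(3)]
```

Given the pixel positions of the four corners of a square of known side, `pose_from_square` returns the camera position relative to the square, which can then be compared frame by frame against the estimated trajectory.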

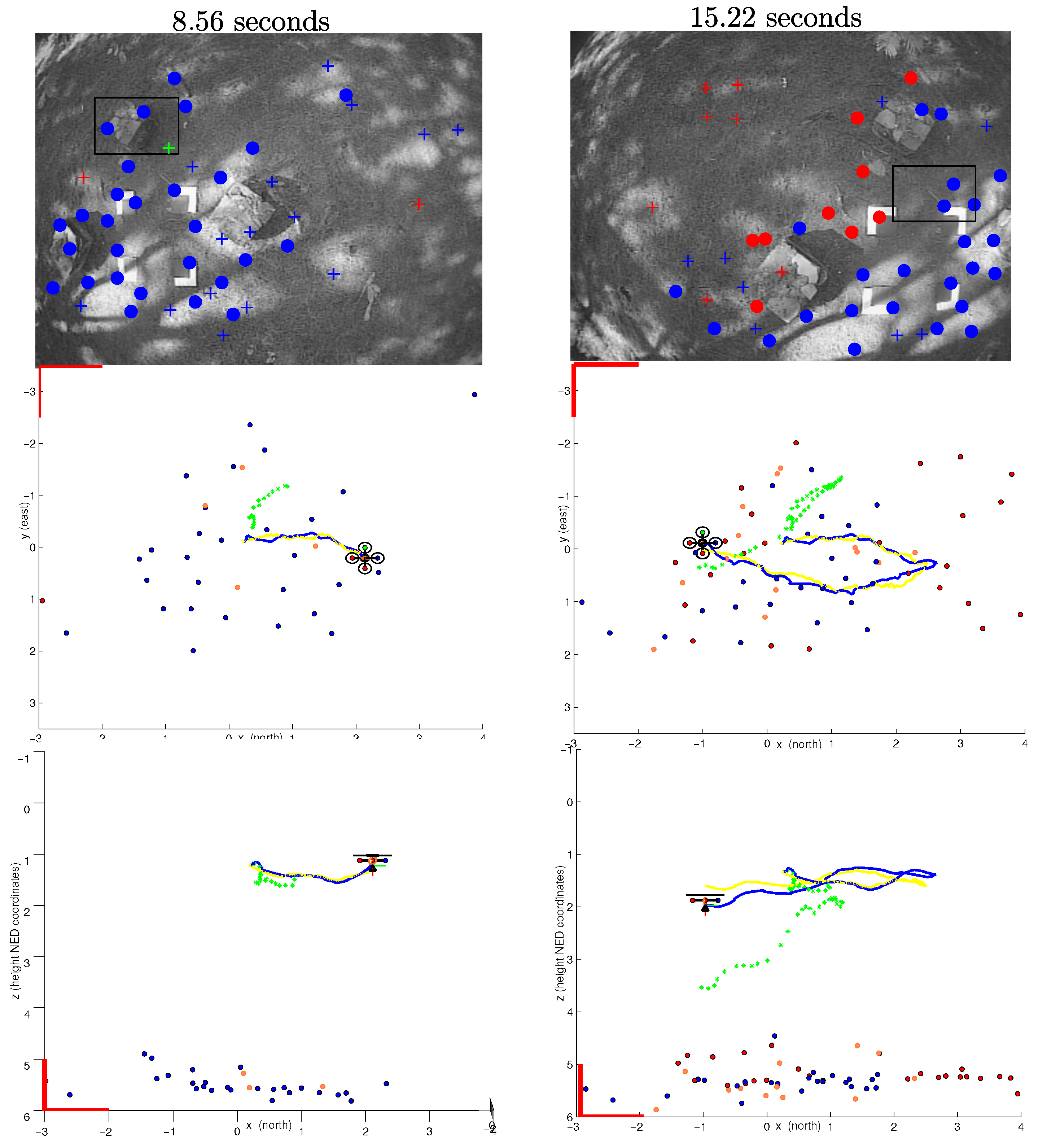

An initial period of flight was considered for initialization purposes, as explained in Section 3.4.

Figure 12 shows two different instances of a flight trajectory. For this test, the GPS readings were fused into the system only during the initialization period; after that, the position of the vehicle and the map of the environment were recovered using visual information. From the beginning of the flight (left plots), it can be clearly seen how the GPS readings diverge from the actual trajectory. Several features have been included in the map after just a few seconds of flight (right plots).

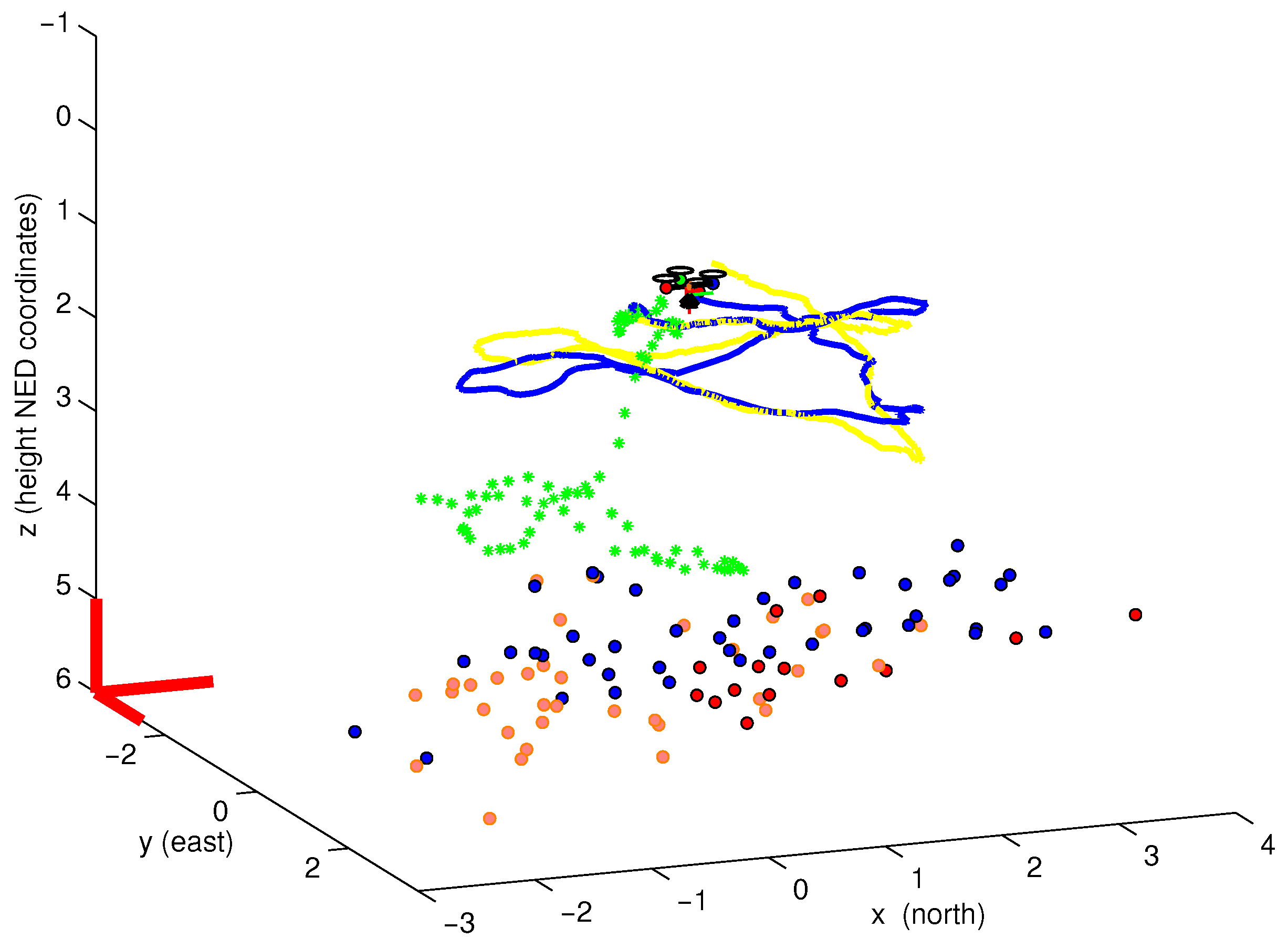

Figure 13 shows a 3D perspective of the estimated map and trajectory after 30 s of flight. In this test, good agreement between the estimated trajectory and the P4P visual reference was obtained, especially when compared with the GPS trajectory.

In order to gain more insight about the performance of the proposed method, the same three experimental variants used in simulations were computed, but in this case with real data: (i) GPS; (ii) GPS + camera; (iii) camera (GPS only at the initialization). In this comparison, all the results were obtained averaging ten executions of each method. Is important to note that those averages are computed because the method is not deterministic since the search and detection of new candidate points is conducted in a random manner over the images (

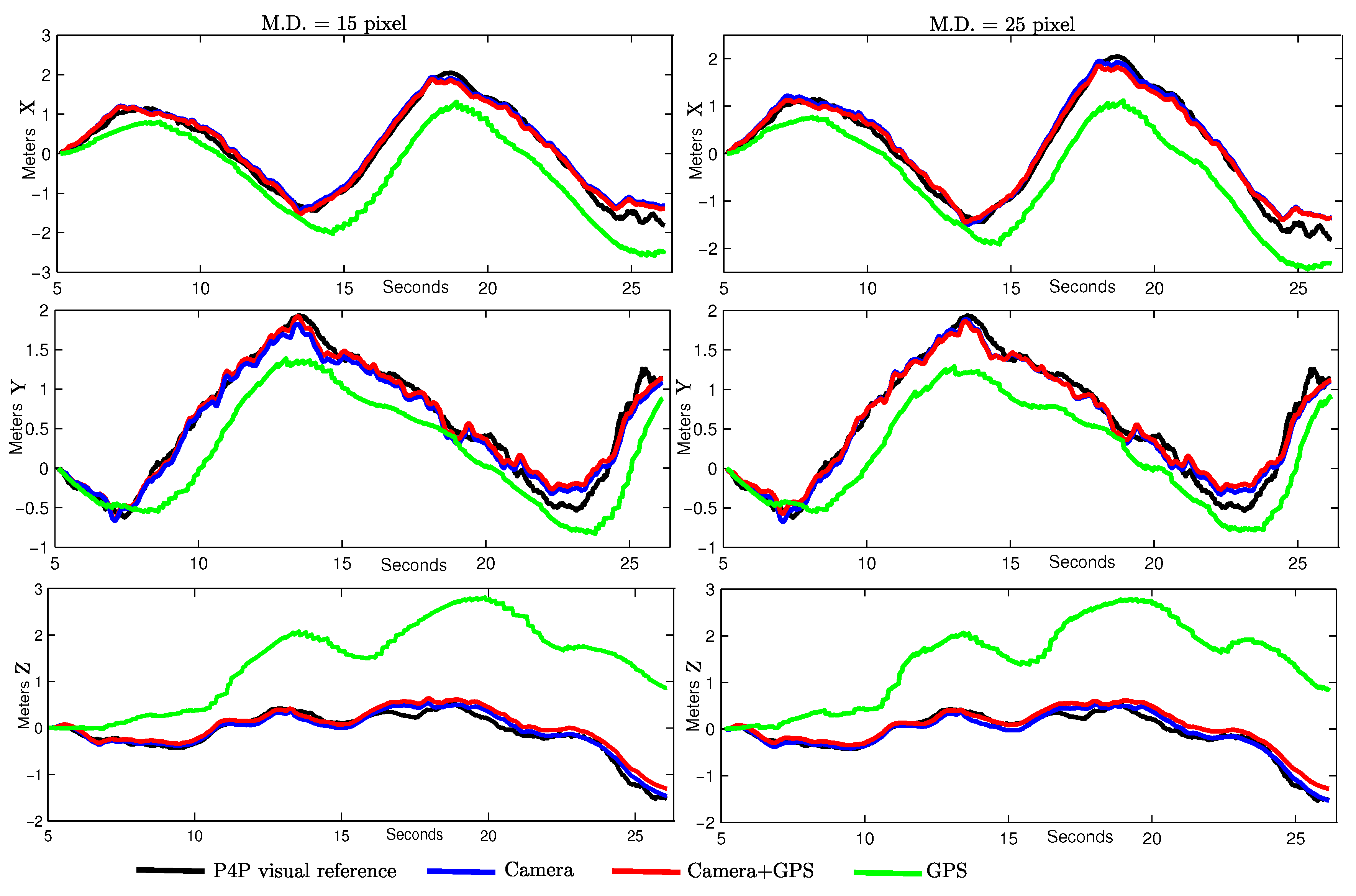

Section 3.3.1). The P4P visual reference was used as ground-truth. The number of visual features being tracked at each frame can affect the performance of monocular SLAM methods. For this reason, the methods were tested by setting two different values of minimum distance (M.D.) between the visual features being tracked. In this case, the bigger the value, the lesser the number of visual features that can be tracked.
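The effect of the minimum-distance parameter can be illustrated with a greedy selection over candidate points visited in random order, sketched below. This is a hypothetical simplification of the candidate search of Section 3.3.1, which is not reproduced here; it ignores image texture and feature quality and only enforces the spacing constraint.

```python
import math
import random


def select_features(candidates, min_dist, max_features=None, seed=None):
    """Greedily accept candidate pixels in random order, rejecting any candidate
    closer than min_dist pixels to an already accepted feature."""
    rng = random.Random(seed)
    order = candidates[:]
    rng.shuffle(order)            # random search order over the image
    accepted = []
    for p in order:
        if all(math.dist(p, q) >= min_dist for q in accepted):
            accepted.append(p)
            if max_features is not None and len(accepted) >= max_features:
                break
    return accepted
```

On a 320 × 240 image, a minimum distance of 40 pixels admits at most a few dozen features, whereas 10 pixels admits several hundred. The random visiting order is also why repeated executions yield different feature sets, and hence why the results are averaged over several runs.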

Figure 14 shows the progression over time for each case. A separate plot for each coordinate (north, east, and down) is presented.

Table 1 gives a numerical summary of the results obtained in this experimental comparison with real data. These results confirm those obtained through simulations. For trajectories estimated using only GPS data, the high average MAE in position makes this approach unsuitable as feedback for controlling fine manoeuvres. In this particular case, it is easy to see that the major source of error is the altitude computed by the GPS (see Figure 14, lower plots). Additional sensors (e.g., a barometer) can be used to mitigate this particular error. However, the error in the horizontal (north–east) plane can still be critical for certain applications. In this sense, the benefits of including visual information into the system are evident.

As expected, the number of map features increases considerably as the minimum distance between visual points is decreased. However, it is interesting to remark that, at least for these experiments, this did not lead to a significant reduction in error. Regarding the use of GPS together with monocular vision, slightly better agreement with the P4P reference was obtained for the trajectory estimated without GPS data (after the initialization). These results again suggest that, at least for small environments, it could be better to rely more on visual information than on GPS data after the initialization period.

The feasibility of implementing monocular SLAM methods in real time has been widely studied in the past. In particular, since the work of Davison in 2003 [32], the feasibility of EKF-based methods has been shown for maps composed of up to 100 features using standard hardware. Later, in [29], it was shown that filter-based methods might be beneficial if limited processing power is available. Real-time performance has even been demonstrated for relatively computationally demanding techniques, such as the optimization-based method proposed in [40]. In the application proposed in this work, it can be seen (Table 1) that the number of features maintained in the system state (even for the low M.D.) is considerably below an upper bound that should allow real-time performance, for instance by implementing the algorithm in C or C++.
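The reason the number of features bounds real-time performance is that an EKF stores a dense covariance matrix whose size, and the cost of each update, grow roughly quadratically with the state dimension. The back-of-the-envelope sketch below assumes a 13-element vehicle state (position, velocity, unit quaternion, angular velocity) and 3 coordinates per feature; both are assumptions, and the actual parameterization of Section 3.1 may differ.

```python
def ekf_state_size(n_features, vehicle_dim=13, feature_dim=3):
    """State dimension and number of covariance entries for an EKF-SLAM filter
    (vehicle_dim and feature_dim are assumed, not taken from the paper)."""
    n = vehicle_dim + feature_dim * n_features
    return n, n * n


for n_feat in (50, 100, 200):
    n, cov = ekf_state_size(n_feat)
    print(f"{n_feat:4d} features -> state dim {n:4d}, covariance entries {cov:7d}")
```

Under these assumptions, 100 features give a 313-dimensional state with about 98,000 covariance entries; doubling the number of features roughly quadruples the covariance storage and update cost.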

5. Conclusions

In this work, a vision-based navigation and mapping system with application to unmanned aerial vehicles has been presented. The visual information is obtained with a camera integrated in the flying vehicle and pointing to the ground. The proposed scheme is closely related to monocular SLAM systems, where a single camera is used to concurrently estimate a map of visual features as well as the trajectory of the camera. Unlike purely monocular SLAM approaches, this work follows a multi-sensor scheme in order to take advantage of the set of sensors commonly available in UAVs and overcome some technical difficulties associated with monocular SLAM systems.

When a monocular camera is used, depth information cannot be retrieved from a single frame. In this work, a novel method is developed for this purpose, based on a stochastic triangulation technique to estimate feature depth. Another important challenge that arises with monocular vision is that the metric scale of the environment can only be retrieved up to an unknown scale factor if no additional information is incorporated into the system. In this work, GPS readings are used during a short initial period of time in order to set the metric scale of the estimation. After this period, the system operates relying solely on visual information to estimate the location of the vehicle.

Due to the highly noisy nature of GPS measurements, filtered GPS data alone are not reliable enough to obtain the accurate position estimates required to perform fine manoeuvres. In this case, visual information is incorporated into the system in order to refine such estimates.

The experimental results obtained through simulations as well as with real data suggest the following relevant conclusions: (i) integrating very noisy GPS measurements into the system during a short initial period is enough to recover the metric scale of the world; (ii) for flight trajectories performed near the origin of the navigation frame of reference, it is better to avoid integrating GPS measurements after the initialization period.