Robust Radar Emitter Recognition Based on the Three-Dimensional Distribution Feature and Transfer Learning

Abstract

1. Introduction

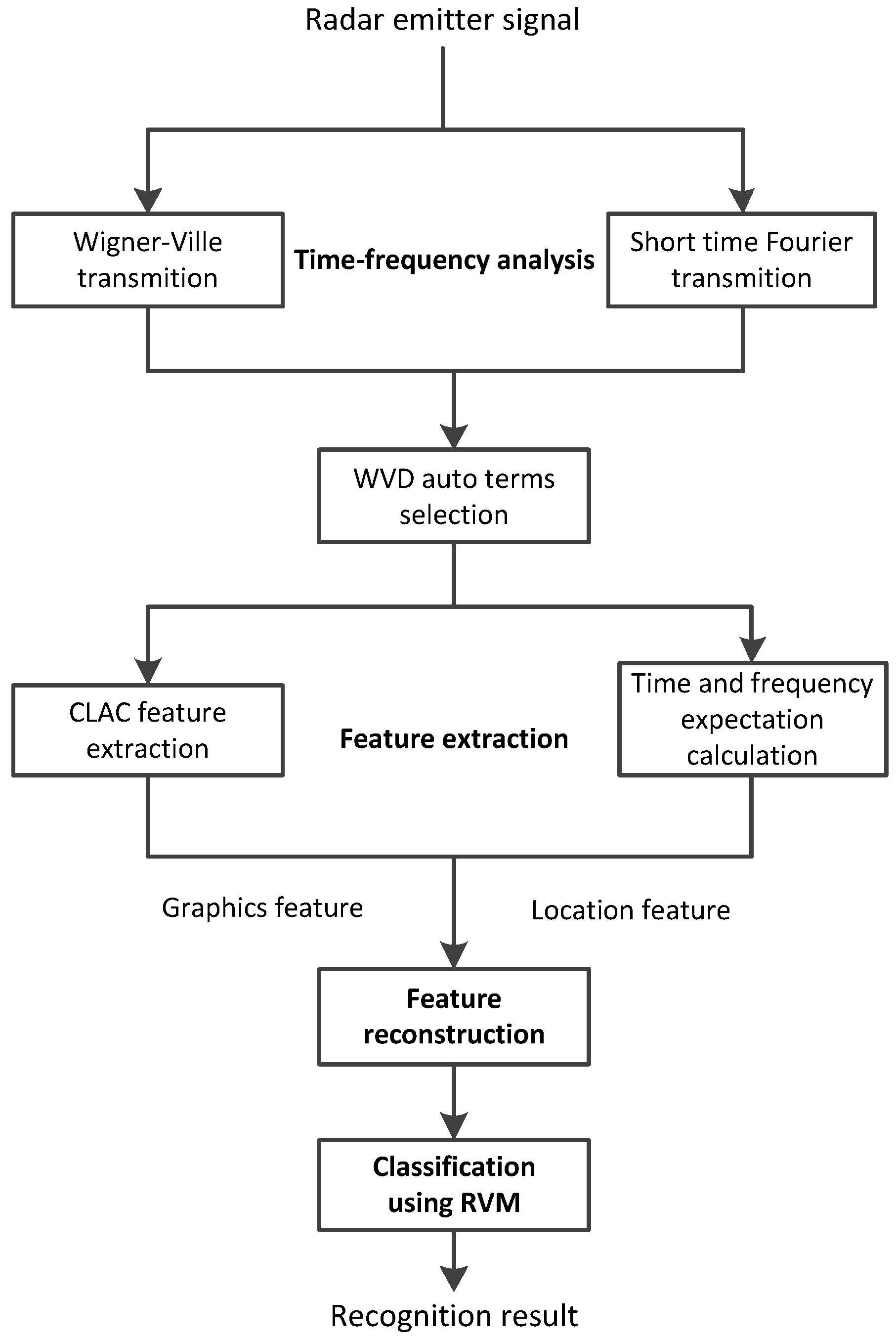

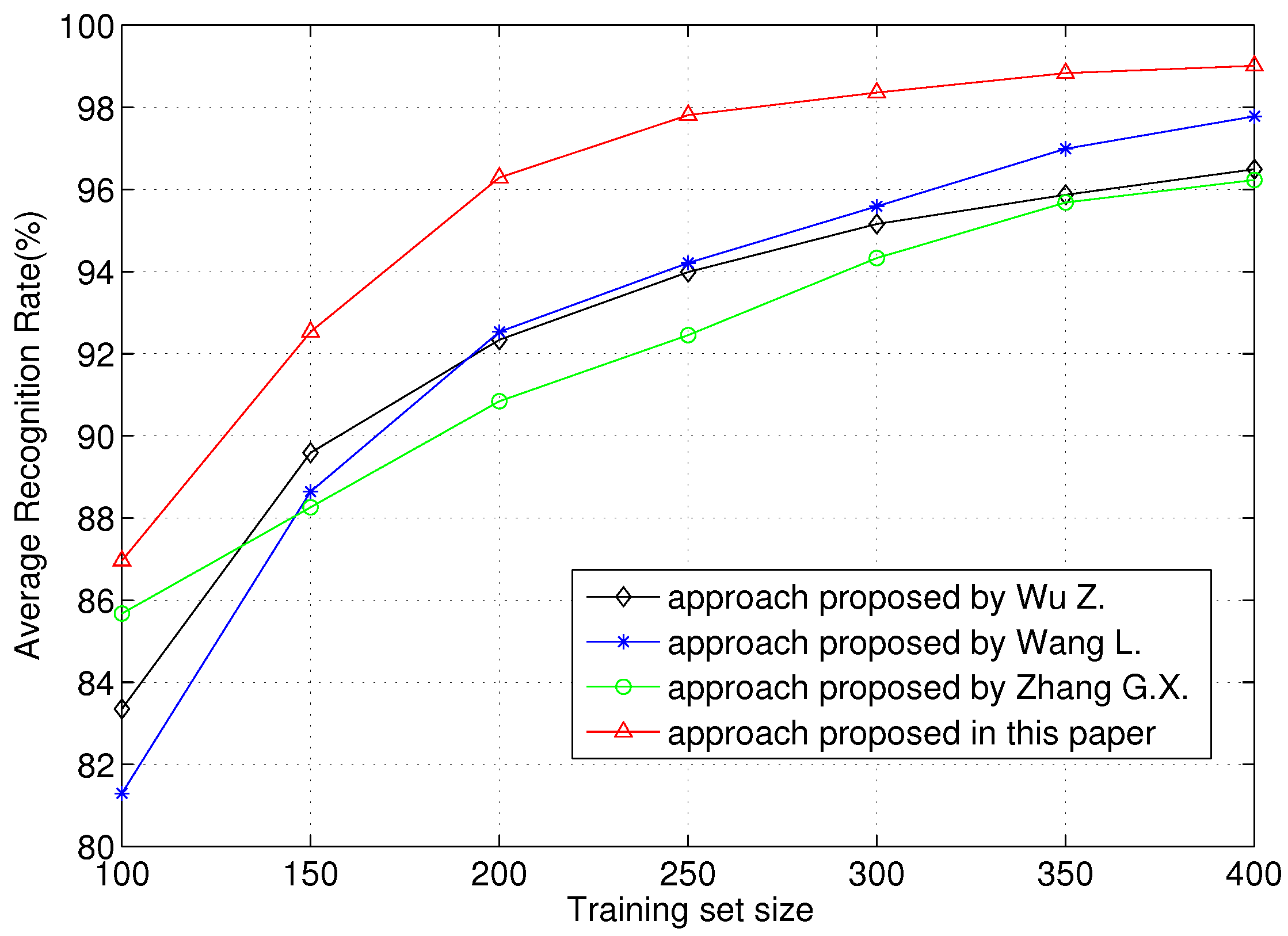

2. Radar Emitter Recognition System

2.1. System Model

- time-frequency analysis,

- feature extraction,

- classification.

2.2. Time-Frequency Analysis of Radar Emitter Signals

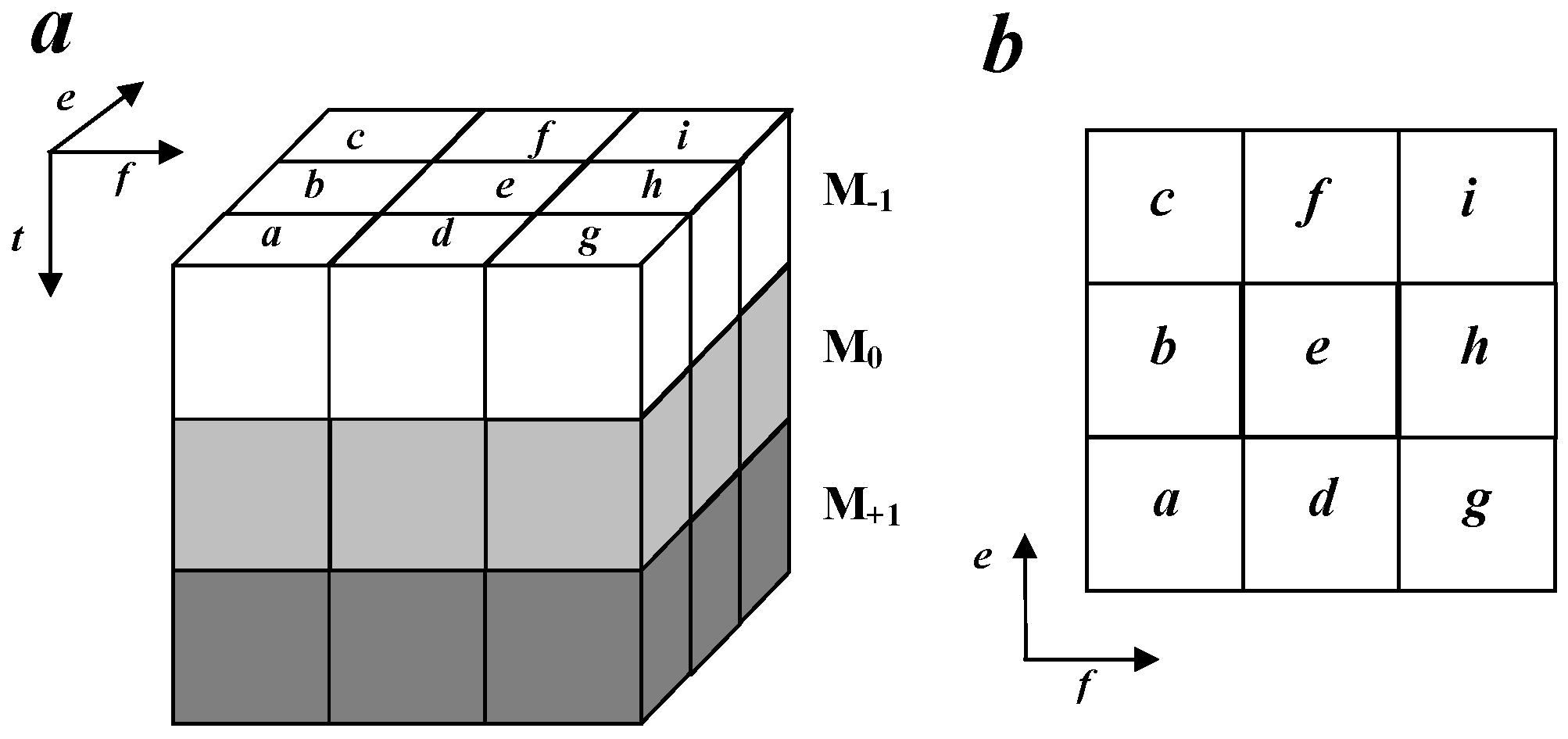

2.3. Feature Extraction

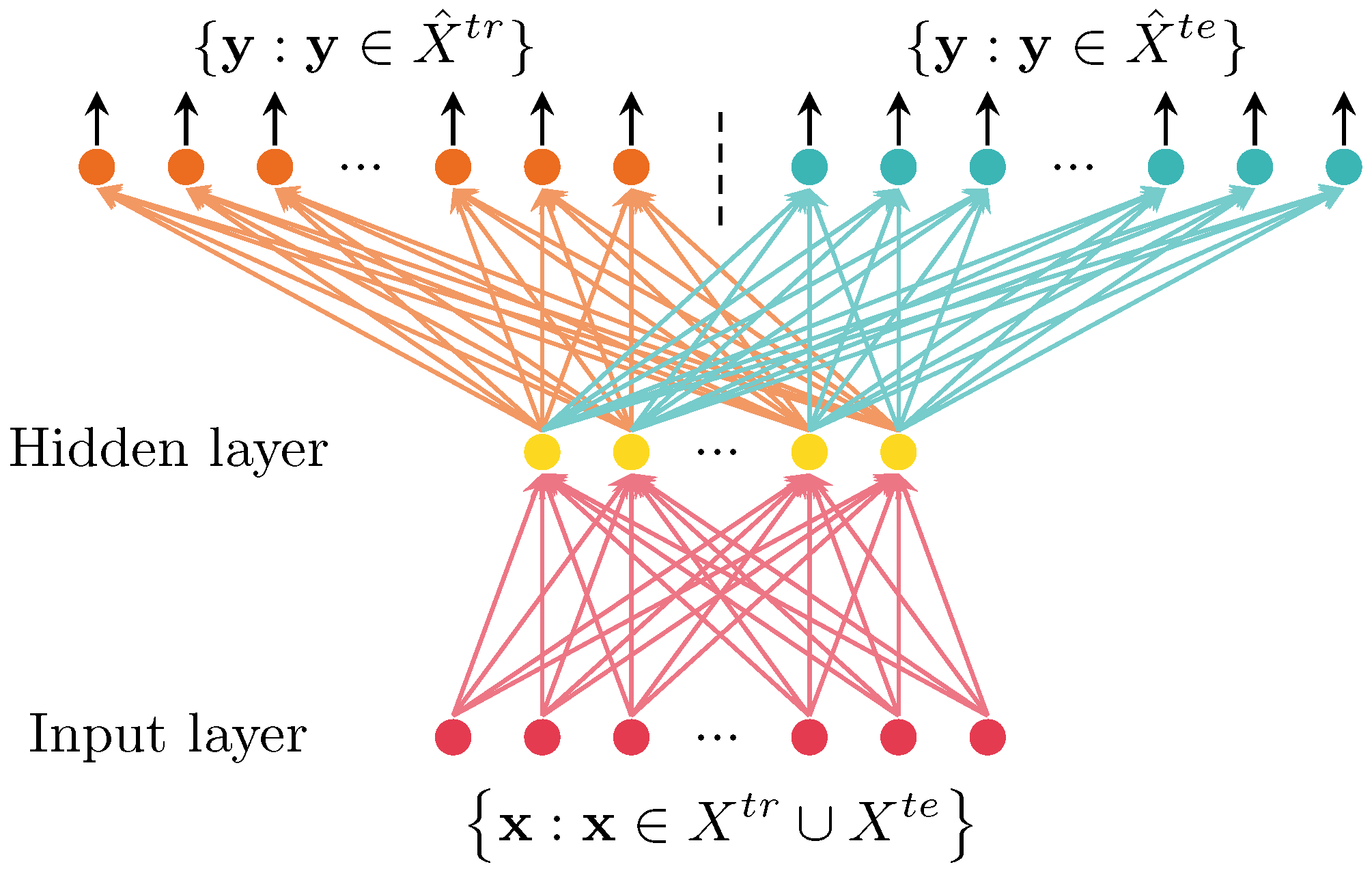

2.4. Feature Learning Based on Transfer Learning

2.5. Classification Using RVM

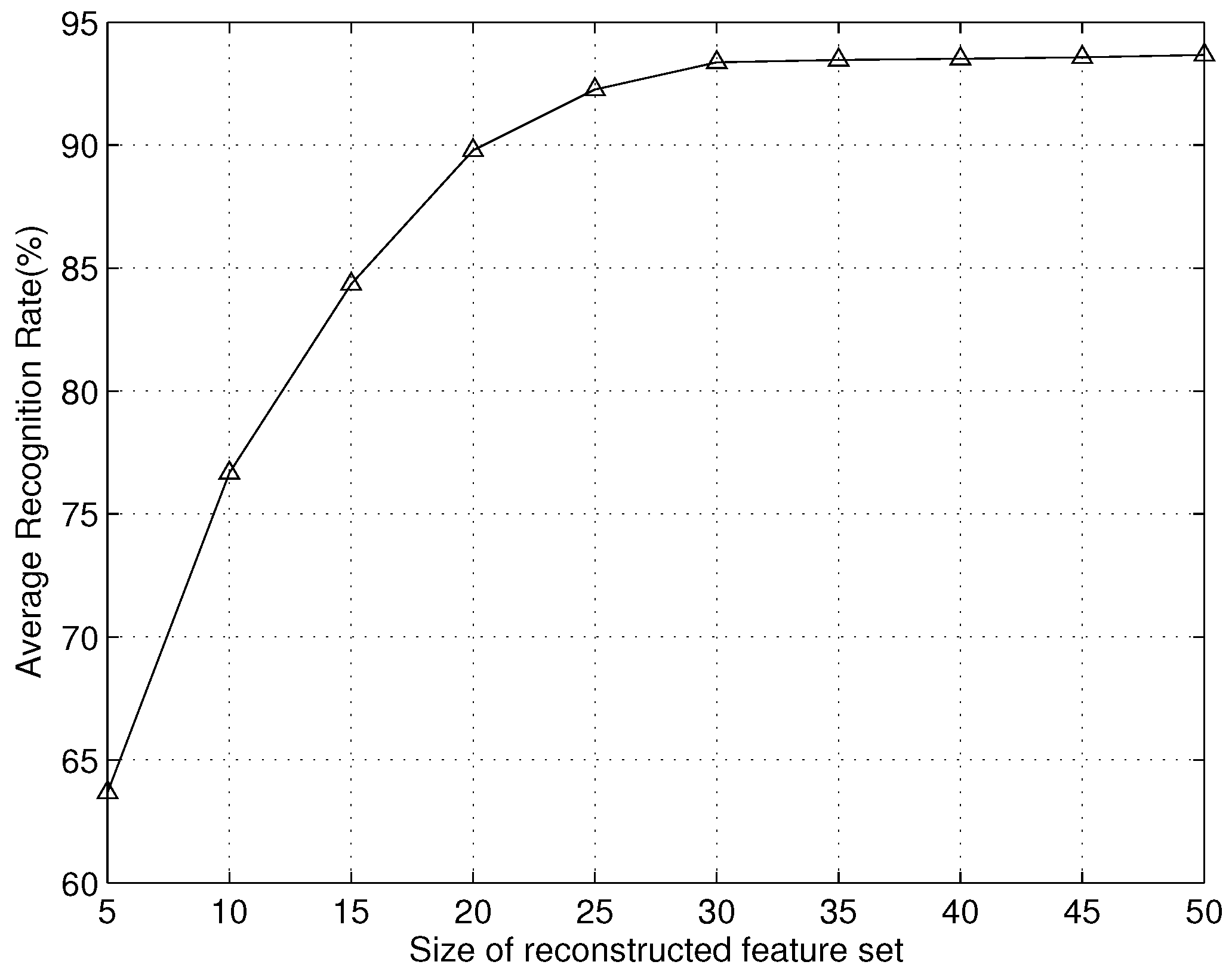

3. Results and Discussion

4. Conclusions

Acknowledgments

Author Contributions

Conflicts of Interest

| No. | Modulation | RF (MHz) | PW (μs) | FTR (MHz/μs) / CS |
|---|---|---|---|---|
| 1 | LFM | [4890, 5050], [5240, 5370], [5510, 5630] | [0.6, 1.2] | 7.8 |
| 2 | Mono-pulse | [5010, 5220], [5350, 5510] | [0.2, 0.5] | - |
| 3 | BPSK | [5260, 5550] | [0.3, 0.7] | Barker (7) |
| 4 | QPSK | [5410, 5510], [5630, 5680] | [0.6, 1.1] | Frank (16) |
| 5 | LFM | [5290, 5580] | [0.3, 0.6] | 0.1 |
| 6 | QPSK | [5500, 5620], [5660, 5730] | [1.0, 1.4] | Frank (16) |
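
The emitter parameters tabulated above could be encoded for a simulation roughly as follows. This is only a minimal sketch, not the authors' simulation code: the names `Emitter`, `EMITTERS`, and `sample_pulse` are hypothetical, and drawing RF and PW uniformly from the listed intervals is an assumption, since the table gives only the ranges.

```python
import random
from dataclasses import dataclass
from typing import Optional, Tuple

Band = Tuple[float, float]  # closed interval (low, high)


@dataclass(frozen=True)
class Emitter:
    """One table row (hypothetical container, not from the paper)."""
    number: int
    modulation: str
    rf_bands_mhz: Tuple[Band, ...]   # one or more RF intervals, in MHz
    pw_range_us: Band                # pulse-width interval, in microseconds
    ftr_or_code: Optional[str]       # last table column, kept verbatim


# Values copied from the table; "-" in the table is stored as None.
EMITTERS = (
    Emitter(1, "LFM", ((4890, 5050), (5240, 5370), (5510, 5630)), (0.6, 1.2), "7.8"),
    Emitter(2, "Mono-pulse", ((5010, 5220), (5350, 5510)), (0.2, 0.5), None),
    Emitter(3, "BPSK", ((5260, 5550),), (0.3, 0.7), "Barker (7)"),
    Emitter(4, "QPSK", ((5410, 5510), (5630, 5680)), (0.6, 1.1), "Frank (16)"),
    Emitter(5, "LFM", ((5290, 5580),), (0.3, 0.6), "0.1"),
    Emitter(6, "QPSK", ((5500, 5620), (5660, 5730)), (1.0, 1.4), "Frank (16)"),
)


def sample_pulse(emitter: Emitter, rng: random.Random) -> Tuple[float, float]:
    """Draw one (RF in MHz, PW in us) pair for an emitter.

    Assumption: a band is picked at random, then RF and PW are drawn
    uniformly from their intervals. The source does not state the
    sampling distribution.
    """
    low, high = rng.choice(emitter.rf_bands_mhz)
    rf_mhz = rng.uniform(low, high)
    pw_us = rng.uniform(*emitter.pw_range_us)
    return rf_mhz, pw_us


if __name__ == "__main__":
    rng = random.Random(0)  # fixed seed so repeated runs match
    for emitter in EMITTERS:
        rf, pw = sample_pulse(emitter, rng)
        print(f"emitter {emitter.number} ({emitter.modulation}): "
              f"RF={rf:.1f} MHz, PW={pw:.2f} us")
```

Several RF intervals in the table overlap between emitters (for example, emitters 3 and 5), so a sampler like this can produce pulses from different classes with similar RF and PW values.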

© 2016 by the authors; licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons by Attribution (CC-BY) license (http://creativecommons.org/licenses/by/4.0/).

Yang, Z.; Qiu, W.; Sun, H.; Nallanathan, A. Robust Radar Emitter Recognition Based on the Three-Dimensional Distribution Feature and Transfer Learning. Sensors 2016, 16, 289. https://doi.org/10.3390/s16030289
