Real-time Imaging Orientation Determination System to Verify Imaging Polarization Navigation Algorithm

Abstract

1. Introduction

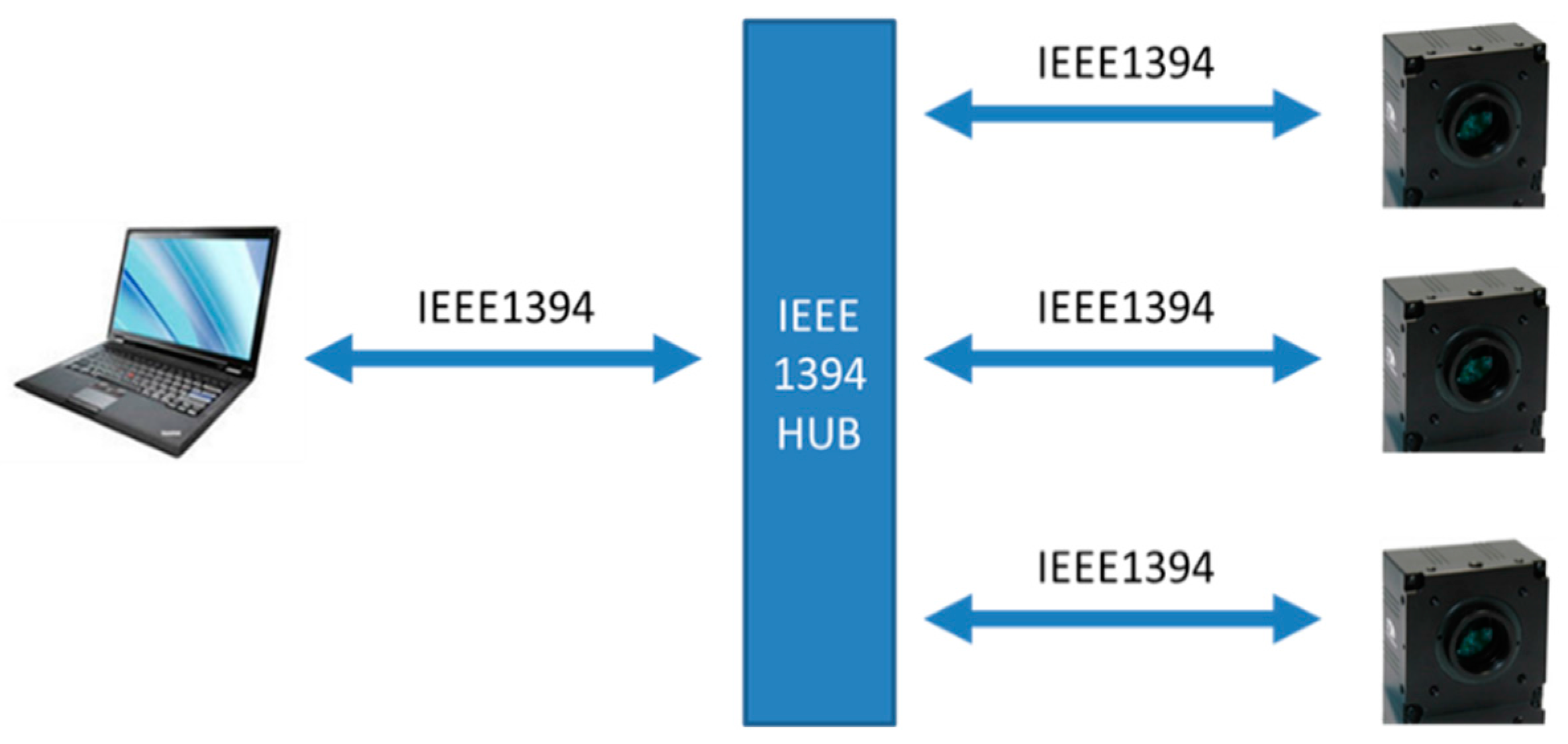

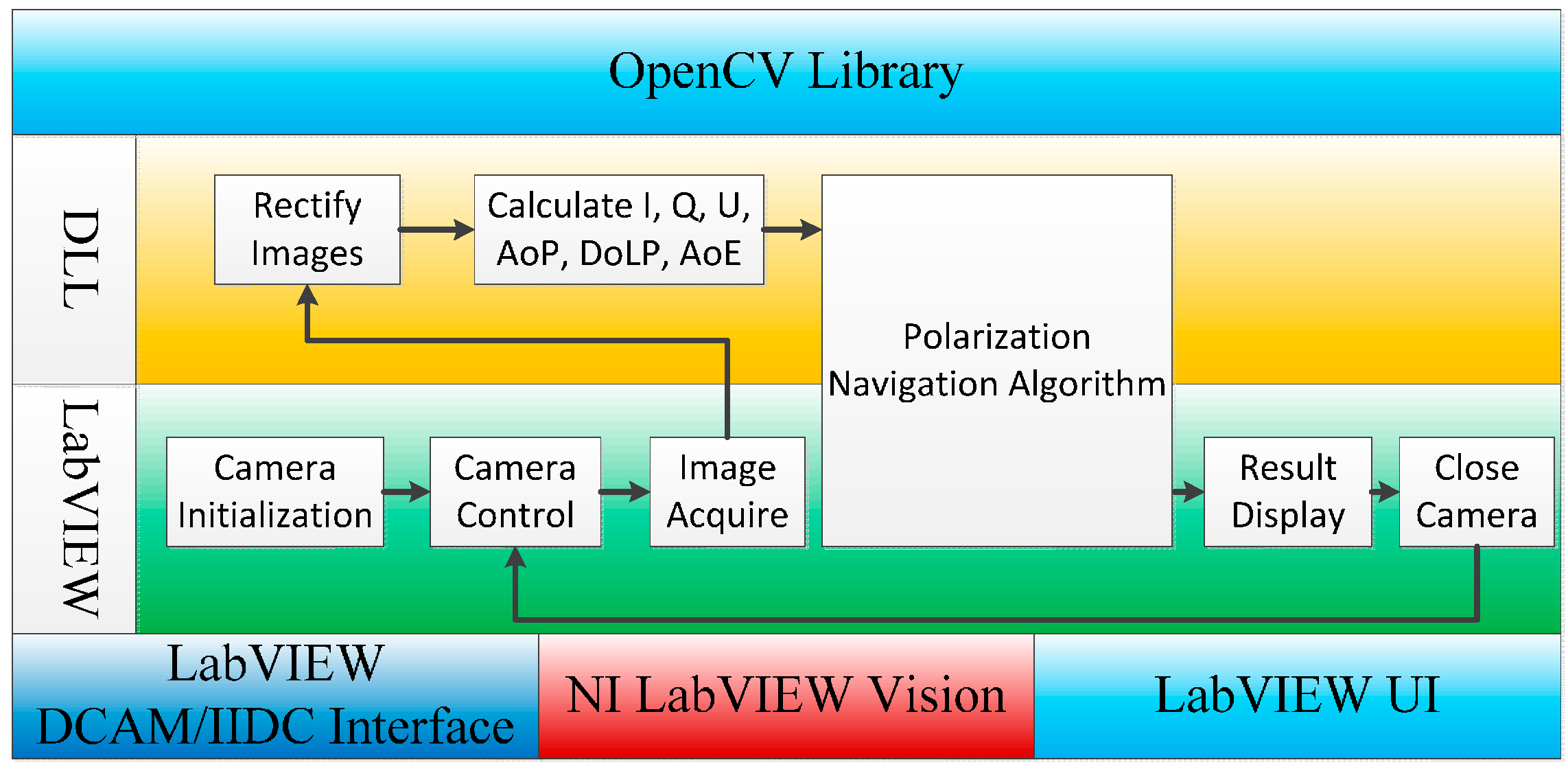

2. Fundamentals of Instrument Description

2.1. Principle of the System

2.2. Structure of the System

3. Calibration

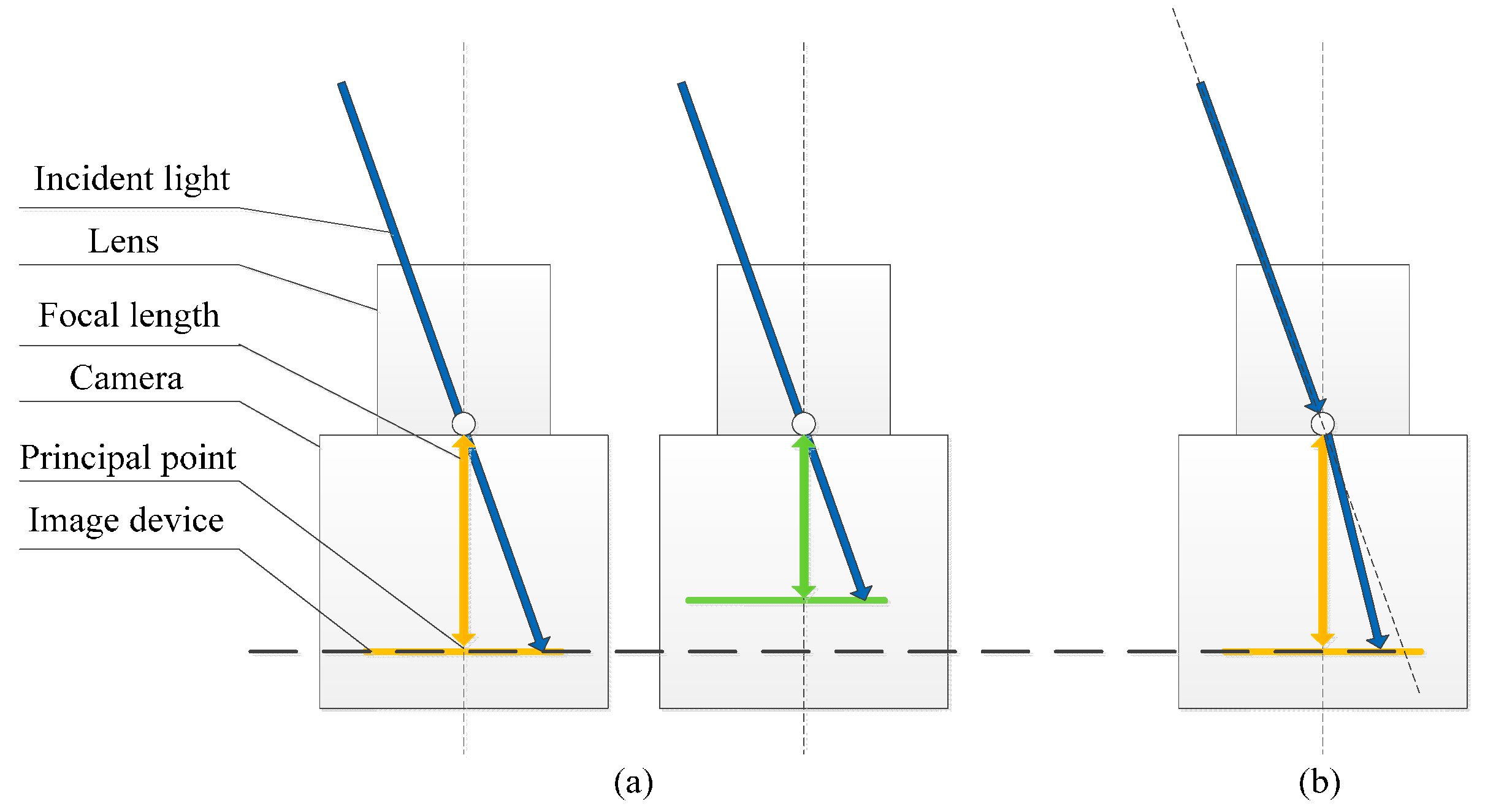

3.1. Calibration of Camera Parameters

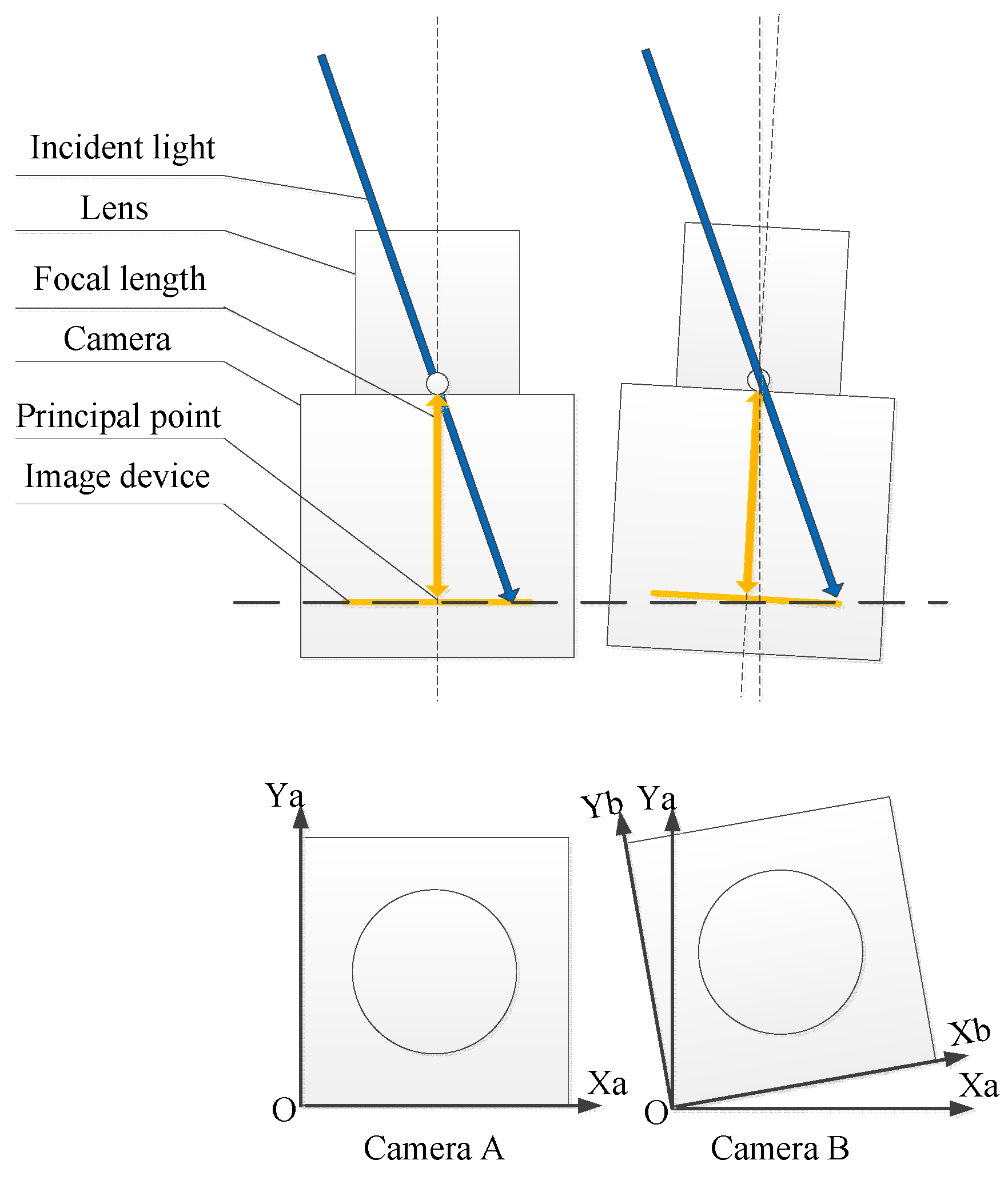

3.2. Calibration of Inconsistency of Cameras

3.3. Correction

4. Polarization Navigation Algorithm

5. Experimental Results

5.1. Calibration Results

| | Camera A | Camera B | Camera C |
|---|---|---|---|
| Intrinsic parameters | | | |
| Principal point (px) | (616.68, 510.45) | (651.25, 560.96) | (643.76, 525.58) |
| Focal length (px) | (1622.43, 1621.89) | (1619.19, 1619.91) | (1616.81, 1616.49) |
| Distortion coefficients | [−0.104 0.125] | [−0.091 0.059] | [−0.092 0.032] |
| Extrinsic parameters | [0 0 0] | [−0.014 0.013 −0.002] | [−0.014 0.015 0.002] |
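The intrinsic parameters in the table describe how each camera maps a point to pixel coordinates. The following is a minimal sketch for Camera A, assuming a pinhole model with no skew and assuming the two distortion coefficients are the radial terms k1 and k2 applied to normalized image coordinates (the table itself does not state the distortion model). The function `project` and the constant names are illustrative only, not part of the original system.

```python
# Camera A intrinsics from the calibration table (pixels).
FX, FY = 1622.43, 1621.89      # focal lengths
CX, CY = 616.68, 510.45        # principal point
K1, K2 = -0.104, 0.125         # ASSUMED to be radial coefficients k1, k2


def project(point_cam):
    """Project a 3-D point given in camera coordinates to pixel coordinates.

    Assumes a pinhole model with two-term radial distortion and no skew
    or tangential distortion (hypothetical model choice).
    """
    x, y, z = point_cam
    xn, yn = x / z, y / z                   # normalized image coordinates
    r2 = xn * xn + yn * yn                  # squared radial distance
    scale = 1.0 + K1 * r2 + K2 * r2 * r2    # radial distortion factor
    u = FX * xn * scale + CX
    v = FY * yn * scale + CY
    return u, v


print(project((0.0, 0.0, 1.0)))   # a point on the optical axis
print(project((0.1, 0.0, 1.0)))   # a point off-axis along x
```

A point on the optical axis lands exactly on the principal point, while an off-axis point is pulled slightly inward because k1 is negative under this assumed model.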

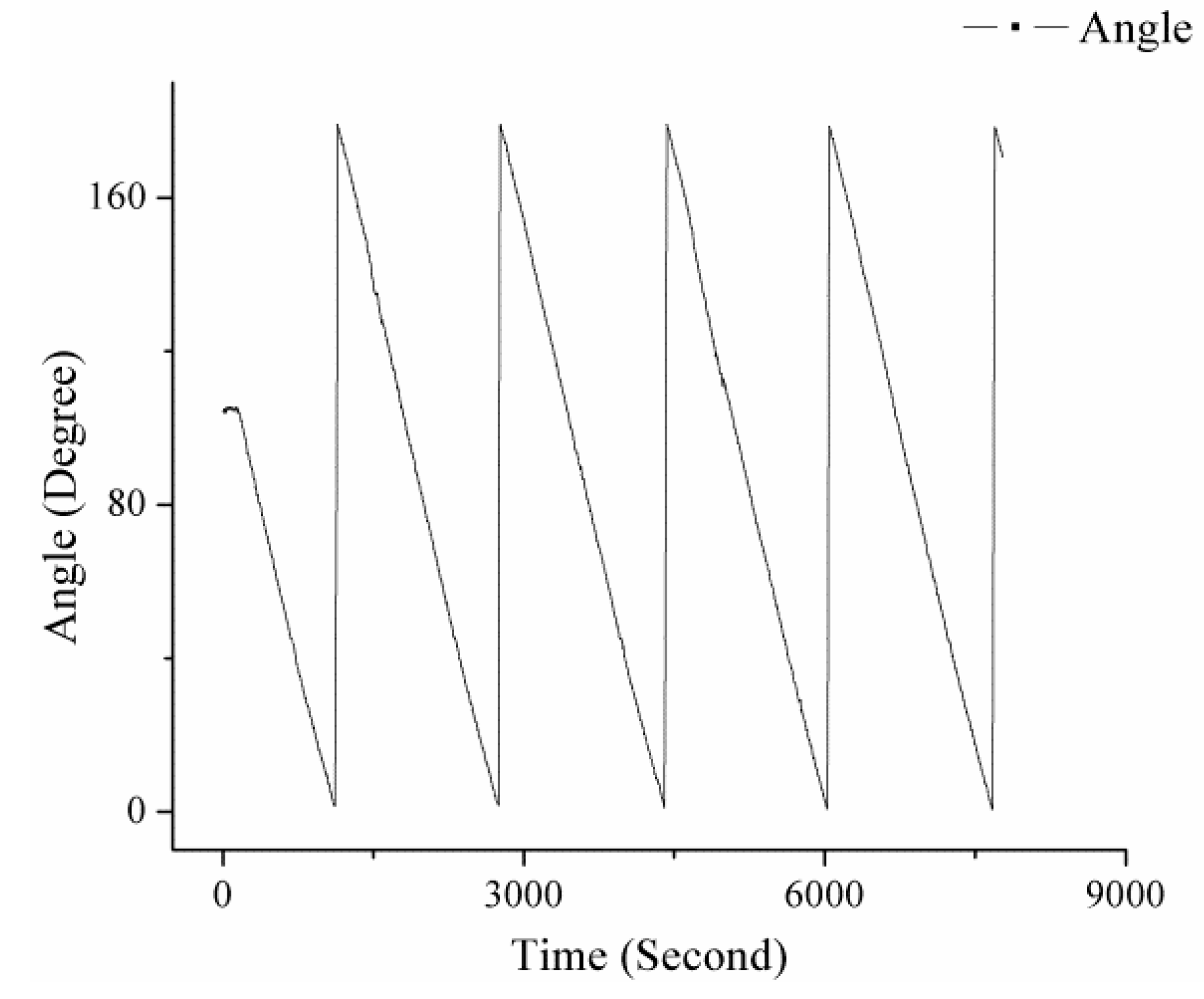

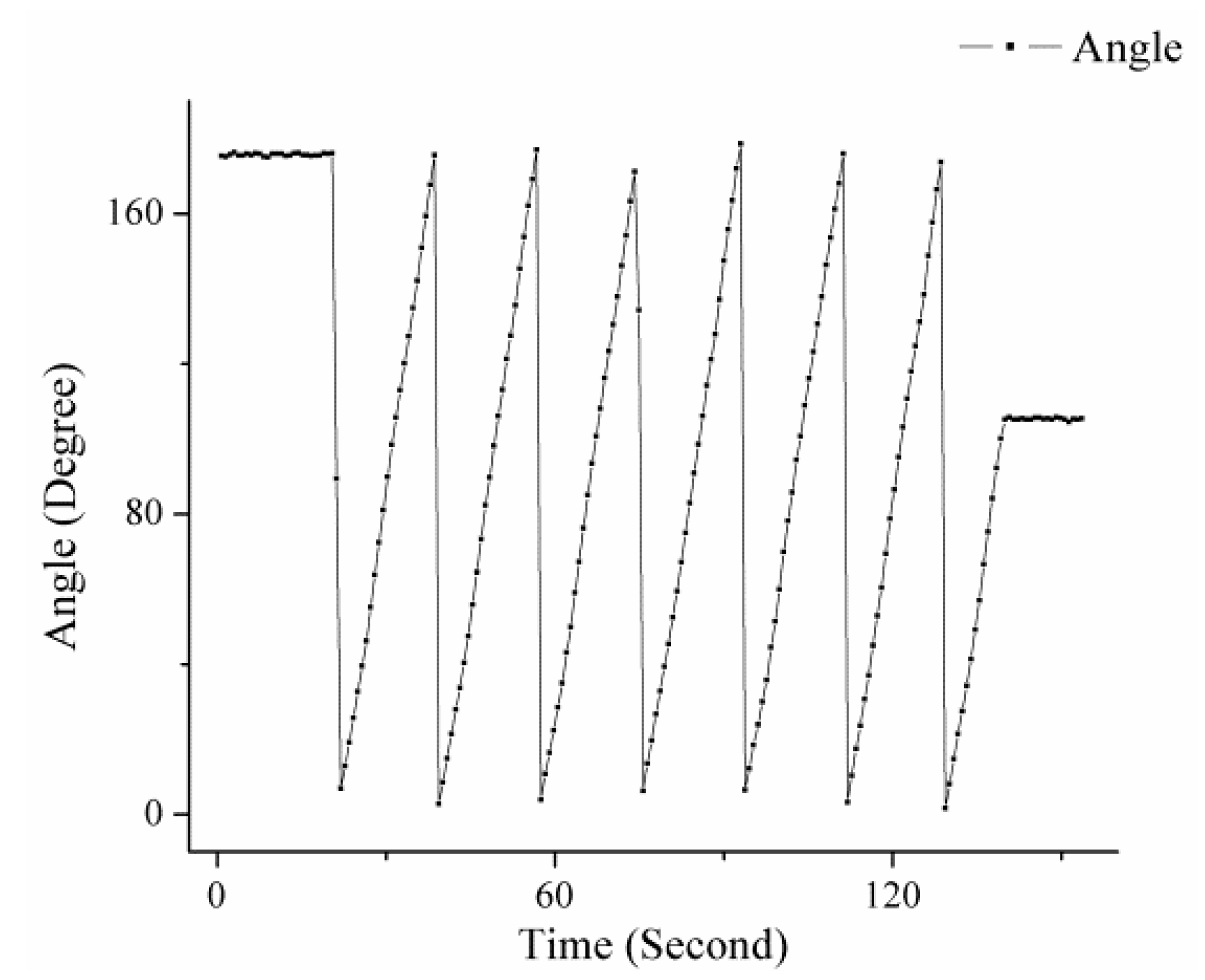
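The extrinsic parameters give the orientation of cameras B and C relative to Camera A. If these are Rodrigues rotation vectors in radians (an assumption, since the table states neither units nor convention), then the norm of each vector is the misalignment angle. The sketch below computes that angle along with the corresponding rotation matrix using R = I + sin(t) K + (1 − cos(t)) K². The last component of Camera C's vector may have lost a sign during extraction, but this does not affect the angle. The names `rotation_angle_deg`, `rodrigues_to_matrix`, and `extrinsics` are hypothetical.

```python
import math


def rotation_angle_deg(rvec):
    """Rotation angle in degrees for a rotation vector in radians (its norm)."""
    return math.degrees(math.sqrt(sum(c * c for c in rvec)))


def rodrigues_to_matrix(rvec):
    """Convert a rotation vector to a 3x3 rotation matrix (Rodrigues formula)."""
    t = math.sqrt(sum(c * c for c in rvec))
    if t < 1e-12:                       # zero rotation: identity matrix
        return [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
    kx, ky, kz = (c / t for c in rvec)  # unit rotation axis
    K = [[0.0, -kz, ky], [kz, 0.0, -kx], [-ky, kx, 0.0]]  # skew-symmetric
    K2 = [[sum(K[i][m] * K[m][j] for m in range(3)) for j in range(3)]
          for i in range(3)]
    s, c1 = math.sin(t), 1.0 - math.cos(t)
    return [[(1.0 if i == j else 0.0) + s * K[i][j] + c1 * K2[i][j]
             for j in range(3)] for i in range(3)]


# Extrinsic vectors from the calibration table, ASSUMED to be radians.
extrinsics = {
    "A": (0.0, 0.0, 0.0),
    "B": (-0.014, 0.013, -0.002),
    "C": (-0.014, 0.015, 0.002),
}
for name, rvec in extrinsics.items():
    print(name, round(rotation_angle_deg(rvec), 3))
```

Under these assumptions, cameras B and C would be rotated by roughly 1.10° and 1.18° relative to Camera A.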

5.2. Orientation Experiment

| Index | Group 1 | Group 2 | Group 3 | Group 4 | Group 5 | Group 6 |
|---|---|---|---|---|---|---|
| 1 | 10.480 | 25.150 | 39.738 | 53.545 | 68.600 | 84.390 |
| 2 | 10.520 | 25.325 | 39.394 | 53.535 | 68.707 | 84.198 |
| 3 | 10.413 | 25.350 | 39.621 | 53.442 | 68.779 | 84.080 |
| 4 | 10.692 | 25.325 | 39.629 | 53.532 | 68.750 | 83.950 |
| 5 | 10.660 | 25.467 | 39.358 | 53.250 | 68.850 | 83.839 |
| 6 | 10.717 | 25.375 | 39.439 | 53.324 | 69.040 | 84.105 |
| 7 | 10.600 | 24.950 | 39.369 | 53.448 | 68.792 | 83.867 |
| 8 | 10.600 | 25.517 | 39.621 | 53.313 | 68.667 | 84.242 |
| 9 | 10.717 | 25.167 | 39.425 | 53.455 | 68.808 | 84.023 |
| 10 | 10.530 | 25.183 | 39.550 | 53.332 | 68.575 | 84.077 |
| 11 | 10.660 | 25.400 | 39.421 | 53.362 | 67.653 | 84.050 |
| 12 | 10.575 | 25.183 | 39.330 | 53.593 | 68.418 | 84.183 |
| 13 | 10.640 | 25.325 | 38.983 | 53.513 | 68.428 | 84.196 |
| 14 | 10.520 | 25.350 | 39.075 | 53.543 | 68.535 | 84.215 |
| Mean | 10.595 | 25.291 | 39.425 | 53.442 | 68.614 | 84.101 |
| σ (population std. dev.) | 0.089 | 0.143 | 0.201 | 0.104 | 0.313 | 0.146 |
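The Statistics rows can be checked directly against the 14 readings in each column. The sketch below recomputes the mean and standard deviation for Group 1. The angles are presumably in degrees, although the table does not say so, and the helper name `mean_and_sigma` is hypothetical.

```python
import math

# Group 1 readings from the orientation table (14 measurements).
group1 = [10.480, 10.520, 10.413, 10.692, 10.660, 10.717, 10.600,
          10.600, 10.717, 10.530, 10.660, 10.575, 10.640, 10.520]


def mean_and_sigma(values):
    """Return the arithmetic mean and the population standard deviation
    (the sum of squared deviations divided by n, not n - 1)."""
    n = len(values)
    mu = sum(values) / n
    var = sum((x - mu) ** 2 for x in values) / n
    return mu, math.sqrt(var)


mu, sigma = mean_and_sigma(group1)
print(f"mean = {mu:.3f}, sigma = {sigma:.3f}")
```

This prints a mean of 10.595 and a σ of 0.089, matching the table. Dividing by n − 1 instead gives about 0.093, which does not match. This suggests the tabulated spread is a population standard deviation.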

| Index | Group 7 | Group 8 | Group 9 | Group 10 | Group 11 | Group 12 |
|---|---|---|---|---|---|---|
| 1 | 99.691 | 115.710 | 129.540 | 141.860 | 156.760 | 171.980 |
| 2 | 99.733 | 115.680 | 129.710 | 141.810 | 156.640 | 172.070 |
| 3 | 99.743 | 115.520 | 129.770 | 141.970 | 156.710 | 171.850 |
| 4 | 99.811 | 115.670 | 129.630 | 141.790 | 156.820 | 171.840 |
| 5 | 99.871 | 115.560 | 129.590 | 141.690 | 156.780 | 171.920 |
| 6 | 99.647 | 115.640 | 129.530 | 141.780 | 156.760 | 171.880 |
| 7 | 99.797 | 115.790 | 129.660 | 141.830 | 156.600 | 172.040 |
| 8 | 99.629 | 115.810 | 129.560 | 141.820 | 156.810 | 172.060 |
| 9 | 99.546 | 115.990 | 129.540 | 141.850 | 156.530 | 172.020 |
| 10 | 99.664 | 115.630 | 129.800 | 141.790 | 156.580 | 171.920 |
| 11 | 99.648 | 115.750 | 129.490 | 141.730 | 156.440 | 171.910 |
| 12 | 99.763 | 115.760 | 129.800 | 141.900 | 156.660 | 171.960 |
| 13 | 99.586 | 115.680 | 129.650 | 141.850 | 156.720 | 171.750 |
| 14 | 99.708 | 115.780 | 129.990 | 141.870 | 156.640 | 171.820 |
| Mean | 99.703 | 115.712 | 129.661 | 141.824 | 156.675 | 171.930 |
| σ (population std. dev.) | 0.087 | 0.112 | 0.134 | 0.067 | 0.107 | 0.093 |

6. Conclusions and Outlooks

Acknowledgments

Author Contributions

Conflicts of Interest


© 2016 by the authors; licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons by Attribution (CC-BY) license (http://creativecommons.org/licenses/by/4.0/).


Lu, H.; Zhao, K.; Wang, X.; You, Z.; Huang, K. Real-time Imaging Orientation Determination System to Verify Imaging Polarization Navigation Algorithm. Sensors 2016, 16, 144. https://doi.org/10.3390/s16020144