1. Introduction

With rapid economic development, vehicle ownership worldwide has been increasing in recent years. Unfortunately, in addition to increasingly severe road congestion, the growing number of vehicles poses a threat to traffic safety and social security. Statistics released by the Ministry of Public Security show that there were 283 million vehicles and 335 million drivers in China at the end of March 2016 [1]. What is worse, about 60 thousand people die and over 200 thousand people are injured in traffic accidents every year, and more than ninety percent of fatal accidents are caused by offensive driving behavior [2]. Under some circumstances, cautious drivers suffer the consequences of the actions of those who do not take the responsibility of driving seriously, and even of those who drive aggressively for thrills. To admonish aggressive drivers and eliminate this phenomenon, many articles [3,4,5,6,7] have discussed and emphasized the recognition of typical driving behaviors. Recognizing drivers’ behaviors (both normal and aggressive), recording their driving patterns and feeding this information back to the drivers themselves or to the relevant departments can help to promote safer driving, reduce traffic accidents and contribute to social safety.

Automobile manufacturers are installing advanced driver assistance systems (ADAS) in some high-end cars with the purpose of balancing safety and efficiency in road traffic [8]. An ADAS mainly consists of an electronic stability program (ESP), an adaptive cruise control system and a lane departure warning (LDW) system [9]. The relatively high cost of each part limits the deployment of ADAS in economy cars, which constitute the majority of cars on the road. In addition, ADAS concentrates on driving assistance rather than on evaluating driving style.

Similar to ADAS, the authors of [10,11] discussed and summarized several approaches that recognize driving behaviors and monitor drivers using hybrid signals (including signals sampled from video, microphones, GPS, and pressure and inertial sensors). When taken as inputs of driving behavior recognition systems [5,7], however, these signals are difficult to transmit and process, which increases computing complexity and affects system automation. Consequently, this information can be difficult to utilize [12]. What is more, it has been shown that the output signals of inertial sensors alone can meet the requirements of recognizing different driving behaviors [3,4,6,12,13,14].

Generally speaking, researchers obtain dynamic information about moving vehicles in two different ways: through the Controller Area Network (CAN) bus or through micro-electro-mechanical systems (MEMS). A CAN bus is a standard vehicular bus that facilitates communication between microcontrollers and devices without a host computer [15], and it carries all the necessary data to describe the state of a car [13]. Through the On-Board Diagnostic (OBD) port of a vehicle, people can obtain very limited access to the information on the CAN bus. In recent years, MEMS devices have grown rapidly in popularity, becoming smaller in size, lighter in weight and lower in energy consumption, which makes it possible to integrate different sensor units such as accelerometers, gyroscopes, magnetometers, temperature transmitters, GPS, and so on. Mounted in the cab, a MEMS system provides a way to obtain information on the moving car [13]. Motion sensors and smart phones are among the typical applications of MEMS systems.

Though a CAN bus carries all the vehicle signals, the information that can be extracted through an OBD port depends on knowledge of the private protocol of the particular type of car. Automobile manufacturers hesitate to release the private protocols of OBD ports to the public, mainly in consideration of intellectual property and vehicle security. In addition, some studies have revealed that many vehicle components can be accessed through OBD [16,17], which leaves potential for criminals to control the vehicle. Consequently, OBD products pose a threat to vehicle and human security to some extent. Furthermore, the work of [13] obtained worse performance using CAN bus data than using a portable device. Therefore, for driving behavior recognition, it is more appropriate to base the data collection platform on a MEMS system than on the OBD port and CAN bus.

With the proliferation of smart phones, exploiting their resources has become increasingly popular. In some cases, researchers utilized smart phones as sensor platforms and presented satisfying results [4,7,18]. Though smart phones and motion sensors both integrate MEMS systems, they perform differently in terms of driving behavior recognition. Smart phones are embedded with abundant sensors, most of which serve human-device interaction. When more computing resources are allocated to driving behavior recognition, smart phones may lag, which degrades the user experience. Also, different types of smart phones may be equipped with different MEMS systems and, as such, perform quite differently. Furthermore, drivers use their smart phones mainly for navigation and communication when driving, which can interrupt the recognition and feedback processes within the system. Utilizing motion sensors avoids such situations, since the integrated three-axis accelerometer, three-axis gyroscope and three-axis magnetometer are sufficient to identify different driving behaviors without redundant sensors occupying processor resources. Compared with smart phones, motion sensors are mostly low-cost, are dedicated to the task and attain higher accuracy in driving behavior recognition.

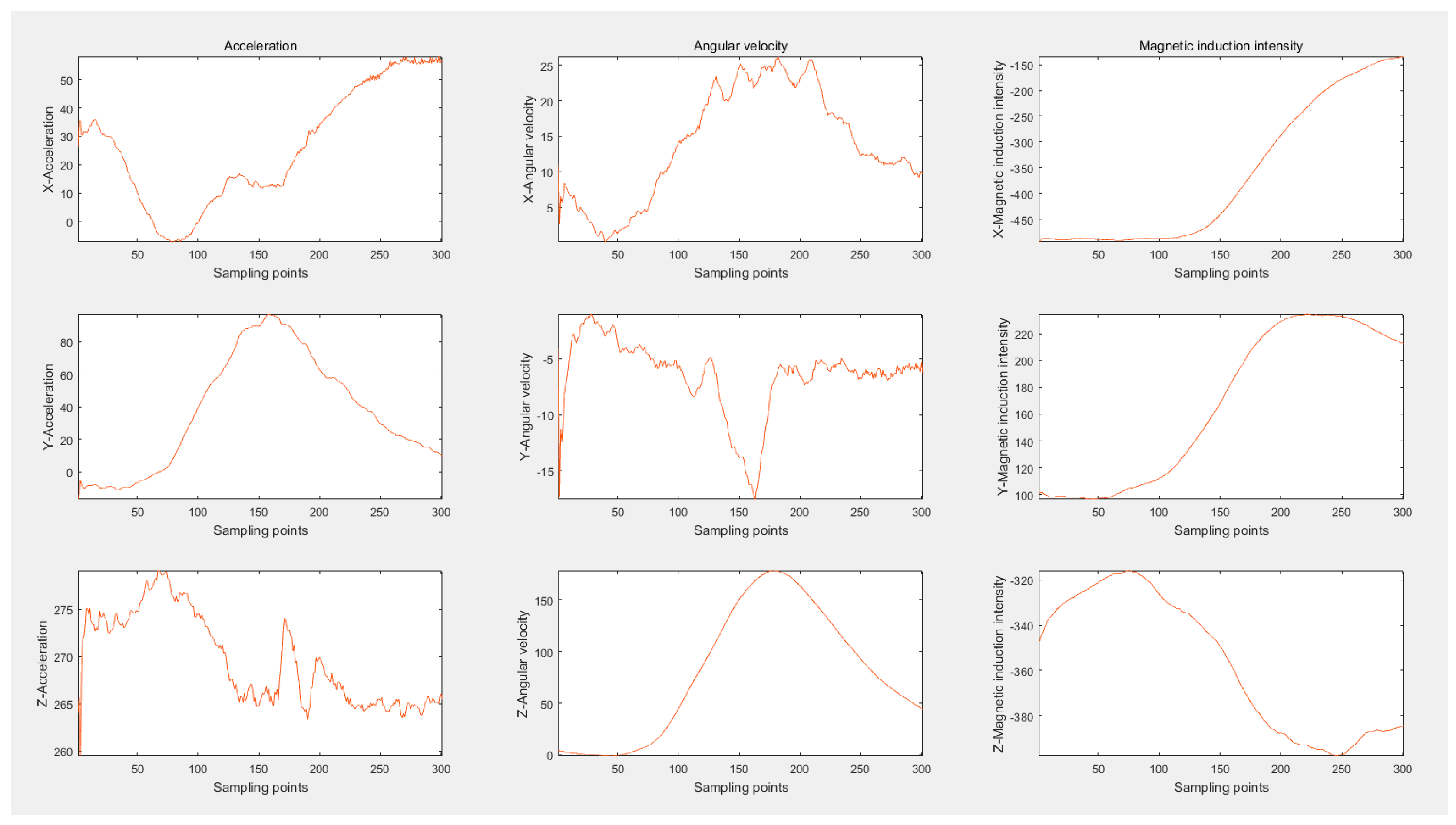

During motion, a vehicle can be treated as a rigid body. Then, the relevant theory relating to rigid body dynamics can be used to explain the change rule of the data (acceleration, angular velocity and magnetic induction intensity) collected by the motion sensors.

In prior works aiming at recognizing driving events and driving styles, researchers relied purely on machine learning methods to extract data features and perform classification [3,7,12,13]. However, these works omit theoretical analysis and treat the system as a black box, which obstructs further optimization and research. Our work addresses this problem by establishing a physical model that depicts a moving vehicle and reveals the data change of each axis. We utilize this physical model throughout all stages of the proposed system: removing noise from data using a Kalman filter, extracting valid data using an adaptive time window, extracting effective data features and classifying data using various classifiers (support vector machine, Bayes network, and so on). Grounding every stage in the physical model underpins the performance of the system.

In summary, our work makes the following contributions:

We propose a novel model-based driving behavior recognition system using motion sensors.

We build a physical model to describe the car moving process and reveal the change rule of the data collected by the motion sensors including a three-axis accelerometer, a three-axis gyroscope and a three-axis magnetometer.

Based on the physical model built, differently from the prior research, we eliminate the noise from data using a Kalman filter.

Based on the new physical model, we propose a novel and effective method to extract the valid data from the car moving process utilizing an adaptive time window.

Based on the new physical model, we extract features from the valid data to prepare for the classification.

Based on the built physical model, we classify and recognize different driving behaviors using statistics learning methods. The performance of different classifiers is analyzed and the best one is chosen.

The rest of this article is organized as follows. Section 2 introduces the related works in recognition of driving behaviors or driving styles. Section 3 presents the experimental environment and provides a systematic overview of our work. In Section 4, we present a new physical model which depicts the car moving process and explains the data change of each axis. In Section 5, we introduce the Kalman filter for noise elimination and propose a novel method to extract valid data using an adaptive time window, followed by feature extraction from the valid data based on the new physical model. The results of driving behavior recognition, the performance of various classifiers and the evaluation of the system are reported in Section 6. Conclusions and future works are discussed in the last section.

3. System Description

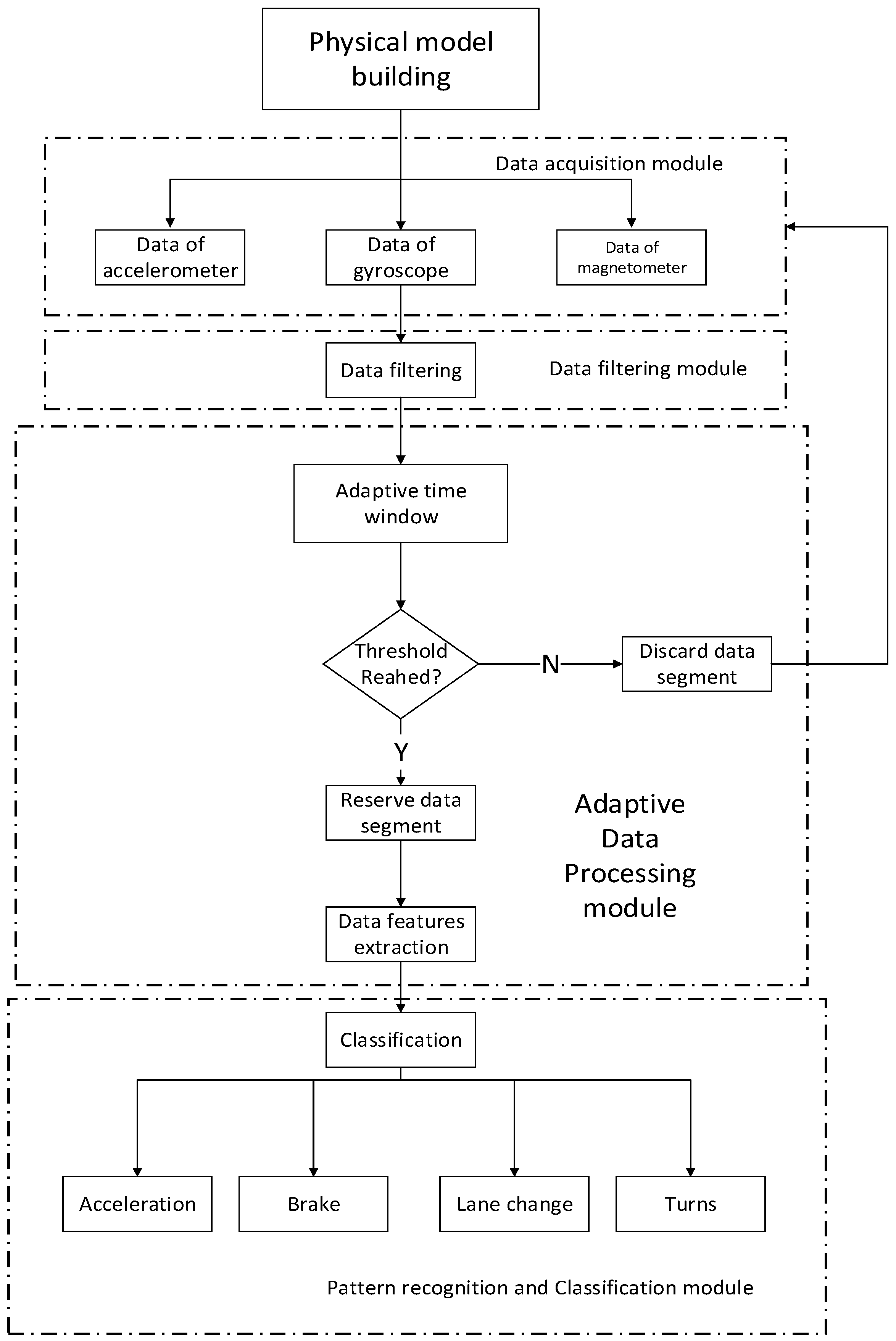

The proposed system consists of three components: the hardware part contains motion sensors and serves as the acquisition platform, the software part is designed to handle the acquired data, and the theoretical physical model provides guidance for the whole system.

The motion sensor platform used in our system is the iNEMO V2 developed by STMicroelectronics, and the data collector is installed in different cars. The sensors sample the data at a frequency of 50 Hz. The CPU module carried on this platform undertakes the task of computing and scheduling resources. Data acquisition is carried out within the iNEMO platform automatically, without using the resources of other devices. In addition, the data collected in the experiment is stored on SD cards and utilized for the research only, without compromising driver privacy and car safety.

The software part consists of the following components: data filtering module, adaptive data processing module and pattern recognition and classification module. Specifically, data filtering module shoulders the responsibility of eliminating noise from raw data. The adaptive data processing module is in charge of extracting valid data and then the feature extraction from it. Pattern recognition and classification module puts extracted features into classifiers to recognize different driving behaviors.

The theoretical support part combines the basic theory of rigid-body dynamics and the analysis of car in motion to build a physical model, deduce the data change rule of different driving behaviors and provide a theoretical basis for the system.

We devote ourselves to establishing a specialized, stable and credible driving behavior recognition system.

3.1. Experimental Setup and Environment

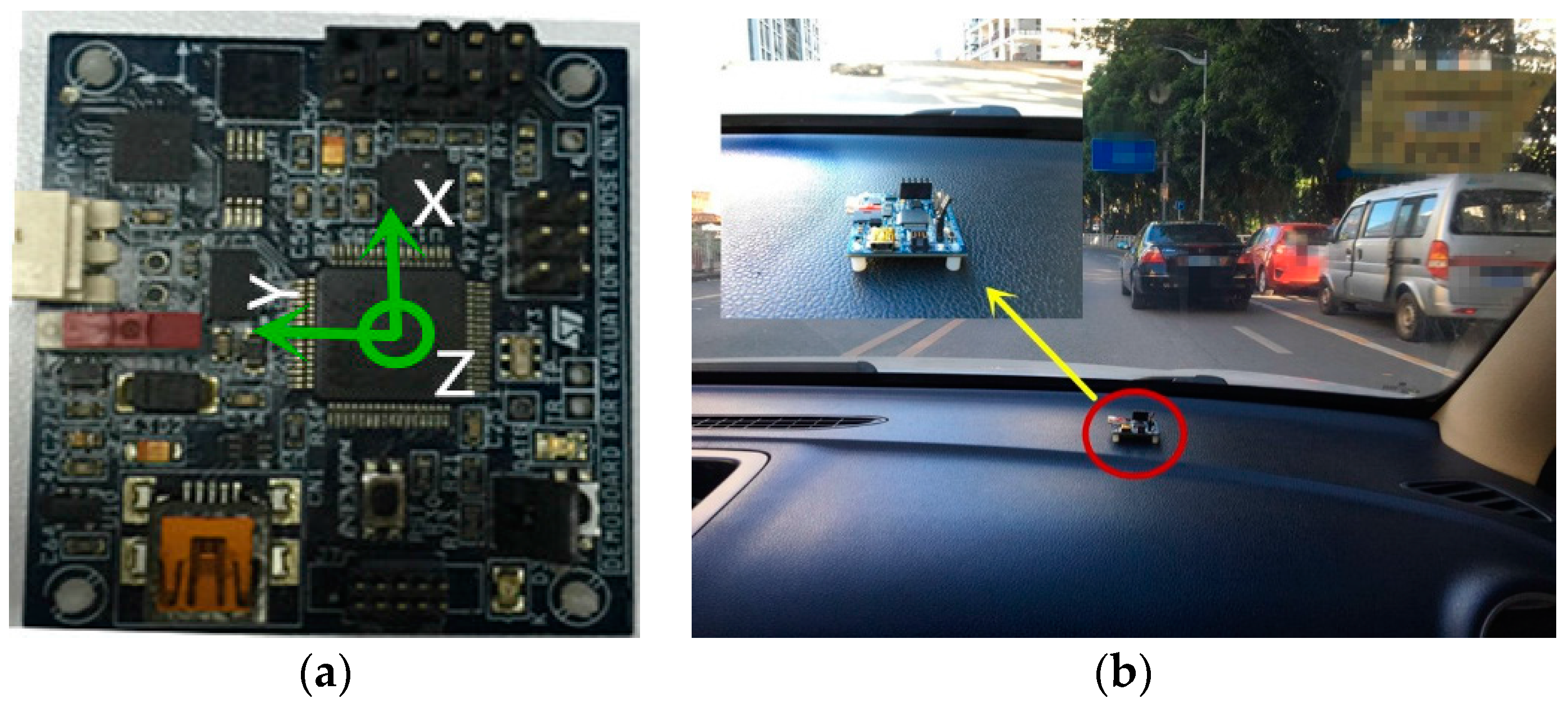

In the data acquisition process, the placement of the motion sensors, mainly the rotation and angle, will influence the outcome of the recognition system. In this experiment, the motion sensor is placed on the dashboard, parallel to the ground, with its X-axis pointing in the car's moving direction, the Y-axis coinciding with the car’s lateral direction and pointing to the left side, and the Z-axis pointing vertically upward. Figure 1a shows the axis orientation and Figure 1b displays the placement of the board. The reference coordinate system is the ENU (east, north and up) coordinate system.

In the process of driving, the total drive time is up to 20 h and the driving mileage is over 1200 km, covering many roads in Shenzhen, China. In future research, the driving time and mileage will be substantially longer. The route map covering part of the routes taken in the data collection process is displayed in Figure 2.

In the data collection process, cars move along the different roads marked in red in Figure 2. Without restrictions on time, speed or destination, and on the premise of observing traffic laws, drivers can maneuver the cars as they wish. Five drivers and five different cars contributed to the original data. The information about the cars used is given in Table 1.

These cars are used repeatedly and provide the corresponding datasets. Though being small in quantity, these five cars could represent most types of family cars and taxis on the market. In addition, for the driving behavior recognition system, we can consider the moving car as a rigid body or a particle. At the macroscopic level, the physical processes of moving cars are coincident. The data acquired by motion sensors is concerned only with car motion. Consequently, for different types of cars, motion sensors (the data acquisition platform) are universal.

3.2. System Overview

The proposed driving behavior recognition system is mainly composed of the following six segments, which form the research process.

Based on the theory of rigid body kinematics, we analyze the relationship between acceleration and linear velocity and that between linear velocity and angular velocity. Following the analysis concerning magnetic induction intensity variation, we build the physical model to depict the car moving process and reveal the change rule of data. We prepare a theoretical basis for the whole system.

We set up the motion sensor as shown in Figure 1 to gather nine-axis data of different motion states. We expand and refine the database constantly.

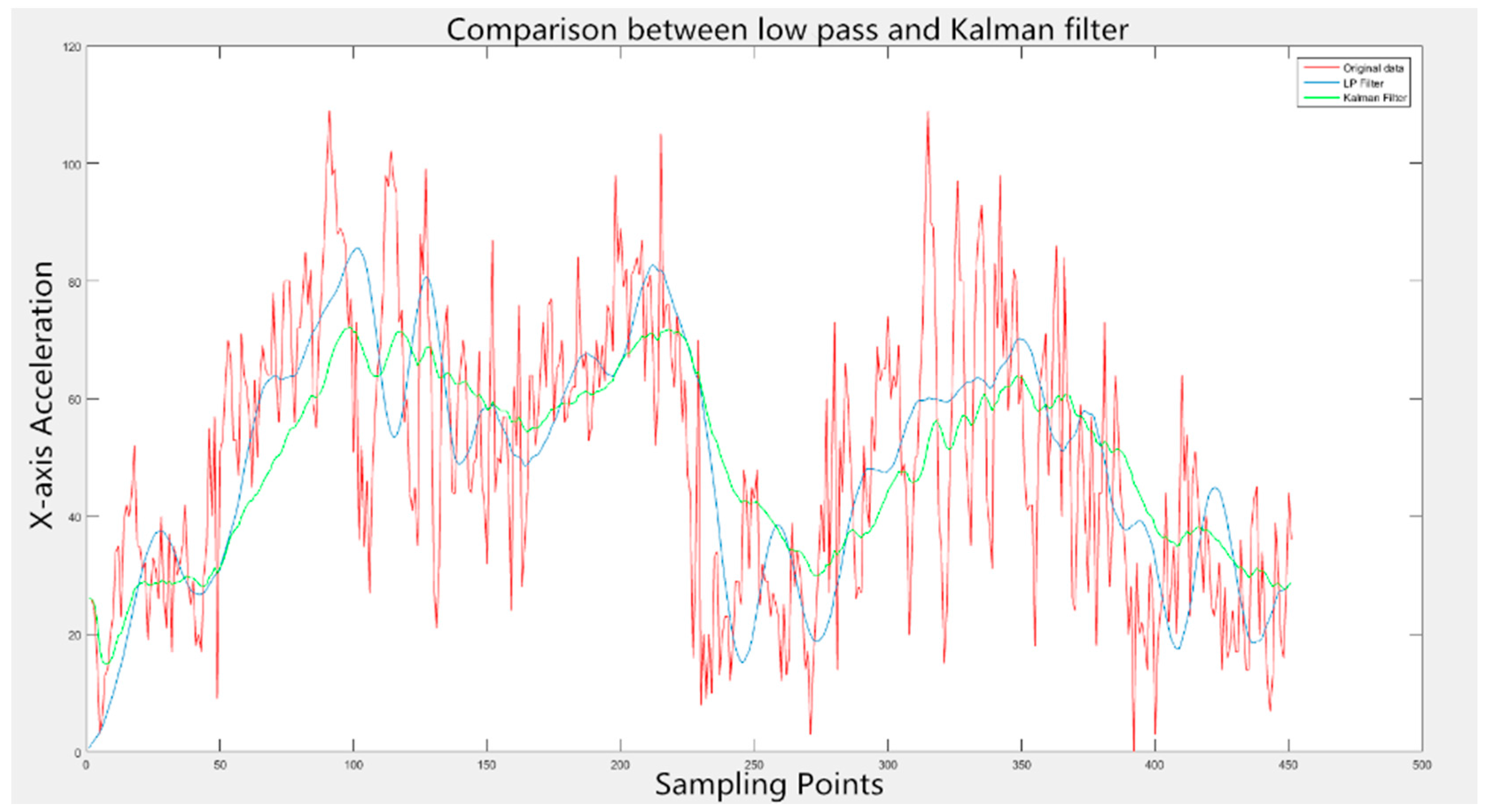

We analyze the components and characteristics of noise within the motion data and compare the performance in eliminating clutter of the low pass and Kalman filters. Based on the physical model built, we choose a more appropriate filter to avoid the negative effects on classification and recognition of driving behaviors.

Based on the new physical model, we extract the valid data (data segment denoting the driving behaviors, such as accelerating, turning and so on) from the long-time and irregular driving process. Specifically, we leverage the adaptive time window and the novel proposed methods to detect the start and end of valid data. The whole data is partitioned automatically, avoiding labelling data manually, which is more economical and efficient.

We extract the data feature vectors, mainly consisting of mean value, peak value, covariance and so on, from the valid data. Guided by the physical model or not, the performances of different feature sets are compared according to the criterion of classification accuracy. We choose the suitable features and prepare for the next step.

We analyze the application range and characteristics of different statistical learning methods. Then, we employ various classifiers to classify diverse driving behaviors. We analyze and evaluate the performance of different classifiers and choose the best one.
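The noise removal, valid-data extraction and feature extraction steps listed above can be sketched roughly as follows. This is only an illustration under stated assumptions, not the method of this work: the scalar constant-state Kalman filter, its variances, the fixed short-window energy threshold (standing in for the adaptive time window) and the function names `kalman_1d`, `find_segments` and `features` are all hypothetical choices, and the input is a synthetic single-axis signal.

```python
from statistics import mean

def kalman_1d(samples, process_var=0.01, meas_var=0.1):
    """Scalar Kalman filter with a constant-state model (assumed tuning)."""
    estimate, error = samples[0], 1.0
    out = []
    for z in samples:
        error += process_var                 # predict: uncertainty grows
        gain = error / (error + meas_var)    # Kalman gain
        estimate += gain * (z - estimate)    # correct with measurement z
        error *= (1.0 - gain)
        out.append(estimate)
    return out

def find_segments(signal, window=5, threshold=0.5, min_len=10):
    """Return (start, end) index pairs where short-window energy exceeds threshold."""
    segments, start = [], None
    for i in range(len(signal)):
        lo = max(0, i - window + 1)
        energy = mean(x * x for x in signal[lo:i + 1])
        if energy > threshold and start is None:
            start = i                        # energy rises: segment begins
        elif energy <= threshold and start is not None:
            if i - start >= min_len:         # drop very short bursts
                segments.append((start, i))
            start = None
    if start is not None and len(signal) - start >= min_len:
        segments.append((start, len(signal)))
    return segments

def features(segment):
    """Two of the simple statistics mentioned in the text: mean and peak value."""
    return {"mean": mean(segment), "peak": max(segment, key=abs)}

# Synthetic X-axis acceleration at 50 Hz: 2 s idle, 2 s braking at -2 m/s^2,
# 2 s idle, with a small alternating disturbance standing in for sensor noise.
raw = [(-2.0 if 100 <= i < 200 else 0.0) + 0.05 * (-1) ** i for i in range(300)]
filtered = kalman_1d(raw)
segments = find_segments(filtered)
for start, end in segments:
    print(start, end, features(filtered[start:end]))
```

On this synthetic input the detected segment brackets the braking interval with a short lag introduced by the filter, which is one reason the window boundaries in a real system would need to adapt to the signal rather than use a fixed threshold.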

Figure 3 illustrates the architecture of the proposed driving behavior recognition system.

Ignoring the influence of bad weather, accident black spots can be classified as follows: long straight roads, roads with a small curve radius and combinations of these conditions [23]. Long straight roads make drivers sleepy and cause visual fatigue, which induces unconscious acceleration and lane changes. Similarly, roads with a small curve radius are often accompanied by braking and turning. In the third case, a combination of different driving behaviors can always be observed. Considering the factors above, we choose the driving behaviors listed in Table 2 for the task of recognition.

Generally, driving behaviors to be detected are divided into two categories: normal behaviors and aggressive behaviors. So far, we consider seven specific maneuvers in each category and plan to increase the number gradually in our future work. The driving behaviors in Table 2 cover the most typical ones generated by different drivers in real traffic conditions. By recognizing these behaviors, we can evaluate the driving habits and styles of drivers and construct their archives, which is important in promoting cautious driving.

4. Establishment of Physical Model

The works [24,25,26] thoroughly studied the dynamic model or the state observation of vehicles. Relevant knowledge such as vehicle dynamics, different tire models, side-slip angles, velocity and acceleration is incorporated. In the area of driving behavior recognition, however, some of this knowledge can be redundant. When recognizing driving behaviors, we can take a moving car as a unit and ignore its inner structure. The theory of rigid body kinematics is adopted to establish the physical model.

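When vehicles are moving in real traffic conditions, their main behaviors include going straight, changing lanes and making turns. During these maneuvers, the acceleration and angular velocity of a vehicle change according to the rules of rigid body kinematics. Specifically, the relationships among acceleration, linear velocity and angular velocity can be represented as follows:

$$\mathbf{a} = \frac{d\mathbf{v}}{dt} \quad (1)$$

$$\mathbf{v} = \boldsymbol{\omega} \times \mathbf{r} \quad (2)$$

where $\mathbf{a}$ is the acceleration, $\mathbf{v}$ the linear velocity, $\boldsymbol{\omega}$ the angular velocity and $\mathbf{r}$ the turning radius vector. In the time domain, $\mathbf{a}$ and $\boldsymbol{\omega}$ can be decomposed separately into $(a_x, a_y, a_z)$ and $(\omega_x, \omega_y, \omega_z)$.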
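As a worked illustration of these kinematic relations, the sketch below assumes the usual rigid-body forms $\mathbf{a} = d\mathbf{v}/dt$ and $\mathbf{v} = \boldsymbol{\omega} \times \mathbf{r}$ and evaluates them for a car on a circular arc at constant angular velocity, in which case $d\mathbf{v}/dt = \boldsymbol{\omega} \times \mathbf{v}$. The numerical values (0.5 rad/s, 20 m) and the helper name `cross` are hypothetical.

```python
def cross(a, b):
    """Cross product of two 3-vectors given as tuples."""
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])

# Assumed values: rotation about the vertical axis, counterclockwise seen from above.
omega = (0.0, 0.0, 0.5)   # angular velocity in rad/s
r = (20.0, 0.0, 0.0)      # vector from the turn center to the car in m

v = cross(omega, r)       # linear velocity, tangent to the arc
a = cross(omega, v)       # dv/dt for constant omega: centripetal acceleration

print("v =", v)
print("a =", a)
```

The velocity comes out perpendicular to the radius and the acceleration points back toward the turn center with magnitude $\omega^2 r$, which is why a turn shows up on the lateral acceleration axis and the vertical angular velocity axis at the same time.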

Furthermore, the variation of the magnetic induction intensity measured by the motion sensors is related not only to the vehicle's behavior but also to its geographic position, because the distribution of the geomagnetic field determines the field intensity and direction at every place on the earth. Similarly, it can also be divided into three axis components, $(m_x, m_y, m_z)$. To account for the change rule of the data systematically, we first develop the physical model. Taking the Northern Hemisphere as an example, the physical model is portrayed in Figure 4. The cube in Figure 4 represents a moving car. The red three-dimensional coordinate system is fixed inside the motion sensor and the arrow marked with N points to the north. The two arrows marked with g and M represent the gravitational acceleration and the geomagnetic field, respectively. Taking RT as an example, the car moves along the blue arc with a turning radius of r. Its linear velocity (the arrow marked with v) points in the tangent direction of the circular arc and, according to Equation (2), the angular velocity is vertically upward (the arrow marked with w). In addition, the geomagnetic field points downward in the area north of the equator. The magnetic field measured by the sensors varies as the car moves.

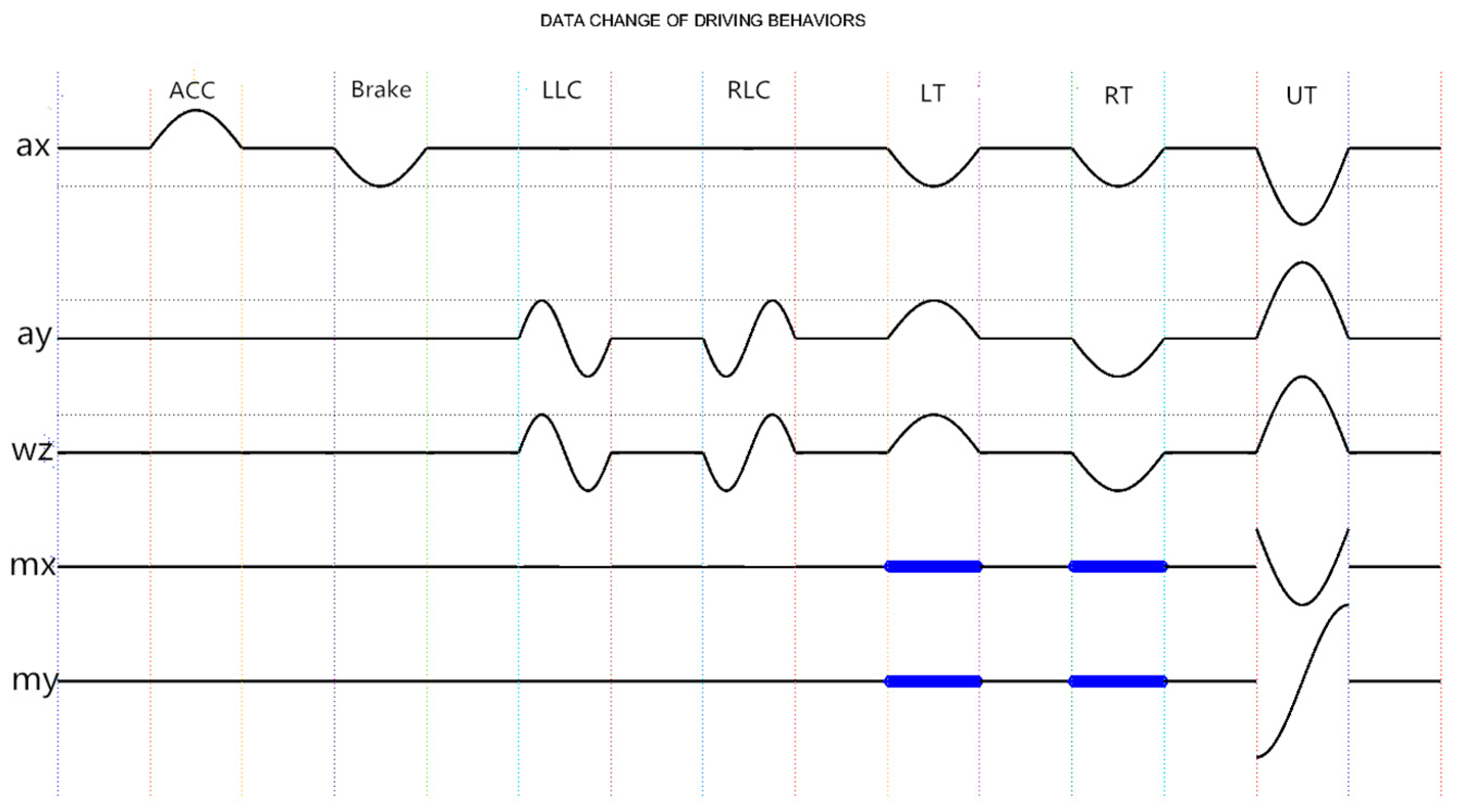

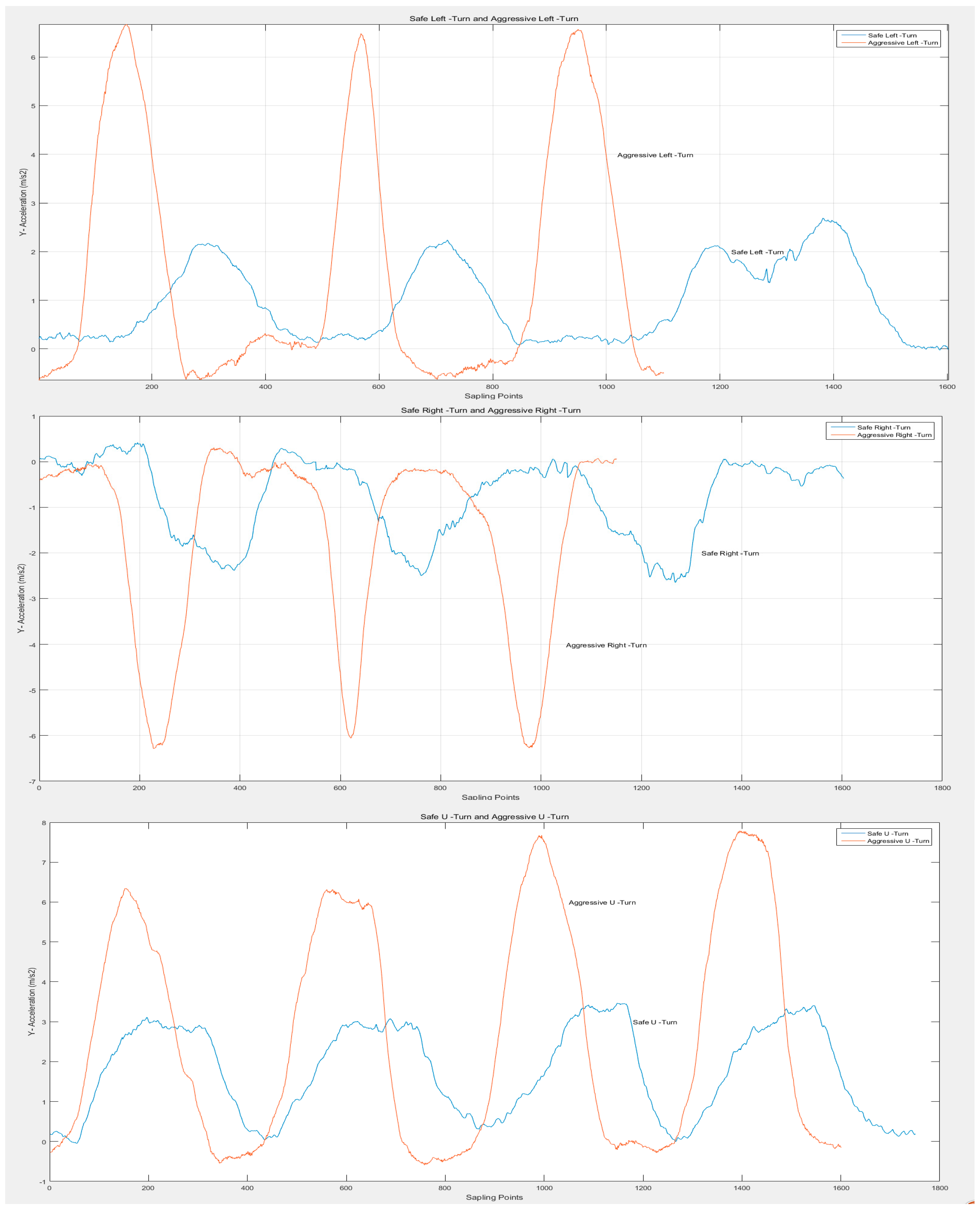

Based on the physical model we built and Equations (1) and (2), the change of data corresponding to different driving behaviors can be deduced, and the outcome is shown in Figure 5. The motion sensor is set up as described in Section 3. In the process of moving, five of the nine axes show data variation, namely $a_x$, $a_y$, $\omega_z$, $m_x$ and $m_y$ (the X- and Y-axis accelerations, the Z-axis angular velocity and the X- and Y-axis magnetic induction intensities). The waveforms in Figure 5 reveal the corresponding data change rule of each specific driving behavior.

Different driving behaviors bring about data changes on different axes. When going straight, car movements such as accelerating and braking are strongly tied to the acceleration information and are independent of the angular velocity. Other behaviors such as turning and lane changing also involve the angular velocity. Take braking and the U turn as more specific examples. When braking, according to Equation (1), the radial acceleration ($a_x$, along the X-axis) of the car drops from zero to a negative value and then returns to zero. Meanwhile, the detected magnetic field varies with the geographic position and the other axes remain unchanged. When making a U turn in a west-to-east direction, the radial acceleration of the car changes in the same way as when braking, while the lateral acceleration ($a_y$, along the Y-axis) varies oppositely to the X-axis data. According to Equation (2), the changing tendency of the Z-axis angular velocity ($\omega_z$) is consistent with that of the lateral acceleration. The geomagnetic field points from south to north and its latitudinal component is very close to zero. After the U turn, the X-axis magnetic induction intensity ($m_x$) of the car basically returns to the value acquired at the start of the U turn, and the vector $m_y$ (the Y-axis component) points in the opposite direction with its modulus unchanged. Similarly, the output of the magnetometer depends on the geographic position. The remaining axes are not involved in the U turn behavior. It should be pointed out that when going straight, changing lanes and making turns (excluding U turns), the magnetic field data changes chaotically and does not follow a uniform rule. Consequently, the magnetic field data is obscured (the blue segments in Figure 5) in the process of establishing the physical model.
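The magnetometer behavior during the west-to-east U turn described above can be checked with a small geometric sketch. It assumes a purely horizontal field of strength `H` pointing north, a sensor mounted with X forward and Y to the left as in Section 3, a left-hand U turn, and a heading angle measured counterclockwise from east; the names `H`, `body_field`, `m_x` and `m_y` and the value of 30 microtesla are illustrative assumptions, not quantities taken from this work.

```python
import math

H = 30.0  # assumed horizontal field strength in microtesla, pointing north

def body_field(heading_rad):
    """Horizontal geomagnetic field expressed in the sensor frame.

    heading_rad is the driving direction, measured counterclockwise from east.
    The sensor X-axis points forward and the Y-axis points to the left.
    """
    m_x = H * math.sin(heading_rad)   # projection of the north vector on forward
    m_y = H * math.cos(heading_rad)   # projection of the north vector on left
    return m_x, m_y

# Left-hand U turn: heading sweeps from west (pi) through south to east (2*pi).
start = body_field(math.pi)
middle = body_field(1.5 * math.pi)
end = body_field(2.0 * math.pi)

for name, (m_x, m_y) in (("start", start), ("middle", middle), ("end", end)):
    print(f"{name}: m_x = {m_x:+.1f}, m_y = {m_y:+.1f}")
```

Under these assumptions the X-axis reading leaves its initial value during the turn and comes back to it at the end, while the Y-axis reading keeps its magnitude and reverses sign.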

Without regard to the data change rules governing driving behaviors, the previous works [3,7,12,13] sent data features into the classifiers for training and classification purposes. Though the performance was barely satisfactory, no detailed discussion of the classification basis has been provided, which compromises the system’s logicality and integrality.

6. Results and Analysis

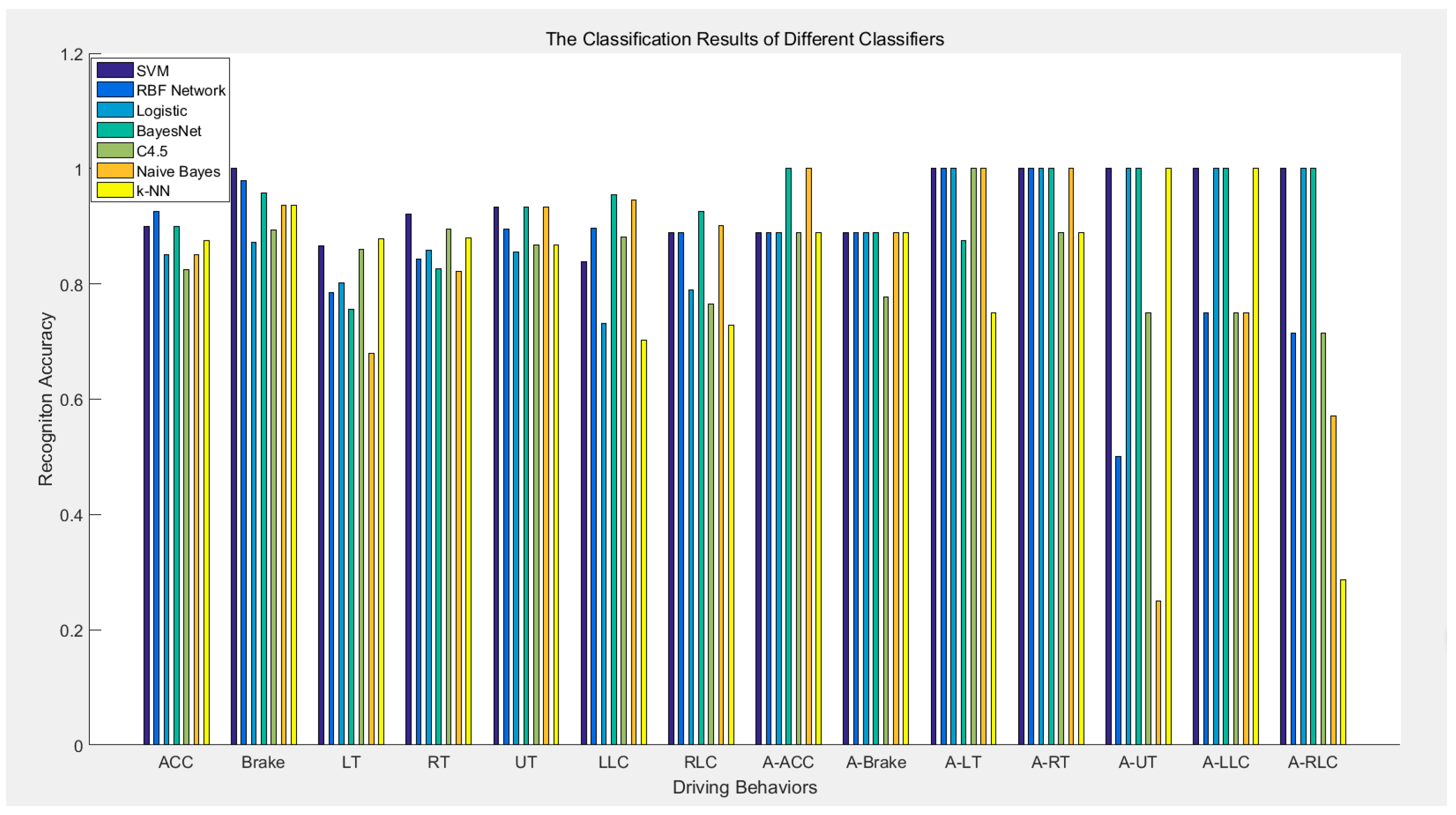

The machine learning methods are introduced for automatic driving behavior recognition. In this section, we present the final results of the proposed system generated by various classifiers.

In our work, the SVM algorithm, RBF Network (radial basis function network), Logistic (logistic regression algorithm), BayesNet (Bayesian network), the C4.5 decision tree algorithm, the k-NN (k-nearest neighbor) algorithm and the naïve Bayes algorithm are utilized to complete the recognition process. Take the k-NN and SVM algorithms as examples. k-NN calculates distances to find the k nearest neighbors of the testing sample, and then assigns the testing sample to the class to which the majority of the k neighbors belong. We set k = 4 in our work. SVM is a binary classifier which divides the samples by an optimal separating hyperplane, that is, the one among all possible separating hyperplanes that maximizes the margin to the support vectors. Furthermore, one-versus-one classification can be utilized to apply SVM to multi-class problems.
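The k-NN rule described above can be written in a few lines. The sketch below uses k = 4 as in the text, but the Euclidean distance, the two-dimensional feature vectors (a mean X-axis acceleration and a mean Z-axis angular velocity), the toy training values and the function name `knn_predict` are assumptions made purely for illustration.

```python
import math
from collections import Counter

def knn_predict(train, query, k=4):
    """Assign query to the majority class among its k nearest training samples.

    train is a list of (feature_vector, label) pairs. With an even k, ties are
    resolved in favor of the tied class whose member is closest to the query.
    """
    neighbors = sorted(train, key=lambda item: math.dist(item[0], query))[:k]
    votes = Counter(label for _, label in neighbors)
    return votes.most_common(1)[0][0]

# Toy training set with made-up feature values.
train = [
    ((1.5, 0.00), "ACC"), ((1.2, 0.02), "ACC"), ((1.8, -0.01), "ACC"),
    ((-1.6, 0.00), "Brake"), ((-2.0, 0.01), "Brake"), ((-1.3, -0.02), "Brake"),
    ((0.1, 0.40), "LT"), ((-0.2, 0.50), "LT"), ((0.0, 0.45), "LT"),
]

for query in [(1.4, 0.01), (-1.7, 0.0), (0.0, 0.42)]:
    print(query, "->", knn_predict(train, query))
```

Because k = 4 is even, a two-against-two vote is possible; here `Counter.most_common` keeps the first-encountered label among equal counts, and the neighbors are ordered by distance, so the nearest tied class wins. How ties were handled in the experiments is not stated in this section.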

Supported by the 100% recognition accuracy between ACC and Brake obtained with the mean value of $a_x$ (the X-axis acceleration) only, Table 3 lists some examples which can be classified using the information of a single axis. The large difference between different driving behaviors provides a solid basis for the one-versus-one classification and favors a satisfying performance from SVM.

In our work, 14 types and a total of 735 driving behaviors are recognized. Specifically, there are 681 normal and 54 aggressive samples. All the samples are collected in real traffic conditions using the cars listed in Table 1. Having eliminated the noise with the Kalman filter, extracted valid data using an adaptive time window and extracted feature vectors under the guidance of the data change rule, we send the processed data to various classifiers. A 10-fold cross-validation is applied to avoid dependency on data. The classification results are shown in Figure 12.
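For readers unfamiliar with the procedure, the 10-fold cross-validation split over the 735 samples can be sketched as below. The shuffling seed, the interleaved fold assignment and the function name `k_fold_indices` are assumptions; the section does not state whether the folds were stratified by class, and this sketch does not stratify.

```python
import random

def k_fold_indices(n_samples, k=10, seed=0):
    """Yield (train_indices, test_indices) for each of k folds."""
    idx = list(range(n_samples))
    random.Random(seed).shuffle(idx)          # fixed seed for repeatability
    folds = [idx[i::k] for i in range(k)]     # k nearly equal-sized folds
    for i in range(k):
        test = folds[i]
        train = [j for f, fold in enumerate(folds) if f != i for j in fold]
        yield train, test

splits = list(k_fold_indices(735, k=10))
print([len(test) for _, test in splits])
```

Each sample is used for testing exactly once and for training in the other nine rounds. With classes as small as the four A-UT samples, an unstratified split can leave some folds without any sample of that class, which is worth keeping in mind when reading per-class rates.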

The recognition rates of the classifiers vary greatly. The quantitative results indicating the average accuracy over the 14 types of driving behaviors for the different classifiers are shown in Table 6.

Recognizing 93.25% of the driving behaviors correctly, SVM performs best among the seven classifiers. As mentioned earlier, the significant difference between each pair of driving behaviors and their separability with several features promote the performance of SVM. Taking into account the dependence among different features, which is evidenced by the synchronous change of $a_y$ and $\omega_z$ (the lateral acceleration and the Z-axis angular velocity), BayesNet follows SVM with an accuracy of 91.1%. Logistic achieves a recognition rate of 89.3%, and the RBF Network, C4.5, naïve Bayes and k-NN perform relatively poorly. The recognition results suggest that SVM performs best for this database and data processing procedure.

Different from conventional methods, we process the data based on the data change rule in every stage, trying to make the samples easy to distinguish. Compared with previous works, the performance of our whole system is convincing. The works differ in emphasis and in the algorithms applied, and therefore in the catalogue of driving behaviors recognized; a comparison is shown in Table 7. The article [3] utilized a low pass filter to reduce noise, a waveform segmentation technique to segment data and an HMM (Hidden Markov Model) to classify driving behaviors, reaching an average accuracy of 91%. Besides the low pass filter, [7] extracted valid data using the SMA (simple moving average) of energy and utilized DTW and k-NN to obtain a 91% recognition rate. In [13], driving behaviors were labelled manually; LDA and SFS were then applied to simplify the feature set, and the SVM algorithm achieved an accuracy of 89%. Ignoring the data change rule and relying on machine learning alone offers no clear direction for further optimization and can restrict improvement in system performance. Benefitting from the theoretical support of the physical model, our recognized catalogue is more substantial and the total classification accuracy is higher. The utilization of a physical model in processing and classifying data advances the system greatly.

In our work, the classification results output by SVM for specific driving behaviors are displayed in Table 8. The normal driving behaviors are composed of 40 ACC, 47 Brake, 172 LT, 191 RT, 76 UT, 74 LLC and 81 RLC samples. Considering personal safety and traffic safety, we collected fewer aggressive samples. Specifically, there are 9 A-ACC, 9 A-Brake, 8 A-LT, 9 A-RT, 4 A-UT, 8 A-LLC and 7 A-RLC behaviors. The significant difference between normal and aggressive samples makes them easy to distinguish.

Among all samples, 4 ACC, 22 LT, 15 RT, 5 UT, 12 LLC, 9 RLC, 1 A-ACC and 1 A-Brake behaviors are wrongly classified. The quantitative recognition rates output by SVM are displayed in Table 9.
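The per-class recognition rates follow directly from the sample counts and misclassification counts quoted above. The short sketch below only redoes that arithmetic; the dictionary names `totals`, `errors` and `rates` are illustrative, and classes with no listed errors are taken to be fully recognized.

```python
# Sample counts per driving behavior, as quoted in the text.
totals = {
    "ACC": 40, "Brake": 47, "LT": 172, "RT": 191, "UT": 76, "LLC": 74, "RLC": 81,
    "A-ACC": 9, "A-Brake": 9, "A-LT": 8, "A-RT": 9, "A-UT": 4, "A-LLC": 8, "A-RLC": 7,
}
# Wrongly classified samples per behavior, as quoted in the text.
errors = {"ACC": 4, "LT": 22, "RT": 15, "UT": 5, "LLC": 12, "RLC": 9,
          "A-ACC": 1, "A-Brake": 1}

# Recognition rate in percent: correctly classified samples over all samples.
rates = {name: 100.0 * (n - errors.get(name, 0)) / n for name, n in totals.items()}

for name, rate in rates.items():
    print(f"{name:8s} {rate:6.2f}%")
```

Computed this way, LT and LLC have the lowest rates among the 14 behaviors, in line with the grouping into completely, easily and hardly distinguishable behaviors discussed next.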

Generally, the recognition results can be labelled according to three situations: complete, easy and difficult to distinguish. The Brake, A-LT, A-RT, A-UT, A-LLC and A-RLC belong to the first situation, with all samples recognized correctly. With an accuracy rate of around 90%, the ACC, RT, UT, RLC, A-ACC and A-Brake belong to the second situation. Being hard to distinguish, LT and LLC belong to the third one. By analyzing the recognition results, we summarize that there might exist some factors affecting the performance of classifiers.

● The dataset is not completely ideal.

All the data used in this work is acquired in real traffic situations and diverse road conditions. When a car drives on bumpy road segments or over a speed bump, its vibrations introduce chaotic noise to the motion sensors. In addition, different drivers have different driving habits. In most cases, when manipulating the cars, drivers apply many redundant operations, such as momentarily shifting gears, accelerating, braking, and so on. These operations also introduce noise to the motion sensors. Though we utilize the Kalman filter to eliminate noise from the data, the factors described above still cause the data change to deviate from the rule shown in Figure 5.

● The traffic flow is complex.

The growing number of vehicles exacerbates road congestion and makes traffic flow more and more complex. In some cases, certain incidents can be counterproductive. For example, even if the drivers are changing lanes to the left, they may brake just to avoid left-hand cars for safety. Consequently, the driving behavior becomes difficult to recognize.

● Similarity exists among different driving behaviors.

The data change pattern depicted in Figure 5 and actual driving experience both reveal that similarity exists among different driving behaviors. Take the U turn for instance. Vehicles in China are left-hand drive and keep to the right, so a UT mostly turns to the left, making an LT similar to a UT in all data changing trends except those of the magnetic induction intensities $m_x$ and $m_y$. Consequently, a UT can be considered, to a large extent, as the combination of two LTs. This is the main reason why the classifiers classify four UTs as LTs and four LTs as UTs. As another example, in some cases changing lanes is accompanied by a variation of the X-axis acceleration, which makes an LLC or RLC similar to an LT or RT. That is why 10 LLC behaviors are improperly classified as LTs and the accuracy is lower than for other behaviors. What is more, the similarity also causes 12 LTs to be recognized as LLCs, nine RTs as RLCs and seven RLCs as RTs.

● The existence of singular data.

Data acquisition in real traffic conditions may be dangerous. Some behaviors, mainly the aggressive driving maneuvers, cannot be done integrally for the consideration of traffic safety. In addition, in the early stage of analyzing driving data and building physical models, the driving behaviors need to be labelled manually. Because of the limitation in energy and concentration, people may misclassify driving samples, which is unavoidable. That is why singular data exists in the dataset.

● The combination of different driving behaviors.

Different driving behaviors collected in real traffic conditions are not always completely distinct and independent, so the data can portray combined events. Acceleration is often accompanied by turning at traffic lights. Having turned in another direction, the driver may adjust the velocity and lane of the car to gain a better driving experience. These conditions explain why three ACCs are classified as LTs and four RTs as ACCs.

Though there are some factors prejudicing the performance of classifiers, the final accuracy of 93.25% achieved by SVM algorithm is persuasive. The overwhelming majority of samples in the dataset conforms to the data change rule deduced from the physical model and completely satisfies the demands of classifiers. The recognition results are quite acceptable and have validated the reliability of the proposed system.

7. Conclusions

In this work, we proposed a novel model-based driving behavior recognition system using motion sensors. The physical model built and data change rule deduced promise the system a good performance with an average accuracy of 93.25% in classifying all 14 types of driving behaviors acquired in real traffic conditions. In spite of different cars and drivers, the proposed system can overcome these differences and performs well universally.

Firstly, based on the related knowledge of rigid body kinematics, we built a physical model to depict car motion on roads and then derived the change rule of data in motion sensors. The established physical model provides the whole system with a theoretical foundation, and the data change rule reveals the great difference among different driving behaviors, which provides a clear direction for the following data processing. Secondly, we built the database with 20 h and 1200 km of driving in real traffic conditions. Thirdly, having analyzed the main components of the noise existing in the data, we utilized the Kalman filter to remove noise. Compared with the conventional low pass filter, the Kalman filter is more in line with the physical model. Then, based on the derived data change rule, we proposed a novel method utilizing the slope, waveform and energy information to extract valid data from the whole driving process. By removing unnecessary data, the memory space of the motion sensors can be saved to a great extent. Also, the whole process is automatic, with manual work being avoided.

After the valid data extraction stage, guided by the data change rule, we effectively extracted the data features reflecting the difference among driving behaviors. Compared with the dimensionality reduction methods or the empirical selection of feature sets, our approach of features selection possesses a definite direction and uses less computational resources, which makes the whole system concise and efficient. In the end, we utilized seven distinct classifiers to classify and recognize driving behaviors. An accuracy of 93.25% is achieved by SVM, which is the best among the work in the area of driving behavior recognition. According to the data change rule and real traffic flow, we analyzed the performance of classifiers and the reasons why some samples are classified improperly. Under the guidance of the proposed physical model and data change rule, a driving behavior recognition system was basically established.

In conclusion, our work is very convincing and the proposed system is totally feasible.

In future work, we will extend our network on a large scale and establish a more complete and comprehensive database to provide better data support for the recognition system. In addition, there still exists much room for optimization of features extraction. Therefore, other combinations of features contributing to the recognition accuracy need to be explored. What is more, from the established physical model we can see the potential to recognize more driving behaviors, such as climbing or descending slopes. The recognition of these behaviors will make our system more integrated. Without being restricted by the motion sensors only, we are currently developing a specific module which carries the driving behavior recognition system and can be integrated into other electronic products, such as automobile data recorders and Bluetooth products. A more comprehensive driving evaluation mechanism will be established in the future.