Radar Sensing for Intelligent Vehicles in Urban Environments

Abstract

Radar overcomes the shortcomings of laser, stereovision, and sonar because it can operate successfully in dusty, foggy, blizzard-blinding, and poorly lit scenarios. This paper presents a novel method for ground and obstacle segmentation based on radar sensing. The algorithm operates directly in the sensor frame, without the need for a separate synchronised navigation source, calibration parameters describing the location of the radar in the vehicle frame, or the geometric restrictions made in the previous main method in the field. Experimental results are presented in various urban scenarios to validate this approach, showing its potential applicability for advanced driving assistance systems and autonomous vehicle operations.

1. Introduction

In the past few years, robotic vehicles have been increasingly employed for highly challenging applications including mining, agriculture, search and rescue, planetary exploration, and urban driving. In these applications, the surrounding environment is dynamic and often unknown (i.e., a priori information is not available). Under these conditions, imaging sensors are required to enable safe autonomous operations. These sensors can provide obstacle avoidance, task-specific target detection and generation of terrain maps for navigation. Lidar is a common sensing device employed in unmanned robotic vehicles to generate high-resolution maps of the environment. However, because lidar operates at near-infrared wavelengths, it is adversely affected in poor weather conditions where airborne obscurants may be present [1]. Similarly, vision sensors are strongly affected by day/night cycles and by environmental factors. Sonar can be a valid alternative for sensing in adverse visibility conditions, but it has other issues including noise, atmospheric attenuation, and cross-reflections. Millimetre-wave (MMW) radar overcomes the shortcomings of laser, vision and sonar. The term millimetre-wave, in the radar context, refers to electromagnetic radiation with a wavelength ranging between 1 cm and 1 mm. At these wavelengths, MMW radar can operate successfully in the presence of airborne obscurants or low lighting conditions [2]. In addition, radar can provide information on distributed and multiple targets that appear in a single observation. For these reasons, radar perception is increasingly being employed in ground vehicle applications [3].

This paper addresses the general issue of ground detection that consists of identifying and extracting data pertaining to the ground, using a radar sensor, tested in an urban environment. A novel algorithm called Radar-Centric Ground Detection (RCGD) is presented, based on a theoretical model of the radar ground echo, which is used to classify a given sensor reading as either ground or non-ground. Since the RCGD method works directly in the reference frame of the sensor, it does not require a separate synchronised navigation system, nor any calibration with respect to the vehicle reference frame. As will be shown, it also relaxes the geometric assumptions adopted by existing methods in the literature by the same authors for radar-based ground detection.

The ability to detect ground and distinguish it from non-ground can be studied in different environments. In this research, the main field of interest is the urban environment. This choice is not tied to any particular application, but arises from the wide variety of cases and problems to be dealt with, as will be discussed further. In general, roads and drivable surfaces are considered to be examples of ground, whereas non-ground is anything else: buildings, pedestrians, footpaths, cars, trees, and obstacles in general.

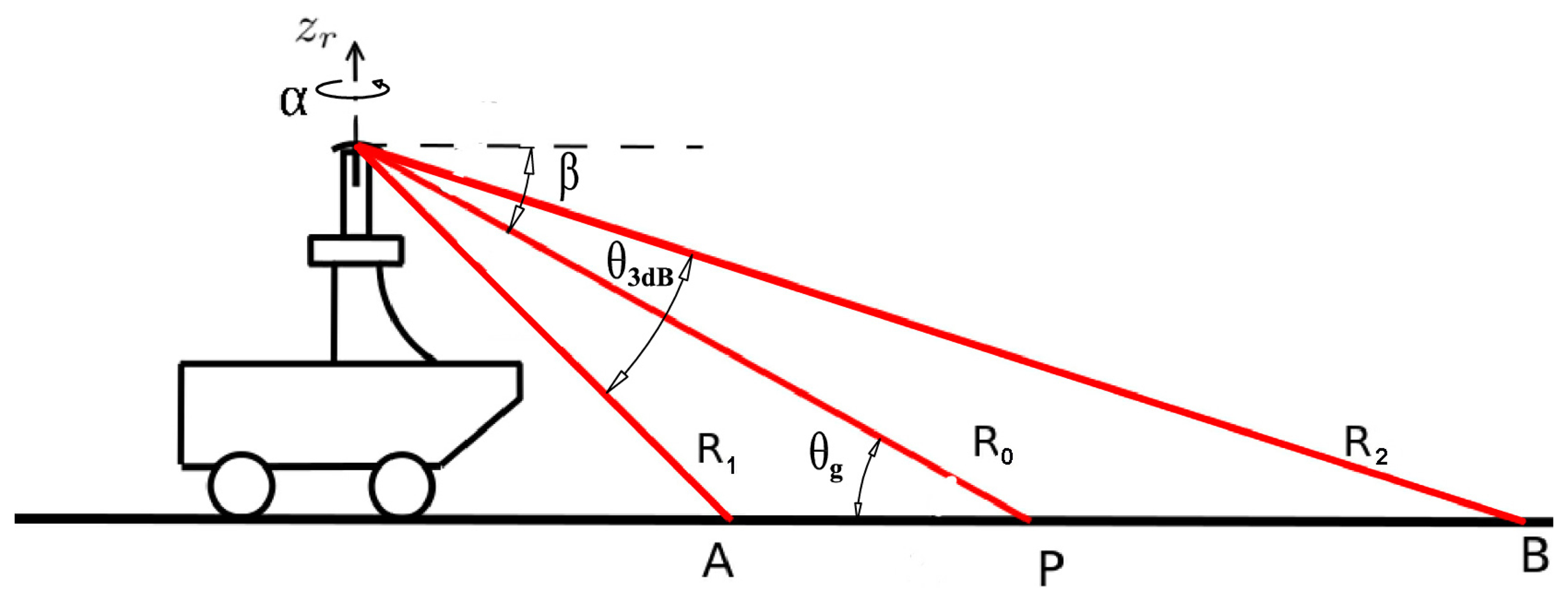

The sensing configuration used in this research is shown in Figure 1, where an MMW radar is mounted on top of the vehicle to survey the surrounding environment. The sensor sweeps around its vertical axis, producing a general overview of the environment at a constant time interval (about 0.77 s). Each revolution produces a scan, which is a polar radar image, or plan position indicator (PPI) scope. An example is shown in Figure 2, where the radar output and the corresponding scene (acquired by an approximately co-located camera) are shown. The forward direction of the vehicle is marked by the black line. Amplitude values above the noise level suggest the presence of objects with significant reflectivity. Amplitude close to or below the noise level generally corresponds to the absence of objects. The radar image provides a clear distinction between the road and the obstacles in the scene: for instance, the spot characterised by high intensity values in the top-left of Figure 2a is the blue van in front of the vehicle in Figure 2b. At a lower range, the white car coming towards the vehicle and the brown car on the red bus lane are also seen, respectively, to the right and left of the black heading line. The long narrow stripe on the right of Figure 2a is the wall on the right side of the vehicle. Ground areas are visible in front of and behind the vehicle and appear as wide regions with medium intensity values. Clearly, ground appearance in the polar image depends on the relative position between vehicle and road. The further the configuration departs from the nominal condition of no roll, no pitch, and flat, horizontal ground, the more difficult it becomes to detect the ground, since the difference between obstacles and ground for each beam is less recognisable.

The remainder of the paper is organized as follows. A survey of the literature in the field is provided in Section 2, pointing out the novel aspects of the proposed approach. Section 3 recalls the basic principles of radar sensing and details the adopted ground echo model. The ground classifier is discussed in Section 4, and experimental results obtained with a test bed operating in an urban environment are presented in Section 5. Conclusions are drawn in Section 6.

2. Related Works

Ground segmentation is the ability to distinguish ground from other objects that can exist in the scene. In many cases, ground is an unwanted element [4] and many techniques have been proposed to eliminate its effects [5]. On the other hand, ground detection is a key requirement for autonomous navigation [6]. Recent sensor developments (Velodyne, Riegl, Ibeo, etc.) have led to increased perceptual ability. For instance, laser is still the main sensor used to survey the three-dimensional (3D) shape of terrain [7] and its comparatively high resolution makes it the preferred choice for obstacle avoidance [8]. Monocular and stereo computer vision is used if lighting conditions are appropriate; for vehicular applications this typically restricts operations to daylight hours [9–11]. Relatively limited research has been devoted to investigating the use of MMW radar for ground vehicle applications. In [12], a radar was developed for measurements in ore-passes and for surveying the internal structure of dust- and vapour-filled cavities. Radar capability to perceive the environment has been demonstrated in challenging conditions [13], including a polar environment (Antarctica) [14]. Radar sensing has also been employed for autonomous guidance of cargo-handling vehicles [15] and occupancy mapping [16]. In [17], the combination of radar with monocular vision is proposed within a statistical self-supervised approach. In the automotive field, various perception methods have been proposed that mostly use radar technology for collision avoidance and obstacle detection [18–20].

Several works from the literature dealt with the problem of detecting ground, but few of them employed radar as the main sensor. One of them, previously proposed by the authors [21] and called Radar Ground Segmentation (RGS), represents the starting-point of this research. The RGS algorithm consists of two steps:

- Prediction of the expected location of the ground within the sensor data by using a 6-DOF localisation system. This provides the starting point for the ground search within the radar data. This step is called background extraction.
- Labelling of each radar observation as ground, obstacles or uncertain, according to the goodness of fit to the ground-echo model. This is the ground segmentation step.

It should be noted that the background extraction is a necessary but not sufficient condition for a given observation to be labelled properly. This means that if the first part of the algorithm fails, the second part will inevitably fail too. This can happen if one of the assumptions made in the RGS algorithm does not hold. As shown later, moving the algorithm from a rural to an urban environment causes some of the original assumptions to break down.

The main contribution of this work is a novel method, namely the RCGD algorithm, that is able to detect the ground surfaces within radar scans of the environment while relaxing the geometric assumptions made by the previous algorithm. Specific differences between this research and the previous RGS method are highlighted throughout the paper and in particular in Sections 3.2 and 3.3.

3. Principles of Radar Sensing

In this section, the basic mathematics that describes radar sensing is recalled. The theoretical model of the radar ground echo that is used in this research is then developed in detail.

3.1. Radar Fundamentals

Keeping in mind the scheme of Figure 1, under the assumption of a pencil (narrow) beam and uniformly illuminated antenna, it is possible to describe the beam pattern by a cone of aperture θ3dB, which is the half-power beam-width. The cone intersects the ground with a footprint delimited by the proximal and the distal border, denoted as A and B, respectively. R1 and R2 are the proximal and distal ranges, whereas R0 is the bore-sight range, at which the peak of the radiation is concentrated. The angle of intersection between the bore-sight and the ground is the grazing angle θg. Radar uses electromagnetic energy that is transmitted to and reflected from the reflecting object. The radar equation expresses this relationship. The received energy is a small fraction of the transmitted energy. Specifically, the energy received by the radar can be calculated as

Usually, the reflectivity σ0 in place of σt is preferred

Ac being the clutter area or footprint. It can be shown that Ac depends on the range R and on the secant of the grazing angle θg. Thus combining all constant terms in k′, Equation (2) can be rewritten as

This equation can be converted to dB:
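The chain of steps from the radar equation to its dB form can be sketched as follows. This is a reconstruction under stated assumptions rather than a verbatim restatement: the first line is the standard monostatic radar equation for a point target (with $P_t$ the transmitted power and $\lambda$ the wavelength, symbols assumed here), the clutter area is taken as $A_c \propto R\sec\theta_g$ as stated above, and $K = 10\log_{10}k'$.

```latex
\begin{align*}
P_r &= \frac{P_t\,G^2\,\lambda^2\,\sigma^t}{(4\pi)^3 R^4}
  && \text{(point target)} \\
P_r &= \frac{P_t\,G^2\,\lambda^2\,\sigma^0 A_c}{(4\pi)^3 R^4},
  \qquad \sigma^0 = \frac{\sigma^t}{A_c}
  && \text{(distributed clutter)} \\
P_r &= k'\,\frac{G^2\,\sigma^0 \sec\theta_g}{R^3},
  \qquad A_c \propto R\,\sec\theta_g \\
P_{r\,\mathrm{dB}} &= K + 2\,G_{\mathrm{dB}} + \sigma^0_{\mathrm{dB}}
  + 10\log_{10}(\sec\theta_g) - 30\log_{10} R
\end{align*}
```

Under these assumptions the ground return falls off as $R^{-3}$ rather than $R^{-4}$, because the illuminated clutter area itself grows linearly with range.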

3.2. Ground Model

Equation (5) shows that the ground echo depends only on θg, R, and G. In turn, the gain G can be expressed as a function of the bore-sight R0 and grazing angle. Specifically, G is maximum when the target is located along the antenna's bore-sight, and it reduces with angle off bore-sight, as defined by the antenna's radiation pattern. If a Gaussian antenna pattern is adopted, an elevation angle θel, measured from the radar bore-sight, can be defined as

The antenna gain can then be approximated by [22] as

θ3dB being the 3 dB beamwidth (θ3dB = 3° for the radar used in this research). This allows Equation (5) to be written as
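One way to make these relations concrete is sketched below. The forms are assumptions of this sketch and are not taken from [22]: the ground is treated as locally planar, so the radar sits at height $R_0\sin\theta_g$ above it, and the gain is a Gaussian normalised to unity on bore-sight with $\theta_{3\mathrm{dB}}$ as its full half-power width.

```latex
\begin{align*}
\theta_{el}(R) &= \arcsin\!\left(\frac{R_0 \sin\theta_g}{R}\right) - \theta_g \\
G(R) &= \exp\!\left[-4\ln 2\,\left(\frac{\theta_{el}(R)}{\theta_{3\mathrm{dB}}}\right)^{2}\right],
\qquad
G_{\mathrm{dB}}(R) = 10\log_{10} G(R) \approx -12.04\left(\frac{\theta_{el}(R)}{\theta_{3\mathrm{dB}}}\right)^{2} \\
P_{r\,\mathrm{dB}}(R) &= K + 2\,G_{\mathrm{dB}}(R) + \sigma^0_{\mathrm{dB}}
  + 10\log_{10}(\sec\theta_g) - 30\log_{10} R
\end{align*}
```

With this normalisation, $\theta_{el}(R_0) = 0$ and the one-way gain drops by about 3 dB at $\theta_{el} = \pm\theta_{3\mathrm{dB}}/2$, which matches the definition of the half-power beam-width.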

Equation (5) is the ground-echo model used in this research. The main difference with the model previously proposed in [21] is that the grazing angle θg is not treated as a constant but it can change as a function of the terrain inclination.

Equation (5) is completely defined once K is known. K can be obtained, for example, by evaluating PrdB at R0, as proved in the Appendix. Equation (5) defines the ground echo in terms of power return. However, the location or range spread of the ground echo can also be predicted, based on geometric considerations (please refer again to Figure 1). The proximal and distal borders can be expressed as a function of θg and bore-sight R0

Equation (5) must be interpreted as follows: given a ground radar observation, PrdB(R0) is known. The other terms G, σ0 and K depend only on R0 and the grazing angle. Because this dependence is complex, the pair (R0, θg) can only be found by evaluating all candidate pairs and comparing each corresponding RCGD ground-echo model against the radar observation until a satisfactory agreement is reached.

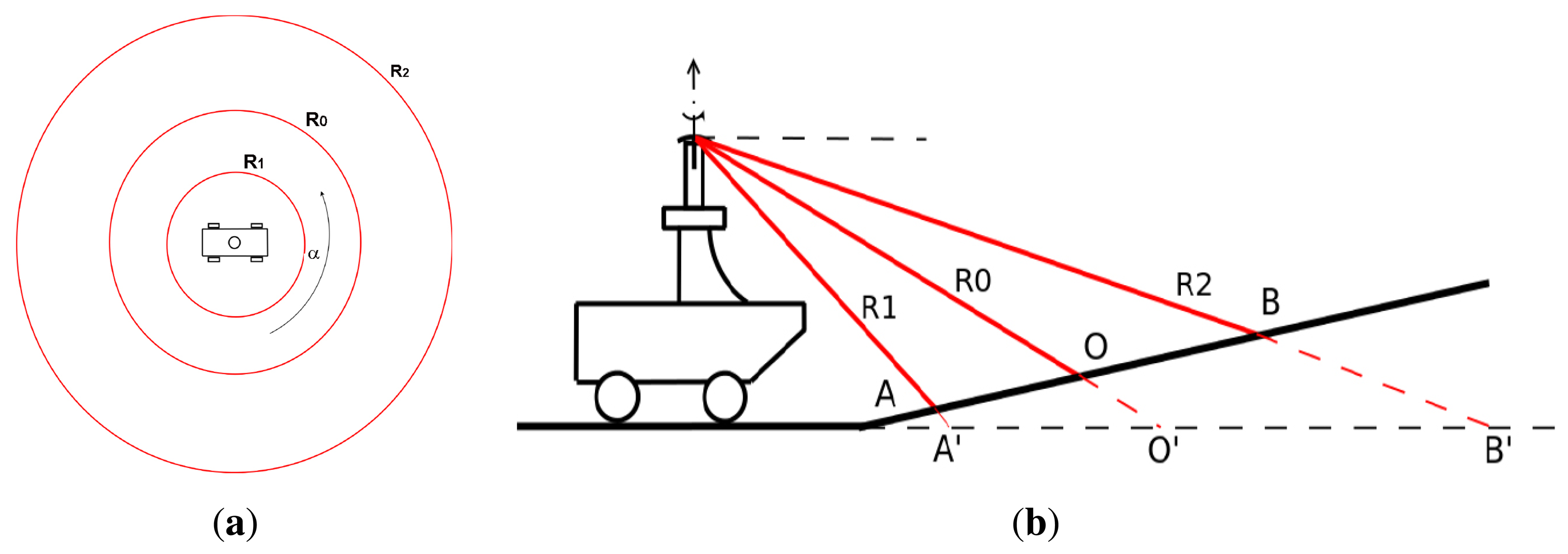

In order to evaluate the validity of the RCGD ground-echo model, Figure 3a shows the change of the model as a function of the grazing angle, whereas R0 is maintained constant. As the grazing angle increases, the RCGD ground-echo model becomes narrower and the range spread decreases. This is in line with Figure 3b; the first configuration denoted as ground plane 1 is characterised by the grazing angle θ1, whereas the second is denoted as ground plane 2 and it is characterised by the grazing angle θ2: θ2 > θ1, whereas R0 is the same for both configurations. It is apparent that the range spread (R2 − R1) for the first configuration (OB − OA) is higher than the range spread of the second configuration (OB′ − OA′). Indeed, increasing the grazing angle leads to a higher R1 (OA′ > OA) and a lower R2 (OB′ < OB).
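The geometric trend described above can be checked numerically with a minimal sketch. It assumes a planar ground and a conical beam whose edges lie half a beam-width either side of the bore-sight; the function name `footprint_ranges` and the sample values are illustrative and not taken from the paper.

```python
import math


def footprint_ranges(r0, grazing_deg, beamwidth_deg=3.0):
    """Return (R1, R2), the proximal and distal slant ranges of the footprint.

    Assumes a planar ground and a conical beam of full aperture
    beamwidth_deg whose bore-sight meets the ground at slant range r0
    with grazing angle grazing_deg.
    """
    theta = math.radians(grazing_deg)
    half = math.radians(beamwidth_deg) / 2.0
    if theta <= half:
        raise ValueError("grazing angle must exceed half the beam-width")
    height = r0 * math.sin(theta)          # radar height above the ground plane
    r1 = height / math.sin(theta + half)   # steeper beam edge: closer intersection
    r2 = height / math.sin(theta - half)   # shallower beam edge: farther intersection
    return r1, r2


# Same bore-sight range, increasing grazing angle.
for grazing in (3.0, 6.0, 12.0):
    r1, r2 = footprint_ranges(15.0, grazing)
    print(f"grazing={grazing:4.1f} deg  R1={r1:6.2f} m  R2={r2:6.2f} m  "
          f"spread={r2 - r1:6.2f} m")
```

Under these assumptions R1 grows and R2 shrinks as the grazing angle increases at fixed R0, so the range spread narrows, in line with the behaviour described for Figure 3.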

3.3. Comparison with Existing Literature

The previous RGS method showed that, knowing the pose of the vehicle and the characteristics of the radar beam, it is theoretically possible to locate the ground footprint. For example, for the radar configuration used in this work and under the assumption of quasi-horizontal ground, the area illuminated by the radar on the terrain is shown in Figure 4a. Clearly, the effect of roll and pitch is to deform the three annuli (stretching them along the roll and pitch axes, resulting in three ellipses). The main assumptions underlying the previously proposed RGS method can be summarized as follows:

- The radar position relative to the vehicle reference frame is known by initial calibration and fixed during travel.
- The pose (six degree of freedom position and orientation) estimation of the vehicle with respect to the ground is available from an onboard navigation system.
- There are negligible time synchronisation errors between the navigation solution and the radar data.
- Approximately horizontal ground in view of the radar.
- The ground plane is coincident with the plane of vehicular motion.

These assumptions impose some limitations on the RGS system, leading to the following main causes of failure:

- The assumption of quasi-horizontal ground is too restrictive. For example, in urban environments, several uphill and downhill roads exist.
- An onboard navigation system is not always available.
- Faster vehicle dynamics (e.g., higher speeds and sharper bumps) magnify errors due to time synchronisation.
- The plane of vehicular motion is not always coincident with the plane of the area to be detected.

The first cause of failure arises in the presence of a climb or a descent or, more generally, whenever the ground plane differs from the plane of vehicular motion. Figure 4b shows that the background location predicted by the RGS is A′O′B′, which differs from the real plane AOB.

There is another aspect to be discussed. The background extraction step of the RGS method requires the knowledge of the calibration parameters in order to take into account the relative position between the radar and the vehicle. If they are not exact and some errors are present, there will be a double systematic error in the results: the first is in the background extraction itself and the second is in the projection of the results in the world reference frame. Later, it will be shown that the RCGD algorithm does not require the calibration parameters for the ground detection (even if they are still necessary to project the results in the world reference frame).

4. The Radar-Centric Ground Detection Algorithm

A novel ground detection algorithm, referred to as the RCGD algorithm, is presented that operates directly in the sensor frame of the radar, without the need for a separate synchronised navigation source or any geometric restriction of the area investigated. Let us refer to the general case where the plane of vehicular motion is not coincident with the ground plane in view of the radar. In addition, the two planes can be freely tilted in space and no assumption is made about their location. These observations lead to the representation in Figure 5. The main idea is that the ground echo depends only on the information described in the sensor frame and on the relative position between the bore-sight and the ground plane, defined by the grazing angle. This is suggested by Equation (5), which is theoretically independent of the navigation solution.

In order to define a classification scheme to label radar data pertaining to the ground, a given radar observation (i.e., the single radar reading obtained for a given scan angle or azimuth angle) is compared with the ground model. The underlying hypothesis is that a good fit between the theoretical model and experimental data suggests high confidence of ground. Conversely, a poor fit would indicate low likelihood of ground due, for example, to an obstacle.

4.1. Fitting the Ground Echo Model

In Section 3.2, it was shown that the ground-echo model can be expressed as a function of the bore-sight range R0 and the grazing angle θg. Moreover, once these parameters are defined, the ground-echo model is unique. This section explains how to use the ground-echo model to detect ground areas. The problem is to find the location or range spread (R1, R2) and the pair (R0, θg) that defines the RCGD ground-echo model that best fits the sensory data. In the RCGD algorithm, no prior assumption on the ground location is made; therefore, the search for R0 can in theory be performed by an exhaustive iteration over the whole plausible range of values. In practice, however, the search is limited to the interval 8–22 m. The upper bound is explained by the fact that the assumption of near-field region is violated beyond this limit and Equation (5) loses its validity. The lower bound is chosen based on the maximum road slope expected in urban environments. The search for θg is based on geometric considerations related to the inclination of the vehicle and of the road. In principle, an interval of 0–90° could be chosen, but this would entail a high computational cost. In this approach, the variation range of the grazing angle was limited to 2–15° with an increment of 0.5°, to match the limits of the gradients in our urban test environment. This is an optimisation problem, which could be solved with a variety of standard optimisation procedures. In this work, we chose to limit the search bounds and employ exhaustive search.

For a given radar observation, the objective is to find the model that best fits the experimental data. In order to investigate the set of all possible pairs (R0,θg), two nested loops are performed, varying R0 and θg over their respective ranges of interest. Each iteration defines a candidate RCGD ground model according to Equation (5), which is compared against the radar reading. The corresponding goodness of fit can be evaluated by the squared error (SE). The candidate (R̅0,θ̄g) resulting in the lowest SE is retained as the RCGD ground model for the given radar observation.

- SE(j) is the lowest squared error evaluated at the j-th radar observation
- W is the number of points in the window of interest (defined by Equation (9))
- Ij(p) is the p-th intensity value within the window of interest of the j-th radar observation
- PrdB(p) is the RCGD ground echo model defined by the best pair (R̅0,θ̄g) evaluated at the p-th point within the window of interest
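The exhaustive search over (R0, θg) can be sketched as below. This is a simplified illustration under several assumptions that are not taken from the paper: a planar ground, a Gaussian beam, a reflectivity that is constant along the beam (so it is absorbed into the peak level), a squared error computed as a plain sum over a fixed window supplied by the caller, and the constant K fixed by the observed intensity at the bin nearest to the candidate R0. The names `ground_echo_db` and `fit_ground_model` are hypothetical.

```python
import math

BEAMWIDTH = math.radians(3.0)  # 3 dB beam-width quoted in the text
GAIN_COEFF = 40.0 * math.log(2.0) / math.log(10.0)  # Gaussian roll-off, in dB


def ground_echo_db(r, r0, theta_g, peak_db):
    """Hypothetical ground-echo model (dB) at slant range r, in metres.

    The model is pinned to peak_db at r = r0, which plays the role of K.
    """
    ratio = r0 * math.sin(theta_g) / r
    if ratio >= 1.0:                       # range shorter than the radar height
        return float("-inf")
    theta_el = math.asin(ratio) - theta_g  # angle off bore-sight
    gain_db = -GAIN_COEFF * (theta_el / BEAMWIDTH) ** 2  # one-way gain
    return peak_db + 2.0 * gain_db - 30.0 * math.log10(r / r0)


def fit_ground_model(ranges, intensities, r0_grid, theta_grid_deg):
    """Return (SE, R0, theta_g in degrees) of the best-fitting candidate."""
    best = None
    for r0 in r0_grid:
        # The observed intensity nearest to the candidate R0 fixes the level.
        nearest = min(range(len(ranges)), key=lambda i: abs(ranges[i] - r0))
        peak_db = intensities[nearest]
        for theta_deg in theta_grid_deg:
            theta = math.radians(theta_deg)
            se = sum((obs - ground_echo_db(r, r0, theta, peak_db)) ** 2
                     for r, obs in zip(ranges, intensities))
            if best is None or se < best[0]:
                best = (se, r0, theta_deg)
    return best


# Synthetic check: data generated by the model itself should be recovered.
ranges = [10.0 + 0.25 * i for i in range(41)]         # 10 m to 20 m
synthetic = [ground_echo_db(r, 14.0, math.radians(6.0), 60.0) for r in ranges]
r0_grid = [8.0 + 0.5 * i for i in range(29)]          # 8 m to 22 m
theta_grid = [2.0 + 0.5 * i for i in range(27)]       # 2 deg to 15 deg
se, r0_hat, theta_hat = fit_ground_model(ranges, synthetic, r0_grid, theta_grid)
print(f"best fit: R0={r0_hat} m, grazing={theta_hat} deg, SE={se:.3g} dB^2")
```

The grid bounds mirror the 8–22 m and 2–15° (0.5° step) limits given in Section 4.1; the 0.5 m step in R0 is an arbitrary choice for the sketch. A real implementation would restrict the comparison to the window of interest of each candidate rather than a fixed window.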

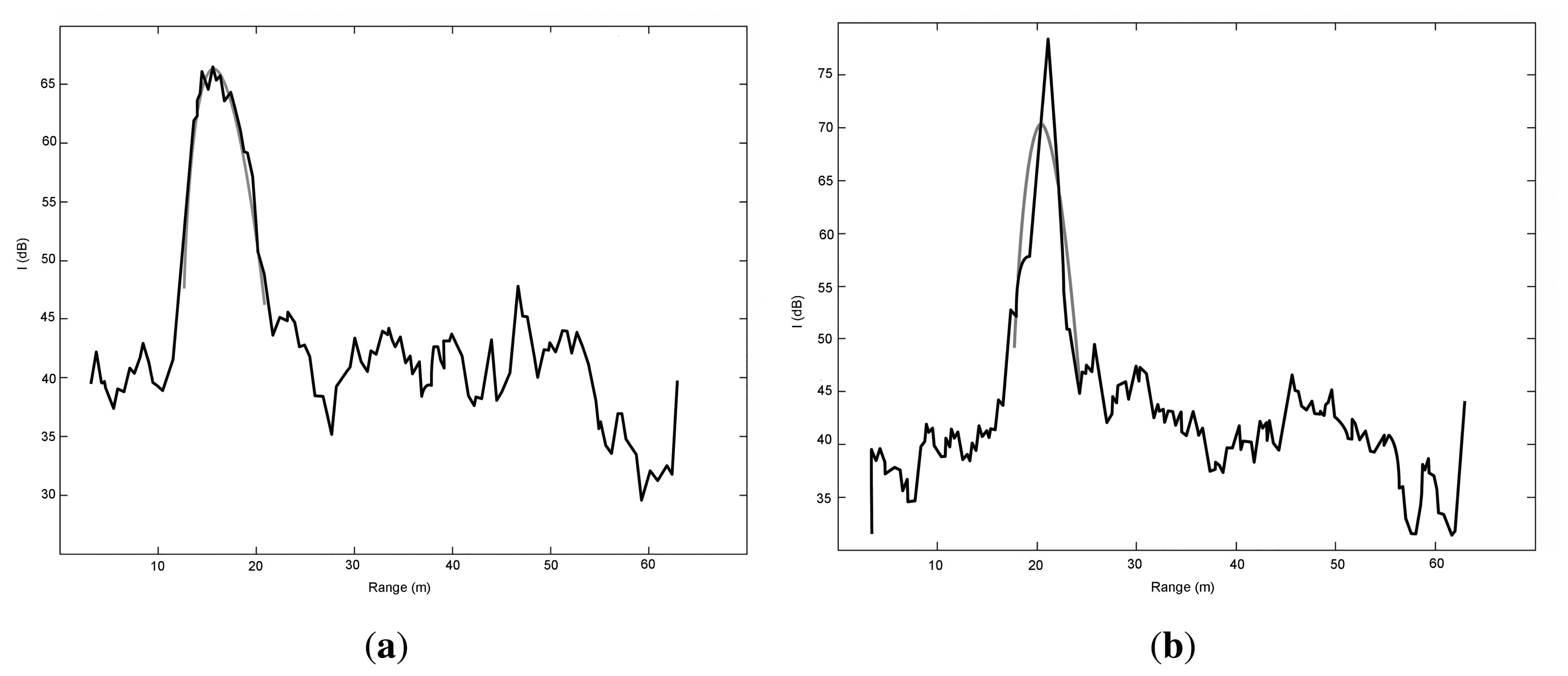

The idea is that a good fit (i.e., low SE) between the RCGD model and experimental data indicates high likelihood of ground, whereas a poor fit (i.e., high SE) indicates low confidence in ground. Two sample results are plotted in Figure 6. Specifically, in Figure 6a, the model matches the experimental data very well, thus attesting to the presence of ground. Conversely, Figure 6b shows an example where the SE is high; in this case a low confidence in ground echo is associated with the given observation. In practice, a threshold SEth is determined by inspection, and the observation j is labelled as ground if SE(j) does not exceed SEth.

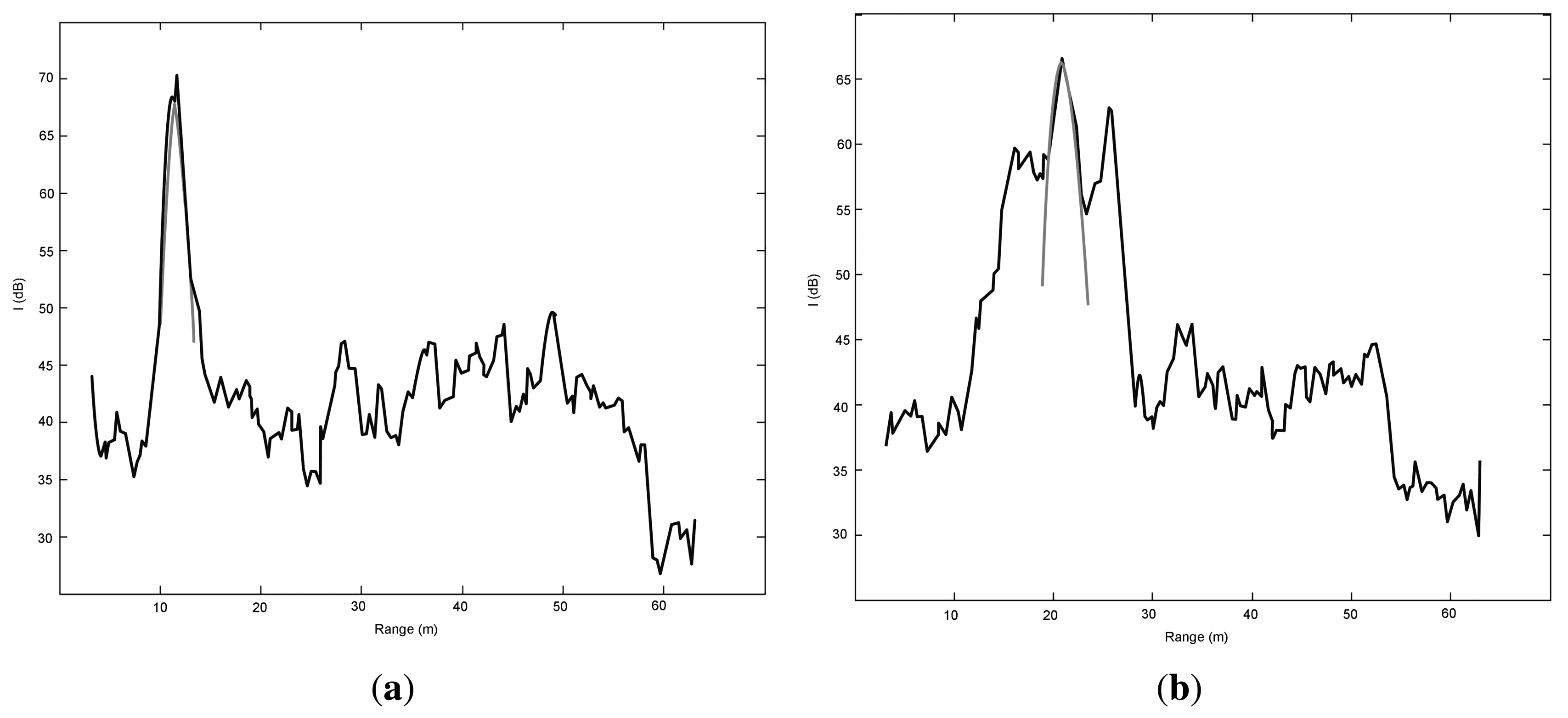

However, relying on the squared error only may lead to many false positives, i.e., obstacles that are erroneously labelled as ground. An example is shown in Figure 7a, where a ground return would seemingly be detected based on the value of SE, which is lower than the threshold and comparable to that of a ground observation, when there is actually an obstacle. Nevertheless, it can be observed that the data fit gets worse in the proximity of R0, due to the difference in the peak value between the radar observation and the ground-echo model. This suggests a possible solution: define ΔP as the absolute difference between the maximum intensity value of the radar observation, Imax, and the peak of the model. Non-ground is then flagged by the system when ΔP exceeds an experimentally defined threshold ΔPth.

It should also be noted that obstacles are generally characterised by higher reflection values than ground radar observations. Thus, a check can be performed on the peak of the RCGD ground-echo model obtained from a given radar observation. This radar observation will be labelled as non-ground if the model peak exceeds an experimental threshold Pth.

Finally, Figure 7b shows a second type of false positive, due to the simultaneous presence of ground and obstacle. The obstacle “attracts” the algorithm, which erroneously labels the observation as ground with an R0 equal to the range of the peak of the obstacle signal. An additional check is effective in this case. The range spread ΔR = (R2 − R1) of the RCGD ground-echo model is narrower than that of the experimental data. Therefore, an observation is denoted as non-ground if its range spread is lower than a threshold, ΔRth, defined by inspection as will be explained in Section 4.2.

In summary, Table 1 collects the classification rules chosen for the algorithm. Those rules represent our physical understanding of the problem and they are not unique. Indeed, other rules may be conceived and implemented to improve the performance of the system.

4.2. Setting the Thresholds for the Classification Rules

N observations were manually labelled by verifying against the corresponding frames provided by the camera. They were further divided into a training set of size M, which was used for setting the thresholds of the classification rules, and an independent group of size Q for evaluation, as will be explained later in Section 5. The squared error, range spread ΔR, and model-peak values of the ground-labelled returns in the training set were stored and the results are illustrated in Figure 8. These plots show the variability of these parameters. They also demonstrate that no ground observation was detected with a squared error higher than 400 dB², a range spread less than 6 m, or a model peak higher than 68 dB. These values are the chosen thresholds for the classification rules of Table 1. The ΔP threshold was manually tuned by observing the resulting classified obstacles, following a similar process of manual verification using the camera images as a reference. The threshold chosen was 3 dB.
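The four checks described in Sections 4.1 and 4.2 can be combined into a single decision function, sketched below. Table 1 is not reproduced here, so the way the rules are combined (all four must pass) and the handling of values exactly at a threshold are assumptions of this sketch; the threshold values are those quoted in the text, and the names `Thresholds` and `classify` are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Thresholds:
    se_db2: float = 400.0         # SEth: maximum squared error for ground
    range_spread_m: float = 6.0   # ΔRth: minimum range spread for ground
    model_peak_db: float = 68.0   # Pth: maximum model peak for ground
    peak_diff_db: float = 3.0     # ΔPth: maximum |Imax - model peak| for ground


def classify(se, range_spread, model_peak, observed_peak, th=Thresholds()):
    """Label one radar observation as 'ground' or 'non-ground'.

    An observation is kept as ground only if every check passes.
    """
    if se > th.se_db2:
        return "non-ground"   # poor fit to the ground-echo model
    if abs(observed_peak - model_peak) > th.peak_diff_db:
        return "non-ground"   # peak mismatch near R0 (Figure 7a case)
    if model_peak > th.model_peak_db:
        return "non-ground"   # return too strong to be ground
    if range_spread < th.range_spread_m:
        return "non-ground"   # footprint too narrow (Figure 7b case)
    return "ground"


print(classify(se=120.0, range_spread=9.0, model_peak=60.0, observed_peak=61.0))
print(classify(se=120.0, range_spread=9.0, model_peak=60.0, observed_peak=66.0))
```

Keeping the thresholds in one immutable object makes it straightforward to re-tune them for a different test environment without touching the decision logic.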

5. Results

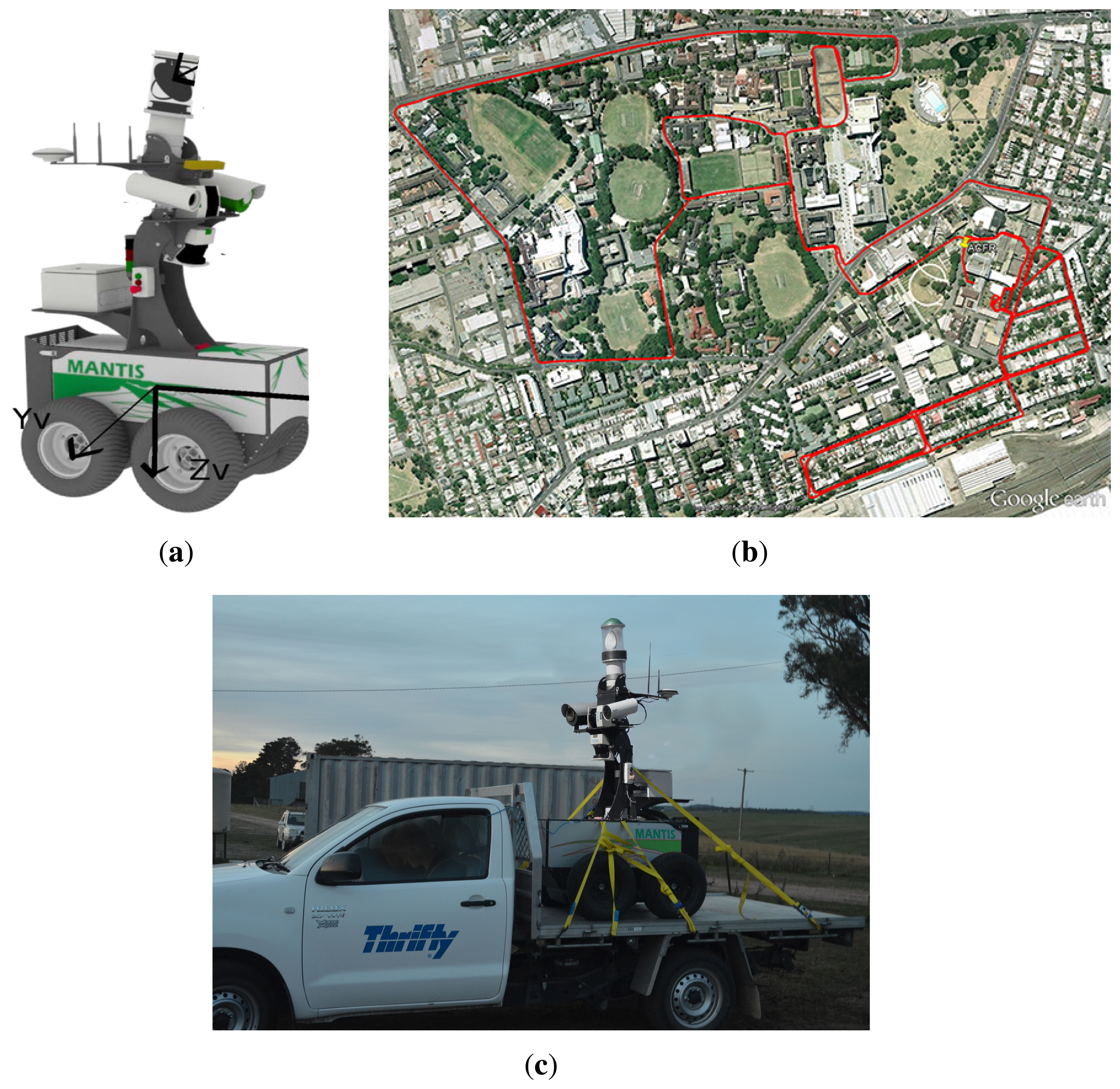

In this section, experimental results are presented to validate the RCGD algorithm. The system was tested in the field using Mantis (Figure 9a), a mobile platform fastened on a manned utility vehicle (Figure 9c). The HSS radar employed in this research is mounted on top of Mantis. The sensor, custom built at the Australian Centre for Field Robotics (ACFR), is a 95 GHz frequency-modulated continuous wave (FMCW) radar that reports the amplitude of echoes at ranges between 1 and 60 m. The wavelength is λ = 3.16 mm and the 3 dB beam-width is 3° in azimuth. The antenna scans across the angular range of 360°. The main sensor properties are collected in Table 2. The robot is also equipped with other sensors, including one 2D Sick laser range-scanner, a colour camera, and a GPS/INS unit that provides pose estimation of the vehicle during the experiments.

The test field is a path around the Redfern Campus (The University of Sydney, NSW, AU), and it is visible in Figure 9b. Along the path, the vehicle encountered various obstacles (cars, buildings, people), slopes and climbs. During the experiment, radar images were collected and stored for processing offline.

A typical result obtained from the RCGD approach is shown in Figure 10, referring to time t = 22.31 min, when the vehicle drove on a double-lane road in regular traffic. Blue dots are the given observations labelled as ground, whereas red dots denote radar-labelled obstacles. The road in front of and behind the vehicle, as well as the car on the right and the car and the wall on the left are properly detected.

Figure 11 shows another result obtained in a cluttered environment with many cars simultaneously present in the scene. The algorithm also succeeds in detecting ground areas between the several obstacles in the scene. In this scene, blue dots are the observations labelled as ground. Everything else not denoted by blue dots is an obstacle.

Figure 12 refers to two consecutive frames during the negotiation of a hump. The RCGD algorithm is not affected by time-synchronisation errors, as previously explained, and the ground areas are adequately detected even in the presence of pitch oscillations. It should be noted that in Figure 12b the rear ground section is not detected, due to the low grazing angle.

Overall, the data set used for the RCGD testing consisted of 87 labelled frames. Note that only radar observations that fall within the camera field of view, and can therefore be hand-labelled by visual inspection, were considered (i.e., about 8000 observations). A quantitative evaluation of the system performance was obtained by measuring detection rates, precision, recall and F1-score [23]. The results are collected in Table 3. The system achieved a true positive rate (the fraction of ground observations that were correctly classified as ground) of 86.0% and a false positive rate (the fraction of non-ground observations that were erroneously classified as ground by the system) of 3.3%. The overall accuracy, i.e., the fraction of correct detections with respect to the total number of classifications, was 90.1%.
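For reference, the metrics named above follow from the four confusion-matrix counts, with ground as the positive class. The sketch below uses the standard definitions; the function name `detection_metrics` is hypothetical and the counts in the usage line are made up for illustration, not the paper's data.

```python
def detection_metrics(tp, fp, tn, fn):
    """Compute standard binary-classification metrics from raw counts.

    tp: ground observations labelled as ground
    fp: non-ground observations labelled as ground
    tn: non-ground observations labelled as non-ground
    fn: ground observations labelled as non-ground
    """
    recall = tp / (tp + fn)                 # true positive rate
    false_positive_rate = fp / (fp + tn)
    precision = tp / (tp + fp)
    f1 = 2.0 * precision * recall / (precision + recall)
    accuracy = (tp + tn) / (tp + fp + tn + fn)
    return {
        "true_positive_rate": recall,
        "false_positive_rate": false_positive_rate,
        "precision": precision,
        "recall": recall,
        "f1": f1,
        "accuracy": accuracy,
    }


# Illustrative counts only.
for name, value in detection_metrics(tp=8, fp=1, tn=9, fn=2).items():
    print(f"{name}: {value:.3f}")
```

Note that the true positive rate and recall are the same quantity, so a table reporting both will list the same value twice.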

As a final remark, it should be recalled that, since the RCGD approach operates in the radar reference frame, it does not require any navigation system. In contrast, this was a requirement for the previous RGS method. This also made a direct comparison of the two methods impossible, due to the relatively low accuracy and large local rifts in the navigation solution caused by multi-path and satellite occlusions in the urban “canyons”.

6. Conclusions

This paper addressed the issue of radar-based perception for autonomous vehicles in urban environments. The use of radar for the perception of the surrounding environment is a recent research area that is closely tied to the available technologies. Specifically, the radar used in this work is an MMW radar, and this choice is mainly due to the good resolution required of the sensor. Moreover, although the algorithm specifically addresses urban environments, this research provides a general overview of the field and can inspire other works for different environments. A novel method to perform ground segmentation was proposed using a radar mounted on a ground vehicle. It is based on the development of a physical model of the ground echo that is compared against a given radar observation to assess the membership confidence to the general class of ground or non-ground. The RCGD method was validated through field experiments, showing good performance metrics. This technique can be successfully applied to enhance perception for autonomous vehicles in urban scenarios or, more generally, for ground-based MMW radar terrain sensing applications.

Future research will focus on the possible extension of the RCGD approach to other similar sensor modalities, the use of statistical learning approaches to automatically build the ground model, and obstacle classification using, for example, intensity and geometric features (e.g., wall-angle).

Acknowledgements

The authors are thankful to the Australian Department of Education, Employment and Workplace Relations for supporting the project through the 2010 Endeavour Research Fellowship 1745_2010. The financial support of the ERA-NET ICT-AGRI through the grant Ambient Awareness for Autonomous Agricultural Vehicles (QUAD-AV) is also gratefully acknowledged. The authors would also like to thank Luigi Paiano for his work towards the Master's Degree thesis.

Author Contributions

All authors have made significant contributions to the conception and design of the research, data analysis and interpretation of results. David Johnson and James Underwood focused on the experimental activity and data analysis (i.e., set-up of the system, design and realisation of experiments). Giulio Reina mainly dealt with data analysis and interpretation, and writing of the manuscript.

Conflicts of Interest

The authors declare no conflict of interest.

Appendix

If the intensity value (in dB) at R0 is known (PrdB(R0)), it is possible, from Equation (5), to write:
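A sketch of this step is given below. It assumes the dB model has the form $P_{r\,\mathrm{dB}}(R) = K + 2G_{\mathrm{dB}}(R) + \sigma^0_{\mathrm{dB}} + 10\log_{10}(\sec\theta_g) - 30\log_{10}R$ and that the gain is normalised so that $G_{\mathrm{dB}}(R_0) = 0$ on bore-sight; both are assumptions of this sketch rather than statements from the paper. Evaluating the model at $R = R_0$ and solving for $K$ gives:

```latex
\begin{equation*}
K = P_{r\,\mathrm{dB}}(R_0) - \sigma^0_{\mathrm{dB}}
    - 10\log_{10}(\sec\theta_g) + 30\log_{10} R_0
\end{equation*}
```

Under these assumptions, $K$ is fully determined by the observed intensity at $R_0$ and by the candidate pair $(R_0, \theta_g)$.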

It should be noted that, at the frequency of the radar used in this research (i.e., 95 GHz), the reflectivity can also be assumed to be a function of the grazing angle θg

Please refer to [24] for more information on the value of the parameters involved.

References

- Pascoal, J.; Marques, L.; de Almeida, A.T. Assessment of laser range finders in risky environments. Proceedings of the IEEE/RSJ International Conference on Intelligent Robots and Systems, 2008 (IROS 2008), Nice, France, 22–26 September 2008; pp. 3533–3538.

- Peynot, T.; Underwood, J.; Scheding, S. Towards reliable perception for unmanned ground vehicles in challenging conditions. Proceedings of the IEEE/RSJ International Conference on Intelligent Robots and Systems, 2009 (IROS 2009), St. Louis, MO, USA, 10–15 October 2009.

- Reina, G.; Milella, A.; Underwood, J. Self-learning classification of radar features for scene understanding. Robot. Auton. Syst. 2012, 60, 1377–1388.

- Currie, N.C.; Hayes, R.D.; Trebits, R.N. Millimeter-Wave Radar Clutter; Artech House: Norwood, MA, USA, 1992.

- Lakshmanan, V.; Zhang, J.; Hondl, K.; Langston, C. A Statistical Approach to Mitigating Persistent Clutter in Radar Reflectivity Data. Sel. Top. Appl. Earth Obs. Remote Sens. IEEE J. 2012, 5, 652–662.

- Papadakis, P. Terrain traversability analysis methods for unmanned ground vehicles: A survey. Eng. Appl. Artif. Intell. 2013, 26, 1373–1385.

- Lalonde, J.; Vandapel, N.; Huber, D.; Hebert, M. Natural terrain classification using three-dimensional ladar data for ground robot mobility. J. Field Robot. 2006, 23, 839–861.

- Cherubini, A.; Spindler, F.; Chaumette, F. Autonomous Visual Navigation and Laser-Based Moving Obstacle Avoidance. IEEE Trans. Intell. Transp. Syst. 2012, 15, 2101–2110.

- Reina, G.; Ishigami, G.; Nagatani, K.; Yoshida, K. Vision-based estimation of slip angle for mobile robots and planetary rovers. Proceedings of IEEE International Conference on Robotics and Automation, Pasadena, CA, USA, 19–23 May 2008; pp. 486–491.

- Reina, G.; Milella, A. Towards Autonomous Agriculture: Automatic Ground Detection Using Trinocular Stereovision. Sensors 2012, 12, 12405–12423.

- Hadsell, R.; Sermanet, P.; Ben, J.; Erkan, A.; Scoffier, M.; Kavukcuoglu, K.; Muller, U.; LeCun, Y. Learning long-range vision for autonomous off-road driving. J. Field Robot. 2009, 26, 120–144.

- Brooker, G.; Hennesy, R.; Lobsey, C.; Bishop, M.; Widzyk-Capehart, E. Seeing through dust and water vapor: Millimeter wave radar sensors for mining applications. J. Field Robot. 2007, 24, 527–557.

- Peynot, T.; Scheding, S.; Terho, S. The Marulan Data Sets: Multi-sensor Perception in a Natural Environment with Challenging Conditions. Int. J. Robot. Res. 2010, 29, 1602–1607.

- Foessel-Bunting, A.; Chheda, S.; Apostolopoulos, D. Short-range millimeter-wave radar perception in a polar environment. Proceedings of the 5th International Conference on Field and Service Robotics, Port Douglas, Australia, 29–31 July 1999; pp. 133–138.

- Durrant-Whyte, H.F. An autonomous guided vehicle for cargo handling applications. Int. J. Robot. Res. 2002, 15, 407–441.

- Mullane, J.; Adams, D.M.; Wijesoma, W.S. Robotic Mapping Using Measurement Likelihood Filtering. Int. J. Robot. Res. 2009, 28, 172–190.

- Milella, A.; Reina, G.; Underwood, J. A Self-learning Framework for Statistical Ground Classification using Radar and Monocular Vision. J. Field Robot. 2015, 32, 20–41.

- Kaliyaperumal, K.; Lakshmanan, S.; Kluge, K. An Algorithm for Detecting Roads and Obstacles in Radar Images. IEEE Trans. Veh. Technol. 2001, 50, 170–182.

- Alessandretti, G.; Broggi, A.; Cerri, P. Vehicle and Guard Rail Detection Using Radar and Vision Data Fusion. IEEE Trans. Intell. Transp. Syst. 2007, 8, 95–105.

- Wu, S.; Decker, S.; Chang, P.; Camus, T.; Eledath, J. Collision Sensing by Stereo Vision and Radar Sensor Fusion. IEEE Trans. Intell. Transp. Syst. 2009, 10, 606–614.

- Reina, G.; Underwood, J.; Brooker, G.; Durrant-Whyte, H. Radar-based Perception for Autonomous Outdoor Vehicles. J. Field Robot. 2011, 28, 894–913.

- Brooker, G. Introduction to Sensors; SciTech Publishing: New Delhi, India, 2005.

- Hastie, T.; Tibshirani, R.; Friedman, J. The Elements of Statistical Learning; Springer-Verlag: New York, NY, USA, 2003.

- Johnson, D.; Calleija, M.; Brooker, G.; Nettleton, E. Development of a Dual-Mirror-Scan Elevation-Monopulse Antenna System. Proceedings of the 2011 European Radar Conference (EuRAD), Manchester, UK, 12–14 October 2011.

Parameters of the regression model and their thresholds, by class:

| Class | SE | ΔP | P | ΔR |
|---|---|---|---|---|
| Threshold value | SEth = 400 dB² | ΔPth = 3 dB | Pth = 68 dB | ΔRth = 6 m |
| Ground (all conditions must be verified) | < SEth | < ΔPth | < Pth | > ΔRth |
| Non-ground (one condition must be verified) | ≥ SEth | ≥ ΔPth | ≥ Pth | ≤ ΔRth |
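
The decision rule in the table above can be sketched in a few lines of Python. This is only an illustration of the thresholding logic, not the authors' implementation: the `EchoFeatures` container, its field names, and the `is_ground` function are hypothetical, and the sketch assumes the four regression-model parameters (SE, ΔP, P, ΔR) have already been estimated for a given radar observation.

```python
from dataclasses import dataclass

# Threshold values as listed in the table above.
SE_TH = 400.0       # SEth, dB^2
DELTA_P_TH = 3.0    # ΔPth, dB
P_TH = 68.0         # Pth, dB
DELTA_R_TH = 6.0    # ΔRth, m


@dataclass
class EchoFeatures:
    """Hypothetical container for the regression-model parameters of one observation."""
    se: float        # SE, dB^2
    delta_p: float   # ΔP, dB
    p: float         # P, dB
    delta_r: float   # ΔR, m


def is_ground(f: EchoFeatures) -> bool:
    """Label as ground only if all four conditions hold.

    Failing any single condition makes the observation non-ground,
    which matches the "one condition must be verified" row.
    """
    return (
        f.se < SE_TH
        and f.delta_p < DELTA_P_TH
        and f.p < P_TH
        and f.delta_r > DELTA_R_TH
    )


# Example with made-up feature values (not taken from the experiments).
candidate = EchoFeatures(se=120.0, delta_p=1.5, p=61.0, delta_r=9.0)
label = "ground" if is_ground(candidate) else "non-ground"
```

Because the non-ground conditions are the exact complements of the ground conditions, a single conjunction is enough to cover both rows of the table.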

| Sensor | Model | Max. Range | Raw Range Resolution | Horizontal FOV | Instantaneous FOV | Angle Scan Rate | Angular Resolution |
|---|---|---|---|---|---|---|---|
| Radar | ACFR custom-built | 60 m | 0.15 m | 360° | 3.0° × 3.0° | ≃1.75 rps | 0.77° |

| Metric | RCGD |
|---|---|
| True positive rate | 86.0% |
| False positive rate | 3.3% |
| True negative rate | 96.7% |
| Precision | 97.1% |
| Accuracy | 90.1% |
| F1-score | 90.7% |
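
For readers who want to compute the same kind of figures on their own labelled data, the following sketch uses the standard confusion-matrix definitions of these metrics. It assumes, without confirmation from the source, that the table follows those standard definitions; the function name `classification_metrics` and the example counts are hypothetical and are not the counts behind the reported percentages.

```python
def classification_metrics(tp: int, fp: int, tn: int, fn: int) -> dict:
    """Standard binary-classification metrics from confusion-matrix counts.

    tp/fn: ground samples labelled ground / non-ground.
    tn/fp: non-ground samples labelled non-ground / ground.
    """
    tpr = tp / (tp + fn)                 # true positive rate (recall)
    fpr = fp / (fp + tn)                 # false positive rate
    tnr = tn / (tn + fp)                 # true negative rate, equals 1 - fpr
    precision = tp / (tp + fp)
    accuracy = (tp + tn) / (tp + fp + tn + fn)
    f1 = 2 * precision * tpr / (precision + tpr)
    return {
        "true_positive_rate": tpr,
        "false_positive_rate": fpr,
        "true_negative_rate": tnr,
        "precision": precision,
        "accuracy": accuracy,
        "f1_score": f1,
    }


# Made-up counts for illustration only.
metrics = classification_metrics(tp=80, fp=10, tn=90, fn=20)
for name, value in metrics.items():
    print(f"{name}: {100 * value:.1f}%")
```

Note that the true negative rate and the false positive rate always sum to one, as they do in the table (96.7% and 3.3%).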

© 2015 by the authors; licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution license (http://creativecommons.org/licenses/by/4.0/).

Reina, G.; Johnson, D.; Underwood, J. Radar Sensing for Intelligent Vehicles in Urban Environments. Sensors 2015, 15, 14661-14678. https://doi.org/10.3390/s150614661