Unscented Kalman Filtering for Single Camera Based Motion and Shape Estimation

Abstract

: Accurate estimation of the motion and shape of a moving object is a challenging task due to great variety of noises present from sources such as electronic components and the influence of the external environment, etc. To alleviate the noise, the filtering/estimation approach can be used to reduce it in streaming video to obtain better estimation accuracy in feature points on the moving objects. To deal with the filtering problem in the appropriate nonlinear system, the extended Kalman filter (EKF), which neglects higher-order derivatives in the linearization process, has been very popular. The unscented Kalman filter (UKF), which uses a deterministic sampling approach to capture the mean and covariance estimates with a minimal set of sample points, is able to achieve at least the second order accuracy without Jacobians’ computation involved. In this paper, the UKF is applied to the rigid body motion and shape dynamics to estimate feature points on moving objects. The performance evaluation is carried out through the numerical study. The results show that UKF demonstrates substantial improvement in accuracy estimation for implementing the estimation of motion and planar surface parameters of a single camera.1. Introduction

The problem of estimating positions and velocities of moving features in space leads to the problem of estimating motion and shape parameters of moving features from their corresponding image data observed over time. This is important in various engineering applications, such as robotics and machine vision [1–6]. A dynamical systems approach to machine vision is introduced in [1], in which the problem of motion and shape parameter estimation is described as an inverse problem associated with a pair of coupled Riccati partial differential equations. Identification of the motion and shape parameters using the estimation technique was discussed in [5,6]. A CCD camera with a laser range finder mounted on a mobile robot for better motion and shape parameters identification can be seen in [5]. A sliding mode approach was proposed to estimate the motion of a moving body with the aid of a CCD camera [6]. For performance improvement, various estimation techniques have been adopted to reduce the noise. The algebraic methods yielded feasible results, but they were computationally unrealistic. The Kalman filter method is used in the estimation of the motion parameters to reduce the effect of the measurement errors which are inevitable in the real world. The Kalman filter (KF) method [7] for estimation of the 3D camera motion in imagine sequences for the applications to the video coding system is proposed by Kim et al. [8]. Kano et al. [9] performed a numerical study and compared an extended Kalman filter (EKF) based recursive algorithm with a non-recursive algebraic method for estimating motion and planar surface parameters.

The KF is theoretically attractive because it has been shown to be the one that minimizes the variance of the estimation mean square error (MSE). The nonlinear filter is used for nonlinear dynamics and/or nonlinear measurement relationships. The problem of estimating the state variables of the nonlinear systems may be solved using the nonlinear version of the Kalman filter. The most popular form is the EKF. The fact that EKF highly depends on a predefined dynamics model forms a major drawback. To achieve good filtering results, the designers are required to have the complete a priori knowledge on both the dynamic process and measurement models, in addition to the assumption that both the process and measurement are corrupted by zero-mean Gaussian white sequences. If the input data does not reflect the real model, the estimates may not be reliable.

Similar to the EKF, the unscented Kalman filter (UKF) [10–17] focuses on approximating the prediction probability characteristics and use the standard minimum mean square error estimator. The UKF has been developed in the context of state estimation of dynamic systems as a nonlinear distribution (or densities in the continuous case) approximation method. The UKF is superior to EKF not only in theory but also in many practical situations. The algorithm performs the prediction of the statistics with a set of carefully chosen sample points for capturing mean and covariance of the system. These sample points are sometimes referred to as the sigma points employed to propagate the probability of state distribution. The basic premise behind the UKF is it is easier to approximate a Gaussian distribution than it is to approximate an arbitrary nonlinear function. Instead of linearizing using Jacobian matrices as in the EKF and achieving first-order accuracy, the UKF can capture the states up to at least second order by using a deterministic sampling approach to capture the mean and covariance estimates with a minimal set of sample points. The deterministic sampling based UKF with applications on estimation of rigid body motion and shape dynamics are presented to estimate the feature points on the moving object. Results obtained shows that UKF is able to provide more accurate and reliable estimation accuracy of the object. Investigation of the UKF approach to the motion and shape estimation problem has not been seen in the literature.

This paper is organized as follows. In Section 2, preliminary background on rigid body motion is reviewed. The shape dynamics and optical flow dynamics are discussed in Sections 3 and 4, respectively. The unscented Kalman filter is introduced in Section 5. Results and Discussion are given in Section 6. Conclusions are given in Section 7.

2. Rigid Body Motion

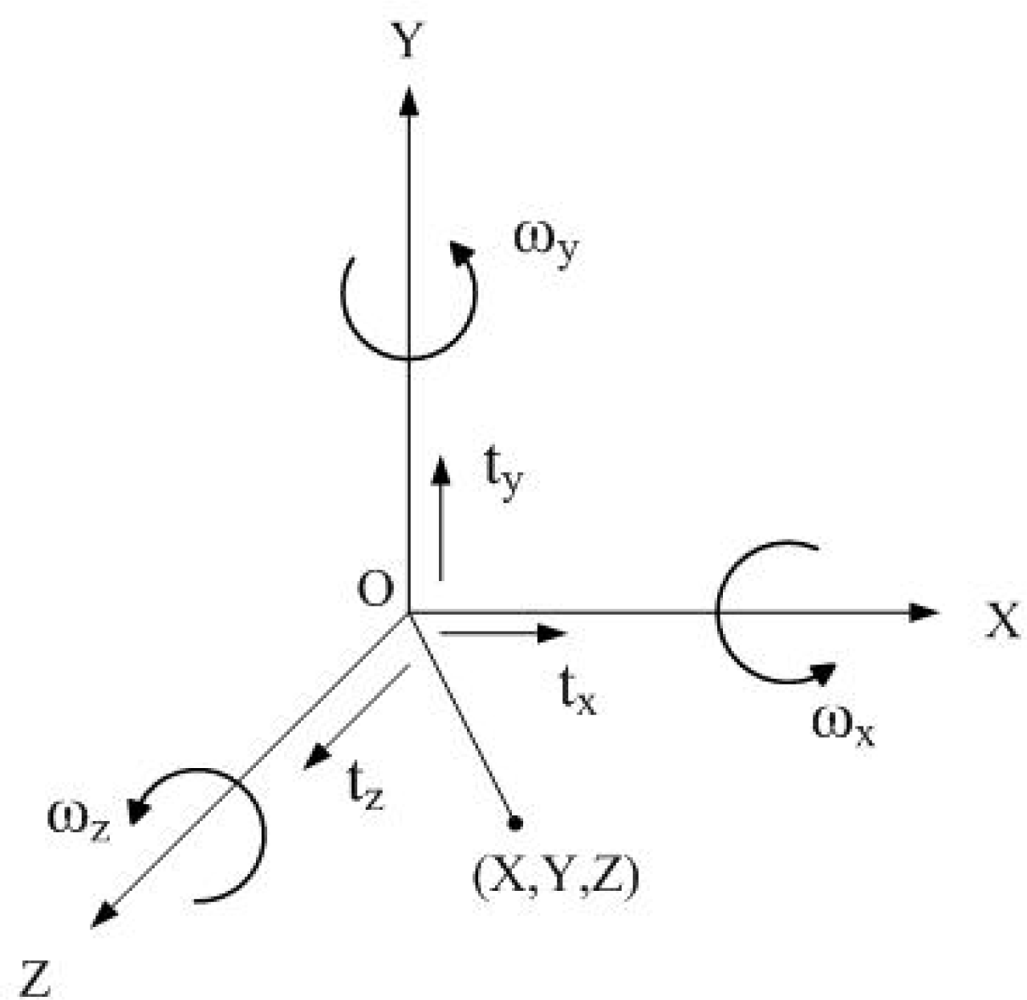

The mathematical description of a 3D point undergoing a rigid transformation about the camera axes is given as follows. Let ωx, ωy and ωz represent the angle of rotation about the X, Y and Z axes, respectively. Figure 1 illustrates the world coordinate system. An arbitrary rotation R can be represented by successive rotations around the X, Y and Z axes, respectively, as:

Assuming infinitesimal rotations, the zeroth order terms of the Taylor series expansion of the trigonometric functions and cos provide the following approximations:

Using the approximations in Equation (2), R can be approximated in the skew-symmetric matrix form in terms of angular velocity:

Let the vector T = [tx ty tz]T represent the translational velocity, where the elemental components tx, ty, and tz represent the translational velocities in the X, Y and Z directions, respectively. The velocity vector V = [Ẋ Ẏ Ż]T of a point in the world coordinates P = [X Y Z]T with respect to camera coordinates undergoing rigid transformation is represented as:

Consider the dynamical system Equation (5) where it is assumed that [ω1 ω2 ω3]T ≠ 0. If:

3. Shape Dynamics

The motion field describing the motion of individual points on the surface might undergo a change in shape. The dynamical system which describes the changing shape of the surface is called the shape dynamics. Let (X, Y, Z) be the world coordinate frame wherein we have a surface defined by:

Assume that S is smooth enough so that its derivatives with respect to each of the variables are defined everywhere. The motion field is assumed to be described by:

How the surface as in Equation (10) moves as points on the surface move following the motion field as in Equation (11) can be described by the quasi-linear partial differential equation called the “shape dynamics”:

Consider the initial condition:

The pair of Equations (12) and (13) constitutes an example of a Riccati partial differential equation. The surface defined by Equation (10) is assumed to be a plane described by:

Using Equation (15), Equation (5) can be written as:

The shape dynamics can be obtained by differentiating Equation (17) with respect to time t:

4. Optical Flow Dynamics

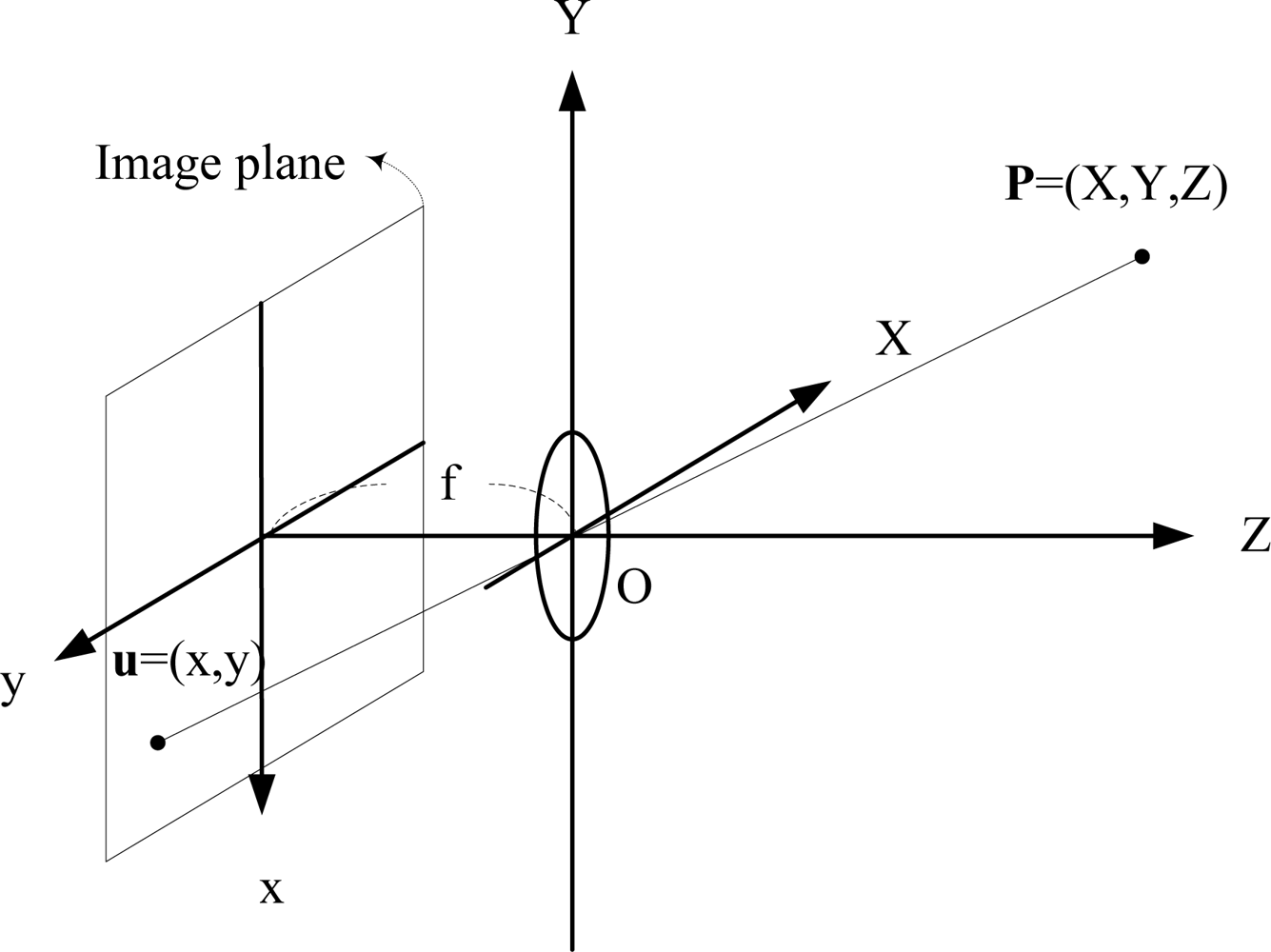

As shown in Figure 2, the 3D vector P = (X, Y, Z) is assumed to be observed via perspective projection onto a plane parallel to the (x, y) axes and located at Z = 1 by defining the relationship between an image point u = (x, y) and a scene point (X, Y, Z) given by:

The focal length f = 1 is usually used without loss of generality. With the relation given by Equation (5), differentiating Equation (20) leads to the relations:

Equations (24) and (25) denote the optical flow equations. Even when the motion field is time invariant, the parameters of the optical flow equations could be time-varying due to the fact that the shape parameters are changing in time as a result of motion field described by Equation (11). As t → ∞, we have:

In particular, if b ∈ ImΩ, the shape parameters p(t), q(t) and r(t) are periodic functions satisfying the Riccati Equation [2,9]:

Once the modeling of shape dynamics and optical flow dynamics is accomplished, the estimation algorithm can be employed for implementing the estimation of motion and planar surface parameters. In addition to the algebraic methods, the recursive estimation algorithms are applicable. The recursive algorithm presented by Kano et al. [9] is an EKF-based algorithm. The motivation of the paper is to carry out the UKF-based approach, which has not been seen in the literature for the motion and shape estimation problem. A brief introduction of the UKF is provided in Section 5.

5. The Unscented Kalman Filter

The UKF is a nonlinear filter which deals with the case governed by the nonlinear stochastic difference equations:

5.1. The Unscented Transformation

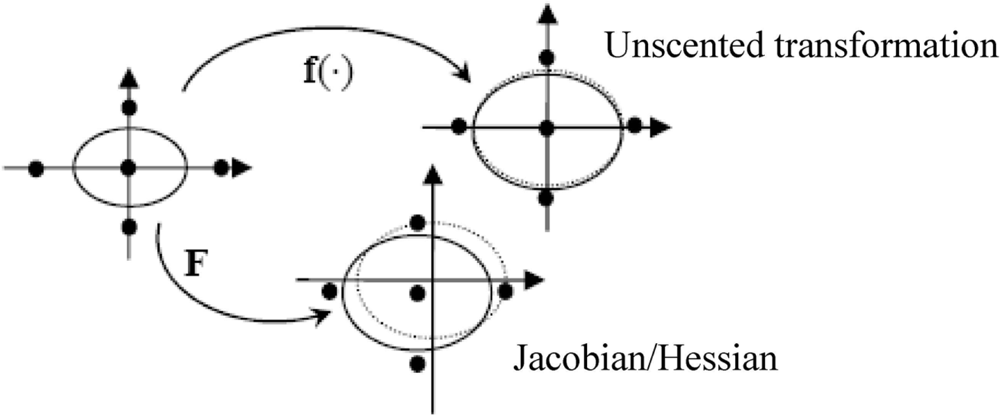

The first step in the UKF is to sample the prior state distribution, i.e., generate the sigma points through the unscented transformation (UT). Figure 3 illustrates the true means and covariances as compared to those obtained by the mapping of the UKF versus that of the EKF. The dot-line ellipse represents the true covariance. The UKF is implemented through the transformation of the nonlinear function f(·), shown as the solid-line ellipse on the top portion of the figure; the EKF is accomplished through the Jacobian F = ∂f/∂x, shown as the solid-line ellipse at the bottom portion of the figure. The UKF approach estimates are expected to be closer to the true values than the EKF approach.

Several UT’s are available. One of the popular approaches is the scaled unscented transformation [15–17]. Consider an n dimensional random variable x, having the mean x̂ and covariance P, and suppose that it propagates through an arbitrary nonlinear function f. The unscented transform creates 2n +1 sigma vectors X (a capital letter) and weighted points, given by:

The sigma vectors are propagated through the nonlinear function to yield a set of transformed sigma points:

The mean and covariance of yi are approximated by a weighted average mean and covariance of the transformed sigma points as follows:

5.2. The Unscented Kalman Filter

A high level of operation of the unscented Kalman filter is shown in Figure 4. To look at the detailed algorithm of the UKF, firstly, the set of sigma points are created by Equation (30). After the sigma points are generated, the time update (prediction step) of the UKF involves the following steps:

The measurement update (correction) step of the UKF involves the following steps:

The samples are propagated through true nonlinear equations, which can capture the states up to at least second order.

6. Results and Discussion

Simulation experiments have been carried out to evaluate the performance of the UKF approach in comparison with the conventional EKF approach for estimating motion and shape parameters. Computer code was developed using MATLAB. It is assumed that, at each time instant k, a set of three feature points (xi,k, yi,k), i = 1, 2, 3 are observed. The state vector xk is given by:

The associated state equation in discretized form is given by:

The process noise covariance matrix is given by Q = 10−10 × I14 and the parameters utilized in the UKF are given as follows: α = 1e − 4, β = 2, κ = 0. The sigma points capture the same mean and covariance irrespective of the choice of matrix square root which is used. The numerical efficient and stable method such as the Cholesky factorization has been used in obtaining the sigma points.

Experiment was conducted on a simulated three feature points: (2.5,2.5), (1.5,0.5) and (1.0,1.5) locations. The motion and shape parameters Ω is set as (ω1, ω2, ω3) = (−0.2, 0.1, −0.1), with initial values for shape parameters are (p0, q0, r0) = (0.1, 0.1, 2.0). For comparative purposes, the following two cases are considered [2].

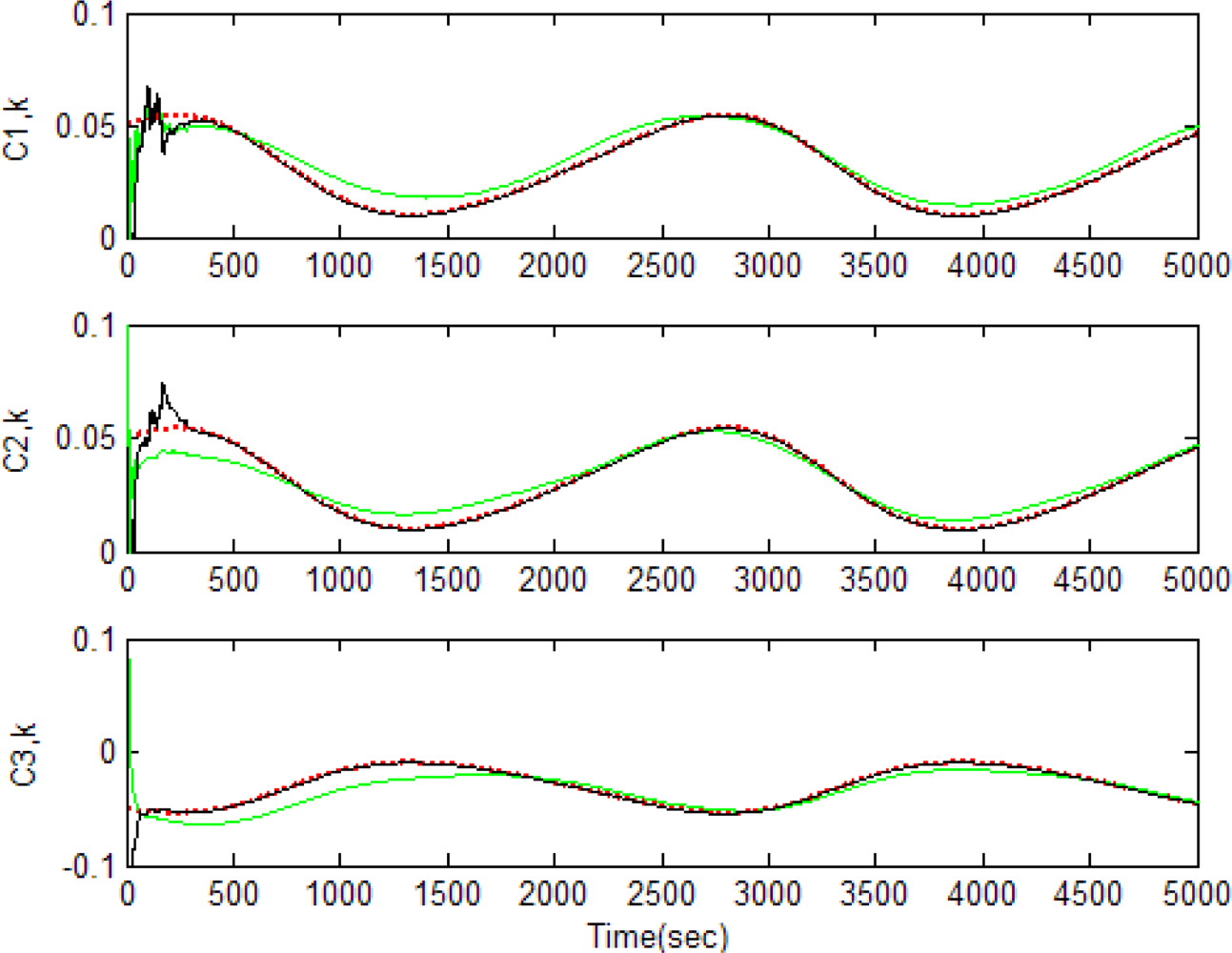

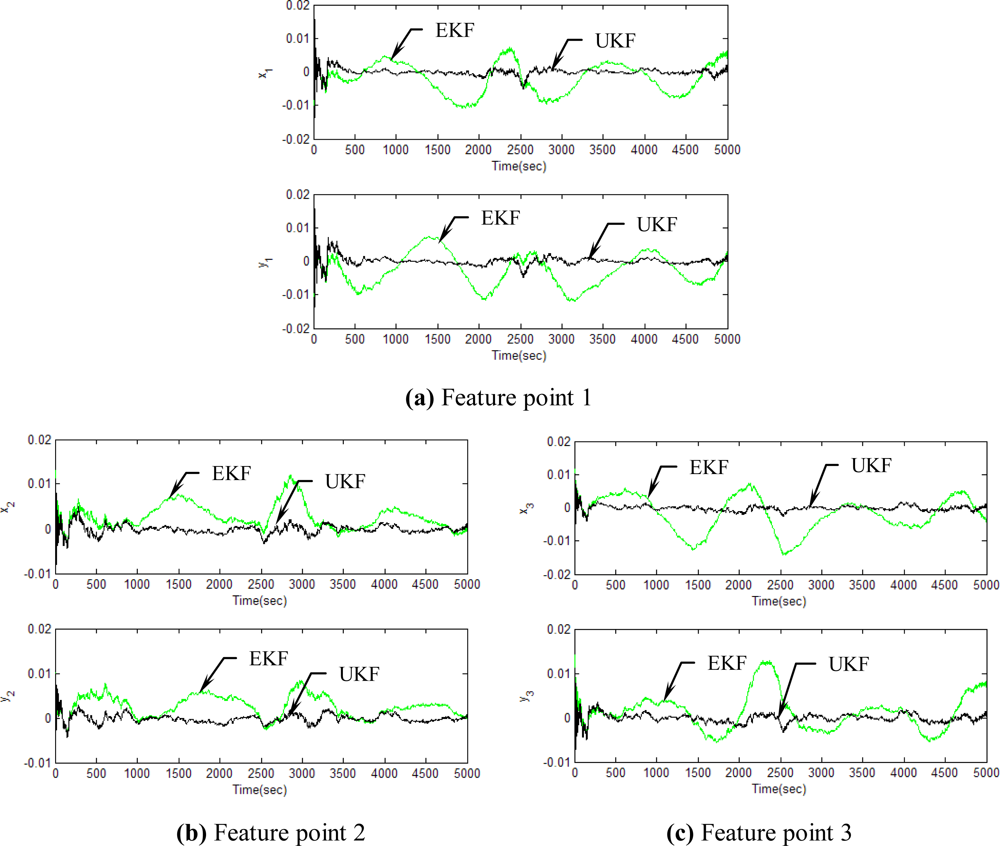

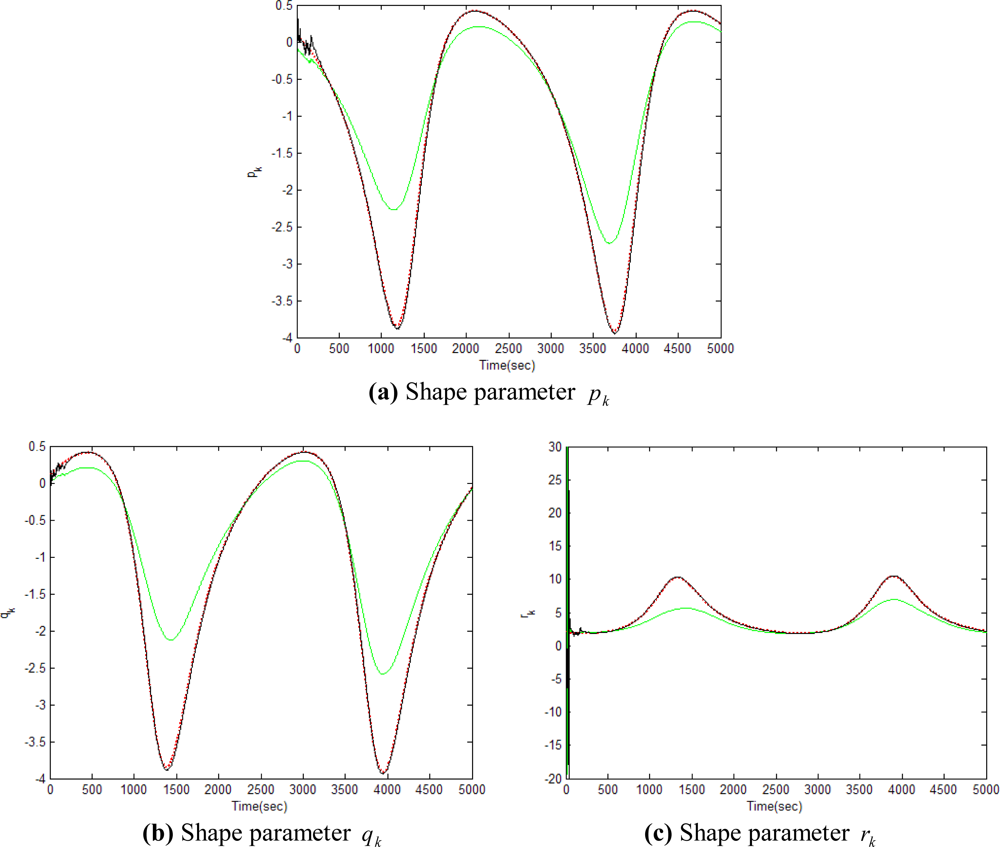

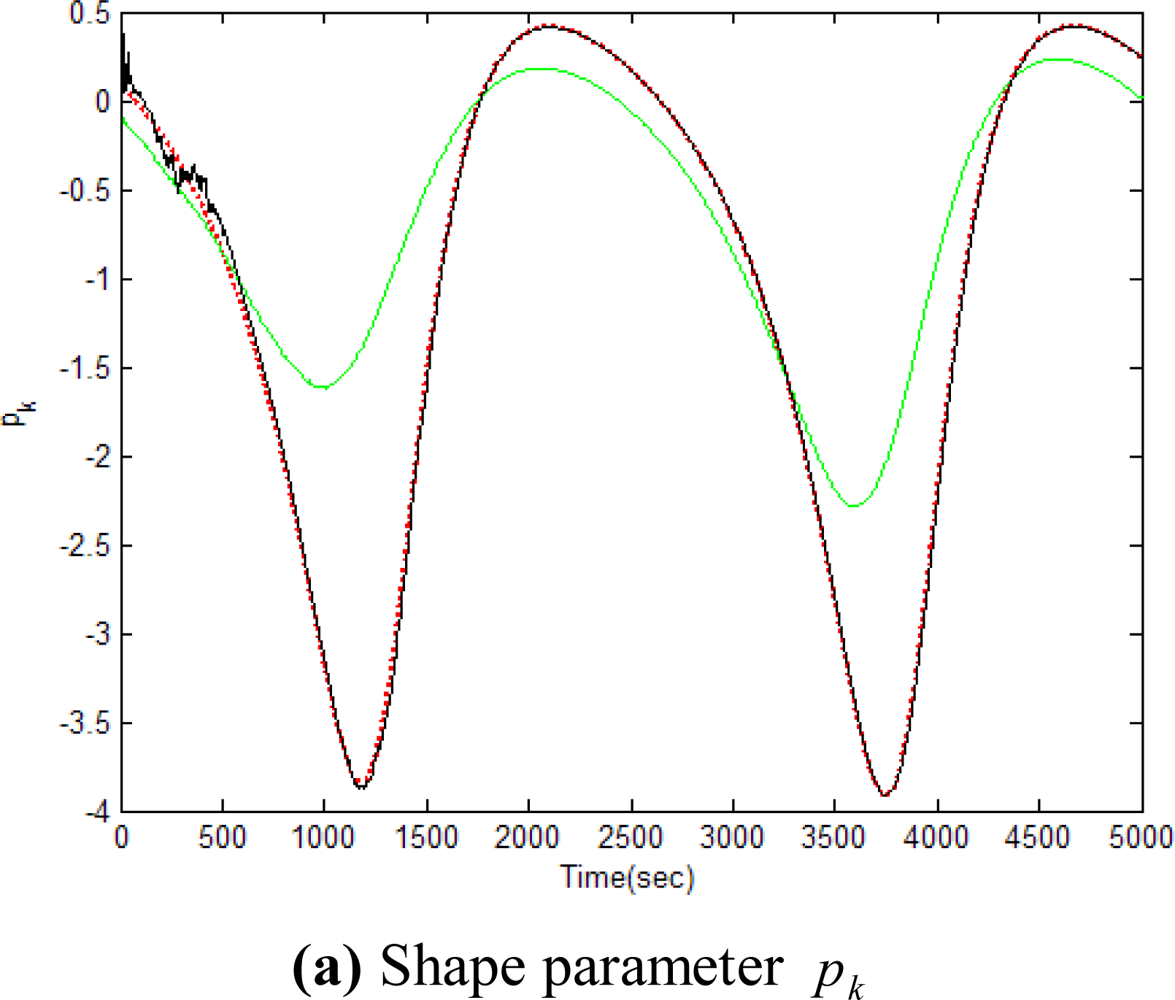

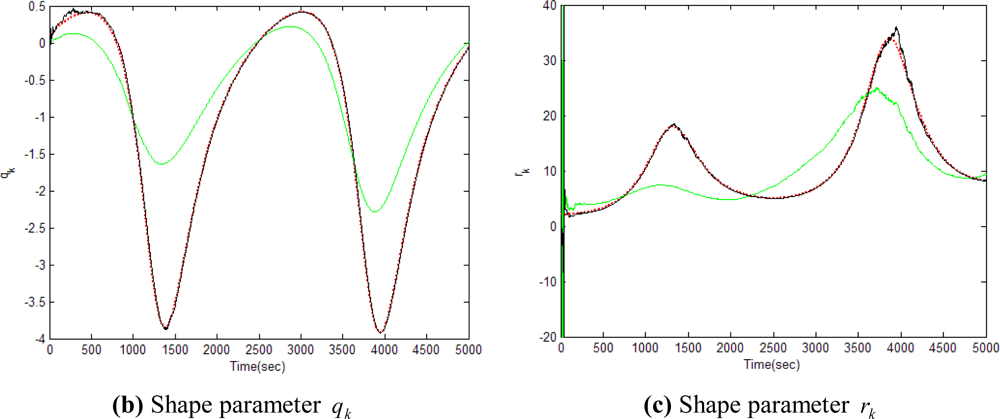

Figures 5–12 show the results for the numerical experiments. Figure 5 shows the sample trajectories for the three feature points on image plane for the case b ∈ ImΩ. It can be seen that the motions are circular and periodic around the axis of rotation. Figures 6–8 are the results for the case when b ∈ ImΩ. Estimation results for parameters c1, c2 and c3 for the three feature points are given in Figure 6. Three curves, shown in red, green, and black colors, denote the true, EKF-based, and UKF-based estimated values, respectively. For better clarity, the estimation errors for the three feature points are shown in Figure 7, which illustrates the advantage of UKF. Figure 8 gives the estimation accuracy for the shape parameters pk, qk and rk. The EKF is not working very well whereas the UKF demonstrates its good capability for capturing the true trajectories. Comparison of RMSE (in the unit of mm) of the three feature points on the image plane using the EKF and UKF is summarized in Table 1. Comparison of RMSE (in the unit of mm) for the feature points of the scene point using the EKF and UKF is summarized in Table 2.

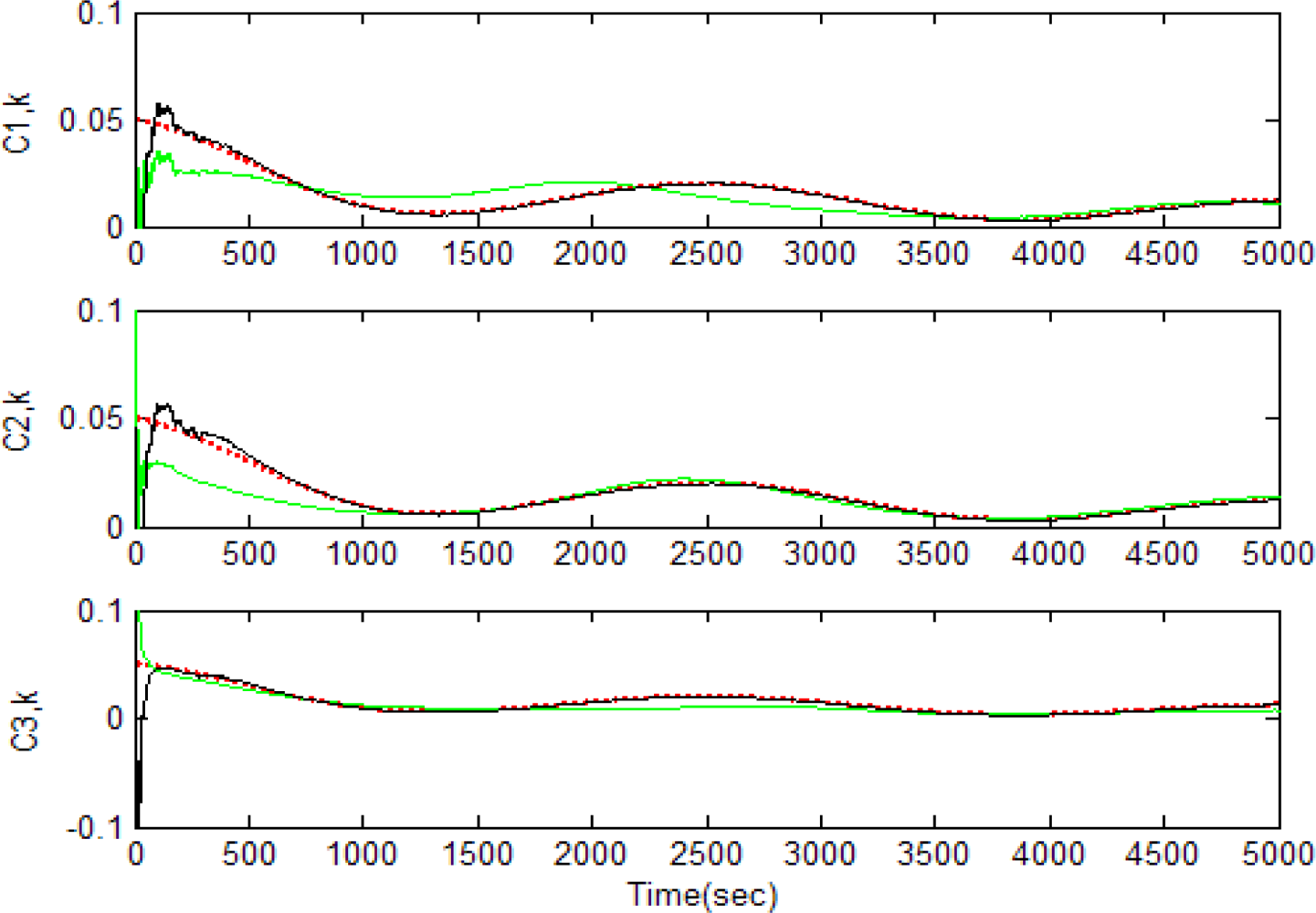

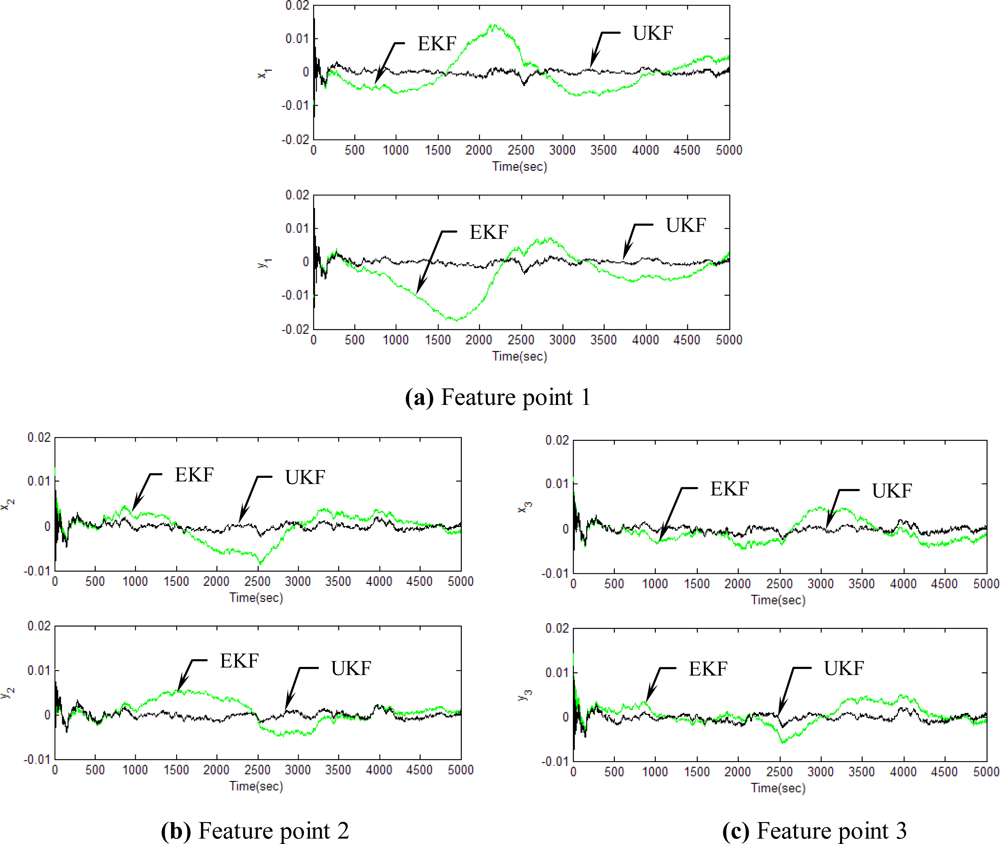

Figure 9 shows the sample trajectories for the three feature points on image plane for the case b ∉ ImΩ. The performance comparison for EKF and UKF in the case of b ∉ ImΩ is basically similar to the results obtained for b ∈ ImΩ. The motions are receding to infinitely linearly in time. Figures 10–12 provide the results for the case when b ∉ ImΩ. Figure 10 shows the estimation results for parameters c1, c2 and c3and for the three feature points; Figure 11 provides the estimation errors for the three feature points; and Figure 12 gives the estimation accuracy for the shape parameters pk, qk and rk. Comparison of RMSE (in the unit of mm) of the three feature points on the image plane using the EKF and UKF is summarized in Table 3. Comparison of RMSE (in the unit of mm) for the feature points of the scene point using the EKF and UKF is summarized in Table 4.

In general, utilization of an adequate nonlinear model with suitable filter makes it possible to achieve improved estimation performance. For the problem of single camera based motion and shape estimation, the UKF-based recursive estimation algorithm is a good alternative adopted for implementing the estimation of motion and planar surface parameters. The states and the dynamic process are related nonlinearly. The nonlinearity/uncertainty in the state estimate is suitably taken care of in the UKF, which has therefore demonstrated substantial state estimation accuracy improvement as compared to the EKF based approach. When compared with EKF, the UKF method exhibits superior performance since the series approximations in the EKF algorithm can lead to poor representations of the nonlinear functions and probability distributions of interest.

7. Conclusions

Various engineering applications, such as robotics and machine vision, require the estimation of positions and velocities of moving features in space, leading to the problem of estimating motion and shape parameters of moving features from their corresponding image data observed over time. To alleviate the noise and obtain better estimation accuracy in feature points on the moving objects, the filtering/estimation approach can be used. A variety of estimation techniques have been adopted to reduce the noise. One of the most important ones is the extended Kalman filter (EKF).

As compared to the EKF’s linear approximation, the unscented transformation is accurate to the second order for any nonlinear function. In light of unscented Kalman filter’s superiority over the extended Kalman filter, this paper has presented a deterministic sampling of UKF approach for estimating motion and shape parameters of feature points on the moving object. Such a motion is described by the nonlinear model with skew symmetric matrix Ω, which is widely used in the theory of machine vision. The reason is due to the fact that the UKF is able to deal with the nonlinear formulation, which will ensure better accurate parameter estimation. For the nonlinear estimation problem, alternatives for the classical model-based extended Kalman filter (EKF) can be employed. The UKF is a nonlinear distribution approximation method, which uses a finite number of sigma points to propagate the probability of state distribution through the nonlinear dynamics of system. The UKF exhibits superior performance when compared with conventional EKF since the series approximations in the EKF algorithm can lead to poor representations of the nonlinear functions and probability distributions of interest.

The analyses were confirmed by simulation studies. For both cases, b ∈ ImΩ and b ∉ ImΩ, motion and shape parameters were recovered successfully with remarkable accuracy improvement. The results obtained shows that the proposed UKF method have been compared to the EKF and have demonstrated substantial improvement in obtaining the motion and shape of moving objects.

Acknowledgments

This work has been supported in part by the National Science Council of China under grant NSC 97-2221-E-019-012 and NSC 98-2221-E-019-021-MY3. Valuable suggestions and detailed comments by the anonymous reviewers are gratefully acknowledged.

References

- Loucks, T; Ghosh, BK; Lund, J. An Optical Flow Based Approach for Motion and Shape Parameter EstimaTion in Computer Vision. Proceedings of the 31st IEEE Conference on Decision and Control, Tucson, AZ, USA, 16–18 December 1992; pp. 819–823.

- Ghosh, BK; Loucks, EP. A perspective theory for motion and shape estimation in machine vision. SIAM J. Control Optim 1995, 33, 1530–1559. [Google Scholar]

- Derpanis, KG. The Motion Field and its Affine Approximation, 2004. Available online: http://www.cse.yorku.ca/~kosta/CompVis_Notes/motion_field_review.pdf/ (accessed on 7 July 2011).

- Kano, H; Ghosh, BK. Identification of Relative Position and Orientation of Two Cameras from Motion and Shape Parameters of Moving Rigid Body. Proceedings of the 39th IEEE Conference on Decision and Control, Sydney, Australia, 12–15 December 2000; pp. 5169–5174.

- Takahashi, S; Ghosh, BK. Motion and Shape Parameters Identification with Vision and Range. Proceedings of the American Control Conference, Arlington, VA, USA, 25–27 June 2001; pp. 4626–4631.

- Dogan, E; Yilmaz, B; Unel, M; Sabanovic, A. A Sliding Mode Approach to Visual Motion Estimation. Proceedings of the 9th IEEE International Workshop on Advanced Motion Control, Istanbul, Turkey, 27–29 March 2006; pp. 733–738.

- Brown, RG; Hwang, PYC. Introduction to Random Signals and Applied Kalman Filtering; John Wiley & Sons: New York, NY, USA, 1997. [Google Scholar]

- Kim, ET; Han, J-K; Kim, H-M. A Kalman-Filtering Method for 3D Camera Motion Estimation from Imagine Sequences. Proceedings of the International Conference on Image Processing, ICIP'97, Washington, DC, USA, 26–29 October 1997; 3, pp. 630–633.

- Kano, H; Ghosh, BK; Kanai, H. Single camera based motion and shape estimation using extended kalman filtering. Math. Comput. Modell 2001, 34, 511–525. [Google Scholar]

- Wan, EA; van der Merwe, R. The Unscented Kalman Filter for Nonlinear Estimation. Proceedings of the Adaptive Systems for Signal Processing, Communication and Control (AS-SPCC) Symposium, Alberta, AB, Canada, 1–4 October 2000; pp. 153–156.

- Wan, EA; van der Merwe, R. The Unscented Kalman Filter. In Kalman Filtering and Neural Networks; Haykin, S, Ed.; Wiley: New York, NY, USA, 2001. [Google Scholar]

- Simon, D. Optimal State Estimation: Kalman, H Infinity, and Nonlinear Approaches; John Wiley & Sons: New York, NY, USA, 2006. [Google Scholar]

- Julier, SJ; Uhlmann, JK. Reduced Sigma Point Filters for the Propagation of Means and Covariances through Nonlinear Transformations. Proceedings of the American Control Conference, Anchorage, AK, USA, 8–10 May 2002; pp. 887–892.

- Li, Y; Wang, J; Rizos, C; Mumford, P; Ding, W. Low-Cost Tightly Coupled GPS/INS Integration Based on a Nonlinear Kalman Filter Design. Proceedings of the 2006 National Technical Meeting of The Institute of Navigation, Monterey, CA, USA, 18–20 January 2006; pp. 958–966.

- Julier, SJ. The Scaled Unscented Transformation. Proceedings of the American Control Conference, Anchorage, AK, USA, 8–10 May 2002; pp. 4555–4559.

- Van der Merve, R; Doucet, A; de Freitas, N; Wan, E. The Unscented Particle Filter; Technical Report CUED/F-INFENG/TR 380; Cambridge University Engineering Department: Cambridge, MA, USA; August; 2000. [Google Scholar]

- Pourtakdoust, SH; Ghanbarpour; Asl, H. An adaptive unscented Kalman filter for quaternion-based orientation estimation in low-cost AHRS. Aircr. Eng. Aerosp. Technol 2007, 79, 485–493. [Google Scholar]

| EKF | UKF | |||||

|---|---|---|---|---|---|---|

| x | y | x-y | x | y | x-y | |

| Feature point 1 (x1,y1) | 0.0051 | 0.0057 | 0.0077 | 0.0011 | 0.0011 | 0.0016 |

| Feature point 2 (x2,y2) | 0.0037 | 0.0037 | 0.0052 | 0.0009 | 0.0008 | 0.0012 |

| Feature point 3 (x3,y3) | 0.0058 | 0.0043 | 0.0072 | 0.0008 | 0.001 | 0.0013 |

| EKF | UKF | |||||||

|---|---|---|---|---|---|---|---|---|

| X | Y | Z | X-Y-Z | X | Y | Z | X-Y-Z | |

| Feature point 1 (x1,y1) | 5.35 | 5.6286 | 5.4815 | 9.5053 | 1.5783 | 1.7815 | 0.7082 | 2.4832 |

| Feature point 2 (x2,y2) | 2.4956 | 1.0329 | 1.7686 | 3.2284 | 0.621 | 0.2423 | 0.3968 | 0.7757 |

| Feature point 3 (x3,y3) | 1.6892 | 2.546 | 1.9205 | 3.6088 | 0.5264 | 0.8431 | 0.5515 | 1.1367 |

| EKF | UKF | |||||

|---|---|---|---|---|---|---|

| x | y | x-y | x | y | x-y | |

| Feature point 1(x1,y1) | 0.0056 | 0.0073 | 0.0092 | 0.001 | 0.0011 | 0.0015 |

| Feature point 2 (x2,y2) | 0.0033 | 0.0029 | 0.0044 | 0.0009 | 0.0008 | 0.0011 |

| Feature point 3 (x3,y3) | 0.0028 | 0.0025 | 0.0037 | 0.0008 | 0.001 | 0.0013 |

| EKF | UKF | |||||||

|---|---|---|---|---|---|---|---|---|

| X | Y | Z | X-Y-Z | X | Y | Z | X-Y-Z | |

| Feature point 1 (x1,y1) | 2.9116 | 2.557 | 5.1281 | 6.4276 | 0.476 | 1.4102 | 0.7018 | 1.6455 |

| Feature point 2 (x2,y2) | 0.8623 | 1.0275 | 1.6439 | 2.1217 | 0.0938 | 0.0894 | 0.1173 | 0.1748 |

| Feature point 3 (x3,y3) | 0.8424 | 1.1548 | 1.9177 | 2.3918 | 0.0562 | 0.087 | 0.1245 | 0.1619 |

© 2011 by the authors; licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution license (http://creativecommons.org/licenses/by/3.0/).

Share and Cite

Jwo, D.-J.; Tseng, C.-H.; Liu, J.-C.; Lee, H.-D. Unscented Kalman Filtering for Single Camera Based Motion and Shape Estimation. Sensors 2011, 11, 7437-7454. https://doi.org/10.3390/s110807437

Jwo D-J, Tseng C-H, Liu J-C, Lee H-D. Unscented Kalman Filtering for Single Camera Based Motion and Shape Estimation. Sensors. 2011; 11(8):7437-7454. https://doi.org/10.3390/s110807437

Chicago/Turabian StyleJwo, Dah-Jing, Chien-Hao Tseng, Jen-Chu Liu, and Hsien-Der Lee. 2011. "Unscented Kalman Filtering for Single Camera Based Motion and Shape Estimation" Sensors 11, no. 8: 7437-7454. https://doi.org/10.3390/s110807437