1. Introduction

Estimation of the entropies of molecules is an important problem in molecular sciences. The internal configurational entropy of a molecule is the entropy of the joint distribution of the internal molecular coordinates (bond lengths, bond angles, and dihedral angles), and as such it is a measure of random fluctuations in these coordinates. The most significant contribution to the internal configurational entropy of a molecule comes from the fluctuations in dihedral angles (also called internal-rotation angles). Many important properties of complex molecules, such as their stability and adopted conformation, depend on random fluctuations in their internal coordinates. Estimation of the internal configurational entropy of molecules is therefore important for understanding many chemical and biological processes, such as the spontaneity of a chemical reaction, protein folding, intermolecular protein-protein interactions, and protein-ligand interactions. It is also key to the design of drugs that can stabilize the normally folded molecular structure or correct a misfolded structure, since protein misfolding is a cause of several diseases, such as Alzheimer's disease, mad cow disease, cystic fibrosis, and some types of cancer.

Estimation of the internal entropy of macromolecules, such as proteins, is a challenging problem because of the large number of correlated internal molecular coordinates. A commonly used method of estimating the internal entropy of a molecule, known as the quasi-harmonic approach, is based on the assumption of a multivariate normal distribution for the internal molecular coordinates [1]. Misra et al. [2] discussed the decision theoretic estimation of the entropy of a multivariate normal distribution and obtained improvements over the best affine equivariant estimator under the squared error loss function. However, the assumption of a multivariate normal distribution for the internal coordinates of a molecule is appropriate only at low temperatures, when the fluctuations in its internal coordinates are small. At higher temperatures, the dihedral angles of a complex molecule exhibit multimodality and skewness in their distributions, and the multivariate normal distribution becomes inadequate.

Demchuk and Singh [3] discussed a circular probability approach for modeling the dihedral angles of a molecule in the estimation of internal rotational entropy. As an illustration, they modeled the torsional angle of the methanol molecule by a trimodal von Mises distribution and derived a bath-tub-shaped distribution for the torsional potential energy of the molecule. Singh et al. [4] introduced a torus version of a bivariate normal distribution for modeling two dihedral angles. The marginal distributions of the model are symmetric unimodal or symmetric bimodal depending on the configurations of the parameters. A multivariate generalization of this bivariate model has been proposed by Mardia et al. [5]. Hnizdo et al. [6] and Darian et al. [7] used a Fourier series expansion approach for modeling univariate and bivariate distributions of molecular dihedral angles. Complex molecules, however, have many significantly correlated dihedral angles, whose joint distribution can take an arbitrary form. For this reason, a non-parametric approach for estimating the entropy of a circular random vector of arbitrary dimensions is desirable.

Several non-parametric estimators of the entropy of an m-dimensional random variable X have been discussed in the literature. A common approach is to replace the probability density function (pdf) $f(\cdot)$ in the definition of the differential entropy,

$$H(f) = -\int_{\mathbb{R}^m} f(x)\,\ln f(x)\,dx, \tag{1}$$

by its non-parametric kernel or histogram density estimator [8,9]. However, in most practical situations, implementation of such estimates in higher dimensions becomes difficult. In one dimension ($m = 1$), several authors have proposed estimates of entropy in the context of testing goodness of fit [10,11]. Singh et al. [12] proposed the following asymptotically unbiased and consistent nearest-neighbor (NN) estimator of the entropy $H(f)$:

$$H_{k,n} = \frac{m}{n}\sum_{i=1}^{n}\ln\rho_{i,k,n} + \ln\frac{\pi^{m/2}}{\Gamma(m/2+1)} + \gamma - L_{k-1} + \ln n. \tag{2}$$

Here, $\rho_{i,k,n}$ is the Euclidean distance of a point $X_i$ to its $k$-th, $k \le n-1$, nearest (in the Euclidean-distance sense) neighbor in a random sample $X_1, \ldots, X_n$ from the distribution $f(\cdot)$; $\gamma = 0.5772\ldots$ is Euler’s constant, $L_0 = 0$, $L_j = \sum_{i=1}^{j} 1/i$, $j = 1, 2, \ldots$, and $\Gamma(\cdot)$ is the usual gamma function. For $k = 1$, the estimator $H_{1,n}$ reduces to the NN estimator proposed by Kozachenko and Leonenko [13]. Results similar to those of [12] have also been reported by Goria et al. [14]. For the purpose of the estimation of the information-theoretic quantity of mutual information, Kraskov et al. [15] generalized the first-nearest-neighbor estimator of [13] in terms of $k$-th nearest-neighbor distances in a general metric, giving, however, explicit expressions only for the maximum and Euclidean metrics and without providing formal proofs of asymptotic unbiasedness and consistency. For $m = 1$, Tsybakov and van der Meulen [16] established the mean-square-root-n consistency of a truncated version of $H_{1,n}$. Earlier, Loftsgaarden and Quesenberry [17] had used NN distances to construct non-parametric estimates of a multivariate pdf. Recently, Mnatsakanov et al. [18] studied k-NN estimators of entropy in which the parameter k is assumed to be a function of the sample size.
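The Euclidean-distance NN estimator of Singh et al. [12] can be illustrated with a minimal sketch. It assumes the estimator has the standard form $(m/n)\sum_i \ln\rho_{i,k,n} + \ln[\pi^{m/2}/\Gamma(m/2+1)] + \gamma - L_{k-1} + \ln n$. The function name `knn_entropy_euclidean` is hypothetical, and a brute-force neighbor search stands in for the tree-based search one would use on large samples.

```python
import math
import random

def knn_entropy_euclidean(sample, k=1):
    """Sketch of the Euclidean k-th NN entropy estimator (assumed form, see lead-in)."""
    n, m = len(sample), len(sample[0])
    euler_gamma = 0.5772156649015329
    l_km1 = sum(1.0 / j for j in range(1, k))  # L_{k-1}, with L_0 = 0
    # ln of the volume of the m-dimensional unit ball
    log_unit_ball = (m / 2) * math.log(math.pi) - math.lgamma(m / 2 + 1)
    total = 0.0
    for i, x in enumerate(sample):
        # Brute-force k-th nearest-neighbor distance (O(n^2) overall).
        dists = sorted(math.dist(x, y) for j, y in enumerate(sample) if j != i)
        total += math.log(dists[k - 1])
    return (m / n) * total + log_unit_ball + euler_gamma - l_km1 + math.log(n)

# Check against a case with known entropy: the standard normal in one dimension.
random.seed(0)
gauss_sample = [(random.gauss(0.0, 1.0),) for _ in range(500)]
h_est = knn_entropy_euclidean(gauss_sample, k=1)
h_exact = 0.5 * math.log(2 * math.pi * math.e)
```

With a few hundred points the estimate should land near the exact value, with a sampling error of roughly a few hundredths.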

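The NN entropy estimator (2) uses Euclidean distances between the sample points. However, when the random variable X is circular, it is natural to base an NN estimate of entropy on a circular distance rather than the Euclidean distance. A circular observation can be regarded as a point on a circle of unit radius. Once an initial direction and an orientation of the circle have been chosen, each circular observation can be specified by the angle from the initial direction to the point on the circle corresponding to the observation. In this paper, we construct estimates of the entropy of an m-dimensional circular random vector $\Theta$ based on two different definitions of circular distances. Let $\theta = (\theta_1, \ldots, \theta_m)$ and $\mu = (\mu_1, \ldots, \mu_m)$, with components in $[0, 2\pi)$, be two observations on an m-dimensional circular random vector Θ. We define two circular distance functions $d_1(\cdot,\cdot)$ and $d_2(\cdot,\cdot)$ as follows:

$$d_1(\theta, \mu) = \sqrt{\sum_{i=1}^{m}\bigl(\pi - \bigl|\pi - |\theta_i - \mu_i|\bigr|\bigr)^2} \tag{3}$$

and

$$d_2(\theta, \mu) = \sqrt{2\sum_{i=1}^{m}\bigl[1 - \cos(\theta_i - \mu_i)\bigr]}. \tag{4}$$

Note that $\pi - \bigl|\pi - |\theta_i - \mu_i|\bigr|$ is the arc length between the points $(\cos\theta_i, \sin\theta_i)$ and $(\cos\mu_i, \sin\mu_i)$ on the unit circle. On the other hand, $\sqrt{2[1 - \cos(\theta_i - \mu_i)]}$ is the Euclidean distance between the points $(\cos\theta_i, \sin\theta_i)$ and $(\cos\mu_i, \sin\mu_i)$ on the unit circle.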
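The two circular distances can be made concrete with a short sketch. It assumes that the first distance combines the per-coordinate arc lengths in the Euclidean fashion and that the second combines the per-coordinate chord lengths. The names `arc_length`, `d1` and `d2` are illustrative only.

```python
import math

TWO_PI = 2 * math.pi

def arc_length(a, b):
    """Shorter arc between the angles a and b (radians) on the unit circle."""
    diff = abs(a - b) % TWO_PI
    return math.pi - abs(math.pi - diff)

def d1(theta, mu):
    """Arc-length based circular distance (assumed form, see lead-in)."""
    return math.sqrt(sum(arc_length(a, b) ** 2 for a, b in zip(theta, mu)))

def d2(theta, mu):
    """Chord based circular distance: each term 2(1 - cos) is a squared chord length."""
    return math.sqrt(sum(2.0 * (1.0 - math.cos(a - b)) for a, b in zip(theta, mu)))

# Two angles on either side of the 0 / 2*pi cut are close on the circle,
# although their plain difference is large.
near_cut = d1((0.1,), (6.2,))
chord = d2((0.1,), (6.2,))
```

In one dimension the chord equals `2 * sin(arc / 2)`, so `d2` never exceeds `d1`, and the two nearly agree when the points are close.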

In Section 2 and Section 3, we propose explicit expressions for NN estimators of entropy based on the circular distance functions $d_1$ (built from arc lengths, (3)) and $d_2$ (built from chord lengths, (4)), respectively, and prove their asymptotic unbiasedness and consistency (with some mathematical details given in an Appendix). In Section 4, we compare the performance of the estimator based on the circular distance $d_1$ with that of the Euclidean-distance estimator (2) using Monte Carlo simulations from an analytic 6-dimensional circular distribution, where the exact joint entropy is known. There we also apply the $d_1$-distance estimator to the problem of estimating the entropy of a 7-dimensional joint distribution of the internal-rotation angles in the molecule of tartaric acid, using a large sample of these angles obtained by a realistic molecular-dynamics simulation.

2. Nearest Neighbor Estimates of Entropy Based on Circular Distance $d_1$

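For constructing nearest neighbor estimates of the entropy of a circular random vector based on the distance function $d_1$ of (3), we first derive the expression for the volume of a ball

$$N_r(\theta) = \bigl\{\mu \in [0, 2\pi)^m : d_1(\mu, \theta) \le r\bigr\} \tag{5}$$

centered at $\theta \in [0, 2\pi)^m$ and having a radius $r \in [0, \pi\sqrt{m}]$, $\pi\sqrt{m}$ being the maximum value of $d_1(\cdot,\cdot)$.

Lemma 2.1. Let $\theta \in [0, 2\pi)^m$, $r \in [0, \pi\sqrt{m}]$ and let $V_r$ be the volume of the ball $N_r(\theta)$, defined by (5). Then

$$V_r = (2\pi)^m F_m\!\left(\frac{r^2}{\pi^2}\right), \tag{6}$$

where $F_m(\cdot)$ denotes the cumulative distribution function of the sum of m independent and identically distributed random variables each having a beta distribution with parameters $\alpha = 1/2$, $\beta = 1$.

Proof. Without loss of generality, we may take $\theta = (0, \ldots, 0)$. Then

$$V_r = \int_{N_r(\theta)} d\mu = (2\pi)^m\, P\!\left(\sum_{i=1}^{m}\bigl(\pi - |\pi - U_i|\bigr)^2 \le r^2\right),$$

where $U = (U_1, \ldots, U_m)$ and $U_1, \ldots, U_m$ are independent and identically distributed uniform random variables over the interval $[0, 2\pi)$. Define $Y_i = \bigl(1 - |1 - U_i/\pi|\bigr)^2$, $i = 1, \ldots, m$. Then $Y_1, \ldots, Y_m$ are independent and identically distributed beta random variables, having parameters $\alpha = 1/2$, $\beta = 1$. Hence the result follows.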
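The volume formula of Lemma 2.1 can be checked numerically. The sketch below assumes the distance (3) is the root sum of squared per-coordinate arc lengths, and estimates the ball volume by drawing uniform points on the torus, which mirrors the argument of the proof. The function name `ball_volume_mc` and the chosen radii are illustrative.

```python
import math
import random

def ball_volume_mc(m, r, draws=200_000, seed=1):
    """Monte Carlo volume of {mu in [0, 2*pi)^m : d1(mu, 0) <= r}."""
    rng = random.Random(seed)
    hits = 0
    for _ in range(draws):
        s = 0.0
        for _ in range(m):
            u = rng.uniform(0.0, 2 * math.pi)
            s += (math.pi - abs(math.pi - u)) ** 2  # squared arc length to 0
        hits += s <= r * r
    return (2 * math.pi) ** m * hits / draws

# m = 1: a squared uniform variable is Beta(1/2, 1), with cdf sqrt(x),
# so the lemma gives 2*pi*(r/pi) = 2r.
v1 = ball_volume_mc(1, 1.5)
# m = 2, r <= pi: the ball does not reach the cut, so it is an ordinary disc.
v2 = ball_volume_mc(2, 2.0)
# m = 2, r > pi: the ball is clipped and is smaller than the disc of radius r.
v2_clipped = ball_volume_mc(2, 4.0)
```

The third case shows why the general formula is needed only for radii above π. Below that value the circular ball and the Euclidean ball have the same volume.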

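Remark 2.1. (i) For $m = 1$ and $r \in [0, \pi]$, we have $V_r = 2r$. For $m = 2$ and $r \in [0, \pi]$, it can be verified that

$$V_r = \pi r^2.$$

(ii) For $m = 2$ and $r \in (\pi, \pi\sqrt{2}]$, it can be verified that

$$V_r = 4\pi\sqrt{r^2 - \pi^2} + r^2\left[4\arcsin\!\left(\frac{\pi}{r}\right) - \pi\right].$$

(iii) For a general m ($m \ge 2$) and $r \in [0, \pi]$, it can be verified that

$$V_r = \frac{\pi^{m/2}\, r^m}{\Gamma(m/2 + 1)}.$$

(iv) For any $m \ge 2$ and for $x \in [0, m]$, $F_m(\cdot)$ satisfies the following recursion relation

$$F_m(x) = \int_0^{\min(x,1)} F_{m-1}(x - y)\, b(y)\, dy,$$

where $b(y) = 1/(2\sqrt{y})$, $0 < y < 1$, is the pdf of a beta random variable with parameters $1/2$ and $1$.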

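The circular distance (3) becomes the Euclidean distance $\sqrt{\sum_{i=1}^{m}(\theta_i - \mu_i)^2}$ when $|\theta_i - \mu_i| \le \pi$, $i = 1, \ldots, m$. Circular-distance balls $N_r(\theta)$, where $\theta \in (0, 2\pi)^m$ and $r > 0$, thus tend to the corresponding Euclidean-distance balls as $r \to 0$. We can therefore apply the Lebesgue differentiation theorem [19] to the probability density function $f(\cdot)$ of a circular random variable $\Theta$ in the form

$$f(\theta) = \lim_{r \to 0^{+}} \frac{1}{V_r}\int_{N_r(\theta)} f(\mu)\, d\mu \quad \text{for almost all } \theta, \tag{7}$$

where $V_r$ is given by (6). Equation (7) suggests that, given a sufficiently large random sample $\Theta_1, \ldots, \Theta_n$ from the distribution of Θ, the probability density function $f(\theta)$ can be approximated at almost all $\theta$ by

$$\hat{f}(\theta) = \frac{\#\{i : d_1(\Theta_i, \theta) \le r\}}{n V_r}, \tag{8}$$

where $\#A$ denotes the cardinality of the set $A$ and r is sufficiently small.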

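Guided by this insight, we will now construct nearest neighbor estimates of entropy for an m-variate circular random vector Θ, having a probability density function $f(\cdot)$. Let $\Theta_1, \ldots, \Theta_n$ be a random sample from the distribution of Θ and let $k \le n - 1$ be a given positive integer. For $i = 1, \ldots, n$, let $R_{i,k,n}$ denote the circular distance of $\Theta_i$ from its $k$-th closest neighbor, with respect to the circular distance $d_1$, i.e.,

$$R_{i,k,n} = \text{the } k\text{-th smallest of } \bigl\{d_1(\Theta_i, \Theta_j) : j \ne i\bigr\}.$$

Assume that the sample size n is sufficiently large, so that the distances $R_{i,k,n}$ are small, on the average. Then, based on approximation (8), a reasonable estimator of $f(\Theta_i)$ is

$$\hat{f}(\Theta_i) = \frac{k}{n V_{R_{i,k,n}}},$$

and thus a reasonable estimator of the entropy $H(f)$ is

$$\hat{H}_{k,n} = -\frac{1}{n}\sum_{i=1}^{n}\ln\hat{f}(\Theta_i) = \frac{1}{n}\sum_{i=1}^{n}\ln\!\left[\frac{n V_{R_{i,k,n}}}{k}\right]. \tag{9}$$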

In the following theorem, we derive the expression for the asymptotic mean of the estimator (9). Apart from the arguments for the interchange of the limit and the integral signs, the proof is similar to that of Theorem 8 of Singh et al. [12], who in their proof interchange the limit and the integral signs without mentioning the conditions under which it is allowed.

Theorem 2.1. Suppose that there exists an

, such that

and

Then, for a fixed

(not depending on

n)

where

is defined by (9),

,

and

is Euler’s constant.

Proof. Let

Then

are identically distributed random variables. Therefore,

where, for a given

,

is a random variable having the same distribution as that of the conditional distribution of

given

.

where

denotes the inverse function of

. Using standard arguments, we get

where

is defined by (5). For a fixed

,

and for almost all values of

, using Lemma 2.1, we have

Therefore, using the Poisson approximation to the binomial distribution, we get

for almost all values of

. For a fixed

, let

be a random variable having the pdf

Then, in view of (13),

where

stands for the convergence in distribution. For each fixed

, it can be verified that

Under the condition (10), it can be shown (for details, see the

Appendix) that, for almost all values of

, there exists a constant

C (not depending on

n) such that for all sufficiently large values of

n

Then, in view of (14) and the moment convergence theorem, it follows that

for almost all values of

Using Fatou’s lemma, we get

by (10). Therefore,

Now the result follows from (12) and (16).

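Since the estimator (9) is not asymptotically unbiased, we propose the following (asymptotic) bias corrected estimator for estimating the entropy $H(f)$:

$$\hat{H}^{*}_{k,n} = \frac{1}{n}\sum_{i=1}^{n}\ln\bigl[n V_{R_{i,k,n}}\bigr] + \gamma - L_{k-1}, \tag{17}$$

where $R_{i,k,n}$ is the circular distance of the $i$-th sample point from its $k$-th closest neighbor and $V_r$ is the ball volume given by (6).

Thus, we have the following corollary to Theorem 2.1.

Corollary 2.1. Under the assumptions of Theorem 2.1, the estimator (17) is asymptotically unbiased for estimating the entropy $H(f)$.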
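A small numerical sketch of a bias-corrected circular-distance estimator follows. It rests on two assumptions: that the estimator has the form $\frac{1}{n}\sum_i \ln[n V_{R_i}] + \gamma - L_{k-1}$, and that every NN distance is at most π, so that the ball volume reduces to the Euclidean one (Remark 2.1 (iii)). The function names are hypothetical, and the brute-force search is for illustration only.

```python
import math
import random

def d1(theta, mu):
    """Arc-length based circular distance for angles in [0, 2*pi)."""
    return math.sqrt(sum((math.pi - abs(math.pi - abs(a - b))) ** 2
                         for a, b in zip(theta, mu)))

def circular_knn_entropy(sample, k=1):
    """Sketch of a bias-corrected circular NN entropy estimate (assumed form)."""
    n, m = len(sample), len(sample[0])
    euler_gamma = 0.5772156649015329
    l_km1 = sum(1.0 / j for j in range(1, k))  # L_{k-1}, with L_0 = 0
    log_unit_ball = (m / 2) * math.log(math.pi) - math.lgamma(m / 2 + 1)
    total = 0.0
    for i, x in enumerate(sample):
        r = sorted(d1(x, y) for j, y in enumerate(sample) if j != i)[k - 1]
        if r > math.pi:
            # Beyond pi the ball wraps around the torus; not handled in this sketch.
            raise ValueError("NN distance exceeds pi; general volume formula needed")
        total += math.log(n) + log_unit_ball + m * math.log(r)  # ln(n * V_r)
    return total / n + euler_gamma - l_km1

# Uniform angles on the 2-torus have entropy 2 * ln(2 * pi).
rng = random.Random(2)
uniform_sample = [(rng.uniform(0.0, 2 * math.pi), rng.uniform(0.0, 2 * math.pi))
                  for _ in range(400)]
h_circ = circular_knn_entropy(uniform_sample, k=1)
h_uniform = 2 * math.log(2 * math.pi)
```

The uniform distribution is a convenient check because the circular distance has no boundary on the torus, whereas a Euclidean distance would treat points near 0 and 2π as far apart.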

The following theorem provides conditions under which the estimator (17) is consistent for estimating the entropy $H(f)$.

Theorem 2.2. Suppose that there exists an

, such that

and

Then, for a fixed

(not depending on

n),

and thus the estimator (17) is a consistent estimator of the entropy $H(f)$.

Under conditions (18) and (19), the steps involved in the proof of Theorem 2.2 in justifying the interchange of the limit and the integral sign are tedious but virtually identical to the arguments used in the proof of Theorem 2.1. The remaining part of the proof is identical to the proof of Theorem 11 of Singh et al. [12]. We therefore omit the proof of Theorem 2.2.

Remark 2.2. For small values of $m$, the function $F_m(\cdot)$ of Lemma 2.1 (the cumulative distribution function of a sum of m independent beta random variables) involved in the evaluation of the estimate (17) can be computed using numerical integration. For moderate and large values of m, which is the case with many molecules encountered in molecular sciences, using the central limit theorem one can get a reasonable approximation of $F_m(\cdot)$ by the cumulative distribution function of a normal distribution having mean $m/3$ and variance $4m/45$.
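The normal approximation mentioned in the remark can be sketched as follows, assuming the summands are Beta(1/2, 1) variables, each with mean 1/3 and variance 4/45. The Monte Carlo routine is only a cross-check, and both function names are illustrative.

```python
import math
import random

def f_m_normal(x, m):
    """CLT approximation to the cdf of a sum of m iid Beta(1/2, 1) variables."""
    mean, var = m / 3.0, 4.0 * m / 45.0
    return 0.5 * (1.0 + math.erf((x - mean) / math.sqrt(2.0 * var)))

def f_m_monte_carlo(x, m, draws=50_000, seed=3):
    """Empirical cdf of the same sum, for comparison."""
    rng = random.Random(seed)
    # If W is uniform on (0, 1), then W**2 has a Beta(1/2, 1) distribution.
    hits = sum(sum(rng.random() ** 2 for _ in range(m)) <= x
               for _ in range(draws))
    return hits / draws

m = 20
x = m / 3.0 + 1.0
approx = f_m_normal(x, m)
check = f_m_monte_carlo(x, m)
```

The approximation is most useful near the center of the distribution. In the lower tail, which corresponds to radii of at most π, the closed form of Remark 2.1 (iii) is available and no approximation is needed.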

3. Nearest Neighbor Estimates of Entropy Based on Circular Distance $d_2$

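Let $\theta \in [0, 2\pi)^m$. In order to construct nearest neighbor estimates of entropy based on the distance function $d_2$, defined by (4), we require the volume of the ball

$$M_r(\theta) = \bigl\{\mu \in [0, 2\pi)^m : d_2(\mu, \theta) \le r\bigr\} \tag{20}$$

centered at $\theta$ and having radius $r \in [0, 2\sqrt{m}]$.

Lemma 3.1. Let $\theta \in [0, 2\pi)^m$, $r \in [0, 2\sqrt{m}]$ and let $W_r$ be the volume of the ball $M_r(\theta)$, defined by (20). Then

$$W_r = (2\pi)^m\, G_m\!\left(\frac{r^2}{4}\right),$$

where $G_m(\cdot)$ denotes the cumulative distribution function of the sum of m independent and identically distributed random variables each having a beta distribution with parameters $1/2$ and $1/2$.

Proof. We have

$$W_r = (2\pi)^m\, P\!\left(2\sum_{i=1}^{m}\bigl[1 - \cos(U_i - \theta_i)\bigr] \le r^2\right) = (2\pi)^m\, P\!\left(\sum_{i=1}^{m}\sin^2\!\left(\frac{U_i - \theta_i}{2}\right) \le \frac{r^2}{4}\right),$$

where $U_1, \ldots, U_m$ are independent and identically distributed uniform random variables over the interval $[0, 2\pi)$.

Define $Z_i = \sin^2\bigl((U_i - \theta_i)/2\bigr)$, $i = 1, \ldots, m$. Then, $Z_1, \ldots, Z_m$ are independent and identically distributed beta random variables, having parameters $(1/2, 1/2)$. Hence the result follows.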
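The volume of a chord-distance ball can be checked in the same Monte Carlo fashion. The sketch assumes the squared distance is $2\sum_i[1 - \cos(\theta_i - \mu_i)]$ and, for one dimension, compares the estimate with the value implied by the arcsine (Beta(1/2, 1/2)) distribution. The function name is illustrative.

```python
import math
import random

def chord_ball_volume_mc(m, r, draws=200_000, seed=4):
    """Monte Carlo volume of {mu in [0, 2*pi)^m : d2(mu, 0) <= r}."""
    rng = random.Random(seed)
    hits = 0
    for _ in range(draws):
        s = sum(2.0 * (1.0 - math.cos(rng.uniform(0.0, 2 * math.pi)))
                for _ in range(m))
        hits += s <= r * r
    return (2 * math.pi) ** m * hits / draws

r = 1.0
w1 = chord_ball_volume_mc(1, r)
# For m = 1 a chord of length r spans an arc of arccos(1 - r^2/2) on each side.
w1_exact = 2 * math.acos(1.0 - r * r / 2.0)
```

For m = 1 the arc covered is exactly the arc-length ball whose chord is r, which is the reason the two circular distances lead to the same estimator in one dimension.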

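Remark 3.1. (i) For $m = 1$ and $r \in [0, 2]$,

$$W_r = 2\arccos\!\left(1 - \frac{r^2}{2}\right),$$

where $W_r$ is the volume of the $d_2$-ball of radius $r$ from Lemma 3.1.

(ii) The function $G_m(\cdot)$ of Lemma 3.1 satisfies a similar recursion relation as that satisfied by $F_m(\cdot)$ and given in Remark 2.1 (iv).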

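For $i = 1, \ldots, n$, let $S_{i,k,n}$ denote the circular distance of $\Theta_i$ from its $k$-th closest neighbor with respect to the circular distance $d_2$, defined by (4). Assume that the sample size n is sufficiently large, so that on average the distances $S_{i,k,n}$ are small. Then, based on approximation (7), a reasonable estimator of $f(\Theta_i)$ is

$$\hat{f}(\Theta_i) = \frac{k}{n W_{S_{i,k,n}}},$$

where $W_r$ denotes the volume of the $d_2$-ball of radius $r$ given in Lemma 3.1, and thus a reasonable estimator of the entropy $H(f)$ is

$$\frac{1}{n}\sum_{i=1}^{n}\ln\!\left[\frac{n W_{S_{i,k,n}}}{k}\right]. \tag{21}$$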

The proof of the following theorem is identical to the proof of Theorem 2.1 and therefore it is omitted.

Theorem 3.1. Suppose that there exists an

such that (10) holds and

Then, for a fixed

(not depending on

n),

where

is defined by (21).

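Since the estimator (21) is not asymptotically unbiased, we propose the following (asymptotic) bias corrected estimator for estimating the entropy $H(f)$:

$$\frac{1}{n}\sum_{i=1}^{n}\ln\bigl[n W_{S_{i,k,n}}\bigr] + \gamma - L_{k-1}, \tag{23}$$

where $S_{i,k,n}$ is the $d_2$-distance of the $i$-th sample point from its $k$-th closest neighbor and $W_r$ is the volume of the $d_2$-ball of radius $r$ given in Lemma 3.1.

Thus, we have the following corollary to Theorem 3.1.

Corollary 3.1. Under the assumptions of Theorem 3.1, the estimator (23) is asymptotically unbiased for estimating the entropy $H(f)$.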

The following theorem provides conditions under which the estimator (23) is consistent for estimating the entropy $H(f)$. The proof of the theorem follows using arguments similar to those given for the proof of Theorem 2.2.

Theorem 3.2. Suppose that there exists an

such that (18) holds and

Then, for a fixed

(not depending on

n),

and therefore, under conditions (18) and (24), the estimator (23) is a consistent estimator of the entropy $H(f)$.

Remark 3.2. (i) Using Remark 3.1 (i) and the fact that

is a decreasing function of

, for

and

, we have

since

Therefore, for

and

, we have

Thus for

, estimators

and

, based on circular distance functions

and

respectively, are identical.

(ii) For small values of $m$, the function $G_m(\cdot)$ of Lemma 3.1 involved in the evaluation of the estimate (23) can be computed using numerical integration. For moderate and large values of m, which is the case with many molecules encountered in molecular sciences, using the central limit theorem one can get a reasonable approximation of $G_m(\cdot)$ by the cumulative distribution function of a normal distribution having mean $m/2$ and variance $m/8$.

With $k = 1, 2, \ldots$, (17) and (23) define two classes of estimators for the entropy $H(f)$. The biases and variances of these estimators depend on k, the sample size n, and the pdf $f$ and its dimension m. It would be useful to know the biases and variances as functions of k, n, m, and some characteristic of $f$, such as $\mu = E[g(\Theta)]$, where $g$ is a function such that a reliable estimate of μ can be obtained using the available data on Θ. We have not been able to derive any meaningful expressions for the biases and variances of the proposed estimators, and this problem is under further investigation.

4. Monte Carlo Results and a Molecular Entropy Example

The performance of an entropy estimator can be investigated rigorously by using Monte Carlo samples from a distribution for which the entropy is known exactly. While analytic distributions of more than two correlated circular variables with exactly calculable entropic attributes do not seem to be available, one may construct a distribution of higher dimensionality as a product of a suitable number of bivariate distributions. To test the performance of the circular-distance estimator (17), we used an analytic 6-dimensional circular distribution given as the product of three bivariate circular distributions, each of the form [4]

$$f(\theta_1, \theta_2) = C\exp\bigl[\kappa_1\cos(\theta_1 - \mu_1) + \kappa_2\cos(\theta_2 - \mu_2) + \lambda\sin(\theta_1 - \mu_1)\sin(\theta_2 - \mu_2)\bigr],$$

which, as a circular analogue of the bivariate normal distribution, can be called the bivariate von Mises distribution. Details pertaining to the 6-dimensional distribution used and the Monte Carlo sampling are given in [20], where the same circular distribution was used in an investigation of the combined mutual-information-expansion and Euclidean-distance-NN method of entropy estimation.

Table 1.

Circular- and Euclidean-distance estimates and , respectively, from samples of size n of the analytic distribution of 6 circular variables; the exact entropy value is (to 4 decimals) .

| n (millions) | Estimate | k = 1 | k = 2 | k = 3 | k = 4 | k = 5 |
|---|---|---|---|---|---|---|
| 0.05 | circular | 1.86851 | 1.92019 | 1.96379 | 2.00395 | 2.03653 |
| | Euclidean | 2.01225 | 2.09929 | 2.16540 | 2.22380 | 2.27390 |
| 0.10 | circular | 1.81503 | 1.86968 | 1.90481 | 1.92899 | 1.95164 |
| | Euclidean | 1.95640 | 2.01952 | 2.07339 | 2.11255 | 2.14865 |
| 0.20 | circular | 1.80070 | 1.83713 | 1.85672 | 1.87498 | 1.89122 |
| | Euclidean | 1.92305 | 1.96691 | 2.00110 | 2.03086 | 2.05650 |
| 0.40 | circular | 1.8007 | 1.81582 | 1.82840 | 1.83904 | 1.84862 |
| | Euclidean | 1.89363 | 1.92652 | 1.95001 | 1.96939 | 1.98668 |
| 0.60 | circular | 1.80066 | 1.80693 | 1.81496 | 1.82296 | 1.83097 |
| | Euclidean | 1.88696 | 1.90952 | 1.92764 | 1.94358 | 1.95783 |
| 0.80 | circular | 1.79837 | 1.80297 | 1.81063 | 1.81660 | 1.82222 |
| | Euclidean | 1.87936 | 1.89971 | 1.91649 | 1.92946 | 1.94125 |
| 1.00 | circular | 1.79539 | 1.80017 | 1.80566 | 1.81030 | 1.81566 |
| | Euclidean | 1.87317 | 1.89238 | 1.90694 | 1.91850 | 1.92915 |
| 2.00 | circular | 1.79660 | 1.79533 | 1.79736 | 1.79970 | 1.80266 |
| | Euclidean | 1.86471 | 1.87555 | 1.88493 | 1.89298 | 1.90073 |
| 4.00 | circular | 1.79673 | 1.79383 | 1.79404 | 1.79480 | 1.79613 |
| | Euclidean | 1.85696 | 1.86477 | 1.87136 | 1.87702 | 1.88211 |
| 6.00 | circular | 1.79795 | 1.79491 | 1.79385 | 1.79419 | 1.79458 |
| | Euclidean | 1.85403 | 1.86071 | 1.86552 | 1.87029 | 1.87414 |
| 8.00 | circular | 1.79893 | 1.79484 | 1.79373 | 1.79322 | 1.79337 |
| | Euclidean | 1.85240 | 1.85745 | 1.86197 | 1.86545 | 1.86891 |
| 10.00 | circular | 1.80036 | 1.79562 | 1.79426 | 1.79350 | 1.79329 |
| | Euclidean | 1.85170 | 1.85578 | 1.85969 | 1.86287 | 1.86583 |

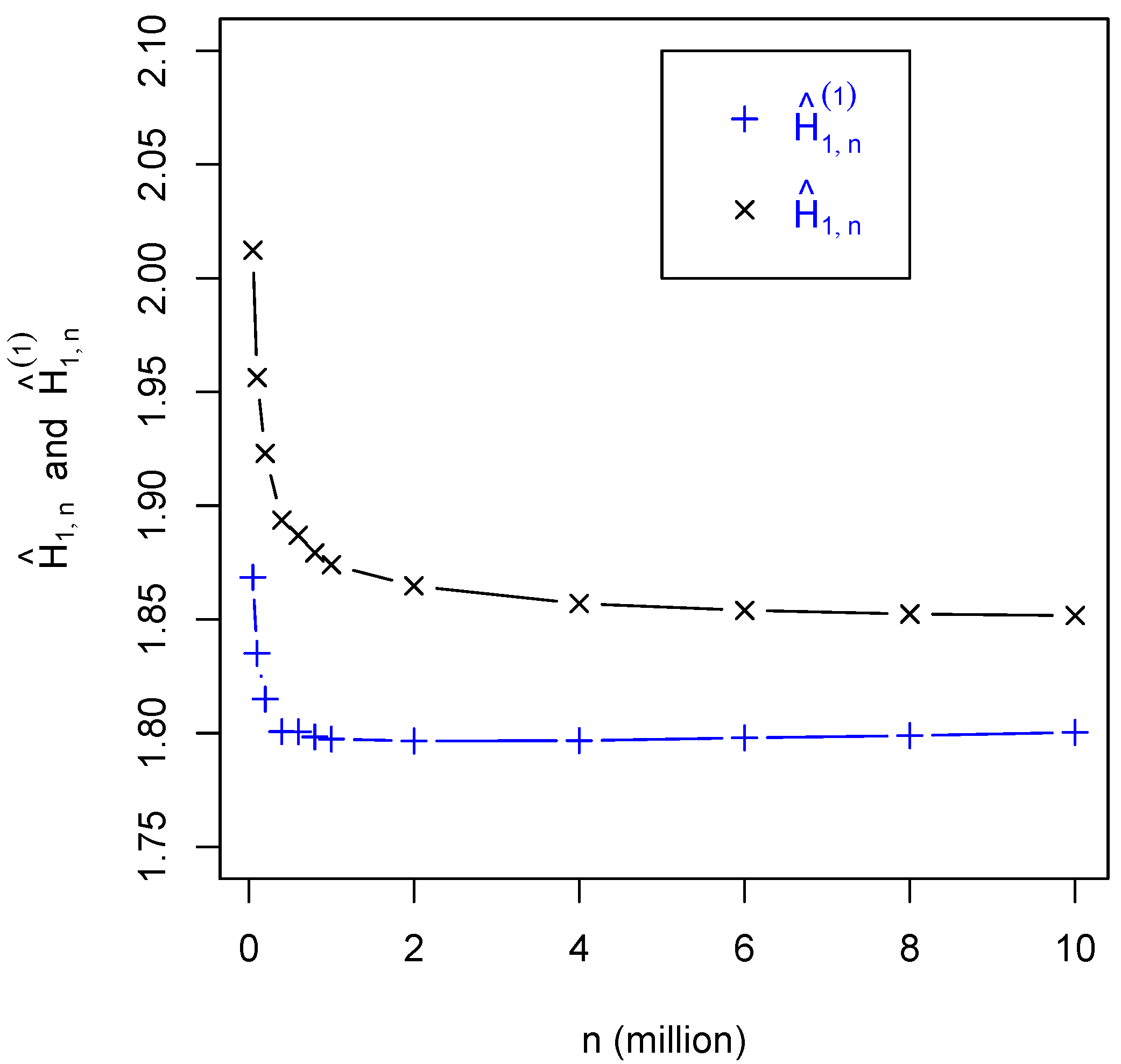

Table 1 presents the circular-distance estimates (17) for $k = 1, \ldots, 5$, obtained from samples of sizes ranging from $n = 5 \times 10^{4}$ to $n = 10^{7}$, together with the corresponding Euclidean-distance estimates (2).

Figure 1 displays the estimates

and

as functions of the sample size

n. Noting that the exact entropy value here is, to 4 decimal places,

, we observe that as

n increases the circular-distance estimates initially “undershoot” the exact value and then start to approach it slowly from below. In contrast, the Euclidean-distance estimates approach the exact value monotonically from above. Interestingly, the biases of the two kinds of estimates at sample sizes

million are approximately equal in absolute value. The behavior of the circular-distance estimates at

is similar to that at

, and the estimate values at different

k’s become very close at

million.

Figure 1.

Plots of the circular- and Euclidean-distance estimates and , respectively, as functions of the sample size n, for the analytic distribution of 6 circular variables; the exact entropy value is (to 4 decimals) .

To investigate the usefulness of circular-distance NN estimators in the problem of evaluating the configurational entropy of internal rotations in molecules, we used the circular-distance estimator (17) to estimate the entropy of the joint distribution of internal-rotation angles in the molecule of tartaric acid, where the number of variables is $m = 7$. Samples of size n up to 14.4 million of the internal-rotation angles were obtained from a molecular dynamics simulation of the (R,S) stereoisomer of this molecule [21]. Figure 2 shows marginal histograms, smoothed using a Gaussian kernel, of the seven internal-rotation angles of tartaric acid; note that these marginals display markedly non-Gaussian features. The code ANN [22] (with our modification for the circular distance $d_1$), which utilizes a k-d tree algorithm [23], was used for finding the k-th NN distances between sample points.

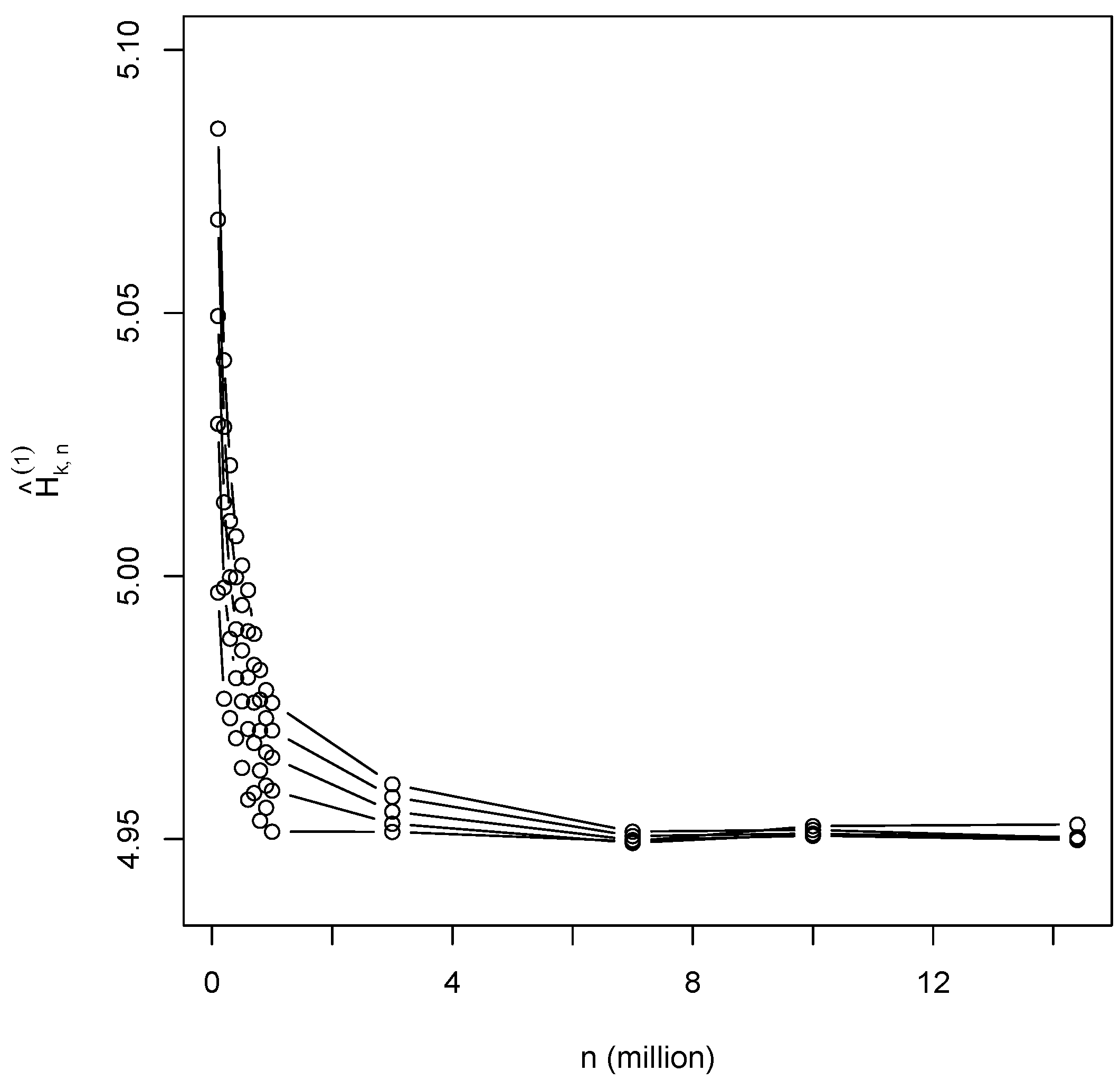

Figure 3 presents the estimates

,

as functions of the sample size

n. The values of

decrease as

n increases, while, at a fixed value of

million, they increase as

k increases; at greater values of

n, the estimates at different

k’s become quite close in value.

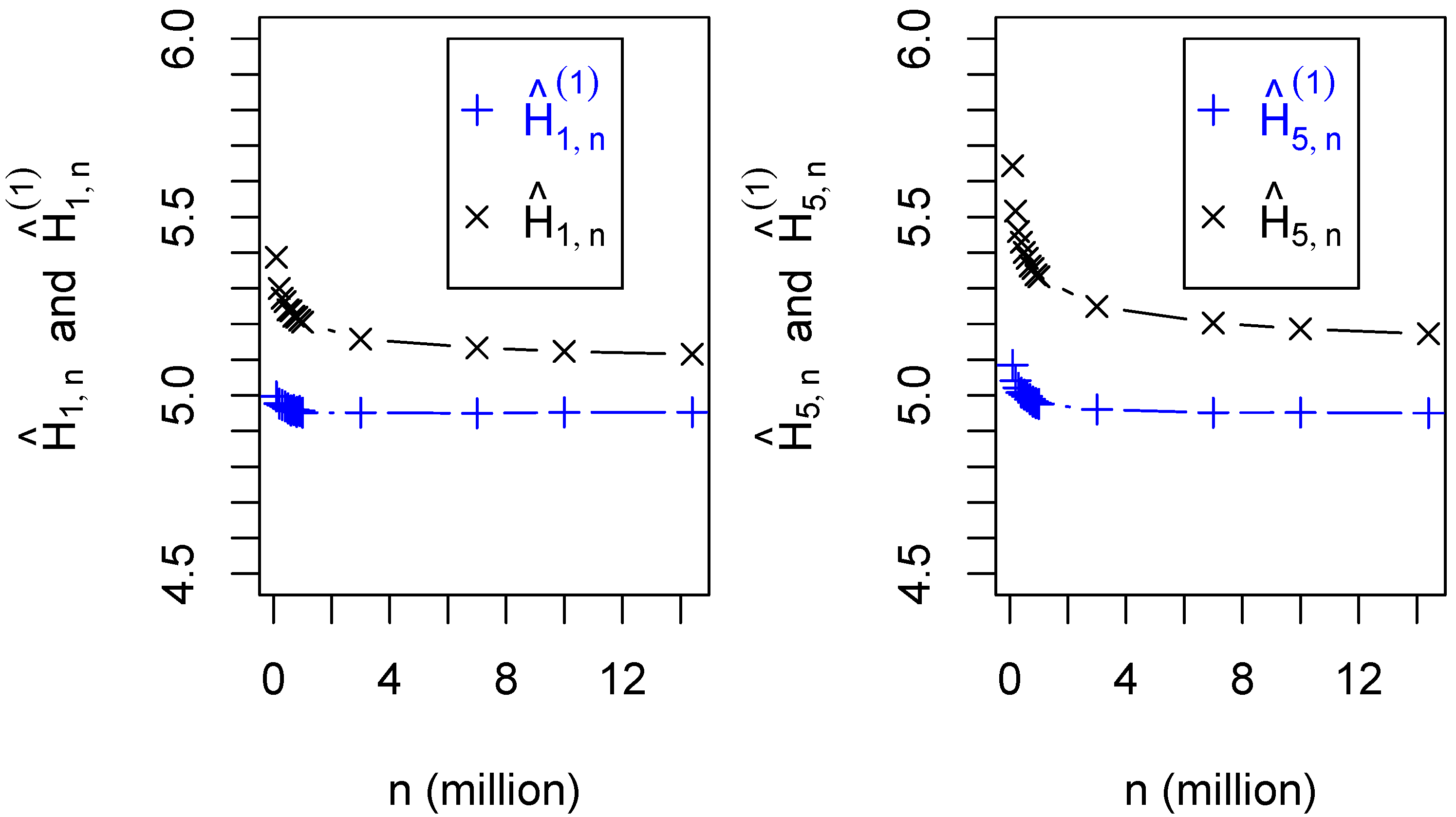

Figure 4 compares the circular-distance estimates

and

with the corresponding Euclidean-distance estimates

. We note that an

extrapolated Euclidean-distance estimate

was obtained for this entropy in [

21]. Again, as in the case of the analytic circular distribution, this value approximately equals the arithmetic mean of the circular and Euclidean distance estimates at sample sizes

million.

Figure 2.

Smoothed marginal histograms of the internal-rotation angles , of the (R,S) isomer of tartaric acid obtained by molecular dynamics simulations.

Figure 3.

Circular-distance nearest-neighbor estimates , of the entropy of the 7-dimensional joint distribution of internal-rotation angles in the (R,S) isomer of tartaric acid as functions of the sample size n. The estimates at a fixed million increase in value as k increases.

Figure 4.

Circular-distance nearest-neighbor estimates

and

of the internal-rotation entropy of tartaric acid as functions of the sample size

n compared with the Euclidean-distance nearest-neighbor estimates

and

. An

extrapolated estimate is

[

21].

Perhaps surprisingly, the results of both the analytic-distribution and molecular-simulation studies undertaken here indicate that the use of a circular-distance estimator has some advantage over the Euclidean-distance estimator only when relatively small data samples are available. On samples of large size, needed for sufficient convergence of an NN estimate of the entropy of a multivariate distribution, the circular-distance estimates obtained did not have a significantly smaller bias than the Euclidean-distance estimates. In view of such findings, one may question whether the additional computational complexity of a circular-distance estimate is worth the effort. However, we observed that as the sample size increased, the circular NN distances in the sample quickly became so small that the circular-distance estimator coincided in value with the simpler Euclidean-distance estimator in which the same NN-distance values were used. This is explained by the fact that when the circular NN distances do not exceed $\pi$, the estimator (17) can be replaced with the estimator (2) in which the circular NN distances are substituted for the Euclidean NN distances; this fact follows directly from Remark 2.1 (iii). The only extra computational effort is then expended in finding the circular, instead of Euclidean, NN distances in a given sample.