1. Introduction

One of the important application scenarios of the fifth-generation (5G) mobile communication network is massive machine-type communication [1]. Worldwide, massive numbers of sensor devices are deployed to perform environment monitoring, surveillance, and data collection, helping to build intelligent homes and intelligent cities, which greatly facilitates daily life and improves manufacturing efficiency [2,3]. The Internet of Things (IoT) and traditional cellular networks together form a terrestrial heterogeneous network that can provide users with stable communication services.

Unmanned aerial vehicle (UAV)-assisted air–ground heterogeneous networks are expected to be an important network architecture and deployment scenario in 5G and beyond [4,5]. When natural disasters damage the communication infrastructure and ground networks are destroyed, UAV communication provides a promising way to restore the communication of ground users by quickly setting up an aerial base station (BS) and establishing a UAV-assisted air–ground heterogeneous network [6]. Compared with ground BSs, UAV communication has the advantages of flexible deployment, high mobility, and strong line-of-sight (LoS) paths, and it has been widely used in military, public, and civilian fields [7].

However, many challenges remain in the air–ground heterogeneous network. On the one hand, although deploying a UAV can restore communication in the target area, some areas are still not covered. Ground cellular users (CUs) and IoT users (or sensor users (SUs)) located in such coverage holes cannot communicate directly with the UAV due to poor channel conditions. In this case, device-to-device (D2D) technology [8] can be employed to establish multi-hop communication for users outside the coverage area, and the data are transmitted through relays in a decode-and-forward (DF) manner. To save energy, relay selection should be performed in an energy-effective way. In other words, each user outside the coverage area should jointly consider its own energy cost and rate requirement, and select the relay that can provide the required rate while consuming as little energy as possible.

On the other hand, non-orthogonal multiple access (NOMA) provides an effective way to satisfy the transmission requirements of different users with limited system bandwidth. Compared with orthogonal multiple access (OMA), NOMA increases spectrum efficiency by allowing multiple users to share the same channel resource. Reducing energy consumption and guaranteeing the QoS of users are the key problems in NOMA-enabled networks. Since ground CUs and IoT devices are energy-constrained, power control is a crucial issue. In addition, to facilitate rescue, the rate requirements of users should be guaranteed. Therefore, a trade-off between reducing energy consumption and ensuring the QoS of users in the air–ground heterogeneous network must be achieved, which poses a major challenge to traditional orthogonal resource allocation schemes. However, little research in the literature focuses on reducing energy consumption while guaranteeing the diverse QoS requirements of users in NOMA-enabled heterogeneous networks. Hence, relay selection and resource allocation for NOMA transmission need to be optimized.

Motivated by the aforementioned analysis, we propose an energy-effective relay selection and NOMA-based resource allocation scheme for a UAV-assisted heterogeneous network. First, to tackle the limited coverage of a single-UAV network, we associate inside users and outside users by formulating a many-to-one matching game [9,10], which considers the power consumption and the QoS requirements of outside users. Hence, the serving area of the UAV can be effectively extended, and relay selection is performed at minimum energy cost. Because the relays must also forward the data of outside users, their rate requirements differ from those of other inside users, so the QoS requirements of users become diversified.

Second, to serve multiple users with limited resources, we adopt the NOMA transmission scheme to increase spectrum efficiency. Because NOMA allocates resources non-orthogonally, it introduces interference among the users sharing a sub-band. Thus, the sub-band allocation should be carefully designed and the uplink transmit power should be fine-tuned to alleviate interference and guarantee the QoS of users. To this end, we propose a deep reinforcement learning (DRL)-based resource allocation algorithm that selects an appropriate power level and sub-band for each ground user, achieving a trade-off between reducing energy consumption and guaranteeing users' QoS.

The main contributions of this paper can be summarized as follows: (1) We model the problem of relay selection and resource allocation for NOMA transmission in UAV-assisted heterogeneous networks and propose an energy-effective relay selection and NOMA-based resource allocation algorithm. (2) We perform relay selection between outside users and inside users by designing a low-complexity many-to-one matching algorithm, which saves energy and guarantees the QoS of the users. (3) To satisfy the diverse QoS requirements of inside users, we propose a DRL-based power and sub-band allocation algorithm, which balances energy saving and QoS guarantee. (4) The performance of the proposed algorithm is validated under different network parameters. Simulation results demonstrate that the proposed algorithm achieves better performance than state-of-the-art NOMA schemes and OMA schemes in terms of energy consumption and QoS satisfaction.

The rest of this paper is organized as follows: related works are reviewed in Section 2. In Section 3, the single-UAV-assisted heterogeneous network model and the problem formulation are presented in detail. In Section 4, we describe the proposed energy-effective relay selection and DRL-based NOMA transmission scheme. The simulation results are presented and discussed in Section 5. Finally, we draw conclusions in Section 6.

2. Related Works

In the literature, UAV-assisted air–ground heterogeneous networks have been extensively studied. To further increase spectrum efficiency, the combination of NOMA and UAV communication has also been investigated. In [11], the authors studied the NOMA transmission model in UAV-assisted IoT systems: they first used a matching game to optimize resource block (RB) allocation, and then used successive convex approximation to optimize the transmit power and the UAV's height. In [12], the authors considered a terrestrial heterogeneous IoT where multiple ground SUs and CUs coexist, and proposed an effective successive interference cancellation (SIC)-free NOMA transmission scheme to optimize RB allocation and power allocation. In [13], the authors proposed a game theory-based NOMA scheme to maximize energy efficiency (EE) in NOMA-based fog UAV wireless networks. In [14], a channel gain-based NOMA scheme was proposed and the network EE was optimized using alternating optimization. In [15], the authors jointly optimized UAV trajectory planning and sub-slot allocation to maximize the sum-rate of IoT devices in UAV-assisted IoT. The authors of [16] studied a multi-NOMA-UAV-assisted IoT system to increase the number of served IoT nodes and improve the system EE. In [17], the power allocation was optimized in NOMA clustering-enabled UAV-IoT. In [18], the joint optimization of UAV deployment and power allocation was proposed to maximize the sum-rate of ground users.

Due to the scarcity of spectrum resources and energy constraints, resource allocation and power optimization have also become a focus of research [19,20,21,22,23,24]. The authors in [19,20,21,22,23] studied UAV-assisted ground data collection and minimized the energy consumption of IoT devices in a UAV-assisted ground IoT by optimizing the UAV trajectories [19,20] and 3D deployment [23]. The authors in [21] also considered the UAV-assisted IoT, aiming to minimize the total data collection time of the UAV to save energy. By applying alternating optimization and successive convex approximation, they optimized the UAV's trajectory and transmit power, thereby saving energy while ensuring the users' QoS. The authors in [22] optimized the UAV's trajectory in terms of flight speed and acceleration, and obtained the optimal UAV trajectory and uplink transmit power under two problem formulations: maximizing the minimum average rate and maximizing EE. The authors in [24] jointly considered power optimization, RB allocation, and UAV location optimization under the SAG-IoT architecture to optimize energy efficiency.

Research on relay-based transmission has also been carried out. The network performance of using UAVs [25,26,27] and mobile devices [28,29,30] as relays has been studied. The authors in [28] exploited ground devices to disseminate the files transmitted by the UAV through ground D2D links, and adopted graph theory to optimize the resource allocation in NOMA-enabled transmission. The authors in [29,30] designed a multi-hop communication algorithm for the ground IoT and derived the outage probability of the relay links.

In addition, security problems in UAV-assisted networks have been widely studied [31,32]. Control schemes based on blockchain and artificial intelligence were proposed to secure drone networking [33,34]. In [35], an enhanced authentication protocol for drone communications was proposed, and the authors proved that their protocol better resists drone capture attacks. In [36], a lightweight mutual authentication scheme based on physical unclonable functions was proposed for UAV-ground BS authentication. In [37], the authors studied the physical layer security issue in a UAV-assisted cognitive relay system and proposed an alternating optimization-based algorithm to maximize the average worst-case secrecy rate.

A comparison of the existing literature in terms of energy saving for users and coverage expansion for UAVs is listed in Table 1. These studies either did not reduce the energy consumption of ground users while guaranteeing user QoS, or did not adopt the NOMA transmission scheme to increase spectrum efficiency. Therefore, the existing literature cannot guarantee the users' QoS and save energy under insufficient UAV coverage and limited resources. In addition, energy saving in relay selection has received insufficient attention, and few studies focus on user QoS and energy consumption in NOMA-enabled air–ground networks. The existing research assumes that the system bandwidth is sufficient and that the users' QoS can be guaranteed. However, in scenarios with limited system bandwidth and massive users, it is difficult to ensure the QoS requirements of all users. Motivated by this, we propose an energy-effective relay selection and resource allocation algorithm for NOMA UAV-assisted heterogeneous networks.

3. System Model

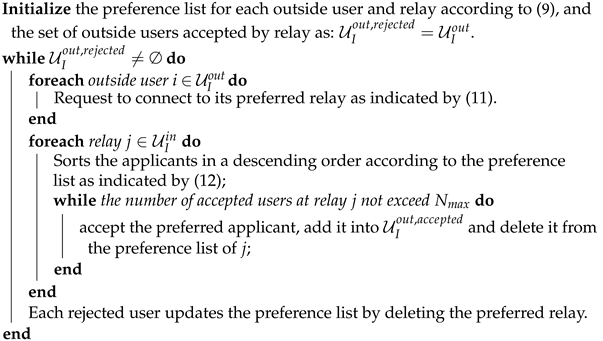

In this paper, we consider a single-UAV-assisted heterogeneous network, as shown in Figure 1. After a disaster, the ground BS in the affected area is out of service. To deliver the emergency calls and messages of ground CUs and the monitoring data of IoT users (sensor users), a rotary-wing UAV is deployed to hover above the target area and act as an aerial BS serving ground users. We focus on the scenario where the serving range of the UAV cannot cover all users in the target area, and we ignore the impact of surrounding UAVs, i.e., we consider a single-UAV network. Without loss of generality, some users are located outside the coverage area and cannot directly connect to the UAV; therefore, D2D-enabled multi-hop transmission is employed. For simplicity, we consider two hops: a user outside the UAV coverage transmits its data to a relay inside the coverage area, and the relay then delivers the data to the UAV. In addition, we assume that the channel resources in the network are limited. In this case, OMA transmission cannot serve a large number of ground users, so we adopt the NOMA scheme for the transmission of inside users. Because NOMA allows multiple users to transmit on the same channel resource, it greatly improves spectrum efficiency and enables the UAV to serve a large number of users with limited resources.

In Figure 1, the coverage of the UAV is partitioned into multiple rings. Clustering is performed by selecting users from different rings; e.g., inside CU #1, inside CU #2, and inside SU #1 form a NOMA cluster. The users in the same NOMA cluster occupy an exclusive portion of the system bandwidth, so there is no interference among users in different clusters.
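The ring-based clustering just described can be illustrated with a short Python sketch. It assumes equal-width rings measured by horizontal distance from the UAV and a round-robin rule that takes at most one user from each ring per cluster; the function `ring_clusters` and this pairing rule are hypothetical illustrations, not the authors' exact procedure.

```python
import math

def ring_clusters(positions, uav_xy, radius, n_rings):
    """Group inside users into NOMA clusters, one user per ring (hypothetical rule).

    positions: dict user_id -> (x, y) horizontal position in metres.
    Rings have equal width radius / n_rings, measured from the UAV's ground projection.
    """
    width = radius / n_rings
    rings = [[] for _ in range(n_rings)]
    for uid, xy in positions.items():
        # Ring index from horizontal distance; users on the outer edge go to the last ring.
        idx = min(int(math.dist(xy, uav_xy) // width), n_rings - 1)
        rings[idx].append(uid)
    clusters = []
    # Round-robin: each new cluster takes the next unassigned user from every non-empty ring.
    while any(rings):
        clusters.append([ring.pop(0) for ring in rings if ring])
    return clusters

users = {"a": (10, 0), "b": (60, 0), "c": (20, 0), "d": (70, 0)}
clusters = ring_clusters(users, (0, 0), radius=100, n_rings=2)  # [['a', 'b'], ['c', 'd']]
```

With two 50 m rings, the users at 10 m and 20 m fall into the inner ring and those at 60 m and 70 m into the outer ring, so each cluster pairs one near user with one far user.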

We denote the set of ground users as $\mathcal{K}$, which comprises the CUs and SUs, denoted as $\mathcal{K}_{\mathrm{C}}$ and $\mathcal{K}_{\mathrm{S}}$, respectively, i.e., $\mathcal{K} = \mathcal{K}_{\mathrm{C}} \cup \mathcal{K}_{\mathrm{S}}$. The set of SUs is divided into two parts, $\mathcal{K}_{\mathrm{S}}^{\mathrm{in}}$ and $\mathcal{K}_{\mathrm{S}}^{\mathrm{out}}$, which correspond to the SUs inside and outside the UAV coverage. We consider that the outside users only include SUs and that each outside SU can only select an inside SU as its relay; however, the proposed model and algorithm can be extended to more complicated scenarios. We delineate the UAV coverage based on path loss. Specifically, when the path loss from a ground user to the UAV is less than a predefined threshold [38], the user is regarded as an inside user and can be served by the UAV. Otherwise, it is regarded as an outside user, which can only communicate with the UAV through two-hop relay links. For simplicity, we assume that CUs and SUs have different QoS requirements, denoted as $R^{\mathrm{C}}$ and $R^{\mathrm{S}}$. In addition, we denote the positions of the UAV and user $k \in \mathcal{K}$ as $(\mathbf{q}, h)$ and $(\mathbf{w}_k, 0)$, where $\mathbf{q}$ is the horizontal location of the UAV and $h$ is the height of the UAV, which is fixed after deployment.

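Hence, the distance between the UAV and user $k$ is calculated as:

$$d_k = \sqrt{\lVert \mathbf{q} - \mathbf{w}_k \rVert^2 + h^2}.$$

Then, the probability of a line-of-sight link between the UAV and user $k$ is calculated as:

$$P_k^{\mathrm{LoS}} = \frac{1}{1 + a \exp\left(-b\left(\theta_k - a\right)\right)},$$

where $a$ and $b$ are constants determined by the environment, and $\theta_k$ is the elevation angle (in degrees) between the UAV and user $k$, which is calculated as:

$$\theta_k = \frac{180}{\pi}\arcsin\left(\frac{h}{d_k}\right).$$

Hence, the path loss between the UAV and user $k$ is calculated as:

$$PL_k = 20\log_{10}\left(\frac{4\pi f_c d_k}{c}\right) + P_k^{\mathrm{LoS}}\eta_{\mathrm{LoS}} + \left(1 - P_k^{\mathrm{LoS}}\right)\eta_{\mathrm{NLoS}},$$

where $f_c$ denotes the carrier frequency, $c$ is the speed of light, and $\eta_{\mathrm{LoS}}$ and $\eta_{\mathrm{NLoS}}$ denote the attenuation losses of LoS and NLoS links.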
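The channel model above can be evaluated numerically as follows. This Python sketch implements the probabilistic LoS path-loss model described in the text (3D distance, elevation angle in degrees, LoS probability with environment constants a and b, free-space loss plus LoS/NLoS attenuation) together with the threshold-based coverage test. The numerical parameters (a = 9.61, b = 0.16, 1 dB and 20 dB attenuation, 2 GHz carrier) are assumed urban-like values, not the paper's simulation settings, and the function names are hypothetical.

```python
import math

C = 3e8  # speed of light (m/s)

def air_ground_path_loss(uav_xy, h, user_xy, fc_hz, a, b, eta_los_db, eta_nlos_db):
    """Mean UAV-to-user path loss (dB) under the probabilistic LoS model."""
    d = math.hypot(math.dist(uav_xy, user_xy), h)      # 3D distance
    theta = math.degrees(math.asin(h / d))              # elevation angle in degrees
    p_los = 1.0 / (1.0 + a * math.exp(-b * (theta - a)))
    fspl = 20 * math.log10(4 * math.pi * fc_hz * d / C)
    return fspl + p_los * eta_los_db + (1.0 - p_los) * eta_nlos_db

def is_inside(path_loss_db, threshold_db):
    """Coverage test: a user is an inside user if its path loss is below the threshold."""
    return path_loss_db < threshold_db

# Assumed example parameters (urban-like values, not taken from this paper).
params = dict(fc_hz=2e9, a=9.61, b=0.16, eta_los_db=1.0, eta_nlos_db=20.0)
pl_near = air_ground_path_loss((0, 0), 100, (50, 0), **params)
pl_far = air_ground_path_loss((0, 0), 100, (450, 0), **params)
```

Moving the user away from the UAV both lengthens the link and lowers the elevation angle, so the LoS probability falls and the NLoS attenuation weighs more heavily.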

For the air–ground uplink, we adopt NOMA to increase spectrum efficiency. In a NOMA-based system, a user suffers potential interference from the users that occupy the same spectrum resource. In our scenario, the UAV is assumed to be equipped with a SIC receiver that can demodulate the target signal from the superimposed signal, and the decoding order is assumed to run from the user with the highest received power to the user with the lowest received power. Hence, user $k$ is interfered with only by the users whose received power is lower than its own. Therefore, the uplink signal-to-interference-plus-noise ratio (SINR) of user $k$ on sub-band $n$ is calculated as:

$$\gamma_{k,n} = \frac{p_k h_{k,n} 10^{-PL_k/10}}{\sum_{j \in \Phi_{k,n}} p_j h_{j,n} 10^{-PL_j/10} + \sigma^2},$$

where $p_k$ is the uplink transmit power of user $k$, $\Phi_{k,n}$ is the set of users who reuse sub-band $n$ and whose received power at the UAV is lower than that of user $k$, $h_{k,n}$ is the small-scale fading between the UAV and user $k$ on sub-band $n$, which follows an exponential distribution with unit mean, and $\sigma^2$ is the noise power.

Further, the uplink transmission rate of inside user $k$ (CU or SU) is calculated as:

$$R_k = \sum_{n=1}^{N} x_{k,n} B \log_2\left(1 + \gamma_{k,n}\right),$$

where $x_{k,n} \in \{0,1\}$ is a binary indicator showing whether user $k$ transmits on sub-band $n$, $N$ is the number of sub-bands, and $B$ is the bandwidth of a sub-band of the uplink air–ground channel.
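To make the SIC ordering concrete, the following Python sketch computes the uplink rates of users sharing one sub-band: received powers are sorted in descending order, and each user is interfered with only by the weaker users decoded after it. The function name and the representation of each user's channel as a single gain (path loss times small-scale fading) are assumptions for illustration.

```python
import math

def noma_uplink_rates(tx_power, gain, noise, bandwidth):
    """Per-user uplink rates (bit/s) on one shared sub-band with SIC at the UAV.

    tx_power[i]: transmit power of user i (W)
    gain[i]:     total channel gain of user i (large-scale gain x small-scale fading)
    Users are decoded from the strongest to the weakest received power, so each user
    is interfered with only by users still undecoded, i.e. those with lower received power.
    """
    rx = [p * g for p, g in zip(tx_power, gain)]
    # Stable descending sort: ties are broken by user index.
    order = sorted(range(len(rx)), key=lambda i: rx[i], reverse=True)
    rates = [0.0] * len(rx)
    for pos, k in enumerate(order):
        interference = sum(rx[j] for j in order[pos + 1:])
        sinr = rx[k] / (interference + noise)
        rates[k] = bandwidth * math.log2(1.0 + sinr)
    return rates
```

For two users with received powers 4 and 1 and unit noise, the stronger user sees SINR 4/(1+1) = 2, while the weaker user, decoded last, sees only noise and gets SINR 1.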

For the relay link, the transmission rate is calculated as:

$$R_k^{\mathrm{D}} = B_{\mathrm{D}} \log_2\left(1 + \frac{p_k g_{k,r_k} h_{k,r_k}}{\sigma^2}\right),$$

where $r_k$ is the associated relay of outside user $k$, $g_{k,r_k}$ is the channel gain from outside user $k$ to relay $r_k$, which depends on the distance $d_{k,r_k}$ between user $k$ and relay $r_k$, $h_{k,r_k}$ is the small-scale fading between outside user $k$ and relay $r_k$, which follows an exponential distribution with unit mean, and $B_{\mathrm{D}}$ is the bandwidth of a resource block in the relay link.

Note that interference among relay links is not considered because orthogonal frequencies are used for different relay links. In addition, we assume that the relay links and the air–ground links reuse the same frequency band; however, the interference from the relay links to the UAV can be neglected due to the low transmit power and long distance.

In this paper, we characterize the QoS requirements of CUs and SUs as minimum transmission rates. For CU-$k$ and SU-$k$, the QoS requirement is represented as the minimum transmission rate $R_k^{\mathrm{C}}$ and $R_k^{\mathrm{S}}$, respectively. For each relay node $k$, its total QoS requirement consists of the rate requirement of its own air–ground link plus those of all the outside users connected to $k$:

$$R_k^{\mathrm{req}} = R_k^{\mathrm{S}} + \sum_{i \in \mathcal{O}_k} R_i^{\mathrm{S}},$$

where $\mathcal{O}_k \subseteq \mathcal{K}_{\mathrm{S}}^{\mathrm{out}}$ is the set of outside sensor users choosing $k$ as their relay node.

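In this paper, we aim to reduce energy consumption while guaranteeing the QoS of users. For the users in $\mathcal{K}_{\mathrm{C}} \cup \mathcal{K}_{\mathrm{S}}^{\mathrm{in}}$, the transmit power and resource allocation should be optimized to alleviate intra-cell interference and increase user rates. For the users in $\mathcal{K}_{\mathrm{S}}^{\mathrm{out}}$, relay selection should also be performed in an energy-effective manner. The transmit power of all users is used to characterize power consumption, and QoS satisfaction is characterized by an indicator function denoting whether the QoS requirement of a user is satisfied. We formulate the target problem as follows:

$$
\begin{aligned}
\min_{\{p_k\},\{x_{k,n}\},\{r_k\}}\quad & \omega\lambda\sum_{k\in\mathcal{K}}p_k-(1-\omega)\left[\sum_{k\in\mathcal{K}_{\mathrm{C}}}\mathbb{I}\left(R_k\ge R_k^{\mathrm{C}}\right)+\sum_{k\in\mathcal{K}_{\mathrm{S}}}\mathbb{I}\left(R_k\ge R_k^{\mathrm{S}}\right)\right]\\
\text{s.t.}\quad & \text{C1: } P_{\mathrm{C}}^{\min}\le p_k\le P_{\mathrm{C}}^{\max},\ \forall k\in\mathcal{K}_{\mathrm{C}},\\
& \text{C2: } P_{\mathrm{S}}^{\min}\le p_k\le P_{\mathrm{S}}^{\max},\ \forall k\in\mathcal{K}_{\mathrm{S}},\\
& \text{C3: } \sum_{n=1}^{N}x_{k,n}=1,\ \forall k\in\mathcal{K}_{\mathrm{C}}\cup\mathcal{K}_{\mathrm{S}}^{\mathrm{in}},
\end{aligned}
\tag{8}
$$

where $p_k$ is the transmit power of user $k$; $R_k^{\mathrm{C}}$ and $R_k^{\mathrm{S}}$ are the QoS requirements of CU-$k$ and SU-$k$, respectively; $\mathbb{I}(\cdot)$ is the QoS indicator function; $\omega$ is the weighting factor characterizing the relative importance of power consumption and users' QoS satisfaction; and $\lambda$ is a tuning coefficient that brings the transmit power and the QoS indicator to the same order of magnitude. C1 and C2 denote the power constraints of CUs and SUs, where $P_{\mathrm{C}}^{\min}$ and $P_{\mathrm{S}}^{\min}$ are the minimum transmit powers and $P_{\mathrm{C}}^{\max}$ and $P_{\mathrm{S}}^{\max}$ are the maximum transmit powers of CUs and SUs. C3 indicates that each inside user can occupy only one sub-band. The symbols used in the paper are described in Table 2.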

4. Proposed Algorithm

As can be seen, problem (8) is computationally hard. Assume that the uplink transmit power of inside users can be discretized into $L$ levels and that the total number of sub-bands in the network is $N$. For the inside users, the number of combinations of power level and sub-band is $(LN)^{|\mathcal{K}_{\mathrm{C}}|+|\mathcal{K}_{\mathrm{S}}^{\mathrm{in}}|}$ in the worst case. For the outside users, the number of combinations of power level is $L^{|\mathcal{K}_{\mathrm{S}}^{\mathrm{out}}|}$ in the worst case. Hence, the total number of combinations of power level and sub-band in the network is $(LN)^{|\mathcal{K}_{\mathrm{C}}|+|\mathcal{K}_{\mathrm{S}}^{\mathrm{in}}|}L^{|\mathcal{K}_{\mathrm{S}}^{\mathrm{out}}|}$, which makes solving problem (8) time-consuming.
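The growth of the search space can be checked with exact integer arithmetic. The sketch assumes a worst case in which every inside user independently picks one of `levels` power levels and one of `subbands` sub-bands, and every outside user picks one power level; the function name and the sizes in the example are hypothetical.

```python
def joint_search_space(levels, subbands, n_inside, n_outside):
    """Worst-case number of joint power/sub-band configurations (exact integer)."""
    inside = (levels * subbands) ** n_inside   # each inside user: one level and one sub-band
    outside = levels ** n_outside              # each outside user: one power level
    return inside * outside

# Hypothetical sizes: 10 power levels, 16 sub-bands, 64 inside and 64 outside users.
count = joint_search_space(10, 16, 64, 64)
print(f"about 10^{len(str(count)) - 1} configurations")  # about 10^205 configurations
```

Even with these modest hypothetical sizes the count has 206 decimal digits, which is why exhaustive search over problem (8) is impractical.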

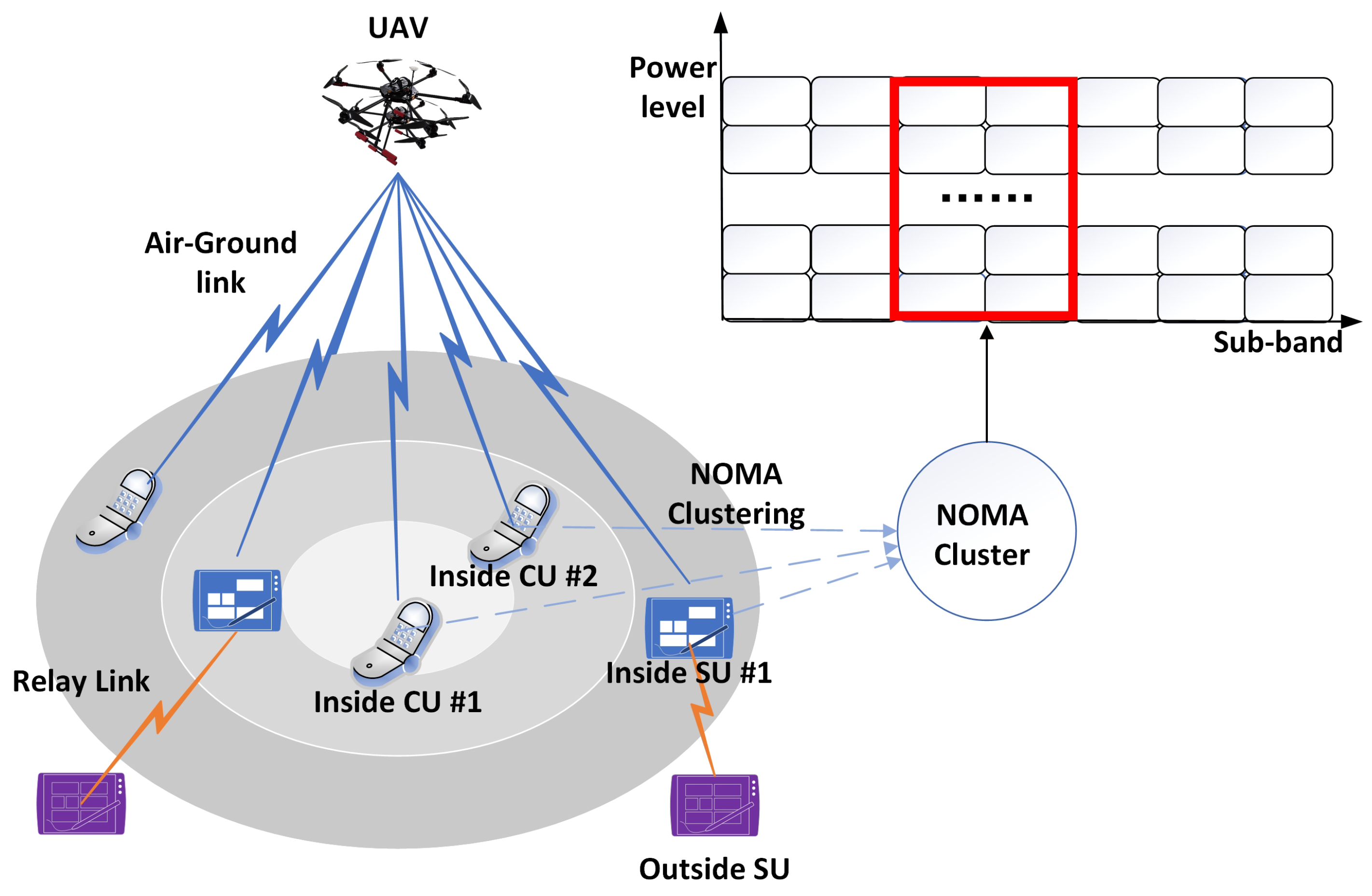

Due to the huge number of combinations of power levels and sub-bands, we decompose the target problem and optimize the objective for inside users and outside users separately. The flowchart of the proposed algorithm is shown in Figure 2. First, relay selection is performed to select a relay for each outside SU, which determines the association between outside SUs and inside SUs. Next, based on the association result, the DRL-based power and resource selection algorithm is performed for inside users. Finally, the optimal power and resource selection strategy for inside users is obtained.

In this section, we first propose an energy-efficient relay selection scheme for outside users based on a many-to-one matching game, which considers energy consumption and QoS requirements in the relay selection process. After determining the relay for each outside user, we perform joint power and sub-band selection for the inside users. Deep reinforcement learning is adopted to adjust the power and sub-band selection dynamically in different environments.

4.1. Energy-Efficient Relay Selection Algorithm

To restore the connection for outside users, we first associate outside SUs with relays (i.e., inside SUs) in an energy-effective manner. The essence of our scheme is to determine the association according to the energy consumed by outside users. We derive the preference lists of the relays and the outside SUs by calculating a utility function related to the consumed energy and sorting the candidates in descending order of utility. After each outside user proposes to its preferred relay, the relays accept applications according to their pre-built preference lists and the maximum number of users each relay can accept. Outside users are thus inclined to select the relay that consumes as little energy as possible.

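The utility $U_{i,j}$ for user $i \in \mathcal{K}_{\mathrm{S}}^{\mathrm{out}}$ to connect to a candidate relay $j \in \mathcal{K}_{\mathrm{S}}^{\mathrm{in}}$ and the utility $U_{j,i}$ for relay $j$ to accept applicant $i$ (9) are both determined by the energy consumed on the link between $i$ and $j$, which comprises $P_0$, the static circuit energy consumption at the SU, and $p_{i,j}^{\min}$, the minimum transmit power for user $i$ to reach its QoS requirement when connected to relay $j$; a higher utility corresponds to a lower energy cost. Setting the relay-link rate equal to the requirement $R_i^{\mathrm{S}}$, the minimum transmit power is calculated as:

$$p_{i,j}^{\min} = \frac{\sigma^2\left(2^{R_i^{\mathrm{S}}/B_{\mathrm{D}}} - 1\right)}{g_{i,j} h_{i,j}}.$$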

In our paper, the association between outside users and inside users is formulated as a many-to-one matching game, in which the players are the outside users and the inside users (relays). Each player has a preference list, and the final association is obtained based on the following preference order:

Definition 1 (preference order). For each outside user (or relay), the preference order is defined as a complete, reflexive, and transitive binary relation over the entire set of relays (or outside users).

For each outside user $i \in \mathcal{K}_{\mathrm{S}}^{\mathrm{out}}$, the preference relation $\succ_i$ over all possible relays is defined as follows:

$$j \succ_i j' \iff U_{i,j} > U_{i,j'},$$

which means that user $i$ prefers $j$ to $j'$ as its relay.

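For each relay $j \in \mathcal{K}_{\mathrm{S}}^{\mathrm{in}}$, the preference relation $\succ_j$ over all outside users is defined as follows:

$$i \succ_j i' \iff U_{j,i} > U_{j,i'},$$

which means that relay $j$ prefers to act as the relay of user $i$ rather than of user $i'$.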

The detailed process of the proposed energy-effective relay selection algorithm is summarized in Algorithm 1. At the beginning, the outside users and relays exchange their channel state information and other related information. Each outside user and relay builds its preference list according to the utility calculated as in (9). Then, each outside user sends a connection request to its most preferred relay. After receiving the proposals, each relay ranks the applicants according to its preference list. The maximum number of outside users that can be accepted by a relay is set to $q$. Each relay accepts up to $q$ of its most preferred applicants and rejects the rest. Then, each rejected applicant proposes to its next preferred relay. The association process terminates when all outside users are associated with a relay.

Algorithm 1: Energy-efficient relay selection for outside users.
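The relay selection procedure of Algorithm 1 resembles the classical deferred-acceptance (Gale–Shapley) procedure with relay quotas. The Python sketch below follows that procedure under stated assumptions: the minimum transmit power of an outside user is obtained by inverting the Shannon rate of the relay link, both sides rank a pair by its energy cost (static circuit power plus minimum transmit power, lower is better), and each relay holds at most `quota` applicants. The utility form, the names `relay_selection` and `min_tx_power`, and the handling of users rejected by every relay (they stay unmatched) are assumptions, not the authors' exact Algorithm 1.

```python
from collections import deque

def min_tx_power(rate_req, bandwidth, gain, noise):
    """Smallest p with bandwidth * log2(1 + p * gain / noise) >= rate_req."""
    return noise * (2.0 ** (rate_req / bandwidth) - 1.0) / gain

def relay_selection(users, relays, gain, rate_req, bandwidth, noise, p_circuit, quota):
    """Many-to-one deferred acceptance: outside SUs propose, relays hold up to `quota`.

    gain[(i, j)]: channel gain between outside user i and relay j.
    Assumed preference: both sides rank a pair by its energy cost
    p_circuit + p_min (lower cost = higher utility).
    Returns {outside_user: relay}; a user rejected by every relay stays unmatched.
    """
    cost = {(i, j): p_circuit + min_tx_power(rate_req, bandwidth, gain[(i, j)], noise)
            for i in users for j in relays}
    prefs = {i: sorted(relays, key=lambda j: cost[(i, j)]) for i in users}
    next_choice = {i: 0 for i in users}
    held = {j: [] for j in relays}
    free = deque(users)
    while free:
        i = free.popleft()
        if next_choice[i] == len(prefs[i]):
            continue  # every relay has rejected user i
        j = prefs[i][next_choice[i]]
        next_choice[i] += 1
        held[j].append(i)
        # The relay keeps its `quota` cheapest applicants and rejects the rest.
        held[j].sort(key=lambda u: cost[(u, j)])
        while len(held[j]) > quota:
            free.append(held[j].pop())
    return {i: j for j, members in held.items() for i in members}
```

Because each user proposes to each relay at most once, the loop terminates after at most (number of users) × (number of relays) proposals.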

4.2. Deep Reinforcement Learning-Based Power and Sub-Band Selection for NOMA Transmission

In this subsection, we perform power and sub-band selection for inside users in the single-UAV-assisted heterogeneous network. After the relay selection algorithm is executed for outside users, the D2D links between the outside users and the inside relays are established. As a result, inside users have different QoS requirements in terms of transmission rate, and the allocation of limited resources among inside users has a significant impact on system performance.

Therefore, we adopt the NOMA scheme for the transmission of inside users, which increases spectrum efficiency by allowing multiple users to share the same channel resource. To reduce the complexity of SIC, NOMA clustering is performed by dividing the inside users into different clusters according to their geographic locations [39]. The users in the same cluster perform joint power and sub-band selection to reduce power consumption while ensuring that their QoS requirements are met. In NOMA clustering, each cluster occupies an orthogonal frequency band, so there is no interference among users from different clusters.

However, resource allocation and interference cancellation inside a NOMA cluster remain crucial issues. When the external environment (i.e., channel state information, transmit power, or resource occupancy status) changes, traditional NOMA schemes need iterative calculation to obtain the power and channel selection scheme. In addition, traditional NOMA schemes fail to guarantee the QoS of users with limited resources.

To solve this problem, we resort to deep reinforcement learning (DRL) for dynamic and adaptive resource allocation, with the goal of reducing energy consumption while guaranteeing users' QoS. DRL can tackle complex problems by learning the resource allocation strategy directly from interactions with a changing external environment. It builds on the learning and prediction functions of reinforcement learning and uses deep neural networks as powerful function approximators, providing an autonomous decision-making mechanism for learning agents. Compared with traditional NOMA schemes, the proposed NOMA scheme can adaptively obtain the optimal power and channel selection strategy for the inside users using a pre-trained policy.

Therefore, we first give a brief introduction to DRL and then describe the framework and implementation of the proposed DRL-based power and sub-band selection.

4.2.1. Basis of Deep Reinforcement Learning

The target problem (8) involves competition among users; it is non-convex and difficult to solve with traditional optimization techniques. In this case, reinforcement learning (RL) can effectively solve the problem by interacting with the unknown environment and improving its decisions to maximize the target value.

RL is a framework for learning, prediction, and decision-making, which naturally obtains a strategy through the interaction between agents and the environment. The basic elements of RL can be represented by a five-tuple $\langle \mathcal{S}, \mathcal{A}, \mathcal{P}, \mathcal{R}, \gamma \rangle$ of states, actions, transition probabilities, rewards, and discount factor. The agents learn and make decisions by sensing the state of the environment $s \in \mathcal{S}$. In time slot $t$, the agent executes an action $a_t \in \mathcal{A}$ following the strategy $\pi$ to select a sub-band and transmit power level according to the current state $s_t$. Both the state transitions and the obtained rewards are stochastic, so the process can be modeled as a Markov decision process (MDP). When the agent takes action $a_t$, the transition from $s_t$ to $s_{t+1}$ and the acquisition of reward $r_t$ are characterized by the conditional transition probability $\mathcal{P}(s_{t+1}, r_t \mid s_t, a_t)$.

The main goal of reinforcement learning is to find the strategy that maximizes the cumulative discounted reward, which considers not only the immediate reward but also discounted future rewards:

$$G_t = \sum_{i=0}^{\infty} \gamma^{i} r_{t+i},$$

where $r_t$ is the instantaneous reward received by the agent in time slot $t$ and $\gamma \in [0,1)$ is the discount factor. When $\gamma$ is close to 0, the agent is more concerned with short-term rewards; when $\gamma$ is close to 1, long-term rewards are considered more important.
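The cumulative discounted reward can be computed for a finite reward sequence by accumulating backwards from the last reward, which avoids computing powers of the discount factor explicitly. A minimal Python sketch (the function name is hypothetical):

```python
def discounted_return(rewards, gamma):
    """G_t = sum_i gamma**i * r_{t+i}, accumulated from the last reward backwards."""
    g = 0.0
    for r in reversed(rewards):
        g = r + gamma * g
    return g

print(discounted_return([1.0, 1.0, 1.0], 0.5))  # 1 + 0.5 + 0.25 = 1.75
print(discounted_return([1.0, 1.0, 1.0], 0.0))  # only the immediate reward: 1.0
```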

In this paper, we adopt the most commonly used reinforcement learning method, Q-learning, to solve the MDP. In Q-learning, a Q-table that stores the Q-values of different state–action pairs is constructed to represent the strategy. According to the Bellman equation and the temporal difference learning method [40], after the agent observes state $s_t$ at time $t$ and executes action $a_t$, the corresponding Q-value is updated as:

$$Q(s_t, a_t) \leftarrow Q(s_t, a_t) + \alpha\left[r_t + \gamma \max_{a} Q(s_{t+1}, a) - Q(s_t, a_t)\right],$$

where $\alpha$ is the learning rate. As the state transitions proceed during learning, the Q-table gradually stabilizes, and the optimal strategy of each agent can be obtained.
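The Q-value update above corresponds to the following tabular Python sketch, in which the Q-table is a dictionary keyed by (state, action) pairs and unseen pairs default to zero; the function name and the state and action labels are hypothetical.

```python
from collections import defaultdict

def q_update(Q, s, a, r, s_next, actions, alpha, gamma):
    """One tabular Q-learning (temporal-difference) update; Q maps (state, action) -> value."""
    td_target = r + gamma * max(Q[(s_next, b)] for b in actions)
    Q[(s, a)] += alpha * (td_target - Q[(s, a)])
    return Q[(s, a)]

Q = defaultdict(float)                   # unseen state-action pairs start at 0
actions = ["low_power", "high_power"]    # hypothetical action labels
q_update(Q, "s0", "low_power", r=1.0, s_next="s1", actions=actions, alpha=0.5, gamma=0.9)
```

After this single update, Q("s0", "low_power") moves halfway (alpha = 0.5) from 0 towards the TD target of 1.0.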

However, Q-learning (QL) can only effectively solve problems whose states and actions are discrete and finite, because a Q-table can only record the Q-values of a limited number of state–action pairs. In many practical problems, the number of states and actions is large, which makes QL inefficient. In this case, deep Q-learning (DQL), which combines QL with deep learning (DL), can learn the strategy from state transitions in high-dimensional and continuous state spaces, and it has been widely studied and applied in UAV networks [41,42].

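The core idea of DQL is to approximate the complex nonlinear Q-function using a deep Q-network (DQN):

$$Q(s, a; \theta) \approx Q^{*}(s, a),$$

where $Q(s, a; \theta)$ is the Q-value function approximated by the DQN with parameters $\theta$, and $Q^{*}(s, a)$ is the optimal Q-value function, i.e., the one yielding the best expected future reward.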

In a DQN, the input is the state vector, and the output is a vector containing the Q-value of each action in that state. At time $t$, the agent observes the state $s_t$, chooses the action $a_t$ following the strategy, and receives the reward $r_t$. Then, the DQN parameters can be obtained by minimizing the following loss function:

$$\mathcal{L}(\theta) = \mathbb{E}\left[\left(r_t + \gamma \max_{a'} Q(s_{t+1}, a'; \theta) - Q(s_t, a_t; \theta)\right)^2\right].$$

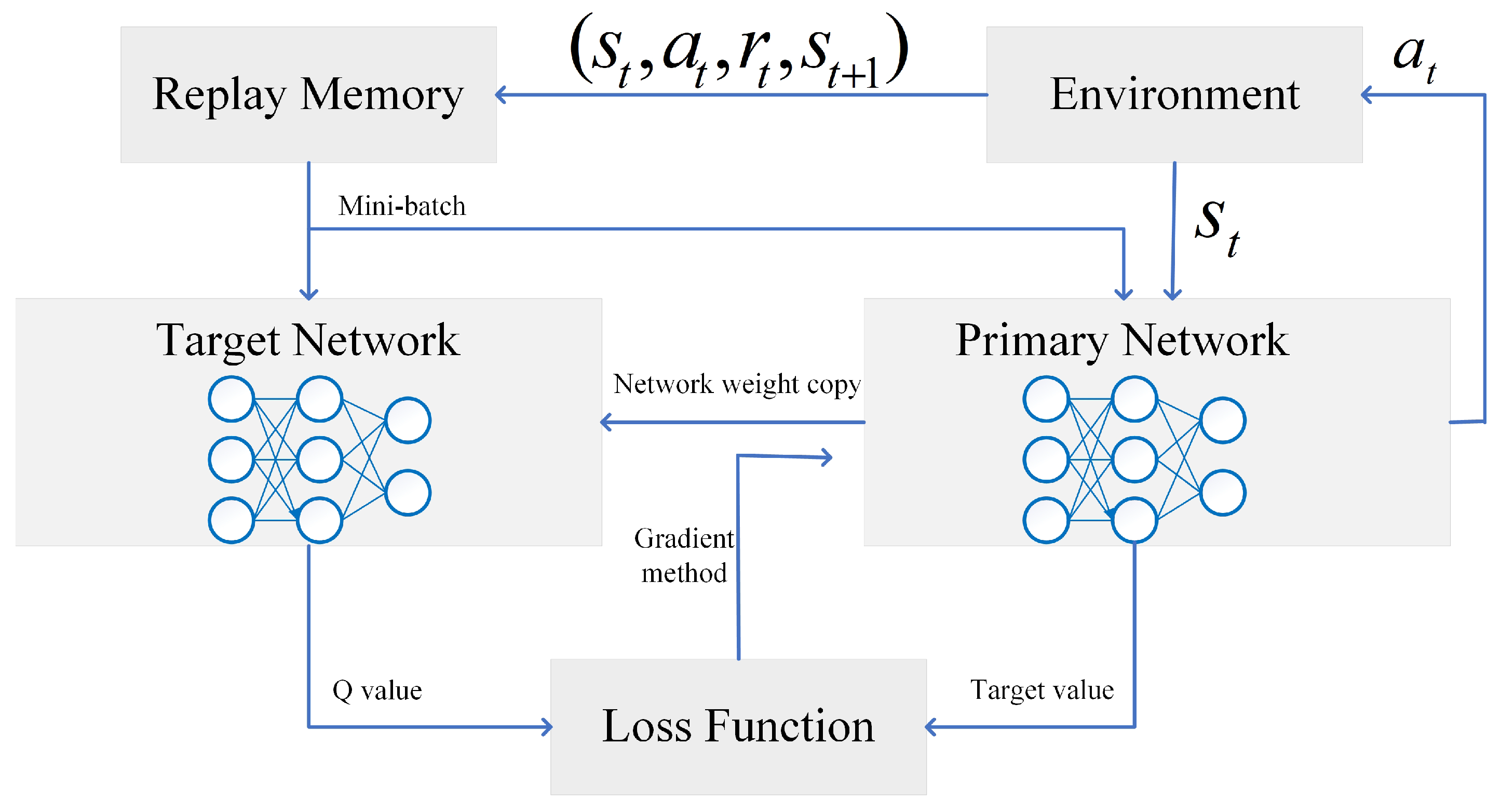

In addition, to overcome non-convergence and instability, experience replay and a target network [43] are introduced into the DQN to stabilize learning. To reduce the gap between the target Q-value, computed by the target network on samples drawn through experience replay, and the Q-value calculated by the primary Q-network, the parameters $\theta$ of the neural network are updated through backpropagation using gradient descent [40]. Hence, the loss function to be minimized is defined as:

$$\mathcal{L}(\theta) = \frac{1}{|\mathcal{D}|}\sum_{(s_t, a_t, r_t, s_{t+1}) \in \mathcal{D}}\left(r_t + \gamma \max_{a'} Q(s_{t+1}, a'; \theta^{-}) - Q(s_t, a_t; \theta)\right)^2,$$

where $\mathcal{D}$ is the mini-batch sampled from the replay buffer and $\theta^{-}$ denotes the parameters of the target network. The detailed structure of the DQN is shown in Figure 3.
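The following NumPy sketch illustrates how experience replay and the target network enter the loss: transitions are sampled uniformly from a bounded replay buffer, the TD target is computed with the frozen target parameters, and a gradient step reduces the mean squared TD error. To keep it short, a linear function of the state stands in for the deep network and its gradient is written out by hand; in practice the primary network would be a multilayer network trained with a deep learning framework, and the target parameters would be copied from the primary network periodically (`target.theta = online.theta.copy()`). All class and function names are hypothetical.

```python
import random
from collections import deque

import numpy as np

class LinearQ:
    """Linear stand-in for the DQN: Q(s, a; theta) = theta[a] . s (an assumption for brevity)."""
    def __init__(self, state_dim, n_actions, rng):
        self.theta = rng.normal(scale=0.1, size=(n_actions, state_dim))

    def q_values(self, states):
        return states @ self.theta.T          # shape (batch, n_actions)

def sample_minibatch(buffer, size):
    """Uniform experience replay: draw transitions (s, a, r, s_next) without replacement."""
    return random.sample(list(buffer), size)

def dqn_step(online, target, batch, gamma, lr):
    """One gradient-descent step on the mean squared TD error; returns the pre-update loss."""
    s, a, r, s_next = (np.array(x) for x in zip(*batch))
    # TD target from the frozen target network: r + gamma * max_a' Q(s', a'; theta^-)
    y = r + gamma * target.q_values(s_next).max(axis=1)
    td_error = online.q_values(s)[np.arange(len(a)), a] - y
    loss = float(np.mean(td_error ** 2))
    # d(loss)/d(theta[a_i]) = (2 / B) * td_error_i * s_i, accumulated per action row
    grad = np.zeros_like(online.theta)
    np.add.at(grad, a, (2.0 / len(a)) * td_error[:, None] * s)
    online.theta -= lr * grad
    return loss

rng = np.random.default_rng(0)
online, target = LinearQ(3, 2, rng), LinearQ(3, 2, rng)
target.theta = online.theta.copy()            # target network starts as a copy
buffer = deque(maxlen=1000)                   # bounded replay buffer
for _ in range(64):
    buffer.append((rng.normal(size=3), int(rng.integers(2)), float(rng.normal()), rng.normal(size=3)))
batch = sample_minibatch(buffer, 16)
```

Freezing the target parameters between synchronizations turns each mini-batch update into a regression towards fixed targets, which is the stabilizing effect described above.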

4.2.2. Customized DQN Design

In the proposed reinforcement learning framework, the agents are the inside users, and the actions are all combinations of power level and sub-band that each agent can select. The uplink transmit power of inside users is discretized into $L$ levels, and each cluster is assigned $N_c$ sub-bands; hence, the action space of each agent has dimension $L N_c$. The observable environment state of each agent $k$ at time slot $t$ consists of the following parts:

The instantaneous channel state information of all sub-bands at time slot $t$: $\mathbf{H}_k^t = \{h_{k,1}^t, h_{k,2}^t, \ldots, h_{k,N_c}^t\}$, where $N_c$ is the number of sub-bands of each cluster and $h_{k,n}^t$ is the small-scale channel gain of sub-band $n$ between the UAV and user $k$ at time slot $t$.

The interference power that user $k$ receives on each sub-band at time slot $t$: $\mathbf{I}_k^t = \{I_{k,1}^t, I_{k,2}^t, \ldots, I_{k,N_c}^t\}$, where $I_{k,n}^t$ denotes the potential interference on sub-band $n$ that agent $k$ receives at time slot $t$.

The ACK indicator vector of the users in the same cluster as the agent: $\mathbf{A}_k^t = \{\mathrm{ack}_1^t, \mathrm{ack}_2^t, \ldots, \mathrm{ack}_M^t\}$, where $\mathrm{ack}_j^t = 1$ means that the QoS requirement of user $j$ is met and $M$ is the total number of users in the cluster.
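The three parts of the observed state can be concatenated into a single input vector for the DQN, and each output index of the DQN can be mapped back to a (power level, sub-band) pair. The Python sketch below assumes a power-major ordering of the action indices, i.e., index = power_level × (number of sub-bands) + sub_band; this layout, the function names, and the example values are assumptions.

```python
import numpy as np

def build_state(channel_gains, interference, ack):
    """Flatten the observed state (channel gains, interference, ACK vector) into one DQN input."""
    return np.concatenate([np.asarray(channel_gains, dtype=float),
                           np.asarray(interference, dtype=float),
                           np.asarray(ack, dtype=float)])

def decode_action(action_index, n_subbands):
    """Map a flat DQN output index to (power_level, sub_band); power-major layout assumed."""
    return divmod(action_index, n_subbands)

# Hypothetical agent: 4 sub-bands per cluster, 3 users in its cluster, 5 power levels.
state = build_state([0.8, 1.3, 0.2, 0.9], [0.0, 2e-9, 0.0, 5e-10], [1, 0, 1])
n_levels, n_subbands = 5, 4
power_level, sub_band = decode_action(13, n_subbands)   # (3, 1)
```

With 5 power levels and 4 sub-bands, the DQN output layer has 20 entries, one per (power level, sub-band) combination.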

Therefore, the state observed by each agent at time $t$ can be expressed as $s_k^t = \{\mathbf{H}_k^t, \mathbf{I}_k^t, \mathbf{A}_k^t\}$. According to the agent's observed state $s_k^t$ and the selected action $a_k^t$ at time slot $t$, the environment transits to a new state $s_k^{t+1}$, and the agent receives an immediate reward $r_k^t$. In this paper, we aim to reduce the energy consumption of inside users while ensuring that the QoS of outside users is not degraded. In the proposed reinforcement learning framework, the reward function is consistent with the objective function (8) and consists of two parts, namely the power consumption and the summed QoS indicators of all users in the same cluster. Specifically, the instantaneous reward of agent $k$ (inside CU or SU) at time slot $t$ is designed as:

$$r_k^t = -\omega\lambda\sum_{j\in\mathcal{C}_k\cup\mathcal{S}_k}p_j + (1-\omega)\left[\sum_{j\in\mathcal{C}_k}\mathbb{I}\left(R_j\ge R_j^{\mathrm{C}}\right)+\sum_{j\in\mathcal{S}_k}\mathbb{I}\left(R_j\ge R_j^{\mathrm{S}}\right)\right],$$

where $\mathcal{C}_k$ and $\mathcal{S}_k$ denote the CUs and SUs in the same cluster as user $k$, with $k \in \mathcal{C}_k \cup \mathcal{S}_k$.
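The reward can be computed per agent from the members of its cluster. The text states only that the reward combines the cluster's power consumption with the sum of its QoS indicators, consistently with problem (8); the Python sketch below assumes the weighted form −ω·λ·Σp + (1−ω)·Σ𝟙(R ≥ R_req), with `omega` and `lam` playing the roles of the weighting factor and tuning coefficient. Treat the exact form and the function name as assumptions.

```python
def cluster_reward(powers, rates, requirements, omega, lam):
    """Reward of one agent from its cluster members (assumed weighted form).

    powers/rates/requirements: per-user lists for all CUs and SUs in the agent's cluster.
    omega weighs power against QoS; lam rescales power to the indicator's magnitude.
    """
    power_term = lam * sum(powers)
    qos_term = sum(1 for r, req in zip(rates, requirements) if r >= req)  # users meeting QoS
    return -omega * power_term + (1.0 - omega) * qos_term
```

Raising `omega` pushes the learned policy towards lower transmit power, while lowering it rewards satisfying more rate requirements in the cluster.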

4.2.3. Implementation of Deep Q-Learning

Deep Q-learning has two stages: offline training and testing. Unlike supervised DL, DQL has no separate training or test data set; each agent learns its strategy by collecting experience from state transitions, and the performance of the learned policy is then tested in a realistic environment. In the offline training stage, the agent explores as many states as possible by interacting with the environment, continuously improves its action selection strategy, and finally obtains a stable Q-function approximation. When offline training is completed, the agent knows which action to perform in each environment state to obtain the best cumulative discounted reward. In the testing stage, the agent exploits the learned policy (approximation function) to guide action selection in different environment states.

Because the agents execute their actions independently, an agent that acts synchronously with the others has no knowledge of the actions they select. In this case, the interference values in the input state vector are not up to date, and the state observed by each agent cannot accurately represent the environment in real time. To this end, the agents are designed to update their actions asynchronously: in each time slot, only one agent performs action selection. Under this asynchronous strategy, each agent can observe the environmental changes caused by the behavior of the other agents through the interference power levels and ACK indicators recorded in its input state vector. In this way, wrong action selections caused by inaccurate observation of the environment state can be mitigated.
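A minimal sketch of this asynchronous update rule follows. It assumes a round-robin order (the text only states that one agent acts per slot, not which one) and uses the current joint action as a stand-in for the interference and ACK observations. `run_async_slots` and the toy policy `least_loaded_subband` are hypothetical names, not part of the proposed algorithm.

```python
from typing import Callable, List

# A policy maps (agent index, current joint action) to that agent's new action.
Policy = Callable[[int, List[int]], int]


def run_async_slots(num_agents: int, num_slots: int, policy: Policy,
                    initial_actions: List[int]) -> List[List[int]]:
    """Asynchronous updating under an assumed round-robin order.

    In each slot only agent t % num_agents re-selects its action; all other
    agents keep their previous actions. Returns the joint action after each slot.
    """
    actions = list(initial_actions)
    history: List[List[int]] = []
    for t in range(num_slots):
        k = t % num_agents                     # only agent k acts in slot t
        actions[k] = policy(k, list(actions))  # k sees the others' latest actions
        history.append(list(actions))
    return history


def least_loaded_subband(k: int, joint: List[int], num_subbands: int = 4) -> int:
    """Toy stand-in for a learned policy: pick the sub-band used by the fewest other agents."""
    loads = [0] * num_subbands
    for j, a in enumerate(joint):
        if j != k:
            loads[a] += 1
    return loads.index(min(loads))


if __name__ == "__main__":
    for t, joint in enumerate(run_async_slots(4, 4, least_loaded_subband, [0, 0, 0, 0])):
        print(t, joint)
```

With four agents starting on the same sub-band, each agent in turn reacts to the others' most recent choices, and after one round they occupy distinct sub-bands. Under synchronous updates with this toy policy, all four agents would see the same stale state and would all move to the same sub-band together.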

The detailed process of implementing DQL is presented in Algorithm 2. At the beginning of training, the structures of the two deep neural networks and the other parameters are initialized. Then, the agents interact with the environment in an alternating manner. Each agent chooses an action according to the $\epsilon$-greedy strategy, a commonly used randomized strategy in deep reinforcement learning: the agent selects a random action with probability $\epsilon$ and performs softmax action selection with probability $1-\epsilon$ [40]. Under the softmax policy, the probability of the agent choosing action a in state s is calculated as:

$$P(a \mid s) = \frac{\exp\left(Q(s,a)/\tau\right)}{\sum_{a'} \exp\left(Q(s,a')/\tau\right)},$$

where $\tau$ is the environment (temperature) factor and $Q(s,a)$ is the Q-value of action a under state s.
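The action-selection rule described above can be sketched as follows. It follows the description (a uniformly random action with probability $\epsilon$, softmax sampling otherwise); the function names and the max-shift used for numerical stability are our own choices, and `tau` plays the role of the environment (temperature) factor.

```python
import math
import random
from typing import List, Optional, Sequence


def softmax_probs(q_values: Sequence[float], tau: float) -> List[float]:
    """Boltzmann probabilities exp(Q/tau) / sum exp(Q/tau).

    Subtracting max(Q) before exponentiating leaves the result unchanged
    but avoids overflow for large Q/tau.
    """
    m = max(q_values)
    exps = [math.exp((q - m) / tau) for q in q_values]
    total = sum(exps)
    return [e / total for e in exps]


def select_action(q_values: Sequence[float], epsilon: float, tau: float,
                  rng: Optional[random.Random] = None) -> int:
    """With probability epsilon pick a uniformly random action;
    otherwise sample an action from the softmax distribution."""
    rng = rng or random.Random()
    if rng.random() < epsilon:
        return rng.randrange(len(q_values))
    probs = softmax_probs(q_values, tau)
    return rng.choices(range(len(q_values)), weights=probs, k=1)[0]


if __name__ == "__main__":
    rng = random.Random(0)
    q = [1.0, 3.0, 2.0]
    print(softmax_probs(q, tau=1.0))
    print([select_action(q, epsilon=0.1, tau=1.0, rng=rng) for _ in range(10)])
```

A small `tau` makes the softmax branch nearly greedy, while a large `tau` makes it nearly uniform; in the testing stage one would typically set $\epsilon = 0$.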

Algorithm 2: Deep Q-learning for power and sub-band selection.

5. Numerical Results

In this section, we analyze the convergence and effectiveness of the proposed algorithm. We assume that the target area is a circle with a radius of 500 m, where a UAV with a fixed height is deployed as the UAV-BS. With the height and coverage radius of the UAV fixed, 128 users are randomly distributed in the considered area: 64 users are located within the coverage of the UAV and are served by air-ground links, while 64 users are located outside the coverage and can only be served by relay links. The system bandwidth is 10 MHz, which is shared by the inside users and divided into multiple sub-bands. We set the number of available sub-bands for each cluster to four, which means each sub-band occupies a quarter of the bandwidth allocated to its cluster. Hence, the bandwidth of each sub-band depends on the number of clusters. For example, the bandwidth of each sub-band with four clusters is twice that with eight clusters.
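A quick sketch of this sub-band bandwidth arithmetic, assuming the 10 MHz system bandwidth is split equally among the $P$ clusters and each cluster's share is split equally into its four sub-bands, so that $B_{\mathrm{sub}} = B/(4P)$. The function name is hypothetical.

```python
def subband_bandwidth_hz(total_bw_hz: float, num_clusters: int,
                         subbands_per_cluster: int = 4) -> float:
    """Per-sub-band bandwidth, assuming an equal split among clusters and
    an equal split of each cluster's share among its sub-bands."""
    if num_clusters <= 0 or subbands_per_cluster <= 0:
        raise ValueError("counts must be positive")
    return total_bw_hz / (num_clusters * subbands_per_cluster)


if __name__ == "__main__":
    for p in (2, 4, 8):
        print(p, "clusters:", subband_bandwidth_hz(10e6, p) / 1e3, "kHz per sub-band")
```

Under this assumption, four clusters give 625 kHz per sub-band and eight clusters give 312.5 kHz, reproducing the factor of two stated above.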

To characterize the performance gain of the proposed NOMA scheme, we compare the proposed algorithm with the following algorithms:

The simulation is conducted in MATLAB/Simulink: the environment is modeled in MATLAB, and the agents are trained in Simulink with a deep reinforcement learning algorithm. In the proposed DQN, the neural network consists of an input layer, two fully connected layers, and an output layer, and each fully connected layer has 50 neurons. The sizes of the replay buffer and the mini-batch are set to 30,000 and 32, respectively. At the beginning of the training stage, $\epsilon$ is initialized to 0.9 to encourage extensive exploration of all possible actions under different states, and it gradually decreases to 0.1 as training progresses to speed up convergence. The discount factor $\gamma$ is set to 0.9, and the target network is updated by copying the weights from the primary network every 200 time slots. DQN is executed in epochs, where each epoch consists of 100 time slots and the final state of the current epoch is passed to the next epoch as its input state. The tuning factor in the objective function (8) is set such that, when a user transmits at 25 dBm and its QoS is satisfied, the power term of the inside users equals the QoS satisfaction term; the tuning factor is therefore adjusted dynamically for different values of the energy-QoS weighting factor. The detailed system parameters are shown in Table 3.
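The training settings above map onto a standard DQN loop roughly as follows. This framework-free Python sketch collects the stated hyperparameters and shows a replay buffer, the $\epsilon$ schedule, the TD target, and the target-network sync check. The linear decay and its length `eps_decay_slots` are assumptions, since the text only says that $\epsilon$ decreases gradually; all names are hypothetical.

```python
import random
from collections import deque
from dataclasses import dataclass
from typing import Sequence


@dataclass(frozen=True)
class DQNConfig:
    hidden_layers: tuple = (50, 50)  # two fully connected layers, 50 neurons each
    buffer_size: int = 30_000
    batch_size: int = 32
    eps_start: float = 0.9
    eps_end: float = 0.1
    eps_decay_slots: int = 20_000    # hypothetical: decay length is not given
    gamma: float = 0.9
    target_sync_every: int = 200     # copy primary weights to the target network
    slots_per_epoch: int = 100


def epsilon_at(cfg: DQNConfig, slot: int) -> float:
    """Linear decay from eps_start to eps_end (the decay shape is an assumption)."""
    frac = min(slot / cfg.eps_decay_slots, 1.0)
    return cfg.eps_start + frac * (cfg.eps_end - cfg.eps_start)


class ReplayBuffer:
    """FIFO experience buffer; the oldest transitions are dropped when full."""

    def __init__(self, capacity: int):
        self.data = deque(maxlen=capacity)

    def push(self, state, action, reward, next_state) -> None:
        self.data.append((state, action, reward, next_state))

    def sample(self, batch_size: int, rng: random.Random) -> list:
        return rng.sample(list(self.data), batch_size)

    def __len__(self) -> int:
        return len(self.data)


def td_target(reward: float, next_q_target: Sequence[float], gamma: float) -> float:
    """Standard DQN target: r + gamma * max over a' of Q_target(s', a')."""
    return reward + gamma * max(next_q_target)


def should_sync_target(cfg: DQNConfig, slot: int) -> bool:
    return slot > 0 and slot % cfg.target_sync_every == 0


if __name__ == "__main__":
    cfg = DQNConfig()
    rng = random.Random(0)
    buf = ReplayBuffer(cfg.buffer_size)
    for slot in range(3 * cfg.slots_per_epoch):  # three epochs of dummy transitions
        buf.push(slot, rng.randrange(4), rng.random(), slot + 1)
        if should_sync_target(cfg, slot):
            pass  # the primary network's weights would be copied to the target here
    batch = buf.sample(cfg.batch_size, rng)
    print(len(buf), len(batch), epsilon_at(cfg, 300))
```

Because the final state of one epoch seeds the next, the sketch treats the interaction as one continuing stream of transitions without terminal flags.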

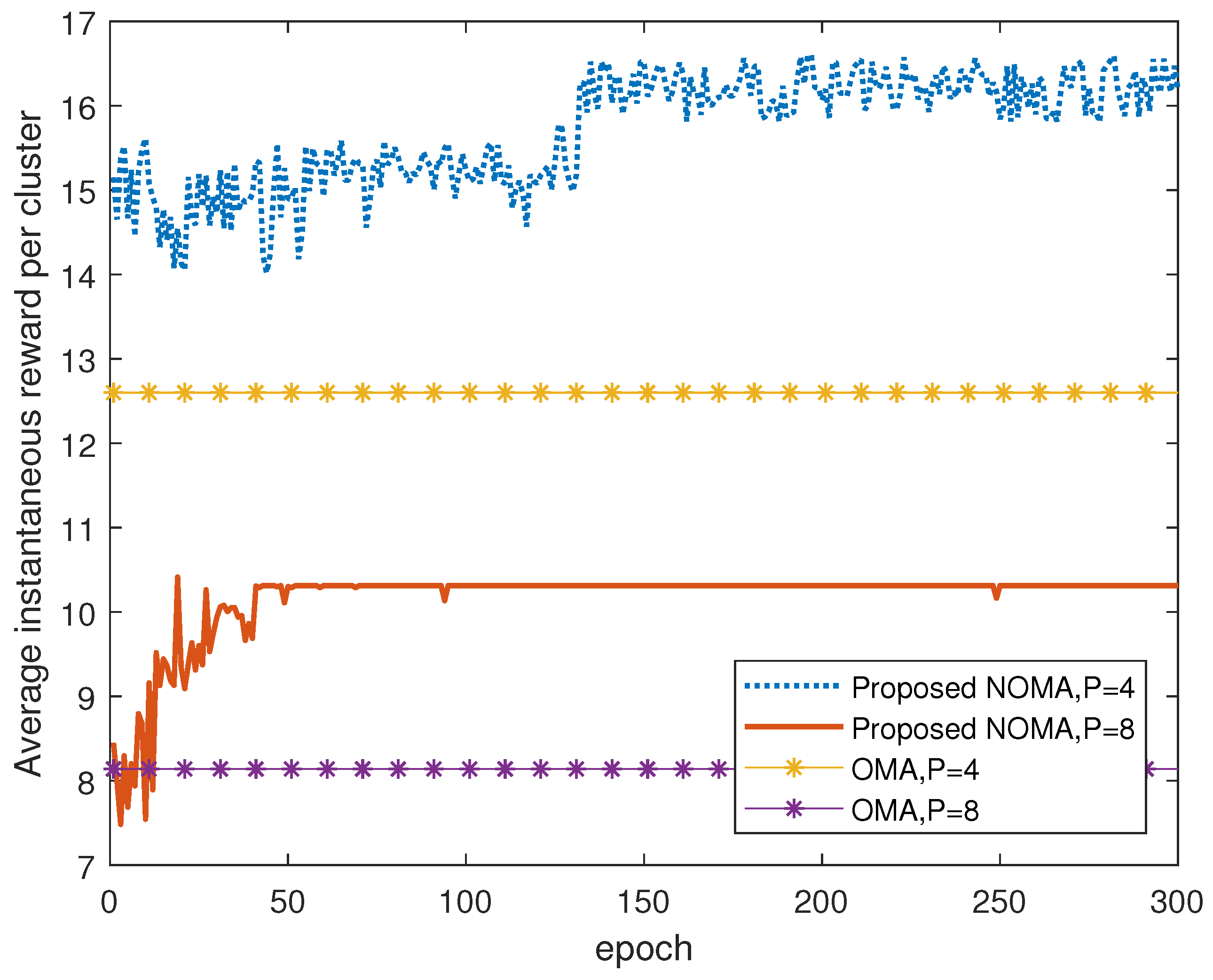

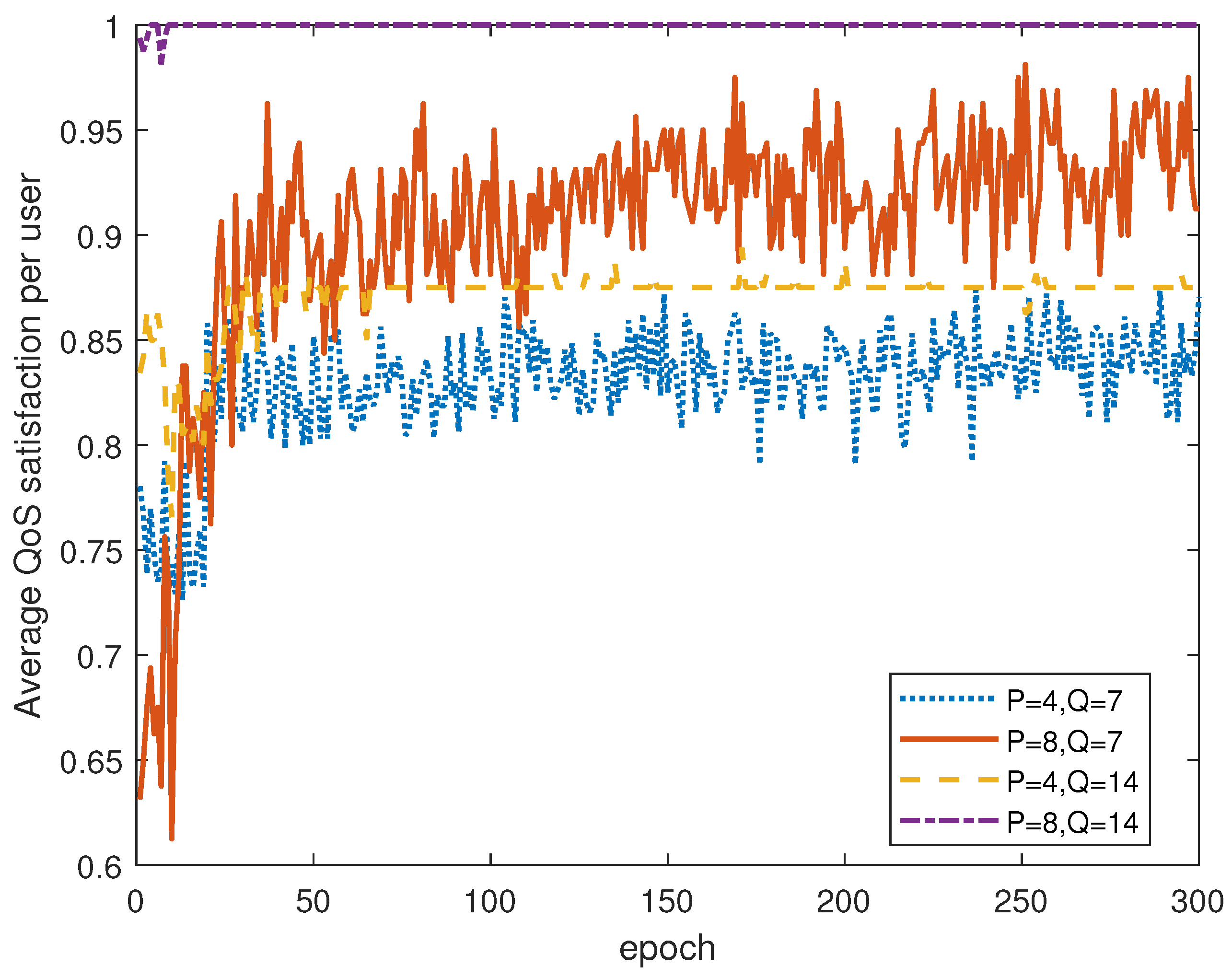

We first verify the convergence of the proposed NOMA algorithm by showing how the average real-time reward of the clusters evolves during DQN training, with the number of users fixed at 32. Figure 4 shows that the average reward of the proposed NOMA scheme exceeds that of OMA for different numbers of clusters P. At the beginning of training, the agents tend to select random actions to explore the environment and evaluate the Q-values of the possible actions. As experience samples accumulate and the DQN's approximation of the Q-function improves, the real-time reward increases rapidly. As learning progresses, the strategy gradually stabilizes and the real-time reward converges; after several epochs, the DQN curve levels off, which indicates that the optimal strategy has been learned. Another observation is that with two clusters it takes longer (around 135 epochs) to converge than with more clusters. With two clusters, the number of users in each cluster increases to 16, so the interaction between each agent and the environment becomes more complicated due to the larger number of agents, and the speed of learning the optimal strategy therefore slows down.

Next, we explore the impact of several key factors on system performance, including the number of power levels and clusters, the number of users in the system, and the QoS requirement for users.

In Figure 5 and Figure 6, we explore how the power consumption and QoS satisfaction change with the number of clusters, P, and the number of power levels, Q. In Figure 5, we plot the average transmit power of the proposed NOMA scheme under different P and Q. When the number of clusters P is fixed, the power consumption decreases as the number of power levels Q increases. The reason is that the agent is more inclined to select a lower power level in order to increase the reward: more power levels give the agent more actions to choose from during learning, and the agent tends to reduce its own power consumption while still ensuring QoS. When Q is fixed, the power consumption with more clusters is greater than that with fewer clusters. As the number of users in each cluster decreases, the number of users in each sub-band decreases, and the interference among users sharing the same sub-band is reduced. In this case, complex SIC is unnecessary. Therefore, compared with the case of fewer clusters, a higher transmission power is needed to meet the QoS requirements of users, which increases the energy consumption.
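To make the role of Q concrete, the sketch below enumerates a joint action space of (sub-band, power level) pairs, assuming Q power levels evenly spaced in dBm over a hypothetical 5 to 25 dBm range and four sub-bands per cluster. The range, the spacing rule, and the function names are illustrative assumptions; the point is that the number of actions grows as 4Q and the spacing between adjacent power levels shrinks.

```python
from itertools import product
from typing import List, Tuple


def power_levels_dbm(q: int, p_min_dbm: float, p_max_dbm: float) -> List[float]:
    """Q power levels evenly spaced in dBm between p_min and p_max (assumed rule)."""
    if q < 1:
        raise ValueError("q must be at least 1")
    if q == 1:
        return [p_max_dbm]
    step = (p_max_dbm - p_min_dbm) / (q - 1)
    return [p_min_dbm + i * step for i in range(q)]


def action_space(num_subbands: int, q: int, p_min_dbm: float,
                 p_max_dbm: float) -> List[Tuple[int, float]]:
    """Joint actions: every (sub-band index, power level in dBm) pair."""
    levels = power_levels_dbm(q, p_min_dbm, p_max_dbm)
    return list(product(range(num_subbands), levels))


if __name__ == "__main__":
    for q in (7, 14):
        levels = power_levels_dbm(q, 5.0, 25.0)  # hypothetical 5-25 dBm range
        print(q, len(action_space(4, q, 5.0, 25.0)), round(levels[1] - levels[0], 2))
```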

Figure 6 shows how the QoS satisfaction varies with P and Q. QoS satisfaction is defined as the ratio of the number of users whose QoS requirement is satisfied to the total number of users. With fewer clusters, when Q increases from 7 to 14, the agent has more feasible actions to select, which raises the probability of achieving a higher transmission rate and reward. More power levels are also beneficial to SIC, thereby increasing the QoS satisfaction. However, when the number of clusters is increased to eight, there is only a slight difference in QoS satisfaction between the two numbers of power levels. This is because there are only four users in each cluster, sharing two sub-bands. Therefore, the interference between users is trivial and the interfering signals can be readily separated by SIC, which guarantees that most users can reach their QoS requirements.
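The QoS satisfaction metric defined above can be computed as follows, assuming (as the rate requirements in bit/s discussed later suggest) that a user's QoS is met when its achieved rate is at least its required rate. The function name and the example numbers are illustrative.

```python
from typing import Sequence


def qos_satisfaction(rates_bps: Sequence[float], required_bps: Sequence[float]) -> float:
    """Share of users whose achieved rate meets or exceeds their requirement."""
    if len(rates_bps) != len(required_bps) or len(rates_bps) == 0:
        raise ValueError("inputs must be non-empty and of equal length")
    satisfied = sum(1 for r, req in zip(rates_bps, required_bps) if r >= req)
    return satisfied / len(rates_bps)


if __name__ == "__main__":
    # Illustrative numbers only: 2 of 4 users meet a 100 bit/s requirement.
    print(qos_satisfaction([120.0, 90.0, 200.0, 60.0], [100.0] * 4))
```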

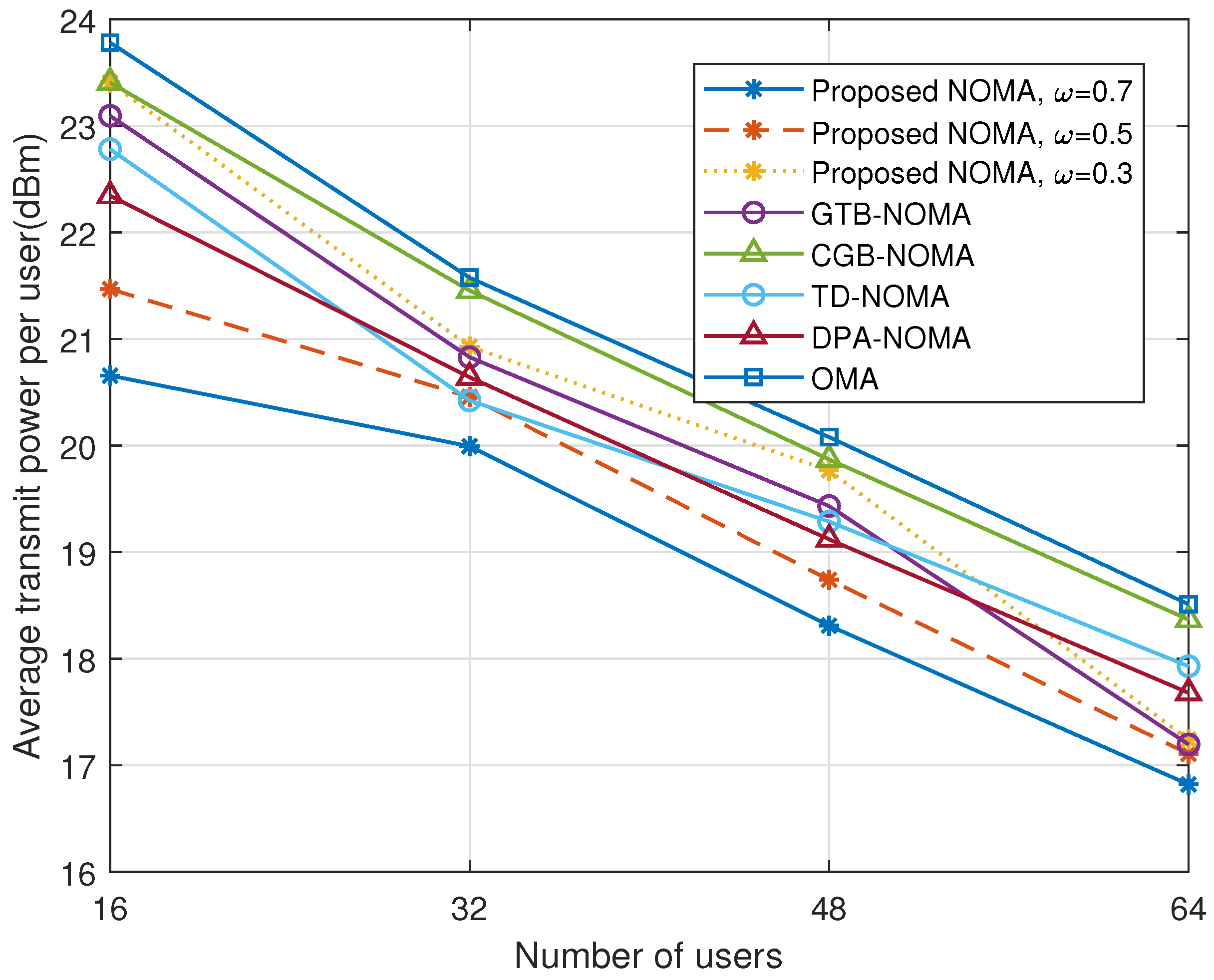

In Figure 7 and Figure 8, we evaluate the impact of the number of users on system performance, presenting the average transmit power per user and the average QoS satisfaction per user, respectively, under the compared algorithms. As the number of users increases from 16 to 64, both the average transmit power per user and the QoS satisfaction per user decrease. Compared with the state-of-the-art NOMA schemes and the OMA scheme, the proposed NOMA achieves better performance in terms of both transmit power and QoS satisfaction, which further validates the effectiveness of the proposed algorithm. For the proposed NOMA, as the number of users increases, so does the number of users in each cluster. In this case, the intra-cluster interference increases, which decreases the QoS satisfaction of the network. However, NOMA can provide a larger sub-band bandwidth than OMA and reduce intra-cluster interference using SIC, so it achieves better performance than OMA. In addition, the proposed NOMA also outperforms the state-of-the-art NOMA schemes thanks to the improved power and sub-band allocation strategy obtained by DRL. We also explore the effect of the weighting factor in the reward function on system performance. When this factor is increased from 0.3 to 0.7, both the average transmit power and the QoS satisfaction decrease. The reason is that a larger weighting factor makes the reward function put more emphasis on saving energy, hence the transmit power decreases; at the same time, the emphasis on QoS satisfaction is lowered, which degrades the QoS satisfaction.

In Figure 9, we fix one of the rate parameters (in bit/s) and explore the impact of the users' required rate on system performance. With the number of clusters P and the number of power levels Q fixed, we investigate the power consumption of the proposed NOMA. As the users' QoS requirement increases, their power consumption first increases gradually. When the required rate is increased from 80 bit/s to 200 bit/s, the power consumption increases, because a higher required rate is more difficult to reach, especially for inside users acting as relays, whose rate requirements are even larger. Hence, the users can only raise their transmit power to increase the transmission rate, which leads to higher power consumption. However, as the required rate increases from 200 bit/s to 240 bit/s, the energy consumption decreases. The reason is that when the required rate is too large, the QoS requirements of a large number of users cannot be satisfied; to increase the instantaneous reward, the agents then tend to lower their transmit power, which reduces the energy consumption.

6. Conclusions

In this paper, we consider a single-UAV heterogeneous network where sensor users and cellular users coexist and share the same frequency band. To enlarge the coverage area of the UAV, we propose to build relays for remote users that cannot be covered by the UAV-BS. In such a scenario, we optimize the network performance from the perspective of saving energy while guaranteeing the QoS. First, we propose an energy-effective relay-selection algorithm for outside users based on matching theory. Next, we propose a NOMA-based transmission scheme for inside users. Then, we formulate the problem of joint power and sub-band selection for inside users and use DQN to solve the problem. The simulation results show that our proposed NOMA transmission scheme can effectively decrease the energy consumption and improve the QoS satisfaction compared to benchmark schemes.

However, in this paper, we mainly focus on the scenario where relay transmission is required due to insufficient coverage in a single-UAV-assisted network. For simplicity, we ignore the complex interference problem in multi-UAV networks. In addition, we assume that the UAV is stationary rather than mobile. In the future, we plan to carry out in-depth research on the following aspects: (1) The network scenario can be extended to a multi-UAV network, and power and resource-allocation schemes for multi-UAV networks can be designed based on deep reinforcement learning. (2) The UAV's trajectory can be optimized, and the impact of the UAV's movement on user energy consumption and QoS should be explored. (3) To further extend the coverage of the UAV, we will investigate multi-hop relay transmission (more than two hops), in which transmission delay, user energy consumption, and QoS should be considered simultaneously.