1. Introduction

The predictive maintenance of multiple operational assets is one of the responsibilities of infrastructure stakeholders. Regular inspections are mandatory for assessing and preserving the serviceability and safety of components. The lack of effective communication, execution, or interpretation of inspection procedures in large infrastructures can be the cause of terrible incidents, as was the case of the Grenfell Tower fire [1] in London in 2017 and the Deepwater Horizon oil spill [2] in the Gulf of Mexico in 2010. In the first case [1], the external cladding system installed during the building renovation was not compliant with the building regulations in place. The system irregularities may not have been recognized and communicated effectively across the risk management organization and contributed to the combustion process during the fire event. In the oil spill incident [2], operator and contractor personnel misinterpreted a test to assess the integrity of a cement barrier, which triggered a series of events that led to the rig’s explosion, with the massive release of 4 million barrels of hydrocarbons into the ocean. Besides the enormous environmental and financial damages, these catastrophic accidents have the immeasurable cost of human lives. Therefore, regular and efficient inspections of industrial and construction components are essential activities to avoid severe hazards and tragic failures.

Conventionally, the implementation of inspection tasks and the analysis of inspection data rely primarily on human assessment [3,4,5]. However, modern industries demand alternative approaches that can improve the current practices and provide well-timed, safe, systematic, and accurate inspection performance for the large inventory of existing industrial and construction components. In this scenario, aerial platforms can benefit the non-destructive testing (NDT) of structures by increasing time efficiency, data consistency, and safety [6,7,8]. Remote inspection using aerial platforms is one of the most beneficial solutions to replace conventional methods. Drone-based inspection can solve challenges such as limited physical access and inspection time and provide new ways to conduct more advanced inspections. As a result, many studies focus on presenting aerial platforms and drone payloads for various industrial inspections [9,10,11,12,13].

With the rise of interest in implementing NDT4.0 in various industries, companies are motivated to invest in finding alternative solutions to reduce the time and cost of inspection and advance assessment and analysis procedures. Processing pipelines for analyzing data acquired during an inspection can benefit from automation to: (a) improve performance, (b) decrease the time of analysis, (c) minimize possible human errors, and (d) increase the quality of the results. Accordingly, these benefits for the data analysis of industrial inspections have motivated many studies to focus on developing automated process pipelines in recent years [14,15]. Automating repetitive tasks during analysis supports more accurate, time-efficient, and cost-efficient solutions. Beyond that, state-of-the-art computational techniques can be used and explored to advance the identification and interpretation of patterns in NDT results [16,17].

Multi-modal systems use multiple sensors for data acquisition and processing. The combination of multi-modal systems and aerial platforms can be employed to inspect large and complex structures. Multi-modal platforms can effectively cover the weaknesses of individual sensors and provide complementary information from coupling imaging sensors, especially for drone-based inspection [18,19,20]. Such systems can significantly assist inspectors during the acquisition and analysis process for possible maintenance. Furthermore, some scenarios require comprehensive information about the specimen’s geometry and texture to detect and characterize defects. Coupled thermal and visible sensors are one type of multi-modal system that can greatly improve the thermographic inspection of industrial and construction infrastructures. For instance, Lee et al. employed coupled thermal and visible sensors in a multi-modal system for solar panel inspection [21]. Coupled thermal and visible cameras can also be employed to enhance the analysis of data acquired during an inspection.

This research explores new avenues for employing coupled thermal and visible cameras to enhance abnormality detection and provide a more comprehensive data analysis in the drone-based inspection of industrial components. This study uses four case studies to thoroughly describe the targeted challenges as well as proposed solutions using multi-modal data processing.

The first case study focuses on the adverse effect of low illumination, low contrast, and shadows on the automatic visual inspection of paved roads. It proposes a method for the automatic crack detection of paved roads using coupled thermal and visible images, which addresses the visible camera’s vulnerability to these conditions. The introduced method fuses the thermal and visible modalities to enhance the visibility of visual features in areas affected by shadows, low illumination, or low contrast. The method then applies a deep learning-based crack detector to the fused image to detect cracks on pavement surfaces. Finally, the case study assesses the proposed method’s effectiveness and reliability using an experiment conducted on an experimental road inside the Montmorency Forest Laboratory of Université Laval.

The second case study explains the issue of possible data misinterpretation in the remote thermographic inspection of piping systems, where distinguishing between surface and subsurface defects is challenging. It proposes a method using coupled thermal and visible images to detect and classify the possible abnormalities in a piping setup. The introduced method individually segments pre-aligned thermal and visible images based on local texture. The extracted regions are then compared to classify the abnormalities as surface or subsurface defects. The proposed method is evaluated using an experiment on an indoor piping setup.

The third and fourth case studies focus on using texture segmentation to enhance the analysis of drone-based industrial and construction infrastructure inspection. A deep learning-based texture segmentation method is introduced. The main idea is to apply the proposed texture segmentation method to visible images to extract the regions of interest in both thermal and visible images for further analysis. Two experiments are conducted to evaluate the presented approach. The third case study uses the approach for drone-based multi-modal pavement inspection. The fourth case study investigates its use for drone-based multi-modal bridge inspection, where the texture segmentation method is used to extract concrete components.

The structure of this paper is presented as follows. Section 2 provides a brief description of key concepts and a review of the literature related to the drone-based multi-modal inspection of structures and texture analysis. The introduced method for texture segmentation is explained in Section 3. Lastly, Section 4 describes the four case studies, and Section 5 presents and discusses the results obtained from our experiments.

3. Texture Segmentation Using Supervised Deep Learning Approach

Deep convolutional neural networks (CNNs) have become the de facto standard for multiple computer vision tasks, such as image classification and instance and semantic segmentation [63]. Moreover, numerous benchmark cross-domain datasets, such as Cityscapes [64], KITTI-2012 [65], and IDDA [66], have been proposed in the last decade. The CNN structures used in this study follow the U-Net architecture [67], its later evolution Unet++ [68], and DeepLabV3+ [69], which have been widely used for semantic segmentation tasks, especially in the medical and self-driving vehicle fields. The chosen encoders were ResNet-18, ResNet-50 [69], and DenseNet-121. They were mainly chosen for their small size of 6–23 million parameters and proven capabilities as feature extractors. The PyTorch implementation of the networks used for this study was provided by Pavel Iakubovskii [70].

The texture segmentation task was modeled as a multi-class classification problem, where each pixel from the input image represented a sample to be classified. The model was trained following a recipe heavily inspired by [71] and adapted from the classification task to the segmentation task. The employed loss function was the multi-class cross-entropy loss. The classes were equally weighted; however, each image was given a sampling probability equal to the datasets’ inverse squared frequency of class densities. The optimization strategy used classical stochastic gradient descent with momentum, coupled with the cosine annealing with warm restarts learning rate (CA-LR) scheduler [72].
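To make the learning rate behaviour concrete, the following is a minimal sketch of a cosine annealing schedule with warm restarts. It is an illustration only: the function name `cosine_warm_restart_lr` and all default values (`lr_max`, `lr_min`, `t_0`, `t_mult`) are assumptions chosen for the example and are not the hyperparameters used in this study.

```python
import math

def cosine_warm_restart_lr(epoch, lr_max=0.1, lr_min=0.0, t_0=10, t_mult=2):
    """Learning rate at a given epoch under cosine annealing with warm restarts.

    All default values are hypothetical and only serve the illustration.
    """
    t_i = t_0        # length of the current cycle, in epochs
    t_cur = epoch    # epochs elapsed since the last restart
    # Walk through completed cycles; each new cycle is t_mult times longer.
    while t_cur >= t_i:
        t_cur -= t_i
        t_i *= t_mult
    # Cosine decay from lr_max down towards lr_min within the current cycle.
    return lr_min + 0.5 * (lr_max - lr_min) * (1.0 + math.cos(math.pi * t_cur / t_i))

# The rate decays within a cycle and jumps back to lr_max at each restart.
schedule = [cosine_warm_restart_lr(e) for e in range(30)]
```

With these assumed values, the rate starts at `lr_max`, decays over the first 10 epochs, and restarts at epoch 10 with a cycle twice as long.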

Furthermore, trivial augment (TA) [73] was used as the default augmentation for all training sessions. It was modified for the segmentation setting so that morphological augmentations were applied to both inputs and targets, whereas all other augmentations were applied only to the input image. The training sessions ran for 300 epochs and were stopped early if necessary to prevent overfitting or if performances were unsatisfactory. All training procedures were implemented with the PyTorch v1.12 library and ran on a server equipped with an RTX 2080 Ti GPU and an AMD Ryzen Threadripper 1920X CPU. All the training and validation hyper-parameters are presented in Table 2.

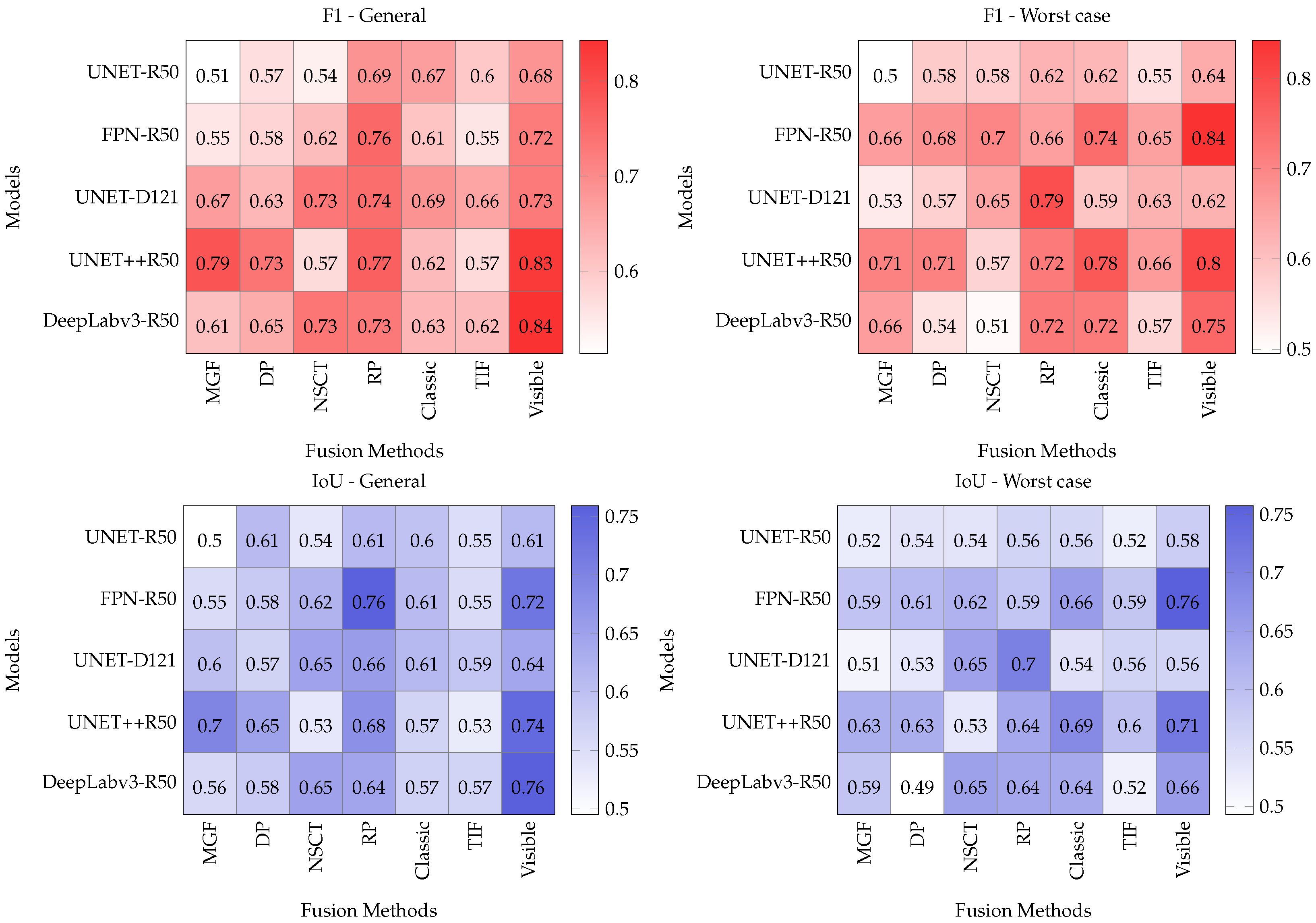

In order to evaluate the trained models, three segmentation metrics were adopted: (a) intersection over union (IoU), (b) F1-score, and (c) structural similarity index metric (SSIM) [74]. As described above, each pixel represented a sample to be classified. From this definition, four possible outcomes exist: (a) true positives (TP) and true negatives (TN), where the pixel is said to be rightly classified, and (b) false positives (FP) and false negatives (FN), where the pixel is attributed to the wrong class. Equation (1) presents the formula for F1-score, where the precision is equal to $\mathrm{TP}/(\mathrm{TP}+\mathrm{FP})$ and the recall is $\mathrm{TP}/(\mathrm{TP}+\mathrm{FN})$.

$$F_1 = 2 \cdot \frac{\mathrm{precision} \cdot \mathrm{recall}}{\mathrm{precision} + \mathrm{recall}} \tag{1}$$

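The IoU metric relates the overlap ratio between the model’s prediction and the target. As the name suggests, this is accomplished by dividing the intersection of the target and prediction by their union. Equation (2) shows the formula for IoU, where $T$ denotes the set of target pixels and $P$ the set of predicted pixels of a class.

$$\mathrm{IoU} = \frac{|T \cap P|}{|T \cup P|} = \frac{\mathrm{TP}}{\mathrm{TP} + \mathrm{FP} + \mathrm{FN}} \tag{2}$$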
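As a small worked example of the two overlap metrics, the sketch below counts TP, FP, and FN for one class over flattened pixel labels and derives the F1-score and IoU from them. The helper names and the toy label vectors are hypothetical, and returning 0.0 for an empty denominator is a convention assumed here, not one stated in this study.

```python
def confusion_counts(pred, target, cls):
    """Count TP, FP, and FN for one class over flattened pixel labels."""
    tp = sum(1 for p, t in zip(pred, target) if p == cls and t == cls)
    fp = sum(1 for p, t in zip(pred, target) if p == cls and t != cls)
    fn = sum(1 for p, t in zip(pred, target) if p != cls and t == cls)
    return tp, fp, fn

def f1_score(tp, fp, fn):
    # Equivalent to 2 * precision * recall / (precision + recall).
    denom = 2 * tp + fp + fn
    return 2 * tp / denom if denom else 0.0  # assumed convention for empty classes

def iou(tp, fp, fn):
    # Intersection (TP) over union (TP + FP + FN).
    denom = tp + fp + fn
    return tp / denom if denom else 0.0  # assumed convention for empty classes

# Toy example with six pixels and two classes (0 = background, 1 = texture).
pred = [0, 1, 1, 1, 0, 0]
target = [0, 1, 1, 0, 1, 0]
tp, fp, fn = confusion_counts(pred, target, cls=1)
print(f1_score(tp, fp, fn), iou(tp, fp, fn))
```

In this toy case there are two true positives, one false positive, and one false negative, so the IoU is 2/4 and the F1-score is 4/6.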

The SSIM metric measures the perceptual structural information difference between two given images or class maps in the texture segmentation case. This metric captures the visual difference by cross-comparing the local luminance and contrast measurements between two samples. For a thorough explanation of the inner workings of this metric, we refer the reader to the original publication [74].

4. Case Studies

This section investigates the benefits of using coupled thermal and visible imagery sensors to enhance the non-destructive testing of industrial and construction structures. The coupled modalities can be combined and analyzed to solve the shortcomings of each sensor. For instance, thermal cameras present a visualization of thermal measurements while being unable to provide texture information. On the other hand, visible cameras can sense color and texture information while being vulnerable to low illumination.

The rise of interest in remote inspection using aerial platforms and automating the analysis of acquired data during recent years motivated different studies to focus on addressing the challenges and advancing the methods and technologies in these areas. The use of coupled sensors is one of the approaches to (a) solve the natural limitations of the involved sensors and (b) provide a more comprehensive analysis based on extra information gathered from the environment using the multi-modal data acquisition approach. This study provides a comprehensive exploratory investigation regarding the use of coupled thermal and visible images to enhance the data analysis in the NDT inspection.

This section includes four case studies that employ coupled thermal and visible cameras to enhance the post-analysis stage of a drone-based automated process pipeline in different inspection scenarios and industries. Because the registration of thermal and visible images is a preliminary step for all use cases, the manual registration procedure is described first in Section 4.1. The first case study in Section 4.2 explains the benefits of fusing visible images with thermal images to enhance the defect detection process. Section 4.3 explains the multi-modal approach for abnormality classification in piping inspection. Employing visible images to extract the region of interest in thermal images to enhance the drone-based thermographic inspection of roads is described in Section 4.4. Finally, a drone-based inspection of concrete bridges using coupled thermal and visible cameras is investigated in Section 4.5. The source code and part of the employed datasets are available at this study’s GitHub repository (https://github.com/parham/lemanchot-analysis (accessed on 5 October 2022)). Also, the full set of employed hyperparameters, training results, and resulting metrics can be found in this study’s Comet-ML repository (https://www.comet.com/parham/comparative-analysis/view/OIZqWwU2dPR1kOhWH9268msAC/experiments (accessed on 14 November 2022)).

4.1. Manual Registration

Although coupled sensory platforms can be designed to have similar fields of view with considerable overlap, the thermal and visible images need to be aligned to use them as complementary data. Many multi-sensory platforms have a built-in registration process customized based on the system requirement; however, the registration process generally is a preliminary step for multi-modal data processing. The automated registration of thermal and visible images is not in the scope of this study. Therefore, a manual registration approach was employed for aligning the modalities. First, the user manually selected the matched control points in both modalities. Next, the homography matrix was estimated using the matched points. Finally, the matrix was used to align the thermal and visible images.

Manual registration was applied in two ways in this study. When the relative position of the camera with respect to the surface was approximately constant, the homography matrix was calculated from the first pair of coupled images and then reused to align the remaining images. Otherwise, the homography matrix was calculated separately for every pair of coupled images.
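The registration steps described above (matched control points, homography estimation, alignment) can be sketched as follows. This is a minimal, dependency-free illustration that assumes exactly four matched control points and fixes the last homography entry to 1; the function names and the toy coordinates are hypothetical, and a practical pipeline would typically rely on a robust library estimator with more than four points.

```python
def solve_linear(a, b):
    """Solve a x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [list(row) + [rhs] for row, rhs in zip(a, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        if abs(m[pivot][col]) < 1e-12:
            raise ValueError("degenerate control points")
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        s = sum(m[r][c] * x[c] for c in range(r + 1, n))
        x[r] = (m[r][n] - s) / m[r][r]
    return x

def estimate_homography(src_pts, dst_pts):
    """3x3 homography (last entry fixed to 1) from exactly four matched points."""
    a, b = [], []
    for (x, y), (u, v) in zip(src_pts, dst_pts):
        a.append([x, y, 1, 0, 0, 0, -u * x, -u * y])
        b.append(u)
        a.append([0, 0, 0, x, y, 1, -v * x, -v * y])
        b.append(v)
    h = solve_linear(a, b)
    return [h[0:3], h[3:6], h[6:8] + [1.0]]

def warp_point(h, x, y):
    """Map a point of the source modality into the target modality."""
    w = h[2][0] * x + h[2][1] * y + h[2][2]
    return ((h[0][0] * x + h[0][1] * y + h[0][2]) / w,
            (h[1][0] * x + h[1][1] * y + h[1][2]) / w)

# Hypothetical control points picked in the thermal and visible images.
thermal_pts = [(0, 0), (1, 0), (1, 1), (0, 1)]
visible_pts = [(3, 5), (5, 5), (5, 7), (3, 7)]
H = estimate_homography(thermal_pts, visible_pts)
```

When the camera pose relative to the surface stays roughly constant, the same `H` can be reused for subsequent frames; otherwise it is re-estimated for each image pair.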

4.2. Case Study 01: Enhancing Visual Inspection of Roads Using Coupled Thermal and Visible Cameras

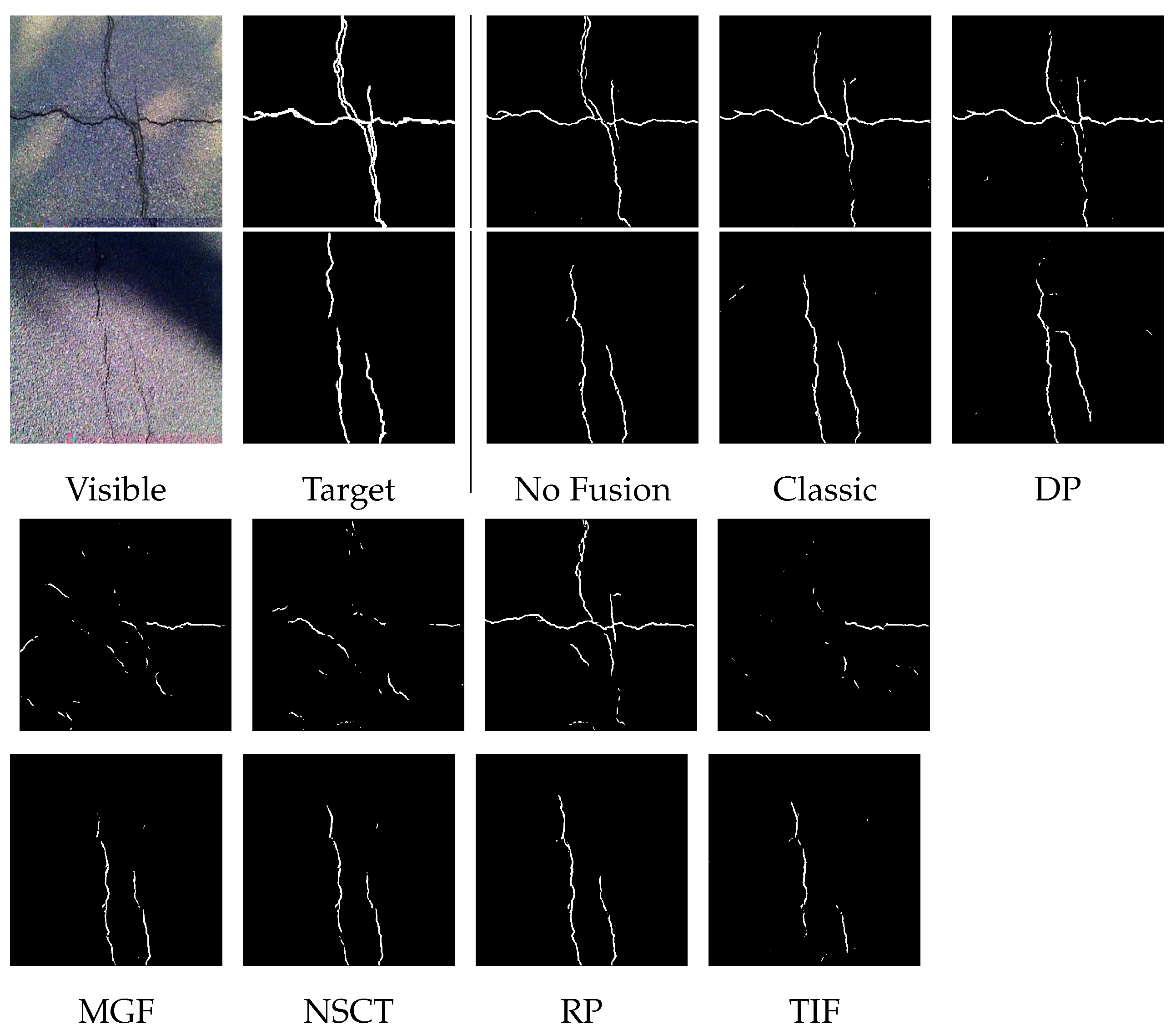

Thermal cameras capture the thermal radiation emitted from a specimen and surrounding area and present the information as an image. Since they work with emitted thermal radiation, the visible illumination does not affect the thermal visibility. Thus, one of the applications for coupled thermal and visible sensors is to enhance defect detection in visual inspection using thermal images in case of illumination or contrast issues, where the indications of surface defects are visible in thermal and visible images. This section presents a process pipeline for automatic crack detection using coupled thermal and visible images. The objective is to demonstrate the effect of the thermal-visible image fusion on crack detection in typical- and worst-case scenarios. The worst-case scenario occurs when shadows, low illumination, or low contrast disrupt the detection process.

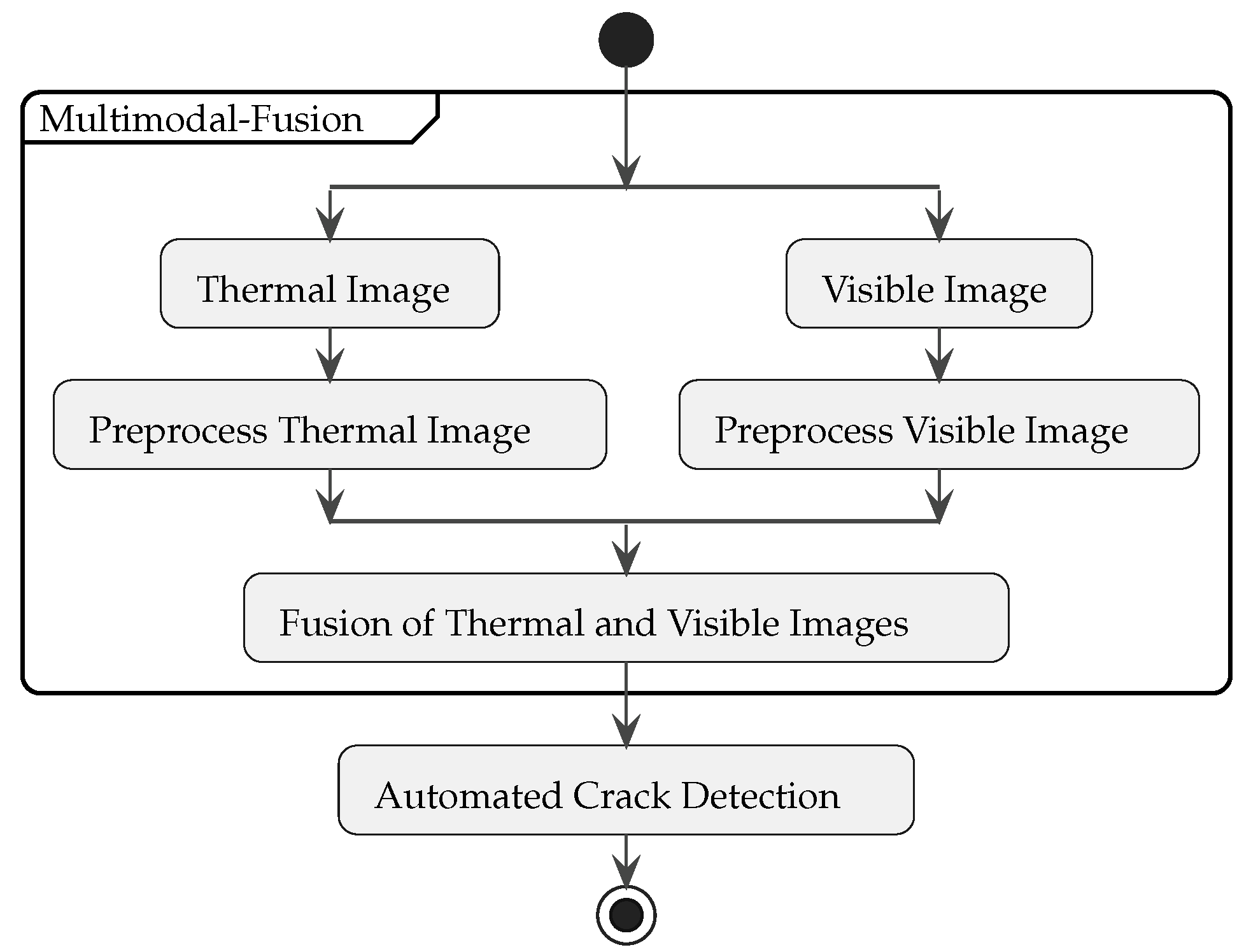

This method consists of two main parts: (a) the fusion of thermal and visible images and (b) automatic crack detection. In order to focus on the main objectives, it is assumed that the modalities are pre-aligned and ready for fusion. As shown in Figure 3, the visible and thermal images were preprocessed to balance the illumination and contrast. The contrast-limited adaptive histogram equalization (CLAHE) method was used to correct and balance the illumination and contrast of visible images [75]. Additionally, the thermal images were enhanced using adaptive plateau equalization (APE) [76], which is a proprietary FLIR method. Later, both modalities were passed to the fusion method.

The resulting image was processed to detect cracks on road pavements using a deep learning approach. The deep learning networks available were a U-Net [67], a Unet++ [68], an FPN [77], and a DeepLabV3+ [69] model. The decoder models were coupled with ResNet-18, ResNet-50 [69], and DenseNet-121 [78] encoders that were pre-trained on the ImageNet dataset.

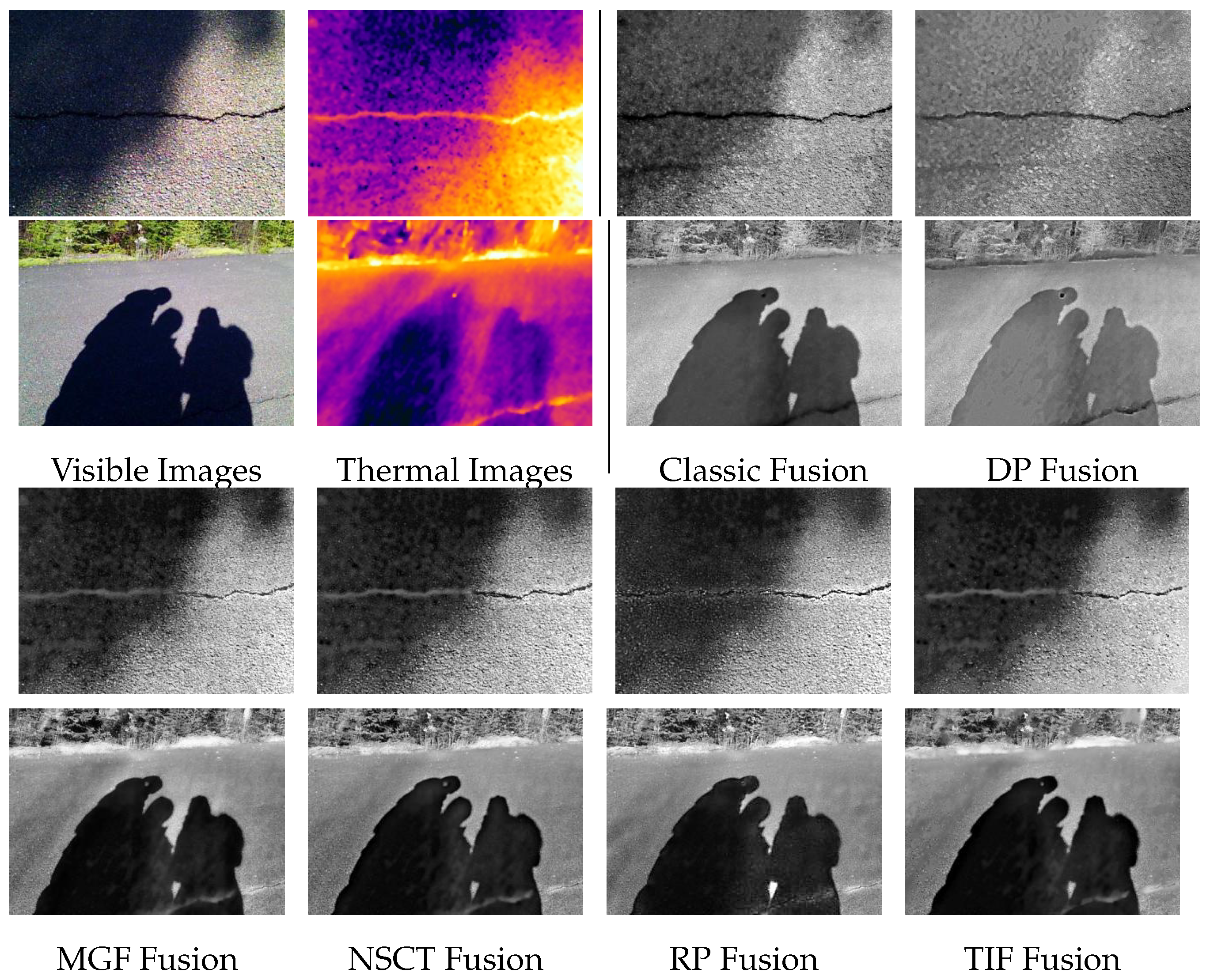

4.2.1. Fusion of Thermal and Visible Images

In this case study, six multi-modal fusion algorithms were tested for the fusion of pre-aligned thermal and visible images, namely DP, classic [79], TIF, MGF, RP, and NSCT. First, a deep learning (DP) method presented in [80] was adopted. This method is a deep learning-based technique that fuses both modalities while preserving their features. In this approach, each modality is decomposed into base and detail parts using the optimization method introduced in [81]. The base parts were then fused using the weighted-averaging method. For the detail parts, multi-layer features were extracted using a deep learning network. Next, the candidates were generated using $l_1$-norm and weighted-average methods as fused results. The fusion of detail parts was finalized based on the max selection strategy. Finally, the resulting fusion was reconstructed by combining the fused base and detail parts. The deep learning network used for extracting multi-layer features was VGG-19 [82] trained on the ImageNet dataset. Next, the two-scale image fusion (TIF) method [43] was tested, which is based on two-scale image decomposition and saliency detection. During the image decomposition, complementary and relevant information from visible and infrared images was extracted. However, only visually significant information from both source images proceeded to the fused image. To that aim, the proposed weight map construction process assigned more weight to pixels with relevant information. Next, weight maps, details, and base layers were integrated to form the fused images.

Another tested method was multi-scale guided filtered-based fusion (MGF) [41], which is a multi-scale image decomposition method that can extract visual saliency using a guided image filter (GF). It consists of decomposing the source images using GF, generating saliency maps based on detail layer information, computing weight maps by normalizing the saliency maps, and combining the detail and base layers to generate the fused images. Other than MGF, two other popular multi-scale transforms were tested: ratio of low-pass pyramid (RP) [83] and nonsubsampled contourlet transform (NSCT) [84]. RP employs multiresolution contrast decomposition to perform hierarchical image fusion. The method preserves the details that are important to human visual perception, such as high local luminance contrast [83]. NSCT is a shift-invariant version of the contourlet transform (CT), which uses non-subsampled pyramid filter banks (NSPFBs) for multi-scale decomposition and non-subsampled directional filter banks (NSDFBs) for directional decomposition [84]. The resulting fused image contained the texture and color information of the visible image, while the areas with low feature visibility were enhanced with the thermal information.
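The base/detail idea shared by several of the methods above can be illustrated with a deliberately simplified sketch. It is not an implementation of any of the six tested algorithms: it assumes a box blur for the base layer, a fixed weighted average for the base fusion, and a per-pixel max-absolute selection for the detail fusion. All names and parameters are hypothetical, and images are plain nested lists of grayscale values.

```python
def box_blur(img, radius=1):
    """Mean filter used here as a stand-in for the base-layer extraction."""
    h, w = len(img), len(img[0])
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            vals = [img[ny][nx]
                    for ny in range(max(0, y - radius), min(h, y + radius + 1))
                    for nx in range(max(0, x - radius), min(w, x + radius + 1))]
            out[y][x] = sum(vals) / len(vals)
    return out

def fuse_two_scale(visible, thermal, radius=1, base_weight=0.5):
    """Fuse two pre-aligned grayscale images of identical size."""
    base_v, base_t = box_blur(visible, radius), box_blur(thermal, radius)
    h, w = len(visible), len(visible[0])
    fused = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            det_v = visible[y][x] - base_v[y][x]   # detail layer, visible
            det_t = thermal[y][x] - base_t[y][x]   # detail layer, thermal
            # Base layers: weighted average (weight is an assumption).
            base = base_weight * base_v[y][x] + (1 - base_weight) * base_t[y][x]
            # Detail layers: keep whichever modality has the stronger detail.
            detail = det_v if abs(det_v) >= abs(det_t) else det_t
            fused[y][x] = base + detail
    return fused

# A flat visible patch (e.g., in shadow) and a thermal patch with one hot pixel.
visible = [[0.5] * 3 for _ in range(3)]
thermal = [[0.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 0.0]]
fused = fuse_two_scale(visible, thermal)
```

In this toy case the visible patch carries no detail, so the thermal feature at the center survives in the fused result, which mirrors the behaviour described for shadowed or low-contrast areas.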

4.2.2. Automated Crack Detection

This case study employed a deep learning approach for detecting cracks on paved roads. Similar to the texture segmentation task, a variety of state-of-the-art architectures and encoders were tested, including U-Net [67], FPN [77], and Unet++ [68] combined with the ResNet-18, ResNet-50 [69], and DenseNet-121 [78] encoders. The top five models presenting the best results were chosen as possible candidate models. To do so, two main criteria were analysed: (a) the overall quantitative performance on the validation set and (b) the perceived qualitative quality of the predictions on the validation set. All networks were trained and validated using a combination of publicly available datasets containing segmented cracks on pavement [85,86]. Details on the used datasets are presented in the next section. Similar to the previous training recipe, unweighted multi-class cross-entropy loss was employed to train the models on the visible part of the acquired data. This time, the Adam optimizer [87] was selected as the optimization strategy. In order to manage the learning rate during the training, the cosine annealing method [72] was employed again. Trivial augment (TA) [73] was also used as the default augmentation strategy. Additionally, following the training procedure of [86], the targets were symmetrically dilated by 1 pixel. The hyperparameters for the crack segmentation models are presented in Table 3. The aforementioned parameters serve as a baseline for optimization, and small variations may occur if a given model needs further optimization.
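The 1-pixel dilation of the crack targets mentioned above can be sketched as a binary morphological dilation. The example below assumes a square (8-connected) structuring element, which is one plausible reading of "symmetrically dilated"; the function name and the toy mask are hypothetical.

```python
def dilate_mask(mask, radius=1):
    """Binary dilation of a 0/1 mask with a (2*radius+1)^2 square element."""
    h, w = len(mask), len(mask[0])
    out = [[0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            if mask[y][x]:
                # Mark every in-bounds neighbour within the given radius.
                for dy in range(-radius, radius + 1):
                    for dx in range(-radius, radius + 1):
                        ny, nx = y + dy, x + dx
                        if 0 <= ny < h and 0 <= nx < w:
                            out[ny][nx] = 1
    return out

# A single annotated crack pixel grows into a 3x3 block.
target = [[0] * 5 for _ in range(5)]
target[2][2] = 1
dilated = dilate_mask(target, radius=1)
```

Thickening thin crack annotations in this way makes the target less sensitive to one-pixel annotation offsets.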

4.2.3. Dataset

The training and validation sets were randomly generated following an 80%/20% split ratio from four datasets found in [85,86], named CrackTree260, CrackLS315, CRKWH100, and Stone331. CrackTree260 was the first dataset employed in this study [85]. It contains 260 visible images of paved surfaces captured at a constant distance. Two different image resolutions are found in this dataset. CRKWH100 is a dataset collected for pavement crack detection [86]. It contains grayscale images and manually annotated ground truths. The images were collected at a close range and perpendicular to the pavement. The 100 available images display traces of noise, such as oil spills and shadows. CrackLS315 comprises images of laser-illuminated paved roads. The 315 samples were captured by a line-array camera at a controlled ground sampling distance. Stone331 contains 331 visible images of cracks on stone surfaces [86]. The samples were captured with visible-light illumination by an area-array sensor. Moreover, a mask is provided, identifying the region of interest in each sample.
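A random 80%/20% split such as the one described above can be sketched in a few lines. The function name, the fixed seed, and the placeholder file names are assumptions made for reproducibility of the example only.

```python
import random

def split_dataset(samples, train_ratio=0.8, seed=0):
    """Shuffle the samples and split them into training and validation parts."""
    shuffled = list(samples)
    random.Random(seed).shuffle(shuffled)   # seed value is hypothetical
    cut = int(round(train_ratio * len(shuffled)))
    return shuffled[:cut], shuffled[cut:]

# Placeholder identifiers standing in for image/annotation pairs.
samples = [f"sample_{i:04d}.png" for i in range(100)]
train_set, val_set = split_dataset(samples)
```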

To evaluate this case study, a dataset containing the coupled thermal and visible images of a road inspection was employed for training and testing the model and for evaluating the multi-modal fusion technique. The road inspection was conducted on an experimental road belonging to the Montmorency Forest Laboratory of Université Laval, located north of Quebec City. The inspection was performed on 27 October 2021, using a FLIR E5-XT Wifi camera that can collect pre-aligned thermal and visible images. In addition to the acquired data, a subset of the data was used to create additional images containing augmented shadows, low illumination, and low contrast. Figure 4 shows samples of the acquired dataset.

An inspector walked along the road at a steady height from the pavement for data acquisition. For this experiment, a total of 330 coupled thermal and visible images were collected, presenting the cracked areas of the road. Parts of the acquired visible images affected by shadows were later used to investigate the effect of using fused images for crack detection. In addition to the images affected by shadows, other samples with augmented shadows and low illumination and contrast conditions were generated to be used for evaluation purposes.

4.3. Case Study 02: Abnormality Classification Using Coupled Thermal and Visible Images

Another area in which coupled thermal and visible images can be beneficial is remote inspection when physical access is limited. In such scenarios, comprehensive information in different modalities is needed to avoid data misinterpretation. In the case of thermographic inspection, the abnormalities are recognizable in thermal images, and several methods exist that can semi-automate the detection process. However, distinguishing between surface and subsurface defects is hard or impossible with only thermal information in an automated process pipeline. To address this challenge, coupled thermal and visible images can be employed to enhance the classification process.

In this case study, thermal and visible images were used to classify detected defects into surface and subsurface abnormalities using texture analysis. To do so, the thermal and visible images were aligned using a manual registration. Later, the region of interest was selected in both modalities. After applying the preprocessing steps to the extracted regions, the thermal image was passed to an unsupervised deep learning-based method to segment the thermal image into regions with different thermal patterns. Next, a conventional texture segmentation algorithm was used on the coupled visible images to segment the areas with similar patterns. Finally, the extracted regions in both modalities were combined to determine surface and subsurface areas. The steps for the processing pipeline are shown in Figure 5.
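The final combination step can be illustrated with a small sketch. The decision rule used here is an assumption for illustration, since the text does not spell it out: a thermally abnormal pixel that also lies in a region flagged by the visible texture segmentation is labeled as a surface defect, while a thermally abnormal pixel with no visible counterpart is labeled as a subsurface defect. The function name and the toy masks are hypothetical.

```python
def classify_pixels(thermal_mask, visible_mask):
    """Label each pixel from two pre-aligned binary masks of identical size."""
    labels = []
    for t_row, v_row in zip(thermal_mask, visible_mask):
        row = []
        for t, v in zip(t_row, v_row):
            if t and v:
                row.append("surface")      # visible on the surface and in thermal
            elif t:
                row.append("subsurface")   # thermal signature only (assumed rule)
            else:
                row.append("background")
        labels.append(row)
    return labels

# Toy masks: two thermal abnormalities, only one of which is visible.
thermal_mask = [[1, 0, 1],
                [0, 0, 0]]
visible_mask = [[1, 0, 0],
                [0, 0, 0]]
labels = classify_pixels(thermal_mask, visible_mask)
```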

4.3.1. Unsupervised Thermal Image Segmentation

This case study employed an unsupervised segmentation technique introduced in [88] for segmenting thermal images. The method used a convolutional neural network with random initialization and an iterative training strategy to segment thermal images. The final result was obtained after passing each sample image through the model for a certain number of iterations. The iterative process continued until the number of extracted classes reached a predefined criterion. Additionally, the method used a loss function that did not need a target to calculate the loss value, as explained in Algorithm 1. The schema of the network architecture is shown in Figure 6.

| Algorithm 1 The loss method for segmenting thermal images. |

| 1: procedure Loss for Thermal Image Segmentation(I, c, , ) |

| Ensure: The thermal image I cannot be None. |

| Require: The number of channel c. |

| Require: The similarity factor and continuity factor . |

| 2: | ▹ Calculate the maximum matrix across the first dimension (output's channel). |

| 3: | ▹ The width w and height h are the size of the image I. |

| 4: |

| 5: |

| 6: |

| 7: |

| 8: | |

| 9: | ▹ and are used for calculating the continuity factor. |

| 10: | ▹ Cross-entropy calculates as a similarity factor. |

| 11: | ▹ Loss is the return value for this method. |

| 12: end procedure |
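Since several expressions of Algorithm 1 are not legible above, the following sketch shows one common way to build a target-free loss from the two ingredients named in its comments: a cross-entropy similarity term computed against the argmax of the network output, and a continuity term computed from differences between neighbouring responses. It is an assumption-based illustration and not a transcription of Algorithm 1; the function name, the default weights, and the nested-list layout `response[k][y][x]` are hypothetical.

```python
import math

def unsupervised_loss(response, similarity=1.0, continuity=0.5):
    """response[k][y][x]: network output for channel k at pixel (y, x)."""
    c, h, w = len(response), len(response[0]), len(response[0][0])

    # Similarity term: cross-entropy between the per-pixel softmax and the
    # pseudo-label given by the argmax over channels (no external target).
    ce = 0.0
    for y in range(h):
        for x in range(w):
            logits = [response[k][y][x] for k in range(c)]
            peak = max(logits)
            log_sum_exp = peak + math.log(sum(math.exp(v - peak) for v in logits))
            ce += log_sum_exp - peak   # equals -log softmax at the argmax
    ce /= h * w

    # Continuity term: mean absolute difference between vertical and
    # horizontal neighbours, which favours spatially smooth responses.
    diff_y = [abs(response[k][y + 1][x] - response[k][y][x])
              for k in range(c) for y in range(h - 1) for x in range(w)]
    diff_x = [abs(response[k][y][x + 1] - response[k][y][x])
              for k in range(c) for y in range(h) for x in range(w - 1)]
    l_y = sum(diff_y) / len(diff_y) if diff_y else 0.0
    l_x = sum(diff_x) / len(diff_x) if diff_x else 0.0

    # Weighted sum; the weights are hypothetical defaults.
    return similarity * ce + continuity * (l_y + l_x)

confident = [[[50.0, 50.0], [50.0, 50.0]], [[0.0, 0.0], [0.0, 0.0]]]
checker = [[[1.0, 0.0], [0.0, 1.0]], [[0.0, 1.0], [1.0, 0.0]]]
```

A confident and spatially uniform response such as `confident` yields a loss close to zero, whereas the checkerboard response `checker` is penalized by both terms.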

4.3.2. Texture-Based Image Segmentation

In this case study, the expected abnormalities in the piping setup contain sharp and steady patterns in different shapes and colors. Therefore, an entropy-based segmentation approach based on statistical texture analysis was used for segmentation. Algorithm 2 describes the employed technique for texture segmentation of visible images.

| Algorithm 2 The method for texture segmentation of visible images. |

| 1: procedure Texture Image Segmentation(I, t, p) |

| Ensure: The visible image I cannot be None. |

| Require: The threshold t for binarizing the image should be defined. |

| Require: The minimum number of pixels p for regions that need to be removed. |

| 2: convert to grayscale (I). |

| 3: calculate local entropy of the image (). |

| 4: | ▹ function forms an output for which each pixel value is the standard deviation of neighboring pixels. |

| 5: | ▹ function forms an output for which each pixel value is the maximum value minus the minimum value of neighboring pixels. |

| 6: |

| 7: |

| 8: | ▹ Binarize E using the given threshold value t. |

| 9: | ▹ Remove small regions with numbers of pixels lower than p. |

| 10: | ▹ Close the open regions and fill the holes morphologically using floating windows with a size of 9. |

| 11: | ▹ T is defined as the function's return value. |

| 12: end procedure |
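The core of an entropy-based texture segmentation can be sketched as follows. This is a simplified illustration and not a transcription of Algorithm 2: it covers only the local entropy, the binarization with threshold `t`, and the removal of regions smaller than `p` pixels, and it omits the standard-deviation and range filters as well as the morphological closing and hole filling. The function names, the 3x3 default window, and the 4-connectivity are assumptions, and gray levels are assumed to be integers.

```python
import math
from collections import Counter, deque

def local_entropy(gray, radius=1):
    """Shannon entropy of the gray levels in a window around each pixel."""
    h, w = len(gray), len(gray[0])
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            window = [gray[ny][nx]
                      for ny in range(max(0, y - radius), min(h, y + radius + 1))
                      for nx in range(max(0, x - radius), min(w, x + radius + 1))]
            n = len(window)
            out[y][x] = -sum((k / n) * math.log2(k / n)
                             for k in Counter(window).values())
    return out

def remove_small_regions(mask, min_pixels):
    """Keep only 4-connected regions with at least min_pixels pixels."""
    h, w = len(mask), len(mask[0])
    seen = [[False] * w for _ in range(h)]
    out = [[0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            if mask[y][x] and not seen[y][x]:
                region, queue = [], deque([(y, x)])
                seen[y][x] = True
                while queue:   # breadth-first flood fill of one region
                    cy, cx = queue.popleft()
                    region.append((cy, cx))
                    for ny, nx in ((cy - 1, cx), (cy + 1, cx), (cy, cx - 1), (cy, cx + 1)):
                        if 0 <= ny < h and 0 <= nx < w and mask[ny][nx] and not seen[ny][nx]:
                            seen[ny][nx] = True
                            queue.append((ny, nx))
                if len(region) >= min_pixels:
                    for ry, rx in region:
                        out[ry][rx] = 1
    return out

def segment_texture(gray, t, p, radius=1):
    """Binarize the local entropy with threshold t, then drop small regions."""
    entropy = local_entropy(gray, radius)
    binary = [[1 if e > t else 0 for e in row] for row in entropy]
    return remove_small_regions(binary, p)
```

A uniform image has zero local entropy everywhere, so `segment_texture` returns an empty mask for it, while textured areas exceed the threshold and are kept if they are large enough.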

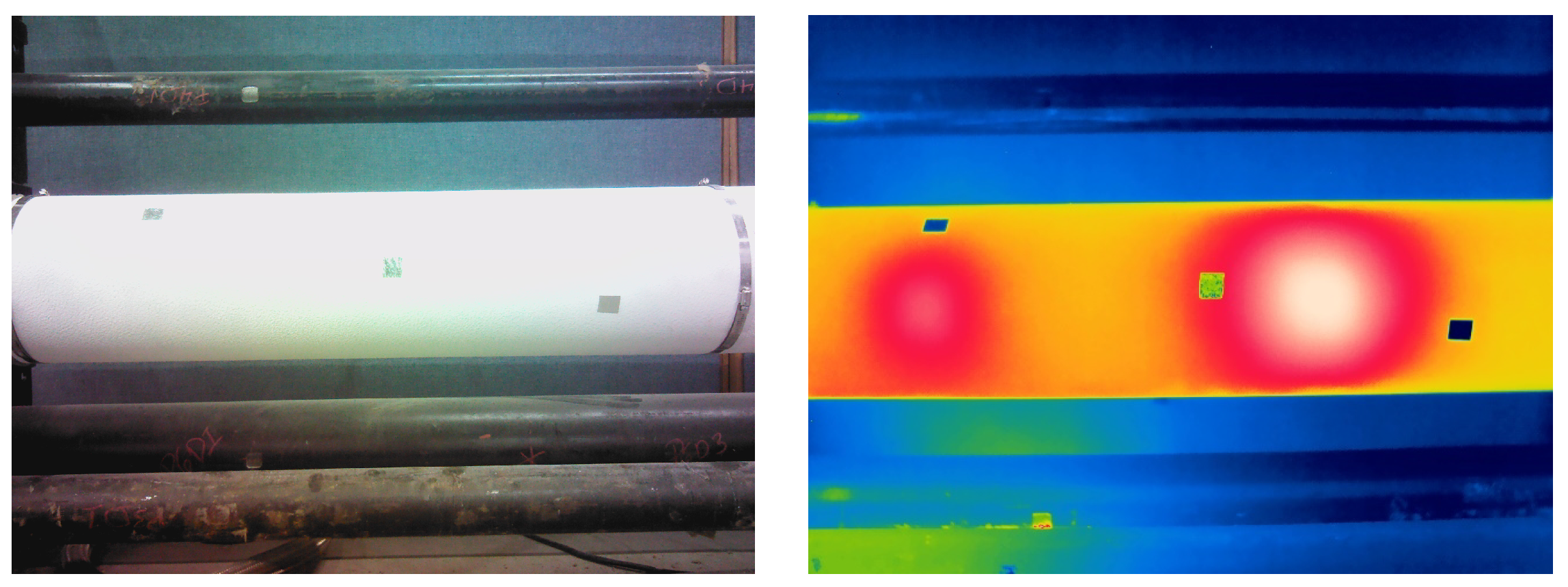

4.3.3. Dataset

For this case study, a dataset containing coupled thermal and visible images of a piping inspection was used to evaluate the proposed approach. For this experiment, an indoor piping setup was prepared with different carbon steel pipes wrapped with wool insulation. The pipes’ insulation layer was manually damaged to create surface defects. During the experiment, hot oil was pumped into the pipes as a heating mechanism for active thermography. A FLIR T650sc camera was used to collect thermal and visible images while placed in multiple fixed locations. Figure 7 shows samples of collected thermal and visible images.

4.4. Case Study 03: Enhancing the Analysis of Drone-Based Road Inspection Using Coupled Thermal and Visible Images

Visible images can be employed to segment the region of interest in thermal images in various applications. The visible images can be analyzed to identify different materials based on their textures. The gathered information can be used to segment thermal images and provide more accurate results. This information can help the automated process pipeline to (a) differentiate between materials or (b) extract the region of interest and avoid data misinterpretation. For instance, one of the possible applications of the presented concept is multi-modal road inspection. Thermographic inspection can be used to detect delamination in concrete structures [89] or sinkholes in paved roads [90]. However, for an automated process pipeline, it is required to extract regions of interest to ensure the accurate analysis of concrete or pavement structures.

In this case study, the use of visible images to help extract the region of interest in thermal images was investigated for the drone-based inspection of road pavement. First, the thermal images were preprocessed to enhance the visibility of the thermal patterns. Next, the visible and thermal images were aligned via manual alignment. Since the drone’s altitude was fixed during each data session, the homography matrix was calculated for the first coupled images and used for the remaining frames. The visible images were then passed to the deep learning-based texture segmentation method explained in Section 3 to detect the regions representing the pavement area. The generated mask was used to filter out the non-pavement regions in the thermal images. Finally, the extracted regions were analyzed to detect possible abnormalities.
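The masking step of this pipeline can be sketched as follows, assuming the thermal image and the pavement mask are already aligned and have identical size. The function names, the `fill` placeholder for discarded pixels, and the toy temperatures are hypothetical.

```python
def mask_thermal(thermal, pavement_mask, fill=None):
    """Keep thermal values inside the mask and replace the rest with `fill`."""
    return [[t if m else fill for t, m in zip(t_row, m_row)]
            for t_row, m_row in zip(thermal, pavement_mask)]

def region_values(thermal, pavement_mask):
    """Flatten the thermal values that fall inside the pavement mask."""
    return [t for t_row, m_row in zip(thermal, pavement_mask)
            for t, m in zip(t_row, m_row) if m]

# Toy 2x3 thermal frame; the right column is vegetation, not pavement.
thermal = [[12.0, 12.5, 20.0],
           [11.8, 12.1, 21.0]]
pavement_mask = [[1, 1, 0],
                 [1, 1, 0]]
masked = mask_thermal(thermal, pavement_mask)
values = region_values(thermal, pavement_mask)
```

Restricting later analysis to `values` prevents warm or cold non-pavement areas from being mistaken for pavement abnormalities.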

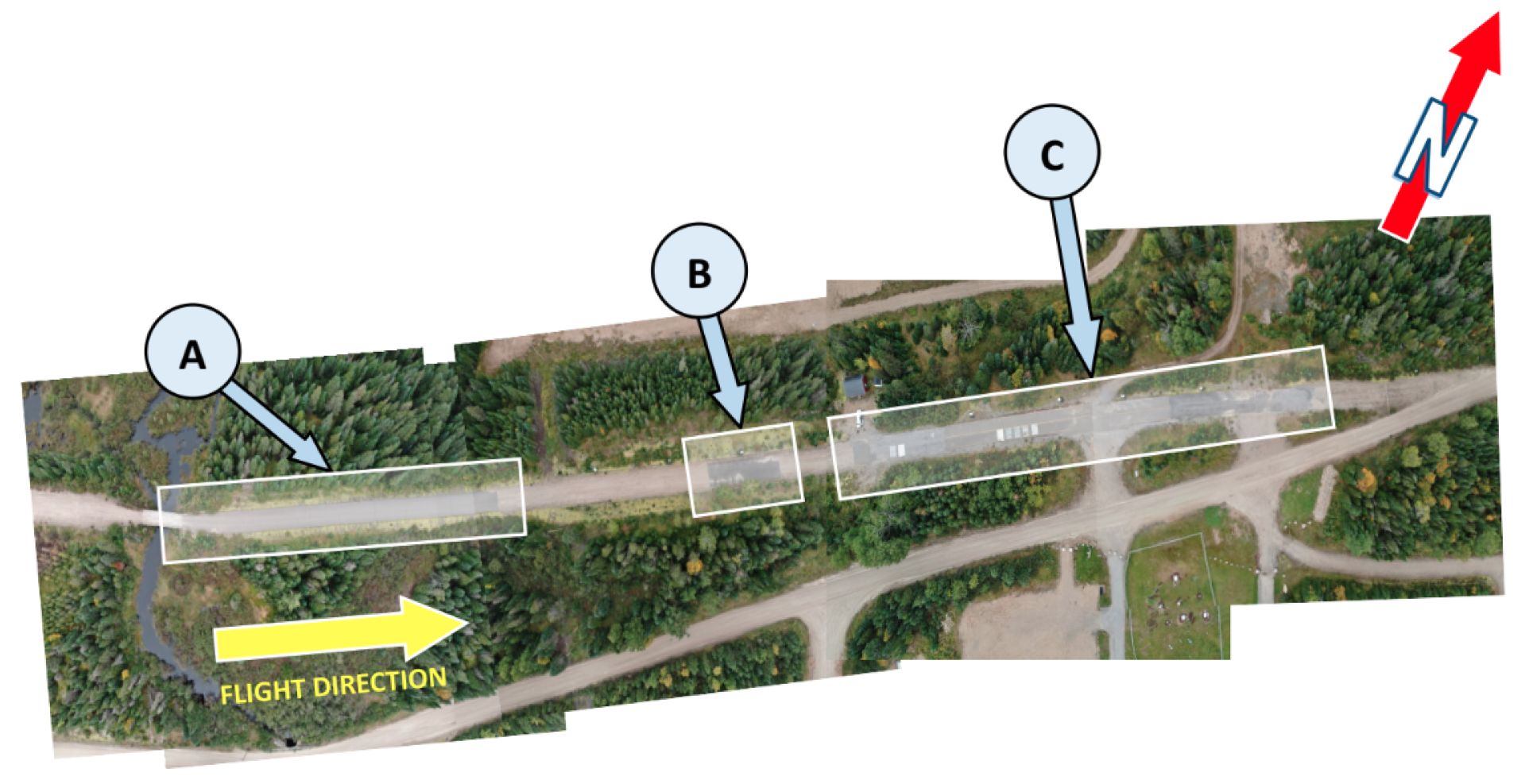

Dataset

For this case study, an experiment was conducted on an experimental road with a length of 386 m belonging to the Montmorency Forest Laboratory of Université Laval, located north of Quebec City. The road is intended for testing pavement paints and laying techniques and for simulating inspections. Figure 8 shows the inspection map and the dedicated sections. For this experiment, a DJI M300 drone equipped with a Zenmuse H20T camera was employed for acquiring thermal and visible images. The provided datasets contained 578 and 614 coupled thermal and visible images of the road for the first and second data sessions, respectively, at an altitude of 15 m. Table 4 explains the conditions of the inspection. Figure 9 shows sample photos of acquired data from the inspected road.

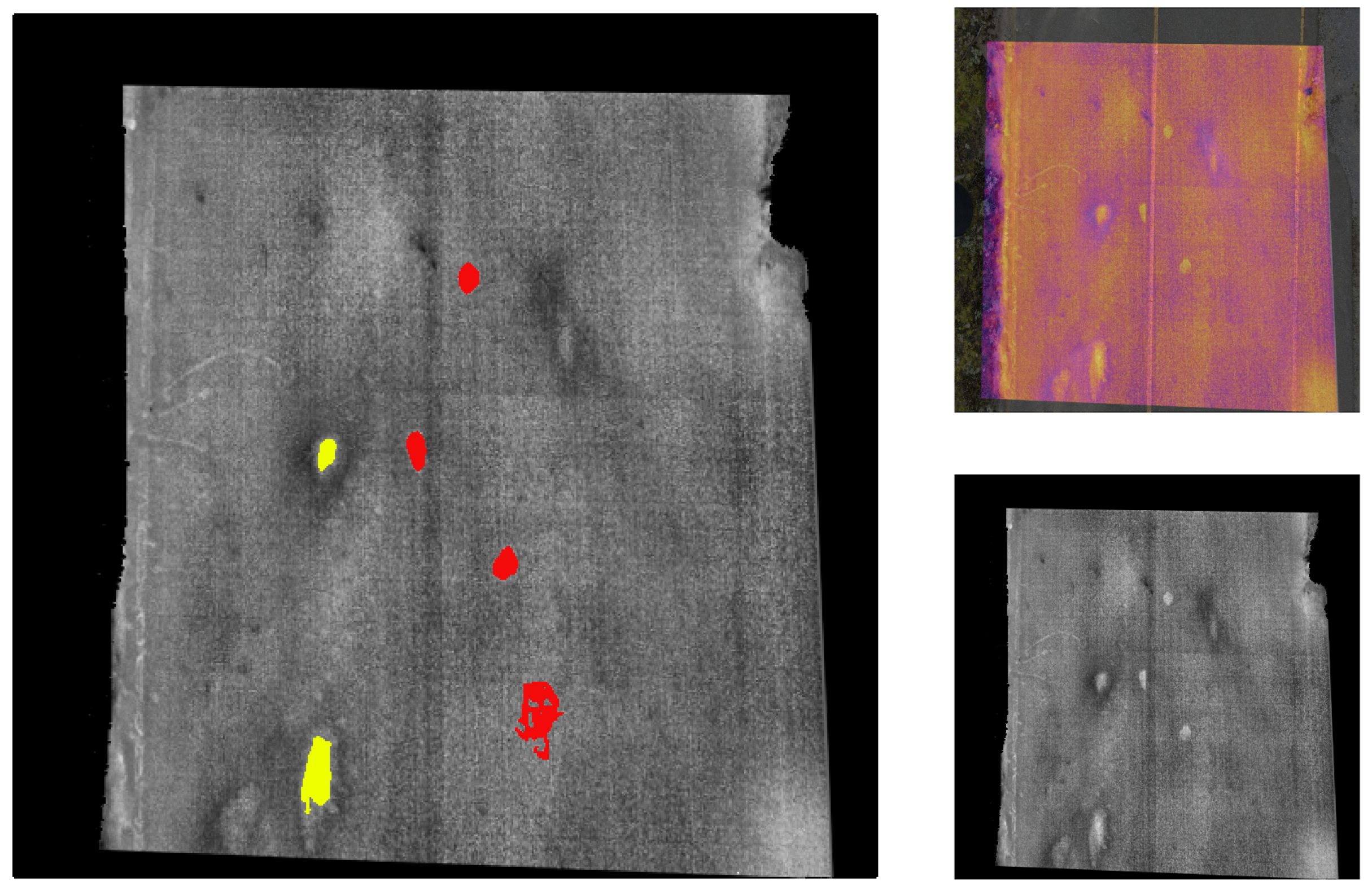

4.5. Case Study 04: Enhancing the Analysis of Bridge Inspection Using Coupled Thermal and Visible Images

As mentioned in Section 4.4, using visible images to extract the regions of interest in thermal images is one of the main applications of coupled sensors. In addition to using visible images to enhance the analysis of thermal images, extracting regions of interest in thermal images also reduces the complexity of analysis in an automated pipeline by removing extra information that may cause data misinterpretation.

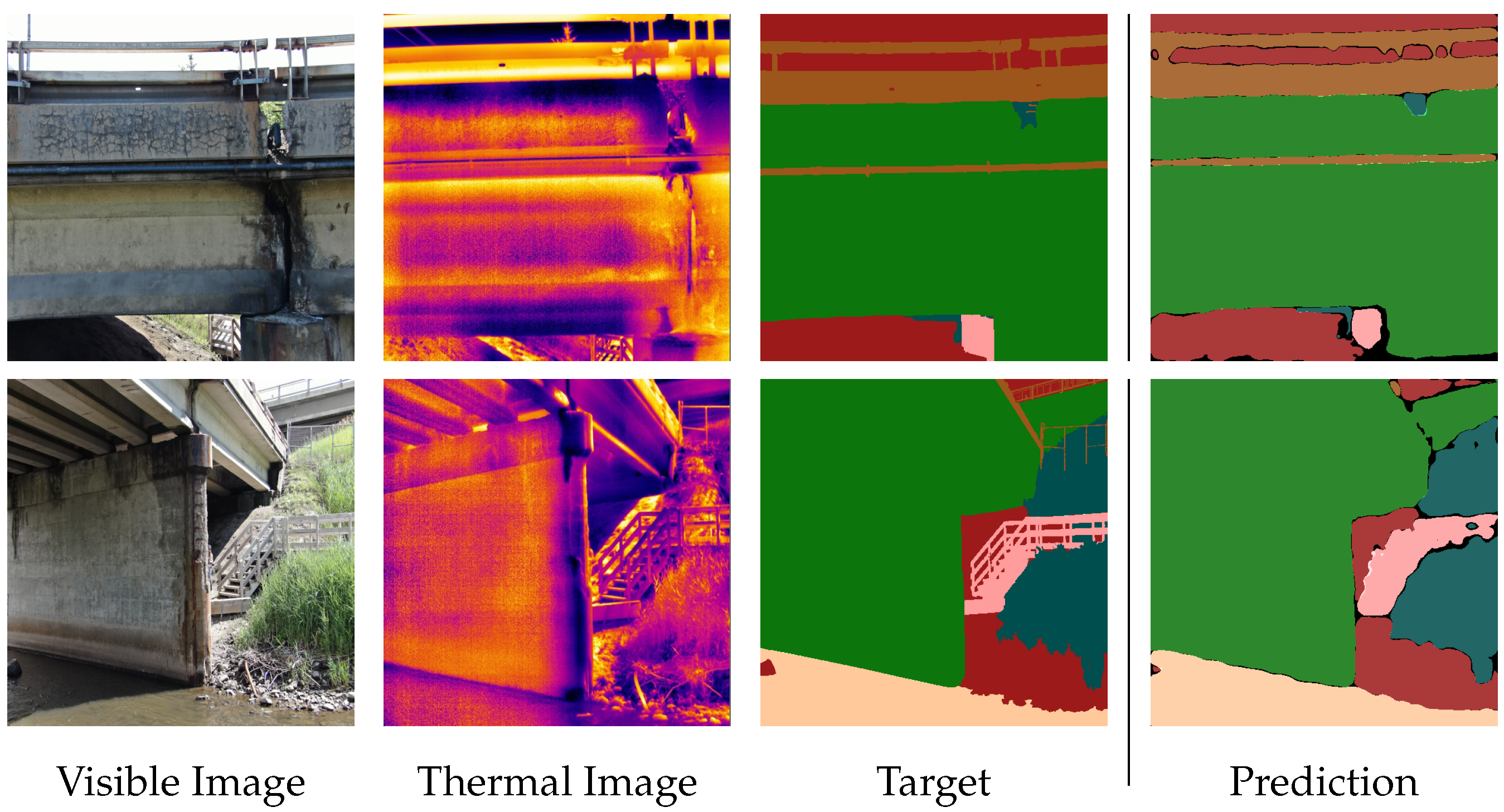

This case study investigated the use of coupled thermal and visible cameras to enhance the drone-based thermographic inspection of concrete bridges. First, the thermal images were preprocessed to enhance the visibility of thermal patterns. Then, the thermal and visible images were aligned. Next, the visible images were passed to the proposed texture segmentation method explained in Section 3 to find the areas representing the concrete surface. The segmented images were then used to extract the concrete regions in the thermal and visible images. Finally, the extracted regions were used to detect possible abnormalities.
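The last two steps of this pipeline can be sketched as follows. The example assumes that the segmentation mask has already been aligned to the thermal image and has the same shape. This section does not specify the rule used to detect abnormalities, so the robust z-score below (median and median absolute deviation) is a hypothetical stand-in, and the function names, the threshold, and the synthetic temperature values are likewise assumptions.

```python
import numpy as np

def extract_region(thermal, mask):
    """Keep thermal values inside the mask and set everything else to NaN."""
    thermal = np.asarray(thermal, dtype=float)
    mask = np.asarray(mask, dtype=bool)
    if thermal.shape != mask.shape:
        raise ValueError("mask and thermal image must have the same shape")
    return np.where(mask, thermal, np.nan)

def flag_outliers(region, z_thresh=3.0):
    """Flag pixels that deviate strongly from the median of the masked region.

    Stand-in rule (assumption): robust z-score based on the median
    absolute deviation (MAD), scaled by 1.4826 to approximate a
    standard deviation for normally distributed data.
    """
    valid = region[~np.isnan(region)]
    if valid.size == 0:
        return np.zeros(region.shape, dtype=bool)
    median = np.median(valid)
    scale = 1.4826 * np.median(np.abs(valid - median))
    if scale == 0:
        return np.zeros(region.shape, dtype=bool)
    with np.errstate(invalid="ignore"):
        # NaN pixels (outside the mask) compare as False and are never flagged.
        return np.abs(region - median) > z_thresh * scale

# Synthetic 6x6 thermal frame (values in degrees Celsius, made up for illustration).
rows, cols = np.indices((6, 6))
thermal = 20.0 + 0.1 * ((rows + cols) % 3)
thermal[2, 2] = 30.0   # warm spot on the segmented surface
thermal[0, 5] = 40.0   # warm spot in the background

# Hypothetical segmentation result: columns 1 to 4 belong to the surface.
mask = np.zeros((6, 6), dtype=bool)
mask[:, 1:5] = True

region = extract_region(thermal, mask)
flags = flag_outliers(region)
```

In this synthetic frame, only the warm spot inside the mask is flagged, while the hotter background pixel is ignored because it was removed before the analysis. This is the kind of misinterpretation that restricting the analysis to the region of interest is meant to avoid.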

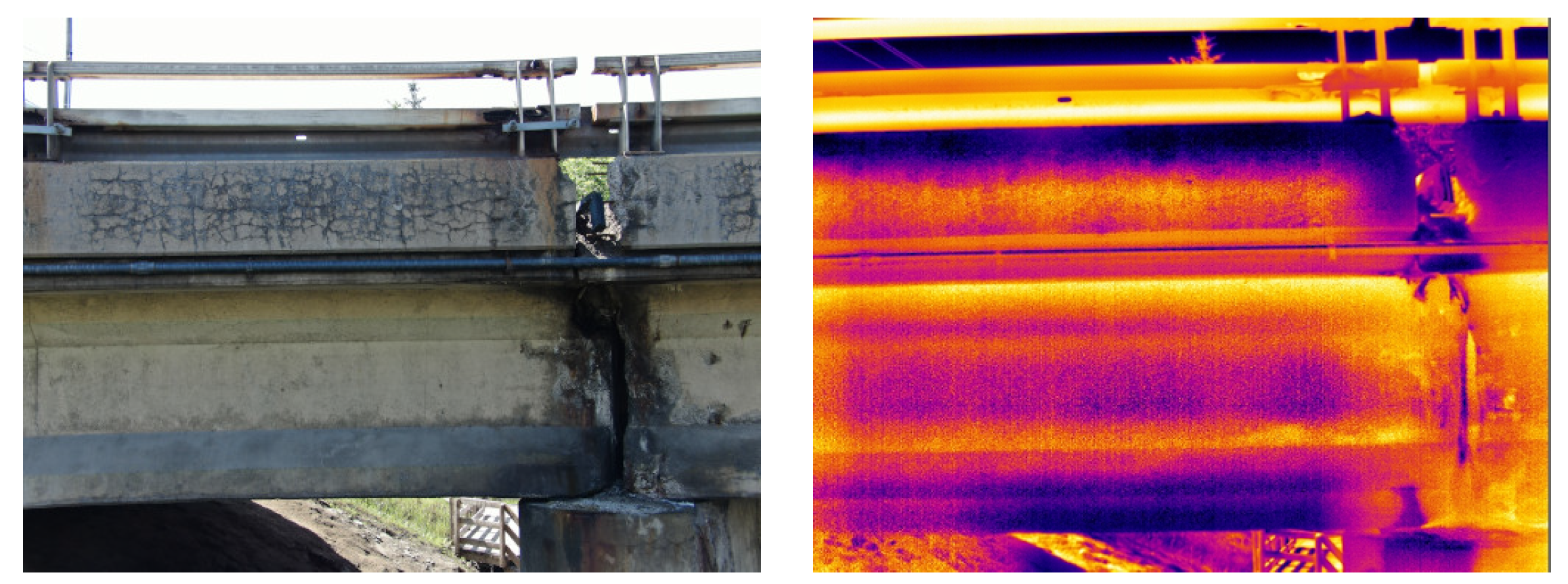

Dataset

For this case study, an inspection was conducted on a concrete bridge in Quebec City. A DJI M300 drone equipped with a Zenmuse H20T camera was used for this inspection. Three flight sessions were performed, including two horizontal passes across the bridge girders at a fixed distance and a pass under the bridge while the drone’s camera aimed at the cross beams and the bottom of the bridge deck. The gathered dataset contains a total of 273 coupled thermal and visible images. The inspection conditions are described in Table 5.

Figure 10 shows sample photos of the multi-modal data acquired during the bridge inspection.

6. Conclusions

The aging of existing industrial and civil infrastructure has been a recurrent concern for owners, workers, and users. While public and private agencies aspire to preserve life expectancy and serviceability, the network of assets to be inspected, repaired, and maintained continues to grow. This scenario shows the existing and upcoming demand for innovative approaches to improve the current practices for inspecting and analyzing the large inventory of industrial and construction components.

This study aimed to investigate the benefits of using coupled thermal and visible modalities for the drone-based multi-modal inspection of industrial and construction components. Additionally, it examined how texture analysis can enhance the interpretation and characterization of abnormalities in a multi-modal inspection. Four case studies were evaluated in this paper, tackling various infrastructure components and inspection requirements. The experiments and analyses performed in this study led to the following conclusions:

- (i) The outcome of the first case study showed that the use of coupled thermal and visible images in paved roads could effectively enhance the detection of cracks, especially in cases of low illumination, low contrast, or in the presence of shadows. The segmentation metrics for fused images were lower than those obtained from visible images, primarily because the damage thicknesses segmented in thermal images differ from those in the visible and target images.

- (ii) The second case study investigated the use of coupled thermal and visible images and texture analysis to differentiate between surface and subsurface abnormalities during an inspection of piping. The presented method combined an unsupervised segmentation approach to automatically detect faulted regions in thermal images and a texture segmentation method for visible images. As a result, the proposed multi-modal processing pipeline allowed for semi-automated classification of abnormalities during the piping inspection, which could potentially be implemented during drone inspection.

- (iii) The third case study introduced a multi-modal processing pipeline for drone-based road inspection. The developed method employed texture segmentation to extract the region of interest in both modalities. The conducted experiment showed that it is possible to detect surface and subsurface defects during a drone-based road pavement inspection.

- (iv) The fourth case study, similar to the third one, investigated the use of texture analysis to enhance the drone-based thermographic inspection of bridges. The processing pipeline extracted the concrete regions in thermal images using the texture analysis of visible images. The findings showed that the proposed solutions for multi-modal inspection analysis are not one-size-fits-all and need to be adapted to each user or client scenario.

Overall, the results of this study provide supporting evidence that exploiting texture patterns in visible images can advance the thermographic inspection of industrial and construction components and help avoid data misinterpretation, especially in drone-based inspection, where physical access is limited. Moreover, the use of coupled drone-based thermal and visible images combined with automatic or semi-automatic computational methods is a promising alternative for efficiently tackling the growing demand for inspection and analysis tasks in the civil and oil and gas infrastructure domains.

Future research should assess whether texture-based analysis could help register multi-modal images, an open issue for coupled infrared and visible scenes containing few perceptual references. Furthermore, a more extensive data collection is necessary, depicting a larger variety of worst-case scenarios in which the added value of thermal imaging would be more prominent. Indeed, as in the autonomous vehicle research field, the drone inspection field would greatly benefit from standardizing the creation of benchmark cross-domain datasets.