1. Introduction

The inherent black-box nature of neural networks makes them difficult to trust. Over the last two decades, various works have shown that it is possible to determine the knowledge stored in neural networks by extracting symbolic rules. Specifically, Andrews et al. introduced a taxonomy to characterize all rule extraction techniques [1].

With the advent of social networks, there has been growing interest in determining the emotional reactions of individuals from text. Generally, Sentiment Analysis techniques try to gauge people's feelings with respect to new products, artistic events, or marketing/political campaigns. Usually, a binary polarity is assumed, such as like/dislike, good/bad, etc. For instance, a typical problem is to determine whether a product review is positive or not. Sentiment analysis techniques are grouped into three main categories [2]: knowledge-based techniques; statistical methods; and hybrid approaches. Knowledge-based techniques analyze text by detecting unambiguous words such as happy, sad, afraid, and bored [Ort90]. Statistical methods use several components from machine learning, such as latent semantic analysis [3], Support Vector Machines (SVMs) [4], Bag of Words (BOW) [5], and Semantic Orientation [6]. More sophisticated methods try to determine the grammatical relationships of words by deep parsing of the text [7]. Hybrid approaches involve both machine learning and elements from knowledge representation, such as ontologies and semantic networks, the goal being to determine subtle semantic components [8]. Recently, deep learning models have been applied to sentiment analysis [9]. Such models differ from the ones used in this work, since they take word order into account, which is not possible with BOW. However, rule extraction from deep networks such as Convolutional Neural Networks (CNNs) is much more difficult and represents an open research problem, which is not tackled here.

Diederich and Dillon presented a rule extraction study on the classification of four emotions (boom, confident, regret, and demand) from SVMs [10]. To the best of our knowledge, no other work has addressed rule extraction from connectionist models trained on sentiment analysis data. One of the main reasons is that with datasets including thousands of words, rule extraction becomes very challenging [10]. As a consequence, Diederich and Dillon restricted their study to only 200 inputs and 914 samples. Our purpose in this work is to fill this gap by generating rules from ensembles of neural networks and SVMs trained on sentiment analysis problems related to positive and negative polarities. In this way, we aim to shed light on how sentiment polarity is determined by the rules and to assess the complexity of the rulesets. We propose to use the Discretized Interpretable Multi Layer Perceptron (DIMLP) to generate unordered propositional rules from both network ensembles [11,12] and Support Vector Machines [13], the latter in the form of Quantized SVMs (QSVMs). In many classification problems, a simple Logistic Regression [14] or a naive Bayes baseline [15] on top of a bag of words can give good results. However, by simply looking at the magnitude of the weights to check which words influence the classification output the most, we only obtain a “macroscopic” explanation of the important words. Specifically, we do not learn how words are used in various combinations by a classifier. Therefore, in sentiment analysis, we would ignore how subsets of positive and negative words are combined in the classification of data samples. In this work, we generate propositional rules from DIMLPs and QSVMs that represent subsets of positive/negative words. These rules provide a precise explanation of the classification of data samples, which cannot be obtained by simply inspecting weight magnitudes, as in Logistic Regression or naive Bayes.

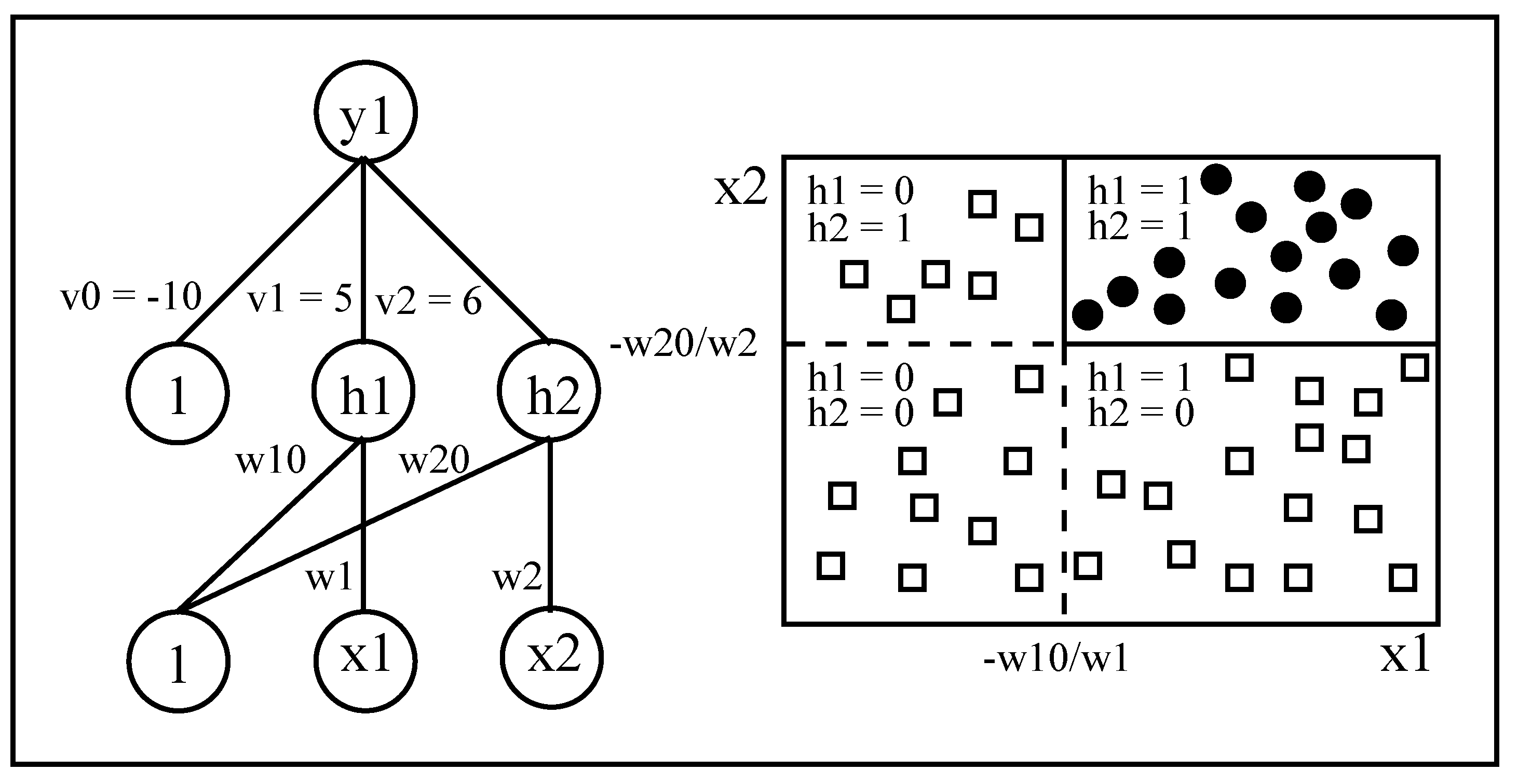

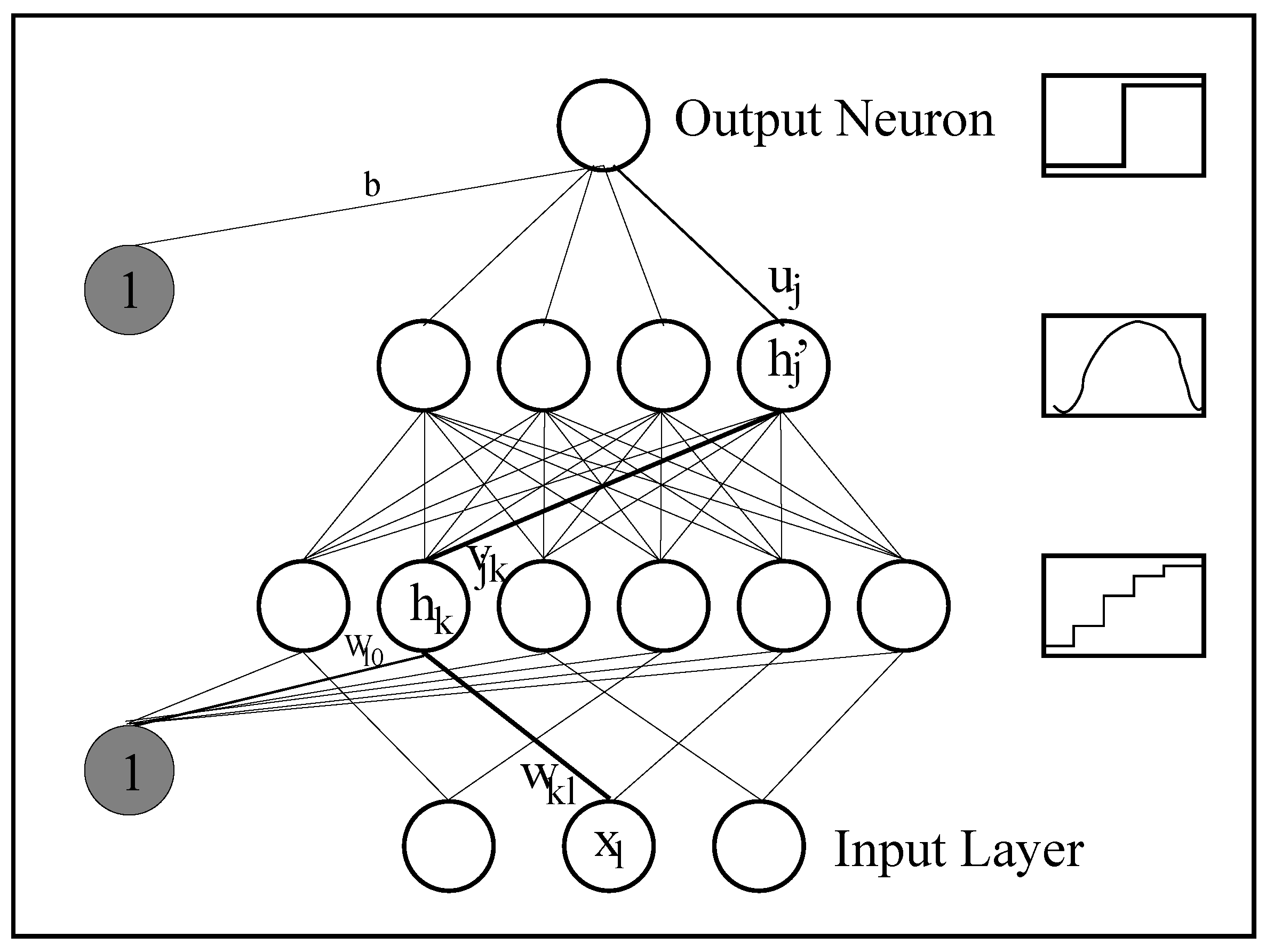

A number of systems have been introduced to extract first-order logic rules directly from data [16]. However, our approach focuses on propositional rules, as they represent a standard way to explain the knowledge embedded within neural networks. The key idea behind rule extraction from DIMLPs is the precise localization of axis-parallel discriminative hyperplanes. In other words, the multi-layer architecture of this model, which is similar to that of the standard Multi Layer Perceptron (MLP), makes it possible to split the input space into hyper-rectangles representing propositional rules. Specifically, the first hidden layer creates, for each input variable, a number of axis-parallel hyperplanes that are effective or not, depending on the weight values of the neurons above the first hidden layer. In practice, during rule extraction, the algorithm knows the exact location of the axis-parallel hyperplanes and determines their presence or absence in a given hyper-rectangle of the input space. A distinctive feature of this rule extraction technique is that fidelity, which is the degree of matching between network classifications and rule classifications, is equal to 100% with respect to the training set. More details on the rule extraction algorithm can be found in [17].
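To make the notion of axis-parallel hyperplanes concrete, here is a minimal sketch, assuming that a first-hidden-layer neuron receives a single input x through a weight w and a bias b, and that its activation is a sigmoid quantized into q stairs on a hypothetical range [-5, 5]. It is an illustration, not the DIMLP reference implementation, and all function names are hypothetical. Each stair boundary, mapped back onto the input axis, yields one candidate hyperplane x = const.

```python
import math


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def staircase(z: float, q: int = 50, lo: float = -5.0, hi: float = 5.0) -> float:
    """Quantized sigmoid with q stairs on [lo, hi] (the range is an assumption)."""
    if z < lo:
        return 0.0
    if z >= hi:
        return 1.0
    width = (hi - lo) / q
    k = int((z - lo) // width)       # index of the stair containing z
    return sigmoid(lo + k * width)   # activation is constant on that stair


def hyperplane_positions(w: float, b: float, q: int = 50,
                         lo: float = -5.0, hi: float = 5.0) -> list:
    """Input values x at which staircase(w * x + b) may jump: one
    axis-parallel hyperplane x = const per stair boundary."""
    width = (hi - lo) / q
    return [(lo + k * width - b) / w for k in range(q + 1)]


# Hypothetical neuron (w = 2, b = -1, 10 stairs) on a binary word input:
# only cut points strictly between 0 and 1 separate "absent" from "present".
cuts = hyperplane_positions(2.0, -1.0, q=10)
inside = [c for c in cuts if 0.0 < c < 1.0]   # [0.5]
```

With binary presence/absence inputs, a single cut between 0 and 1 is enough to turn a word into a rule antecedent, which is why the extracted rules read as "word present" / "word absent" conditions.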

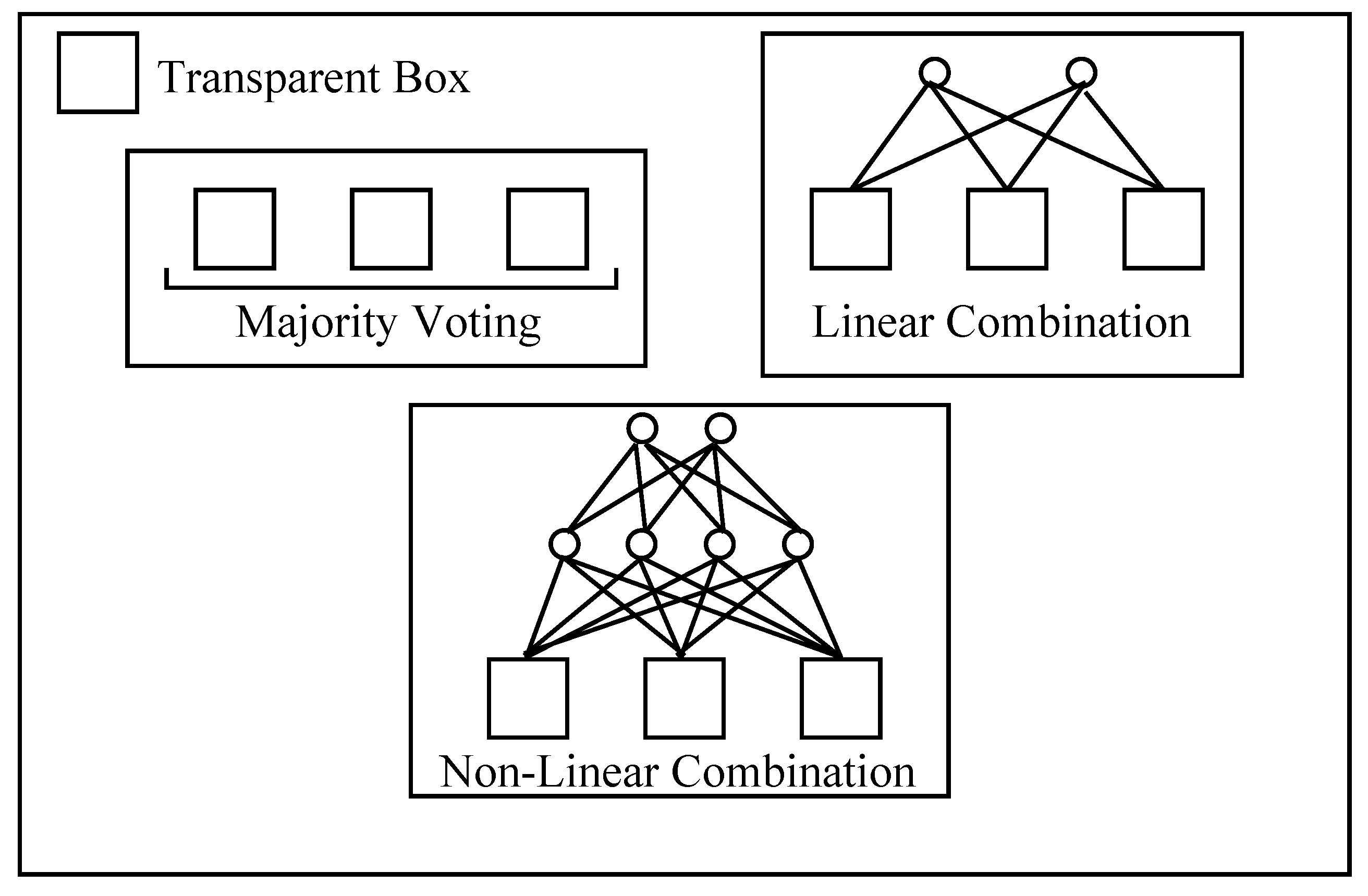

Few authors have proposed rule extraction from neural network ensembles. The Rule Extraction from Neural Network Ensemble algorithm (REFNE) generates new samples from an ensemble used as an oracle [18]. During rule extraction, input variables are discretized and rules are limited to three antecedents, in order to produce general rules. For König et al., rule extraction from ensembles is an optimization problem in which a trade-off between accuracy and comprehensibility must be taken into account [19]. They used a genetic programming technique to produce rules from ensembles of 20 neural networks. Hara and Hayashi introduced rule extraction from “two-MLP ensembles” [20] and “three-MLP ensembles” [21]. The related rule extraction technique recursively uses C4.5 decision trees and back-propagation to train MLPs.

Support Vector Machines are functionally equivalent to Multi Layer Perceptrons (MLPs), but are trained in a different way [4]. One of their main advantages with respect to single MLPs is that they can be very robust in high-dimensional problems. As a consequence, several rule extraction techniques have been proposed to better understand how their classification decisions are made. Diederich et al. published a book on techniques to extract symbolic rules from SVMs [22], and Barakat and Bradley reviewed a number of rule extraction techniques applied to SVMs [23].

To generate rules from SVMs, a number of techniques apply a pedagogical approach [24,25,26,27]. As a first step, training samples are relabeled according to the target class provided by the SVM. Then, the new dataset is learned by a transparent model, such as a decision tree [28], which approximately learns what the SVM has learned. As a variant, only a subset of the training samples is used as the new dataset [29]. Martens et al. generate additional learning examples close to randomly selected support vectors before training a decision tree algorithm that generates rules [27]. In another technique, Barakat and Bradley generate rules from a subset of the support vectors using a modified covering algorithm, which refines a set of initial rules determined by the most discriminative features [30]. Fu et al. proposed a method that determines hyper-rectangles whose upper and lower corners are defined by the intersection of each support vector with the separating hyperplane [31]. Specifically, this is achieved by solving an optimization problem that depends on the Gaussian kernel. Nunez et al. determine prototype vectors for each class [32,33]. With the use of the support vectors, these prototypes are translated into ellipsoids or hyper-rectangles. An iterative process then divides the ellipsoids or hyper-rectangles into more regions, depending on the presence of outliers and on the SVM decision boundary. Similarly, Zhang et al. introduced a clustering algorithm to define prototypes from the support vectors. Then, small hyper-rectangles are defined around these prototypes and progressively grown until a stopping criterion is met. Note that for the last two methods the comprehensibility of the rules is low, since all input features are present in the rule antecedents. More recently, Zhu and Hu presented a rule extraction algorithm that takes into account the distribution of samples [34]; consistent regions with respect to classification boundaries are then formed and a minimal set of rules is derived. In [35], a general rule extraction technique was proposed. Specifically, this technique generates samples around training data with low confidence in the output score. These new samples are labeled according to the black-box classifications, and a transparent model (such as a decision tree) learns the new dataset. In that work, experiments were performed on 25 classification problems.
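The pedagogical scheme summarized above (relabel the training samples with the black-box classifier, then fit a transparent model to the relabeled data) can be sketched in a few lines. This is a toy illustration, not any of the cited methods: the "black box" is a hypothetical linear scorer over binary word vectors, the transparent model is a one-level decision stump, and fidelity is measured against the black-box labels.

```python
from typing import List, Tuple

Vector = List[int]


def black_box(x: Vector, weights: List[float], bias: float) -> int:
    """Hypothetical opaque classifier: a linear scorer thresholded at zero."""
    score = bias + sum(w * xi for w, xi in zip(weights, x))
    return 1 if score > 0 else 0


def fit_stump(X: List[Vector], y: List[int]) -> Tuple[int, int]:
    """Pick the binary feature j and the label given when x[j] == 1 that best
    reproduce y (the other label is given when x[j] == 0)."""
    best = (-1, 0, 0)  # (agreement, feature index, label if present)
    for j in range(len(X[0])):
        for label_if_present in (0, 1):
            preds = [label_if_present if x[j] else 1 - label_if_present for x in X]
            agree = sum(p == t for p, t in zip(preds, y))
            if agree > best[0]:
                best = (agree, j, label_if_present)
    return best[1], best[2]


def pedagogical_extract(X: List[Vector], weights: List[float], bias: float):
    # Step 1: relabel the training samples with the black box's answers.
    y_bb = [black_box(x, weights, bias) for x in X]
    # Step 2: learn the relabeled dataset with a transparent model.
    j, label_if_present = fit_stump(X, y_bb)
    preds = [label_if_present if x[j] else 1 - label_if_present for x in X]
    fidelity = sum(p == t for p, t in zip(preds, y_bb)) / len(X)
    return (j, label_if_present), fidelity


X = [[1, 0], [1, 1], [0, 1], [0, 0]]
rule, fid = pedagogical_extract(X, weights=[2.0, -1.0], bias=-0.5)
# rule == (0, 1): "if feature 0 is present then class 1, else class 0"; fid == 1.0
```

Because the transparent model only ever sees the black-box labels, its fidelity (not its accuracy on the true labels) is the quantity the extraction optimizes.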

Human interpretability of Machine Learning models has become a topic of very active research. Ribeiro et al. presented the LIME framework for generating locally faithful explanations [36]. The basic idea in LIME is to take a query point and perturb it many times, in order to obtain many hypothetical points in its neighborhood. Afterwards, an interpretable model is trained on this hypothetical neighborhood and an explanation is generated. Very recently, human interpretability of Machine Learning models has been widely debated (https://arxiv.org/html/1708.02666) and several general challenges have been formulated [37]. Henelius et al. introduced the ASTRID technique, which can be applied to any model [38]. Specifically, it provides insight into opaque classifiers by determining how the inputs interact. As stated by the authors, characterizing these interactions makes models more interpretable. Rosenbaum et al. introduced e-QRAQ [39], in which the explanation problem is itself treated as a learning problem. In practice, a neural network learns to explain the results of a neural computation. As stated by the authors, this can be achieved either with a single network that jointly learns to explain its own predictions or with separate networks for prediction and explanation.

In the following sections, we first present the results obtained on two sentiment analysis problems with ten-fold cross-validation trials, including examples of important words present in rules; then a discussion; and finally the methods describing DIMLP ensembles and QSVMs, followed by the conclusions.

2. Results

We used two datasets:

RT-2k: standard full-length movie review dataset [40];

RT-s: short movie reviews [41].

Target values of the datasets represent positive and negative polarities of user sentiment.

Table 1 gives the statistics of the datasets in terms of number of samples, proportion of the majority class, average number of words per sample, and number of input features used for training. Each sample is represented by a vector of binary components representing the presence/absence of words. For the RT-2k dataset, the input vector components are obtained by discarding words that appear fewer than five times or more than 500 times, while for RT-s all frequent words are taken into account.
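A minimal sketch of this binary bag-of-words encoding follows. The text does not say whether "appear" counts documents or total occurrences; this sketch assumes document frequency and a crude lowercase whitespace tokenizer, and the function names are hypothetical.

```python
from collections import Counter
from typing import List


def build_vocabulary(docs: List[str], min_count: int = 5,
                     max_count: int = 500) -> List[str]:
    """Keep words whose document frequency lies in [min_count, max_count]."""
    df = Counter()
    for doc in docs:
        df.update(set(doc.lower().split()))  # count each word once per document
    return sorted(w for w, c in df.items() if min_count <= c <= max_count)


def binarize(doc: str, vocab: List[str]) -> List[int]:
    """Presence/absence vector: component i is 1 iff vocab[i] occurs in doc."""
    words = set(doc.lower().split())
    return [1 if w in words else 0 for w in vocab]


# Toy corpus with lowered thresholds so that the filter is visible.
docs = ["good film", "bad film", "good plot", "film film"]
vocab = build_vocabulary(docs, min_count=2, max_count=3)  # ['film', 'good']
x = binarize("A good day", vocab)                         # [0, 1]
```

Word counts and order are discarded: a review mentioning "boring" once or ten times yields the same input vector.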

In the experiments, we compare ensembles of DIMLP networks [17] and QSVMs [13]. The number of DIMLPs in an ensemble is equal to 25, since it has been observed many times that, for bagging, the most substantial improvement in accuracy is achieved with the first 25 networks. Moreover, DIMLPs have a single hidden layer with a number of hidden neurons equal to the number of input neurons. DIMLP learning parameters related to the backpropagation algorithm are set to their default values (as in [11,12,17]):

learning parameter: ;

momentum: ;

Flat Spot Elimination: FSE = 0.01;

number of stairs in the staircase function: .
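Bagging itself can be sketched as follows: each of the 25 members is trained on a bootstrap replicate of the training set. This sketch assumes that the ensemble combines its members by averaging their outputs and thresholding at 0.5; `train_prior` is a hypothetical, deliberately trivial stand-in for DIMLP training so that the code runs on its own.

```python
import random
from typing import Callable, List, Sequence

Model = Callable[[Sequence[int]], float]  # returns a score in [0, 1]


def bagging(X: list, y: list, train_fn: Callable[[list, list], Model],
            n_models: int = 25, seed: int = 0) -> List[Model]:
    """Train n_models members, each on a bootstrap replicate of (X, y)."""
    rng = random.Random(seed)
    n = len(X)
    models = []
    for _ in range(n_models):
        idx = [rng.randrange(n) for _ in range(n)]  # n draws with replacement
        models.append(train_fn([X[i] for i in idx], [y[i] for i in idx]))
    return models


def ensemble_predict(models: List[Model], x: Sequence[int]) -> int:
    """Average the members' scores (assumed combination rule), then threshold."""
    avg = sum(m(x) for m in models) / len(models)
    return 1 if avg >= 0.5 else 0


def train_prior(Xb: list, yb: list) -> Model:
    """Hypothetical placeholder learner: outputs the positive rate it saw."""
    rate = sum(yb) / len(yb)
    return lambda x: rate
```

Since every replicate omits roughly a third of the original samples, the members differ from one another, and averaging them reduces the variance of a single network.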

For QSVMs, we used the dot kernel, as the input dimensionality of the two datasets is very large. For this model, the default number of stairs in the staircase function is equal to 200. We trained QSVMs with the least-squares method [42]. All experiments are based on one repetition of ten-fold cross-validation. Samples were not shuffled. For instance, for the RT-2k classification problem, the first testing fold contains the first 100 samples of positive polarity and the first 100 samples of negative polarity, and so on.
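The unshuffled fold construction can be sketched as below. It assumes, hypothetically, that samples are stored class by class (all positive reviews, then all negative ones); within each class, fold f simply receives the f-th contiguous slice, and integer slice boundaries spread any remainder when a class size is not a multiple of ten.

```python
from typing import Dict, Hashable, List, Sequence


def unshuffled_folds(labels: Sequence[Hashable], k: int = 10) -> List[List[int]]:
    """Split sample indices into k folds without shuffling: within each class,
    fold f receives the f-th contiguous slice of that class's samples."""
    by_class: Dict[Hashable, List[int]] = {}
    for i, lab in enumerate(labels):
        by_class.setdefault(lab, []).append(i)
    folds: List[List[int]] = [[] for _ in range(k)]
    for idx in by_class.values():
        n = len(idx)
        for f in range(k):
            folds[f].extend(idx[f * n // k:(f + 1) * n // k])
    return folds


labels = ["pos"] * 1000 + ["neg"] * 1000  # RT-2k-like ordering (assumed)
folds = unshuffled_folds(labels)
# folds[0]: indices 0..99 (first 100 positive) and 1000..1099 (first 100 negative)
```

Each testing fold is thus balanced between the two polarities, and the training set of fold f is the union of the other nine folds.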

2.1. Evaluation of Rules

The complexity of generated rulesets is often measured by two variables: the number of rules and the number of antecedents per rule. Rules can be ordered in a sequential list or unordered. Ordered rules are represented as “If Antecedents Conditions are true then Consequent; Else ...”, whereas unordered rules are formulated without “Else”. Thus, unordered rules represent single pieces of knowledge that can be scrutinized in isolation. With ordered rules, the conditions of the preceding rules are implicitly negated: when one tries to understand a given rule, the negated conditions of all previous rules have to be taken into account. Hence, knowledge acquisition is difficult with long lists of rules.
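The difference between the two readings can be shown with a toy example. The two rules below are hypothetical, and a rule is modeled as a conjunction of words that must be present and words that must be absent, which matches the form of the rules described in this paper.

```python
from typing import FrozenSet, List, NamedTuple, Set


class Rule(NamedTuple):
    present: FrozenSet[str]  # words that must occur
    absent: FrozenSet[str]   # words that must not occur
    label: str


def fires(rule: Rule, words: Set[str]) -> bool:
    return rule.present <= words and not (rule.absent & words)


def classify_ordered(rules: List[Rule], words: Set[str], default: str) -> str:
    """If ... then ...; Else ...: the first firing rule wins, so every later
    rule implicitly assumes that all earlier rules did not fire."""
    for rule in rules:
        if fires(rule, words):
            return rule.label
    return default


def fired_unordered(rules: List[Rule], words: Set[str]) -> List[Rule]:
    """Unordered rules are independent: return every rule that fires."""
    return [rule for rule in rules if fires(rule, words)]


rules = [Rule(frozenset({"boring"}), frozenset(), "negative"),
         Rule(frozenset({"hilarious"}), frozenset(), "positive")]
words = {"boring", "but", "hilarious"}
ordered = classify_ordered(rules, words, default="positive")   # 'negative'
unordered = [r.label for r in fired_unordered(rules, words)]   # ['negative', 'positive']
```

In the ordered list, the second rule actually means "hilarious and not boring"; read on its own, it would be misleading. In the unordered set, each rule means exactly what it says, at the price of possible conflicts such as the one this sample produces.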

With unordered rules, an unknown sample belonging to the testing set activates zero, one, or several rules. Several activated rules of different classes lead to an ambiguous decision. To eliminate conflicts, we take into account the classification responses provided by the model. Specifically, when rules of at least two different classes are activated, we discard all the rules that do not correspond to the class provided by the model. If all rules are discarded, the classification of the sample is considered wrong, since the rules are ambiguous. Note that for the training set, the degree of matching between DIMLP classifications and rules, also denoted as fidelity, is equal to 100%. Moreover, the rule extraction algorithm ensures that there are no conflicts on this set.
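A minimal sketch of this conflict-resolution policy is given below. Representing a testing sample by the list of class labels of the rules it activates is an assumption made for brevity, and the function name is hypothetical.

```python
from typing import Iterable, Optional, Tuple


def rule_decision(fired_labels: Iterable[str],
                  model_label: str) -> Tuple[Optional[str], str]:
    """Return (class given by the rules, status) for one testing sample."""
    classes = set(fired_labels)
    if not classes:
        return None, "uncovered"            # no rule fires: default rule
    if len(classes) == 1:
        return classes.pop(), "explained"   # no conflict
    if model_label in classes:
        # Conflict: keep only the rules that agree with the model's class.
        return model_label, "explained"
    return None, "wrong"                    # every rule discarded: an error
```

In a two-class problem, a conflict always involves the model's class, so the "wrong" branch can only be reached with three or more classes.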

Predictive accuracy is the proportion of correctly classified samples in an independent testing set. With respect to the rules, it can be calculated with three distinct strategies:

Classifications are provided by the rules. If a sample does not activate any rule, the class is provided by the model without explanation.

Classifications are provided by the rules when rules and model agree. In case of disagreement, no classification is provided. Moreover, if a sample does not activate any rule, the class is provided by the model (without explanation).

Classifications are provided by the rules only when rules and model agree. In case of disagreement, the classification is provided by the model without any explanation. Moreover, if a sample does not activate any rule, the class is again provided by the model (without explanation).

With the first strategy, the unexplained samples are only those that do not activate any rule. With the second, no classification response is provided when rules and model disagree; in other words, the classification is undetermined. Finally, with the last strategy, the predictive accuracy of rules and model is equal, but compared to the first strategy there is an additional proportion of uncovered samples: those for which rules and model disagree.
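The three strategies can be computed side by side as sketched below. The sketch assumes that, in the second strategy, accuracy is computed over the samples that receive a classification (undetermined samples are excluded from the denominator); inputs are hypothetical per-sample lists.

```python
from typing import Optional, Sequence, Tuple


def three_strategies(y_true: Sequence[int], y_model: Sequence[int],
                     y_rule: Sequence[Optional[int]]) -> Tuple[float, float, float]:
    """y_rule[i] is the class given by the rules, or None if no rule fires."""
    n = len(y_true)
    correct1 = correct2 = determined2 = correct3 = 0
    for t, m, r in zip(y_true, y_model, y_rule):
        # Strategy 1: rules answer; uncovered samples fall back on the model.
        correct1 += (r if r is not None else m) == t
        # Strategy 2: rule/model disagreement leaves the sample undetermined.
        if r is None or r == m:
            determined2 += 1
            correct2 += m == t  # rule (if any) and model give the same class
        # Strategy 3: on disagreement the model answers, so every
        # classification coincides with the model's.
        correct3 += m == t
    acc2 = correct2 / determined2 if determined2 else float("nan")
    return correct1 / n, acc2, correct3 / n


acc = three_strategies(y_true=[1, 1, 0, 0], y_model=[1, 0, 0, 1],
                       y_rule=[1, 1, None, 0])   # (1.0, 1.0, 0.5)
```

The third value is always the model's own accuracy, which is why the third strategy trades coverage by explanations for accuracy rather than the other way around.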

2.2. Experiments with RT-2k Dataset

Table 2 shows the results obtained on the RT-2k dataset by DIMLP ensembles trained by bagging (DIMLP-B), QSVMs using the dot kernel (QSVM-D), and naive Bayes as a baseline. From left to right are given the average values of: predictive accuracy of the models; predictive accuracy of the rules; predictive accuracy of the rules when rules and models agree; fidelity; number of rules; number of antecedents per rule; and proportion of default rule activations. With respect to the work reported by Pang and Lee, QSVM-D obtained a very close average predictive accuracy (86.4% versus 86.2%) [41]. The average predictive accuracy reached by DIMLP ensembles is lower than that of QSVM-D (84.1% versus 86.4%); however, average fidelity is higher (91.2% versus 86.5%), with fewer generated rules (205.6 versus 250.4) on average.

As stated above (cf. Section 2.1), the predictive accuracy of rules can be calculated in three different ways. Firstly, predictive accuracy is measured with the rules (cf. third column of Table 2). In this case, unexplained classifications are only those related to the proportion of default rule activations, denoted as $D$. Secondly, classification responses can be considered undetermined when models and rules disagree. This happens on a proportion of testing samples equal to $1 - F$, with $F$ as fidelity, the proportion of undetermined or unexplained classifications being equal to $1 - F + D$. Lastly, predictive accuracy is again calculated with respect to the rules when rules and models agree. Without undetermined classifications, the proportion of unexplained responses is equal to $1 - F + D$, with unexplained responses provided by the models. Thus, the predictive accuracy of the rules corresponds to that of the models, with a proportion of uncovered samples greater than in the first case.

As an example, the third way of calculating the predictive accuracy of rules for QSVM-D gives a value equal to 86.4%, with a proportion of unexplained classifications equal to 16.8% (1 − 86.5% + 3.3%). Using the second strategy, we obtain a predictive accuracy of rules equal to 87.3%, with undetermined classifications for 13.5% of the testing samples and a proportion of unexplained or unclassified samples equal to 16.8% (13.5% + 3.3%). These numbers clearly show a trade-off between the predictive accuracy of rules and the proportion of explained samples. Specifically, the predictive accuracy of rules calculated with the first strategy is equal to 78.3% and the proportion of explained samples is equal to 96.7%, but predictive accuracy rises to 86.4% when rules explain 83.2% (1 − 16.8%) of the testing samples.
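The arithmetic of this example can be reproduced directly from the two quantities defined above. Fidelity F = 86.5% and default-rule rate D = 3.3% are the QSVM-D values quoted in the text; the function and key names are illustrative.

```python
def coverage_breakdown(fidelity: float, default_rate: float) -> dict:
    """Proportions of testing samples derived from fidelity F and default rate D."""
    undetermined = 1 - fidelity                  # rules and model disagree: 1 - F
    unexplained = 1 - fidelity + default_rate    # 1 - F + D
    return {
        "undetermined": undetermined,
        "unexplained": unexplained,
        "explained_strategy1": 1 - default_rate,  # only uncovered samples lack rules
        "explained_when_agree": 1 - unexplained,
    }


b = coverage_breakdown(fidelity=0.865, default_rate=0.033)  # QSVM-D on RT-2k
print({k: round(v, 3) for k, v in b.items()})
# {'undetermined': 0.135, 'unexplained': 0.168, 'explained_strategy1': 0.967, 'explained_when_agree': 0.832}
```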

During cross-validation trials, extracted rules are ranked in descending order according to the number of covered samples in the training set. The first rule obtained for each training set covers approximately 10% of the training data. The majority of the rules have only one word that must be present and many words that must be absent.

Table 3 and Table 4 list the words that must be present in the top 20 rules generated from QSVM-D. Moreover, each cell shows in parentheses the polarity of the rule and the number of words that must be absent. Note that for each fold the first rule has only absent words.

Let us illustrate the knowledge embedded within the rules. Rules generated from QSVMs or DIMLP ensembles are structured as conjunctions of present/absent words. For instance, with respect to the first testing fold, the fourth rule is given as: “If stupid and not different and not excellent and not hilarious and not others and not performances and not supposed then Class = Negative”. In the same fold, the second rule states that when the word performances is present and the following words are absent (awful, boring, disappointing, embarrassing, fails, falls, laughable, material, obvious, predictable, ridiculous, save, supposed, ten, unfortunately, unfunny, wasted, worst), the sentiment polarity is positive. Note that a substantial proportion of the absent words are clearly related to negative polarity. Here is a non-exhaustive list of sentences activating this rule (which is correct ten times out of eleven):

… is one of the very best performances this year … performances are all terrific …

djimon hounsou and anthony hopkins turn in excellent performances

… but the music and live performances from the band, play a big part in the movie … well-paced movie with a solid central showing by wahlberg and energetic live performances

there are good performances by hattie jacques as the matron …
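The second rule above can be encoded and checked against text as follows, together with the coverage-based ranking used to order rules. In the experiments, rules are applied to whole reviews, whereas here they are applied to single fragments; the regular-expression tokenizer and all names are assumptions for illustration.

```python
import re
from typing import FrozenSet, List, Set, Tuple

Rule = Tuple[FrozenSet[str], FrozenSet[str], str]  # (present, absent, class)

# Second rule of the first testing fold (RT-2k, QSVM-D), as quoted above.
PERFORMANCES_RULE: Rule = (
    frozenset({"performances"}),
    frozenset({"awful", "boring", "disappointing", "embarrassing", "fails",
               "falls", "laughable", "material", "obvious", "predictable",
               "ridiculous", "save", "supposed", "ten", "unfortunately",
               "unfunny", "wasted", "worst"}),
    "positive",
)


def tokens(text: str) -> Set[str]:
    """Crude tokenizer (an assumption): lowercase alphabetic runs."""
    return set(re.findall(r"[a-z]+", text.lower()))


def activates(rule: Rule, text: str) -> bool:
    present, absent, _ = rule
    words = tokens(text)
    return present <= words and not (absent & words)


def rank_by_coverage(rules: List[Rule], docs: List[str]) -> List[Rule]:
    """Order rules by the number of documents they cover, most first."""
    return sorted(rules, key=lambda r: sum(activates(r, d) for d in docs),
                  reverse=True)


hit = activates(PERFORMANCES_RULE,
                "djimon hounsou and anthony hopkins turn in excellent performances")
# hit is True: "performances" is present and none of the 18 absent words occurs
```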

The fourth rule (first testing fold), which is correct seven times out of eight, is activated when ridiculous is present and seven other words are absent (+, 17, damon, happy, perfect, progresses, supervisor). Examples of sentences activating this rule are:

… schumacher tags on a ridiculous self-righteous finale that drags the whole unpleasant experience down even further

… for this ridiculous notion of a sports film

… and the ridiculous words these characters use …

In the first fold, the seventh rule is correct ten times out of eleven. It is activated when boring is present and the following words are absent: accident; allows; brilliant; different; flaws; highly; memorable; others; outstanding; performances; solid; strong; true. Examples of sentences covered by this rule are:

… and then prove to be just something boring

… the story is just plain boring … it was boring for stretches

… it’s now * twice * as boring as it might have been

… isn’t complex, it’s just stupid. and boring

… then it’s going to be incredibly boring

… that could turn out to be the most boring movie ever made

… boring film without the spark that enlivened his previous work

The last example is related to rule number fourteen, which fires when hilarious is present and awful, boring, embarrassing, laughable, looks, mess, potential, stupid, ten, unfunny, worse, worst, and writers are absent. This rule is correct five times out of five. All the sentences activating it are:

some of the segments are simply hilarious

… still sit around delivering one caustic, hilarious speech after another

… real problems told in an often hilarious way

hilarious situations, …

… as he’s going through the motions of his job are insightful and often hilarious

For the naive Bayes classifier, since the discriminant boundary is linear in log-space, we determine the most important words according to the highest weight magnitudes. The following lists give the top 15 negative and positive words, with respect to the first training fold:

worst, boring, stupid, supposed, looks, unfortunately, ridiculous, reason, waste, awful, wasted, maybe, mess, none, worse;

perfect, performances, especially, true, different, wonderful, throughout, perfectly, others, shows, works, strong, hilarious, memorable, excellent.
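The variant of naive Bayes is not specified here; the sketch below assumes Bernoulli naive Bayes on the binary word vectors with Laplace smoothing (alpha = 1). Under that assumption, log P(pos|x) − log P(neg|x) equals a constant plus the sum over j of w_j x_j, with w_j = log(p_j⁺ / p_j⁻) − log((1 − p_j⁺) / (1 − p_j⁻)), where p_j⁺ and p_j⁻ are the probabilities that word j is present in a positive or negative review. Words are then ranked by signed weight.

```python
import math
from typing import Dict, List, Sequence, Tuple


def bernoulli_nb_weights(X: Sequence[Sequence[int]], y: Sequence[int],
                         vocab: Sequence[str], alpha: float = 1.0) -> Dict[str, float]:
    """Coefficient of each binary feature in log P(pos|x) - log P(neg|x)."""
    n_pos = sum(y)
    n_neg = len(y) - n_pos
    weights = {}
    for j, word in enumerate(vocab):
        c_pos = sum(x[j] for x, t in zip(X, y) if t == 1)
        c_neg = sum(x[j] for x, t in zip(X, y) if t == 0)
        # Laplace-smoothed probabilities that the word is present in each class.
        p_pos = (c_pos + alpha) / (n_pos + 2 * alpha)
        p_neg = (c_neg + alpha) / (n_neg + 2 * alpha)
        weights[word] = (math.log(p_pos / p_neg)
                         - math.log((1 - p_pos) / (1 - p_neg)))
    return weights


def top_words(weights: Dict[str, float], k: int = 15) -> Tuple[List[str], List[str]]:
    """The k most negative and k most positive words by signed weight."""
    ranked = sorted(weights, key=weights.get)
    negative = [w for w in ranked[:k] if weights[w] < 0]
    positive = [w for w in reversed(ranked[-k:]) if weights[w] > 0]
    return negative, positive


vocab = ["great", "awful", "film"]
X = [[1, 0, 1], [1, 0, 1], [0, 1, 1], [0, 1, 1]]
y = [1, 1, 0, 0]
w = bernoulli_nb_weights(X, y, vocab)
neg, pos = top_words(w, k=1)   # (['awful'], ['great']); "film" gets weight 0
```

Such a list says which words matter individually, but not which combinations of present and absent words the classifier relies on, which is the gap the extracted rules address.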

In

Table 5 we show for QSVM-D how varies the complexity of rules according to the number of training samples for rule extraction. For instance, in the first row only 5% of training samples are used. As a matter of fact, the less the number of samples the lowest fidelity and complexity in terms of number of rules and number of antecedents per rule. Similarly, predictive accuracy of rules (second column) decreases with fidelity. Nevertheless, predictive accuracy of rules when network and rules agree (third column) reaches a peak at 88%, when 30% of the samples are used. Finally,

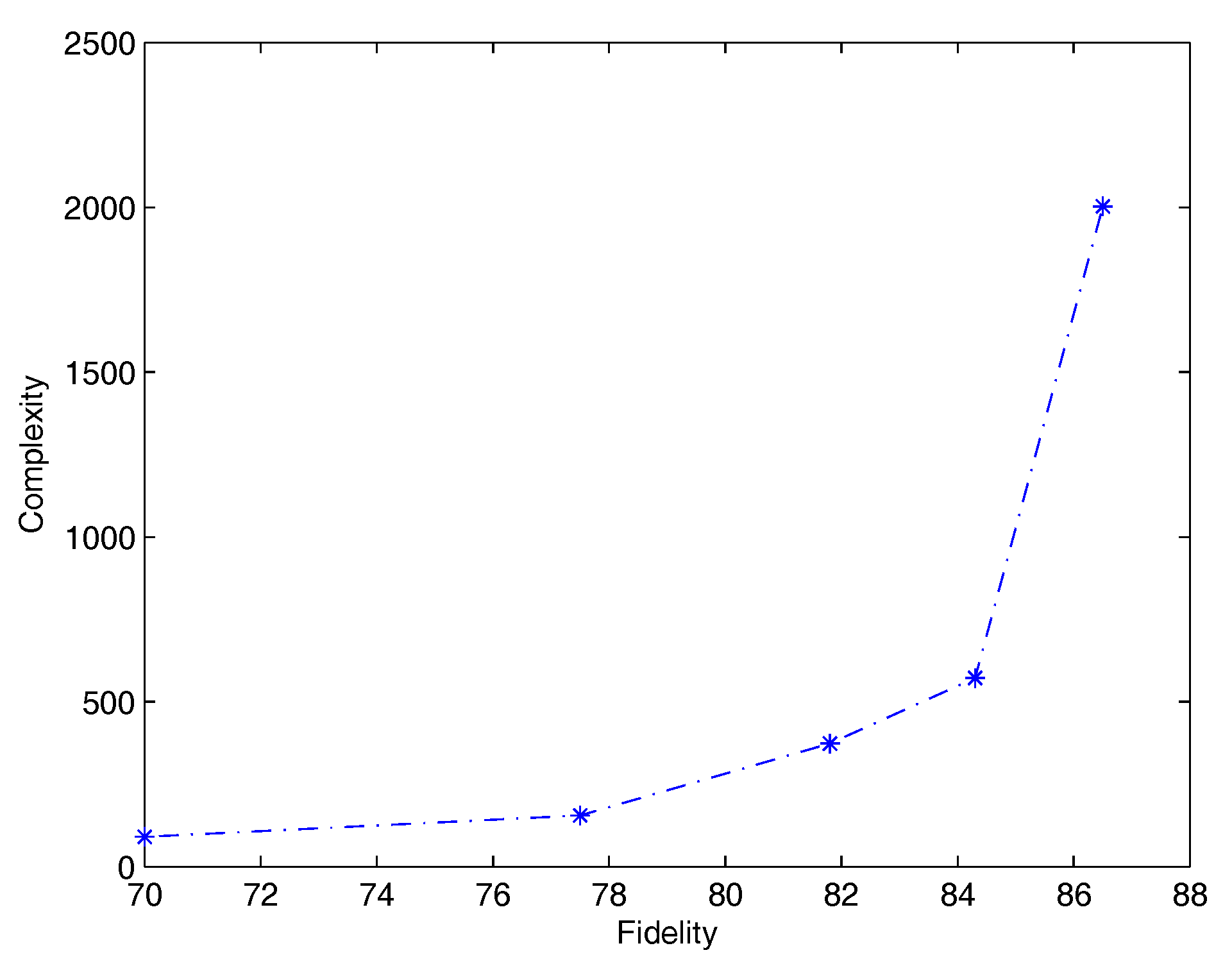

Figure 1 shows average complexity (which is the product of the average number of rules by the average number of antecedents per rule) versus average fidelity. It is worth noting how the slope becomes significantly steeper for the last segment, when fidelity is between 84.3% and 86.5%.
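The quantities plotted in Figure 1 can be computed as sketched below: one (fidelity, complexity) point per row of Table 5, and the slope of each segment between consecutive points. No table values are reproduced here; the function names are illustrative.

```python
from typing import List, Sequence, Tuple


def complexity(avg_rules: float, avg_antecedents: float) -> float:
    """Complexity as defined in the text: average rules x average antecedents."""
    return avg_rules * avg_antecedents


def segment_slopes(points: Sequence[Tuple[float, float]]) -> List[float]:
    """Slope of each segment of a (fidelity, complexity) curve,
    with points sorted by increasing fidelity."""
    return [(c2 - c1) / (f2 - f1)
            for (f1, c1), (f2, c2) in zip(points, points[1:])]
```

A slope that grows sharply in the last segment means that each additional percentage point of fidelity costs many more antecedents than the previous ones.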

2.3. Experiments with RT-s Dataset

Table 6 shows the results obtained on the RT-s dataset. From left to right are given the average values of: predictive accuracy of the models; predictive accuracy of the rules; predictive accuracy of the rules when rules and models agree; fidelity; number of rules; number of antecedents per rule; and proportion of default rule activations. Following the same experimental protocol as reported in [43], QSVM-D obtained a very close average predictive accuracy (76.0% versus 76.2%). The average predictive accuracy obtained by naive Bayes was also very good, with a value of 75.9%. It is well known that, depending on the classification problem, this simple classifier can perform remarkably well. The average predictive accuracy reached by DIMLP ensembles is lower than that of QSVM-D (74.9% versus 76.0%); however, fidelity is higher (94.8% versus 93.8%), with fewer generated rules (801.1 versus 919.8). Here, the number of rules is greater than that obtained on the RT-2k dataset; the reason is likely the number of samples, which is also greater (10,662 versus 2000).

As with the RT-2k dataset, the majority of the rules have only one word that must be present and many words that must be absent.

Table 7 and Table 8 list the words that must be present in the top 20 rules generated from QSVMs. Moreover, each cell shows the polarity of the rule and the number of words that must be absent. Note that for each fold the first rule has only words that must be absent.

Let us look at the 18th rule of the second testing fold. It requires the presence of bad and the absence of good, families, flaws, perfectly, solid, usual, and wonderful. This rule of negative polarity is correct 22 times out of 23; a few examples of sentences are:

the tuxedo wasn’t just bad; it was, as my friend david cross would call it, ’hungry-man portions of bad’

a seriously bad film with seriously warped logic …

… afraid of the bad reviews they thought they’d earn. they were right

… and reveals how bad an actress she is

much of the cast is stiff or just plain bad

new ways of describing badness need to be invented to describe exactly how bad it is

For the last testing fold, we illustrate examples of important words present in positive sentences; these words are shown in capital letters. For these sentences, more than one rule is often activated. These rules, often ranked after the twentieth rule, are not shown because they can involve numerous words required to be absent.

a POWERFUL, CHILLING, and AFFECTING STUDY of one man’s dying fall

it WORKS ITS MAGIC WITH such exuberance and passion that the film’s length becomes a PART of ITS FUN

… GIVES his BEST screen PERFORMANCE WITH AN oddly WINNING portrayal of one of life’s ULTIMATE losers

YOU needn’t be steeped in ’50s sociology , pop CULTURE OR MOVIE lore to appreciate the emotional depth of haynes’ WORK. Though haynes’ STYLE apes films from the period …

the FILM HAS a laundry list of MINOR shortcomings, but the numerous scenes of gory mayhem are WORTH the price of admission … if gory mayhem is your idea of a GOOD time

BEAUTIFULLY crafted and brutally HONEST, promises OFFERS an UNEXPECTED window into the complexities of the middle east struggle and into the humanity of ITS people

buy is an ACCOMPLISHED actress, and this is a big, juicy role

Note that in the illustrated positive reviews many words have a clear positive connotation, such as: powerful; magic; fun; best; winning; beautifully; accomplished; worth; good; etc. Let us now look at examples of relevant words extracted from rules of negative polarity.

despite its visual virtuosity, ’naqoyqatsi’ is BANAL in its message and the choice of MATERIAL to convey it

a great script brought down by LOUSY direction. SAME GUY with BOTH hats. big MISTAKE

a MEDIOCRE EXERCISE in target demographics, unaware that it’s the butt of its OWN JOKE

DESPITE the opulent lushness of EVERY scene, the characters NEVER seem to match the power of their surroundings

simone is NOT a BAD FILM. it JUST DOESN’T have anything REALLY INTERESTING to say

all mood and NO MOVIE

it has the right approach and the right opening PREMISE, but it LACKS the zest and it goes for a PLOT twist INSTEAD of trusting the MATERIAL

earnest YET curiously tepid and CHOPPY recycling in which predictability is the only winner

…we assume HE had a bad run in the market or a costly divorce, because there is NO earthly REASON other than MONEY why THIS distinguished actor would stoop so LOW

For the naive Bayes classifier, we determine the most important words according to the highest weight magnitudes. The following lists give the top 15 negative and positive words, with respect to the first training fold:

movie, too, bad, like, no, just, or, so, have, only, this, more, ?, than, but;

film, with, an, funny, performances, best, most, its, love, both, entertaining, heart, life, you, us.

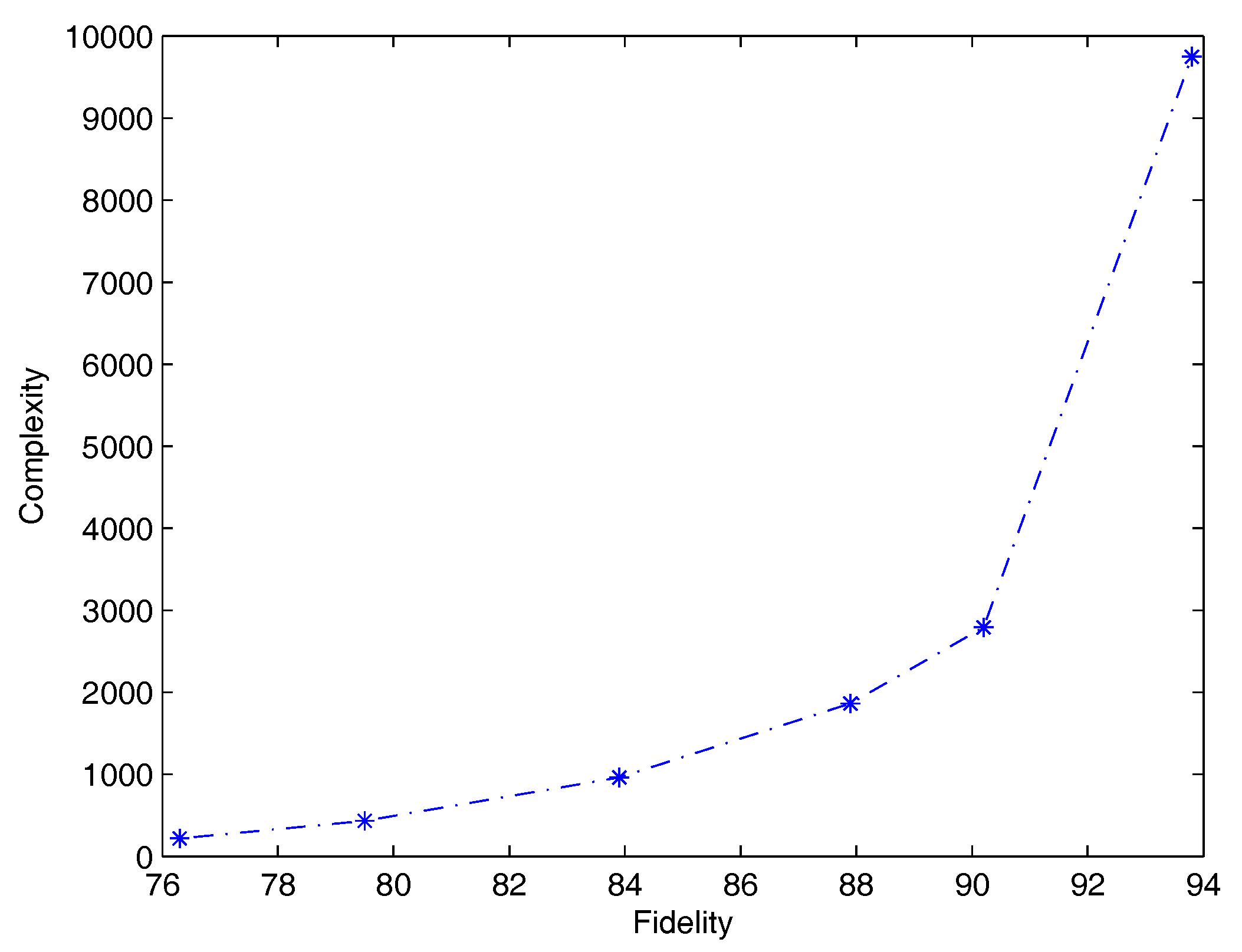

Table 9 shows for QSVM-D how the complexity of rules varies according to the number of training samples used for rule extraction. As in the RT-2k classification problem, the fewer the samples, the lower the fidelity and the complexity. Moreover, the predictive accuracy of rules (second column) decreases with fidelity. Note, however, that the predictive accuracy of rules when network and rules agree (third column) reaches a peak at 77.1% with only 5% of the training samples used during rule extraction. Finally, Figure 2 shows average complexity versus average fidelity.

3. Discussion

Based on cross-validation, our purpose was first to train models able to reach an average predictive accuracy similar to that obtained in [41,43]. Then, after rule extraction, we determined the complexity of the rulesets, as well as their form. In many cases, rules can be viewed as feature detectors requiring the presence of only one word and the absence of several words. We also observed a clear trade-off between accuracy, fidelity, and coverage, in which one of these quantities can be favored to the detriment of the others. For instance, at some point the increase in ruleset complexity is very large relative to a few percentage points of fidelity improvement. In other words, the last percentage points of fidelity come at a high cost in interpretability. Thus, it would not be worth generating supplementary samples from QSVM classifications in order to try to reach 100% fidelity, since ruleset complexity would increase very quickly.

In previous work on rule extraction from QSVMs and ensembles of DIMLPs applied to benchmark classification problems [13], fewer rules and fewer antecedents were generated on average than in this study. One reason for this difference could be the higher number of inputs and perhaps the characteristics of the classification problems. Specifically, positive/negative polarities in sentiment analysis are related to a large number of words, in which synonyms play an important role. Thus, it is plausible to observe a large number of antecedents, especially with unordered rules rather than ordered rules involving implicit antecedents.

The top 20 rules generated from the ten training folds require, in almost all cases, the presence of a single word. For the first problem, many of these mandatory words, such as {annoying; awful; boring; bland; dull; fails; lame; mess; poor; predictable; ridiculous; stupid; unfortunately; waste; wasted; worse; worst}, clearly designate negative polarity. Likewise, for positive polarity we have: {absolutely; brilliant; completely; definitely; enjoyed; enjoyable; extremely; hilarious; memorable; outstanding; perfect; perfectly; powerful; realistic; solid; terrific; wonderful}. For the second problem, the required words of the top 20 rules are less relevant to the positive/negative polarity. Nevertheless, the associated long lists of words that must be absent contain relevant words, as do many rules covering fewer training samples than the twentieth rule.

The average predictive accuracy obtained on the RT-2k dataset is higher than that obtained on RT-s. The reason is likely that movie reviews in RT-2k are substantially longer; thus, discriminatory words are more likely to be encountered. This could also explain why the words required to be present in the top rules are less relevant for RT-s than for RT-2k.