Reinforcement Learning and Stochastic Optimization with Deep Learning-Based Forecasting on Power Grid Scheduling

Abstract

1. Introduction

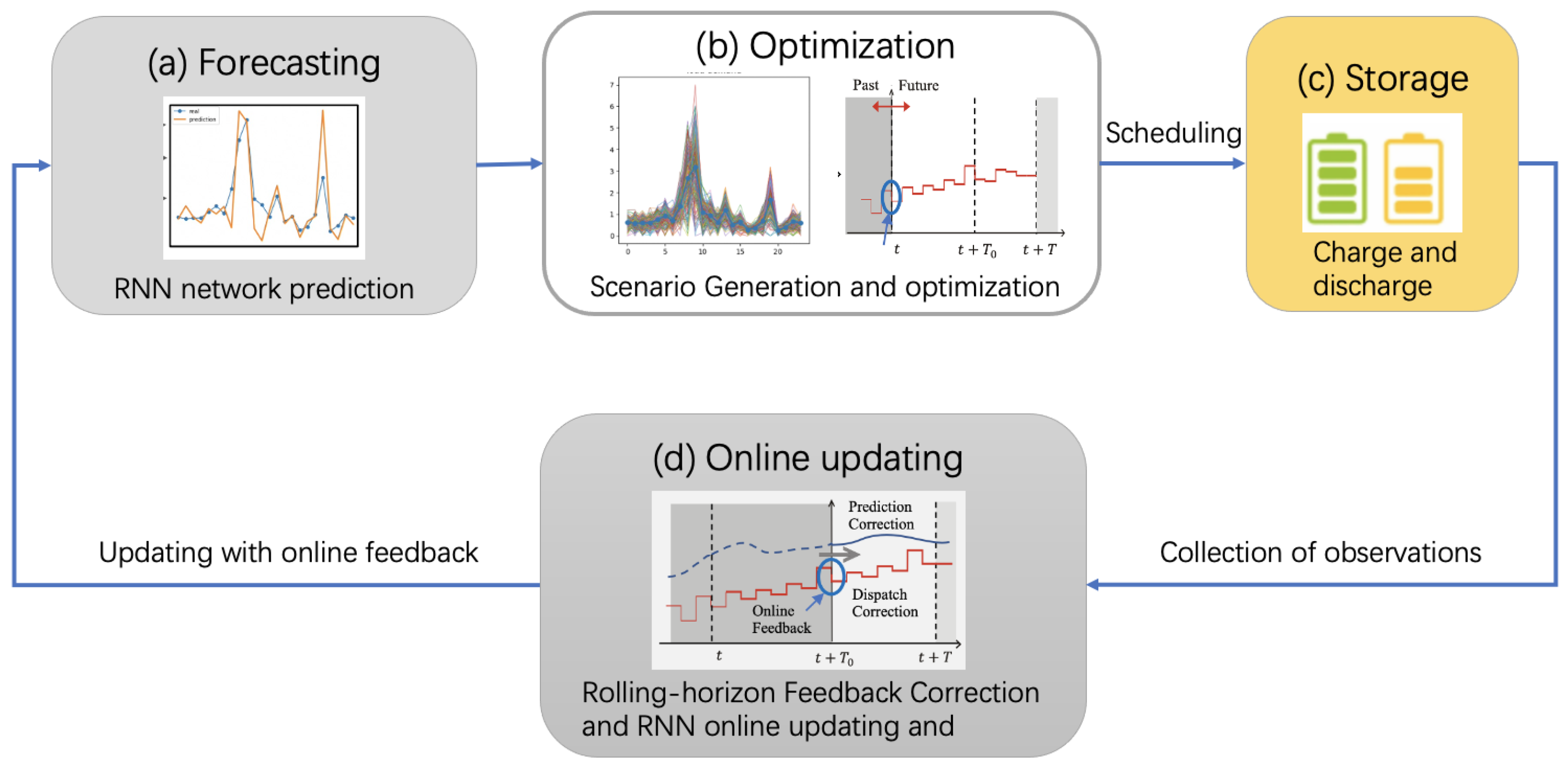

- To cope with uncertainty and achieve robust scheduling, we propose two modules. The first combines deep learning-based forecasting with stochastic optimization; the second is an online data augmentation strategy consisting of a model pre-training stage and a fine-tuning stage.

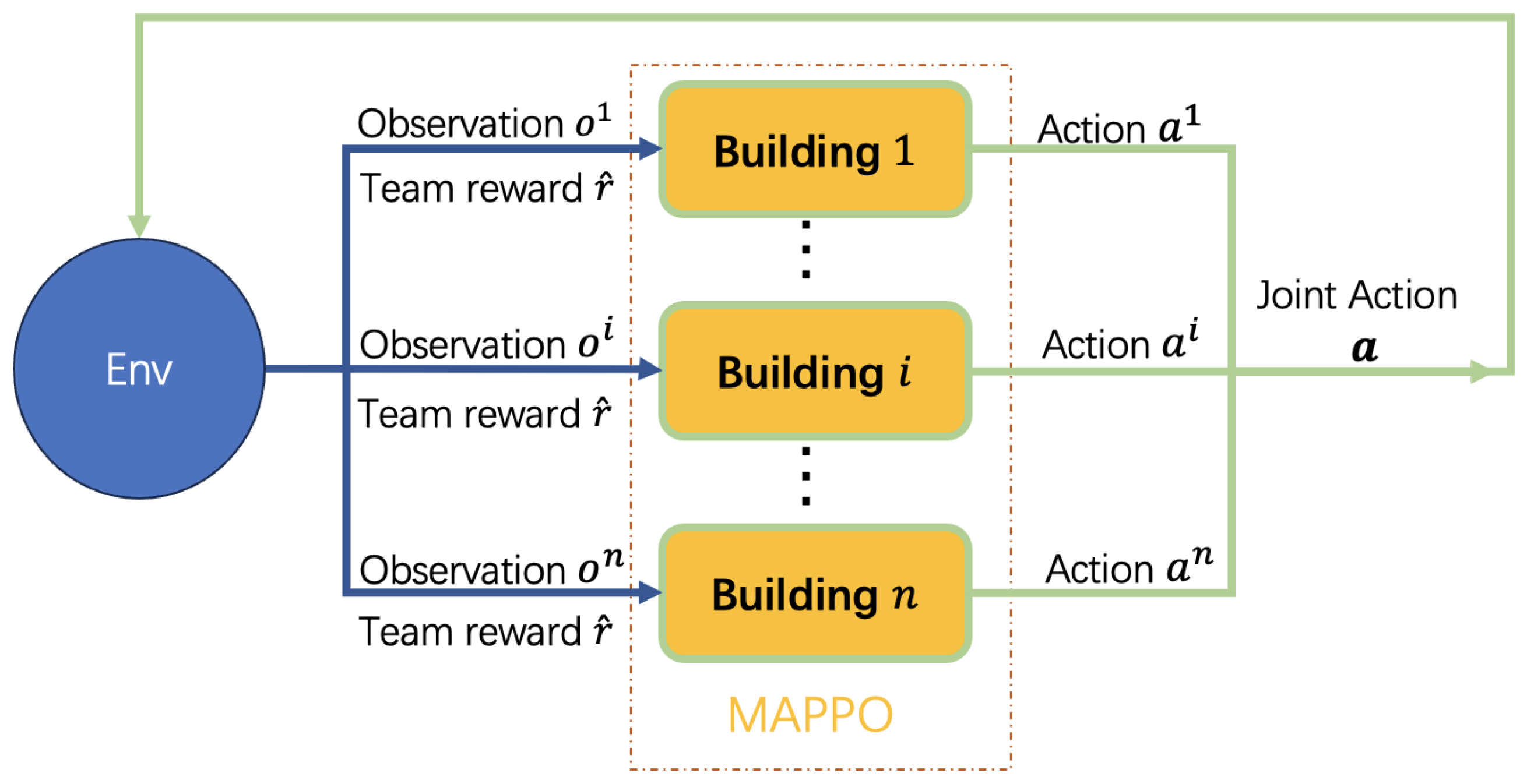

- To enable reward sharing among buildings, we model each building as an agent and train the agents with multi-agent PPO (MAPPO). We also propose an ensemble method that combines reinforcement learning and optimization.

- We conducted extensive experiments on a real-world scenario, and the results demonstrate the effectiveness of the proposed framework.
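The contributions above rely on multi-agent PPO with rewards shared among buildings. As a minimal sketch (not the authors' code), the snippet below shows two ingredients in plain Python: a hypothetical `shared_team_reward` that gives every building agent the same district-level reward (we assume the sum of per-building rewards; the exact reward is not specified in this section), and the standard PPO clipped surrogate objective of Schulman et al., averaged over a batch. In a MAPPO-style setup, advantages estimated from the shared reward would be fed to this objective for every agent.

```python
import math
from typing import List, Sequence


def shared_team_reward(building_rewards: Sequence[float]) -> List[float]:
    """Give every building agent the same district-level reward.

    Hypothetical choice: the team reward is the sum of per-building rewards.
    """
    team = sum(building_rewards)
    return [team] * len(building_rewards)


def ppo_clip_objective(
    logp_new: Sequence[float],
    logp_old: Sequence[float],
    advantages: Sequence[float],
    eps: float = 0.2,
) -> float:
    """Mean PPO clipped surrogate objective over a batch (to be maximized)."""
    total = 0.0
    for ln, lo, adv in zip(logp_new, logp_old, advantages):
        ratio = math.exp(ln - lo)                        # pi_new(a|s) / pi_old(a|s)
        clipped = min(max(ratio, 1.0 - eps), 1.0 + eps)  # clip(ratio, 1 - eps, 1 + eps)
        total += min(ratio * adv, clipped * adv)         # pessimistic (lower) bound
    return total / len(advantages)
```

When the new and old policies coincide (all log-probability differences are zero), the ratio is 1 and the objective reduces to the mean advantage; ratios above `1 + eps` stop contributing extra gain for positive advantages, which is what keeps PPO updates conservative.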

2. Problem Statement

- Constraint (2) bounds the electricity drawn from the national grid: it is bounded below by zero and has no upper bound.

- Constraint (3) keeps the output of each electricity generation device, such as solar generation, between that device's lower and upper bounds.

- Constraint (4) caps battery/storage charging at each timestamp t by an upper limit and, likewise, caps discharging by its own upper limit.

- In constraint (5), the first equation keeps the state of charge (SoC) between its lower and upper values, and the second equation describes how the SoC is updated over time.

- Equation (6) ensures grid stability: the total power generation must equal the total power consumption.
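The constraints above can be made concrete with a small stochastic dispatch sketch in the spirit of combining forecasts with stochastic optimization. The hypothetical function `stochastic_battery_schedule` takes several forecast scenarios of net load (load minus solar generation, in kWh) and chooses one battery plan, shared by all scenarios, that minimizes the expected cost of grid electricity, using dynamic programming over a discretized SoC. Assumptions not taken from the paper: equally likely scenarios, a lossless battery with a symmetric charge/discharge limit, no export to the grid (surplus solar is curtailed, so the balance in (6) still holds), and cost equal to price times imported energy. The paper's actual formulation and solver may differ.

```python
from typing import List, Sequence, Tuple


def stochastic_battery_schedule(
    net_load_scenarios: Sequence[Sequence[float]],  # [scenario][t] = load - solar (kWh)
    prices: Sequence[float],                        # price per imported kWh at step t
    n_levels: int,                                  # SoC grid: levels 0 .. n_levels - 1
    unit_kwh: float,                                # energy represented by one SoC level
    max_step: int,                                  # max levels charged/discharged per step
    soc0: int = 0,                                  # initial SoC level
) -> Tuple[List[int], float]:
    """Return (SoC level path, expected cost) for the cheapest shared battery plan."""
    horizon = len(prices)
    n_scen = len(net_load_scenarios)

    def stage_cost(t: int, i: int, j: int) -> float:
        # Battery energy at step t: positive when charging, negative when discharging.
        battery = (j - i) * unit_kwh
        # Grid import per scenario, clipped at zero as required by constraint (2).
        imports = [max(0.0, s[t] + battery) for s in net_load_scenarios]
        # Expected cost under equally likely scenarios.
        return prices[t] * sum(imports) / n_scen

    # value[t][i]: minimal expected cost from step t onward at SoC level i.
    value = [[0.0] * n_levels for _ in range(horizon + 1)]
    choice = [[0] * n_levels for _ in range(horizon)]
    for t in range(horizon - 1, -1, -1):
        for i in range(n_levels):
            best, best_j = float("inf"), i
            # Next levels respect the SoC bounds (5) and the power limits (4).
            for j in range(max(0, i - max_step), min(n_levels, i + max_step + 1)):
                cost = stage_cost(t, i, j) + value[t + 1][j]
                if cost < best:
                    best, best_j = cost, j
            value[t][i], choice[t][i] = best, best_j

    # Roll the optimal decisions forward from the initial SoC.
    path = [soc0]
    for t in range(horizon):
        path.append(choice[t][path[-1]])
    return path, value[0][soc0]
```

For example, with prices `[1, 5]`, a single scenario of net load `[2, 2]`, three SoC levels of 1 kWh each and `max_step=2`, the sketch charges fully at the cheap step and discharges at the expensive one, returning the path `[0, 2, 0]` with expected cost `4.0`. Dynamic programming is used only to keep the example dependency-free; a linear-programming solver is the more common choice at realistic sizes.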

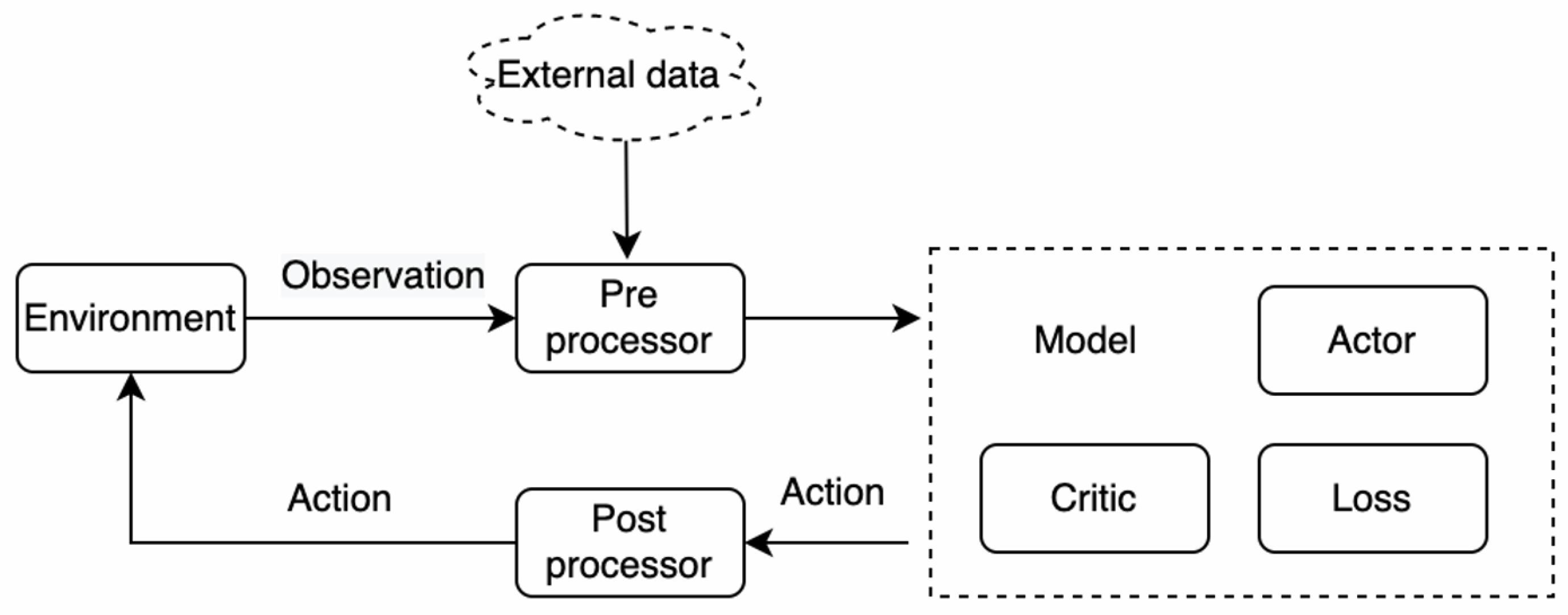

3. Framework

3.1. Feature Engineering

- Historical user loads over the past months;

- Historical electricity generation over the past months;

- Direct and diffuse solar irradiance;

- Calendar time, including the hour of the day, the day of the week, and the day of the month;

- Weather forecasts, including humidity, temperature, and other variables;

- The key components described above;

- Predicted user load and electricity generation;

- The number of solar generation units in each building;

- The efficiency and capacity of the storage in each building;

- Market prices for electricity and carbon.
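Two of the feature groups listed above, calendar time and historical loads, can be derived directly from an hourly load series. The sketch below is illustrative only: the function name `build_features`, the dictionary keys, and the default lags of 1 and 24 hours are hypothetical choices, not the paper's feature set, and the remaining groups (weather forecasts, prices, storage parameters) would be joined in the same way.

```python
from datetime import datetime, timedelta
from typing import Dict, List, Sequence


def build_features(
    timestamps: Sequence[datetime],
    loads: Sequence[float],
    lags: Sequence[int] = (1, 24),  # hypothetical lags, in hours
) -> List[Dict[str, float]]:
    """Turn an hourly load series into rows of calendar and lagged-load features."""
    rows = []
    longest = max(lags)
    for i, ts in enumerate(timestamps):
        if i < longest:
            continue  # not enough history yet for the longest lag
        row: Dict[str, float] = {
            "hour": ts.hour,               # hour of the day, 0-23
            "day_of_week": ts.weekday(),   # Monday = 0, Sunday = 6
            "day_of_month": ts.day,        # 1-31
        }
        for lag in lags:
            row[f"load_lag_{lag}"] = loads[i - lag]
        row["target"] = loads[i]           # value the forecaster should predict
        rows.append(row)
    return rows
```

With 48 hourly points starting at 2022-01-01 00:00, the first usable row is 2022-01-02 00:00 (a Sunday), whose `load_lag_24` is the load exactly one day earlier.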

3.2. Deep Learning-Based Forecasting Model

3.3. Reinforcement Learning

3.4. Optimization

3.4.1. Stochastic Optimization

3.4.2. Online Data Augmentation

Pre-Training and Fine-Tuning

Rolling-Horizon Feedback Correction

4. Experiments

4.1. Experiment Setup

4.1.1. Dataset

4.1.2. Metric

4.1.3. Baseline

- RBC: Rule-Based Control method. We tested several strategies and selected the best one: charging the battery by 10% of its capacity between 10 a.m. and 2 p.m., then discharging it by the same amount between 4 p.m. and 8 p.m.
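The RBC baseline is simple enough to state as code. This is a minimal sketch under assumptions not fixed by the text: the 10% applies to each hourly step, the windows are half-open (charging during hours 10 to 13, discharging during hours 16 to 19), and the battery is lossless with SoC clipped to [0, capacity]. The names `rbc_action` and `simulate_day` are hypothetical.

```python
from typing import List


def rbc_action(hour: int, fraction: float = 0.1) -> float:
    """Signed battery action for one hourly step, as a fraction of capacity.

    Positive values charge, negative values discharge.
    """
    if not 0 <= hour < 24:
        raise ValueError("hour must be in [0, 24)")
    if 10 <= hour < 14:   # 10 a.m. to 2 p.m.: charge
        return fraction
    if 16 <= hour < 20:   # 4 p.m. to 8 p.m.: discharge by the same amount
        return -fraction
    return 0.0


def simulate_day(capacity_kwh: float, soc_kwh: float = 0.0) -> List[float]:
    """Return the SoC (kWh) after each hour, assuming a lossless battery."""
    trajectory = []
    for hour in range(24):
        soc_kwh += rbc_action(hour) * capacity_kwh
        soc_kwh = min(capacity_kwh, max(0.0, soc_kwh))  # respect SoC bounds
        trajectory.append(soc_kwh)
    return trajectory
```

Under these assumptions, a 10 kWh battery peaks at 4 kWh (40% of capacity) at the end of the charging window and returns to empty by 8 p.m., so the rule is energy-neutral over a day.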

4.1.4. Implementations

4.2. Results

4.3. Ablation Studies

4.3.1. Analysis of Online Data Augmentation

4.3.2. Analysis of Forecasting Models

4.3.3. Analysis of Stochastic Optimization

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- Gama, J.; Žliobaitė, I.; Bifet, A.; Pechenizkiy, M.; Bouchachia, A. A survey on concept drift adaptation. ACM Comput. Surv. (CSUR) 2014, 46, 1–37.

- Elsheikh, A.H.; Saba, A.I.; Panchal, H.; Shanmugan, S.; Alsaleh, N.A.; Ahmadein, M. Artificial intelligence for forecasting the prevalence of COVID-19 pandemic: An overview. Healthcare 2021, 9, 1614.

- Torres, J.F.; Hadjout, D.; Sebaa, A.; Martínez-Álvarez, F.; Troncoso, A. Deep learning for time series forecasting: A survey. Big Data 2021, 9, 3–21.

- Lara-Benítez, P.; Carranza-García, M.; Riquelme, J.C. An experimental review on deep learning architectures for time series forecasting. Int. J. Neural Syst. 2021, 31, 2130001.

- Sina, L.B.; Secco, C.A.; Blazevic, M.; Nazemi, K. Hybrid Forecasting Methods—A Systematic Review. Electronics 2023, 12, 2019.

- Camacho, E.F.; Alba, C.B. Model Predictive Control; Springer Science & Business Media: London, UK, 2013.

- Hewing, L.; Wabersich, K.P.; Menner, M.; Zeilinger, M.N. Learning-based model predictive control: Toward safe learning in control. Annu. Rev. Control Robot. Auton. Syst. 2020, 3, 269–296.

- Muralitharan, K.; Sakthivel, R.; Vishnuvarthan, R. Neural network based optimization approach for energy demand prediction in smart grid. Neurocomputing 2018, 273, 199–208.

- Elmachtoub, A.N.; Grigas, P. Smart “predict, then optimize”. Manag. Sci. 2022, 68, 9–26.

- Lauro, F.; Longobardi, L.; Panzieri, S. An adaptive distributed predictive control strategy for temperature regulation in a multizone office building. In Proceedings of the 2014 IEEE International Workshop on Intelligent Energy Systems (IWIES), San Diego, CA, USA, 8 October 2014; pp. 32–37.

- Heirung, T.A.N.; Paulson, J.A.; O’Leary, J.; Mesbah, A. Stochastic model predictive control—How does it work? Comput. Chem. Eng. 2018, 114, 158–170.

- Yan, S.; Goulart, P.; Cannon, M. Stochastic model predictive control with discounted probabilistic constraints. In Proceedings of the 2018 European Control Conference (ECC), IEEE, Limassol, Cyprus, 12–15 June 2018; pp. 1003–1008.

- Paulson, J.A.; Buehler, E.A.; Braatz, R.D.; Mesbah, A. Stochastic model predictive control with joint chance constraints. Int. J. Control 2020, 93, 126–139.

- Shang, C.; You, F. A data-driven robust optimization approach to scenario-based stochastic model predictive control. J. Process Control 2019, 75, 24–39.

- Bradford, E.; Imsland, L.; Zhang, D.; del Rio Chanona, E.A. Stochastic data-driven model predictive control using gaussian processes. Comput. Chem. Eng. 2020, 139, 106844.

- Ioannou, P.A.; Sun, J. Robust Adaptive Control; Courier Corporation: Chelmsford, MA, USA, 2012.

- Åström, K.J.; Wittenmark, B. Adaptive Control; Courier Corporation: Chelmsford, MA, USA, 2013.

- Liu, X.; Paritosh, P.; Awalgaonkar, N.M.; Bilionis, I.; Karava, P. Model predictive control under forecast uncertainty for optimal operation of buildings with integrated solar systems. Sol. Energy 2018, 171, 953–970.

- Yu, C.; Velu, A.; Vinitsky, E.; Gao, J.; Wang, Y.; Bayen, A.; Wu, Y. The Surprising Effectiveness of PPO in Cooperative Multi-Agent Games. Adv. Neural Inf. Process. Syst. 2022, 35, 24611–24624.

- Aicrowd. NeurIPS 2022 CityLearn Challenge. Available online: https://www.aicrowd.com/challenges/neurips-2022-citylearn-challenge (accessed on 18 July 2022).

- Cho, K.; van Merriënboer, B.; Bahdanau, D.; Bengio, Y. On the Properties of Neural Machine Translation: Encoder–Decoder Approaches. In Proceedings of the SSST-8, Eighth Workshop on Syntax, Semantics and Structure in Statistical Translation, Doha, Qatar, 25 October 2014; pp. 103–111.

- Chung, J.; Gulcehre, C.; Cho, K.; Bengio, Y. Empirical evaluation of gated recurrent neural networks on sequence modeling. arXiv 2014, arXiv:1412.3555.

- Graves, A. Generating sequences with recurrent neural networks. arXiv 2013, arXiv:1308.0850.

- Schulman, J.; Wolski, F.; Dhariwal, P.; Radford, A.; Klimov, O. Proximal policy optimization algorithms. arXiv 2017, arXiv:1707.06347.

- Wurdemann, H.A.; Stilli, A.; Althoefer, K. Lecture notes in computer science: An antagonistic actuation technique for simultaneous stiffness and position control. In Proceedings of the Intelligent Robotics and Applications: 9th International Conference, ICIRA 2015, Portsmouth, UK, 24–27 August 2015; Proceedings, Part III. Springer: Cham, Switzerland, 2015; pp. 164–174.

- Sultana, W.R.; Sahoo, S.K.; Sukchai, S.; Yamuna, S.; Venkatesh, D. A review on state of art development of model predictive control for renewable energy applications. Renew. Sustain. Energy Rev. 2017, 76, 391–406.

- Ke, G.; Meng, Q.; Finley, T.; Wang, T.; Chen, W.; Ma, W.; Ye, Q.; Liu, T.Y. LightGBM: A Highly Efficient Gradient Boosting Decision Tree. In Proceedings of the 31st International Conference on Neural Information Processing Systems, NIPS’17, Red Hook, NY, USA, 4–9 December 2017; pp. 3149–3157.

- Kathirgamanathan, A.; Twardowski, K.; Mangina, E.; Finn, D.P. A Centralised Soft Actor Critic Deep Reinforcement Learning Approach to District Demand Side Management through CityLearn. In Proceedings of the 1st International Workshop on Reinforcement Learning for Energy Management in Buildings & Cities, RLEM’20, New York, NY, USA, 17 November 2020; pp. 11–14.

- Varelas, K.; Auger, A.; Brockhoff, D.; Hansen, N.; ElHara, O.A.; Semet, Y.; Kassab, R.; Barbaresco, F. A comparative study of large-scale variants of CMA-ES. In Proceedings of the Parallel Problem Solving from Nature—PPSN XV: 15th International Conference, Coimbra, Portugal, 8–12 September 2018; Proceedings, Part I 15. Springer: Cham, Switzerland, 2018; pp. 3–15.

- Vázquez-Canteli, J.R.; Kämpf, J.; Henze, G.; Nagy, Z. CityLearn v1.0: An OpenAI gym environment for demand response with deep reinforcement learning. In Proceedings of the 6th ACM International Conference on Systems for Energy-Efficient Buildings, Cities, and Transportation, New York, NY, USA, 13–14 November 2019; pp. 356–357.

- MindOpt. MindOpt Studio. Available online: https://opt.aliyun.com/platform/overview (accessed on 20 October 2022).

Overall Performance

| Methods | Average Cost | Emission | Price | Grid |
|---|---|---|---|---|
| RBC | 0.921 | 0.964 | 0.817 | 0.982 |
| MPC | 0.861 | 0.921 | 0.746 | 0.916 |
| AMPC | 0.827 | 0.859 | 0.750 | 0.872 |
| ES | 0.812 | 0.863 | 0.748 | 0.827 |
| SAC | 0.834 | 0.859 | 0.737 | 0.905 |
| MAPPO | 0.810 | 0.877 | 0.726 | 0.826 |
| Optimization | 0.804 | 0.871 | 0.719 | 0.822 |
| Ensemble | 0.801 | 0.864 | 0.718 | 0.821 |
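In the overall-performance table above, the Average Cost column is consistent, to within rounding of the last digit, with the arithmetic mean of the Emission, Price, and Grid columns. The short check below recomputes that mean from the reported values and identifies the method with the lowest average; it assumes lower values are better, which matches the Ensemble row having the smallest numbers. `RESULTS` and `average_cost` are illustrative names.

```python
# (emission, price, grid, reported average cost), copied from the table above.
RESULTS = {
    "RBC": (0.964, 0.817, 0.982, 0.921),
    "MPC": (0.921, 0.746, 0.916, 0.861),
    "AMPC": (0.859, 0.750, 0.872, 0.827),
    "ES": (0.863, 0.748, 0.827, 0.812),
    "SAC": (0.859, 0.737, 0.905, 0.834),
    "MAPPO": (0.877, 0.726, 0.826, 0.810),
    "Optimization": (0.871, 0.719, 0.822, 0.804),
    "Ensemble": (0.864, 0.718, 0.821, 0.801),
}


def average_cost(emission: float, price: float, grid: float) -> float:
    """Unweighted mean of the three cost components."""
    return (emission + price + grid) / 3.0


for method, (emission, price, grid, reported) in RESULTS.items():
    recomputed = average_cost(emission, price, grid)
    print(f"{method:<12} reported={reported:.3f} recomputed={recomputed:.4f}")

# Method with the lowest reported average cost.
best = min(RESULTS, key=lambda m: RESULTS[m][3])
print("lowest average cost:", best)
```

The largest discrepancy is for ES (0.8127 recomputed versus 0.812 reported), which is explained by the component values themselves being rounded to three decimals.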

| Forecast Model | Online Update | Dispatch Average | Dispatch Time | Load WMAPE | Solar WMAPE |
|---|---|---|---|---|---|
| Linear | ✗ | 0.878 | 8 s | 42.1% | 27.3% |
| GBDT | ✗ | 0.875 | 8 s | 44.7% | 10.7% |
| RNN | ✗ | 0.876 | 9 s | 46.0% | 10.7% |
| Transformer | ✗ | 0.879 | 11 s | 45.3% | 10.6% |
| Linear | ✓ Self-Adaptive Linear Correction | 0.871 | 8 s | 39.4% | 21.2% |
| GBDT | ✓ Self-Adaptive Linear Correction | 0.868 | 9 s | 39.5% | 9.4% |
| RNN | ✓ Self-Adaptive Linear Correction | 0.866 | 10 s | 39.3% | 9.3% |
| Transformer | ✓ Self-Adaptive Linear Correction | 0.869 | 11 s | 39.9% | 9.1% |
| RNN | ✓ Online Fine-tuning | 0.862 | 11 s | 39.0% | 9.0% |
| Transformer | ✓ Online Fine-tuning | 0.864 | 12 s | 39.3% | 9.1% |
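The forecasting table above reports WMAPE for load and solar. A common definition is WMAPE = Σ|y − ŷ| / Σ|y|, i.e. absolute errors weighted by the magnitude of the actual values; we assume that definition here. The table does not spell out how the self-adaptive linear correction works, so the sketch below uses one plausible stand-in: an affine correction `actual ≈ a * forecast + b` refit by ordinary least squares on a recent window of observations and applied to new forecasts. The function names are hypothetical.

```python
from typing import List, Sequence, Tuple


def wmape(actual: Sequence[float], forecast: Sequence[float]) -> float:
    """Weighted MAPE: sum of absolute errors divided by sum of absolute actuals."""
    denom = sum(abs(y) for y in actual)
    if denom == 0:
        raise ValueError("WMAPE is undefined when all actual values are zero")
    return sum(abs(y - f) for y, f in zip(actual, forecast)) / denom


def fit_linear_correction(
    forecast: Sequence[float], actual: Sequence[float]
) -> Tuple[float, float]:
    """Least-squares fit of actual ~ a * forecast + b on a recent window."""
    n = len(forecast)
    mx = sum(forecast) / n
    my = sum(actual) / n
    var = sum((x - mx) ** 2 for x in forecast)
    if var == 0:
        return 1.0, my - mx  # constant forecasts: correct only the bias
    cov = sum((x - mx) * (y - my) for x, y in zip(forecast, actual))
    a = cov / var
    return a, my - a * mx


def apply_correction(forecast: Sequence[float], a: float, b: float) -> List[float]:
    """Apply the fitted affine correction to new forecasts."""
    return [a * x + b for x in forecast]
```

In a rolling setting, the coefficients would be refit each time new observations arrive, so a forecaster with a systematic bias (for instance one that predicts half of the true load) is corrected after a single window.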


© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Yang, C.; Zhang, J.; Jiang, W.; Wang, L.; Zhang, H.; Yi, Z.; Lin, F. Reinforcement Learning and Stochastic Optimization with Deep Learning-Based Forecasting on Power Grid Scheduling. Processes 2023, 11, 3188. https://doi.org/10.3390/pr11113188
