1. Introduction

Visual tracking can be considered as finding the minimum distance in feature space between the current position of the tracked object and the subspace represented by previously stored data or previous tracking results. For a long time, visual tracking systems employed hand-crafted similarity metrics for this purpose, such as the Euclidean distance [1], the Matusita metric [2], the Bhattacharyya coefficient [3], the Kullback–Leibler divergence [4], and other information-theoretic divergences [5].

Visual tracking has seen tremendous progress in recent years in robotics and monitoring applications. It must cope with noise, clutter, occlusion, illumination changes, and viewpoint changes (e.g., in mobile or aerial robotics). There have been numerous attempts to design and deploy a robust tracking method; however, the problem remains open. Since the seminal work of Bolme et al. [6], correlation-based filters have enjoyed immense popularity in the tracking community, and recent developments in Correlation Filter Trackers (CFTs) [7] have accelerated progress in visual object tracking. Despite their impressive performance, discriminative learning trackers such as CFTs still have several limitations. Firstly, they tend to drift when tracking over long periods. This drift is more pronounced in dynamic environments and when tracking multiple targets, which degrades their accuracy. Secondly, in such discriminative learning methods, negative samples are as important as positive samples. In fact, training with a larger number of negative samples (image patches from different locations and scales) has been found to be highly advantageous for training a tracking algorithm. However, more samples mean a higher computational burden, which conflicts with the time-sensitive nature of trackers, whereas limiting the number of samples sacrifices performance. There is therefore a tradeoff between accuracy and speed.

The Kernelized Correlation Filter (KCF) [8] addresses this tradeoff: it handles thousands of samples while keeping the computational load low by exploiting the kernel trick and, most importantly, the properties of circulant matrices. Circulant matrices also let us bring in the toolset of classical signal processing, as correlation filters do, by working in the Fourier domain, which makes training much faster.

Despite the efficiency gains brought by KCF, the number and variety of datasets available for studying real-time performance remain inadequate. Most experiments conducted on state-of-the-art trackers (TDAM [9], MDP [10], DP-NMS [11], and NOMT [12]) were carried out on sample videos. This matters because, in real-life robotic monitoring and surveillance, factors such as environmental changes mean that the accuracy reported for online trackers may not reflect real-time scenarios. The limitations include validation on only a few datasets (example videos) or on short videos. To compensate for the lack of datasets for the scenarios of interest, we collected our own dataset in real time using the Microsoft Kinect sensor; it reflects real-time scenarios in different settings. We refer readers to comprehensive reviews of visual tracking datasets in [13,14,15] and, specifically, of correlation filters with the kernel trick in [7].

In this paper, we study the key components that contribute to the design of KCF, give a detailed overview of its working principles, and evaluate its performance under various challenging scenarios: variations in illumination and appearance, changes in viewpoint, occlusions, the speed of the subject (slow, medium, or fast), the type of crowd (a single target with no crowd, and a single target within a crowd of 10–15 people), the position of the camera, etc. We chose these factors because they are among the most challenging that the field of visual tracking faces. The experiments were performed with an RGB-D sensor in a live, open environment, a public hallway of a university with students as subjects, to replicate real-time scenarios. The tracking duration varies from 10 s to 2–4 min to allow enough time to observe the behavior of the tracker. Together, these choices make the surrounding environment as diverse as possible. We also compare the performance of KCF with other state-of-the-art trackers evaluated on OTB-50, VOT 2015, and VOT 2019.

The remainder of the paper is organized as follows. Section 2 gives a general overview of the KCF tracker and its key practical properties. Section 3 provides an analytical description of the method. Section 4 presents the KCF pipeline and details the dataset used. Section 5 presents the results of our experimental studies across various scenarios in terms of precision curves and other metrics. Section 6 compares the performance of KCF with other state-of-the-art trackers evaluated on OTB-50, VOT 2015, and VOT 2019. Finally, Section 7 presents concluding remarks.

3. Mathematical Exposition of KCF

KCF is based on the discriminative approach, which formulates tracking as a binary classification task and distinguishes the target from the background with a discriminative classifier [17]. Modern frameworks for recognition and classification problems in computer vision are usually built on a learning algorithm. The objective is to learn, from a given set of examples, a function $f(\mathbf{z}) = \mathbf{w}^{T}\mathbf{z}$ that can classify an unseen image patch drawn from an arbitrary distribution within a certain error bound. One such linear discriminator is Linear Ridge Regression (LRR). Mathematically, it is defined as:

$$\min_{\mathbf{w}} \sum_{i} \left( f(\mathbf{x}_i) - y_i \right)^{2} + \lambda \lVert \mathbf{w} \rVert^{2} \tag{1}$$

where $\mathbf{x}_i$ is the input sample (here, an image feature vector), $\mathbf{w}$ is the weight vector, $y_i$ is the true value/ground truth (obtained by observation or measurement), $\left( f(\mathbf{x}_i) - y_i \right)^{2}$ is the squared error between the actual and predicted values, and $\lambda \lVert \mathbf{w} \rVert^{2}$ is a squared-norm regularizer whose parameter $\lambda$ prevents overfitting. In simple words, while the weight vector $\mathbf{w}$ is chosen to minimize the squared model error, the regularizer $\lambda$ keeps its norm bounded.

To make the mathematical representations clearer, we adopt the following notation. $\mathbf{x}$ and $\mathbf{z}$ are used as independent variables. The transpose of $\mathbf{x}$ is denoted by $\mathbf{x}^{T}$. Bold fonts denote vectors; for example, $\mathbf{x} = [x_1, x_2, \ldots, x_n]^{T}$ represents a concatenated vector whose entries are $x_i$, with integer index $i \in \mathbb{Z}$.

Traditionally, learning research has focused on positive samples (samples that are similar to the target object); however, negative samples (samples that do not resemble the target object) have been found to be equally important in discriminative learning. Hence, the more negative samples are available, the better the discriminative power of the learning algorithm tends to be, and using more features (and, as a result, more negative samples) benefits the learning algorithm. For instance, although the raw pixels of an image are good features in themselves, features such as HOG can be advantageous because they provide more data (features). Although a large number of samples improves accuracy, it can also create a bottleneck in terms of complexity and speed.

The Kernelized Correlation Filter tracker uses a large number of negative samples (high-dimensional data) at reduced complexity. It achieves this through (a) learning in the dual space, which handles high-dimensional data with the kernel trick, and (b) the diagonalization of circulant matrices in the Fourier domain. These two factors make the tracking algorithm computationally inexpensive and fast, respectively. We discuss circulant matrices, the kernel trick, and the Fourier domain in more detail below.

The KCF algorithm exploits this circulant structure in the learning algorithm in using a sample 2D image patch of the target and generating additional samples (using all the possible translations of it, leading to a circulant structure). This results in several virtual negative samples, which can be used in the learning stage to make our discriminative classifier efficient. For example, in 2D images, we obtain circulant blocks using a similar concept of circulant blocks. Let us assume we have a 2D image represented as a data matrix in the following form:

where

represents the image features. Now, construct a matrix

that has four rows of

denoted by

as below:

where

defines a function that can generate circulant values of the input. More specifically, we can write it as:

We can now construct a

circulant matrix as:

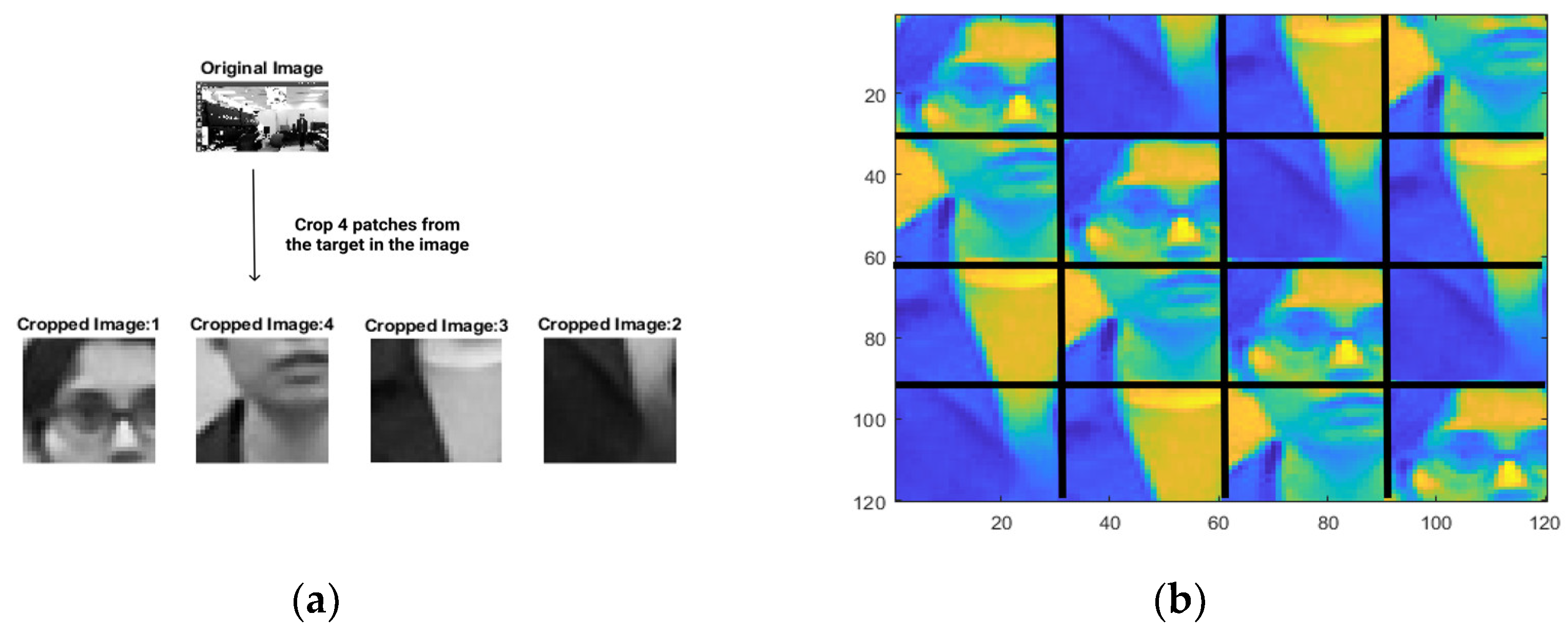

This structure of X is called the Block-Circulant Circulant Matrix (BCCM), i.e., a matrix that is circulant at the block level, composed of blocks that are circulant themselves. Visualization of a BCCM on a sample 2D image patch of the target taken from a Kinect RGB camera can be seen in

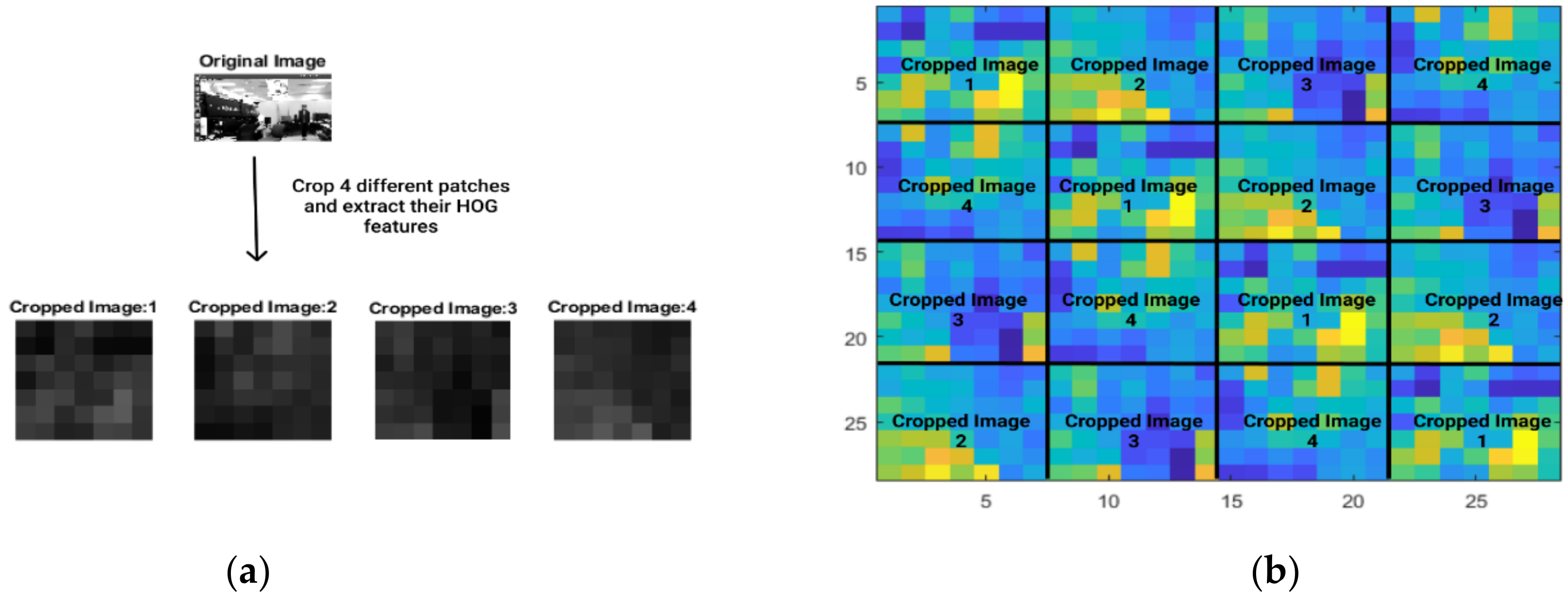

Figure 1. Similar visualization can be performed on features, such as HOG features, of the target image patch we used in our visual tracking experiment, as shown in

Figure 2.
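As a quick illustration of the circulant construction, the following NumPy sketch builds $C(\mathbf{x})$ with `np.roll` and a BCCM from a small 2D patch. The helper names `circulant` and `bccm` are hypothetical, and ordering the shifts row-major (vertical shift in the outer loop) is an assumption chosen so that the block layout matches a BCCM.

```python
import numpy as np

def circulant(x):
    """C(x): row i is x cyclically shifted right by i elements."""
    x = np.asarray(x)
    return np.stack([np.roll(x, i) for i in range(x.size)])

def bccm(patch):
    """Block-circulant matrix with circulant blocks (BCCM) for a 2D patch.

    Row (r * cols + c) is the patch cyclically shifted by r rows and c columns,
    flattened row-major, so every row is one virtual training sample.
    """
    patch = np.asarray(patch)
    rows, cols = patch.shape
    return np.stack([np.roll(patch, (r, c), axis=(0, 1)).ravel()
                     for r in range(rows) for c in range(cols)])

x1 = np.array([1, 2, 3, 4])
print(circulant(x1))
# [[1 2 3 4]
#  [4 1 2 3]
#  [3 4 1 2]
#  [2 3 4 1]]

patch = np.arange(16).reshape(4, 4)   # stand-in for a 4 x 4 feature patch
X = bccm(patch)
print(X.shape)  # (16, 16)
```

Each 4 × 4 block of `X` is itself the circulant matrix of one row of the patch, and the blocks repeat in a circulant pattern, so a 4 × 4 patch yields 16 virtual samples.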

Since (a) a large amount of data benefits our learning algorithm and (b) translating samples cyclically creates a circulant structure over a large amount of data, we can generate and exploit the circulant structure of HOG features to our advantage in visual tracking. Hence, we can now define $\mathbf{X}$ as very high-dimensional data representing all possible translations of the base sample's HOG features (for a 1D signal) or of the base patch's HOG features (for a 2D image). Such a data matrix of shifted translations is relevant for a learning algorithm because it represents the various ways (the distribution of inputs) in which samples can be encountered.

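With the features in hand, we can apply a learning algorithm, e.g., linear ridge regression (LRR, Equation (1)). The goal is to find a weight vector $\mathbf{w}$ that classifies the data. Mathematically, the optimal LRR solution is given by:

$$\mathbf{w} = \left( \mathbf{X}^{T}\mathbf{X} + \lambda \mathbf{I} \right)^{-1} \mathbf{X}^{T}\mathbf{y} \tag{6}$$

where $\mathbf{X}$ is the data matrix (also called the design matrix), whose rows are the samples $\mathbf{x}_i$, and $\mathbf{y}$ is the vector of targets $y_i$. In our case, the data matrix is the circulant matrix. Equation (6) requires the inverse of $\mathbf{X}^{T}\mathbf{X} + \lambda \mathbf{I}$, which is an $m \times m$ matrix, where $m$ is the number of features. In most cases $m \gg n$, where $n$ is the number of samples, i.e., the feature space is very large, which makes the computation very expensive. This problem can be addressed by solving linear ridge regression in the dual space [18], as explained in Appendix A. Mathematically, the dual solution of linear ridge regression can be written as:

$$\boldsymbol{\alpha} = \left( \mathbf{X}\mathbf{X}^{T} + \lambda \mathbf{I} \right)^{-1} \mathbf{y}, \qquad \mathbf{w} = \mathbf{X}^{T}\boldsymbol{\alpha} = \sum_{i} \alpha_i \mathbf{x}_i \tag{7}$$

where $\boldsymbol{\alpha}$ is the vector of coefficients that defines the solution. Here, $\mathbf{G} = \mathbf{X}\mathbf{X}^{T}$ is called the Gram matrix, which will be useful later. In general, for any pair of samples $\mathbf{x}_i$ and $\mathbf{x}_j$, the entries of the Gram matrix can be written as:

$$G_{ij} = \mathbf{x}_i^{T}\mathbf{x}_j \tag{8}$$

Hence, for $\mathbf{X}$ of size $n \times m$, where $n$ is the number of examples (samples) and $m$ is the number of features, the dual space requires inverting an $n \times n$ matrix instead of the $m \times m$ matrix of the primal case. This is advantageous when $m \gg n$.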
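The equivalence of the primal solution (Equation (6)) and the dual solution can be checked numerically. This is a minimal NumPy sketch on random data (an assumption; it is not the HOG data used by the tracker), and `ridge_primal` and `ridge_dual` are illustrative names. With $m \gg n$, the dual solves a $20 \times 20$ system instead of a $500 \times 500$ one.

```python
import numpy as np

def ridge_primal(X, y, lam):
    """w = (X^T X + lam I)^{-1} X^T y: solves an m x m system (m = features)."""
    m = X.shape[1]
    return np.linalg.solve(X.T @ X + lam * np.eye(m), X.T @ y)

def ridge_dual(X, y, lam):
    """alpha = (X X^T + lam I)^{-1} y: solves an n x n system (n = samples); w = X^T alpha."""
    n = X.shape[0]
    G = X @ X.T                          # Gram matrix, G[i, j] = x_i^T x_j
    alpha = np.linalg.solve(G + lam * np.eye(n), y)
    return alpha, X.T @ alpha

rng = np.random.default_rng(0)
n, m = 20, 500                           # few samples, many features (m >> n)
X = rng.standard_normal((n, m))
y = rng.standard_normal(n)

w_primal = ridge_primal(X, y, lam=0.1)
alpha, w_dual = ridge_dual(X, y, lam=0.1)
print(np.allclose(w_primal, w_dual))     # True
```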

In the linear learning model (i.e., dual LRR), the objective is to find a hyperplane that linearly separates the training data. However, data in our preferred feature space, HOG, are generally not linearly separable. One way to resolve this would be to use non-linear decision surfaces (i.e., higher-order surfaces), but this is impractical compared with a simple linear classifier. A more practical route is to transform the data so that a linear classifier suffices; the kernel trick [19] is a powerful tool for this, with efficient applications in correlation filter-based tracking [18,20]. The kernel trick lets us perform computations in a non-linear feature space without explicitly instantiating vectors in that space.

In many cases, data points that are not linearly separable become linearly separable once they are mapped to a higher-dimensional feature space $\mathcal{F}$ by some transformation $\varphi$. For any two training data points $\mathbf{x}_i$ and $\mathbf{x}_j$, suppose we can find a function $\kappa$ (called a kernel function) such that:

$$\kappa(\mathbf{x}_i, \mathbf{x}_j) = \left\langle \varphi(\mathbf{x}_i), \varphi(\mathbf{x}_j) \right\rangle \tag{9}$$

where $\langle \cdot, \cdot \rangle$ is the dot product. Then we can work with the mapped data, which are linearly separable in the new feature space $\mathcal{F}$, using only evaluations of $\kappa$. Functions $\kappa$ that satisfy this property are called kernel functions.
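A minimal sketch of the kernel trick, using the homogeneous degree-2 polynomial kernel on 2D inputs. This kernel is chosen only because its feature map $\varphi$ is small enough to write out; KCF itself uses the Gaussian kernel. The function names are illustrative.

```python
import numpy as np

def poly2_kernel(x, xp):
    """Homogeneous polynomial kernel of degree 2: k(x, x') = (x^T x')^2."""
    return float(np.dot(x, xp)) ** 2

def poly2_features(x):
    """Explicit feature map phi(x) = (x1^2, sqrt(2) x1 x2, x2^2) for 2D inputs."""
    x1, x2 = x
    return np.array([x1 ** 2, np.sqrt(2.0) * x1 * x2, x2 ** 2])

x = np.array([1.0, 2.0])
xp = np.array([3.0, -1.0])
# Same quantity two ways: kernel in input space vs. dot product in feature space
print(poly2_kernel(x, xp), poly2_features(x) @ poly2_features(xp))

def circle_score(p):
    """A linear function in feature space: phi_1 + phi_3 - 1 = x1^2 + x2^2 - 1."""
    return poly2_features(p) @ np.array([1.0, 0.0, 1.0]) - 1.0

# Points inside vs. outside the unit circle cannot be split by a line in R^2,
# but the hyperplane circle_score = 0 separates them in feature space.
inside = np.array([[0.1, 0.2], [-0.3, 0.4]])
outside = np.array([[1.5, 0.0], [-1.0, 1.2]])
print([bool(circle_score(p) < 0) for p in inside],
      [bool(circle_score(p) > 0) for p in outside])
```

The kernel evaluation never builds $\varphi(\mathbf{x})$, yet it returns the same dot product; this is what lets KCF work in very high-dimensional spaces.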

A kernel function lets us evaluate the dot product in the lower-dimensional original data space without transforming the data to the higher-dimensional feature space, while still benefiting from linearity in the transformed high-dimensional feature space (mapped by $\varphi$). This is called the kernel trick. There are various types of kernels, such as linear, polynomial, and Gaussian kernels. Understanding the kernel trick helps clarify its applications in the KCF tracker. In our explanation, we use Gaussian kernels because they implicitly map data into an infinite-dimensional feature space.

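If we can map our current features to a high-dimensional feature space $\mathcal{F}$ using a mapping $\varphi$ associated with a kernel function $\kappa$, we can use standard machine-learning techniques such as linear ridge regression. For simplicity, let the original data points be denoted by $\mathbf{x}_i \in \mathbb{R}^{d}$; we call $\mathbb{R}^{d}$ the input space. Assume that, for some kernel function $\kappa$, the data points are transformed from the input space to a higher-dimensional feature space $\mathcal{F}$ by $\varphi: \mathbb{R}^{d} \rightarrow \mathcal{F}$ such that $\kappa(\mathbf{x}_i, \mathbf{x}_j) = \langle \varphi(\mathbf{x}_i), \varphi(\mathbf{x}_j) \rangle$. We can then write the dual solution using the kernel function $\kappa$ as:

$$\boldsymbol{\alpha} = \left( \mathbf{K} + \lambda \mathbf{I} \right)^{-1} \mathbf{y} \tag{10}$$

where $K_{ij} = \kappa(\mathbf{x}_i, \mathbf{x}_j)$. Here, $\mathbf{K}$ is called the kernel matrix (the counterpart of the Gram matrix $\mathbf{G} = \mathbf{X}\mathbf{X}^{T}$ in the linear case).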
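The sketch below builds the Gaussian kernel matrix over all cyclic shifts of a random 1D sample, checks that it is circulant, and solves the dual problem $\boldsymbol{\alpha} = (\mathbf{K} + \lambda\mathbf{I})^{-1}\mathbf{y}$ directly. The bandwidth `sigma`, the regularizer `lam`, the random sample, and the Gaussian-shaped labels are illustrative assumptions, and the helper names are hypothetical.

```python
import numpy as np

def gaussian_kernel(a, b, sigma):
    """k(a, b) = exp(-||a - b||^2 / (2 sigma^2))."""
    return np.exp(-np.sum((a - b) ** 2) / (2.0 * sigma ** 2))

def kernel_matrix(samples, sigma):
    """K[i, j] = k(samples[i], samples[j])."""
    n = len(samples)
    return np.array([[gaussian_kernel(samples[i], samples[j], sigma) for j in range(n)]
                     for i in range(n)])

n, sigma, lam = 8, 2.0, 1e-2
rng = np.random.default_rng(1)
x = rng.standard_normal(n)
shifts = np.stack([np.roll(x, i) for i in range(n)])   # all cyclic shifts of x
K = kernel_matrix(shifts, sigma)

# K is circulant: row i is row 0 cyclically shifted by i
print(all(np.allclose(K[i], np.roll(K[0], i)) for i in range(n)))   # True

# Dual solution with a label that peaks at the unshifted sample (shift 0)
dist = np.minimum(np.arange(n), n - np.arange(n))      # cyclic distance from shift 0
y = np.exp(-0.5 * dist ** 2)
alpha = np.linalg.solve(K + lam * np.eye(n), y)
```

Because $\mathbf{K}$ is fully determined by its first row, it never has to be stored or inverted as a dense matrix, which is what the Fourier-domain derivation exploits.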

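For unitarily invariant kernels, such as the Gaussian kernel, $\mathbf{K}$ is circulant when the samples are all cyclic shifts of a base sample [8]. We keep referring to the high-dimensional feature space $\mathcal{F}$ induced by $\varphi$, yet we have not stated what these features are. For Gaussian kernels, the explicit features can be obtained from the Taylor expansion [21] of the Gaussian kernel function as follows:

$$\kappa(\mathbf{x}, \mathbf{x}') = e^{-\frac{\lVert \mathbf{x} - \mathbf{x}' \rVert^{2}}{2\sigma^{2}}} = \sum_{k=0}^{\infty} \sum_{\mathbf{j} \in \{1, \ldots, d\}^{k}} \varphi_{k,\mathbf{j}}(\mathbf{x})\, \varphi_{k,\mathbf{j}}(\mathbf{x}'), \qquad \varphi_{k,\mathbf{j}}(\mathbf{x}) = e^{-\frac{\lVert \mathbf{x} \rVert^{2}}{2\sigma^{2}}} \frac{1}{\sigma^{k}\sqrt{k!}} \prod_{i=1}^{k} x_{j_i} \tag{11}$$

where $k$ and $\mathbf{j} = (j_1, \ldots, j_k)$ enumerate over all selections of $k$ coordinates of $\mathbf{x}$. Since $k$ is unbounded, this feature space is infinite-dimensional. Now, in KCF, the learned function $f$ for a sample $\mathbf{z}$ is given by:

$$f(\mathbf{z}) = \mathbf{w}^{T}\varphi(\mathbf{z}) = \sum_{i=1}^{n} \alpha_i\, \kappa(\mathbf{z}, \mathbf{x}_i) \tag{12}$$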
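The explicit Taylor features of the Gaussian kernel can be checked numerically: truncating the series at order $k$ gives a finite feature vector whose dot product approaches the exact kernel value as $k$ grows, while the number of features grows as $1 + d + \dots + d^{k}$. This NumPy sketch assumes small 3D inputs and $\sigma = 1$ for illustration; `taylor_features` is a hypothetical helper.

```python
import itertools
import math

import numpy as np

def taylor_features(x, sigma, max_order):
    """Truncated explicit feature map of the Gaussian kernel (Taylor expansion).

    phi_{k,j}(x) = exp(-||x||^2 / (2 sigma^2)) / (sigma^k sqrt(k!)) * prod_i x[j_i],
    for k = 0..max_order and every ordered selection j of k coordinates of x.
    """
    x = np.asarray(x, dtype=float)
    scale = math.exp(-float(x @ x) / (2.0 * sigma ** 2))
    feats = []
    for k in range(max_order + 1):
        coef = scale / (sigma ** k * math.sqrt(math.factorial(k)))
        for j in itertools.product(range(x.size), repeat=k):
            feats.append(coef * np.prod(x[list(j)]))   # empty product = 1 for k = 0
    return np.array(feats)

x = np.array([0.3, -0.2, 0.5])
xp = np.array([0.1, 0.4, -0.3])
sigma = 1.0
exact = math.exp(-float(np.sum((x - xp) ** 2)) / (2.0 * sigma ** 2))

for order in (1, 3, 8):
    phi, phip = taylor_features(x, sigma, order), taylor_features(xp, sigma, order)
    # feature count and absolute error of the truncated dot product
    print(order, phi.size, abs(phi @ phip - exact))
```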

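To evaluate this learned function, we need $\boldsymbol{\alpha}$. It can be computed using the relation [8] between the circulant matrix $\mathbf{X} = C(\mathbf{x})$ (see Equations (2) to (5) for details of the circulant matrix) and its base sample $\mathbf{x}$:

$$\mathbf{X} = \mathbf{F}\, \operatorname{diag}(\hat{\mathbf{x}})\, \mathbf{F}^{H} \tag{13}$$

where $\hat{\mathbf{x}}$ denotes the discrete Fourier transform (DFT) of $\mathbf{x}$, $\mathbf{F}$ is the constant DFT matrix (the Fourier transform), and its Hermitian transpose $\mathbf{F}^{H}$ is the inverse Fourier transform. Because the kernel matrix is also circulant, $\mathbf{K} = C(\mathbf{k}^{\mathbf{x}\mathbf{x}})$ with first row $\mathbf{k}^{\mathbf{x}\mathbf{x}}$, combining this diagonalization with the dual solution for $\boldsymbol{\alpha}$ (the concept is similar in the linear case) gives:

$$\boldsymbol{\alpha} = \mathbf{F}\, \operatorname{diag}\!\left( \hat{\mathbf{k}}^{\mathbf{x}\mathbf{x}} + \lambda \right)^{-1} \mathbf{F}^{H} \mathbf{y} \tag{14}$$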

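From the right-hand side of the above equation, we can see that several computations take place in the higher-dimensional space. These computations become very fast once they are reduced to element-wise products, which is exactly what the discrete Fourier transform (DFT) provides. Taking the DFT of both sides,

$$\hat{\boldsymbol{\alpha}} = \frac{\hat{\mathbf{y}}}{\hat{\mathbf{k}}^{\mathbf{x}\mathbf{x}} + \lambda} \tag{15}$$

where $\mathbf{k}^{\mathbf{x}\mathbf{x}}$ is the first row of the kernel matrix $\mathbf{K} = C(\mathbf{k}^{\mathbf{x}\mathbf{x}})$, a hat ($\hat{\cdot}$) denotes the DFT of a vector, and the division is element-wise. Thus $\hat{\boldsymbol{\alpha}}$ can be computed cheaply, and $\boldsymbol{\alpha}$ is obtained as the inverse DFT of $\hat{\boldsymbol{\alpha}}$.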
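A small NumPy check of the Fourier shortcut, under illustrative assumptions (a random 1D base sample, a Gaussian kernel with $\sigma = 3$, $\lambda = 10^{-3}$, and a hypothetical helper `circulant`). It verifies that the circulant matrix is diagonalized by the unitary DFT matrix and that the element-wise solution in the Fourier domain matches the direct solve of $(\mathbf{K} + \lambda\mathbf{I})\boldsymbol{\alpha} = \mathbf{y}$.

```python
import numpy as np

def circulant(u):
    """C(u): row i is u cyclically shifted right by i, so C(u)[i, j] = u[(j - i) % n]."""
    return np.stack([np.roll(u, i) for i in range(len(u))])

n, sigma, lam = 16, 3.0, 1e-3
rng = np.random.default_rng(3)
x = rng.standard_normal(n)

# 1) Diagonalization: C(x) = F diag(x_hat) F^H with the unitary DFT matrix F
r, c = np.indices((n, n))
F = np.exp(-2j * np.pi * r * c / n) / np.sqrt(n)
print(np.allclose(circulant(x), F @ np.diag(np.fft.fft(x)) @ F.conj().T))   # True

# 2) Training: first row of K for the Gaussian kernel, k_xx[m] = k(x, roll(x, m))
k_xx = np.array([np.exp(-np.sum((x - np.roll(x, m)) ** 2) / (2 * sigma ** 2))
                 for m in range(n)])
dist = np.minimum(np.arange(n), n - np.arange(n))
y = np.exp(-0.5 * (dist / 1.5) ** 2)               # label peaking at zero shift

alpha_direct = np.linalg.solve(circulant(k_xx) + lam * np.eye(n), y)   # O(n^3)
alpha_hat = np.fft.fft(y) / (np.fft.fft(k_xx) + lam)                   # element-wise
alpha_fft = np.real(np.fft.ifft(alpha_hat))                            # O(n log n)
print(np.allclose(alpha_direct, alpha_fft))   # True
```

Only the $n$-element vector `k_xx` is needed for training; the dense matrices here exist purely to confirm the identity.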

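From the learned function $f(\mathbf{z})$ of kernelized ridge regression given above, we can finally evaluate $f(\mathbf{z})$ at many image locations at once, i.e., for all cyclic shifts of a candidate patch $\mathbf{z}$:

$$f(\mathbf{z}) = \left( \mathbf{K}^{\mathbf{z}} \right)^{T} \boldsymbol{\alpha} \tag{16}$$

where $\mathbf{K}^{\mathbf{z}} = C(\mathbf{k}^{\mathbf{x}\mathbf{z}})$ is the kernel matrix between all training samples (cyclic shifts of $\mathbf{x}$) and all candidate patches (cyclic shifts of $\mathbf{z}$), and $\mathbf{k}^{\mathbf{x}\mathbf{z}}$ is its first row. Hence, only the first row of the kernel matrix is needed for the computation. This is one of the main components that makes KCF much cheaper, $O(n \log n)$ with the FFT, compared with the $O(n^{3})$ cost of a matrix inversion or the $O(n^{2})$ cost of a dense matrix-vector product in the original input feature space with the full data matrix $\mathbf{X}$.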

In addition, Equation (16) gives $f(\mathbf{z})$, the full detection response, i.e., a vector containing the classifier output for all cyclic shifts of $\mathbf{z}$. Equation (16) can be computed more efficiently by diagonalizing it to obtain:

$$\hat{f}(\mathbf{z}) = \hat{\mathbf{k}}^{\mathbf{x}\mathbf{z}} \odot \hat{\boldsymbol{\alpha}} \tag{17}$$

where $\odot$ denotes element-wise multiplication. The speed-up comes from this element-wise multiplication in the Fourier domain, combined with the kernel trick for computing in a high-dimensional feature space. Once the target is located in the next frame, the model learned from the target in the first frame and the response computed in the second frame are used to track it, and the same procedure is applied to all subsequent frames. Hence, given the current target location and the model's prior memory, the target can be tracked over time. As mentioned before, this type of tracking methodology is called tracking-by-detection and has been used widely in research.

Tracking-by-detection breaks the task of tracking into learning and detection, which complement each other: the results produced by the tracker are used by the algorithm to learn and improve its detection.
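The detection step can be sketched in 1D with NumPy. The helpers `gaussian_correlation`, `train`, and `detect` are hypothetical names; `gaussian_correlation` computes the kernel values for all cyclic shifts at once with an FFT-based cross-correlation, in the spirit of the KCF formulation. The parameter values and the synthetic signal are assumptions. Under this indexing convention, entry $i$ of the response scores the hypothesis that the target moved by $+i$ samples.

```python
import numpy as np

def gaussian_correlation(x, z, sigma):
    """k[m] = exp(-||z - roll(x, m)||^2 / (2 sigma^2)) for every cyclic shift m, via the FFT."""
    # cross[m] = sum_t x[t] * z[t + m], computed for all m at once
    cross = np.real(np.fft.ifft(np.conj(np.fft.fft(x)) * np.fft.fft(z)))
    d2 = np.maximum(x @ x + z @ z - 2.0 * cross, 0.0)
    return np.exp(-d2 / (2.0 * sigma ** 2))

def train(x, y, sigma, lam):
    """alpha_hat = y_hat / (k_hat^{xx} + lam), element-wise."""
    return np.fft.fft(y) / (np.fft.fft(gaussian_correlation(x, x, sigma)) + lam)

def detect(alpha_hat, x, z, sigma):
    """Full response for all cyclic shifts: inverse DFT of k_hat^{xz} * alpha_hat."""
    k_xz = gaussian_correlation(x, z, sigma)
    return np.real(np.fft.ifft(np.fft.fft(k_xz) * alpha_hat))

n, sigma, lam = 64, 5.0, 1e-4
rng = np.random.default_rng(2)
x = rng.standard_normal(n)                      # training sample (base patch)
dist = np.minimum(np.arange(n), n - np.arange(n))
y = np.exp(-0.5 * (dist / 2.0) ** 2)            # regression target peaking at zero shift
alpha_hat = train(x, y, sigma, lam)

z = np.roll(x, 5)                               # next "frame": same signal moved by +5
response = detect(alpha_hat, x, z, sigma)
print(int(np.argmax(response)))                 # 5
```

The peak of the response gives the new target position; in a tracker, the model would then be retrained (or updated) around that position before the next frame arrives.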

4. KCF Tracker Pipeline and Dataset

The dataset used in the evaluation was collected by the author using the Kinect V2 RGB camera. The environment was chosen to replicate real-time conditions for testing the KCF tracker. Subjects varied in size, clothing color, etc. Figure 3 gives an overview of the sequence of images used in the experiment.

Motivated by the reported performance of the KCF tracking algorithm, we see a need to further analyze its experimental performance under comparable conditions. Commonly, such a study is carried out on standard datasets. However, the tracker's performance also needs to be validated in real time under different challenging scenarios. Current standard benchmarks do not test trackers in real-time settings, which is a major limitation to their validity for real-time setups, e.g., observing people through a camera in real time under different scenarios. This section builds on this motivation and discusses tracker performance.

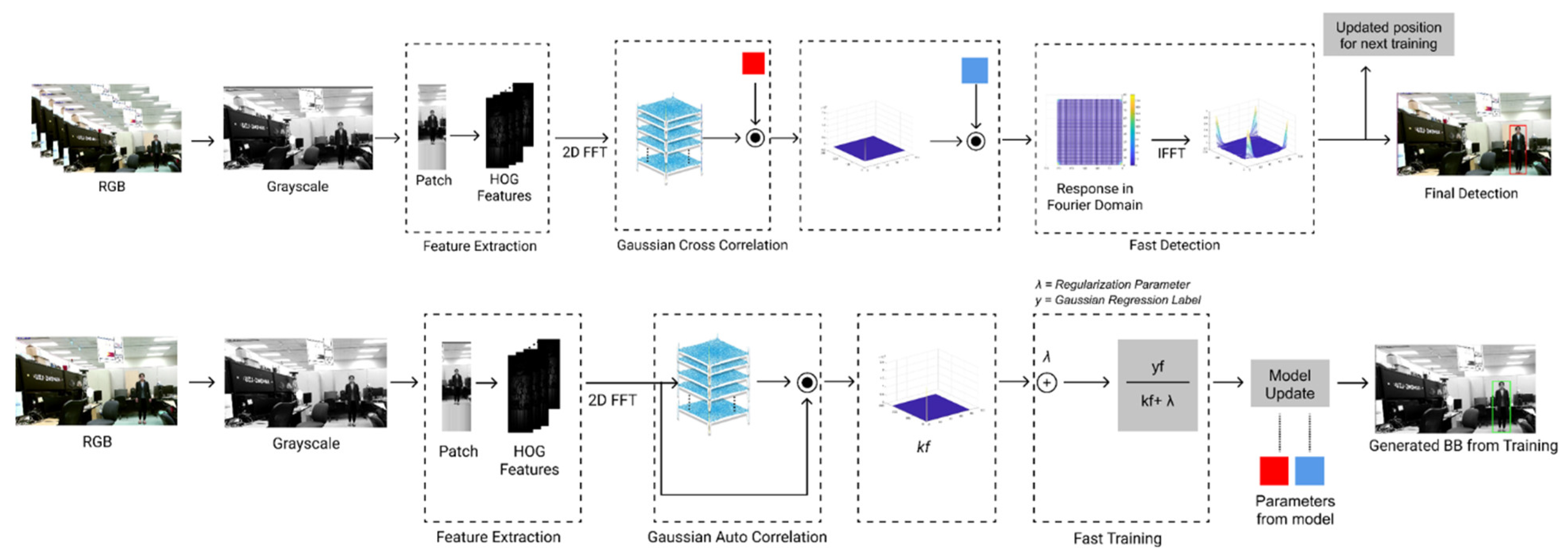

Figure 4 shows the KCF tracking pipeline in detail. It has four main components: (a) a pre-processing stage; (b) a feature extraction stage; (c) a learning stage; and (d) an updating stage. Pre-processing is performed first on every captured frame of the video before it is passed to the tracker.

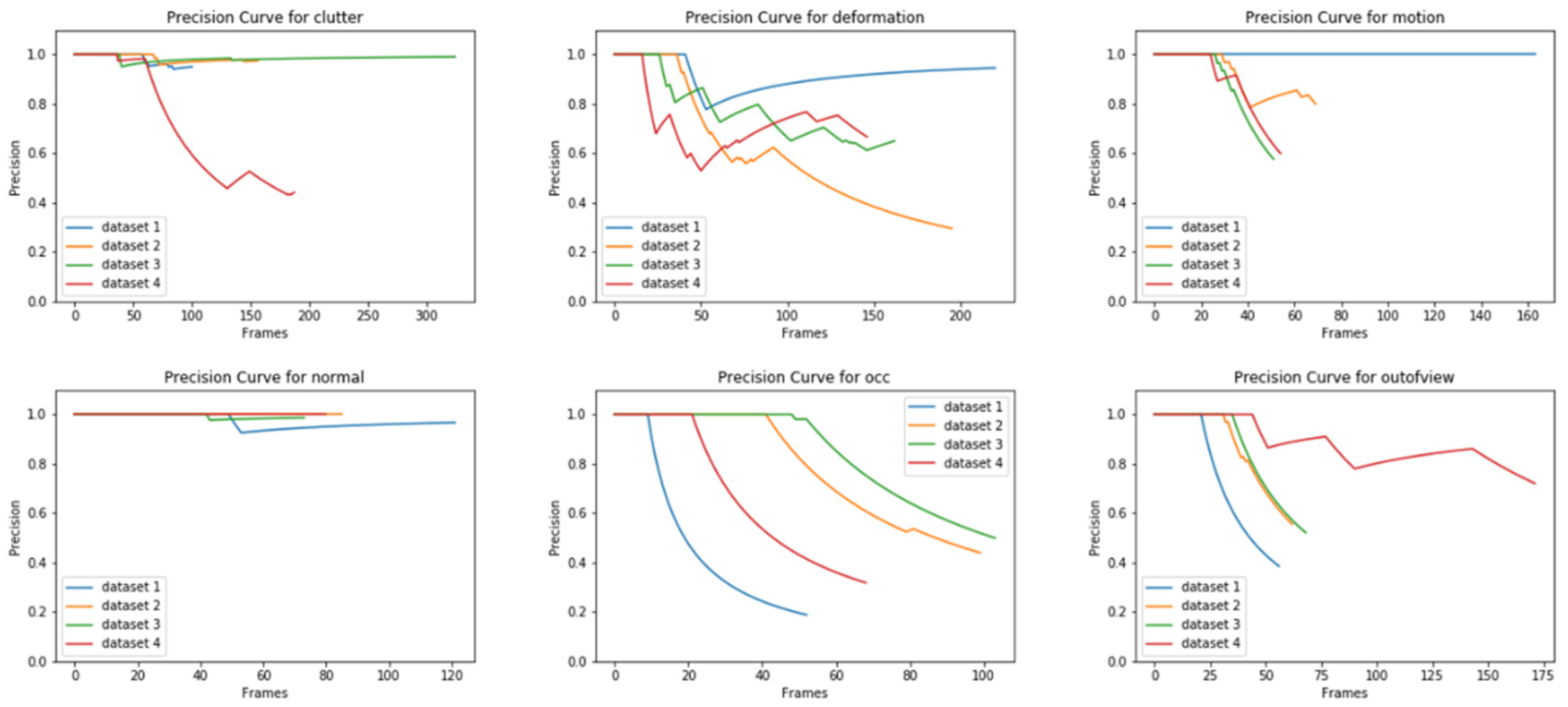
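The four pipeline stages can be tied together in a compact single-channel sketch. It is not the implementation used in our experiments: raw normalized intensities stand in for HOG features, and the Hann window, Gaussian labels, kernel normalization by the number of pixels, and the linear-interpolation model update (factor `interp`) are assumptions modeled on common KCF implementations. `KCFSketch` and its default parameter values are hypothetical.

```python
import numpy as np

def preprocess(patch, use_window=True):
    """(a)+(b) Pre-processing and features: zero-mean, unit-variance intensities,
    optionally tapered by a 2D Hann window to reduce boundary effects."""
    p = patch.astype(float)
    p = (p - p.mean()) / (p.std() + 1e-8)
    if use_window:
        p = p * np.outer(np.hanning(p.shape[0]), np.hanning(p.shape[1]))
    return p

def gaussian_correlation(x, z, sigma):
    """k[m] = exp(-||z - shift(x, m)||^2 / (sigma^2 * N)) for all 2D cyclic shifts m."""
    cross = np.real(np.fft.ifft2(np.conj(np.fft.fft2(x)) * np.fft.fft2(z)))
    d2 = np.maximum(np.sum(x ** 2) + np.sum(z ** 2) - 2.0 * cross, 0.0)
    return np.exp(-d2 / (sigma ** 2 * x.size))

def gaussian_labels(shape, s):
    """Regression targets y: a Gaussian peak at zero shift, wrapped cyclically."""
    r = np.minimum(np.arange(shape[0]), shape[0] - np.arange(shape[0]))
    c = np.minimum(np.arange(shape[1]), shape[1] - np.arange(shape[1]))
    return np.exp(-0.5 * (r[:, None] ** 2 + c[None, :] ** 2) / s ** 2)

class KCFSketch:
    """Hypothetical single-channel KCF built from the four pipeline stages."""

    def __init__(self, patch, sigma=0.5, lam=1e-4, interp=0.02, label_sigma=1.0, use_window=True):
        self.sigma, self.lam, self.interp, self.use_window = sigma, lam, interp, use_window
        self.y_hat = np.fft.fft2(gaussian_labels(patch.shape, label_sigma))
        self.x = preprocess(patch, use_window)
        self.alpha_hat = self._learn(self.x)

    def _learn(self, x):
        """(c) Learning: alpha_hat = y_hat / (k_hat^{xx} + lambda)."""
        k_hat = np.fft.fft2(gaussian_correlation(x, x, self.sigma))
        return self.y_hat / (k_hat + self.lam)

    def detect(self, patch):
        """Return the estimated (dy, dx) displacement of the target inside `patch`."""
        z = preprocess(patch, self.use_window)
        k_hat = np.fft.fft2(gaussian_correlation(self.x, z, self.sigma))
        response = np.real(np.fft.ifft2(self.alpha_hat * k_hat))
        dy, dx = np.unravel_index(np.argmax(response), response.shape)
        h, w = response.shape
        # peaks past the midpoint wrap around to negative displacements
        dy = int(dy) - h if dy > h // 2 else int(dy)
        dx = int(dx) - w if dx > w // 2 else int(dx)
        return dy, dx

    def update(self, patch):
        """(d) Updating: blend in a model learned on the patch re-cropped at the new position."""
        x_new = preprocess(patch, self.use_window)
        self.x = (1 - self.interp) * self.x + self.interp * x_new
        self.alpha_hat = (1 - self.interp) * self.alpha_hat + self.interp * self._learn(x_new)

rng = np.random.default_rng(0)
patch = rng.standard_normal((32, 32))
tracker = KCFSketch(patch, use_window=False)    # window off: exact cyclic-shift sanity check
print(tracker.detect(np.roll(patch, (3, -4), axis=(0, 1))))   # (3, -4)
```

Disabling the window makes the shifted patch an exact cyclic shift of the training patch, so the response peak lands exactly at the displacement; with real frames the window would normally stay on.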

Scenarios used for the analysis were selected based on the major challenges faced by the visual tracking community. These include scenarios such as (a) normal, (b) background clutter, (c) deformation, (d) occlusion, (e) fast motion, and (f) out-of-view scenes.

Figure 5 shows a sample representation of our data. We refer readers to Figure 3 for a complete overview of all scenarios.

A brief description of these scenarios is given below:

Normal: Normal scenarios contain no occlusion, deformation, illumination change, etc.

Background Clutter: In this scenario, scenes contain several objects with colors and textures similar to those of the target, making it harder for an algorithm to recognize the target.

Deformation: In the case of deformation, the appearance of the object as a whole, or the relative positions of its parts, varies across the frames of a video.

Motion: This indicates how fast the target is moving (faster objects cause motion blur).

Out-of-view: Out-of-view is a state in which the target, during its observation, moves out of the visible range of the sensor (for example, the camera). This scenario differs from occlusion: under occlusion, the target is still within the visible range of the sensor but is temporarily hidden, whereas out-of-view means the target leaves the visible range altogether. In such cases, the model loses information about the target, making it harder to re-detect.

Occlusion: One of the most challenging scenarios is occlusion, in which some body parts of the target under observation, or the whole target, are hidden from view.

Numerous performance measures have been proposed and have become popular in the visual tracking community; however, none has been singled out as a de facto standard. In most cases, standard datasets are used for comparative results. However, since our purpose was to analyze the tracker in a real-time environment, we collected our own dataset with different subjects in different scenarios to test robustness against, for example, occlusions and out-of-view situations.

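Table 1 gives a more detailed description of the number of datasets collected for each scenario. In total, approximately 6000 images were collected using Kinect V2 and manually annotated to generate ground truth. Inspired by previous work [22], we describe the object state in sequence $s$ of a dataset of $N$ sequences as:

$$\Lambda^{s} = \left\{ \left( R_t^{s}, \mathbf{x}_t^{s} \right) \right\}_{t=1}^{T_s}, \qquad s = 1, \ldots, N$$

where $\mathbf{x}_t^{s}$ denotes the center of the object, $R_t^{s}$ denotes the region of the object at time $t$, and $T_s$ is the length of sequence $s$. Hence, for any particular example and at any particular time $t$, the center of an object whose bounding box has top-left corner $(x, y)$, width $w$, and height $h$ can be denoted as:

$$\mathbf{x}_t^{s} = \left( x + \frac{w}{2},\; y + \frac{h}{2} \right)$$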

The output of a tracking algorithm for each subject is tested against the ground truth (the true location of the object). We denote the ground-truth annotation as $(x_g, y_g, w_g, h_g)$ and the tracker's predicted annotation as $(x_p, y_p, w_p, h_p)$, where $x$, $y$, $w$, and $h$ are, respectively, the top-left x coordinate, the top-left y coordinate, the width, and the height of the bounding box of the subject in question.
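Given boxes in this $(x, y, w, h)$ format, the center location error and a precision curve can be computed as in the sketch below. The 20-pixel threshold used for the headline number is a commonly used convention and an assumption here; the box values are made-up examples, not data from our dataset, and the function names are hypothetical.

```python
import numpy as np

def center(box):
    """Center (cx, cy) of a box (x, y, w, h) whose (x, y) is the top-left corner."""
    x, y, w, h = box
    return np.array([x + w / 2.0, y + h / 2.0])

def center_errors(gt_boxes, pred_boxes):
    """Euclidean distance between ground-truth and predicted centers, per frame."""
    return np.array([np.linalg.norm(center(g) - center(p))
                     for g, p in zip(gt_boxes, pred_boxes)])

def precision_curve(errors, thresholds):
    """Fraction of frames whose center error is within each threshold (in pixels)."""
    errors = np.asarray(errors)
    return np.array([np.mean(errors <= t) for t in thresholds])

# Made-up boxes for three frames (not from our dataset)
gt = [(100, 50, 40, 80), (104, 52, 40, 80), (110, 55, 40, 80)]
pred = [(102, 50, 40, 80), (130, 60, 40, 80), (111, 58, 38, 78)]

errors = center_errors(gt, pred)
thresholds = np.arange(0, 51)                   # 0..50 pixels
curve = precision_curve(errors, thresholds)
print(errors)
print(curve[20])                                # precision at the 20-pixel threshold
```

Plotting `curve` against `thresholds` gives a precision curve; here the second frame drifts by about 27 pixels, so two of the three frames count as correct at 20 pixels.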