1. Introduction

Deep Learning (DL) has become the dominant paradigm in the field of Computer Vision (CV). The ImageNet Challenge (ILSVRC) has proven to be a driving force behind many novel neural network architectures, which have prevailed in the CV research community [1]. However, many CV tasks involve the classification of more abstract visual forms, such as clouds in the sky [2], abstract images [3] and paintings [4].

The artistic style (or artistic movement) of a painting is a descriptor that contains valuable data about the painting itself, providing, at the same time, a frame of reference for further analysis. In this context, artistic style recognition is an important CV task, given that authentic artwork carries high aesthetic, historic and economic value [4]. Artwork style recognition, artist classification and other CV tasks related to paintings were studied before the “DL revolution” [5,6,7].

A great deal of research has been done in this field, leading to many impressive results [4,5,6,7,8,9,10,11,12]. Although many techniques have been deployed, architectures based on Convolutional Neural Networks (CNNs) have prevailed. Nevertheless, the current technology appears to have reached a plateau in model performance, highlighting the need for new designs. Recently, a plethora of new DL-based CV models have been proposed that perform well on many tasks. However, to the best of our knowledge, these models have not been tested on the artwork style recognition task.

Transformers have become the dominant architecture in Natural Language Processing (NLP), outperforming previous models in various tasks [13]. Transformers are based on the attention mechanism, which makes it possible to derive information from any state of a given text sequence. With an attention layer, the model can access all previous states and weigh them according to a learned measure of relevance to the current token, providing sharper information about relevant tokens that are far away [13]. Transformers have shown that recurrence is unnecessary.

In addition, modified architectures have been successfully applied to object detection tasks [14]. Until recently, attention had been used in image recognition only as a complement to convolutions. Extending attention-only mechanisms to CV requires a few modifications to the basic transformer architecture [15].

Another recent proposal in DL-based CV is MLP Mixer [16]. MLP Mixer is based solely on multi-layer perceptrons (MLPs) and uses neither convolutions nor attention mechanisms. MLP Mixer may prove to be a valid alternative for many CV tasks where training data are limited or where the available hardware cannot support more computationally expensive architectures.

Following [17], we present the motivation for this work, along with its contributions and the organization of the rest of the paper.

1.1. Motivation

The guiding motivation for this research is twofold. On the one hand, there is a need to test the newly proposed DL models on more complicated CV tasks and to demonstrate their applicability. On the other hand, artwork style recognition is a complex problem that needs to be studied further by the research community, since it poses interesting questions about aesthetics, artistic movements and the connections between different styles.

1.2. Contribution

The main contributions of this work are as follows:

We propose Vision Transformers as the main ML method to classify artistic style.

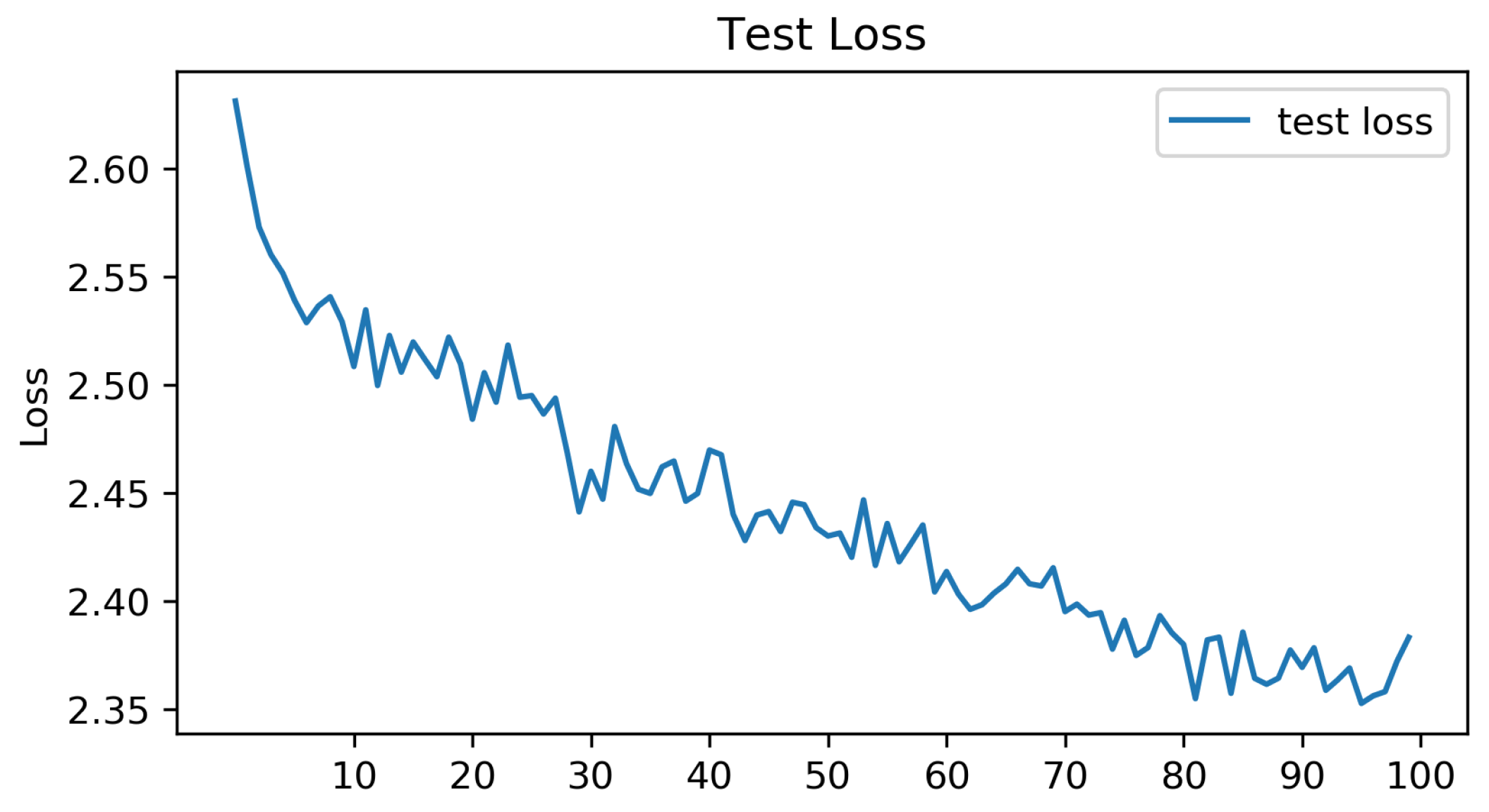

We train Vision Transformers from scratch in the task of artwork style recognition, achieving over 39% prediction accuracy for 21 style classes on the WikiArt paintings dataset.

We conduct a comparative study between the most common optimizers obtaining useful information for future studies.

We compare the results with MLP Mixer’s performance on the same task, thereby examining two very different DL architectures on a complex pattern recognition problem.

To the best of the authors’ knowledge, this is the first time that Vision Transformers have been applied to this problem. The results obtained in this work provide a minimum benchmark for future studies regarding the application of ViT and MLP Mixer to the artwork style recognition task and possibly to other CV tasks that involve a diverse set of training images.

1.3. Organization of the Paper

The rest of the paper is organized as follows:

Section 2 briefly describes related work. In Section 3, Transformers, Vision Transformers, MLP Mixer and the basic information about DL optimizers are discussed, and details about the WikiArt paintings dataset are provided. We present and elaborate on the numerical results in Section 4. Finally, Section 5 concludes this work.

2. Related Work

ML and DL techniques have been successfully deployed in the task of artistic style recognition. In [4], the researchers conducted a comprehensive study of CNNs applied to the style classification of paintings and analyzed the learned representation through correlation analysis with concepts derived from art history. In [8,9,10,11,12], various DL and image processing techniques are deployed in order to improve accuracy. The advantages and disadvantages of these methods are presented in Table 1, following the presentation in [18].

Another field of study is image style transfer. Gatys et al. [19], by separating and recombining the content and style of images, managed to produce new images that combine the content of an arbitrary photograph with the appearance of numerous well-known artworks. Many modifications and optimizations have been proposed since [20,21]. However, style transfer requires separating style from content as much as possible, whereas artistic style recognition uses the description of the content as an additional feature [8].

An active research area is the use of a Generative Adversarial Network (GAN) for conditional image synthesis (ArtGAN) [22,23]. The proposed model is capable of creating realistic artwork as well as generating compelling real-world images.

Vision Transformers have attracted much research interest. The first model based solely on attention is ViT [15], while [16] introduces MLP Mixer. To the best of our knowledge, this is the first time that ViT and MLP Mixer have been applied to the task of artistic style classification.

Table 1.

Artwork style recognition based on DL methods.


| Paper | Advantages | Disadvantages |
|---|---|---|
| Elgammal A., et al. [4] | • Study of many CNN architectures • Interpretation and representation | No comparison with previous works |
| Lecoutre A., et al. [8] | • Comprehensive methodology • Plenty of techniques used | Full analysis is provided only for AlexNet |
| Bar Y., et al. [21] | • Combination of low-level descriptors and CNNs | Tests only one CNN architecture |
| Cetinic E., et al. [10] | • Fine-tuning • Analysing image similarity | No interpretation |
| Huang X., et al. [11] | • Two channels used: the RGB channel and the brush stroke information | No interpretation |
| Sandoval C., et al. [12] | • Novel two-stage approach | Only pre-trained models |

3. Materials and Methods

3.1. Vision Transformers

The Vision Transformer (ViT) was proposed as an alternative to convolutions in deep neural networks. The model was pre-trained on a large dataset of images collected by Google and later fine-tuned on downstream recognition benchmarks. A large dataset is necessary in order to achieve state-of-the-art results.

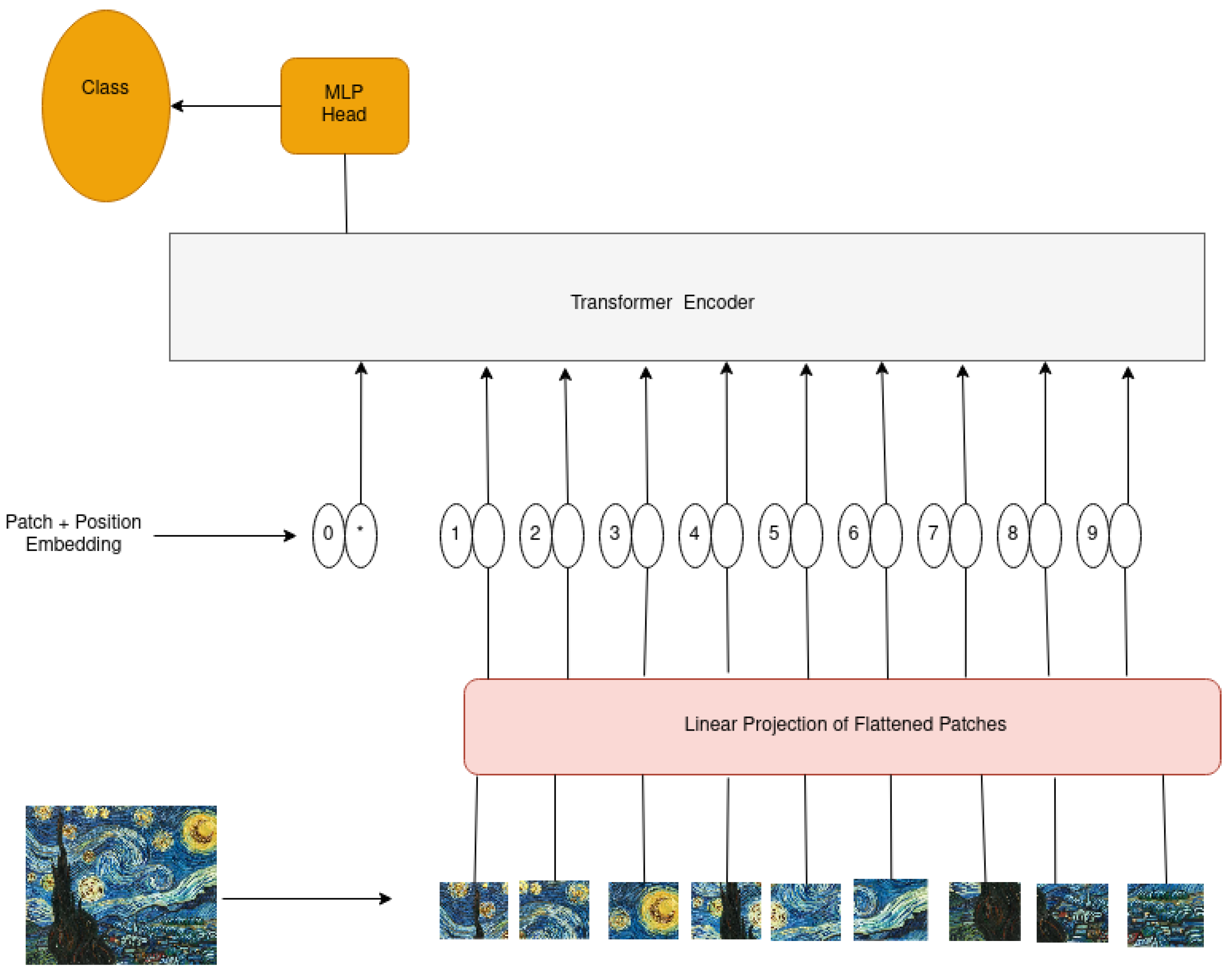

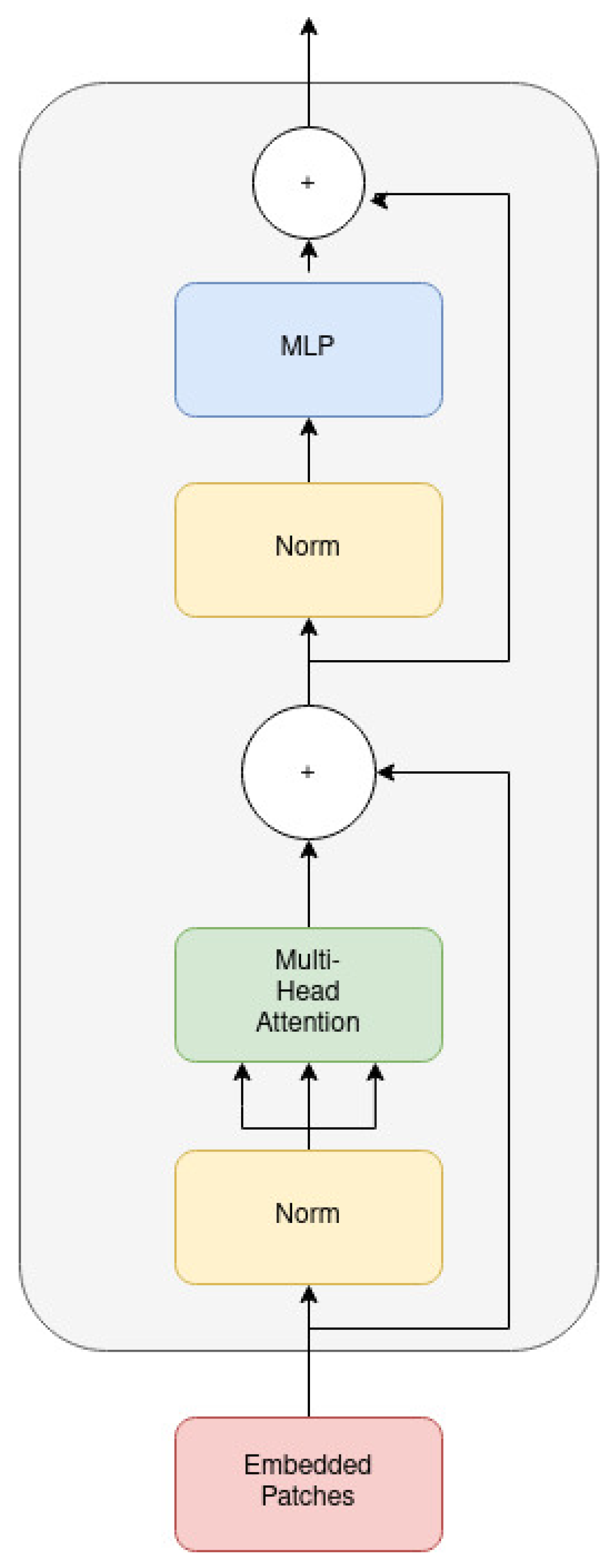

The main architecture of the model is depicted in Figure 1 and Figure 2. ViT processes 2D image patches that are flattened into vectors and fed to the transformer as a sequence. These vectorized patches are projected to patch embeddings by a linear layer, and position embeddings are added to encode location information. In addition, a classification token is prepended to the input sequence. The output representation corresponding to this first position is then used as the global image representation for the image classification task.
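As a rough illustration of this input pipeline, the following Python sketch builds the token sequence for a toy image using only the standard library. The sizes (`H`, `W`, `C`, `P`, `D`), the random initialisation and all function names are assumptions made for the example and are not taken from [15]. In a real model the classification token and the position embeddings would be learned parameters, and the sketch combines the position embeddings with the tokens by element-wise addition.

```python
import random

def patchify(image, patch):
    """Split an H x W x C image (nested lists) into flattened patch vectors, row-major."""
    height, width = len(image), len(image[0])
    assert height % patch == 0 and width % patch == 0, "image must tile evenly"
    patches = []
    for top in range(0, height, patch):
        for left in range(0, width, patch):
            flat = []
            for r in range(top, top + patch):
                for c in range(left, left + patch):
                    flat.extend(image[r][c])  # all channel values of one pixel
            patches.append(flat)
    return patches

def linear(vec, weight):
    """Multiply a length-n vector by an n x d weight matrix (bias omitted)."""
    return [sum(v * row[j] for v, row in zip(vec, weight))
            for j in range(len(weight[0]))]

def vit_input_sequence(image, patch, weight, cls_token, pos_embed):
    """Class token followed by projected patches, plus position embeddings."""
    tokens = [cls_token] + [linear(p, weight) for p in patchify(image, patch)]
    return [[t + e for t, e in zip(tok, pos)] for tok, pos in zip(tokens, pos_embed)]

rng = random.Random(0)
H, W, C, P, D = 4, 4, 3, 2, 5  # toy sizes, chosen only for illustration
image = [[[rng.random() for _ in range(C)] for _ in range(W)] for _ in range(H)]
weight = [[rng.gauss(0, 0.02) for _ in range(D)] for _ in range(P * P * C)]
cls_token = [0.0] * D  # would be a learned vector in practice
num_tokens = (H // P) * (W // P) + 1
pos_embed = [[rng.gauss(0, 0.02) for _ in range(D)] for _ in range(num_tokens)]

sequence = vit_input_sequence(image, P, weight, cls_token, pos_embed)
print(len(sequence), len(sequence[0]))  # number of tokens, embedding size
```

The first row of `sequence` corresponds to the classification token, whose output representation the encoder would pass to the classification head.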

3.2. MLP Mixer

MLP Mixer was recently introduced [16] as a CV model based solely on MLPs, without convolutions or attention mechanisms. The core idea behind MLP Mixer is to cleanly separate, using only MLPs, the per-location (channel-mixing) operations from the cross-location (token-mixing) operations.

Mixer takes as input a sequence of S non-overlapping image patches, each one projected to a desired hidden dimension C, thus obtaining a matrix $\mathbf{X} \in \mathbb{R}^{S \times C}$. If the original input image has resolution (H, W), and each patch has resolution (P, P), then the number of patches is $S = HW/P^2$. All patches are linearly projected with the same projection matrix. Mixer consists of multiple layers of identical size, and each layer consists of two MLP blocks.

Unlike ViT, MLP Mixer does not use position embeddings because the token-mixing MLPs are sensitive to the order of the input tokens.
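The two kinds of mixing, and the order sensitivity mentioned above, can be sketched in plain Python. This is a simplified illustration and not the implementation of [16]: layer normalisation and biases are omitted, and the toy sizes, the hidden width of 6, the random weights and all function names are assumptions. The sketch applies each MLP block with a skip connection and then checks how each block reacts when the patches are reordered.

```python
import math
import random

def gelu(x):
    return 0.5 * x * (1.0 + math.erf(x / math.sqrt(2.0)))

def mlp(vec, w1, w2):
    """Two fully connected layers with a GELU in between (biases omitted)."""
    hidden = [gelu(sum(v * row[j] for v, row in zip(vec, w1)))
              for j in range(len(w1[0]))]
    return [sum(h * row[j] for h, row in zip(hidden, w2))
            for j in range(len(w2[0]))]

def transpose(m):
    return [list(col) for col in zip(*m)]

def token_mixing(x, w1, w2):
    """Same MLP on every column of X: mixes one channel across all S patches."""
    mixed = transpose([mlp(col, w1, w2) for col in transpose(x)])
    return [[a + b for a, b in zip(rx, rm)] for rx, rm in zip(x, mixed)]

def channel_mixing(x, w1, w2):
    """Same MLP on every row of X: mixes the C channels of one patch."""
    return [[a + b for a, b in zip(row, mlp(row, w1, w2))] for row in x]

def rand_matrix(rng, rows, cols):
    return [[rng.gauss(0, 0.5) for _ in range(cols)] for _ in range(rows)]

rng = random.Random(0)
H, W, P, C = 8, 8, 4, 3          # toy sizes, chosen only for illustration
S = (H * W) // (P * P)           # number of patches, S = HW / P^2
x = rand_matrix(rng, S, C)       # stands in for the projected patches
tok_w1, tok_w2 = rand_matrix(rng, S, 6), rand_matrix(rng, 6, S)
ch_w1, ch_w2 = rand_matrix(rng, C, 6), rand_matrix(rng, 6, C)

perm = [1, 0, 3, 2]              # reorder the patches
x_perm = [x[i] for i in perm]

ch = channel_mixing(x, ch_w1, ch_w2)
tok = token_mixing(x, tok_w1, tok_w2)
channel_equivariant = channel_mixing(x_perm, ch_w1, ch_w2) == [ch[i] for i in perm]
token_equivariant = token_mixing(x_perm, tok_w1, tok_w2) == [tok[i] for i in perm]
print(S, channel_equivariant, token_equivariant)
```

Channel mixing treats each patch independently, so reordering the patches only reorders its output. Token mixing has a separate weight for each patch position, so its output depends on the order, which is why no explicit position embedding is needed.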

3.3. Optimizers

Hyper-parameter optimization is a crucial part of the DL training process. Image classification is usually considered a supervised learning task. In this framework, given a dataset, the learning algorithm is trained so as to minimize a suitably chosen cost function. An optimizer is needed to reach the minimum of this function. For an extensive review of the most commonly used optimizers in DL, one may refer to [24]. Here, the weight update rule of each method is provided. In order to evaluate each optimizer “equally”, none of the “tricks” proposed in the literature were used.

In the following, $w_t$ denotes the weight at step $t$, $\eta$ is the learning rate, and $\gamma$ is the momentum. The rest of the parameters are explained in [24,25,26].

Stochastic Gradient Descent: Stochastic Gradient Descent (SGD) is one of the most widely used optimizers. SGD allows the network weights to be updated after each training image (online training).

Momentum Gradient Descent: SGD may lead to oscillations during training. The best way to avoid them is to know the right direction for the gradient. This information is derived from the previous position: the update rule adds a fraction of the previous update, which gives the optimizer the momentum needed to continue moving in the right direction. In Momentum Gradient Descent (MGD), the weights are thus updated with the current gradient step plus the momentum times the previous update.
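A minimal sketch of these two update rules in their textbook form is given below, applied to a toy one-dimensional cost. The cost function, the hyper-parameter values and the function names are assumptions made for illustration, and the gradient is computed exactly rather than from a single training sample.

```python
def grad(w):
    """Gradient of the toy cost J(w) = 0.5 * (w - 3)^2, minimised at w = 3."""
    return w - 3.0

def sgd_step(w, lr):
    # w_{t+1} = w_t - lr * gradient
    return w - lr * grad(w)

def momentum_step(w, velocity, lr, gamma):
    # velocity_t = gamma * velocity_{t-1} + lr * gradient
    # w_{t+1}    = w_t - velocity_t
    velocity = gamma * velocity + lr * grad(w)
    return w - velocity, velocity

w_sgd = 0.0
w_mgd, v = 0.0, 0.0
for _ in range(200):
    w_sgd = sgd_step(w_sgd, lr=0.1)
    w_mgd, v = momentum_step(w_mgd, v, lr=0.1, gamma=0.9)
print(w_sgd, w_mgd)  # both should end close to the minimiser w = 3
```

The `velocity` variable carries the fraction of the previous update described above. Setting `gamma` to zero recovers plain gradient descent.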

Adam: Adam was introduced as an algorithm for first-order gradient-based optimization of stochastic objective functions, based on adaptive estimates of lower-order moments [25]. Adam has become established as one of the most successful optimizers in DL.
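The Adam update can be sketched as follows, using the moment estimates and bias correction described in [25]. The toy cost, the step count and the learning rate used in the loop are assumptions for illustration only.

```python
import math

def adam_step(w, grad, m, v, t, lr=0.001, beta1=0.9, beta2=0.999, eps=1e-8):
    """One Adam update for a scalar weight; t is the 1-based step index."""
    m = beta1 * m + (1.0 - beta1) * grad          # first-moment estimate
    v = beta2 * v + (1.0 - beta2) * grad * grad   # second-moment estimate
    m_hat = m / (1.0 - beta1 ** t)                # bias correction
    v_hat = v / (1.0 - beta2 ** t)
    w = w - lr * m_hat / (math.sqrt(v_hat) + eps)
    return w, m, v

# Toy cost J(w) = 0.5 * (w - 3)^2 with gradient w - 3.
w, m, v = 0.0, 0.0, 0.0
for t in range(1, 2001):
    w, m, v = adam_step(w, w - 3.0, m, v, t, lr=0.01)
print(w)  # should end close to the minimiser w = 3
```

Because of the bias correction, the very first step has a size of roughly `lr` regardless of the gradient's magnitude.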

AdaMax: AdaMax is a generalisation of Adam from the $L_2$ norm to the $L_\infty$ norm [25].
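For comparison with Adam, a sketch of the AdaMax update following the algorithm in [25] is given below. The second-moment estimate is replaced by an exponentially weighted infinity norm, computed with a `max`. The toy cost and the values used in the loop are assumptions for illustration, and the sketch assumes a non-zero gradient at the first step so that the denominator is positive.

```python
def adamax_step(w, grad, m, u, t, lr=0.002, beta1=0.9, beta2=0.999):
    """One AdaMax update for a scalar weight; t is the 1-based step index."""
    m = beta1 * m + (1.0 - beta1) * grad   # first-moment estimate, as in Adam
    u = max(beta2 * u, abs(grad))          # exponentially weighted infinity norm
    w = w - (lr / (1.0 - beta1 ** t)) * m / u
    return w, m, u

# Toy cost J(w) = 0.5 * (w - 3)^2 with gradient w - 3.
w, m, u = 0.0, 0.0, 0.0
for t in range(1, 2001):
    w, m, u = adamax_step(w, w - 3.0, m, u, t, lr=0.01)
print(w)  # should end close to the minimiser w = 3
```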

Optimistic Adam: The Optimistic Adam (OAdam) optimizer [26] is a variant of the Adam optimizer. The only difference between OAdam and Adam is the weight update.
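A sketch of an optimistic weight update is given below. It assumes that the rule takes the form in which the current Adam direction is applied twice and the previous direction is added back once. This form, the toy cost and all names are assumptions and should be checked against [26].

```python
import math

def oadam_step(w, grad, m, v, prev_dir, t,
               lr=0.001, beta1=0.9, beta2=0.999, eps=1e-8):
    """One optimistic Adam update; prev_dir is the direction used at step t-1."""
    m = beta1 * m + (1.0 - beta1) * grad
    v = beta2 * v + (1.0 - beta2) * grad * grad
    m_hat = m / (1.0 - beta1 ** t)
    v_hat = v / (1.0 - beta2 ** t)
    direction = m_hat / (math.sqrt(v_hat) + eps)   # the usual Adam direction
    # Assumed optimistic update: w_{t+1} = w_t - 2*lr*d_t + lr*d_{t-1}
    w = w - 2.0 * lr * direction + lr * prev_dir
    return w, m, v, direction

# Toy cost J(w) = 0.5 * (w - 3)^2 with gradient w - 3.
w, m, v, d = 0.0, 0.0, 0.0, 0.0
for t in range(1, 2001):
    w, m, v, d = oadam_step(w, w - 3.0, m, v, d, t, lr=0.01)
print(w)
```

The moment estimates are computed exactly as in Adam. Only the final line of the update differs.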

RMSProp: With some adaptive gradient descent optimizers, the learning rate decreases monotonically in some cases, because every term added to the accumulated squared gradients is positive. After many epochs, the learning rate becomes so small that the weights stop being updated. The RMSProp method proposes replacing the accumulated sum with an exponentially decaying average of the squared gradients.
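The difference can be illustrated with a small sketch that compares the factor multiplying the learning rate under an accumulated sum of squared gradients (as in AdaGrad, which is assumed here to be the kind of optimizer meant above) and under the decaying average used by RMSProp. The constant gradient stream, the decay rate `rho` and the function names are assumptions for illustration.

```python
import math

def adagrad_scale(grads, eps=1e-8):
    """Learning-rate factor after summing all squared gradients."""
    total = 0.0
    for g in grads:
        total += g * g  # every term is non-negative, so the sum never shrinks
    return 1.0 / (math.sqrt(total) + eps)

def rmsprop_step(w, grad, avg_sq, lr=0.001, rho=0.9, eps=1e-8):
    """One RMSProp update with a decaying average of squared gradients."""
    avg_sq = rho * avg_sq + (1.0 - rho) * grad * grad
    w = w - lr * grad / (math.sqrt(avg_sq) + eps)
    return w, avg_sq

grads = [1.0] * 1000  # a constant gradient, for illustration only
w, avg_sq = 0.0, 0.0
for g in grads:
    w, avg_sq = rmsprop_step(w, g, avg_sq)

ada_scale = adagrad_scale(grads)
rms_scale = 1.0 / (math.sqrt(avg_sq) + 1e-8)
print(ada_scale, rms_scale)
```

With a constant gradient, the accumulated-sum factor keeps shrinking as more steps are taken, whereas the RMSProp factor settles near one, so the weights continue to be updated.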

3.4. WikiArt Dataset

The dataset contains 81,446 images, each tagged with one of the following 27 styles: Abstract Expressionism (2782 images), Action Painting (92 images), Analytical Cubism (110 images), Art Nouveau (4334 images), Baroque (4241 images), Color Field Painting (1615 images), Contemporary Realism (481 images), Cubism (2235 images), Early Renaissance (1391 images), Expressionism (6736 images), Fauvism (934 images), High Renaissance (1343 images), Impressionism (13,060 images), Mannerism (Late Renaissance) (1279 images), Minimalism (1337 images), Naive Art/Primitivism (2405 images), New Realism (314 images), Northern Renaissance (2552 images), Pointillism (513 images), Pop Art (1483 images), Post Impressionism (6451 images), Realism (10,733 images), Rococo (2089 images), Romanticism (7049 images), Symbolism (4528 images), Synthetic Cubism (216 images) and Ukiyo-e (1167 images).

The WikiArt dataset is highly unbalanced. To avoid some of the issues that may follow, the Action Painting and Pointillism classes were dropped. In addition, the Analytical Cubism and Synthetic Cubism classes were merged into the Cubism class and, similarly, Contemporary Realism and New Realism were merged into the Realism class. The resulting dataset comprised 21 classes and 80,835 images in total. The training, validation and test sets were 60%, 20% and 20% of the whole dataset, respectively.
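The class remapping and the split can be sketched as follows. The helper names, the random seed and the use of a plain shuffled split (rather than, for example, a per-class stratified split) are assumptions, since the text does not specify how the split was drawn.

```python
import random

STYLES = [
    "Abstract Expressionism", "Action Painting", "Analytical Cubism",
    "Art Nouveau", "Baroque", "Color Field Painting", "Contemporary Realism",
    "Cubism", "Early Renaissance", "Expressionism", "Fauvism",
    "High Renaissance", "Impressionism", "Mannerism (Late Renaissance)",
    "Minimalism", "Naive Art/Primitivism", "New Realism",
    "Northern Renaissance", "Pointillism", "Pop Art", "Post Impressionism",
    "Realism", "Rococo", "Romanticism", "Symbolism", "Synthetic Cubism",
    "Ukiyo-e",
]
DROPPED = {"Action Painting", "Pointillism"}
MERGED = {
    "Analytical Cubism": "Cubism",
    "Synthetic Cubism": "Cubism",
    "Contemporary Realism": "Realism",
    "New Realism": "Realism",
}

def remap(style):
    """Return the training class for a WikiArt style, or None if it is dropped."""
    if style in DROPPED:
        return None
    return MERGED.get(style, style)

def split(items, seed=0, train=0.6, val=0.2):
    """Shuffle and cut into train/validation/test parts (the rest is the test set)."""
    items = list(items)
    random.Random(seed).shuffle(items)
    n_train = int(len(items) * train)
    n_val = int(len(items) * val)
    return items[:n_train], items[n_train:n_train + n_val], items[n_train + n_val:]

classes = sorted({remap(s) for s in STYLES} - {None})
train_set, val_set, test_set = split(range(100))  # 100 dummy image indices
print(len(classes), len(train_set), len(val_set), len(test_set))
```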

In Figure 3, two samples of the dataset are shown, highlighting the diversity of artwork styles.

5. Conclusions

In this paper, ViT and MLP Mixer were successfully applied to the WikiArt dataset in the artistic style recognition task. ViT was trained from scratch on the WikiArt dataset, achieving over 39% accuracy for 21 classes and thus setting a minimum benchmark in prediction accuracy for future studies. In addition, a comparative study was conducted among the most commonly used optimizers, which showed that training with the Adam and Optimistic Adam optimizers resulted in better performance. Using these results, MLP Mixer was trained from scratch, performing close to ViT in terms of prediction accuracy. As suggested by our experiments and the literature, the use of larger datasets with richer resources should improve the accuracy of the models.

Future work on this subject will focus on improving the models’ hyper-parameters through parametric studies and other experiments. Variations of the models used here may provide better results, especially in combination with other CV techniques. In addition, the creation of a larger dataset will provide a better overview of the tested models’ prediction accuracy.