Advancements in Artificial Intelligence Circuits and Systems (AICAS)

Abstract

1. Introduction

1.1. Background

1.2. Significance of AICAS

1.3. Scope of Review

2. Materials and Methods

2.1. Early Developments

| Year | Milestone | Impact/Significance |
|---|---|---|
| 1950s | Invention of the Integrated Circuit | Foundation for modern computing and AI hardware [18] |
| 1965 | Moore’s Law Prediction | Predicted the exponential growth of computing power [19] |
| 1980s | Rise of Personal Computers | Expanded the use of computing, setting the stage for advanced AI [20] |
| 1997 | Deep Blue Defeats Kasparov | Demonstrated AI’s potential in problem-solving and complex tasks [21] |
| 2006 | Introduction of Multi-core Processors | Enhanced processing capabilities, crucial for AI applications [22] |
| 2012 | Breakthrough in Deep Learning—AlexNet | Revolutionized AI with deep neural networks, impacting various AI fields [23] |
| 2019 | Development of Quantum Computing | Potential to dramatically increase processing power for AI [24] |
| 2023 | Advancements in Neuromorphic Computing | Mimicking human brain processes, leading to more efficient AI systems [18,24] |

2.2. Milestones in AICAS Evolution

- Advent of Specialized Hardware: The adoption of Graphics Processing Units (GPUs) and, later, Tensor Processing Units (TPUs) for AI workloads marked a significant milestone. Their highly parallel arithmetic supplied the computational power that complex AI algorithms require, broadening the scope of AICAS [2,25].

- Neuromorphic Computing: Neuromorphic computing takes its inspiration from the architecture and functioning of the human brain. Neuromorphic chips such as IBM’s TrueNorth and Intel’s Loihi have moved the field toward efficient and powerful AI-driven circuits and systems [26,27].

- Quantum Computing Circuits: The exploration of quantum computing circuits within AICAS promises large gains in computational capability for suitable classes of problems. Although still in its infancy, quantum AICAS is a growing field with the potential to redefine computing paradigms [30,31].
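The appeal of the specialized hardware named in the first item can be sketched with a matrix product written as explicit multiply-accumulate (MAC) steps. This is only an illustration, assuming that dense matrix multiplication dominates the AI workload; the function name `matmul_mac` and the MAC counter are hypothetical and are not taken from any cited work. Each output element depends on one row of `a` and one column of `b` only, and that independence is what parallel hardware can exploit.

```python
def matmul_mac(a, b):
    """Multiply two matrices given as lists of rows.

    Returns (product, mac_count), where mac_count is the number of
    multiply-accumulate steps performed.
    """
    rows, inner, cols = len(a), len(b), len(b[0])
    if any(len(row) != inner for row in a):
        raise ValueError("inner dimensions do not match")
    out = [[0.0] * cols for _ in range(rows)]
    macs = 0
    for i in range(rows):
        for j in range(cols):
            # out[i][j] needs no other output element, so every (i, j)
            # pair could in principle be computed by a separate unit.
            acc = 0.0
            for k in range(inner):
                acc += a[i][k] * b[k][j]
                macs += 1
            out[i][j] = acc
    return out, macs


if __name__ == "__main__":
    product, macs = matmul_mac([[1, 2], [3, 4]], [[5, 6], [7, 8]])
    print(product, macs)
```

The MAC count grows as rows × inner × cols, which is why throughput on this single operation is a common figure of merit for AI accelerators.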

3. Foundational Principles of AICAS

3.1. Basic Architectures

- Neuromorphic Architecture: Drawing inspiration from the neural networks of the human brain, neuromorphic architectures aim to emulate synaptic and neuronal behavior, enabling low-power, efficient computation [36].
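As a rough illustration of what emulating neuronal behavior can mean, the sketch below simulates a leaky integrate-and-fire (LIF) neuron, a common textbook abstraction. It is not the model of any particular chip or cited work; the function name `simulate_lif` and all parameter values (time constant, threshold, reset level) are assumptions chosen for readability.

```python
def simulate_lif(input_current, dt=1.0, tau=10.0,
                 v_rest=0.0, v_thresh=1.0, v_reset=0.0):
    """Simulate a leaky integrate-and-fire neuron with forward-Euler steps.

    input_current: one input value per time step.
    Returns (trace, spikes): the membrane potential after each step and
    the indices of the steps at which the neuron fired.
    """
    v = v_rest
    trace, spikes = [], []
    for step, current in enumerate(input_current):
        # The leak pulls v back toward v_rest; the input pushes it up.
        v += dt * (-(v - v_rest) + current) / tau
        if v >= v_thresh:
            # Threshold crossed: emit a spike and reset the potential.
            spikes.append(step)
            v = v_reset
        trace.append(v)
    return trace, spikes


if __name__ == "__main__":
    _, spikes = simulate_lif([2.0] * 20)
    print("spike steps:", spikes)
```

The neuron produces output only when its potential crosses the threshold, so a weak input yields no spikes at all. This event-driven behavior is one reason such models are associated with low-power computation.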

3.2. Core Technologies and Algorithms

- Machine Learning (ML) and Deep Learning (DL): ML and DL algorithms provide the intelligence in AICAS, enabling data-driven learning and decision-making [44].

- Data Analytics and Processing Technologies: Advanced data analytics and processing technologies make it possible to process and analyze large volumes of data in real time [47].
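One way to picture real-time analysis is an online algorithm that updates its result per sample without storing the stream. The sketch below uses Welford's method for a running mean and variance; it is offered as a generic example, not as a technique described in the cited work, and the class name `RunningStats` is hypothetical.

```python
class RunningStats:
    """Online mean and sample variance (Welford's algorithm).

    Memory use is constant regardless of how many samples arrive.
    """

    def __init__(self):
        self.count = 0
        self.mean = 0.0
        self._m2 = 0.0  # running sum of squared deviations from the mean

    def update(self, x):
        """Fold one new sample into the running statistics."""
        self.count += 1
        delta = x - self.mean
        self.mean += delta / self.count
        self._m2 += delta * (x - self.mean)

    @property
    def variance(self):
        """Sample variance; 0.0 until at least two samples have arrived."""
        return self._m2 / (self.count - 1) if self.count > 1 else 0.0


if __name__ == "__main__":
    stats = RunningStats()
    for sample in [2, 4, 4, 4, 5, 5, 7, 9]:
        stats.update(sample)
    print(stats.mean, stats.variance)
```

Because each update touches only three numbers, the same pattern fits devices with little memory, such as the edge platforms discussed later in the review.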

3.3. Hardware-Software Co-Design

4. State-of-the-Art AICAS Architectures

4.1. Neuromorphic Computing

4.2. Quantum Computing Circuits

4.3. In-Memory Computing

4.4. Advanced Processing Units: GPUs, TPUs, and NPUs

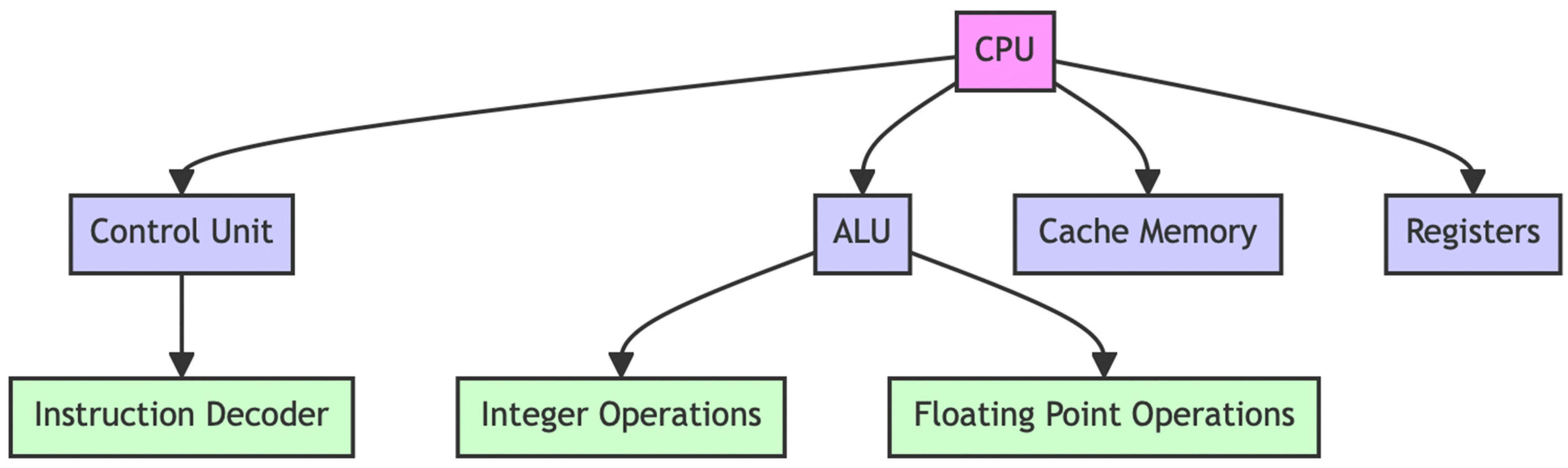

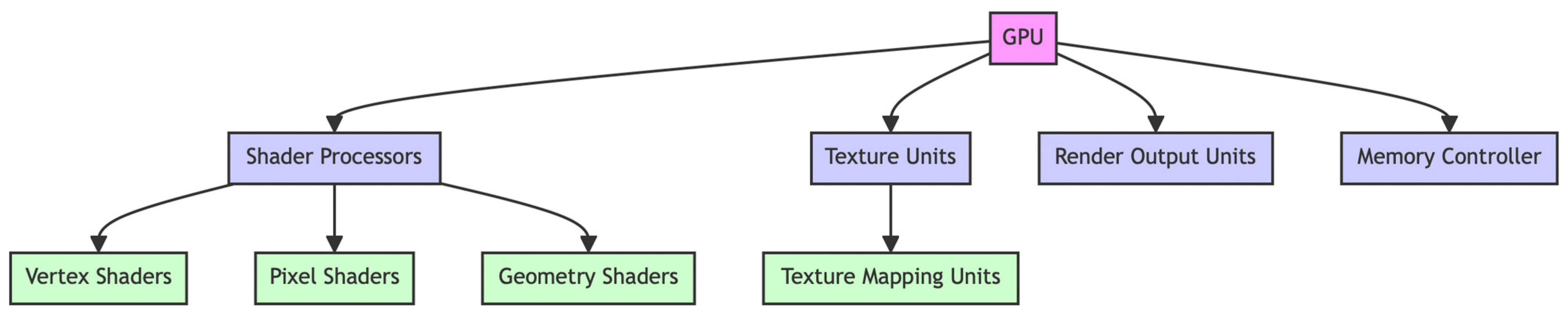

4.4.1. Graphics Processing Units (GPUs)

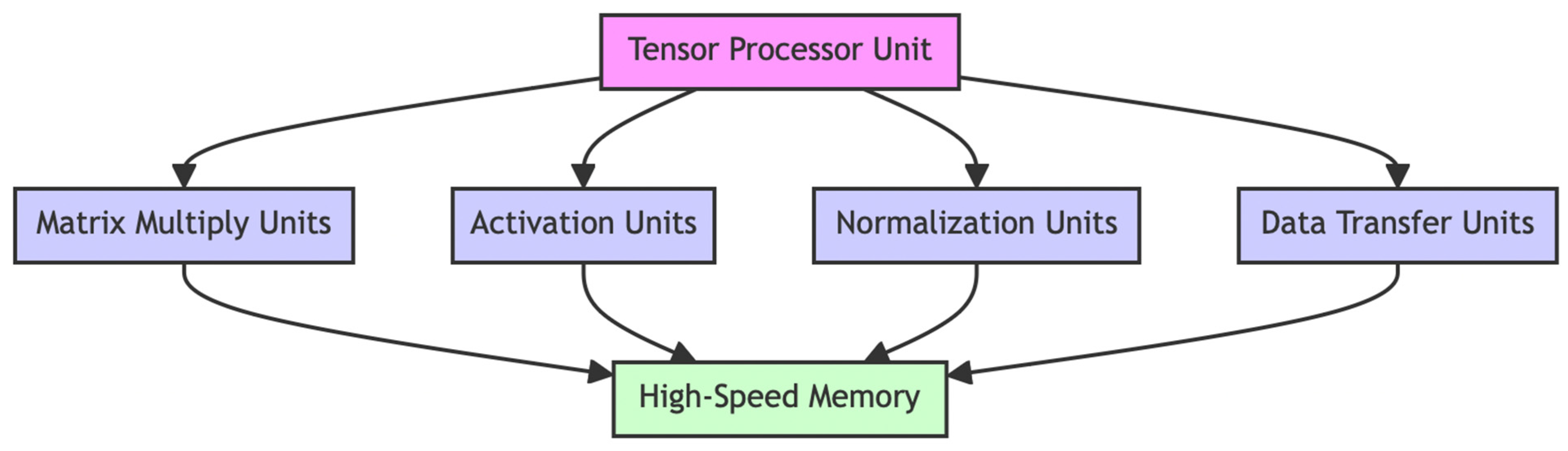

4.4.2. Tensor Processing Units (TPUs)

4.4.3. Neural Processing Units (NPUs)

5. Design Paradigms

5.1. Energy-Efficient Designs

5.2. Scalable and Modular Designs

5.3. Robust and Fault-Tolerant Designs

6. Applications of AICAS

6.1. Energy Efficiency Optimization

6.2. Next-Generation Cognitive Computing Systems

6.3. Real-Time Processing and Edge Computing

6.4. Autonomous Systems and Robotics

6.5. Healthcare and Bioinformatics

7. Challenges and Solutions

7.1. Scalability Challenges

7.2. Robustness and Reliability

7.3. Addressing Resource Constraints

8. Emerging Trends and Future Directions

8.1. New Materials and Technologies

8.2. Integrating AICAS with Other Emerging Technologies

8.3. Policy and Ethical Considerations

9. Conclusions

9.1. Summary of Key Findings

9.2. Implications and Recommendations for Future Research

Author Contributions

Funding

Conflicts of Interest

References

- Zhao, S.; Blaabjerg, F.; Wang, H. An Overview of Artificial Intelligence Applications for Power Electronics. IEEE Trans. Power Electron. 2021, 36, 4633–4658.

- Shastri, B.J.; Tait, A.N.; Ferreira de Lima, T.; Pernice, W.H.P.; Bhaskaran, H.; Wright, C.D.; Prucnal, P.R. Photonics for Artificial Intelligence and Neuromorphic Computing. Nat. Photonics 2021, 15, 102–114.

- Chang, R.C.-H.; Lee, G.G.C.; Delbruck, T.; Valle, M. Introduction to the Special Issue on the 1st IEEE International Conference on Artificial Intelligence Circuits and Systems (AICAS 2019). IEEE J. Emerg. Sel. Top. Circuits Syst. 2019, 9, 595–597.

- Hong, T.; Wang, P. Artificial Intelligence for Load Forecasting: History, Illusions, and Opportunities. IEEE Power Energy Mag. 2022, 20, 14–23.

- Gams, M.; Kolenik, T. Relations between Electronics, Artificial Intelligence and Information Society through Information Society Rules. Electronics 2021, 10, 514.

- Khan, F.H.; Pasha, M.A.; Masud, S. Advancements in Microprocessor Architecture for Ubiquitous AI—An Overview on History, Evolution, and Upcoming Challenges in AI Implementation. Micromachines 2021, 12, 665.

- Sanni, K.A.; Andreou, A.G. A Historical Perspective on Hardware AI Inference, Charge-Based Computational Circuits and an 8 Bit Charge-Based Multiply-Add Core in 16 Nm FinFET CMOS. IEEE J. Emerg. Sel. Top. Circuits Syst. 2019, 9, 532–543.

- Tomazzoli, C.; Scannapieco, S.; Cristani, M. Internet of Things and Artificial Intelligence Enable Energy Efficiency. J. Ambient. Intell. Humaniz. Comput. 2023, 14, 4933–4954.

- Himeur, Y.; Ghanem, K.; Alsalemi, A.; Bensaali, F.; Amira, A. Artificial Intelligence Based Anomaly Detection of Energy Consumption in Buildings: A Review, Current Trends and New Perspectives. Appl. Energy 2021, 287, 116601.

- Mishra, A.; Ray, A.K. A Novel Layered Architecture and Modular Design Framework for Next-Gen Cyber Physical System. In Proceedings of the 2022 International Conference on Computer Communication and Informatics (ICCCI), Chiba, Japan, 1–3 July 2022; IEEE: New York, NY, USA, 2022; pp. 1–8.

- Wang, Y.; Kinsner, W.; Kwong, S.; Leung, H.; Lu, J.; Smith, M.H.; Trajkovic, L.; Tunstel, E.; Plataniotis, K.N.; Yen, G.G. Brain-Inspired Systems: A Transdisciplinary Exploration on Cognitive Cybernetics, Humanity, and Systems Science Toward Autonomous Artificial Intelligence. IEEE Syst. Man. Cybern. Mag. 2020, 6, 6–13.

- Zador, A.; Escola, S.; Richards, B.; Ölveczky, B.; Bengio, Y.; Boahen, K.; Botvinick, M.; Chklovskii, D.; Churchland, A.; Clopath, C.; et al. Catalyzing Next-Generation Artificial Intelligence through NeuroAI. Nat. Commun. 2023, 14, 1597.

- Xu, Y.; Liu, X.; Cao, X.; Huang, C.; Liu, E.; Qian, S.; Liu, X.; Wu, Y.; Dong, F.; Qiu, C.-W.; et al. Artificial Intelligence: A Powerful Paradigm for Scientific Research. Innovation 2021, 2, 100179.

- Zhao, W.; Ma, X.; Ju, J.; Zhao, Y.; Wang, X.; Li, S.; Sui, Y.; Sun, Q. Association of Visceral Adiposity Index with Asymptomatic Intracranial Arterial Stenosis: A Population-Based Study in Shandong, China. Lipids Health Dis. 2023, 22, 64.

- Fayazi, M.; Colter, Z.; Afshari, E.; Dreslinski, R. Applications of Artificial Intelligence on the Modeling and Optimization for Analog and Mixed-Signal Circuits: A Review. IEEE Trans. Circuits Syst. I Regul. Pap. 2021, 68, 2418–2431.

- Talib, M.A.; Majzoub, S.; Nasir, Q.; Jamal, D. A Systematic Literature Review on Hardware Implementation of Artificial Intelligence Algorithms. J. Supercomput. 2021, 77, 1897–1938.

- Serrano-Gotarredona, T.; Valle, M.; Conti, F.; Li, H. Introduction to the Special Issue on the 2nd IEEE International Conference on Artificial Intelligence Circuits and Systems (AICAS 2020). IEEE J. Emerg. Sel. Top. Circuits Syst. 2020, 10, 403–405.

- Xu, S. AICA Development Challenges. In Autonomous Intelligent Cyber Defense Agent (AICA); Springer: Cham, Switzerland, 2023; pp. 367–394.

- Costa, D.; Costa, M.; Pinto, S. Train Me If You Can: Decentralized Learning on the Deep Edge. Appl. Sci. 2022, 12, 4653.

- Golder, A.; Raychowdhury, A. PCB Identification Based on Machine Learning Utilizing Power Consumption Variability. In Proceedings of the 2023 IEEE 5th International Conference on Artificial Intelligence Circuits and Systems (AICAS), Hangzhou, China, 11–13 June 2023; IEEE: New York, NY, USA, 2023; pp. 1–4.

- de Goede, D.; Kampert, D.; Varbanescu, A.L. The Cost of Reinforcement Learning for Game Engines. In Proceedings of the 2022 ACM/SPEC on International Conference on Performance Engineering, Beijing, China, 9–13 April 2022; ACM: New York, NY, USA, 2022; pp. 145–152.

- Fariselli, M.; Rusci, M.; Cambonie, J.; Flamand, E. Integer-Only Approximated MFCC for Ultra-Low Power Audio NN Processing on Multi-Core MCUs. In Proceedings of the 2021 IEEE 3rd International Conference on Artificial Intelligence Circuits and Systems (AICAS), Washington, DC, USA, 6–9 June 2021; IEEE: New York, NY, USA, 2021; pp. 1–4.

- Agyeman, M.; Guerrero, A.F.; Vien, Q.-T. Classification Techniques for Arrhythmia Patterns Using Convolutional Neural Networks and Internet of Things (IoT) Devices. IEEE Access 2022, 10, 87387–87403.

- Mladenov, V. AICAS—PAST, PRESENT, AND FUTURE. Electronics 2023, 12, 1483.

- Berggren, K.; Xia, Q.; Likharev, K.K.; Strukov, D.B.; Jiang, H.; Mikolajick, T.; Querlioz, D.; Salinga, M.; Erickson, J.R.; Pi, S.; et al. Roadmap on Emerging Hardware and Technology for Machine Learning. Nanotechnology 2021, 32, 012002.

- Miranda, E.; Suñé, J. Memristors for Neuromorphic Circuits and Artificial Intelligence Applications. Materials 2020, 13, 938.

- Sun, B.; Guo, T.; Zhou, G.; Ranjan, S.; Jiao, Y.; Wei, L.; Zhou, Y.N.; Wu, Y.A. Synaptic Devices Based Neuromorphic Computing Applications in Artificial Intelligence. Mater. Today Phys. 2021, 18, 100393.

- Kim, D.; Yu, C.; Xie, S.; Chen, Y.; Kim, J.-Y.; Kim, B.; Kulkarni, J.P.; Kim, T.T.-H. An Overview of Processing-in-Memory Circuits for Artificial Intelligence and Machine Learning. IEEE J. Emerg. Sel. Top. Circuits Syst. 2022, 12, 338–353.

- Ielmini, D.; Pedretti, G. Device and Circuit Architectures for In-Memory Computing. Adv. Intell. Syst. 2020, 2, 2000040.

- Kusyk, J.; Saeed, S.M.; Uyar, M.U. Survey on Quantum Circuit Compilation for Noisy Intermediate-Scale Quantum Computers: Artificial Intelligence to Heuristics. IEEE Trans. Quantum Eng. 2021, 2, 2501616.

- Mangini, S.; Tacchino, F.; Gerace, D.; Bajoni, D.; Macchiavello, C. Quantum Computing Models for Artificial Neural Networks. Europhys. Lett. 2021, 134, 10002.

- Norlander, A. Command in AICA-Intensive Operations. In Autonomous Intelligent Cyber Defense Agent (AICA) A Comprehensive Guide; Springer: Cham, Switzerland, 2023; pp. 311–339.

- Theron, P. Alternative Architectural Approaches. In Autonomous Intelligent Cyber Defense Agent (AICA) A Comprehensive Guide; Springer: Cham, Switzerland, 2023; pp. 17–46.

- Yayla, M.; Thomann, S.; Buschjager, S.; Morik, K.; Chen, J.-J.; Amrouch, H. Reliable Binarized Neural Networks on Unreliable Beyond Von-Neumann Architecture. IEEE Trans. Circuits Syst. I Regul. Pap. 2022, 69, 2516–2528.

- Coluccio, A.; Vacca, M.; Turvani, G. Logic-in-Memory Computation: Is It Worth It? A Binary Neural Network Case Study. J. Low Power Electron. Appl. 2020, 10, 7.

- Mack, J.; Purdy, R.; Rockowitz, K.; Inouye, M.; Richter, E.; Valancius, S.; Kumbhare, N.; Hassan, M.S.; Fair, K.; Mixter, J.; et al. RANC: Reconfigurable Architecture for Neuromorphic Computing. IEEE Trans. Comput.-Aided Des. Integr. Circuits Syst. 2021, 40, 2265–2278.

- Gebregiorgis, A.; Du Nguyen, H.A.; Yu, J.; Bishnoi, R.; Taouil, M.; Catthoor, F.; Hamdioui, S. A Survey on Memory-Centric Computer Architectures. ACM J. Emerg. Technol. Comput. Syst. 2022, 18, 1–50.

- Shanbhag, N.R.; Roy, S.K. Benchmarking In-Memory Computing Architectures. IEEE Open J. Solid-State Circuits Soc. 2022, 2, 288–300.

- Zhu, D.; Linke, N.M.; Benedetti, M.; Landsman, K.A.; Nguyen, N.H.; Alderete, C.H.; Perdomo-Ortiz, A.; Korda, N.; Garfoot, A.; Brecque, C.; et al. Training of Quantum Circuits on a Hybrid Quantum Computer. Sci. Adv. 2019, 5, eaaw9918.

- Marvania, D.B.; Parikh, D.S.; Patel, D.P. Comparative Performance of CMOS Active Inductor. In Proceedings of the International e-Conference on Intelligent Systems and Signal Processing; Springer: Singapore, 2022; pp. 391–401.

- Navaneetha, A.; Bikshalu, K. FinFET Based Comparison Analysis of Power and Delay of Adder Topologies. Mater. Today Proc. 2021, 46, 3723–3729.

- Mladenov, V. Application of Metal Oxide Memristor Models in Logic Gates. Electronics 2023, 12, 381.

- Yousefzadeh, A.; van Schaik, G.-J.; Tahghighi, M.; Detterer, P.; Traferro, S.; Hijdra, M.; Stuijt, J.; Corradi, F.; Sifalakis, M.; Konijnenburg, M. SENeCA: Scalable Energy-Efficient Neuromorphic Computer Architecture. In Proceedings of the 2022 IEEE 4th International Conference on Artificial Intelligence Circuits and Systems (AICAS), Inchon, Republic of Korea, 13–15 June 2022; IEEE: New York, NY, USA, 2022; pp. 371–374.

- Neuman, S.M.; Plancher, B.; Duisterhof, B.P.; Krishnan, S.; Banbury, C.; Mazumder, M.; Prakash, S.; Jabbour, J.; Faust, A.; de Croon, G.C.H.E.; et al. Tiny Robot Learning: Challenges and Directions for Machine Learning in Resource-Constrained Robots. In Proceedings of the 2022 IEEE 4th International Conference on Artificial Intelligence Circuits and Systems (AICAS), Inchon, Republic of Korea, 13–15 June 2022; IEEE: New York, NY, USA, 2022; pp. 296–299.

- Lin, W.-F.; Tsai, D.-Y.; Tang, L.; Hsieh, C.-T.; Chou, C.-Y.; Chang, P.-H.; Hsu, L. ONNC: A Compilation Framework Connecting ONNX to Proprietary Deep Learning Accelerators. In Proceedings of the 2019 IEEE International Conference on Artificial Intelligence Circuits and Systems (AICAS), Hsinchu, Taiwan, 18–20 March 2019; IEEE: New York, NY, USA, 2019; pp. 214–218.

- Huang, J.; Kelber, F.; Vogginger, B.; Wu, B.; Kreutz, F.; Gerhards, P.; Scholz, D.; Knobloch, K.; Mayr, C.G. Efficient Algorithms for Accelerating Spiking Neural Networks on MAC Array of SpiNNaker 2. In Proceedings of the 2023 IEEE 5th International Conference on Artificial Intelligence Circuits and Systems (AICAS), Hangzhou, China, 11–13 June 2023; IEEE: New York, NY, USA, 2023; pp. 1–5.

- Theron, P.; Kott, A. When Autonomous Intelligent Goodware Will Fight Autonomous Intelligent Malware: A Possible Future of Cyber Defense. In Proceedings of the MILCOM 2019—2019 IEEE Military Communications Conference (MILCOM), Norfolk, VA, USA, 12–14 November 2019; IEEE: Washington, DC, USA, 2019; pp. 1–7.

- Wang, H.; Cao, S.; Xu, S. A Real-Time Face Recognition System by Efficient Hardware-Software Co-Design on FPGA SoCs. In Proceedings of the 2021 IEEE 3rd International Conference on Artificial Intelligence Circuits and Systems (AICAS), Washington, DC, USA, 6–9 June 2021; IEEE: New York, NY, USA, 2021; pp. 1–2.

- Jiang, Z.; Yang, K.; Ma, Y.; Fisher, N.; Audsley, N.; Dong, Z. I/O-GUARD: Hardware/Software Co-Design for I/O Virtualization with Guaranteed Real-Time Performance. In Proceedings of the 2021 58th ACM/IEEE Design Automation Conference (DAC), San Francisco, CA, USA, 5–9 December 2021; IEEE: New York, NY, USA, 2021; pp. 1159–1164.

- Jayakodi, N.K.; Doppa, J.R.; Pande, P.P. A General Hardware and Software Co-Design Framework for Energy-Efficient Edge AI. In Proceedings of the 2021 IEEE/ACM International Conference On Computer Aided Design (ICCAD), Munich, Germany, 1–4 November 2021; IEEE: New York, NY, USA, 2021; pp. 1–7.

- Dubey, A.; Cammarota, R.; Varna, A.; Kumar, R.; Aysu, A. Hardware-Software Co-Design for Side-Channel Protected Neural Network Inference. In Proceedings of the 2023 IEEE International Symposium on Hardware Oriented Security and Trust (HOST), San Jose, CA, USA, 1–4 May 2023; IEEE: New York, NY, USA, 2023; pp. 155–166.

- Huang, P.; Wang, C.; Liu, W.; Qiao, F.; Lombardi, F. A Hardware/Software Co-Design Methodology for Adaptive Approximate Computing in Clustering and ANN Learning. IEEE Open J. Comput. Soc. 2021, 2, 38–52.

- Wang, J.; Chen, Z.; Chen, Y.; Xu, Y.; Wang, T.; Yu, Y.; Narayanan, V.; George, S.; Yang, H.; Li, X. WeightLock: A Mixed-Grained Weight Encryption Approach Using Local Decrypting Units for Ciphertext Computing in DNN Accelerators. In Proceedings of the 2023 IEEE 5th International Conference on Artificial Intelligence Circuits and Systems (AICAS), Hangzhou, China, 11–13 June 2023; IEEE: New York, NY, USA, 2023; pp. 1–5.

- Marković, D.; Mizrahi, A.; Querlioz, D.; Grollier, J. Physics for Neuromorphic Computing. Nat. Rev. Phys. 2020, 2, 499–510.

- Roy, K.; Jaiswal, A.; Panda, P. Towards Spike-Based Machine Intelligence with Neuromorphic Computing. Nature 2019, 575, 607–617.

- Davies, M.; Wild, A.; Orchard, G.; Sandamirskaya, Y.; Guerra, G.A.F.; Joshi, P.; Plank, P.; Risbud, S.R. Advancing Neuromorphic Computing With Loihi: A Survey of Results and Outlook. Proc. IEEE 2021, 109, 911–934.

- Cho, S.W.; Kwon, S.M.; Kim, Y.-H.; Park, S.K. Recent Progress in Transistor-Based Optoelectronic Synapses: From Neuromorphic Computing to Artificial Sensory System. Adv. Intell. Syst. 2021, 3, 2000162.

- Ha, M.; Sim, J.; Moon, D.; Rhee, M.; Choi, J.; Koh, B.; Lim, E.; Park, K. CMS: A Computational Memory Solution for High-Performance and Power-Efficient Recommendation System. In Proceedings of the 2022 IEEE 4th International Conference on Artificial Intelligence Circuits and Systems (AICAS), Inchon, Republic of Korea, 13–15 June 2022; IEEE: New York, NY, USA, 2022; pp. 491–494.

- Srinivasan, G.; Lee, C.; Sengupta, A.; Panda, P.; Sarwar, S.S.; Roy, K. Training Deep Spiking Neural Networks for Energy-Efficient Neuromorphic Computing. In Proceedings of the 2020 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Barcelona, Spain, 4–8 May 2020; IEEE: New York, NY, USA, 2020; pp. 8549–8553.

- Rathi, N.; Chakraborty, I.; Kosta, A.; Sengupta, A.; Ankit, A.; Panda, P.; Roy, K. Exploring Neuromorphic Computing Based on Spiking Neural Networks: Algorithms to Hardware. ACM Comput. Surv. 2023, 55, 243.

- Li, Y.; Xuan, Z.; Lu, J.; Wang, Z.; Zhang, X.; Wu, Z.; Wang, Y.; Xu, H.; Dou, C.; Kang, Y.; et al. One Transistor One Electrolyte-Gated Transistor Based Spiking Neural Network for Power-Efficient Neuromorphic Computing System. Adv. Funct. Mater. 2021, 31, 2100042.

- van Doremaele, E.R.W.; Ji, X.; Rivnay, J.; van de Burgt, Y. A Retrainable Neuromorphic Biosensor for On-Chip Learning and Classification. Nat. Electron. 2023, 6, 765–770.

- Baumgartner, S.; Renner, A.; Kreiser, R.; Liang, D.; Indiveri, G.; Sandamirskaya, Y. Visual Pattern Recognition with on On-Chip Learning: Towards a Fully Neuromorphic Approach. In Proceedings of the 2020 IEEE International Symposium on Circuits and Systems (ISCAS), Seville, Spain, 12–14 October 2020; IEEE: New York, NY, USA, 2020; pp. 1–5.

- Yoo, J.; Shoaran, M. Neural Interface Systems with On-Device Computing: Machine Learning and Neuromorphic Architectures. Curr. Opin. Biotechnol. 2021, 72, 95–101.

- Hsu, K.-C.; Tseng, H.-W. Accelerating Applications Using Edge Tensor Processing Units. In Proceedings of the 2021 International Conference for High Performance Computing, Networking, Storage and Analysis, St. Louis, MO, USA, 14–19 November 2021; ACM: New York, NY, USA, 2021; pp. 1–14.

- Kochura, Y.; Gordienko, Y.; Taran, V.; Gordienko, N.; Rokovyi, A.; Alienin, O.; Stirenko, S. Batch Size Influence on Performance of Graphic and Tensor Processing Units During Training and Inference Phases. In Advances in Computer Science for Engineering and Education II; Springer: Cham, Switzerland, 2020; pp. 658–668.

- Adjoua, O.; Lagardère, L.; Jolly, L.-H.; Durocher, A.; Very, T.; Dupays, I.; Wang, Z.; Inizan, T.J.; Célerse, F.; Ren, P.; et al. Tinker-HP: Accelerating Molecular Dynamics Simulations of Large Complex Systems with Advanced Point Dipole Polarizable Force Fields Using GPUs and Multi-GPU Systems. J. Chem. Theory Comput. 2021, 17, 2034–2053.

- Seritan, S.; Bannwarth, C.; Fales, B.S.; Hohenstein, E.G.; Isborn, C.M.; Kokkila-Schumacher, S.I.L.; Li, X.; Liu, F.; Luehr, N.; Snyder, J.W.; et al. A Graphical Processing Unit Electronic Structure Package for Ab Initio Molecular Dynamics. WIREs Comput. Mol. Sci. 2021, 11, e1494.

- Schölkopf, B. Causality for machine learning. In Probabilistic and Causal Inference: The Works of Judea Pearl; Association for Computing Machinery: New York, NY, USA, 2022; pp. 765–804.

- Ishida, K.; Byun, I.; Nagaoka, I.; Fukumitsu, K.; Tanaka, M.; Kawakami, S.; Tanimoto, T.; Ono, T.; Kim, J.; Inoue, K. SuperNPU: An Extremely Fast Neural Processing Unit Using Superconducting Logic Devices. In Proceedings of the 2020 53rd Annual IEEE/ACM International Symposium on Microarchitecture (MICRO), Athens, Greece, 17–21 October 2020; IEEE: New York, NY, USA, 2020; pp. 58–72.

- Lee, K.J. Architecture of Neural Processing Unit for Deep Neural Networks. Adv. Comput. 2021, 122, 217–245.

- Fang, Q.; Yan, S. Graphics Processing Unit-Accelerated Mesh-Based Monte Carlo Photon Transport Simulations. J. Biomed. Opt. 2019, 24, 1.

- Kussmann, J.; Laqua, H.; Ochsenfeld, C. Highly Efficient Resolution-of-Identity Density Functional Theory Calculations on Central and Graphics Processing Units. J. Chem. Theory Comput. 2021, 17, 1512–1521.

- Boeken, T.; Feydy, J.; Lecler, A.; Soyer, P.; Feydy, A.; Barat, M.; Duron, L. Artificial Intelligence in Diagnostic and Interventional Radiology: Where Are We Now? Diagn. Interv. Imaging 2023, 104, 1–5.

- Raschka, S.; Patterson, J.; Nolet, C. Machine Learning in Python: Main Developments and Technology Trends in Data Science, Machine Learning, and Artificial Intelligence. Information 2020, 11, 193.

- Sharma, S.; Krishna, C.R.; Kumar, R. Android Ransomware Detection Using Machine Learning Techniques: A Comparative Analysis on GPU and CPU. In Proceedings of the 2020 21st International Arab Conference on Information Technology (ACIT), Giza, Egypt, 28–30 November 2020; IEEE: New York, NY, USA, 2020; pp. 1–6.

- Reuther, A.; Michaleas, P.; Jones, M.; Gadepally, V.; Samsi, S.; Kepner, J. Survey and Benchmarking of Machine Learning Accelerators. In Proceedings of the 2019 IEEE High Performance Extreme Computing Conference (HPEC), Waltham, MA, USA, 24–26 September 2019; IEEE: New York, NY, USA, 2019; pp. 1–9.

- Patel, P.; Thakkar, A. The Upsurge of Deep Learning for Computer Vision Applications. Int. J. Electr. Comput. Eng. 2020, 10, 538.

- Zhang, Y.; Yu, J.; Chen, Y.; Yang, W.; Zhang, W.; He, Y. Real-Time Strawberry Detection Using Deep Neural Networks on Embedded System (Rtsd-Net): An Edge AI Application. Comput. Electron. Agric. 2022, 192, 106586.

- Pandey, P.; Basu, P.; Chakraborty, K.; Roy, S. GreenTPU. In Proceedings of the 56th Annual Design Automation Conference 2019, Las Vegas, NV, USA, 2–6 June 2019; ACM: New York, NY, USA, 2019; pp. 1–6.

- You, Y.; Zhang, Z.; Hsieh, C.-J.; Demmel, J.; Keutzer, K. Fast Deep Neural Network Training on Distributed Systems and Cloud TPUs. IEEE Trans. Parallel Distrib. Syst. 2019, 30, 2449–2462.

- Ravikumar, A.; Sriraman, H.; Sai Saketh, P.M.; Lokesh, S.; Karanam, A. Effect of Neural Network Structure in Accelerating Performance and Accuracy of a Convolutional Neural Network with GPU/TPU for Image Analytics. PeerJ Comput. Sci. 2022, 8, e909.

- Shahid, A.; Mushtaq, M. A Survey Comparing Specialized Hardware and Evolution in TPUs for Neural Networks. In Proceedings of the 2020 IEEE 23rd International Multitopic Conference (INMIC), Bahawalpur, Pakistan, 5–7 November 2020; IEEE: New York, NY, USA, 2020; pp. 1–6.

- Ji, Y.; Wang, Q.; Li, X.; Liu, J. A Survey on Tensor Techniques and Applications in Machine Learning. IEEE Access 2019, 7, 162950–162990.

- Sharma, N.; Sharma, R.; Jindal, N. Machine Learning and Deep Learning Applications-A Vision. Glob. Transit. Proc. 2021, 2, 24–28.

- Jouppi, N.; Kurian, G.; Li, S.; Ma, P.; Nagarajan, R.; Nai, L.; Patil, N.; Subramanian, S.; Swing, A.; Towles, B.; et al. TPU v4: An Optically Reconfigurable Supercomputer for Machine Learning with Hardware Support for Embeddings. In Proceedings of the 50th Annual International Symposium on Computer Architecture, Orlando, FL, USA, 17–21 June 2023; ACM: New York, NY, USA, 2023; pp. 1–14.

- Mrozek, D.; Górny, R.; Wachowicz, A.; Małysiak-Mrozek, B. Edge-Based Detection of Varroosis in Beehives with IoT Devices with Embedded and TPU-Accelerated Machine Learning. Appl. Sci. 2021, 11, 11078.

- Alibabaei, K.; Assunção, E.; Gaspar, P.D.; Soares, V.N.G.J.; Caldeira, J.M.L.P. Real-Time Detection of Vine Trunk for Robot Localization Using Deep Learning Models Developed for Edge TPU Devices. Future Internet 2022, 14, 199. [Google Scholar] [CrossRef]

- Oh, Y.H.; Kim, S.; Jin, Y.; Son, S.; Bae, J.; Lee, J.; Park, Y.; Kim, D.U.; Ham, T.J.; Lee, J.W. Layerweaver: Maximizing Resource Utilization of Neural Processing Units via Layer-Wise Scheduling. In Proceedings of the 2021 IEEE International Symposium on High-Performance Computer Architecture (HPCA), Seoul, Republic of Korea, 27 February–3 March 2021; IEEE: New York, NY, USA, 2021; pp. 584–597. [Google Scholar]

- Choi, Y.; Rhu, M. PREMA: A Predictive Multi-Task Scheduling Algorithm For Preemptible Neural Processing Units. In Proceedings of the 2020 IEEE International Symposium on High Performance Computer Architecture (HPCA), San Diego, CA, USA, 22–26 February 2020; IEEE: New York, NY, USA, 2020; pp. 220–233. [Google Scholar]

- Jeon, W.; Lee, J.; Kang, D.; Kal, H.; Ro, W.W. PIMCaffe: Functional Evaluation of a Machine Learning Framework for In-Memory Neural Processing Unit. IEEE Access 2021, 9, 96629–96640. [Google Scholar] [CrossRef]

- Tan, T.; Cao, G. Deep Learning on Mobile Devices Through Neural Processing Units and Edge Computing. In Proceedings of the IEEE INFOCOM 2022—IEEE Conference on Computer Communications, New York, NY, USA, 2–5 May 2022; IEEE: New York, NY, USA, 2022; pp. 1209–1218. [Google Scholar]

- Lee, S.; Kim, J.; Na, S.; Park, J.; Huh, J. TNPU: Supporting Trusted Execution with Tree-Less Integrity Protection for Neural Processing Unit. In Proceedings of the 2022 IEEE International Symposium on High-Performance Computer Architecture (HPCA), Seoul, Republic of Korea, 2–6 April 2022; IEEE: New York, NY, USA, 2022; pp. 229–243. [Google Scholar]

- Park, J.-S.; Park, C.; Kwon, S.; Jeon, T.; Kang, Y.; Lee, H.; Lee, D.; Kim, J.; Kim, H.-S.; Lee, Y.; et al. A Multi-Mode 8k-MAC HW-Utilization-Aware Neural Processing Unit With a Unified Multi-Precision Datapath in 4-Nm Flagship Mobile SoC. IEEE J. Solid-State Circuits 2023, 58, 189–202. [Google Scholar] [CrossRef]

- Verhelst, M.; Murmann, B. Machine Learning at the Edge. In NANO-CHIPS 2030: On-Chip AI for an Efficient Data-Driven World; Springer: Cham, Switzerland, 2020; pp. 293–322. [Google Scholar] [CrossRef]

- Jobst, M.; Partzsch, J.; Liu, C.; Guo, L.; Walter, D.; Rehman, S.-U.; Scholze, S.; Hoppner, S.; Mayr, C. ZEN: A Flexible Energy-Efficient Hardware Classifier Exploiting Temporal Sparsity in ECG Data. In Proceedings of the 2022 IEEE 4th International Conference on Artificial Intelligence Circuits and Systems (AICAS), Inchon, Republic of Korea, 13–15 June 2022; IEEE: New York, NY, USA, 2022; pp. 214–217. [Google Scholar]

- Hu, J.; Leow, C.S.; Goh, W.L.; Gao, Y. Energy Efficient Software-Hardware Co-Design of Quantized Recurrent Convolutional Neural Network for Continuous Cardiac Monitoring. In Proceedings of the 2023 IEEE 5th International Conference on Artificial Intelligence Circuits and Systems (AICAS), Hangzhou, China, 11–13 June 2023; IEEE: New York, NY, USA, 2023; pp. 1–5. [Google Scholar]

- Wan, Z.; Zhang, Y.; Raychowdhury, A.; Yu, B.; Zhang, Y.; Liu, S. An Energy-Efficient Quad-Camera Visual System for Autonomous Machines on FPGA Platform. In Proceedings of the 2021 IEEE 3rd International Conference on Artificial Intelligence Circuits and Systems (AICAS), Washington, DC, USA, 6–9 June 2021; IEEE: New York, NY, USA, 2021; pp. 1–4. [Google Scholar]

- Zhou, S.; Chen, X.; Kim, K.; Liu, S.-C. High-Accuracy and Energy-Efficient Acoustic Inference Using Hardware-Aware Training and a 0.34nW/Ch Full-Wave Rectifier. In Proceedings of the 2023 IEEE 5th International Conference on Artificial Intelligence Circuits and Systems (AICAS), Hangzhou, China, 11–13 June 2023; IEEE: New York, NY, USA, 2023; pp. 1–5. [Google Scholar]

- Zimmer, B.; Venkatesan, R.; Shao, Y.S.; Clemons, J.; Fojtik, M.; Jiang, N.; Keller, B.; Klinefelter, A.; Pinckney, N.; Raina, P.; et al. A 0.32–128 TOPS, Scalable Multi-Chip-Module-Based Deep Neural Network Inference Accelerator With Ground-Referenced Signaling in 16 Nm. IEEE J. Solid-State Circuits 2020, 55, 920–932. [Google Scholar] [CrossRef]

- Hao, C.; Chen, D. Software/Hardware Co-Design for Multi-Modal Multi-Task Learning in Autonomous Systems. In Proceedings of the 2021 IEEE 3rd International Conference on Artificial Intelligence Circuits and Systems (AICAS), Washington, DC, USA, 6–9 June 2021; IEEE: New York, NY, USA, 2021; pp. 1–5.

- Wu, Y.; Ding, B.; Xu, Q.; Chen, S. Fault-Tolerant-Driven Clustering for Large Scale Neuromorphic Computing Systems. In Proceedings of the 2020 2nd IEEE International Conference on Artificial Intelligence Circuits and Systems (AICAS), Genova, Italy, 31 August 2020–2 September 2020; IEEE: New York, NY, USA, 2020; pp. 238–242.

- Li, X.; Yan, G.; Liu, C. Fault-Tolerant Deep Learning Processors. In Built-in Fault-Tolerant Computing Paradigm for Resilient Large-Scale Chip Design; Springer Nature: Singapore, 2023; pp. 243–302.

- Gao, Z.; Zhang, H.; Wei, X.; Xiao, J.; Zeng, S.; Ge, G.; Wang, Y.; Reviriego, P. Ensemble of Pruned Networks for Reliable Classifiers. In Proceedings of the 2021 IEEE 3rd International Conference on Artificial Intelligence Circuits and Systems (AICAS), Washington, DC, USA, 6–9 June 2021; IEEE: New York, NY, USA, 2021; pp. 1–4.

- Liu, C.; Chu, C.; Xu, D.; Wang, Y.; Wang, Q.; Li, H.; Li, X.; Cheng, K.-T. HyCA: A Hybrid Computing Architecture for Fault-Tolerant Deep Learning. IEEE Trans. Comput.-Aided Des. Integr. Circuits Syst. 2022, 41, 3400–3413.

- Panayides, A.S.; Amini, A.; Filipovic, N.D.; Sharma, A.; Tsaftaris, S.A.; Young, A.; Foran, D.; Do, N.; Golemati, S.; Kurc, T.; et al. AI in Medical Imaging Informatics: Current Challenges and Future Directions. IEEE J. Biomed. Health Inform. 2020, 24, 1837–1857.

- Ma, Y.; Wang, Z.; Yang, H.; Yang, L. Artificial Intelligence Applications in the Development of Autonomous Vehicles: A Survey. IEEE/CAA J. Autom. Sin. 2020, 7, 315–329.

- Pallathadka, H.; Ramirez-Asis, E.H.; Loli-Poma, T.P.; Kaliyaperumal, K.; Ventayen, R.J.M.; Naved, M. Applications of Artificial Intelligence in Business Management, e-Commerce and Finance. Mater. Today Proc. 2023, 80, 2610–2613.

- Gupta, S.; Modgil, S.; Lee, C.-K.; Sivarajah, U. The Future Is Yesterday: Use of AI-Driven Facial Recognition to Enhance Value in the Travel and Tourism Industry. Inf. Syst. Front. 2023, 25, 1179–1195.

- Yang, L.W.Y.; Ng, W.Y.; Foo, L.L.; Liu, Y.; Yan, M.; Lei, X.; Zhang, X.; Ting, D.S.W. Deep Learning-Based Natural Language Processing in Ophthalmology: Applications, Challenges and Future Directions. Curr. Opin. Ophthalmol. 2021, 32, 397–405.

- Trivedi, K.S. Fundamentals of Natural Language Processing. In Microsoft Azure AI Fundamentals Certification Companion: Guide to Prepare for the AI-900 Exam; Apress: Berkeley, CA, USA, 2023; pp. 119–180.

- Mah, P.M.; Skalna, I.; Muzam, J. Natural Language Processing and Artificial Intelligence for Enterprise Management in the Era of Industry 4.0. Appl. Sci. 2022, 12, 9207.

- Aldunate, Á.; Maldonado, S.; Vairetti, C.; Armelini, G. Understanding Customer Satisfaction via Deep Learning and Natural Language Processing. Expert Syst. Appl. 2022, 209, 118309.

- Johnson, K.B.; Wei, W.; Weeraratne, D.; Frisse, M.E.; Misulis, K.; Rhee, K.; Zhao, J.; Snowdon, J.L. Precision Medicine, AI, and the Future of Personalized Health Care. Clin. Transl. Sci. 2021, 14, 86–93.

- Dalzochio, J.; Kunst, R.; Pignaton, E.; Binotto, A.; Sanyal, S.; Favilla, J.; Barbosa, J. Machine Learning and Reasoning for Predictive Maintenance in Industry 4.0: Current Status and Challenges. Comput. Ind. 2020, 123, 103298.

- Haenlein, M.; Kaplan, A.; Tan, C.-W.; Zhang, P. Artificial Intelligence (AI) and Management Analytics. J. Manag. Anal. 2019, 6, 341–343.

- Rahmani, A.M.; Rezazadeh, B.; Haghparast, M.; Chang, W.-C.; Ting, S.G. Applications of Artificial Intelligence in the Economy, Including Applications in Stock Trading, Market Analysis, and Risk Management. IEEE Access 2023, 11, 80769–80793.

- Rasouli, J.J.; Shao, J.; Neifert, S.; Gibbs, W.N.; Habboub, G.; Steinmetz, M.P.; Benzel, E.; Mroz, T.E. Artificial Intelligence and Robotics in Spine Surgery. Global Spine J. 2021, 11, 556–564.

- Tambare, P.; Meshram, C.; Lee, C.-C.; Ramteke, R.J.; Imoize, A.L. Performance Measurement System and Quality Management in Data-Driven Industry 4.0: A Review. Sensors 2021, 22, 224.

- Pistrui, B.; Kostyal, D.; Matyusz, Z. Dynamic Acceleration: Service Robots in Retail. Cogent Bus. Manag. 2023, 10, 2289204.

- Villar, A.S.; Khan, N. Robotic Process Automation in Banking Industry: A Case Study on Deutsche Bank. J. Bank. Financ. Technol. 2021, 5, 71–86.

- Barbuto, V.; Savaglio, C.; Chen, M.; Fortino, G. Disclosing Edge Intelligence: A Systematic Meta-Survey. Big Data Cogn. Comput. 2023, 7, 44.

- Wen, S.-C.; Huang, P.-T. Design Exploration of An Energy-Efficient Acceleration System for CNNs on Low-Cost Resource-Constraint SoC-FPGAs. In Proceedings of the 2022 IEEE 4th International Conference on Artificial Intelligence Circuits and Systems (AICAS), Inchon, Republic of Korea, 13–15 June 2022; IEEE: New York, NY, USA, 2022; pp. 234–237.

- Abbasi, M.; Cardoso, F.; Silva, J.; Martins, P. Scalable and Energy-Efficient Deep Learning for Distributed AIoT Applications Using Modular Cognitive IoT Hardware. In International Conference on Disruptive Technologies, Tech Ethics and Artificial Intelligence; Springer: Cham, Switzerland, 2023; pp. 85–96.

- Wan, Z.; Lele, A.; Yu, B.; Liu, S.; Wang, Y.; Reddi, V.J.; Hao, C.; Raychowdhury, A. Robotic Computing on FPGAs: Current Progress, Research Challenges, and Opportunities. In Proceedings of the 2022 IEEE 4th International Conference on Artificial Intelligence Circuits and Systems (AICAS), Inchon, Republic of Korea, 13–15 June 2022; IEEE: New York, NY, USA, 2022; pp. 291–295.

- Gruel, A.; Vitale, A.; Martinet, J.; Magno, M. Neuromorphic Event-Based Spatio-Temporal Attention Using Adaptive Mechanisms. In Proceedings of the 2022 IEEE 4th International Conference on Artificial Intelligence Circuits and Systems (AICAS), Inchon, Republic of Korea, 13–15 June 2022; IEEE: New York, NY, USA, 2022; pp. 379–382.

- Sengupta, J.; Kubendran, R.; Neftci, E.; Andreou, A. High-Speed, Real-Time, Spike-Based Object Tracking and Path Prediction on Google Edge TPU. In Proceedings of the 2020 2nd IEEE International Conference on Artificial Intelligence Circuits and Systems (AICAS), Genova, Italy, 31 August 2020–2 September 2020; IEEE: New York, NY, USA, 2020; pp. 134–135.

- Qin, M.; Liu, T.; Hou, B.; Gao, Y.; Yao, Y.; Sun, H. A Low-Latency RDP-CORDIC Algorithm for Real-Time Signal Processing of Edge Computing Devices in Smart Grid Cyber-Physical Systems. Sensors 2022, 22, 7489.

- Zou, Z.; Jin, Y.; Nevalainen, P.; Huan, Y.; Heikkonen, J.; Westerlund, T. Edge and Fog Computing Enabled AI for IoT-An Overview. In Proceedings of the 2019 IEEE International Conference on Artificial Intelligence Circuits and Systems (AICAS), Hsinchu, Taiwan, 18–20 March 2019; IEEE: New York, NY, USA, 2019; pp. 51–56.

- Chuang, Y.-T.; Hung, Y.-T. A Real-Time and ACO-Based Offloading Algorithm in Edge Computing. J. Parallel Distrib. Comput. 2023, 179, 104703.

- Lee, J.; Kim, C.; Han, D.; Kim, S.; Kim, S.; Yoo, H.-J. Energy-Efficient Deep Reinforcement Learning Accelerator Designs for Mobile Autonomous Systems. In Proceedings of the 2021 IEEE 3rd International Conference on Artificial Intelligence Circuits and Systems (AICAS), Washington, DC, USA, 6–9 June 2021; IEEE: New York, NY, USA, 2021; pp. 1–4.

- Lee, J.; Jo, W.; Park, S.-W.; Yoo, H.-J. Low-Power Autonomous Adaptation System with Deep Reinforcement Learning. In Proceedings of the 2022 IEEE 4th International Conference on Artificial Intelligence Circuits and Systems (AICAS), Inchon, Republic of Korea, 13–15 June 2022; IEEE: New York, NY, USA, 2022; pp. 300–303.

- Faraone, A.; Delgado-Gonzalo, R. Convolutional-Recurrent Neural Networks on Low-Power Wearable Platforms for Cardiac Arrhythmia Detection. In Proceedings of the 2020 2nd IEEE International Conference on Artificial Intelligence Circuits and Systems (AICAS), Genova, Italy, 31 August 2020–2 September 2020; IEEE: New York, NY, USA, 2020; pp. 153–157.

- Dave, S.; Dave, A.; Radhakrishnan, S.; Das, J.; Dave, S. Biosensors for Healthcare: An Artificial Intelligence Approach. In Biosensors for Emerging and Re-Emerging Infectious Diseases; Elsevier: Amsterdam, The Netherlands, 2022; pp. 365–383.

- Li, J.; Liu, J.; Hu, X.; Zhang, Y.; Yu, G.; Qian, S.; Mao, W.; Du, L.; Li, Y.; Du, Y. Grand Challenge on Software and Hardware Co-Optimization for E-Commerce Recommendation System. In Proceedings of the 2023 IEEE 5th International Conference on Artificial Intelligence Circuits and Systems (AICAS), Hangzhou, China, 11–13 June 2023; IEEE: New York, NY, USA, 2023; pp. 1–5.

- de Moura, R.F.; Carro, L. Scalable and Energy-Efficient NN Acceleration with GPU-ReRAM Architecture. In International Symposium on Applied Reconfigurable Computing; Springer: Cham, Switzerland, 2023; pp. 230–244.

- Zanghieri, M.; Benatti, S.; Conti, F.; Burrello, A.; Benini, L. Temporal Variability Analysis in SEMG Hand Grasp Recognition Using Temporal Convolutional Networks. In Proceedings of the 2020 2nd IEEE International Conference on Artificial Intelligence Circuits and Systems (AICAS), Genova, Italy, 31 August 2020–2 September 2020; IEEE: New York, NY, USA, 2020; pp. 228–232.

- Sakai, Y.; Pedroni, B.U.; Joshi, S.; Akinin, A.; Cauwenberghs, G. DropOut and DropConnect for Reliable Neuromorphic Inference under Energy and Bandwidth Constraints in Network Connectivity. In Proceedings of the 2019 IEEE International Conference on Artificial Intelligence Circuits and Systems (AICAS), Hsinchu, Taiwan, 18–20 March 2019; IEEE: New York, NY, USA, 2019; pp. 76–80.

- Liang, D.; Kreiser, R.; Nielsen, C.; Qiao, N.; Sandamirskaya, Y.; Indiveri, G. Robust Learning and Recognition of Visual Patterns in Neuromorphic Electronic Agents. In Proceedings of the 2019 IEEE International Conference on Artificial Intelligence Circuits and Systems (AICAS), Hsinchu, Taiwan, 18–20 March 2019; IEEE: New York, NY, USA, 2019; pp. 71–75.

- Rüegg, T.; Giordano, M.; Magno, M. KP2Dtiny: Quantized Neural Keypoint Detection and Description on the Edge. In Proceedings of the 2023 IEEE 5th International Conference on Artificial Intelligence Circuits and Systems (AICAS), Hangzhou, China, 11–13 June 2023; IEEE: New York, NY, USA, 2023; pp. 1–5.

- Yoon, M.; Choi, J. Architecture-Aware Optimization of Layer Fusion for Latency-Optimal CNN Inference. In Proceedings of the 2023 IEEE 5th International Conference on Artificial Intelligence Circuits and Systems (AICAS), Hangzhou, China, 11–13 June 2023; IEEE: New York, NY, USA, 2023; pp. 1–4.

- Gill, S.S.; Xu, M.; Ottaviani, C.; Patros, P.; Bahsoon, R.; Shaghaghi, A.; Golec, M.; Stankovski, V.; Wu, H.; Abraham, A.; et al. AI for next Generation Computing: Emerging Trends and Future Directions. Internet Things 2022, 19, 100514.

- Rasch, M.J.; Moreda, D.; Gokmen, T.; Le Gallo, M.; Carta, F.; Goldberg, C.; El Maghraoui, K.; Sebastian, A.; Narayanan, V. A Flexible and Fast PyTorch Toolkit for Simulating Training and Inference on Analog Crossbar Arrays. In Proceedings of the 2021 IEEE 3rd International Conference on Artificial Intelligence Circuits and Systems (AICAS), Washington, DC, USA, 6–9 June 2021; IEEE: New York, NY, USA, 2021; pp. 1–4.

- Zanotti, T.; Puglisi, F.M.; Pavan, P. Smart Logic-in-Memory Architecture For Ultra-Low Power Large Fan-In Operations. In Proceedings of the 2020 2nd IEEE International Conference on Artificial Intelligence Circuits and Systems (AICAS), Genova, Italy, 31 August 2020–2 September 2020; IEEE: New York, NY, USA, 2020; pp. 31–35.

- Yang, J.; Li, N.; Chen, Y.-H.; Sawan, M. Towards Intelligent Noninvasive Closed-Loop Neuromodulation Systems. In Proceedings of the 2022 IEEE 4th International Conference on Artificial Intelligence Circuits and Systems (AICAS), Inchon, Republic of Korea, 13–15 June 2022; IEEE: New York, NY, USA, 2022; pp. 194–197.

- Kott, A. Autonomous Intelligent Cyber Defense Agent (AICA): A Comprehensive Guide; Springer Nature: Cham, Switzerland, 2023; Volume 87, ISBN 3031292693.

- Sun, Q.; Wang, Q.; Wang, X.; Ji, X.; Sang, S.; Shao, S.; Zhao, Y.; Xiang, Y.; Xue, Y.; Li, J.; et al. Prevalence and Cardiovascular Risk Factors of Asymptomatic Intracranial Arterial Stenosis: The Kongcun Town Study in Shandong, China. Eur. J. Neurol. 2020, 27, 729–735.

- Caballero-Rico, F.C.; Roque-Hernández, R.V.; de la Garza Cano, R.; Arvizu-Sánchez, E. Challenges for the Integrated Management of Priority Areas for Conservation in Tamaulipas, México. Sustainability 2022, 14, 494.

| AI Application | Healthcare Impact | Automotive Impact | Retail Impact | Finance Impact |
|---|---|---|---|---|
| Image Recognition | Diagnostic Imaging, Patient Data Analysis [106] | Autonomous Driving, Vehicle Inspection [107] | Customer Behavior Analysis, Inventory Management [108] | Fraud Detection, Customer Identification [109] |
| Natural Language Processing (NLP) | Patient Interaction, Clinical Documentation [110] | Voice Commands, In-Car Assistance [111] | Chatbots, Customer Service [112] | Sentiment Analysis, Automated Customer Support [113] |
| Predictive Analytics | Disease Prediction, Treatment Personalization [114] | Predictive Maintenance, Design Optimization [115] | Sales Forecasting, Stock Optimization [116] | Risk Assessment, Algorithmic Trading [117] |
| Robotics | Surgical Assistance, Patient Care Robotics [118] | Manufacturing Automation, Quality Control [119] | Warehouse Automation, In-Store Robotics [120] | Process Automation, Compliance Monitoring [121] |

| Institution | Location | Contributions |
|---|---|---|
| Massachusetts Institute of Technology (MIT) | United States | Pioneering work in neural networks and cognitive science |
| Stanford University | United States | Research in machine learning algorithms and robotics |
| University of California Berkeley | United States | Advancements in computer vision and deep learning |
| Tsinghua University | China | Innovations in AI chip design and quantum computing |
| ETH Zurich | Switzerland | Breakthroughs in machine learning and AI ethics |
| University of Oxford | United Kingdom | Development of AI in healthcare and ethical AI |
| National University of Singapore (NUS) | Singapore | Leading research in AI and computer engineering |
| Technical University of Munich (TUM) | Germany | Research in artificial intelligence and robotics |
| University of Tokyo | Japan | Advancements in robotics and computer vision |
| Indian Institute of Technology (IIT) | India | Focus on machine learning and AI applications in healthcare |
| University of Toronto | Canada | Notable research in deep learning and neural networks |
| Korea Advanced Institute of Science and Technology (KAIST) | South Korea | Engaged in research in robotics and machine intelligence |
| Imperial College London | United Kingdom | Significant contributions in AI and machine learning |
| École Polytechnique Fédérale de Lausanne (EPFL) | Switzerland | Work in machine learning and AI ethics |
| Australian National University (ANU) | Australia | Engages in AI research, especially in machine learning and AI ethics |
| University of São Paulo | Brazil | Focus on computational intelligence and data science |
| Sorbonne University | France | Research in artificial intelligence and computational science |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Miller, T.; Durlik, I.; Kostecka, E.; Mitan-Zalewska, P.; Sokołowska, S.; Cembrowska-Lech, D.; Łobodzińska, A. Advancements in Artificial Intelligence Circuits and Systems (AICAS). Electronics 2024, 13, 102. https://doi.org/10.3390/electronics13010102
