Research on Energy-Saving Routing Technology Based on Deep Reinforcement Learning

Abstract

1. Introduction

- (1) Our analysis shows that existing routing algorithms struggle to balance energy saving against network performance in the data plane. To address this, we propose Ee-Routing, an intelligent routing algorithm that jointly optimizes energy saving and network performance.

- (2) We establish traffic-scheduling optimization goals for elephant flows and mice flows, propose a DDPG-based framework that improves both energy saving and network performance, and adapt a CNN structure to improve the algorithm's convergence efficiency.

- (3) Using a Fat-Tree network topology, we verify the convergence, energy-saving, and network performance advantages of Ee-Routing under different traffic intensities.

2. Modeling of Energy-Efficient Routing

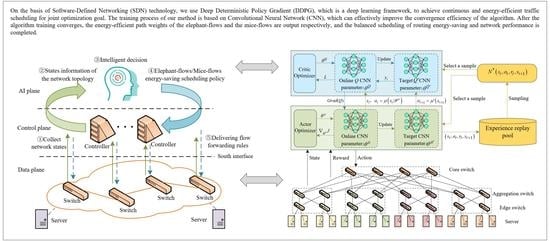

3. Energy-Saving Routing Architecture under SDN

4. Energy-Saving Routing Scheme Based on DDPG

4.1. Ee-Routing Algorithm Framework

- (1) Update the online network: The online network consists of the actor online network and the critic online network. Given the current state $s_t$ and its randomly initialized parameter $\theta^{\mu}$, the actor online network generates the current action $a_t = \mu(s_t \mid \theta^{\mu})$. It then interacts with the environment to obtain the reward $r_t$ and the next state $s_{t+1}$. The pair $(s_t, a_t)$ is input to the critic online network, which iteratively produces the current action-value function $Q(s_t, a_t \mid \theta^{Q})$, where $\theta^{Q}$ is a randomly initialized parameter. The critic online network supplies the gradient information that the actor online network uses to update its parameters. The actor online network gradient is computed as shown in Equation (16).

- (2) Update the target network: To ensure effective and convergent training, the DDPG framework provides an actor target network and a critic target network with the same structures as their online counterparts. The actor target network takes the next state $s_{t+1}$ sampled from the experience replay pool and outputs the next optimal action $a_{t+1} = \mu'(s_{t+1} \mid \theta^{\mu'})$. Its parameter $\theta^{\mu'}$ is obtained by periodically copying the actor online network parameter $\theta^{\mu}$. The action $a_{t+1}$ and the state $s_{t+1}$ are jointly input to the critic target network, which is iteratively trained to obtain the target value function $Q'(s_{t+1}, a_{t+1} \mid \theta^{Q'})$. Its parameter $\theta^{Q'}$ is obtained by periodically copying the critic online network parameter $\theta^{Q}$. The critic target network provides the target return value for the critic online network, as shown in Equation (18).

- (3) Experience replay pool D: Experience replay stores the states, actions, and rewards observed while the agent interacts with the environment. Each valid transition is stored as a sample tuple $(s_t, a_t, r_t, s_{t+1})$, and each sample is assigned a priority. To avoid correlation among the training data, samples are drawn according to their priorities when the policy is updated. The sampled data are then used to compute the error values.
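For reference, the online and target updates described in items (1) and (2) can be written in the standard DDPG form below. This sketch uses the usual textbook notation, which is an assumption here:

- $\mu$ is the actor and $Q$ the critic.
- Primed symbols denote target networks.
- $N$ is the minibatch size sampled from the pool D.
- $\gamma$ is the discount factor and $\tau$ is the target network parameter update rate.

The paper's own Equations (16) and (18) are not reproduced in this text and may differ in detail. For example, sample priorities may weight the critic loss.

```latex
% Actor online network: standard deterministic policy gradient (assumed to correspond to Equation (16))
\nabla_{\theta^{\mu}} J \approx \frac{1}{N} \sum_{i=1}^{N}
  \nabla_{a} Q(s, a \mid \theta^{Q}) \Big|_{s = s_i,\, a = \mu(s_i)}
  \nabla_{\theta^{\mu}} \mu(s \mid \theta^{\mu}) \Big|_{s = s_i}

% Critic target network: target return value for the critic online network (assumed to correspond to Equation (18))
y_i = r_i + \gamma\, Q'\!\left(s_{i+1}, \mu'(s_{i+1} \mid \theta^{\mu'}) \mid \theta^{Q'}\right)

% Critic online network: mean squared error against the target value
L(\theta^{Q}) = \frac{1}{N} \sum_{i=1}^{N} \left(y_i - Q(s_i, a_i \mid \theta^{Q})\right)^2

% Target networks: soft update with rate \tau
\theta^{Q'} \leftarrow \tau\, \theta^{Q} + (1 - \tau)\, \theta^{Q'}, \qquad
\theta^{\mu'} \leftarrow \tau\, \theta^{\mu} + (1 - \tau)\, \theta^{\mu'}
```

The soft update in the last line is the common DDPG variant of the periodic parameter copying described in item (2). With $\tau = 1$ it reduces to a hard copy.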

4.2. Ee-Routing Neural Network Interaction with Environment

- (1) State space

- (2) Action space

- (3) Reward function

4.3. Implementation of Ee-Routing Algorithm

Algorithm 1. Ee-Routing Algorithm Process

5. Experimental Evaluation

5.1. Experimental Environment and Parameter Configuration

5.2. Experimental Comparison

5.2.1. Convergence of the Algorithm

5.2.2. Energy-Saving Comparison

5.2.3. Performance Comparison

6. Conclusions

Author Contributions

Funding

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| QoS | Quality of Service |
| QoE | Quality of Experience |
| SDN | Software-Defined Network |
| DDPG | Deep Deterministic Policy Gradient |
| CNN | Convolutional Neural Network |
| DQN | Deep Q Network |
| DPG | Deterministic Policy Gradient |
| LTR | Link Transmission Rate |
| LUR | Link Utilization Rate |
| LEC | Link Energy Consumption |


| Experimental Parameters | Parameter Value |
|---|---|
| Training steps T | 80,000 |
| Learning rate of actor/critic | 0.002/0.001 |
| Target network parameter update rate τ | 0.001 |
| Size of the experience replay pool D | 4500 |
| Training steps of the experience replay pool M | 150 |
| Discount factor γ | 0.7 |
| Greedy factor ε | 0.01 |
| Momentum m | 0 |
| Training batch size | 128 |
| Reward weight parameter | 0–1 |
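The replay-pool, batch-size, discount and update-rate settings in the table above can be tied to the DDPG bookkeeping with a minimal Python sketch. It rests on several assumptions:

- It uses NumPy.
- Prioritization is proportional: $P(i) = p_i / \sum_k p_k$ with $p_i = |\delta_i| + \epsilon$, where $\delta_i$ is the error computed for sample $i$.
- Target networks use soft updates.
- The names `EeRoutingConfig`, `PrioritizedReplayPool`, `td_target` and `soft_update` are hypothetical and do not come from the authors' implementation.
- The neural networks are omitted: `q_next` stands in for the critic target network's output, and the parameter dictionaries stand in for network weights.

```python
from dataclasses import dataclass

import numpy as np


@dataclass(frozen=True)
class EeRoutingConfig:
    """Values from the parameter table; the field names are hypothetical."""
    pool_size: int = 4500   # size of the experience replay pool D
    batch_size: int = 128   # training batch size
    gamma: float = 0.7      # discount factor
    tau: float = 0.001      # target network parameter update rate


class PrioritizedReplayPool:
    """Ring buffer with proportional prioritized sampling (an assumed scheme)."""

    def __init__(self, capacity: int, eps: float = 1e-6, seed: int = 0):
        self.capacity = capacity
        self.eps = eps                          # keeps every priority strictly positive
        self.samples: list = []                 # (s, a, r, s_next) tuples
        self.priorities = np.zeros(capacity)
        self.pos = 0                            # slot written by the next add()
        self.rng = np.random.default_rng(seed)

    def add(self, transition) -> None:
        # New samples get the current maximum priority so each is replayed at least once.
        max_p = self.priorities[: len(self.samples)].max() if self.samples else 1.0
        if len(self.samples) < self.capacity:
            self.samples.append(transition)
        else:
            self.samples[self.pos] = transition  # overwrite the oldest sample
        self.priorities[self.pos] = max_p
        self.pos = (self.pos + 1) % self.capacity

    def sample(self, batch_size: int):
        # Draw indices with probability P(i) = p_i / sum_k p_k (with replacement).
        n = len(self.samples)
        probs = self.priorities[:n] / self.priorities[:n].sum()
        idx = self.rng.choice(n, size=batch_size, p=probs)
        return idx, [self.samples[i] for i in idx]

    def update_priorities(self, idx, errors) -> None:
        # Priority follows the magnitude of the error computed for each sampled transition.
        self.priorities[idx] = np.abs(errors) + self.eps


def td_target(rewards, q_next, gamma: float) -> np.ndarray:
    """y = r + gamma * Q'(s', mu'(s')); q_next is the critic target network's output."""
    return np.asarray(rewards, dtype=float) + gamma * np.asarray(q_next, dtype=float)


def soft_update(target: dict, online: dict, tau: float) -> dict:
    """theta' <- tau * theta + (1 - tau) * theta' for each parameter array."""
    return {name: tau * online[name] + (1.0 - tau) * target[name] for name in target}


if __name__ == "__main__":
    cfg = EeRoutingConfig()
    pool = PrioritizedReplayPool(cfg.pool_size)
    for _ in range(200):  # dummy transitions with 4-dim states and 2-dim actions
        pool.add((np.zeros(4), np.zeros(2), 0.0, np.zeros(4)))
    idx, batch = pool.sample(cfg.batch_size)
    pool.update_priorities(idx, np.ones(len(idx)))
    print(len(batch))
```

New transitions enter at the current maximum priority, so each is replayed at least once before its error is known. Once the pool reaches its 4500-sample capacity, the ring buffer overwrites the oldest sample. A fuller prioritized replay implementation would also apply importance-sampling weights to correct the bias that prioritized sampling introduces into the critic update.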

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Zheng, X.; Huang, W.; Wang, S.; Zhang, J.; Zhang, H. Research on Energy-Saving Routing Technology Based on Deep Reinforcement Learning. Electronics 2022, 11, 2035. https://doi.org/10.3390/electronics11132035


Zheng, Xiangyu, Wanwei Huang, Sunan Wang, Jianwei Zhang, and Huanlong Zhang. 2022. "Research on Energy-Saving Routing Technology Based on Deep Reinforcement Learning" Electronics 11, no. 13: 2035. https://doi.org/10.3390/electronics11132035