Abstract

Data flow architecture is now widely considered a general solution for accelerating deep neural networks (DNNs) because of its high parallelism. However, conventional DNN accelerators offer only restricted flexibility across diverse network models. To overcome this, a reconfigurable convolutional neural network (RCNN) accelerator, the CNN being one type of DNN, needs to be developed on the field-programmable gate array (FPGA) platform. In this paper, sparse optimization of weight (SOW) and convolutional optimization (CO) are proposed to improve the performance of the RCNN accelerator. The combination of SOW and CO is used to optimize the feature map and weight sizes of the RCNN accelerator; therefore, the hardware resources consumed by the RCNN on the FPGA are minimized. The performance of RCNN-SOW-CO is analyzed by means of feature map size, weight size, sparseness of the input feature map (IFM), weight parameter proportion, block random access memory (BRAM), digital signal processing (DSP) elements, look-up tables (LUTs), slices, delay, power, and accuracy. The existing architectures OIDSCNN, LP-CNN, and DPR-NN are used to justify the efficiency of RCNN-SOW-CO. The LUT count of RCNN-SOW-CO with AlexNet on the Zynq-7020 is 5150, which is less than that of OIDSCNN and DPR-NN.

1. Introduction

Artificial intelligence (AI) is a long-established field of computer science that is studied worldwide; it deals with all aspects of imitating cognitive functions to solve real-world problems and with creating systems that learn and think like humans. Hence, it is referred to as machine intelligence, as opposed to human intelligence. AI draws on both computer science and cognitive science. Practical accomplishments in machine learning have increased interest in the field of AI [1]. Research and application directions of AI technology include learning intelligence, behavior intelligence, thinking intelligence, perception intelligence, and so on [2]. In general, neural networks are used in spacecraft control, vehicle control, pattern recognition, robotics, military equipment, analysis and decision making in Internet of Things systems, drone control, health care, and so on [3,4,5,6]. Specifically, the CNN is one of the modern AI approaches; it generates complex features when a large model is trained with a sufficiently large dataset. Therefore, the features from the CNN provide better performance than typical handcrafted features [7].

Specialized coprocessors, comprising application-specific integrated circuits (ASICs), graphics processing units (GPUs), and FPGAs, exploit natural parallelization and offer higher data throughput. The deep neural network is central to this computing revolution because of its high parallelizability and general computational requirements [8]. A CNN generally involves intensive multiply-and-accumulate operations. These operations are performed sequentially on general-purpose processors, which results in low efficiency. The GPU offers giga- to tera-FLOPS of throughput, but it suffers from a high energy cost. The FPGA generally uses one order of magnitude less power than the GPU and also provides a significant speed improvement over the CPU [9,10,11]. The FPGA is an effective way to implement a neural network because of its effective parallel computing, reconfigurability, and robust flexibility. However, the FPGA also has some limitations, such as implementation complexity, circuit reprogramming, high cost, and a lack of machine learning libraries [12,13,14,15]. The SOW and CO optimizations have each been used individually in some conventional FPGA applications. This research, however, applies the SOW and CO techniques together to attain improved performance, as explained in the following sections.

The contributions of this paper are as follows:

- This research exploits SOW and CO to decrease the memory footprint by separating the feature map into smaller pieces and storing them in the FPGA’s high-throughput on-chip memory. This reduces the weight sizes of the RCNN accelerator and achieves even higher performance.

- The RCNN accelerator is developed on the FPGA along with SOW and CO to minimize the hardware resources.

- The data reuse approach used in SOW helps to minimize the power consumption. Moreover, a considerable amount of calculation is saved by using sparse matrix operations.

- The execution speed of the RCNN accelerator is improved by the loop unrolling approach, which also minimizes the computing resources.

The remainder of the paper is arranged as follows: related work on existing CNN architectures is described in Section 2. The problems identified in the related work are specified, along with the solutions given by the proposed research, in Section 3. Section 4 provides a detailed explanation of the RCNN-SOW-CO architecture. The performance and comparative analysis of RCNN-SOW-CO are given in Section 5. Lastly, the conclusion is given in Section 6.

2. Related Work

The related work on existing CNN architectures built on the FPGA is summarized as follows:

Pang et al. [16] presented an end-to-end FPGA-based accelerator for the efficient operation of fine-grained pruned CNNs. Fine-grained pruning created load imbalances. The accelerator’s load imbalances and internal buffer misalignments were resolved by developing a group pruning algorithm with group sparse regularization (GSR). The design of sparse processing elements and the scheduling of on-chip and off-chip buffers were used to optimize the accelerator. However, the loss of accuracy became uncontrolled as the number of pruned weights increased.

Mo et al. [17] developed a deep neural network on a small-scale FPGA to perform odor identification. The odor identification with depthwise separable CNN (OIDSCNN) was developed to minimize the parameters and accelerate the hardware design. A quantization method, namely the saturation-flooring KL divergence approach, was used to design the OIDSCNN on a Zynq-7020 SoC chip. The optimization of the parameters was used to achieve higher speed. However, the OIDSCNN required a high amount of DSP resources.

Li et al. [18] implemented the AlphaGo policy network and developed effective hardware architectures for accelerating deep CNNs (DCNNs). The policy network was implemented to sample actions in the game of Go. The policy network was adjusted toward the goal of winning games by combining reinforcement learning with supervised learning. The designed accelerator fit in various FPGAs and provided a balance between hardware resources and processing speed. However, because it fully utilized the on-chip resources and the parallelism of the FPGA, the developed DCNN consumed a high amount of hardware resources.

Vestias [19] designed a hardware-oriented pruning of CNNs on the FPGA. In general, pruning is a model optimization approach that removes links among the layers to reduce the number of weights and operations. The sparsity that pruning introduces in the weights reduces the computational efficiency of conventional pipelined architectures. Block pruning was therefore applied to the pipelined data path to eliminate the sparsity issue created by pruning. However, the accuracy of the CNN was high only in low-density FPGAs.

Abd El-Maksoud et al. [20] developed the low-power dedicated CNN (LP-CNN) hardware accelerator based on the GoogLeNet CNN. The size of the memory was minimized by applying weight pruning and quantization. The CNN was processed layer by layer because the accelerator was based on a time-sharing/pipelined structure. The developed CNN accelerator used only on-chip memory to store the weights and activations. Moreover, shifting operations were used instead of multiplications. The accuracy of the LP-CNN was affected by the huge number of sparse weights.

Zhao [21] presented a light music online system based on an FPGA and a CNN. The main tasks performed by the neural network architecture are activation, normalization, pooling, and convolution. However, a huge amount of hardware resources was required to design the CNN-based light music online system.

You and Wu [22] developed a software/hardware co-optimized reconfigurable sparse CNN (RSNN) over the FPGA. The sparse convolution data flow was developed with simple control logic, which used the element-vector multiplication. Here, the software-based load-balance-aware pruning approach was developed for balancing the computation load over dissimilar processing units. The DSP utilization of RSNN was increased because of the simultaneous execution of two 16-bit fixed-point MACs.

Irmak et al. [23] presented an architecture of a high-performance and flexible neural network (NN) accelerator on the FPGA. In this work, dynamic partial reconfiguration (DPR) was developed for realizing various NN accelerators by updating only a portion of the FPGA design. Different processing elements (PEs) with identical interfaces were designed for computing the layers of various NNs. However, the developed NN failed to perform the reconfiguration in the FPGA, which resulted in high power consumption.

3. Problem Statement

The problems found from related work are provided along with the solutions given by the proposed research.

The accuracy of the overall system is degraded because of the restricted pruning space [16]. An effective system should use few hardware resources, so that DSP consumption is minimized and speed is improved; however, OIDSCNN requires a high amount of DSP resources [17]. Moreover, an NN that is not reconfigured on the FPGA causes high power consumption [23].

Solution: In this research, the reconfigurable convolutional neural network is developed over the FPGA. The design of the RCNN accelerator with the SOW and CO is used to minimize the feature map and weight sizes. Moreover, the RCNN-SOW-CO minimizes the number of calculations according to the optimization approach. This helps to minimize the amount of hardware resources and, accordingly, it increases the speed.

4. Overview of Architecture

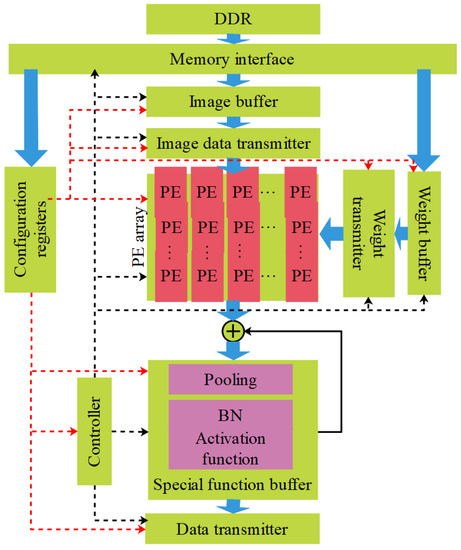

Figure 1 shows the data-flow-based reconfigurable architecture. Unlike existing structures, a configuration register is introduced, through which all of the accelerator’s hardware modules are configured. The architecture is reconfigured through the configuration register according to the configuration instructions saved in double data rate (DDR) memory. Next, the weight and image parameters are transmitted to the weight buffer and image buffer. The PE array streams the calculated outcomes into the special function buffer, which comprises static random-access memory (SRAM) banks connected in parallel. An SRAM cell contains four NMOS transistors and two poly-load resistors. Two of the NMOS transistors are referred to as pass-transistors; their gates are tied to the word line, and they connect the cell to the columns. The other two NMOS transistors are the pull-downs of the flip-flop inverters. Because of this arrangement, integrated SRAM provides fast access to data and can be used for a computer’s cache memory. Moreover, the special function buffer implements the special functional layers, such as pooling, batch normalization (BN), and activation. These special functional layers minimize data access between the on-chip buffer and DDR. The suggested architecture transfers well to diverse RCNN models because of its reconfigurable PE array, which can be adapted to numerous filter sizes. Meanwhile, a reconfigurable on-chip buffer scheme is developed for the proposed model, which depends on the restriction ratio property of the different layers. Furthermore, the RCNN accelerator improves its flexibility by exploiting the sparsity of the input feature map.

Figure 1. Data-flow-based reconfigurable architecture.

4.1. Architecture of Reconfigurable PE Used for Convolution

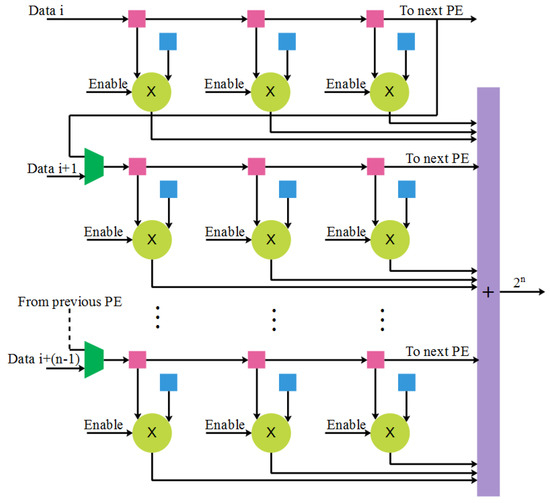

A spatial 2D PE array is implemented to balance flexibility, throughput, and complexity; this array is an essential component of the accelerator. The spatial 2D structure works well with complex network structures that have various kernel sizes. Figure 2 displays the architecture of the reconfigurable PE.

Figure 2. Architecture of reconfigurable PE.

The conventional PE design has one multiplier, whereas the reconfigurable PE has eight multipliers for realizing the 1 × 1 or 3 × 3 convolution operations, which are the most common operations in conventional DNNs. Eight image registers and eight weight registers are connected to the multipliers. The image registers act as shift registers and pass the image data between PEs. The working status of the PE is decided by the enable signals connected to the multipliers, which helps to minimize the energy consumption. The outputs of the inactive multipliers are fixed at zero. The accelerator handles larger filter sizes, such as 5 × 5 or 7 × 7, by means of the designed PE array. Moreover, the 11 × 11 operation is performed by using 16 PEs. The specific configurations for 5 × 5 and 7 × 7 are shown in Figure 3 and Figure 4, respectively. The usage of multipliers for various kernel sizes is given in Table 1.
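The enable-signal behaviour described above can be illustrated with a small software model. This is only a sketch under stated assumptions: the function name `pe_multiply_accumulate` and the mapping of a 1 × 1 convolution onto a single enabled multiplier are hypothetical and are not taken from the actual hardware design.

```python
from typing import Sequence

NUM_MULTIPLIERS = 8  # multipliers per reconfigurable PE, as stated in the text


def pe_multiply_accumulate(pixels: Sequence[int],
                           weights: Sequence[int],
                           enable: Sequence[bool]) -> int:
    """Model one PE step: an enabled multiplier contributes pixel * weight,
    a disabled multiplier contributes a fixed zero."""
    if not (len(pixels) == len(weights) == len(enable) == NUM_MULTIPLIERS):
        raise ValueError("expected one pixel, weight and enable bit per multiplier")
    products = [p * w if en else 0 for p, w, en in zip(pixels, weights, enable)]
    return sum(products)


pixels = [5, 7, 1, 3, 2, 8, 4, 6]
weights = [2, 9, 9, 9, 9, 9, 9, 9]

# Assumed mapping: a 1 x 1 convolution enables only the first multiplier.
one_by_one = pe_multiply_accumulate(pixels, weights, [True] + [False] * 7)

# All eight multipliers enabled.
all_enabled = pe_multiply_accumulate(pixels, weights, [True] * 8)
```

In this model the disabled multipliers never affect the sum, which mirrors the statement that inactive multiplier outputs are fixed at zero.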

Figure 3. Convolutional operation of 5 × 5.

Figure 4. Convolutional operation of 7 × 7.

Table 1. Peak multiplier utilization for various kernel sizes.

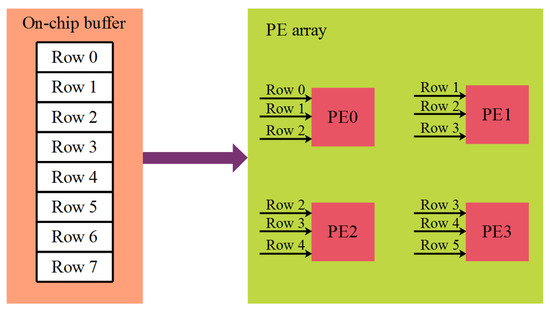

The rows of the image are reused to reduce the on-chip data movement, which lowers the power consumption. The reuse approach for a filter size of 3 × 3 and a stride of 1 is shown in Figure 5. This reuse approach also decreases the usage of SRAM banks.

Figure 5. Row data reuse strategy.
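As a rough software analogy of the row reuse idea for a 3 × 3 filter with a stride of 1, the sketch below keeps the last three image rows in a small buffer, so that each row is fetched once rather than once per output row. The buffer structure and the name `conv_rows_with_reuse` are illustrative assumptions, not the accelerator's actual register organization.

```python
from collections import deque
from typing import List, Tuple


def conv_rows_with_reuse(image: List[List[int]],
                         kernel: List[List[int]]) -> Tuple[List[List[int]], int]:
    """k x k, stride-1 convolution that holds the last k image rows in a
    buffer; returns the output and the number of rows fetched."""
    k = len(kernel)
    width = len(image[0])
    row_buffer = deque(maxlen=k)   # stands in for the on-chip row registers
    rows_fetched = 0
    output = []
    for row in image:
        row_buffer.append(row)     # one new row per step; older rows are reused
        rows_fetched += 1
        if len(row_buffer) < k:
            continue               # not enough rows buffered yet
        out_row = []
        for x in range(width - k + 1):
            acc = 0
            for i in range(k):
                for j in range(k):
                    acc += row_buffer[i][x + j] * kernel[i][j]
            out_row.append(acc)
        output.append(out_row)
    return output, rows_fetched


image = [[r * 5 + c for c in range(5)] for r in range(5)]
kernel = [[1, 0, -1], [1, 0, -1], [1, 0, -1]]
output, rows_fetched = conv_rows_with_reuse(image, kernel)

# Without reuse, every output row would fetch its three input rows again.
rows_without_reuse = 3 * (len(image) - 3 + 1)
```

For this 5 × 5 example, five row fetches replace the nine that a version without reuse would need.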

In this RCNN accelerator, the fully connected (FC) layers are treated as special convolutional layers by using a padding of 0, a 1 × 1 filter, a 1 × 1 IFM, and a stride of 1. The FC layer has a huge number of weight parameters; the technique used to calculate the weight parameter is explained in the following section.
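The equivalence between an FC layer and a convolution with a 1 × 1 filter on a 1 × 1 IFM can be checked numerically. The following is a minimal sketch; the data layout (`ifm[n][0][0]`, `kernels[m][n][0][0]`) and the function names are chosen here for illustration only.

```python
from typing import List


def fully_connected(x: List[float], w: List[List[float]]) -> List[float]:
    """Reference FC layer: y[m] = sum over n of w[m][n] * x[n]."""
    return [sum(w_m[n] * x[n] for n in range(len(x))) for w_m in w]


def conv_1x1_on_1x1_ifm(ifm, kernels):
    """Convolution with 1 x 1 filters, stride 1 and padding 0 on a 1 x 1 IFM.

    ifm[n][0][0] is the single pixel of input channel n;
    kernels[m][n][0][0] is the 1 x 1 filter from channel n to output map m.
    """
    out = []
    for kernel_m in kernels:
        acc = 0.0
        for n, kernel_mn in enumerate(kernel_m):
            acc += kernel_mn[0][0] * ifm[n][0][0]
        out.append([[acc]])        # each output feature map is also 1 x 1
    return out


x = [1.0, 2.0, 3.0]
w = [[0.5, -1.0, 2.0], [1.0, 1.0, 1.0]]

# Reshape the FC inputs and weights into the convolutional layout.
ifm = [[[value]] for value in x]
kernels = [[[[w_mn]] for w_mn in w_m] for w_m in w]

fc_out = fully_connected(x, w)
conv_out = [fmap[0][0] for fmap in conv_1x1_on_1x1_ifm(ifm, kernels)]
```

Each input neuron becomes one input channel and each output neuron becomes one output feature map, so the same PE array can serve both layer types.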

4.2. Sparseness Optimization for Weight

On-chip memory resources are limited in embedded designs. A large amount of energy is spent on data access between the on-chip and external memory. Therefore, data reuse approaches are used in the RCNN accelerator to minimize the power. Two ideal reuse approaches can be used in a given layer: saving all the IFM data on chip, or storing all the weight parameters on chip. The amounts of weight parameters and image information differ greatly between convolutional layers. The approach used to calculate the weight parameter is described as follows.

A sparse weight RCNN accelerator that is suitable for hardware implementation is introduced. The sparse weight takes one of two non-zero values or zero. The sparse weight RCNN accelerator maintains hidden weights while training on the GPU. The sparse weight is derived from the hidden weight, as expressed in Equation (1), where a threshold differentiates a zero weight from a non-zero one.
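The thresholding step can be sketched as follows. Since Equation (1) and its symbols are not reproduced in this text, the sketch assumes that the two non-zero values are +1 and −1 and that a hidden weight whose magnitude does not exceed the threshold becomes zero; the names `sparsify_weights` and `threshold` are hypothetical.

```python
from typing import List


def sparsify_weights(hidden: List[float], threshold: float) -> List[int]:
    """Map hidden weights to {-1, 0, +1} (assumed values): magnitudes at or
    below the threshold become 0, the rest keep only their sign."""
    sparse = []
    for w in hidden:
        if abs(w) <= threshold:
            sparse.append(0)       # zero weight: treated as a disconnection
        elif w > 0:
            sparse.append(1)
        else:
            sparse.append(-1)
    return sparse


hidden_weights = [0.8, -0.05, 0.02, -0.6, 0.3, -0.1]
sparse_weights = sparsify_weights(hidden_weights, threshold=0.1)
zero_fraction = sparse_weights.count(0) / len(sparse_weights)
```

Raising the threshold in this sketch produces more zero weights, which is the quantity the later zero-skip calculation benefits from.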

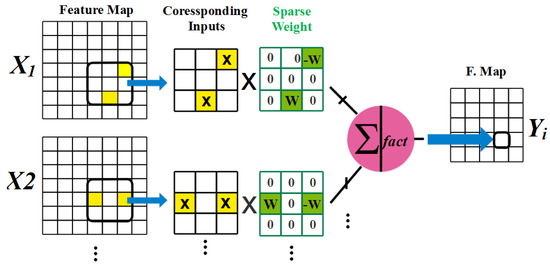

An example of the sparse convolution operation is shown in Figure 6. In the baseline CNN, the weight always takes one of the two non-zero values; therefore, there is no possibility of a disconnection between neurons. In the sparse weight RCNN accelerator, a weight of 0 defines a disconnection. The matrix representation of the sparse weight RCNN is sparse; hence, sparse matrix operations are applied to reduce the number of calculations.

Figure 6. Example of sparse convolution operation.

The sparse weight convolution is realized using a zero-weight skip calculation. Since only the addresses of the non-zero weights are stored, a pre-trained RCNN accelerator that includes zero weights performs the sparse weight convolution as a typical CNN convolution with the zero weights skipped. The number of memory words needed by this convolution scales with the number of non-zero weights. Because zero weights need no memory access, the RCNN with sparse weights requires fewer memory accesses and is faster than the 2D calculation.

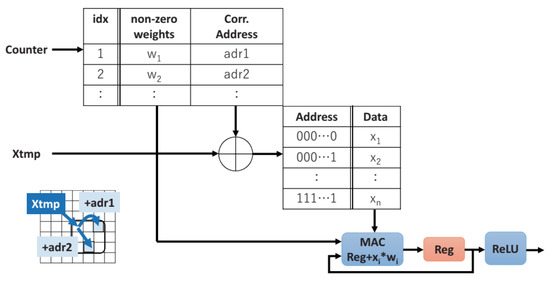

Indirect memory access is used to perform the zero-skip calculation; the indirect memory access for sparse convolution is shown in Figure 7. First, the non-zero weight and its address are read simultaneously. Next, the address of the corresponding input is identified. The corresponding input is then read, and the multiply–accumulate (MAC) operation is performed. These operations are repeated for all the non-zero weights in the kernel, after which the activation function, i.e., ReLU, is applied.

Figure 7. Indirect memory access operation.
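The steps of the zero-skip calculation can be sketched in software as follows. This is an illustrative model only: storing each non-zero weight with its (row, column) offset, and the names `compress_kernel` and `zero_skip_conv_at`, are assumptions made for the example rather than the accelerator's actual memory format.

```python
from typing import List, Tuple


def compress_kernel(kernel: List[List[int]]) -> List[Tuple[int, int, int]]:
    """Keep only the non-zero weights, each with its (row, col) address."""
    return [(w, r, c)
            for r, row in enumerate(kernel)
            for c, w in enumerate(row) if w != 0]


def zero_skip_conv_at(ifm: List[List[int]],
                      nonzero: List[Tuple[int, int, int]],
                      y: int, x: int) -> Tuple[int, int]:
    """Compute one output pixel; returns (activated value, MAC count)."""
    acc = 0
    macs = 0
    for weight, dr, dc in nonzero:     # read a non-zero weight and its address
        pixel = ifm[y + dr][x + dc]    # derive the input address and read it
        acc += weight * pixel          # multiply-accumulate
        macs += 1
    return max(acc, 0), macs           # ReLU after all non-zero weights


kernel = [[1, 0, 0], [0, -1, 0], [0, 0, 1]]
ifm = [[1, 2, 3, 4], [5, 6, 7, 8], [9, 10, 11, 12], [13, 14, 15, 16]]

nonzero = compress_kernel(kernel)
value, macs = zero_skip_conv_at(ifm, nonzero, 0, 0)
```

For this kernel, only three of the nine positions trigger a memory read and a MAC, which illustrates why fewer memory accesses are needed as the number of zero weights grows.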

4.3. Convolutional Optimization

Convolutional optimization of the convolution (CONV) layer is used to enhance the performance density. Based on the Roofline model, the performance density of the FPGA accelerator under given hardware resource conditions is formulated as shown in Equation (2):

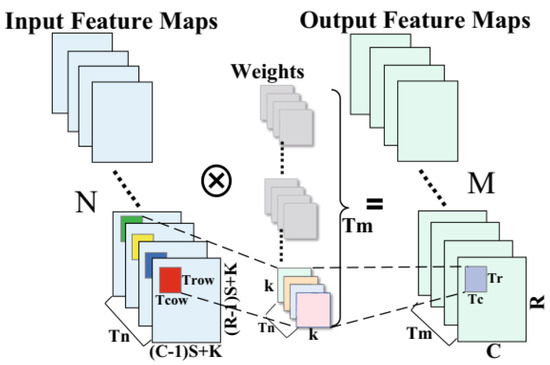

In Equation (2), the layer is described by the numbers of input and output feature maps and by the numbers of rows and columns of each feature map, together with the kernel size; the input, output, row, and column dimensions each have a tile size; and the convolutional and pooling layers each have a time complexity. The time complexity of a given layer in the RCNN accelerator is estimated by its number of multiply–add operations. The numbers of input channels and convolution kernels in the CONV layer are also parameters of the model.

Convolution operations account for more than 90% of the operations in the RCNN accelerator; therefore, the time complexity of the convolutional layers is higher than the sum of the remaining time complexities. Moreover, some of the tile sizes are fixed, whereas two of them are variable. Equation (2) shows that the performance bottleneck is determined only by two of the tile sizes, which are tightly limited by the on-chip DSP resources. The methods used in this convolutional optimization are described below.
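To make the dependence on the tile sizes concrete, the sketch below uses a commonly used cycle model for tiled convolution. It is not the paper's Equation (2), which is not reproduced in this text; the symbol names `M`, `N`, `R`, `C`, `K`, `Tm`, and `Tn` (output maps, input maps, output rows, output columns, kernel size, and the output/input tile sizes) are assumptions borrowed from that common model.

```python
from math import ceil


def conv_operations(M, N, R, C, K):
    """Total operations of one CONV layer (a multiply-add counts as two)."""
    return 2 * M * N * R * C * K * K


def conv_cycles(M, N, R, C, K, Tm, Tn):
    """Cycles when Tm output maps and Tn input maps are processed in parallel."""
    return ceil(M / Tm) * ceil(N / Tn) * R * C * K * K


def ops_per_cycle(M, N, R, C, K, Tm, Tn):
    """Attainable compute density for the chosen tile sizes."""
    return conv_operations(M, N, R, C, K) / conv_cycles(M, N, R, C, K, Tm, Tn)


layer = dict(M=64, N=32, R=14, C=14, K=3)

# Tile sizes that divide M and N evenly reach the peak of 2 * Tm * Tn.
even_tiles = ops_per_cycle(**layer, Tm=16, Tn=8)

# Tm = 24 does not divide M = 64, so part of the last tile is idle.
ragged_tiles = ops_per_cycle(**layer, Tm=24, Tn=8)
ragged_peak = 2 * 24 * 8
```

Under this model, `Tm * Tn` multipliers are needed each cycle, which is why the two tile sizes are bounded by the available DSP resources.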

4.3.1. Loop Unrolling

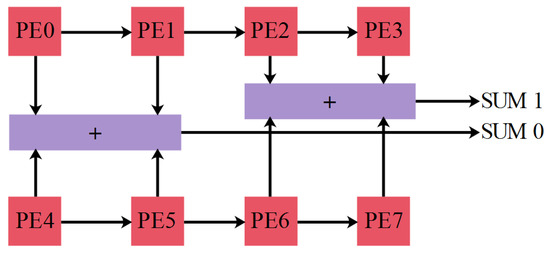

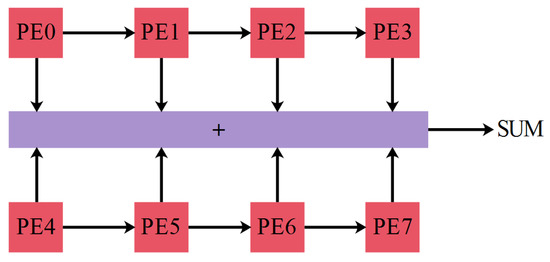

The loop unrolling approach exploits the parallelism among CONV kernels to execute many CONV operations in parallel. In the CONV layer, parallel pipelined multiplication and addition are accomplished by partially unrolling two of the loop dimensions. Each layer consists of a number of IFMs. The pixel blocks at the same location and the related weights are acquired from independent IFMs. Hence, the IFMs are read and computed over multiple steps. The loop unrolling factors are chosen to mitigate resource wastage. Parallel multiplication units multiply the input pixel blocks by the corresponding weights, and addition trees write the summed products to the output buffer. The local parallel structure realized by the loop unrolling is shown in Figure 8. This loop unrolling maximizes the speed and, at the same time, increases the performance obtained from each computing resource.

Figure 8. Calculation of parallel block.
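The structure of the unrolled loops can be sketched in software. In the sketch below, the two innermost loops stand for the part that hardware would execute in parallel (multipliers feeding adder trees), and a counter records how many sequential steps remain. The unrolling factors `Tm` and `Tn` and all function names are assumptions for illustration; Python itself still executes the inner loops sequentially.

```python
def conv_reference(ifm, weights):
    """Plain convolution: ifm[n][r][c], weights[m][n][i][j], stride 1, no padding."""
    N, M, K = len(ifm), len(weights), len(weights[0][0])
    R, C = len(ifm[0]) - K + 1, len(ifm[0][0]) - K + 1
    return [[[sum(weights[m][n][i][j] * ifm[n][r + i][c + j]
                  for n in range(N) for i in range(K) for j in range(K))
              for c in range(C)] for r in range(R)] for m in range(M)]


def conv_unrolled(ifm, weights, Tm, Tn):
    """Same result, organised as Tm x Tn parallel blocks; also returns the
    number of sequential steps an unrolled datapath would take."""
    N, M, K = len(ifm), len(weights), len(weights[0][0])
    R, C = len(ifm[0]) - K + 1, len(ifm[0][0]) - K + 1
    ofm = [[[0] * C for _ in range(R)] for _ in range(M)]
    steps = 0
    for m0 in range(0, M, Tm):
        for n0 in range(0, N, Tn):
            for r in range(R):
                for c in range(C):
                    for i in range(K):
                        for j in range(K):
                            steps += 1
                            # Unrolled in hardware: up to Tm * Tn multipliers
                            # feed Tm adder trees with Tn inputs each.
                            for m in range(m0, min(m0 + Tm, M)):
                                products = [weights[m][n][i][j] * ifm[n][r + i][c + j]
                                            for n in range(n0, min(n0 + Tn, N))]
                                ofm[m][r][c] += sum(products)
    return ofm, steps


ifm = [[[n + r * 3 + c for c in range(3)] for r in range(3)] for n in range(3)]
weights = [[[[(m + n + i + j) % 3 - 1 for j in range(2)] for i in range(2)]
            for n in range(3)] for m in range(2)]

unrolled, steps = conv_unrolled(ifm, weights, Tm=2, Tn=2)
_, steps_serial = conv_unrolled(ifm, weights, Tm=1, Tn=1)
```

With three input maps and `Tn = 2`, the last input tile is only half used, which is the kind of resource wastage that a suitable choice of unrolling factors is meant to limit.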

4.3.2. Loop Tiling

Loop tiling exploits the data locality of the convolution. The entire data are divided into multiple smaller blocks, which are held in the on-chip buffers. The resulting blocks are shown in Figure 8. In this tiling process, the pixel blocks and the respective weights of the IFMs are fetched from the DRAM. Next, the weight parameters and pixel blocks of the feature map are reused on chip. Keeping the intermediate results in the on-chip cache minimizes external memory access, reduces latency, and improves performance. Once the final output pixel blocks are obtained, the pixel blocks of the output feature maps are written out.
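A single-channel software sketch of loop tiling is given below. Each tile copies one input block (including the extra border the kernel needs) into a local buffer that stands in for the on-chip buffer, computes the block, and writes it out. The tile sizes `Tr` and `Tc` and the function name `conv_tiled` are hypothetical.

```python
def conv_tiled(image, kernel, Tr, Tc):
    """Stride-1, no-padding convolution computed block by block."""
    K = len(kernel)
    R = len(image) - K + 1
    C = len(image[0]) - K + 1
    out = [[0] * C for _ in range(R)]
    for r0 in range(0, R, Tr):
        for c0 in range(0, C, Tc):
            tr = min(Tr, R - r0)   # the last tile in a row/column may be smaller
            tc = min(Tc, C - c0)
            # Load one input block, with a border of K - 1, into the local buffer.
            buf = [row[c0:c0 + tc + K - 1] for row in image[r0:r0 + tr + K - 1]]
            for r in range(tr):
                for c in range(tc):
                    acc = 0
                    for i in range(K):
                        for j in range(K):
                            acc += buf[r + i][c + j] * kernel[i][j]
                    out[r0 + r][c0 + c] = acc   # write back the finished pixel
    return out


image = [[r * 6 + c for c in range(6)] for r in range(6)]
kernel = [[1, 0, 0], [0, 1, 0], [0, 0, 1]]

tiled = conv_tiled(image, kernel, 2, 2)          # four 2 x 2 output blocks per side pair
ragged = conv_tiled(image, kernel, 3, 3)         # tiles that do not divide the output
single_block = conv_tiled(image, kernel, 4, 4)   # whole output as one block
```

All three tilings give the same result; only the size of the block that must be resident in the buffer at any time changes.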

5. Results and Discussion

This section provides the performance and comparative analysis of the RCNN-SOW-CO architecture. The design of RCNN-SOW-CO is evaluated with three well-known CNNs, namely AlexNet, VGG16, and VGG19. The design is implemented and evaluated on the Xilinx Zynq 7020 FPGA device. Fixed-point data are used, with 16 bits for the input and output feature maps, 8 bits for the CONV layer weights, 4 bits for the fully connected (FC) layer weights, and 32 bits for the partial sums. Vivado HLS 2019.1 is used to synthesize the accelerator, written in C++, into a register transfer level (RTL) design. Subsequently, Vivado 2019.1 is used to compile the RTL code into a bitstream. Moreover, the MNIST dataset [24] is used for testing the proposed RCNN-SOW-CO architecture.
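The effect of these bit widths can be illustrated with a simple fixed-point model. The bit widths below come from the text, but the split between integer and fractional bits is not stated, so the fractional-bit counts and the helper names `to_fixed` and `to_float` are assumptions for illustration.

```python
def to_fixed(value: float, total_bits: int, frac_bits: int) -> int:
    """Round to a signed fixed-point integer and saturate to total_bits."""
    scaled = round(value * (1 << frac_bits))
    lowest = -(1 << (total_bits - 1))
    highest = (1 << (total_bits - 1)) - 1
    return max(lowest, min(highest, scaled))


def to_float(fixed: int, frac_bits: int) -> float:
    """Convert a fixed-point integer back to a real value."""
    return fixed / (1 << frac_bits)


# Bit widths from the text.
FEATURE_BITS, CONV_WEIGHT_BITS, FC_WEIGHT_BITS, PARTIAL_SUM_BITS = 16, 8, 4, 32
# Assumed fractional bits (not given in the text).
FEATURE_FRAC, CONV_FRAC, FC_FRAC = 8, 6, 2

pixel = to_fixed(1.5, FEATURE_BITS, FEATURE_FRAC)
weight = to_fixed(0.3, CONV_WEIGHT_BITS, CONV_FRAC)

# A 16-bit by 8-bit product needs at most 24 bits, so it fits in the
# 32-bit partial sum with headroom for accumulation.
product = pixel * weight
approx = to_float(product, FEATURE_FRAC + CONV_FRAC)

# A 4-bit FC weight saturates quickly under this assumed format.
saturated = to_fixed(5.0, FC_WEIGHT_BITS, FC_FRAC)
```

Here `approx` is close to, but not exactly, the real product 0.45, which shows the quantization error that narrower weights trade for lower memory use.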

5.1. Performance Analysis of RCNN-SOW-CO Architecture

The performance of the RCNN-SOW-CO architecture is analyzed by means of feature map size, weight size, sparseness of the IFM, weight parameter proportion, and FPGA performance. Here, the CNN accelerator is analyzed with and without SOW-CO.

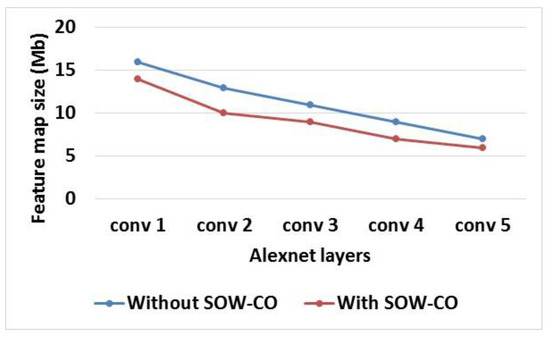

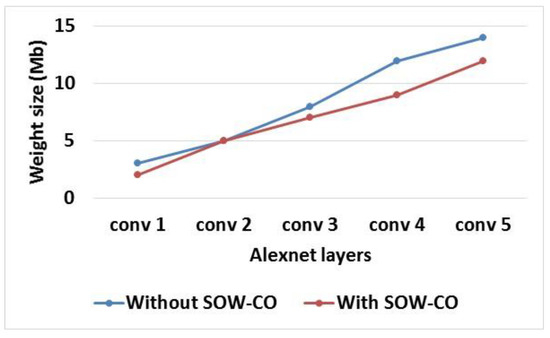

The feature map size and weight size for RCNN-AlexNet with and without SOW-CO are shown in Table 2. Figure 9 and Figure 10 show the graphical illustrations of the feature map size and weight size, respectively. The table and figures show that the feature map and weight sizes of RCNN-AlexNet with SOW-CO are smaller than those of the RCNN without SOW-CO. The sizes of the feature map and weight are reduced in the RCNN with SOW-CO because of the parameter reduction achieved by using the sparse matrix.

Table 2. Analysis of feature map and weight sizes for AlexNet.

Figure 9. Graphical illustration of feature map size for AlexNet.

Figure 10. Graphical illustration of weight size for AlexNet.

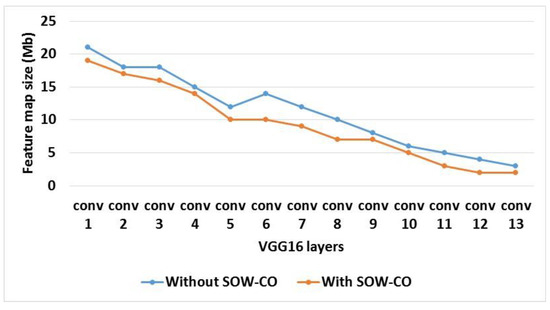

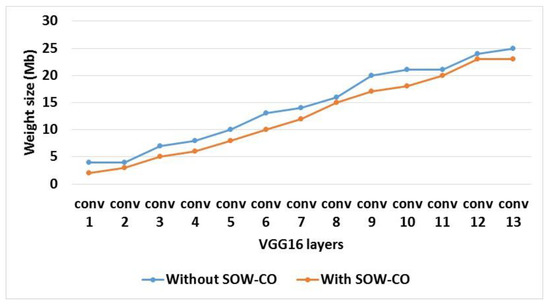

Table 3 shows the analysis of feature map and weight sizes for RCNN-VGG16 with and without SOW-CO. Additionally, the comparisons of the feature map size and weight size are shown in Figure 11 and Figure 12, respectively. This analysis shows that the feature map and weight sizes of the RCNN with SOW-CO are smaller than those of the RCNN without SOW-CO. The architecture without SOW-CO has larger feature map and weight sizes because its convolution architecture is not optimized and its weight values are not optimized during the computation process.

Table 3. Analysis of feature map and weight sizes for VGG16.

Figure 11. Graphical illustration of feature map size for VGG16.

Figure 12. Graphical illustration of weight size for VGG16.

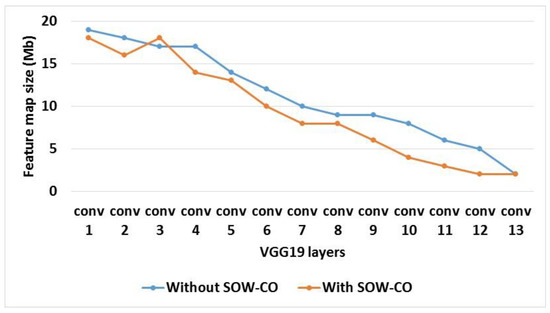

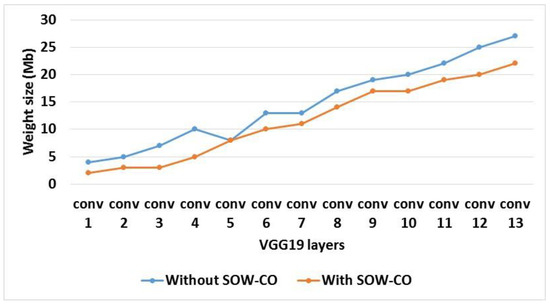

The feature map and weight sizes for RCNN-VGG19 with and without SOW-CO are shown in Table 4. Figure 13 and Figure 14 show the graphical illustrations of the feature map and weight sizes, respectively. The analysis shows that the feature map and weight sizes of RCNN-VGG19 with SOW-CO are smaller than those of the RCNN without SOW-CO. The use of the sparse matrix for the weights, together with the convolutional optimization, reduces the sizes of the feature map and weight.

Table 4. Analysis of feature map and weight sizes for VGG19.

Figure 13. Graphical illustration of feature map size for VGG19.

Figure 14. Graphical illustration of weight size for VGG19.

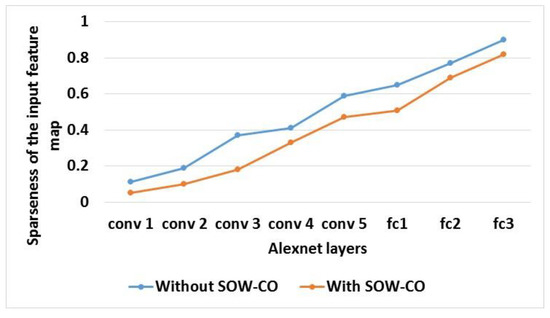

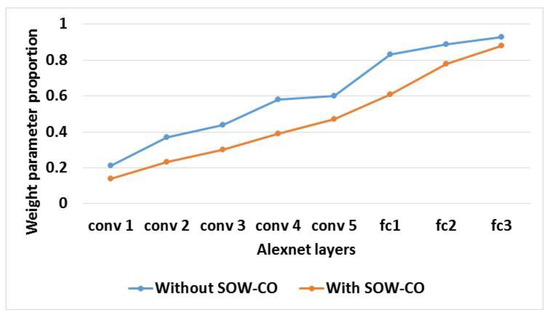

The sparseness of the IFM and the weight parameter proportion for RCNN-AlexNet with and without SOW-CO are shown in Table 5. The graphical illustrations of the IFM’s sparseness and weight parameter proportion for RCNN-AlexNet are shown in Figure 15 and Figure 16, respectively. This analysis shows that the IFM’s sparseness and weight parameter proportion of RCNN-AlexNet with SOW-CO are lower than those of RCNN-AlexNet without SOW-CO. The sparsity-based optimization of the weights is what optimizes the IFM’s sparseness and the weight parameter proportion.

Table 5. Analysis of sparseness of the IFM and weight parameter proportion for AlexNet.

Figure 15. Graphical illustration of IFM’s sparseness for AlexNet.

Figure 16. Graphical illustration of weight parameter proportion for AlexNet.

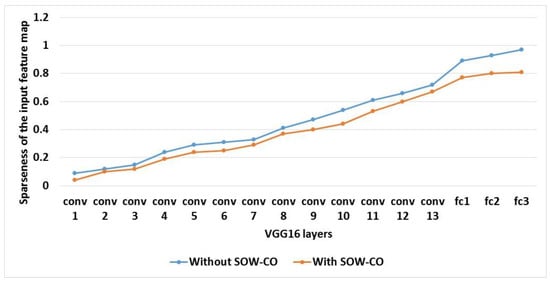

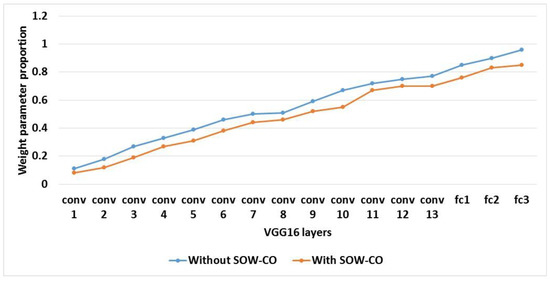

Table 6 shows the analysis of the sparseness of the IFM and the weight parameter proportion for RCNN-VGG16 with and without SOW-CO. Additionally, the comparisons of the IFM’s sparseness and weight parameter proportion are shown in Figure 17 and Figure 18, respectively. This analysis shows that the IFM’s sparseness and weight parameter proportion of the RCNN with SOW-CO are lower than those of the RCNN without SOW-CO. The IFM’s sparseness and weight parameter proportion are higher for the CNN without any convolutional and weight optimization.

Table 6. Analysis of sparseness of the IFM and weight parameter proportion for VGG16.

Figure 17. Graphical illustration of IFM’s sparseness for VGG16.

Figure 18. Graphical illustration of weight parameter proportion for VGG16.

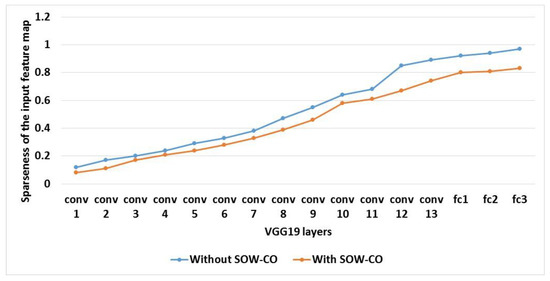

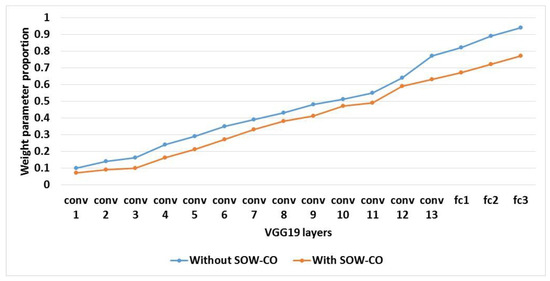

The sparseness of the IFM and the weight parameter proportion for RCNN-VGG19 with and without SOW-CO are shown in Table 7. The graphical illustrations of the IFM’s sparseness and weight parameter proportion for RCNN-VGG19 are shown in Figure 19 and Figure 20, respectively. This analysis shows that the IFM’s sparseness and weight parameter proportion of RCNN-VGG19 with SOW-CO are lower than those of RCNN-VGG19 without SOW-CO.

Table 7. Analysis of sparseness of the IFM and weight parameter proportion for VGG19.

Figure 19. Graphical illustration of IFM’s sparseness for VGG19.

Figure 20. Graphical illustration of weight parameter proportion for VGG19.

The FPGA performance of the RCNN is analyzed in terms of BRAM, DSP, LUT, slices, delay, power, and accuracy, with and without the SOW-CO architecture. Table 8, Table 9 and Table 10 show the analysis of FPGA performance for AlexNet, VGG16, and VGG19, respectively. The analysis shows that the RCNN with SOW-CO performs better than the RCNN without SOW-CO in terms of accuracy and execution time. The data reuse in the RCNN with SOW-CO minimizes the power consumption. Moreover, the loop unrolling used in the convolutional optimization helps to minimize the delay of the RCNN with SOW-CO.

Table 8. Analysis of FPGA performances for AlexNet.

Table 9. Analysis of FPGA performances for VGG16.

Table 10. Analysis of FPGA performances for VGG19.

5.2. Comparative Analysis

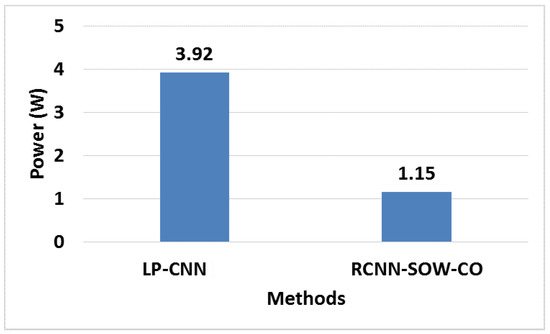

The efficiency of the RCNN-SOW-CO architecture is evaluated by comparing it with recent CNN architectures. Existing architectures used to evaluate the RCNN-SOW-CO are OIDSCNN [17] and DPR-NN [23]. Here, the comparison is made on different FPGA devices, namely the Zynq-7020 and Virtex-7. The comparative analyses of the RCNN-SOW-CO architecture on the Zynq-7020 and Virtex-7 are shown in Table 11 and Table 12, respectively. Figure 21 shows the comparison of BRAM for the Zynq-7020. The proposed RCNN-SOW-CO is analyzed with three network models: AlexNet, VGG16, and VGG19. With AlexNet, it uses 40.1 BRAM, 157 DSPs, and 5150 LUTs. With VGG16, it uses 43.14 BRAM, 160 DSPs, and 5180 LUTs. Finally, with VGG19, it uses 42.02 BRAM, 140 DSPs, and 5120 LUTs. These details are tabulated in Table 11.

This comparative analysis shows that the RCNN-SOW-CO architecture achieves better performance than LP-CNN [20] under the GoogLeNet case study on the Virtex-7. The number of calculations in the RCNN is decreased by the sparse matrix and convolutional optimization, which results in fewer hardware resources than LP-CNN [20] on the Virtex-7, as tabulated in Table 12. Figure 22 shows the comparative analysis of power for the Virtex-7. For the analysis, both the proposed RCNN-SOW-CO and the existing LP-CNN [20] are processed with an NVIDIA GPU. Alternatively, the spiking PE does not employ any DSPs, since there is no increase in spiking layers, although it takes additional hardware resources, such as LUTs. From the analysis, it is concluded that the proposed RCNN-SOW-CO with NVIDIA GPU achieves a lower power consumption of 1.15 W compared with the existing LP-CNN [20], which consumes 3.92 W.

Table 11. Comparative analysis of RCNN-SOW-CO architecture for Zynq-7020.

Table 12. Comparative analysis of RCNN-SOW-CO architecture for Virtex-7.

Figure 21. Comparison of BRAM. OIDSCNN [17], DPR-NN [23].

Figure 22. Comparison of power for Virtex-7. LP-CNN [20].

6. Conclusions

In this paper, an RCNN accelerator is proposed on the FPGA, along with weight optimization and convolutional optimization. The configuration instructions saved in the DDR are used to reconfigure the RCNN through the configuration register. Data access between the on-chip buffer and DDR is minimized by using the special functional layers, which include pooling, BN, and activation. The power used by RCNN-SOW-CO is minimized in two ways: one is the reduction in on-chip data movement, and the other is the data reuse approach of the RCNN accelerator. The number of computations in the RCNN accelerator is minimized based on the matrix representation of the sparse weights. Moreover, the loop unrolling used in the convolutional optimization increases the RCNN accelerator’s speed. From the results, it is concluded that RCNN-SOW-CO provides improved performance compared to OIDSCNN, LP-CNN, and DPR-NN. The LUT count of RCNN-SOW-CO with AlexNet on the Zynq-7020 is 5150, which is less than that of OIDSCNN and DPR-NN. In the future, the proposed RCNN accelerator can be utilized to perform faster real-time object detection.

Author Contributions

The paper investigation, resources, data curation, writing—original draft preparation, writing—review and editing, and visualization were conducted by K.M.V.G. and S.M. The paper conceptualization and software were conducted by A.A. The validation, formal analysis, methodology, supervision, project administration, and funding acquisition of the version to be published were conducted by S.R. and P.B.D. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Data Availability Statement

The data presented in this study are openly available in the MNIST dataset at doi: 10.1109/MSP.2012.2211477, reference number [24].

Conflicts of Interest

The authors declare no conflict of interest.

References

1. Holzinger, A.; Langs, G.; Denk, H.; Zatloukal, K.; Müller, H. Causability and Explainability of Artificial Intelligence in Medicine. WIREs Data Min. Knowl. Discov. 2019, 9, e1312.

2. Zhang, X.; Dahu, W. Application of Artificial Intelligence Algorithms in Image Processing. J. Vis. Commun. Image Represent. 2019, 61, 42–49.

3. Shymkovych, V.; Telenyk, S.; Kravets, P. Hardware Implementation of Radial-Basis Neural Networks with Gaussian Activation Functions on FPGA. Neural Comput. Appl. 2021, 33, 9467–9479.

4. Subashini, M.M.; Sahoo, S.K.; Sunil, V.; Easwaran, S. A Non-Invasive Methodology for the Grade Identification of Astrocytoma Using Image Processing and Artificial Intelligence Techniques. Expert Syst. Appl. 2016, 43, 186–196.

5. Levi, T.; Nanami, T.; Tange, A.; Aihara, K.; Kohno, T. Development and Applications of Biomimetic Neuronal Networks Toward BrainMorphic Artificial Intelligence. IEEE Trans. Circuits Syst. II 2018, 65, 577–581.

6. Ghani, A.; See, C.H.; Sudhakaran, V.; Ahmad, J.; Abd-Alhameed, R. Accelerating Retinal Fundus Image Classification Using Artificial Neural Networks (ANNs) and Reconfigurable Hardware (FPGA). Electronics 2019, 8, 1522.

7. Guo, K.; Sui, L.; Qiu, J.; Yu, J.; Wang, J.; Yao, S.; Han, S.; Wang, Y.; Yang, H. Angel-Eye: A Complete Design Flow for Mapping CNN Onto Embedded FPGA. IEEE Trans. Comput. Aided Des. Integr. Circuits Syst. 2018, 37, 35–47.

8. Duarte, J.; Harris, P.; Hauck, S.; Holzman, B.; Hsu, S.-C.; Jindariani, S.; Khan, S.; Kreis, B.; Lee, B.; Liu, M.; et al. FPGA-Accelerated Machine Learning Inference as a Service for Particle Physics Computing. Comput. Softw. Big Sci. 2019, 3, 1–15.

9. Liang, S.; Yin, S.; Liu, L.; Luk, W.; Wei, S. FP-BNN: Binarized Neural Network on FPGA. Neurocomputing 2018, 275, 1072–1086.

10. Wang, X.; Li, C.; Song, J. Motion Image Processing System Based on Multi Core FPGA Processor and Convolutional Neural Network. Microprocess. Microsyst. 2021, 82, 103923.

11. Teodoro, A.A.M.; Gomes, O.S.M.; Saadi, M.; Silva, B.A.; Rosa, R.L.; Rodríguez, D.Z. An FPGA-Based Performance Evaluation of Artificial Neural Network Architecture Algorithm for IoT. Wireless Pers. Commun. 2021, 1–32.

12. Sarić, R.; Jokić, D.; Beganović, N.; Pokvić, L.G.; Badnjević, A. FPGA-Based Real-Time Epileptic Seizure Classification Using Artificial Neural Network. Biomed. Signal Process. Control 2020, 62, 102106.

13. Liu, Q.; Liu, J.; Sang, R.; Li, J.; Zhang, T.; Zhang, Q. Fast Neural Network Training on FPGA Using Quasi-Newton Optimization Method. IEEE Trans. VLSI Syst. 2018, 26, 1575–1579.

14. Novickis, R.; Justs, D.J.; Ozols, K.; Greitāns, M. An Approach of Feed-Forward Neural Network Throughput-Optimized Implementation in FPGA. Electronics 2020, 9, 2193.

15. Zairi, H.; Kedir Talha, M.; Meddah, K.; Ould Slimane, S. FPGA-Based System for Artificial Neural Network Arrhythmia Classification. Neural Comput. Appl. 2020, 32, 4105–4120.

16. Pang, W.; Wu, C.; Lu, S. An Energy-Efficient Implementation of Group Pruned CNNs on FPGA. IEEE Access 2020, 8, 217033–217044.

17. Mo, Z.; Luo, D.; Wen, T.; Cheng, Y.; Li, X. FPGA Implementation for Odor Identification with Depthwise Separable Convolutional Neural Network. Sensors 2021, 21, 832.

18. Li, Z.; Zhu, C.; Gao, Y.-L.; Wang, Z.-K.; Wang, J. AlphaGo Policy Network: A DCNN Accelerator on FPGA. IEEE Access 2020, 8, 203039–203047.

19. Véstias, M. Efficient Design of Pruned Convolutional Neural Networks on FPGA. J. Signal Process. Syst. 2021, 93, 531–544.

20. El-Maksoud, A.J.A.; Ebbed, M.; Khalil, A.H.; Mostafa, H. Power Efficient Design of High-Performance Convolutional Neural Networks Hardware Accelerator on FPGA: A Case Study with GoogLeNet. IEEE Access 2021, 9, 151897–151911.

21. Zhao, L. Light Music Online System Based on FPGA and Convolutional Neural Network. Microprocess. Microsyst. 2021, 80, 103556.

22. You, W.; Wu, C. RSNN: A Software/Hardware Co-Optimized Framework for Sparse Convolutional Neural Networks on FPGAs. IEEE Access 2021, 9, 949–960.

23. Irmak, H.; Corradi, F.; Detterer, P.; Alachiotis, N.; Ziener, D. A Dynamic Reconfigurable Architecture for Hybrid Spiking and Convolutional FPGA-Based Neural Network Designs. JLPEA 2021, 11, 32.

24. Deng, L. The MNIST Database of Handwritten Digit Images for Machine Learning Research [Best of the Web]. IEEE Signal Process. Mag. 2012, 29, 141–142.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).