3.1. Datasets

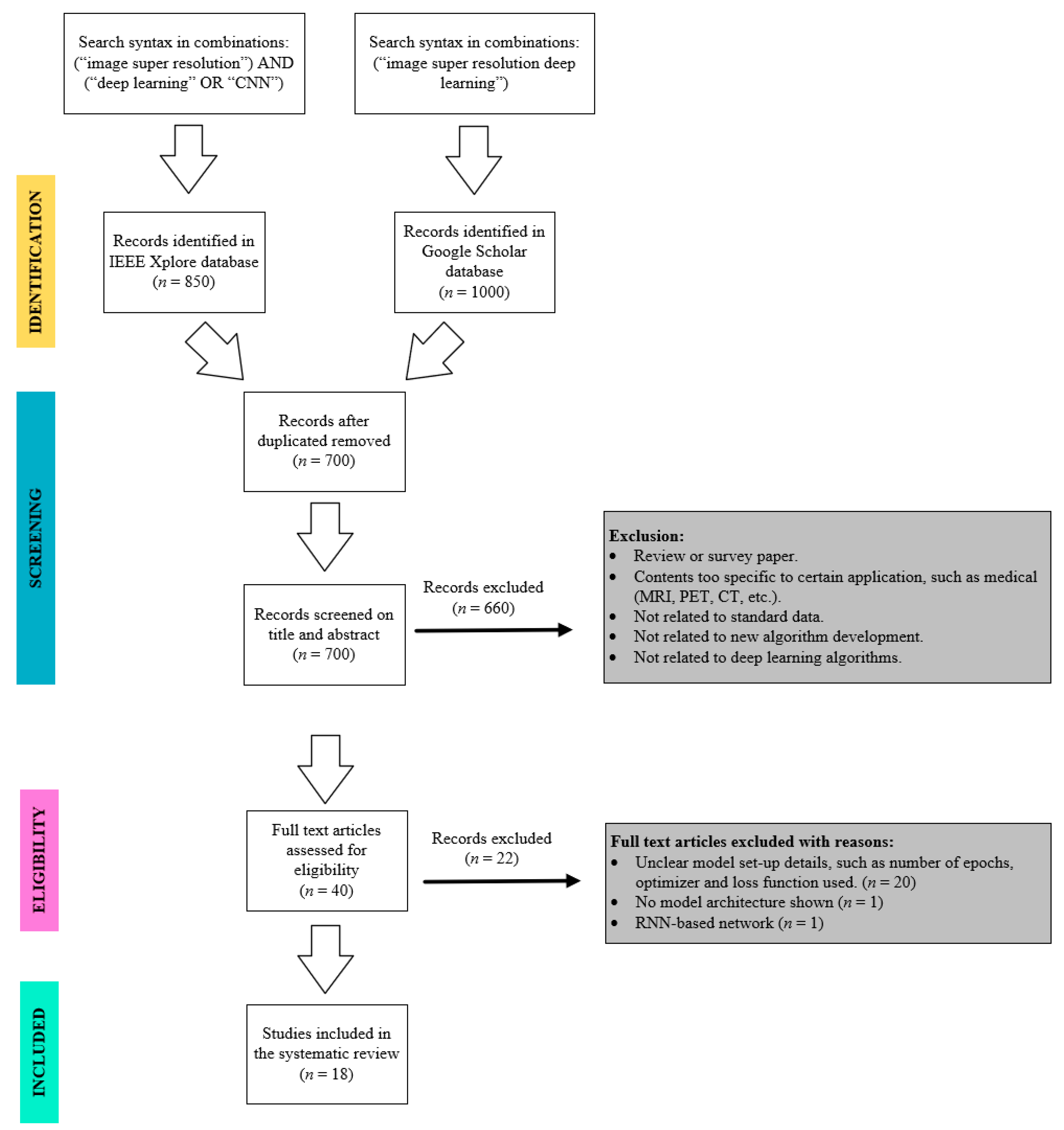

Datasets are commonly used in machine learning applications, including deep learning, to train a model to solve a specific problem. In the field of image super-resolution, researchers use various datasets to build models and to test model performance. From the 18 articles that have been reviewed, 11 datasets were identified.

The T91 dataset, which contains 91 images, is one of the image datasets used for model training. It has an average resolution of 264 × 204 pixels and an average of 58,853 pixels per image. Its contents include cars, flowers, fruit, human faces, and so on [3]. SRCNN [24], fast super-resolution convolutional neural network (FSRCNN) [27], very deep super resolution (VDSR) [28], deep recursive convolutional network (DRCN) [29], deep recursive residual network (DRRN) [30], global learning residual learning (GLRL) network [31], deep residual dense network (DRDN) [32], and fast global learning residual learning (FGLRL) network [33] are the algorithms that used T91 as a training dataset.

Since the number of images in T91 was insufficient for training purposes, some researchers added the Berkeley segmentation dataset 200 (BSDS200). The BSDS200 dataset contains images of animals, buildings, food, landscapes, people, and plants. It holds a total of 200 images with an average resolution of 435 × 367 pixels and an average of 154,401 pixels per image [34]. VDSR, DRRN, GLRL, DRDN, and FGLRL were the models built with BSDS200 as an additional training dataset. For FSRCNN, however, this dataset was used as a testing dataset. Dilated residual dense network (Dilated-RDN) [35] was another algorithm that used BSDS200 for training the model.

Another dataset that is widely used by researchers is the DIVerse 2K resolution (DIV2K) image dataset. The DIV2K dataset consists of 800 training images and 200 validation images. The images have an average resolution of 1972 × 1437 pixels and an average of 2,793,250 pixels per image. Environment, flora, fauna, handmade objects, people, and scenery are the key items found in the dataset [36]. Enhanced deep residual network (EDSR) [37], multi-connected convolutional network for super-resolution (MCSR) [38], cascading residual network (CRN) [39], enhanced residual network (ERN) [39], residual dense network (RDN) [40], Dilated-RDN [35], dense space attention network (DSAN) [41], and dual-branch convolutional neural network (DBCN) [42] were the algorithms that used the DIV2K dataset as their training dataset.

The ImageNet dataset was also used by two algorithms among the 18 articles. Efficient sub-pixel convolutional neural network (ESPCN) [43] and super resolution dense connected convolutional network (SRDenseNet) [44] were the two algorithms that used this dataset to train a model. The ImageNet dataset is one of the largest datasets, with more than 3.2 million images that are publicly available for data analytics development purposes [45]. It contains images of mammals, birds, fish, reptiles, amphibians, vehicles, furniture, musical instruments, geological formations, tools, flowers, and fruit.

The DIV2K and BSDS200 datasets were also used as testing datasets to evaluate model performance. EDSR, MCSR, CRN, ERN, and RDN included DIV2K for model evaluation, whereas FSRCNN, ESPCN, and DRDN included BSDS200. Two further datasets, Set5 and Set14, were used for model evaluation by all algorithms except MCSR and DRDN. The Set5 dataset contains only five images: a baby, a bird, a butterfly, a head, and a woman. The images are in PNG format, with an average resolution of 313 × 336 pixels and an average of 113,491 pixels per image. The Set14 dataset consists of 14 images with key items of humans, animals, insects, flowers, vegetables, comics, and slides. The average resolution and average pixel count of the images in this dataset are 492 × 446 pixels and 230,203, respectively.

Three more datasets were also used for model evaluation: the Berkeley segmentation dataset 100 (BSDS100), Urban100, and Manga109 [46]. BSDS100 was used by VDSR, EDSR, CRN, ERN, DRCN, DRRN, GLRL, FGLRL, SRDenseNet, RDN, Dilated-RDN, DBCN, and SICNN as a testing dataset. This dataset contains 100 images with key contents of animals, buildings, food, landscapes, people, and plants. Meanwhile, Urban100 contains 100 images with an average resolution of 984 × 797 pixels and an average of 774,314 pixels per image. Its images show urban scenes, such as architecture, cities, and structures. It was used by VDSR, EDSR, CRN, ERN, DRCN, FGLRL, SRDenseNet, RDN, Dilated-RDN, DBCN, and SICNN.

The Manga109 dataset is a dataset of manga volumes [47]. It contains 109 PNG images with an average resolution of 826 × 1169 pixels and an average of 966,111 pixels per image. Only RDN and SICNN included Manga109 as a testing dataset.

Table 1 summarizes the characteristics of datasets that have been used in image super-resolution algorithm development.

Table 2 lists all the algorithms with their corresponding training and testing datasets. In Table 2, the label “T” stands for training dataset, whereas “E” stands for evaluation or testing dataset.

3.2. Loss Function

A loss function is a type of learning strategy used in machine learning to measure the prediction error or reconstruction error, and it provides a guide for model optimization [3]. Two common loss functions were found in the 18 articles. One of them was the mean square error (MSE), which is also known as L2 loss. MSE can be expressed as in Equation (1):

$$L_{2} = \frac{1}{hwc}\sum_{i=1}^{h}\sum_{j=1}^{w}\sum_{k=1}^{c}\left(\hat{I}_{i,j,k} - I_{i,j,k}\right)^{2} \tag{1}$$

where $h$ is the height of the image, $w$ is the width of the image, $c$ is the number of channels of the image, $\hat{I}_{i,j,k}$ is the reconstructed pixel value at row $i$, column $j$, and channel $k$, and $I_{i,j,k}$ is the corresponding original pixel value.

L2 loss helps a model to reach a high peak signal-to-noise ratio (PSNR) [48], an indicator of model performance that will be discussed in Section 3.3. SRCNN, FSRCNN, ESPCN, VDSR, DRRN, GLRL, DRDN, FGLRL, and SRDenseNet were the algorithms that used MSE as the learning strategy.

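Another type of loss is the mean absolute error (MAE), also known as L1 loss. Although L1 loss may not help the model to achieve a better PSNR than L2 loss, it gives the model strong accuracy and convergence ability [39]. EDSR, CRN, ERN, DRCN, RDN, Dilated-RDN, DSAN, DBCN, and SICNN used MAE as the learning strategy during model training. MAE can be expressed as in Equation (2):

$$L_{1} = \frac{1}{hwc}\sum_{i=1}^{h}\sum_{j=1}^{w}\sum_{k=1}^{c}\left|\hat{I}_{i,j,k} - I_{i,j,k}\right| \tag{2}$$

where $h$, $w$, and $c$ are the height, width, and number of channels of the image, $\hat{I}_{i,j,k}$ is the reconstructed pixel value, and $I_{i,j,k}$ is the original pixel value.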
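The difference between the two losses is easiest to see on a toy example. The following is a minimal NumPy sketch, not code from any of the reviewed articles; the function names `mse_loss` and `mae_loss` and the toy image are assumptions made for illustration. A single large pixel error dominates the L2 loss much more than the L1 loss.

```python
import numpy as np

def mse_loss(reconstructed, original):
    """L2 loss: mean of squared pixel differences over all h x w x c entries."""
    diff = np.asarray(reconstructed, dtype=np.float64) - np.asarray(original, dtype=np.float64)
    return float(np.mean(diff ** 2))

def mae_loss(reconstructed, original):
    """L1 loss: mean of absolute pixel differences over all h x w x c entries."""
    diff = np.asarray(reconstructed, dtype=np.float64) - np.asarray(original, dtype=np.float64)
    return float(np.mean(np.abs(diff)))

# Hypothetical 2 x 2 single-channel image with one outlier pixel.
original = np.zeros((2, 2, 1))
reconstructed = original.copy()
reconstructed[0, 0, 0] = 4.0

print(mse_loss(reconstructed, original))  # squared error of the outlier, averaged over 4 pixels
print(mae_loss(reconstructed, original))  # absolute error of the outlier, averaged over 4 pixels
```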

MCSR proposed a custom loss function capable of coping properly with outliers, which MSE and MAE cannot do. The proposed loss function, L(r, α, β), was defined as in Equation (3), where r is the pixel-wise error between the predicted and actual HR images, ρ(α) = max(1, 2 − α), α is the shape parameter that controls the robustness of the loss, and β is the scale parameter that controls the size of the loss’s quadratic bowl. Equation (3) is a generalized equation that can represent the L1 loss, the L2 loss, and the Charbonnier loss (L1–L2 loss) by changing the value of α [49]. For example, when α = 2, Equation (3) behaves similarly to the L2 loss. When α = 1, Equation (3) behaves like the L1 loss. Therefore, the tunable parameters gave the model the flexibility to minimize the loss value and optimize the training process without being constrained to a single type of loss function. MCSR used α = 1.11 and β = 0.05 as the loss function settings.
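The effect of the shape parameter can be sketched numerically. The expression used below is an assumption: it follows the general robust loss form associated with [49], L(r, α, β) = (ρ(α)/α)·[((r/β)²/ρ(α) + 1)^(α/2) − 1], which is consistent with the definitions of ρ(α), α, and β given above, but the exact expression used in MCSR may differ. The function name `general_robust_loss` is hypothetical.

```python
import numpy as np

def general_robust_loss(r, alpha, beta):
    """Assumed form of the generalized loss; alpha must be non-zero."""
    r = np.asarray(r, dtype=np.float64)
    rho = max(1.0, 2.0 - alpha)  # rho(alpha) = max(1, 2 - alpha)
    return (rho / alpha) * (((r / beta) ** 2 / rho + 1.0) ** (alpha / 2.0) - 1.0)

errors = np.array([0.0, 0.1, 1.0, 3.0])

# alpha = 2 reduces to a scaled L2 loss: 0.5 * (r / beta)^2.
print(general_robust_loss(errors, alpha=2.0, beta=1.0))

# alpha = 1 gives a smooth L1-like (Charbonnier-type) loss: sqrt((r / beta)^2 + 1) - 1.
print(general_robust_loss(errors, alpha=1.0, beta=1.0))

# The settings reported for MCSR.
print(general_robust_loss(errors, alpha=1.11, beta=0.05))
```

Under this assumed form, large errors grow quadratically for α = 2 but roughly linearly for α = 1, which is why lower values of α are less sensitive to outliers.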

Table 3 summarizes the loss function used by each algorithm.

3.3. Evaluation Metrics

Evaluation metrics provide researchers with an indicator of the performance of the developed model. They also serve as a standard benchmark so that the performance of different models can be compared. Two metrics were found in the reviewed articles: the peak signal-to-noise ratio (PSNR) and the structural similarity (SSIM).

PSNR was used to measure the quality of the reconstructed image [3]. It is defined through the ratio of the squared maximum pixel value to the mean squared error between the original image and the reconstructed image, expressed on a logarithmic scale. PSNR can be expressed as in Equation (4):

$$\mathrm{PSNR} = 10\log_{10}\left(\frac{L^{2}}{\frac{1}{N}\sum_{i=1}^{N}\left(I(i) - \hat{I}(i)\right)^{2}}\right) \tag{4}$$

where $L$ is the maximum pixel value (equal to 255 when 8-bit pixel values are used), $N$ is the number of pixels in the image, $I$ is the original image, and $\hat{I}$ is the reconstructed image.
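A minimal NumPy sketch of the PSNR computation is given below, assuming 8-bit images by default. The function name `psnr` and the toy images are assumptions for illustration. Published results often compute PSNR on the luminance channel only and after cropping image borders, which this sketch does not do.

```python
import numpy as np

def psnr(original, reconstructed, max_value=255.0):
    """PSNR in dB between two images of identical shape."""
    original = np.asarray(original, dtype=np.float64)
    reconstructed = np.asarray(reconstructed, dtype=np.float64)
    mse = np.mean((original - reconstructed) ** 2)
    if mse == 0:
        return float("inf")  # identical images: no reconstruction error
    return float(10.0 * np.log10(max_value ** 2 / mse))

# Hypothetical 4 x 4 image and a reconstruction that is off by one grey level everywhere.
image = np.full((4, 4), 100.0)
print(psnr(image, image + 1.0))
```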

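SSIM measured the structural similarity between images in terms of luminance, contrast, and structure [3]. The comparison expressions for luminance and contrast, denoted as $C_{l}(I,\hat{I})$ and $C_{c}(I,\hat{I})$, can be obtained from Equations (5) and (6), respectively:

$$C_{l}(I,\hat{I}) = \frac{2\mu_{I}\mu_{\hat{I}} + C_{1}}{\mu_{I}^{2} + \mu_{\hat{I}}^{2} + C_{1}} \tag{5}$$

$$C_{c}(I,\hat{I}) = \frac{2\sigma_{I}\sigma_{\hat{I}} + C_{2}}{\sigma_{I}^{2} + \sigma_{\hat{I}}^{2} + C_{2}} \tag{6}$$

where $\mu_{I}$ is the mean value of the original image intensity, $\mu_{\hat{I}}$ is the mean value of the reconstructed image intensity, $\sigma_{I}$ is the standard deviation of the original image intensity, and $\sigma_{\hat{I}}$ is the standard deviation of the reconstructed image intensity. $C_{1}$ and $C_{2}$ are constants for avoiding instability, expressed as $C_{1} = (k_{1}L)^{2}$ and $C_{2} = (k_{2}L)^{2}$, respectively, where $L$ is the maximum pixel value, $k_{1} \ll 1$, and $k_{2} \ll 1$.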

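Image structure is related to the correlation coefficient between the original image $I$ and the reconstructed image $\hat{I}$. Therefore, the structure comparison can be obtained from Equation (7):

$$C_{s}(I,\hat{I}) = \frac{\sigma_{I\hat{I}} + C_{3}}{\sigma_{I}\sigma_{\hat{I}} + C_{3}} \tag{7}$$

where $\sigma_{I\hat{I}}$ is the covariance between $I$ and $\hat{I}$, which can be expressed as Equation (8), and $C_{3}$ is a constant for stability:

$$\sigma_{I\hat{I}} = \frac{1}{N-1}\sum_{i=1}^{N}\left(I(i) - \mu_{I}\right)\left(\hat{I}(i) - \mu_{\hat{I}}\right) \tag{8}$$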

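From Equations (5)–(7), SSIM can be calculated as in Equation (9):

$$\mathrm{SSIM}(I,\hat{I}) = \left[C_{l}(I,\hat{I})\right]^{\alpha}\left[C_{c}(I,\hat{I})\right]^{\beta}\left[C_{s}(I,\hat{I})\right]^{\gamma} \tag{9}$$

where $C_{l}$, $C_{c}$, and $C_{s}$ are the luminance, contrast, and structure comparisons, and $\alpha$, $\beta$, and $\gamma$ are the control parameters for adjusting the importance of luminance, contrast, and structure, respectively.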
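The three comparison terms can be combined in a short NumPy sketch. It computes the statistics once over the whole image, whereas SSIM in practice is usually evaluated over local windows and averaged, so the values will not match library implementations. The defaults k1 = 0.01, k2 = 0.03, and C3 = C2/2 are common choices and are assumptions here, as is the function name `ssim_global`.

```python
import numpy as np

def ssim_global(original, reconstructed, max_value=255.0,
                k1=0.01, k2=0.03, alpha=1.0, beta=1.0, gamma=1.0):
    """Single-window SSIM built from luminance, contrast, and structure terms."""
    x = np.asarray(original, dtype=np.float64).ravel()
    y = np.asarray(reconstructed, dtype=np.float64).ravel()
    c1 = (k1 * max_value) ** 2
    c2 = (k2 * max_value) ** 2
    c3 = c2 / 2.0  # assumed value of the structure constant

    mu_x, mu_y = x.mean(), y.mean()
    sigma_x, sigma_y = x.std(ddof=1), y.std(ddof=1)
    sigma_xy = np.sum((x - mu_x) * (y - mu_y)) / (x.size - 1)

    luminance = (2 * mu_x * mu_y + c1) / (mu_x ** 2 + mu_y ** 2 + c1)
    contrast = (2 * sigma_x * sigma_y + c2) / (sigma_x ** 2 + sigma_y ** 2 + c2)
    structure = (sigma_xy + c3) / (sigma_x * sigma_y + c3)
    # Note: structure can be negative, so non-integer gamma may yield NaN.
    return float(luminance ** alpha * contrast ** beta * structure ** gamma)

# Hypothetical 4 x 4 gradient image compared with itself and with its inverse.
image = np.arange(16, dtype=np.float64).reshape(4, 4) * 16.0
print(ssim_global(image, image))
print(ssim_global(image, 255.0 - image))
```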

Table 3 shows the evaluation metrics used by the different algorithms.

3.4. Algorithms

In this subsection, a total of 18 algorithms will be reviewed. Their network designs and the results obtained will be compared.

3.4.1. Super-Resolution Convolutional Neural Network (SRCNN)

SRCNN was the pioneering work on using a convolutional neural network (CNN) for image super-resolution reconstruction [24,48]. The idea of SRCNN was inspired by sparse coding-based super-resolution methods. SRCNN consisted of three main parts, namely patch extraction, non-linear mapping, and image reconstruction, which can be observed in Figure 2. The patch extraction stage extracts features from the bicubic-interpolated image. The extracted features were then passed through non-linear mapping, where each high-dimensional feature was mapped onto another high-dimensional feature. Finally, the output feature from the last layer of non-linear mapping was reconstructed into the HR image through a convolution process.

SRCNN and sparse coding-based methods have similar operations, which include patch extraction, non-linear mapping, and image reconstruction. The difference between them was that all the filters in SRCNN were available for optimization through end-to-end mapping, whereas in a sparse coding-based method only certain operations were. Another advantage of SRCNN was that different filter sizes can be set in non-linear mapping, so the whole operation can utilize the information obtained. Owing to these two advantages, SRCNN achieved a higher PSNR value than the sparse coding-based method.

3.4.2. Fast Super-Resolution Convolutional Neural Network (FSRCNN)

Dong et al. [27] later discovered that SRCNN required more convolutional layers in the non-linear mapping to get a better result. However, increasing the number of layers increases the running time and makes it difficult for the PSNR value to converge during the training process. Therefore, FSRCNN was proposed to overcome these problems. FSRCNN contained five major parts, shown in Figure 3, namely feature extraction, shrinking, non-linear mapping, expanding, and image reconstruction. One of the differences between FSRCNN and SRCNN was the addition of a shrinking layer and an expanding layer in FSRCNN. The shrinking layer reduces the dimension of the features extracted by the previous layer. Meanwhile, the expanding layer reverses the shrinking process by expanding the output feature of the last layer in non-linear mapping. Besides, FSRCNN used deconvolution as the upsampling module.

FSRCNN achieved a 1.3% improvement in PSNR value compared to SRCNN, together with an average 78% reduction in running time. The improvements were due to the following changes: (a) a deconvolutional kernel was used instead of bicubic interpolation, and the deconvolutional kernel outperformed the bicubic kernel; (b) a shrinking layer was used, which reduced the overall number of parameters in the model, saving training time and memory; (c) the number of filters used in FSRCNN was smaller than that in SRCNN, which in turn improved the model performance and reduced running time; (d) the filter size in FSRCNN was smaller than that in SRCNN, resulting in fewer parameters, so the network could be trained more efficiently in a shorter time.
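The parameter saving from the shrinking layer in point (b) can be checked with simple arithmetic. The sketch below counts convolution weights and biases for a mapping stage with and without 1 × 1 shrinking and expanding layers. The channel counts d = 56 and s = 12, the number of mapping layers m = 4, and the 3 × 3 mapping kernels are illustrative assumptions, not values taken from the text above.

```python
def conv_params(in_channels, out_channels, kernel_size):
    """Weights plus biases of one 2-D convolutional layer."""
    return in_channels * out_channels * kernel_size ** 2 + out_channels

def mapping_params(channels, kernel_size, num_layers):
    """Parameters of num_layers stacked convolutions with a constant channel width."""
    return num_layers * conv_params(channels, channels, kernel_size)

# Hypothetical configuration: d feature channels, s shrunken channels, m mapping layers.
d, s, m = 56, 12, 4

# Mapping performed directly on the d-channel features.
without_shrinking = mapping_params(d, 3, m)

# 1 x 1 shrink to s channels, map at s channels, then 1 x 1 expand back to d channels.
with_shrinking = conv_params(d, s, 1) + mapping_params(s, 3, m) + conv_params(s, d, 1)

print(without_shrinking, with_shrinking)
```

With these assumed sizes, the mapping stage needs roughly 17 times fewer parameters when the shrinking and expanding layers are used.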

3.4.3. Efficient Sub-Pixel Convolutional Neural Network (ESPCN)

ESPCN was developed to overcome the computational complexity of SRCNN, which grows quadratically with the upscaling factor. When an upscaling factor n is applied to the LR image using bicubic interpolation, the computation cost in SRCNN increases by a factor of n². Besides, the interpolation method did not bring additional information to solve the ill-posed reconstruction problem [43]. The design of ESPCN is shown in Figure 4. The design was the same as that of SRCNN, except for the upsampling module. In ESPCN, sub-pixel convolution was used instead of bicubic interpolation.
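The rearrangement step of sub-pixel convolution can be sketched in NumPy. All convolutions run at LR resolution and produce C·n² channels, which are then rearranged into C channels at n times the spatial resolution. The function name `pixel_shuffle` and the channel ordering are assumptions; the ordering follows the convention commonly used in deep learning frameworks.

```python
import numpy as np

def pixel_shuffle(features, scale):
    """Rearrange (C * scale^2, H, W) features into (C, H * scale, W * scale)."""
    channels_in, height, width = features.shape
    if channels_in % (scale * scale) != 0:
        raise ValueError("channel count must be divisible by scale^2")
    channels_out = channels_in // (scale * scale)
    # Split the channel axis into (C, scale, scale), then interleave with H and W.
    x = features.reshape(channels_out, scale, scale, height, width)
    x = x.transpose(0, 3, 1, 4, 2)  # (C, H, scale, W, scale)
    return x.reshape(channels_out, height * scale, width * scale)

# Hypothetical example: four channels at a single LR pixel become one 2 x 2 HR patch.
lr_features = np.arange(4, dtype=np.float64).reshape(4, 1, 1)
hr_patch = pixel_shuffle(lr_features, 2)
print(hr_patch.shape)
print(hr_patch[0])
```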

The deployment of sub-pixel convolution in ESPCN demonstrated a positive result in PSNR value compared to SRCNN. It was observed that, after using sub-pixel convolution, the weights of the first-layer filters and the last-layer filters in ESPCN had a strong similarity in terms of their features. Shi et al. [43] performed an additional experiment to compare the effect of using a tanh function and a rectified linear unit (ReLU) as activation functions after the sub-pixel convolution. As a result, the tanh activation function showed better results than the ReLU activation function.

3.4.4. Very Deep Super Resolution (VDSR)

The network design of VDSR is shown in Figure 5. It was proposed by Kim et al. [28] to overcome the problem of SRCNN requiring more mapping layers to get better model performance. VDSR introduced residual learning between the input and output of the final feature mapping layer. Residual learning added the output features from the final layer to the interpolated features through a skip connection. Since low-level features and high-level features are highly correlated, the skip connection helped to utilize the features from the low-level layer by combining them with the high-level features, which improved the model performance. The skip connection was therefore able to solve the vanishing gradients problem caused by the increasing number of layers in the model. The deployment of residual learning in VDSR has two benefits over SRCNN. First, it helped the network to achieve convergence in a shorter time, since the LR image has a high correlation with the HR image. A total reduction of 93.9% in running time was observed in the VDSR model. Second, VDSR provided a better PSNR value than SRCNN.

3.4.5. Enhanced Deep Residual Network (EDSR)

Inspired by the residual network in VDSR and the network architecture in SRResNet, EDSR was proposed to overcome the problem of heavy computation time and memory consumption due to the application of bicubic interpolation as an upsampling technique [37]. EDSR, as shown in Figure 6, contained three major modules: feature extraction, the residual block module, and the upsampling module. The idea of the residual block came from SRResNet, whereas the skip connection idea was from VDSR. Within each residual block, residual learning was also applied between the input and output features. The difference between VDSR and EDSR was that some of the layers in VDSR were replaced with residual blocks. Besides, EDSR used sub-pixel convolution as the upsampling module, while VDSR used bicubic interpolation. Compared to SRResNet, the batch normalization (BN) layers were removed from the residual block in the EDSR model.

EDSR utilized the information from each residual block by introducing residual learning within the block, and this improved the PSNR value by about 3.84% compared to VDSR. Besides, the adoption of sub-pixel convolution also improved model performance, in addition to reducing computation time and memory consumption. Compared with SRResNet, removing BN, which consumed the same amount of memory as the preceding convolution layer, also saved about 40% of memory usage during the training process. Besides, removing BN can improve model performance, as BN removes the range flexibility of the features by normalizing them.

3.4.6. Multi-Connected Convolutional Network for Super-Resolution (MCSR)

Chu et al. [38] mentioned that EDSR failed to utilize the low-level features although it reduced the vanishing-gradient problem, so EDSR still has the potential to achieve better performance. Besides, the residual learning in VDSR is only adopted between the first layer and the last layer of non-linear mapping, so the performance may degrade. Therefore, MCSR, with the network design shown in Figure 7, was developed to overcome these problems. Instead of using a single-path network in the residual block as in EDSR, MCSR modified it into a multi-connected block (MCB) that used a multi-path network. In MCSR, the residual learning concatenated the features instead of adding them as in EDSR.

The custom design loss function used in MCSR provided flexibility to the model to minimize loss value and optimized the training process. As a result, MCSR showed an improvement of 0.79% in PSNR value compared to EDSR. Besides the help from the loss function, the improvement made was also due to rich information in local features (feature in multi-connected block) being extracted and combined with high-level features via concatenation.

3.4.7. Cascading Residual Network (CRN)

Recently, Lee et al. [39] designed CRN, as shown in Figure 8, to overcome the massive number of parameters in the EDSR network structure, which resulted from substantially increasing the depth to improve the performance of the EDSR model. The network design of CRN was inspired by the EDSR network, with the residual block in EDSR replaced by a locally sharing group (LSG). The LSG consisted of a number of local wider residual blocks (LWRB). An LWRB was the same as the residual block in EDSR, except that the number of channels used in the convolutional layer before ReLU activation was larger, whereas a smaller number of channels was used in the second convolutional layer. Residual learning was adopted in each LSG and each LWRB, which can be observed in Figure 8.

The experiment showed that the performance of the CRN model was comparable to that of the EDSR model. Although the PSNR value of CRN was slightly lower than that of EDSR, CRN ran four times faster than EDSR. This is because CRN utilized all the features, including those from the LWRBs, with the aid of residual learning. As a result, CRN required a smaller depth to achieve performance close to that of EDSR, and thus a shorter time was required to achieve convergence in PSNR value. For example, EDSR used 32 residual blocks, while CRN used 4 LSGs with each LSG made up of 4 LWRBs, which is equivalent to 16 residual blocks.

3.4.8. Enhanced Residual Network (ERN)

Lee et al. [39] also proposed another network called ERN, which performed slightly better than CRN. The structure of ERN was also derived from EDSR, with an additional skip connection between the LR image and the output of the last LWRB via a multiscale block (MSB), as illustrated in Figure 9. The purpose of the MSB was to extract low-level features directly from the original image at different scales. Instead of using LSGs, ERN deployed LWRBs in non-linear mapping.

Compared with EDSR, ERN still had a small performance gap. However, ERN worked better than CRN. Since ERN only used 16 LWRBs in the model, which was a significantly smaller depth compared to EDSR, ERN had a shorter running time and was four times faster than EDSR. As both CRN and ERN adopted residual learning to utilize all the feature information from the low levels of the network, this also became one of their benefits over FSRCNN and ESPCN.

3.4.9. Deep-Recursive Convolutional Network (DRCN)

DRCN was the first algorithm that applied a recursive method for image super-resolution [29]. As shown in Figure 10, DRCN consisted of three major parts, namely the embedding net, inference net, and reconstruction net. The embedding net extracted features from the interpolated image. The extracted features were then passed through the inference net, in which all the filters shared the same weights. All the intermediate outputs from each convolutional layer in the inference net and the interpolated features were convolved before they were added together to form an HR image.

DRCN was designed to overcome the problem of requiring many mapping layers to achieve better performance in SRCNN. Since the recursive method was used, shared weight allowed the network to widen the receptive field without increasing the model capacity, and therefore, fewer resources were required during the training process. Besides, the assembling of all intermediate outputs from the inference net significantly improved the model. The residual learning was also included in the network, which gave additional benefit to the model to achieve better convergence. Overall, an improvement of 2.44% was observed in DRCN compared to SRCNN.
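The trade-off described above, a wider receptive field at constant model capacity, can be made concrete with a small calculation. The sketch below assumes stride-1 3 × 3 convolutions with 64 channels; these sizes and the function names are illustrative assumptions, not values taken from the text above.

```python
def conv_params(in_channels, out_channels, kernel_size):
    """Weights plus biases of one 2-D convolutional layer."""
    return in_channels * out_channels * kernel_size ** 2 + out_channels

def recursive_net_stats(recursions, channels=64, kernel_size=3):
    """Receptive field and parameter counts after applying a layer `recursions` times."""
    per_layer = conv_params(channels, channels, kernel_size)
    return {
        # Each stride-1 convolution widens the receptive field by (kernel_size - 1).
        "receptive_field": 1 + recursions * (kernel_size - 1),
        # One weight set reused at every recursion.
        "shared_params": per_layer,
        # A separate weight set per layer, as in a plain stacked network.
        "unshared_params": recursions * per_layer,
    }

stats = {t: recursive_net_stats(t) for t in (1, 4, 16)}
for t, s in stats.items():
    print(t, s)
```

With shared weights, 16 recursions reach a 33 × 33 receptive field under these assumptions while storing the parameters of a single layer.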

3.4.10. Deep-Recursive Residual Network (DRRN)

Two drawbacks were observed in DRCN. First, DRCN requires supervision on every recursion, which was a burdensome process. Second, only a single set of weights was shared among all convolutional layers in the inference net. To address these drawbacks, DRRN was developed with the network structure shown in Figure 11a. Building on the basic idea of DRCN, the inference net was replaced with a recursive block. The recursive block consisted of multiple residual units, with each residual unit having two convolutional layers. The first convolutional layer of every residual unit (light blue block in Figure 11b) shared one set of weights, while the other convolutional layer of every residual unit (light green block in Figure 11b) shared a second set of weights.

The PSNR value of DRRN showed a 0.7% improvement compared to DRCN. The improvement made was observed for the following points. First, the involvement of residual learning in the residual block helped to solve the degradation problem affecting the model performance. Second, many computation resources can be saved as many layers share the same weights. Besides that, DRRN relieved the burden of supervision on every recursion by designing a recursive block with a multi-path structure.

3.4.11. Global Learning Residual Learning Network (GLRL)

Inspired by the work on SRCNN, DRCN, and DRRN, GLRL was developed by Han et al. [31] with the network structure illustrated in Figure 12. GLRL combined the basic design of SRCNN, the intermediate output design of DRCN, and the recursive block design of DRRN. In non-linear mapping, a local residual block (LRB) was used, and each residual block had a structure similar to the recursive block in DRRN. Some modifications were made to the residual block. First, a parametric rectified linear unit (PReLU) was applied after the convolutional layer. Second, an additional process was applied to the input features before they were added to each residual unit. All the intermediate outputs from each local residual block went through a step similar to that in DRCN for reconstruction purposes.

A comparison was made between SRCNN and GLRL in terms of their PSNR value. The results showed that GLRL achieved a 0.8% improvement compared to SRCNN. An additional experiment was carried out to compare the effect of the number of LRBs on the model performance. The greater the number of LRBs, the better the performance of the model. The PReLU layer, which has a learnable coefficient for negative inputs, was able to avoid the “death structure” caused by zero gradients in the ReLU.
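The zero-gradient issue can be illustrated with a minimal NumPy sketch that compares the gradients of ReLU and PReLU for negative inputs. In PReLU the negative-side coefficient is learned during training; here it is fixed to a hypothetical value of 0.25 for illustration, and the function names are assumptions.

```python
import numpy as np

def relu(x):
    return np.maximum(x, 0.0)

def relu_grad(x):
    """Gradient of ReLU with respect to its input: zero for all negative inputs."""
    return (x > 0).astype(np.float64)

def prelu(x, a):
    return np.where(x > 0, x, a * x)

def prelu_grad(x, a):
    """Gradient of PReLU with respect to its input: `a` instead of zero for negative inputs."""
    return np.where(x > 0, 1.0, a)

x = np.array([-2.0, -0.5, 1.0])
a = 0.25  # hypothetical coefficient; learned in an actual network

print(relu_grad(x))      # no gradient flows through the negative inputs
print(prelu_grad(x, a))  # a small gradient still flows through the negative inputs
```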

3.4.12. Fast Global Learning Residual Learning Network (FGLRL)

Han et al. [33] later extended their work by modifying the network structure of GLRL, which resulted in FGLRL. In GLRL, the SRCNN design was used as the base design, but in FGLRL, FSRCNN was used as the base design. Therefore, FGLRL consisted of five parts, as shown in Figure 13: patch extraction, shrinking, non-linear mapping, expanding, and reconstruction. PReLU was used as the activation function in this network. In GLRL, bicubic interpolation was used as the upsampling kernel, whereas in FGLRL, a deconvolutional layer was used in the upsampling module.

FGLRL worked better in the Set5 dataset at the scale factor when compared to the DRCN model. When compared to GLRL, FGLRL showed a 1.3% improvement in PSNR value. Although the model performance of FGLRL may not be satisfactory, its running time still had an advantage over DRCN and GLRL: FGLRL ran two times faster than both. This is because the shrinking layer, which reduced the dimension of the patch extraction features, helped to reduce memory resources and running time.

3.4.13. Deep Residual Dense Network (DRDN)

In DRRN, the skip connection is only applied between the input feature and the output of the residual unit. DRRN was modified for further improvement, which resulted in DRDN. The structure of DRDN is illustrated in Figure 14. DRDN consisted of shallow feature extraction, a residual dense network, and fusion reconstruction. Since the dense connection was introduced within the residual block, the residual block in DRDN was also known as a dense block (DB). Fusion reconstruction concatenated all the intermediate outputs from each dense block before the HR image was reconstructed.

Dense connection brought all the input features to all inputs of each convolutional layer. Besides, every output from each convolutional layer will also be brought to the input of the subsequent layer. Therefore, a dense connection linked all the features from each of the convolutional layers within the DB. DRDN benefitted in terms of computing cost and ran faster than DRRN because the number of network layers used in DRDN was less than that in DRRN. Besides, the combination of all intermediate outputs from the dense block at different depths helped the model to converge faster, which was two times faster than DRRN.
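The dense connection pattern can be sketched in NumPy as repeated channel concatenation. In the sketch below, 1 × 1 convolutions with random weights stand in for the real convolutional layers, and the channel count, growth rate, and number of layers are hypothetical; the point is only to show how every layer receives the block input together with all earlier outputs.

```python
import numpy as np

def dense_block(x, weights):
    """x: (C0, H, W) input features; weights[i]: (growth, C0 + i * growth) 1 x 1 kernels."""
    features = [x]
    for w in weights:
        # Every layer sees the block input and the outputs of all previous layers.
        stacked = np.concatenate(features, axis=0)
        out = np.maximum(np.einsum("oc,chw->ohw", w, stacked), 0.0)  # 1 x 1 conv + ReLU
        features.append(out)
    return np.concatenate(features, axis=0)

rng = np.random.default_rng(0)
c0, growth, layers = 8, 4, 3  # hypothetical sizes
weights = [rng.standard_normal((growth, c0 + i * growth)) for i in range(layers)]
x = rng.standard_normal((c0, 5, 5))

y = dense_block(x, weights)
print([w.shape[1] for w in weights])  # input width of each layer
print(y.shape)                        # channels grow to C0 + layers * growth
```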

3.4.14. Super Resolution Dense Connected Convolutional Network (SRDenseNet)

SRCNN and VDSR did not fully utilize all the features, since only the high-level features at the very deep end were involved in reconstruction. This may cause a loss of rich information and limit the model performance. Therefore, SRDenseNet, as shown in Figure 15, was proposed to overcome the problem. The SRDenseNet design was inspired by DenseNet, which has the capability of improving the flow of information through the network. The network design had five major parts, namely feature extraction, the residual dense network, the bottleneck layer, the upsampling module, and the reconstruction module. In the residual dense network, each dense block contained a series of convolutional layers with dense connections.

SRDenseNet improved the PSNR value by about 4.3% when compared to SRCNN. The model also improved the PSNR value by 2.0% when compared to VDSR and DRCN. Therefore, the adoption of dense connection in dense blocks significantly showed that it was able to solve the gradient vanishing problem, which often happens when the network becomes deeper. Besides, the adoption of the deconvolutional layer also helped in improving the reconstruction process since the layers are able to learn the upscaling filters.

3.4.15. Residual Dense Network (RDN)

SRDenseNet still had a minor disadvantage, although it performed well compared to SRCNN, VDSR, and DRCN. The disadvantage was that the model becomes hard to train as it gets wider with dense blocks. Thus, RDN [40] was proposed, with its network design shown in Figure 16. In RDN, a residual dense block (RDB) was used instead of a DB. Other than dense connection, residual learning is also adopted within the RDB. The residual learning in the RDB is also known as local residual learning. Other than that, the intermediate outputs from each RDB were fused through concatenation before global residual learning was applied.

With the combination of global residual learning, local residual learning, and dense connection, RDN performed better than SRDenseNet, achieving an improvement of 1.3%. The improvement can be explained by the contiguous memory mechanism used in the network, which allowed the state of the preceding RDB to be passed directly to each layer of the current RDB and thus strengthened the relationship between low-level and high-level features. Besides, the local feature fusion in the RDB allowed a larger growth rate while maintaining the stability of the network.

3.4.16. Dilated Residual Dense Network (Dilated-RDN)

Dilated-RDN, as shown in Figure 17, was developed by Shamsolmoali et al. [35]. The network design was inspired by DenseNet and RDN, and therefore the overall structure was very similar to the RDN network. One of the unique parts of this network was the introduction of an optimized unit (OUnit) activation function. OUnit was a learnable activation function that performed better than the ReLU activation, which depended on the threshold settings of the function. The RDB structure was the same as that in RDN. One of the major differences between Dilated-RDN and RDN was the upsampling module. Dilated-RDN used bicubic interpolation, while RDN used sub-pixel convolution for upsampling.

An improvement of about 3.0% was observed in the PSNR value when compared to RDN and DRRN. In terms of running time, Dilated-RDN also ran faster than RDN and DRRN. The key to the improvement was the use of dilated filters for all convolutional layers. Shamsolmoali et al. mentioned that removing striding was able to improve the image resolution, but it reduced the receptive field in the subsequent layers, so a lot of rich information could be lost. Therefore, dilated convolution was used to increase the receptive field in the higher layers while removing the striding.
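How dilation enlarges the receptive field without striding can be shown with a short calculation. A kernel of size k with dilation rate d covers k + (k − 1)(d − 1) input positions while keeping the same number of weights. The dilation rates used below are hypothetical and are not the settings of Dilated-RDN.

```python
def effective_kernel(kernel_size, dilation):
    """Span of input positions covered by one dilated kernel."""
    return kernel_size + (kernel_size - 1) * (dilation - 1)

def receptive_field(kernel_size, dilations):
    """Receptive field of stacked stride-1 convolutions with the given dilation rates."""
    field = 1
    for d in dilations:
        field += effective_kernel(kernel_size, d) - 1
    return field

# Three 3 x 3 layers: ordinary convolutions versus hypothetical dilation rates 1, 2, 4.
print(receptive_field(3, [1, 1, 1]))
print(receptive_field(3, [1, 2, 4]))
```

Both stacks have the same number of weights, but under these assumed rates the dilated stack sees a 15 × 15 region instead of 7 × 7.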

3.4.17. Dense Space Attention Network (DSAN)

A recent algorithm called DSAN [41] was developed with inspiration from RDN. DSAN and RDN have a similar structure except in the residual block. In RDN, the residual dense block (RDB) was used, whereas in DSAN, a dense space attention block (DSAB) was used. The difference between DSAB and RDB was the addition of the convolution block attention module (CBAM) in DSAB, which can be observed in Figure 18. CBAM was an attention mechanism that adaptively amplified and shrank the features from each channel.

The PSNR value of DSAN showed an improvement of 1.2%, 5.5%, and 2.5% when compared to SRDN, SRCNN, and VDSR, respectively. The improvement showed that the adoption of CBAM gave a great advantage to the network by giving more attention to the useful channels of the features and enhancing its discrimination abilities. Besides, both global residual learning and local residual learning helped to utilize all the features, from the low-level features to the end of the network, to improve the model performance. Other than that, the deployment of dense connection further utilized the features within the DSAB, which gave an additional benefit to the model.

3.4.18. Dual-Branch Convolutional Neural Network (DBCN)

Most of the algorithms reviewed in the previous sections simply stacked convolutional layers in a chain. This increased the running time and memory complexity of the model. Therefore, a dual-branch image super-resolution algorithm named DBCN was proposed by Gao et al. [42].

Figure 19 shows the network design of DBCN. In DBCN, the network was split into two branches: one branch adopted convolutional layers with Leaky ReLU as the activation function, while the other branch adopted dilated convolutional layers with Leaky ReLU as the activation function. The outputs of the two branches were then fused through concatenation before being upsampled. DBCN also differed from other networks in that it combined bicubic interpolation with another upsampling method, such as a deconvolutional kernel, for the reconstruction process.
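The two-branch idea can be illustrated in one dimension. The sketch below is not the DBCN implementation: the kernels, the dilation rate of 2, and the Leaky ReLU slope of 0.2 are assumptions chosen for illustration, and the "concatenation" is simply a list of the two branch outputs stacked along a channel axis.

```python
def leaky_relu(x, slope=0.2):
    # slope value is an assumption, not taken from the DBCN paper
    return x if x >= 0 else slope * x


def conv1d(signal, kernel, dilation=1):
    """Zero-padded 'same' 1-D convolution (cross-correlation) for odd kernels."""
    centre = len(kernel) // 2
    out = []
    for i in range(len(signal)):
        acc = 0.0
        for j, w in enumerate(kernel):
            idx = i + (j - centre) * dilation  # dilation spreads the taps apart
            if 0 <= idx < len(signal):
                acc += w * signal[idx]
        out.append(acc)
    return out


def dual_branch(signal, kernel_a, kernel_b, dilation=2):
    """Run a standard and a dilated branch, then concatenate along channels."""
    branch_a = [leaky_relu(v) for v in conv1d(signal, kernel_a)]
    branch_b = [leaky_relu(v) for v in conv1d(signal, kernel_b, dilation)]
    return [branch_a, branch_b]


# A unit impulse shows the footprint of each branch.
fused = dual_branch([0, 0, 1, 0, 0], [1, 1, 1], [1, 1, 1])
```

With the same 3-tap kernel, the standard branch responds over three neighbouring positions while the dilated branch responds over a span of five, so the fused features mix a local and a wider context.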

Several benefits can be observed in the DBCN design. First, the dual-branch structure addressed the network complexity problem that is often observed in chain-based networks. Second, the adoption of dilated convolutional filters enhanced image quality during reconstruction. Third, residual learning helped the model to converge faster. With these features, DBCN achieved an improvement of 0.68% compared to DRCN and 0.74% compared to VDSR.

3.4.19. Single Image Convolutional Neural Network (SICNN)

Another type of dual-branch network, known as SICNN, was also proposed, as shown in Figure 20. The operation of SICNN was slightly different from that of DBCN. In SICNN, one of the branches was processed using a deconvolutional kernel as the upsampling kernel, while the other branch was processed using a bicubic interpolation kernel. The outputs of the two branches were then fused through concatenation. Similar to DBCN, features from the bicubic interpolated image were added to the fused features before an HR image was reconstructed.
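The deconvolutional (transposed convolution) upsampling used in one of the branches can be illustrated in one dimension. This is a minimal sketch with hand-picked kernels, not the learned kernels of SICNN; the function name and the stride of 2 are assumptions made for illustration.

```python
def transposed_conv1d(signal, kernel, stride=2):
    """1-D transposed convolution: each input sample stamps a scaled copy of
    the kernel into the output, with copies placed `stride` positions apart."""
    out = [0.0] * ((len(signal) - 1) * stride + len(kernel))
    for i, x in enumerate(signal):
        for j, w in enumerate(kernel):
            out[i * stride + j] += x * w
    return out


# A [1, 1] kernel with stride 2 reproduces nearest-neighbour upsampling.
nearest = transposed_conv1d([1, 2], [1, 1])

# A [0.5, 1, 0.5] kernel interpolates linearly between neighbouring samples.
linear = transposed_conv1d([1, 2, 3], [0.5, 1, 0.5])
```

Because the kernel is a free parameter, a trained deconvolutional layer can learn an upsampling filter suited to the data, whereas the interpolation branch applies a fixed kernel.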

SICNN achieved about 2.0% improvement in PSNR value compared to RDN and about 4.4% improvement compared to SRCNN. The improvement was attributed to the following factors. First, features at different scales were extracted from the LR image through the different branches, which allowed useful information to be included during model training. Second, the deconvolutional layer enlarged the feature map, simplified the calculation, and sped up convergence. Finally, the adoption of residual learning gave the model an additional advantage by making it converge faster.

Table 4 summarizes the quantitative results obtained by authors for each of the algorithms developed.

In terms of qualitative evaluation, the characteristics of the images produced by each algorithm were observed. Comparing SRCNN with the bicubic interpolation method, the image produced by interpolation was blurry, and many details could not be observed as clearly as in the SRCNN output. Not much difference could be observed when the outputs of FSRCNN and ESPCN were compared with the output of SRCNN. However, FSRCNN and ESPCN had a better running speed than SRCNN.

With the introduction of residual learning in VDSR, the texture of the image was better than that of SRCNN. Models that enhanced residual learning further, such as EDSR, MCSR, CRN, and ERN, made the image texture better still. DRCN, which deployed both recursive and residual learning, produced sharper edges with respect to patterns, whereas the edges produced by SRCNN were blurred. DRRN achieved even sharper edges than DRCN. Both GLRL and FGLRL produced much clearer images than DRCN. However, the texture of the images in GLRL and FGLRL was not as good as that of DRCN.

The image produced by DRDN achieved a better texture compared to VDSR. SRDenseNet reconstructed images with a better texture pattern and was able to avoid the distortions that appeared in the outputs of DRCN, VDSR, and SRCNN. RDN and Dilated-RDN suppressed the blurring artifacts and recovered sharper edges than DRRN. DSAN recovered high-frequency information in both texture and edge areas; thus, the texture and edges were better compared to SRCNN and VDSR. DBCN showed a better restoration of collar texture without extra artifacts, thus having a better visual result than DRCN. SICNN had a better ability to restore edges and textures when compared to SRCNN and RDN.

3.4.20. Summary

Table 5 summarizes the type of upsampling strategy and network design strategy used by each of the algorithms that have been reviewed in the previous section. Meanwhile, details of the advantages and disadvantages of each algorithm are also summarized in Table 6.