1. Introduction

With the implementation of communication abilities in mass production vehicles [1], the realization of cooperative driving features in commercial vehicles is near. Cooperative driving is a form of automated driving where vehicles exploit communication with each other or with infrastructure [2], via vehicle-to-vehicle (V2V) communication, vehicle-to-infrastructure (V2I) communication, or a combination of both. The most commonly known application of cooperation among road vehicles is cooperative adaptive cruise control (CACC) [3], which is an enhanced version of adaptive cruise control (ACC) using information received through V2V communication. CACC can result in improvements in safety and ride comfort [4], increased traffic throughput [3], and fuel savings up to 20% for commercial vehicles [5].

A wide variety of communication structures and control approaches have been proposed for CACC [2,3], varying from simple uni-directional to more complex multi-directional communication solutions. In addition, cooperation among vehicles can be used for exploration [6], traffic light scheduling [7], and path planning [8]. Longitudinal and lateral cooperative driving is realized via decoupled [9,10,11] and combined approaches [12], where the latter is a preferred design approach because of the coupled longitudinal and lateral vehicle dynamics [12]. Control of lateral vehicle motions can be divided into path following and trajectory following [12]. Path following is a time-independent spatial approach, whereas trajectory following relies on a time-dependent signal, imposing requirements on the velocity and acceleration. Platooning is a term that is used for vehicles driving together (cooperating), typically in the form of a string of vehicles following each other at a short distance. For platooning, where the short inter-vehicle distance can significantly limit the view [13], a vehicle following approach is preferred [12]. Cooperative driving technologies mostly use a combination of on-board sensor information (e.g., radar) and V2V communicated information. With the use of V2V communication and on-board sensors, preceding vehicles can be accurately followed [14]. It is shown in [15] that the localization accuracy can be improved if more vehicles are equipped with V2V communication. However, a factor limiting the performance of automated driving features is the available hardware [16]. For example, current ACC applications require sensor update rates from

to

, but future safety applications may require update rates up to

[16]. Applying a control approach similar to [12] requires detailed information on state components of the lead vehicle, which are typically not measurable from the following vehicle. In the remainder of the work, the lead vehicle is referred to as the target vehicle and the following vehicle is referred to as the host vehicle. The required state information for control approaches such as the one presented in [12] can be obtained via V2V communication, and an estimator can be used to improve the signal quality and the continuity of the signals [15].

Common dynamic models used for vehicle tracking range from simple kinematic models to more complex models like the single-track model [16]. One of the determining factors of the model complexity is the specific state components that need to be available for the considered controller. Some of these state components may not be directly measurable and therefore need to be estimated. Bayuwindra [12] presented an orientation error observer based on an extended unicycle model. Ploeg et al. [17] presented a state estimator for the longitudinal acceleration of the preceding vehicle in order to (partly) maintain the favorable string stability properties during persistent loss of communication. The Singer acceleration model describes the acceleration as a first-order system with stochastic disturbances; such a model has been adopted in [17], with position and velocity measurements obtained from a radar sensor. A similar approach is presented in [18], where an adaptive Kalman filter with a continuously updated adaptive acceleration mean improves the performance compared to the Singer acceleration model from [17]. Wei et al. [14] presented a longitudinal and lateral vehicle following control approach using only a radar and V2V communication, where the heading, acceleration, and yaw rate are communicated. The host vehicle dynamics are estimated using a single-track model [14]. Ess et al. [19] presented an object detection and tracking method using vision sensors. A kinematic steering model is used to track vehicles, but the low localization accuracy increases the difficulty of generating vehicle trajectories [19]. A survey paper [20] on vision-based vehicle detection and tracking reveals that (extended) Kalman filtering and particle filtering are common approaches to predict vehicle motions from vision data. In addition, the use of Kalman filters in different applications is widely covered in the literature, e.g., the estimation of battery state of charge and capacity [21,22,23]. Finally, variations of a constant acceleration model with yaw rate have been used in [24] for tracking incoming traffic in urban scenarios.
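
The first-order acceleration behavior of a Singer-type model can be illustrated with a minimal sketch. It assumes a state vector of position, velocity, and acceleration, a sample time `T`, and a reciprocal maneuver time constant `alpha`; these names and the numerical values are illustrative assumptions and are not taken from [17]. Only the deterministic part of the prediction is shown, and the stochastic disturbance is omitted.

```python
import math


def singer_transition(T, alpha):
    """Discrete-time transition matrix of a Singer-type model.

    State order: [position, velocity, acceleration].
    The acceleration follows da/dt = -alpha * a (+ noise, omitted here).
    """
    e = math.exp(-alpha * T)
    return [
        [1.0, T, (alpha * T - 1.0 + e) / alpha ** 2],
        [0.0, 1.0, (1.0 - e) / alpha],
        [0.0, 0.0, e],
    ]


def predict_state(state, T, alpha):
    """Propagate the state mean over one sample (no process noise)."""
    F = singer_transition(T, alpha)
    return [sum(F[i][j] * state[j] for j in range(3)) for i in range(3)]


# Hypothetical example: target at 10 m/s, accelerating with 2 m/s^2.
next_state = predict_state([0.0, 10.0, 2.0], T=0.1, alpha=0.5)
```

For small `alpha` the matrix approaches that of a constant acceleration model, while a large `alpha` lets the predicted acceleration decay quickly toward zero.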

In most sampled estimation frameworks, measurements are considered to be available at every sample. However, in practice this is often not the case, especially in multi-sensor set-ups. In the design of the estimation framework, the different sensor update rates should be taken into account. In the literature, examples can be found of systems where different operating frequencies are present [25,26,27]. In some studies, the issues of multi-rate sensing are discussed in combination with time delays [28] and inter-sample measurements [26]. In [27], a multi-rate set-up is considered in the estimation and control of a vehicle. The multi-rate Kalman filter estimate is only updated with a measurement if a new measurement is available, which in the case of [27] occurs at a fixed multiple of the sampling time. In between the measurement updates, the estimate is solely based on the prediction step of the Kalman filter [27]. The authors of [29] discuss the fusion of radar and camera measurements; the estimates of parallel Kalman filters for the different sensors are combined via Bayesian estimation. The method presented in [29] could also be used to deal with multi-rate issues. In [30], two fusion methods are discussed: measurement fusion and state fusion. In case of a multi-rate sensor set-up, both methods could be implemented. One method of measurement fusion is based on the augmentation of the measurement vector and the observation matrix [30]. The observation matrix relates the measurements to the system state components. When multiple measurements are available, the measurement vector and observation matrix can be increased in size accordingly [30]. One of the state fusion methods, which is also known as track-to-track fusion, requires multiple estimators to run simultaneously, and when multiple estimates are close together, they can be fused into one state estimate [30]. In [31], the set of measurements considered includes (time-stamped) raw radar and lidar data and bounding boxes obtained through a vision system. Therefore, the size of the measurement set is time-varying, resulting in a measurement-fusion-based approach similar to an example discussed in [30]. In [28], a multi-sensor set-up is considered where the Kalman filter is operating at a frequency over 50 times higher than the highest measurement frequency. Similarly to methods discussed in [25,26,30,31], the estimator in [28] only performs a measurement update when a new measurement is available. Another possibility is to introduce a multi-rate estimator as presented in [21]; two decoupled estimators operate at different frequencies, improving the model accuracy and stability.
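
The idea of predicting at every sample and updating only with the measurements that have actually arrived can be sketched as follows. This is a minimal illustration under stated assumptions, not the estimator of any cited work: the constant-velocity model, the sensor names, noise variances, and update periods are all hypothetical. Each arrived scalar measurement is processed sequentially, which for uncorrelated measurement noise is equivalent to stacking the available rows into an augmented measurement vector and observation matrix.

```python
def predict(x, P, T, q):
    """Kalman prediction for state x = [position, velocity] over one step T."""
    x_pred = [x[0] + T * x[1], x[1]]
    # P <- F P F^T + Q, with F = [[1, T], [0, 1]] and Q = diag(0, q)
    P_pred = [
        [P[0][0] + T * (P[0][1] + P[1][0]) + T * T * P[1][1],
         P[0][1] + T * P[1][1]],
        [P[1][0] + T * P[1][1], P[1][1] + q],
    ]
    return x_pred, P_pred


def update(x, P, h, z, r):
    """Scalar measurement update for z = h . x + noise with variance r."""
    innovation = z - (h[0] * x[0] + h[1] * x[1])
    Ph = [P[0][0] * h[0] + P[0][1] * h[1], P[1][0] * h[0] + P[1][1] * h[1]]
    s = h[0] * Ph[0] + h[1] * Ph[1] + r  # innovation variance
    K = [Ph[0] / s, Ph[1] / s]           # Kalman gain
    x_new = [x[0] + K[0] * innovation, x[1] + K[1] * innovation]
    hP = [h[0] * P[0][0] + h[1] * P[1][0], h[0] * P[0][1] + h[1] * P[1][1]]
    P_new = [[P[i][j] - K[i] * hP[j] for j in range(2)] for i in range(2)]
    return x_new, P_new


# Hypothetical sensors: name -> (observation row, noise variance, period in steps)
SENSORS = {
    "radar_position": ([1.0, 0.0], 0.25, 2),
    "v2v_velocity": ([0.0, 1.0], 0.01, 10),
}


def step(x, P, arrived, T=0.01, q=1e-4):
    """One filter step: always predict, then update with whatever arrived."""
    x, P = predict(x, P, T, q)
    for name, z in arrived.items():
        h, r, _ = SENSORS[name]
        x, P = update(x, P, h, z, r)
    return x, P


def run_demo(n_steps=100, T=0.01, true_speed=20.0):
    """Track a target at constant speed using noise-free multi-rate data."""
    x, P = [0.0, 0.0], [[100.0, 0.0], [0.0, 100.0]]
    for k in range(1, n_steps + 1):
        truth = [true_speed * T * k, true_speed]
        # Each sensor delivers a sample only at multiples of its period.
        arrived = {
            name: h[0] * truth[0] + h[1] * truth[1]
            for name, (h, _, period) in SENSORS.items()
            if k % period == 0
        }
        x, P = step(x, P, arrived, T)
    return x, P
```

Calling `step` with an empty dictionary performs a prediction-only step, so the covariance grows until the next measurement arrives. The demo uses noise-free measurements purely to keep the sketch deterministic.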

From the material presented in Section 1 thus far, it can be concluded that the longitudinal and lateral control of platoons is widely investigated. However, in cooperative technologies the inputs are typically assumed to be continuously available and of sufficient quality, which usually is not the case in practical scenarios. Even in papers where the use of V2V communication for state estimation or control is discussed [16,32,33], practical impairments such as limited update rates and high measurement noise are not always included in the analysis.

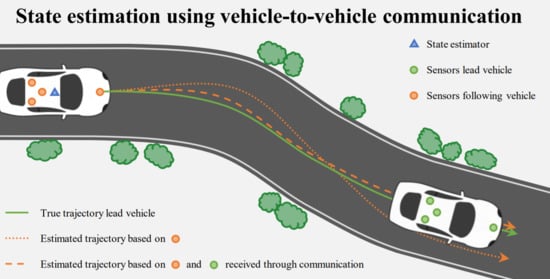

The goal of this study is to present a cooperative state estimation framework for automotive applications. The study is focused on demonstrating the benefits of using V2V communication in the estimation process via simulations. The vehicle dynamics and (virtual) measurements considered in this study are modeled in a simulation environment. Onboard sensing is used in combination with measurements received through V2V communication to estimate the state of a host and target vehicle via a cascaded Kalman filtering approach. The state estimate resulting from the framework presented in this study can be used in a longitudinal and lateral platooning framework such as presented in [12]. The effect of the communication frequency on the estimation performance is analyzed via a root-mean-square error analysis. For this study perfect vehicle following behavior and a constant time gap are considered. The state estimator is located on the host vehicle, and onboard sensors from the host vehicle are used, such as a radar and odometer, in combination with measurements from a target vehicle received via V2V communication. The effect of different operating frequencies for the different sensors is taken into account in the estimator design. The performance of the state estimator is analyzed via simulations and error analyses, where realistic measurement noise and sensor operating frequencies are used. The framework presented in this study can easily be modified for a different sensor set-up.
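
A root-mean-square error of the kind mentioned above can be computed per state component as in the following minimal sketch. The function name and the plain-list inputs are assumptions for illustration and do not reflect the study's own implementation.

```python
import math


def rmse(estimates, truths):
    """Root-mean-square error between two equally long sequences."""
    if len(estimates) != len(truths) or not estimates:
        raise ValueError("sequences must be non-empty and of equal length")
    squared = [(e - t) ** 2 for e, t in zip(estimates, truths)]
    return math.sqrt(sum(squared) / len(squared))
```

Evaluating this quantity for estimator runs at different V2V update rates gives one number per run, which makes the runs directly comparable.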

The remainder of this study is organized as follows. In Section 2, the methodology is discussed, consisting of the sensor set-up, driving scenario, vehicle and measurement model, state estimator design, and the selection of the estimator setting. The simulation results and discussion are presented in Section 3 followed by the conclusions of this study in Section 4.