Autonomous and Safe Navigation of Mobile Robots in Vineyard with Smooth Collision Avoidance

Abstract

1. Introduction

2. Pillar Detection from Lidar Sensor Data

Algorithm 1: Safe Navigation with Feature (Pillar) Detection from Lidar

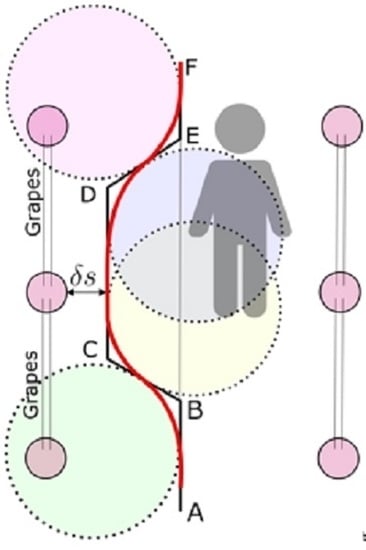

3. Obstacle Avoidance with Path Smoothing

4. Experiment and Results

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Conflicts of Interest


Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Ravankar, A.; Ravankar, A.A.; Rawankar, A.; Hoshino, Y. Autonomous and Safe Navigation of Mobile Robots in Vineyard with Smooth Collision Avoidance. Agriculture 2021, 11, 954. https://doi.org/10.3390/agriculture11100954
