Congenital Unilateral Deafness Affects Cerebral Organization of Reading

Abstract

1. Introduction

2. Results and Discussion

2.1. Behavioral Results

2.1.1. Control Participants

2.1.2. Patient RA

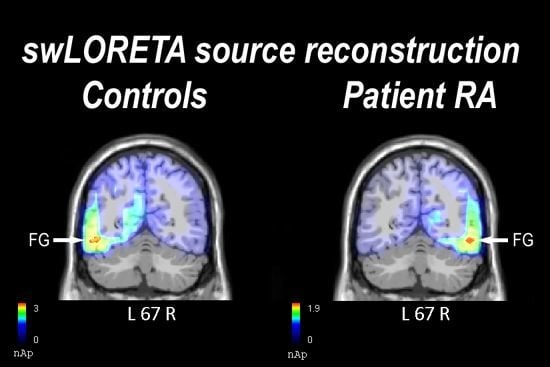

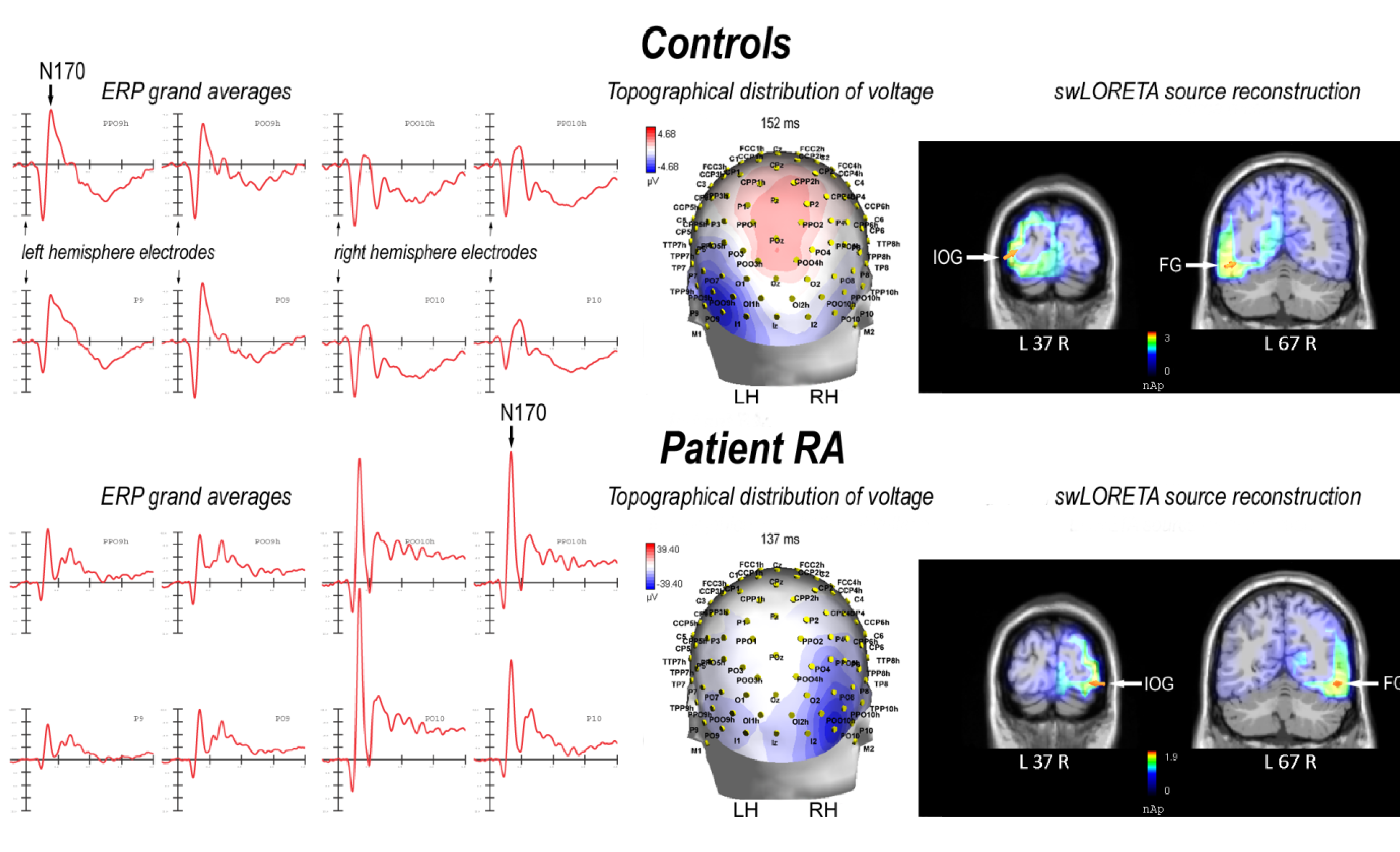

2.2. Electrophysiological Results: Occipito-Temporal N170 Component

2.2.1. Control Participants

| Magnitude (E-10) | T-x [mm] | T-y [mm] | T-z [mm] | Hemisphere | Lobe | Gyrus | BA |
|---|---|---|---|---|---|---|---|
| 28.0 | −38.5 | −87.3 | −4.9 | L | O | Inferior Occipital Gyrus | 18 |
| 27.7 | −48.5 | −66.1 | −10.9 | L | T | Fusiform Gyrus | 19 |
| 11.8 | 21.2 | −16.1 | −22.2 | R | Limbic | Parahippocampal Gyrus | 28 |
| 6.51 | −8.5 | 57.3 | −9 | L | F | Superior Frontal Gyrus | 10 |
| 6.26 | 1.5 | 38.2 | −17.9 | R | F | Medial Frontal Gyrus | 11 |
| 5.12 | 11.3 | 57.3 | −9 | R | F | Superior Frontal Gyrus | 10 |

2.2.2. Patient RA

| Magnitude (E-10) | T-x [mm] | T-y [mm] | T-z [mm] | Hemisphere | Lobe | Gyrus | BA |
|---|---|---|---|---|---|---|---|
| 187 | 40.9 | −86.4 | −12.4 | R | O | Inferior Occipital Gyrus | 18 |
| 180 | 50.8 | −66.1 | −10.9 | R | T | Fusiform Gyrus | 19 |
| 64.6 | −18.5 | −24.5 | −15.5 | L | Limbic | Parahippocampal Gyrus | 35 |
| 64.6 | −28.5 | −15.3 | −29.6 | L | Limbic | Uncus | 20 |
| 35.0 | −8.5 | 57.3 | −9 | L | F | Superior Frontal Gyrus | 10 |
| 28.7 | 1.5 | 38.2 | −17.9 | R | F | Medial Frontal Gyrus | 11 |
| 25.5 | 11.3 | 57.3 | −9 | R | F | Superior Frontal Gyrus | 10 |
| 6.78 | 1.5 | 29.5 | 58.7 | R | F | Superior Frontal Gyrus | 6 |
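
The two source tables in Section 2.2 can be compared with a simple hemispheric summary. The sketch below is only an illustration and is not the authors' N170 laterality index from Section 2.3, whose definition is not given here. It assumes the common convention (R − L) / (R + L) applied to the summed source magnitudes per hemisphere, so negative values indicate a left-hemisphere bias and positive values a right-hemisphere bias. The function name `hemispheric_index` and the data layout are hypothetical; the numbers are copied from the tables.

```python
from typing import Iterable, Tuple

# One source: (magnitude in units of 1e-10, hemisphere "L" or "R").
Source = Tuple[float, str]


def hemispheric_index(sources: Iterable[Source]) -> float:
    """Return (R - L) / (R + L) over summed source magnitudes.

    Assumed convention for illustration only: -1 means all listed
    activity is left-sided, +1 means all of it is right-sided.
    """
    items = list(sources)
    left = sum(mag for mag, hemi in items if hemi == "L")
    right = sum(mag for mag, hemi in items if hemi == "R")
    total = left + right
    if total == 0:
        raise ValueError("no source magnitude to compare")
    return (right - left) / total


# Magnitudes and hemispheres copied from the control participants' table.
controls = [
    (28.0, "L"), (27.7, "L"), (11.8, "R"),
    (6.51, "L"), (6.26, "R"), (5.12, "R"),
]

# Magnitudes and hemispheres copied from the Patient RA table.
patient_ra = [
    (187.0, "R"), (180.0, "R"), (64.6, "L"), (64.6, "L"),
    (35.0, "L"), (28.7, "R"), (25.5, "R"), (6.78, "R"),
]

controls_index = hemispheric_index(controls)
patient_index = hemispheric_index(patient_ra)

print(f"controls:   {controls_index:+.2f}")  # -0.46
print(f"patient RA: {patient_index:+.2f}")   # +0.45
```

Under this assumed convention the listed sources are left-biased in controls and right-biased in Patient RA, which matches the hemispheres of the strongest occipito-temporal sources in each table. Summing all listed sources is a coarse choice; restricting the sum to the occipito-temporal rows would be an equally reasonable variant.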

2.3. N170 Laterality Index

2.4. Discussion

3. Experimental Section

3.1. Participants

3.1.1. Case Report: Patient RA

3.1.2. Control Participants

3.2. Stimuli and Procedure

3.3. EEG Recording and Analysis

4. Conclusions

Acknowledgments

Conflict of Interest


© 2013 by the authors; licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution license (http://creativecommons.org/licenses/by/3.0/).

Adorni, R.; Manfredi, M.; Proverbio, A.M. Congenital Unilateral Deafness Affects Cerebral Organization of Reading. Brain Sci. 2013, 3, 908–922. https://doi.org/10.3390/brainsci3020908