Gene Selection in Cancer Classification Using Sparse Logistic Regression with L1/2 Regularization

Abstract

1. Introduction

- Identification of gene biomarkers helps to classify different types of cancer and improves prediction accuracy.

- Penalized logistic regression is used as a gene selection method for cancer classification to overcome the over-fitting problem that arises with high-dimensional data and small sample sizes.

- Experimental results on three GEO lung cancer datasets support the proposed approach and demonstrate the effectiveness of penalized logistic regression.

2. Methods

2.1. Regularized Logistic Regression
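
For reference, a penalized logistic regression objective with an L1/2 penalty is commonly written as below. This is a sketch under assumed notation, not necessarily the authors' exact formulation: the symbols $n$ (number of samples), $p$ (number of genes), $y_i \in \{0, 1\}$ (class label), $x_i$ (expression vector), $\beta_0$, $\beta$, $\lambda$, and the $1/n$ scaling of the log-likelihood are all assumptions introduced here.

$$
\pi_i = \frac{\exp(\beta_0 + x_i^{\top}\beta)}{1 + \exp(\beta_0 + x_i^{\top}\beta)}, \qquad
(\hat{\beta}_0, \hat{\beta}) = \arg\min_{\beta_0,\,\beta} \left\{ -\frac{1}{n} \sum_{i=1}^{n} \left[ y_i \log \pi_i + (1 - y_i) \log (1 - \pi_i) \right] + \lambda \sum_{j=1}^{p} |\beta_j|^{1/2} \right\}
$$

Replacing $|\beta_j|^{1/2}$ with $|\beta_j|$ gives the Lasso (L1) penalty. In either case, genes whose estimated coefficient $\hat{\beta}_j$ is nonzero are the selected genes.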

2.2. Regularized Logistic Regression

| Algorithm 1: A coordinate descent algorithm for penalized logistic regression. |

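
The following is a minimal sketch of coordinate descent for penalized logistic regression, not a reproduction of Algorithm 1. It assumes the Lasso (L1) penalty with the soft-thresholding operator and the iteratively reweighted least-squares scheme described by Friedman, Hastie, and Tibshirani; an L1/2 variant would swap in a different thresholding operator. The function names, the toy data, and the iteration counts are hypothetical and chosen only for illustration.

```python
import math


def soft_threshold(z, gamma):
    """Soft-thresholding operator: sign(z) * max(|z| - gamma, 0)."""
    if z > gamma:
        return z - gamma
    if z < -gamma:
        return z + gamma
    return 0.0


def sigmoid(t):
    """Numerically stable logistic function."""
    if t >= 0:
        return 1.0 / (1.0 + math.exp(-t))
    e = math.exp(t)
    return e / (1.0 + e)


def fit_l1_logistic(X, y, lam, n_outer=50, n_inner=50):
    """L1-penalized logistic regression (assumed setup, fixed iteration counts).

    X: list of rows (samples x features), y: list of 0/1 labels,
    lam: penalty weight. Returns (intercept, coefficients).
    """
    n, p = len(X), len(X[0])
    b0, beta = 0.0, [0.0] * p
    for _ in range(n_outer):
        # Quadratic approximation of the log-likelihood at the current fit.
        eta = [b0 + sum(X[i][j] * beta[j] for j in range(p)) for i in range(n)]
        prob = [sigmoid(e) for e in eta]
        w = [max(q * (1.0 - q), 1e-5) for q in prob]  # clamp tiny weights
        z = [eta[i] + (y[i] - prob[i]) / w[i] for i in range(n)]
        r = [z[i] - eta[i] for i in range(n)]  # working residuals
        for _ in range(n_inner):
            # Unpenalized intercept: weighted mean of the residuals.
            delta = sum(w[i] * r[i] for i in range(n)) / sum(w)
            b0 += delta
            r = [ri - delta for ri in r]
            # Cycle through coordinates, one penalized update each.
            for j in range(p):
                num = sum(w[i] * X[i][j] * (r[i] + X[i][j] * beta[j])
                          for i in range(n)) / n
                den = sum(w[i] * X[i][j] ** 2 for i in range(n)) / n
                new = soft_threshold(num, lam) / den if den > 0 else 0.0
                diff = new - beta[j]
                if diff != 0.0:
                    r = [r[i] - X[i][j] * diff for i in range(n)]
                    beta[j] = new
    return b0, beta


# Toy data: feature 0 separates the classes, feature 1 is uninformative.
X_toy = [[-2.0, 1.0], [-1.5, -1.0], [-1.0, 1.0], [-0.5, -1.0],
         [0.5, 1.0], [1.0, -1.0], [1.5, 1.0], [2.0, -1.0]]
y_toy = [0, 0, 0, 0, 1, 1, 1, 1]

b0_fit, beta_fit = fit_l1_logistic(X_toy, y_toy, lam=0.1)
selected = [j for j, b in enumerate(beta_fit) if b != 0.0]
print("selected feature indices:", selected)
```

Features with a nonzero coefficient play the role of the selected genes. Raising `lam` shrinks more coefficients to exactly zero, which is the mechanism that makes the penalized model usable for gene selection.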

2.3. Classification Evaluation Criteria
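
The results tables report sensitivity, specificity, and accuracy. The sketch below computes these three measures from their standard confusion-matrix definitions. It assumes that the tumor class is coded as the positive label `1` and the normal class as `0`; that coding, the function name, and the example labels are hypothetical.

```python
def classification_metrics(y_true, y_pred, positive=1):
    """Return sensitivity, specificity, and accuracy for binary labels."""
    tp = fn = tn = fp = 0
    for truth, pred in zip(y_true, y_pred):
        if truth == positive:
            if pred == positive:
                tp += 1  # positive sample correctly detected
            else:
                fn += 1  # positive sample missed
        else:
            if pred == positive:
                fp += 1  # negative sample flagged as positive
            else:
                tn += 1  # negative sample correctly rejected
    nan = float("nan")
    return {
        "sensitivity": tp / (tp + fn) if (tp + fn) else nan,
        "specificity": tn / (tn + fp) if (tn + fp) else nan,
        "accuracy": (tp + tn) / (tp + tn + fp + fn) if y_true else nan,
    }


# Made-up labels for illustration only (1 = tumor, 0 = normal).
y_true_demo = [1, 1, 1, 1, 0, 0, 0, 0, 0, 0]
y_pred_demo = [1, 1, 1, 0, 0, 0, 0, 0, 1, 1]
metrics_demo = classification_metrics(y_true_demo, y_pred_demo)
print(metrics_demo)
```

Sensitivity is the fraction of positive samples that are detected, specificity is the fraction of negative samples that are correctly rejected, and accuracy is the fraction of all samples classified correctly.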

3. Datasets

3.1. GSE10072

3.2. GSE19804

3.3. GSE4115

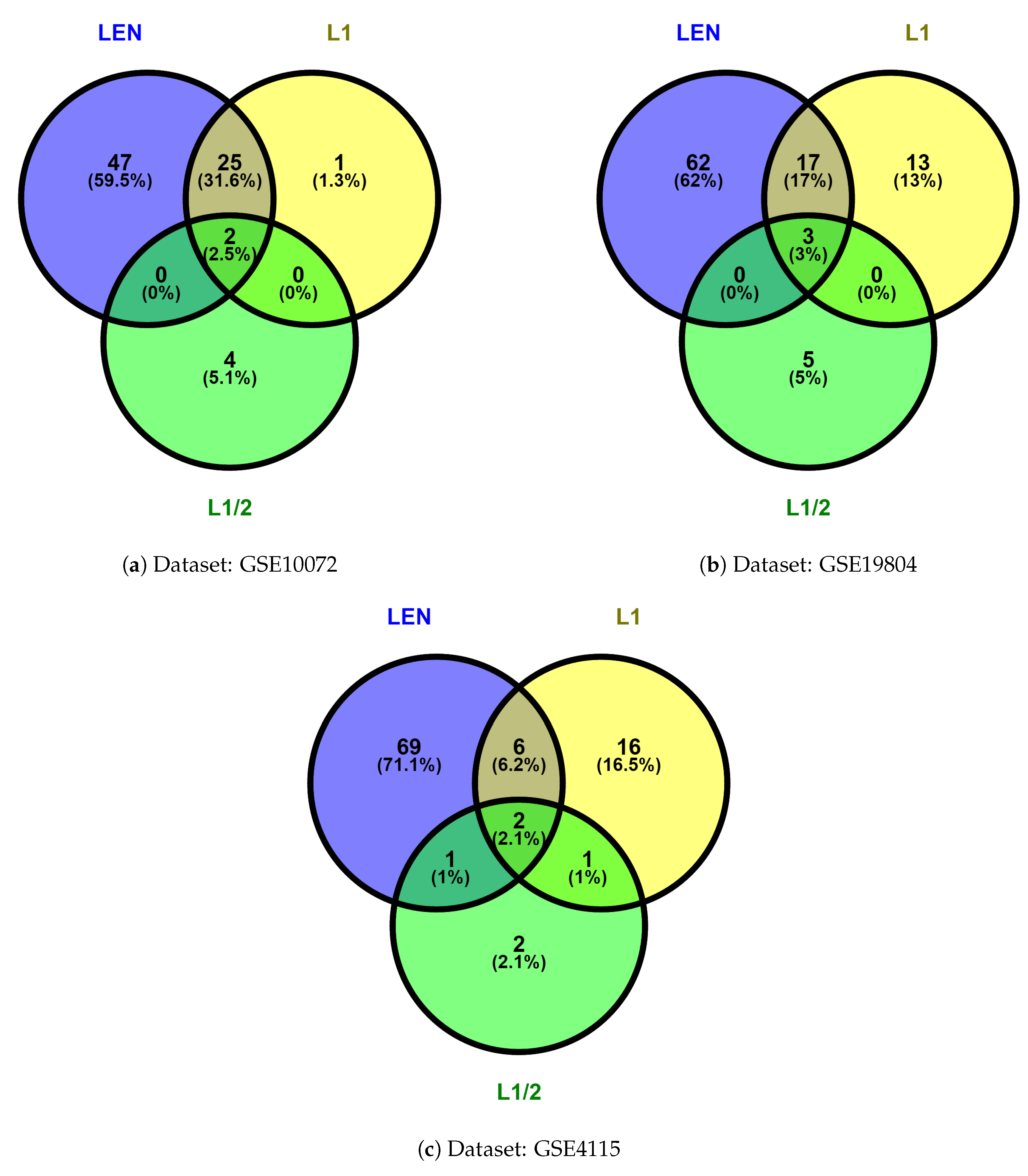

4. Results

5. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest


| Datasets | No. of Samples | No. of Genes | Class |
|---|---|---|---|
| GSE10072 | 107 | 22283 | Normal/Tumor |
| GSE19804 | 120 | 54675 | Normal/Tumor |
| GSE4115 | 187 | 22215 | Normal/Tumor |

| Datasets | No. of Training (Class 1/Class 2) | No. of Testing (Class 1/Class 2) |
|---|---|---|
| GSE10072 | 75 (35 Normal/40 Tumor) | 32 (14 Normal/18 Tumor) |
| GSE19804 | 84 (46 Normal/38 Tumor) | 36 (14 Normal/22 Tumor) |
| GSE4115 | 131 (67 Normal/64 Tumor) | 56 (31 Normal/25 Tumor) |

| Methods | Datasets | Training Set (5-CV) Sensitivity | Training Set (5-CV) Specificity | Training Set (5-CV) Accuracy | Testing Set Sensitivity | Testing Set Specificity | Testing Set Accuracy |
|---|---|---|---|---|---|---|---|
| | GSE10072 | 1.00 | 1.00 | 1.00 | 0.92 | 0.98 | 0.95 |
| | GSE19804 | 1.00 | 0.98 | 0.99 | 0.87 | 0.72 | 0.81 |
| | GSE4115 | 0.83 | 0.97 | 0.91 | 0.77 | 0.74 | 0.73 |
| | Mean | 0.94 | 0.98 | 0.97 | 0.85 | 0.81 | 0.83 |
| | GSE10072 | 0.98 | 1.00 | 0.99 | 0.93 | 0.94 | 0.94 |
| | GSE19804 | 1.00 | 0.98 | 0.99 | 0.90 | 0.68 | 0.81 |
| | GSE4115 | 0.94 | 0.98 | 0.96 | 0.78 | 0.85 | 0.78 |
| | Mean | 0.97 | 0.99 | 0.98 | 0.87 | 0.82 | 0.84 |
| | GSE10072 | 1.00 | 1.00 | 1.00 | 0.94 | 1.00 | 0.97 |
| | GSE19804 | 1.00 | 1.00 | 1.00 | 0.92 | 0.75 | 0.87 |
| | GSE4115 | 0.98 | 0.99 | 0.98 | 0.78 | 0.93 | 0.83 |
| | Mean | 0.99 | 1.00 | 0.99 | 0.88 | 0.89 | 0.89 |

Dataset: GSE10072

| Probe ID | Gene Symbol | Gene Name |
|---|---|---|
| 209074_s_at | FAM107A | family with sequence similarity 107 member A (FAM107A) |
| 200700_s_at | KDELR2 | KDEL endoplasmic reticulum protein retention receptor 2 (KDELR2) |
| 201983_s_at | EGFR | epidermal growth factor receptor (EGFR) |
| 210852_s_at | AASS | aminoadipate-semialdehyde synthase (AASS) |
| 202037_s_at | SFRP1 | secreted frizzled related protein 1 (SFRP1) |
| 203295_s_at | ATP1A2 | ATPase Na+/K+ transporting subunit alpha 2 (ATP1A2) |

Dataset: GSE19804

| Probe ID | Gene Symbol | Gene Name |
|---|---|---|
| 1555636_at | CD300LG | CD300 molecule like family member g (CD300LG) |
| 206938_at | SRD5A2 | steroid 5 alpha-reductase 2 (SRD5A2) |
| 44654_at | G6PC3 | glucose-6-phosphatase catalytic subunit 3 (G6PC3) |
| 45297_at | EHD2 | EH domain containing 2 (EHD2) |
| 1552696_at | NIPA1 | non-imprinted in Prader–Willi/Angelman syndrome 1 (NIPA1) |
| 45687_at | PRR14 | proline-rich 14 (PRR14) |
| 203373_at | SOCS2 | suppressor of cytokine signaling 2 (SOCS2) |
| 210984_x_at | EGFR | epidermal growth factor receptor (EGFR) |

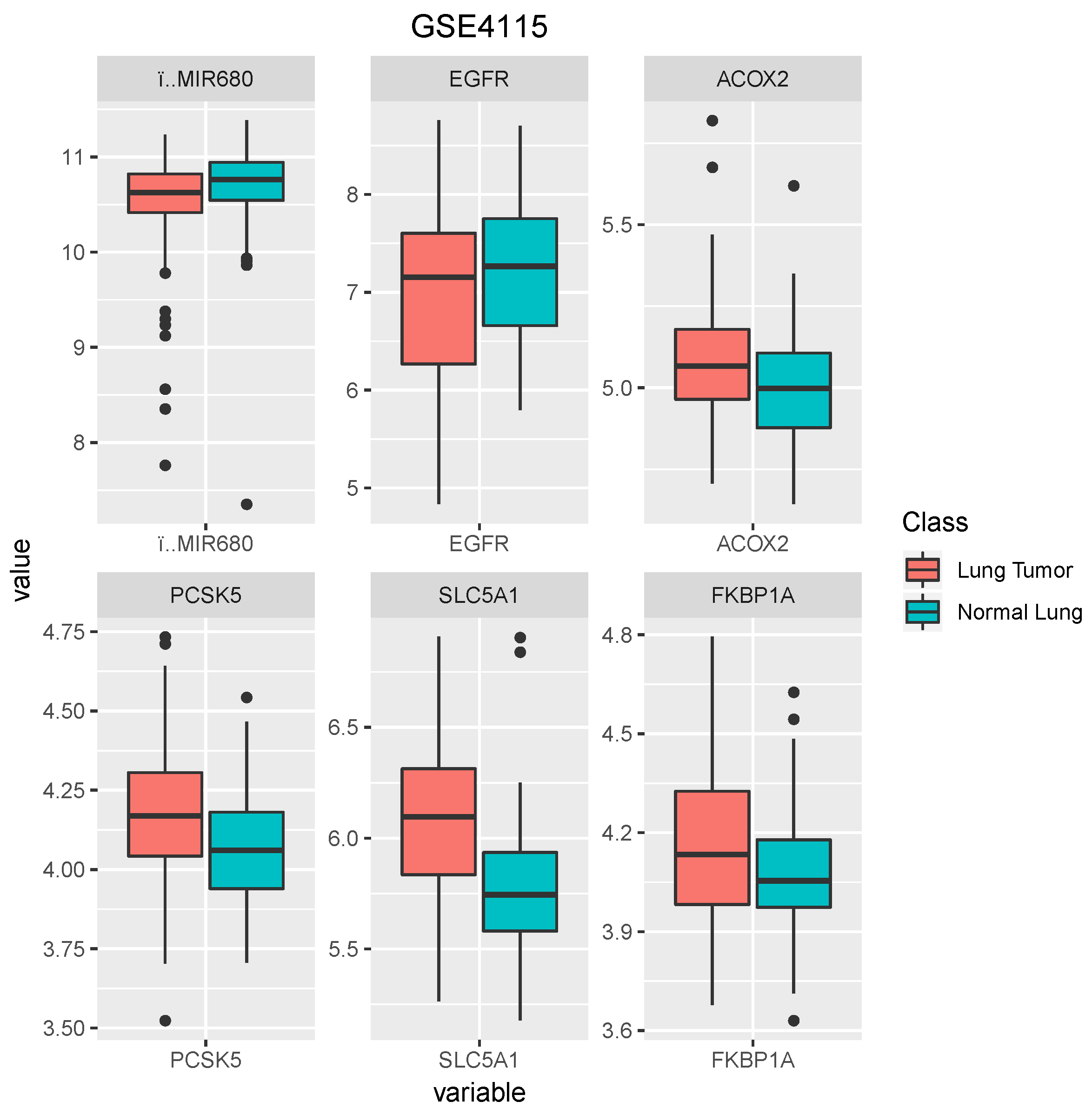

Dataset: GSE4115

| Probe ID | Gene Symbol | Gene Name |
|---|---|---|
| 205560_at | PCSK5 | pro-protein convertase subtilisin/kexin type 5 (PCSK5) |
| 200003_s_at | MIR6805 | microRNA 6805 (MIR6805) |
| 201983_s_at | EGFR | epidermal growth factor receptor (EGFR) |
| 210187_at | FKBP1A | FK506 binding protein 1A (FKBP1A) |
| 205364_at | ACOX2 | acyl-CoA oxidase 2 (ACOX2) |
| 206628_at | SLC5A1 | solute carrier family 5 member 1 (SLC5A1) |

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Wu, S.; Jiang, H.; Shen, H.; Yang, Z. Gene Selection in Cancer Classification Using Sparse Logistic Regression with L1/2 Regularization. Appl. Sci. 2018, 8, 1569. https://doi.org/10.3390/app8091569
