1. Introduction

In recent years, advances in network construction and computer hardware have led to a thriving Internet of Things (IoT) industry. The IoT system has allowed various types of sensors (temperature, pressure, current, image) to be installed in electronic devices, which enable terminal electronics to collect environmental information. Image sensors can provide textures and colors, as well as some feature information that other sensors cannot provide such as human facial features, vehicle colors, and product appearance. Thus, image sensors are widely used in IoT devices such as the access control systems that automatically identify visitors through image sensors; smart parking lots that use image sensors to identify the license plates of vehicles; and integrated circuit (IC) packaging and testing plants that use image sensors to detect IC defects. Moreover, image sensors can acquire the appearance characteristics of objects in a non-contact manner without any direct impact on the objects. Therefore, the industrial demand for research on computer vision technology is on the rise.

The main goal of image-based smart identification systems is to automatically detect and track objects of interest in video frames, and then obtain the information needed by users. Using reliable moving object detection technology, image-based smart systems can effectively distinguish the foreground and background of a scene. The foreground refers to objects that move after the image-based intelligent systems are initialized; objects that do not belong to the foreground are referred to as the background. By tracking and identifying objects in the foreground, the systems can recognize the behavioral patterns of moving objects. By analyzing background images, the systems can detect environmental changes in the monitoring field. In addition, existing image-based smart systems use increasingly higher image resolutions to retain more scene details, leading to increased hardware requirements for real-time identification and analysis of images. Therefore, the use of moving object detection technology that allows intelligent systems to identify the foreground or background can significantly reduce the amount of data that must be identified and analyzed by these systems.

Given the importance of moving object detection technology in image-based smart systems, many scholars have proposed various methods to detect moving objects. Moving object detection methods can be roughly divided into three types: temporal differencing, optical flow, and background subtraction, each of which is elaborated below.

The first method we describe is temporal differencing, which uses the pixel differences of successive images to detect moving objects. For example, Lipton et al. [1] conducted color-intensity value subtraction between two successive images in a pixel-by-pixel manner and compared the absolute value of the intensity difference with a predefined intensity-difference threshold. If the absolute value of the color intensity difference of a pixel is greater than the threshold, then the pixel is regarded as a foreground point; otherwise, it is a background point. This method is quick and simple. However, because the various parts of a moving object with a single color tone have similar colors, the color difference between successive images may be too small to detect. When an object moves slowly or stops temporarily, its position in successive images does not change significantly; therefore, the object is likely to be misjudged as background, causing incomplete detection or even a complete failure to detect the moving object. Sindhia et al. [2] used multiple successive images for motion detection, wherein they calculated the color difference between the beginning images, end images, and continuous images within a pre-set time interval to highlight the changes in color. However, determining the length of the time interval is a problem that remains unaddressed. Given that the computational complexity of temporal differencing is not high in most cases, the hardware cost is low. However, the detection of moving objects with this method is not accurate, and the method is therefore unsuitable for a high-accuracy smart system.
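The pixel-wise thresholding used in temporal differencing can be sketched as follows. This is a minimal illustration on grayscale frames, not the implementation from [1]; the function name `temporal_difference_mask` and the threshold value of 25 are assumptions chosen for the example.

```python
import numpy as np

def temporal_difference_mask(prev_frame, curr_frame, threshold=25):
    """Return a boolean foreground mask from two successive grayscale frames.

    The threshold value is an illustrative assumption, not a value from the paper.
    """
    # Cast to a signed type so the subtraction cannot wrap around in uint8.
    diff = np.abs(curr_frame.astype(np.int16) - prev_frame.astype(np.int16))
    # A pixel is foreground when its absolute intensity change exceeds the threshold.
    return diff > threshold

# Toy data: one pixel changes between the first and second frames.
prev_frame = np.zeros((4, 4), dtype=np.uint8)
curr_frame = prev_frame.copy()
curr_frame[1, 2] = 200
mask = temporal_difference_mask(prev_frame, curr_frame)

# If the object then stops, two identical frames yield an empty mask.
stopped_mask = temporal_difference_mask(curr_frame, curr_frame)
```

The second call illustrates the weakness noted above: once the object stops moving, successive frames are identical at its position and the object disappears from the mask.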

Next, let us briefly review the optical flow method. The concept of optical flow was first proposed by Gibson [3] in 1950. In this method, the spatial and temporal changes of pixels in successive images are employed to calculate the motion information of objects. As the optical flow method provides object movement information in monitored images, optical flow information can be used to detect moving objects. For example, Zinbi et al. [4] identified moving objects in images by analyzing the changes of the optical flow field in a scene. Because the detected moving objects were incomplete, they further used morphological operations to fill in the holes in the objects. When the optical flow method is used to detect moving objects, incorrect optical flow information is likely to be obtained for objects with indistinct textural features, owing to correspondence matching errors. In addition, a high frame rate is required to obtain accurate optical flow information, which, together with the complexity of optical-flow calculation methods, results in high hardware costs; therefore, this method is used less frequently in commercial products.

Finally, we introduce the background subtraction method, which creates a reference background image and then detects moving objects using the difference between the background image and the input image. Given that the background subtraction method uses the background to detect moving objects, a moving object can still be detected with a good foreground detection effect even if it temporarily stops moving, which allows this method to be applied in various monitoring systems. The most important step in this method is to obtain a background image. The most intuitive way to do so is to find an image free of any moving object and use that image as the background image. However, in practical applications, moving objects are often present in the monitored scene, and scenarios in which the scene is entirely free of moving objects are rare or non-existent. Therefore, a way to correctly extract the background image in the presence of moving objects is a key technology for this type of method. In Section 2, we further discuss the relevant background subtraction techniques.
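The basic background subtraction step described above can be sketched as follows, assuming a reference background image is already available. The function name `background_subtraction_mask`, the use of the Euclidean RGB distance, and the threshold of 30 are assumptions made for illustration only.

```python
import numpy as np

def background_subtraction_mask(background, frame, threshold=30.0):
    """Return a boolean foreground mask for an RGB frame.

    A pixel is foreground when its Euclidean RGB distance to the reference
    background exceeds the threshold (an illustrative value).
    """
    diff = frame.astype(np.float64) - background.astype(np.float64)
    distance = np.sqrt((diff ** 2).sum(axis=-1))
    return distance > threshold

# Toy data: a uniform gray background and an object occupying one pixel.
background = np.full((4, 4, 3), 100, dtype=np.uint8)
frame_a = background.copy()
frame_a[2, 1] = (220, 40, 40)
frame_b = frame_a.copy()  # the object has stopped moving

mask_a = background_subtraction_mask(background, frame_a)
mask_b = background_subtraction_mask(background, frame_b)
```

Because each frame is compared with the reference background rather than with the previous frame, the stopped object in `frame_b` remains in the foreground mask.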

Although the concept of background subtraction is simple, the background on the screen in a real scenario is not always the same. It is also subject to many influencing factors such as moving objects and shaking leaves. Therefore, a way to quickly obtain the correct background model in the initial process of the system is a critical and challenging task for the background subtraction method. The following is a list of common challenges encountered in the process of capturing an initial background.

Color disturbance: In low-cost surveillance systems, poor quality cameras and low frame rates are often used to capture images, causing considerable disturbance to the same color. This not only increases the difficulty of background color clustering, but also makes it easier to misjudge the background as a foreground because the color change of the background is too large.

Dynamic background: Ideally, there should only be one color at the same location in the background. However, in the real environment, some backgrounds are dynamic (e.g., shaking trees, flying flags). Such dynamic backgrounds usually have more than two background colors with scattered color distribution. If only one color is used to build the background, this kind of dynamic background may be misjudged as the foreground. Therefore, determining the number and colors of the backgrounds required in a dynamic background is a difficult problem to solve.

Camera shake: Cameras are often installed in places like roadside poles, bridges, and the outside of buildings, where they are less likely to affect the field being monitored. However, these places are usually subjected to strong winds and the force of passing vehicles, which may cause cameras to shake. This leads to the shaking of an otherwise fixed background image, causing the background pixels with large changes in the surrounding colors to have more than two kinds of color changes, which eventually increases the difficulty of establishing an initial background.

Prolonged background masking: When the system initially creates a background, the ideal situation is that there are no moving objects, which enables the system to directly grasp the color distribution of the background. However, many moving objects are usually present in the monitoring field, such as objects that frequently pass by or slow-moving objects, all of which obscure the background for a long period of time. This can cause the system to converge the moving objects into the background, leading to erroneous detection results.

A thorough literature search has indicated that none of the recently proposed methods can address the aforementioned issues with respect to capturing initial backgrounds [5,6,7]. Therefore, this paper proposes an initial background extraction algorithm based on color category entropy. The algorithm dynamically creates color categories through a color classifier to distinguish moving objects similar to the background during the initial background extraction process. This paper also proposes the concept of joint categories, in which the colors are re-clustered based on the relevance of the color categories in the color space. The joint categories are allowed to change dynamically with the color distribution, resulting in a more efficient establishment of color categories that match the real background distribution. In addition, the number of background categories required is automatically determined via color category entropy, making it possible to establish background categories to detect moving objects even in the presence of dynamic backgrounds or camera shake. When the background is masked by moving objects for a long time during the initial background extraction process, the background convergence time is dynamically determined via color category entropy to obtain reliable background information.

2. Related Work

Given that obtaining good object detection results from the background subtraction method depends on reliable background building techniques, many scholars have proposed various methods to create background models. For example, Vo et al. [8] proposed an approach that estimates the background intensity variation of an input image and subtracts the estimate from the input to achieve a flat-background image, which is then processed by a global thresholding approach to get the final binary outcome. However, this method estimates the background from a single image through iterative operations and has a high computational cost, so it is not suitable for real-time systems. Zhiwei et al. [9], based on the assumption that background colors change very slowly over a short period of time, conducted sampling for a specific time period and took the average of the color values of each point in this period as the background to complete background-image establishment. This method is quite simple and fast, but it does not take into account the interference from moving objects, which makes it easy to generate a wrong background. Cheng et al. [10] used the color change of continuous images to determine the background-image convergence time, and proposed a two-layered image pyramid structure. Based on the image pyramid concept, they calculated the stable sum of absolute differences (SAD) value for low-dimensional images, and adjusted the weight of the color difference between the foreground and background images according to this value to reduce interference from moving objects or noise. When the color change of an image tended to be stable, the image was converged into the background. However, this method cannot create a background when the background is unstable.

Statistical modeling has also been used to detect moving objects in images. This approach approximates the probability density functions of real images by using specific probability density functions in the feature space, and estimates the occurrence probabilities of subsequent images; images with a high occurrence probability are regarded as backgrounds. For example, Wren et al. [11] used a single Gaussian background model and images from a certain time period to train the parameters of the probability density function of each pixel. After the parameter initialization of the probability density functions was completed, if the probability of a newly entered pixel was higher than the threshold, the pixel was regarded as part of the background; otherwise, it was regarded as part of the foreground. Although the method using a single probability model has the advantage of low computational complexity, it tends to produce poor foreground segmentation results in cases of dynamic backgrounds or camera shake. Therefore, Friedman et al. [12] used multiple Gaussian distributions (generally three to five) to establish a Gaussian mixture model (GMM) for each pixel in the image. After collecting observation data for a period of time, an expectation-maximization algorithm was adopted to determine the initial parameters of each Gaussian distribution to complete the establishment of a multiparameter initial background. In order to improve GMM adaptability for dynamic backgrounds, Stauffer et al. [13,14] proposed a high-efficiency parameter update method for GMM. Given that GMM uses multiple Gaussian distributions to estimate the backgrounds, and thereby has a good anti-interference ability, it has become a popular method for background subtraction. However, in the presence of prolonged background masking, such as the presence of slow-moving objects or many moving objects, the GMM is affected by the moving objects, which leads to the establishment of an incorrect background model. Moreover, given that the GMM has many parameters, selecting the initial parameters is a challenge. In order to address these parameter-setting challenges for the GMM, Elgammal et al. [15] used kernel density estimation (KDE), that is, the superposition of multiple kernel density functions to approximate the probability density function of each pixel. However, the detection results were significantly affected by the quantization bandwidth, and selecting the quantization bandwidth is another problem.

Clustering has also been used to establish backgrounds. For example, Li et al. [16] used a fixed number of categories to cluster input images over a period of time and treated the clustered categories as background categories. If the difference between a subsequent input image and the background categories was too large, the input was treated as the foreground. However, the number of background categories in a real environment is not fixed; therefore, Kim et al. [17,18] applied the codebook concept to pixel units and proposed six-tuple features as codewords, whose number increased or decreased according to the dynamic change of the scene. When the input image matched the codebook at the corresponding position, the input image was regarded as the background; otherwise, it was regarded as the foreground. This method has good adaptability to dynamic backgrounds or camera shake but requires excessive memory space. In addition, moving objects that are present during the process of initial-background establishment tend to interfere with the process, which results in wrong codewords. Chiu et al. [19] used a color classifier to create color categories and proposed the concept of category probability. They selected the representative category as the background according to the magnitudes of category probabilities, and updated background category information in an evolutionary manner, which greatly reduced the memory space needed. However, this method was still unable to obtain the correct background when the background was masked for a long time.
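The single-Gaussian pixel model discussed in this section can be illustrated with a short sketch. It is a simplified grayscale version written for this review, not the implementation of [11]: the names `fit_single_gaussian` and `classify_background`, the decision rule based on a multiple `k` of the standard deviation, and the floor `min_std` are all assumptions.

```python
import numpy as np

def fit_single_gaussian(training_frames):
    """Estimate a per-pixel mean and standard deviation from training frames."""
    stack = np.asarray(training_frames, dtype=np.float64)
    return stack.mean(axis=0), stack.std(axis=0)

def classify_background(frame, mean, std, k=2.5, min_std=1.0):
    """Mark a pixel as background when it lies within k standard deviations.

    k and min_std are illustrative assumptions; min_std avoids a zero-width
    acceptance band for pixels that never changed during training.
    """
    sigma = np.maximum(std, min_std)
    return np.abs(frame.astype(np.float64) - mean) <= k * sigma

# Toy training data: every pixel alternates between 98 and 102.
training = [np.full((2, 2), 98.0), np.full((2, 2), 102.0)] * 10
mean, std = fit_single_gaussian(training)

frame = np.array([[101, 180], [99, 100]], dtype=np.uint8)
is_background = classify_background(frame, mean, std)
```

With a single mean per pixel, a location that alternates between two distinct colors (for example, a shaking tree) would get a wide variance or frequent false foreground labels, which is the limitation that motivates the mixture and clustering models above.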

Some methods take into account the neighboring information of sampling points in order to improve background identification. For instance, Maddalena et al. [20] employed a self-organizing structure to incorporate neighboring pixels into the updates of background categories. Barnich et al. [21] proposed the ViBe algorithm, in which pixels in the adjacent area of each pixel were randomly selected to achieve initial-background modeling. The color information of the neighboring points of pixels that were determined to be part of the background was randomly included in background model updates. Huynh-The et al. [22] proposed a background extraction algorithm called the neighbor-based intensity correction (NIC) method. NIC considers the first frame as an initial background and detects background pixels by comparing the standard deviation values calculated from two pixel windows. To overcome the limitation that NIC adopts a fixed square mask for various background models, Huynh-The et al. [23] proposed a method that includes an automated-directional masking (ADM) algorithm for adaptive background modeling and a historical intensity pattern reference (HIPaR) algorithm for foreground segmentation. The main idea of that work is to automatically select an appropriate binary mask based on the directional features of boundary edges, which leads to a significant accuracy enhancement for foreground detection. Meanwhile, some scholars employed machine learning methods to train a background model with a large number of real background images; when the input image matched the background model, it was considered to be the background. For example, Han et al. [24] integrated 11 features, such as the RGB color model and gradient as well as Haar-like features, in a kernel density framework, and used support vector machines (SVM) to distinguish between the foreground and background. Junejo et al. [25] proposed a novel method to perform foreground extraction for freely moving RGBD (depth) cameras. This method detected feature points using the efficient features from accelerated segment test (FAST) detector and represented them using histogram of oriented gradients (HoG) descriptors to learn the foreground object. Then, the method used the trained SVM to classify which of the feature points obtained from the scene belong to a foreground object. However, methods using machine learning frameworks require a large amount of well-classified data for training, which makes them difficult to apply in a real environment.

The key to background subtraction is getting the background image quickly and correctly. However, it is usually impossible to obtain a good initial background in a complex environment such as a dynamic background or a background with prolonged masking in the aforementioned relevant research. Given this context, this study proposes an entropy-based initial background extraction (EBBE) algorithm for the detection of initial backgrounds, as explained below. First, through a color distance classifier, each pixel is clustered and the number of required color categories is automatically increased according to the color change of the scene. Second, the concept of color category entropy is proposed. According to this concept, we can establish a suitable background category by estimating the number of representative color categories at each image position in the dynamic background or camera shake that shows a change of more than two background colors. Third, when the background is masked for a long time, the initial-background convergence time can be dynamically determined via the magnitudes of color category entropy to prevent erroneous detection results due to moving objects being mistaken for a background.

3. Materials and Methods

The sequence of images captured by a fixed camera contains both moving objects and background. Since most of the background does not move, the background color at the same position should be fixed. In practice, however, background colors are often obscured by moving objects that pass by, and therefore background extraction techniques are needed to obtain the background. As shown by previous research, background extraction techniques are based on the concept that the color with the highest occurrence frequency within an observation time window should be considered as the background. The main disadvantage of such methods is that in the presence of background masking, the background observation time is not dynamically changed. Therefore, when the observation time is too short and a moving object obscures the background for a long time, the moving object will likely be mistaken for the background, causing misjudgment. On the other hand, if the observation time is set too long, the system initialization time will also be too long and the computation cost will increase. In addition, the background at a given position sometimes consists of more than one color, as with shaking trees, a waving flag, and other dynamic backgrounds, or with background shifts caused by camera shake. In order to make background extraction methods adapt to the dynamic background environment, some researchers have used multiple background models to identify real backgrounds [12,13,14]. However, determining the number of background models is also a problem. To address this problem, some researchers used the color change of input images and regarded all the colors with an occurrence frequency higher than a preset threshold as the background [17,18,21]. However, this type of method tends to generate incorrect detection results because the established background colors contain the colors of moving objects that pass by.

In order to solve these problems encountered in the initial background extraction process, this paper proposes an EBBE method, which, based on the analysis of the change in color category entropy, can automatically determine the background convergence time required for each pixel. Moreover, the method uses color category entropy to determine the number of background colors needed. It can therefore obtain the correct background colors by extending the convergence time when moving objects frequently pass by, and it can automatically pick out representative color categories to detect moving objects.

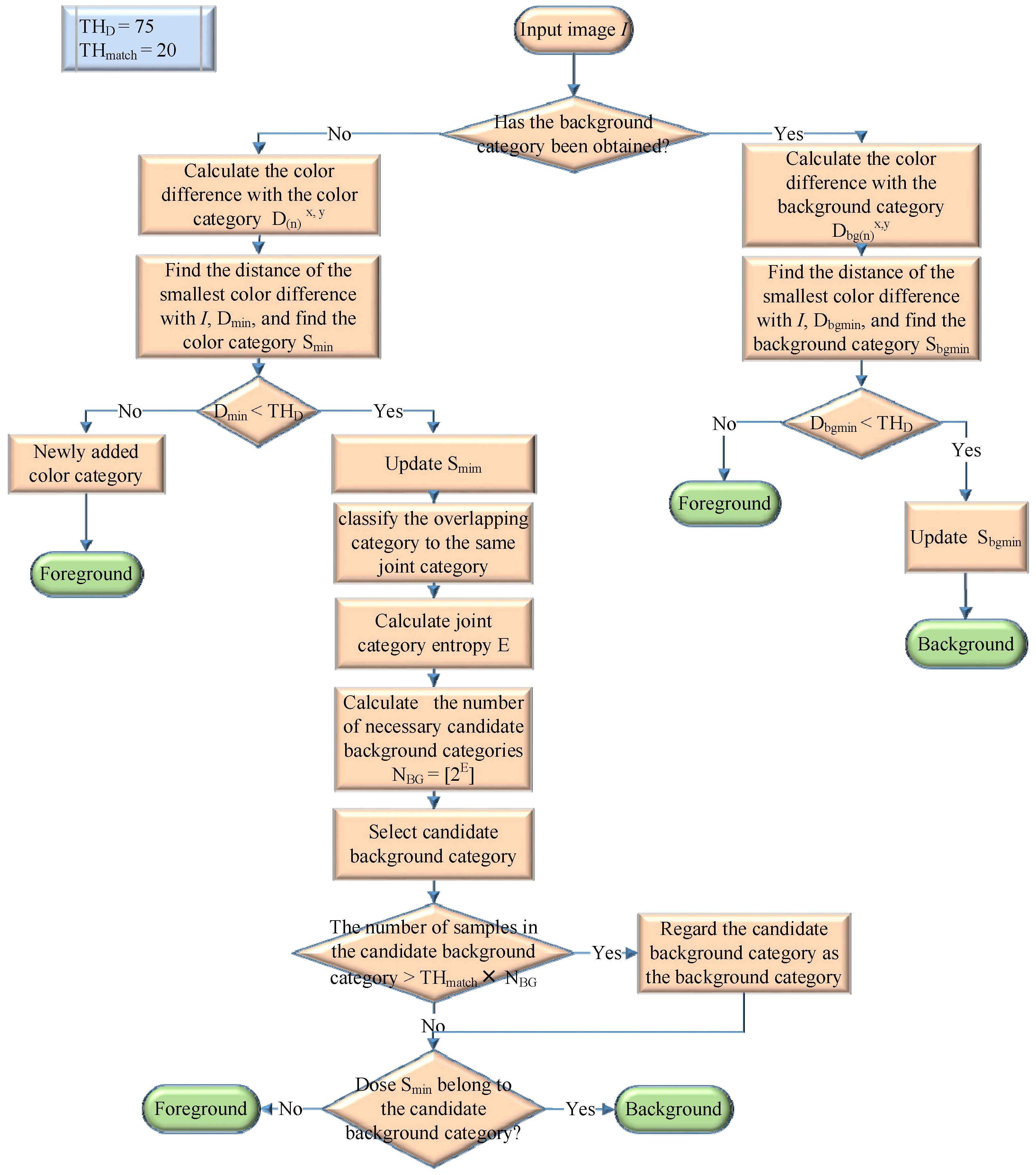

Figure 1 is a flowchart of the proposed method, with the implementation steps elaborated as follows.

We first obtained the input image $I$ by a camera and defined $I^{R}_{x,y}$, $I^{G}_{x,y}$, and $I^{B}_{x,y}$ as the red, green, and blue color values, respectively, of $I$ at the $(x, y)$ coordinate. In the beginning, when the background category was not yet acquired at position $(x, y)$, we used a color classifier to create the color category $S$ as shown in Equation (1), where $N^{n}_{x,y}$ represents the total number of samples in the $n$th color category located at the $(x, y)$ coordinate, while $R^{n}_{x,y}$, $G^{n}_{x,y}$, and $B^{n}_{x,y}$ represent the red, green, and blue color values in the $n$th color category at the $(x, y)$ coordinate, respectively. $C_{x,y}$ is the total number of color categories at the $(x, y)$ coordinate.

When starting to process the first input image, we created the initial color category $S^{1}_{x,y}$ at its corresponding position $(x, y)$, namely $N^{1}_{x,y} = 1$, $R^{1}_{x,y} = I^{R}_{x,y}$, $G^{1}_{x,y} = I^{G}_{x,y}$, and $B^{1}_{x,y} = I^{B}_{x,y}$. The color information of each pixel in the second and later images was clustered with a color distance classifier as explained below. First, we used Equation (2) to calculate the Euclidean distance $D^{n}_{x,y}$ between the input pixel and all the color categories at its position, where $\Delta R^{n}_{x,y}$, $\Delta G^{n}_{x,y}$, and $\Delta B^{n}_{x,y}$ represent the color difference between the input pixel and the red, green, and blue color values in the $n$th color category at the location, respectively.

Second, we determined the color category $S^{\min}_{x,y}$, which has the smallest color difference from the input pixel, and recorded its index $n_{\min}$ and distance $D^{\min}_{x,y}$ as shown by Equations (3)–(5). Third, we used a color classifier to define a color difference threshold value $TH_{D}$ to be compared with $D^{\min}_{x,y}$. If $D^{\min}_{x,y} > TH_{D}$, it means that the input pixel does not belong to any existing color category. In this case, we added a new color category with the color information of the pixel and determined its position as a foreground. If $D^{\min}_{x,y} \le TH_{D}$, it means that the input pixel belongs to the $n_{\min}$-th color category, and we increased the number of samples in this color category according to $N^{n_{\min}}_{x,y} = N^{n_{\min}}_{x,y} + 1$. In the meantime, we used Equation (6) to update the color information of this category in a progressive manner. Compared with the method of directly calculating the average value of all samples in the color categories, our method improves the computation speed and saves storage space.
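The color distance classifier described above can be sketched for a single pixel position as follows. This is a minimal illustration under stated assumptions rather than the authors' implementation: the names `ColorCategory` and `classify_pixel` are hypothetical, the threshold of 20 is arbitrary, and the progressive update is assumed to be an incremental running mean, which reproduces the average of all samples without storing them.

```python
import math

class ColorCategory:
    """One color category at a pixel position: a mean RGB color and a sample count."""
    def __init__(self, r, g, b):
        self.r, self.g, self.b = float(r), float(g), float(b)
        self.count = 1

def classify_pixel(categories, pixel, threshold):
    """Assign an RGB pixel to the nearest category or create a new one.

    Returns (category_index, is_new_category).
    """
    if not categories:
        # First input image: create the initial color category.
        categories.append(ColorCategory(*pixel))
        return 0, True
    # Euclidean distance between the pixel and every existing category.
    distances = [math.dist((c.r, c.g, c.b), pixel) for c in categories]
    best = min(range(len(categories)), key=distances.__getitem__)
    if distances[best] > threshold:
        # No existing category is close enough: add a new one.
        categories.append(ColorCategory(*pixel))
        return len(categories) - 1, True
    cat = categories[best]
    cat.count += 1
    # Assumed progressive update: incremental running mean of the samples.
    cat.r += (pixel[0] - cat.r) / cat.count
    cat.g += (pixel[1] - cat.g) / cat.count
    cat.b += (pixel[2] - cat.b) / cat.count
    return best, False

# Toy sequence of colors observed at one position.
categories = []
results = [classify_pixel(categories, p, threshold=20.0)
           for p in [(100, 100, 100), (104, 100, 100), (200, 50, 50)]]
```

In this toy sequence, the second color is absorbed by the first category and shifts its mean, while the third color is too far away and opens a second category.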

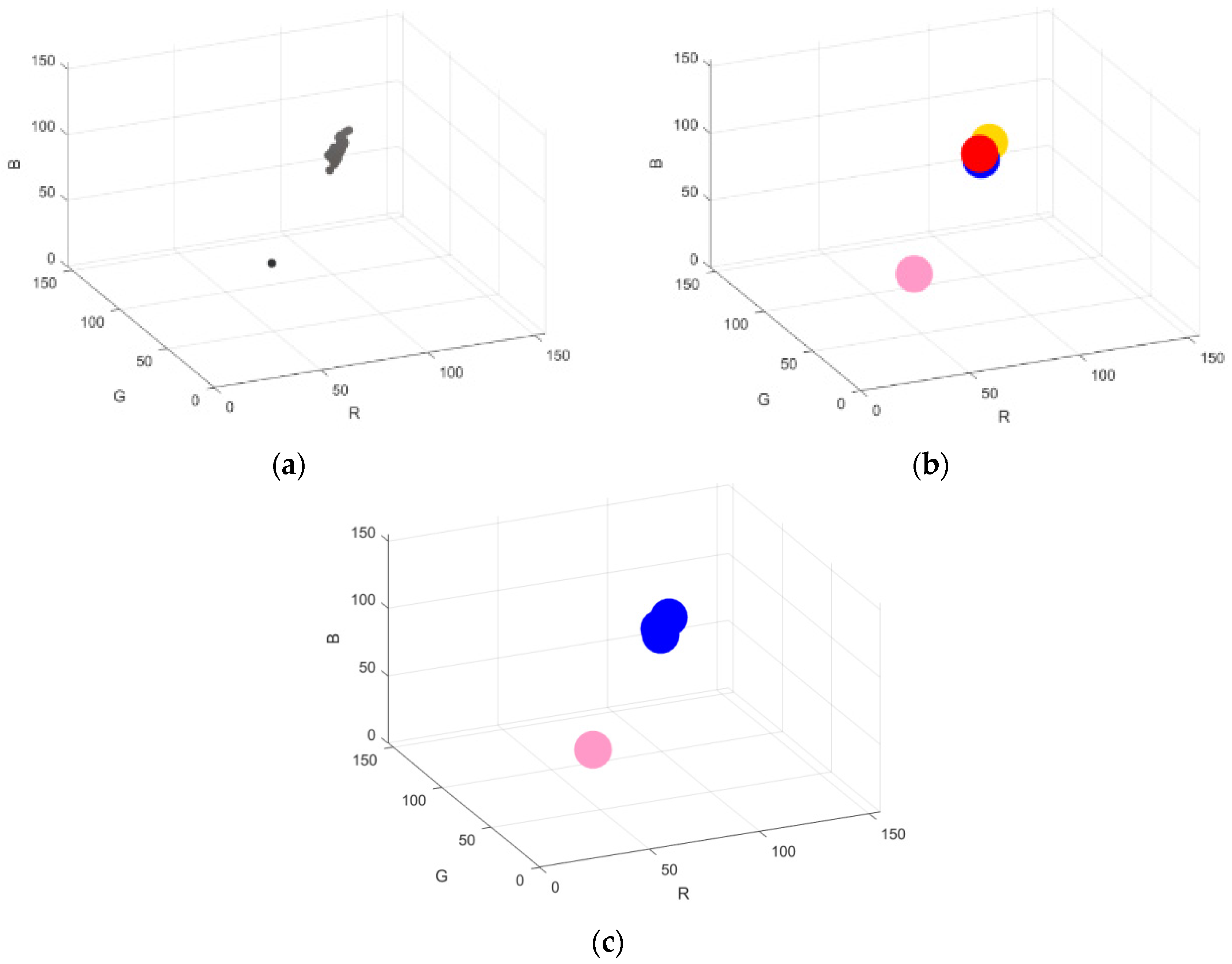

Given that the background color does not change in a short time, when

, this position

is represented as a color class

similar to the input pixel

. If the probability of the occurrence of

is higher, it is more likely that input pixel

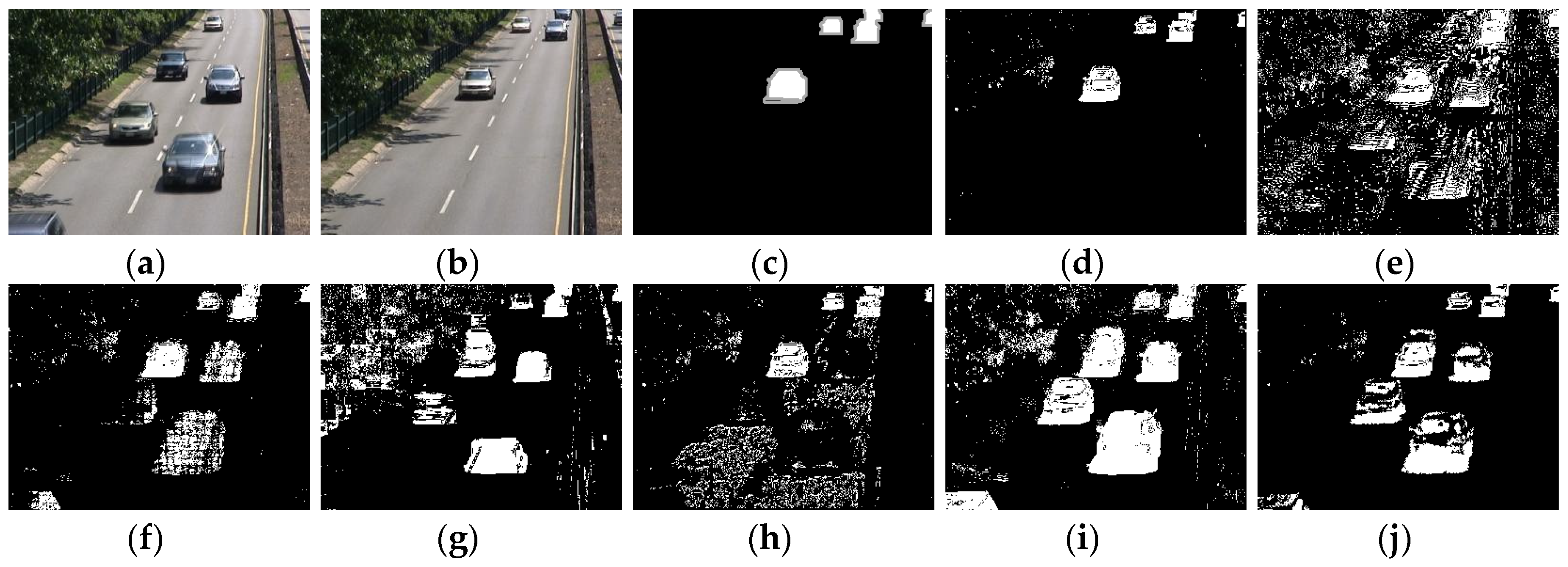

is the background. However, the background will be subject to varying degrees of disturbance depending on image quality in a real environment, as shown in

Figure 2a. If the degree of color disturbance is greater than the color difference threshold

, then the background colors cannot be classified in the same category. As shown by the color classification results in

Figure 2b, the color distribution at the upper right cannot fall into a single-color category. In addition, the color category established by the color distance classifier has a spherical color-distribution range with a radius of

, while the distribution of background colors is irregular depending on the camera quality, as shown in

Figure 2a. The increase of

alone will cause large color errors in the background category. Therefore, a joint category approach is proposed to adapt to the irregular color distribution of a real background. The method for color-category information updates used in this study, as shown by Equation (6), will cause the position of the established color category in the RGB color space to change with the change of the input image, thereby causing some color categories to overlap in the color distribution ranges. In this study, the color categories with overlapping color-distribution ranges were considered to belong to the same joint category as shown in Equation (7), where

represents the

mth joint category. Since the color distribution range of a joint category will be arbitrarily extended depending on the color category, it represents the irregular color distribution of a real background, as shown in

Figure 2c.
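Grouping color categories with overlapping distribution ranges into joint categories can be sketched with a union-find structure. This is an illustration only: the function name `build_joint_categories` is hypothetical, and the overlap test (two spherical categories overlap when the distance between their centers is at most twice the radius) is an assumption, since the exact criterion is defined by Equation (7).

```python
import math

def build_joint_categories(centers, radius):
    """Group color categories whose spherical ranges overlap.

    centers: list of (R, G, B) category centers.
    radius: radius of each spherical range (the color difference threshold).
    Returns a sorted list of joint categories, each a list of category indices.
    """
    parent = list(range(len(centers)))

    def find(i):
        # Find the group representative, shortening the path along the way.
        while parent[i] != i:
            parent[i] = parent[parent[i]]
            i = parent[i]
        return i

    for i in range(len(centers)):
        for j in range(i + 1, len(centers)):
            # Assumed overlap criterion: center distance at most two radii.
            if math.dist(centers[i], centers[j]) <= 2 * radius:
                parent[find(i)] = find(j)

    groups = {}
    for i in range(len(centers)):
        groups.setdefault(find(i), []).append(i)
    return sorted(groups.values())

# Three categories form a chain along the red axis; the fourth is isolated.
centers = [(100, 100, 100), (130, 100, 100), (160, 100, 100), (20, 200, 40)]
joint = build_joint_categories(centers, radius=20.0)
```

Categories 0 and 2 do not overlap directly, but both overlap category 1, so all three end up in one joint category. This chaining is what lets a joint category follow an irregular, elongated color distribution.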

Background colors usually do not undergo dramatic changes over a short time; therefore, the joint category with a higher occurrence probability can better represent the distribution of background colors. This study calculated the occurrence probability

of each joint category according to Equation (8), where

represents the count of the

nth color category in the

mth joint category, the running variable

n of ∑ represents all indices of color categories belonging to the

mth joint category, and

T represents the number of input images. After obtaining the appearance probability of each joint category, the joint category with the highest occurrence probability is the background. In order to solve the problem in the real environment, the background colors at the same position do not necessarily consist of only one color; sometimes, there are more than two background colors such as the color of a shaking tree or the color of a flowing flag. Hence, the concept of joint category entropy was proposed and the change in joint category entropy was used to dynamically determine the required number of candidate background categories as elaborated below. First, joint category entropy was calculated according to Equation (9). Second, joint category entropy (

E), was used to automatically determine the number of necessary candidate background categories, as shown in Equation (10), where

represents the number of necessary candidate background categories at the (

x,

y) coordinate. Third, the created joint categories were sorted in the order of decreasing occurrence probabilities, of which the first

joint categories were selected as the candidate background categories

, as shown in Equation (11). Conversely, when no background category has been obtained, if an input pixel is classified as a candidate background category

, then the input pixel is treated as a background. Conversely, if an input pixel does not belong to a candidate background category, then the input pixel is considered as the foreground. When the number of samples belonging to a candidate background category is greater than

, the candidate background category is treated as a background category, to which the subsequent input images will be directly compared, with no more selection of candidate background categories.

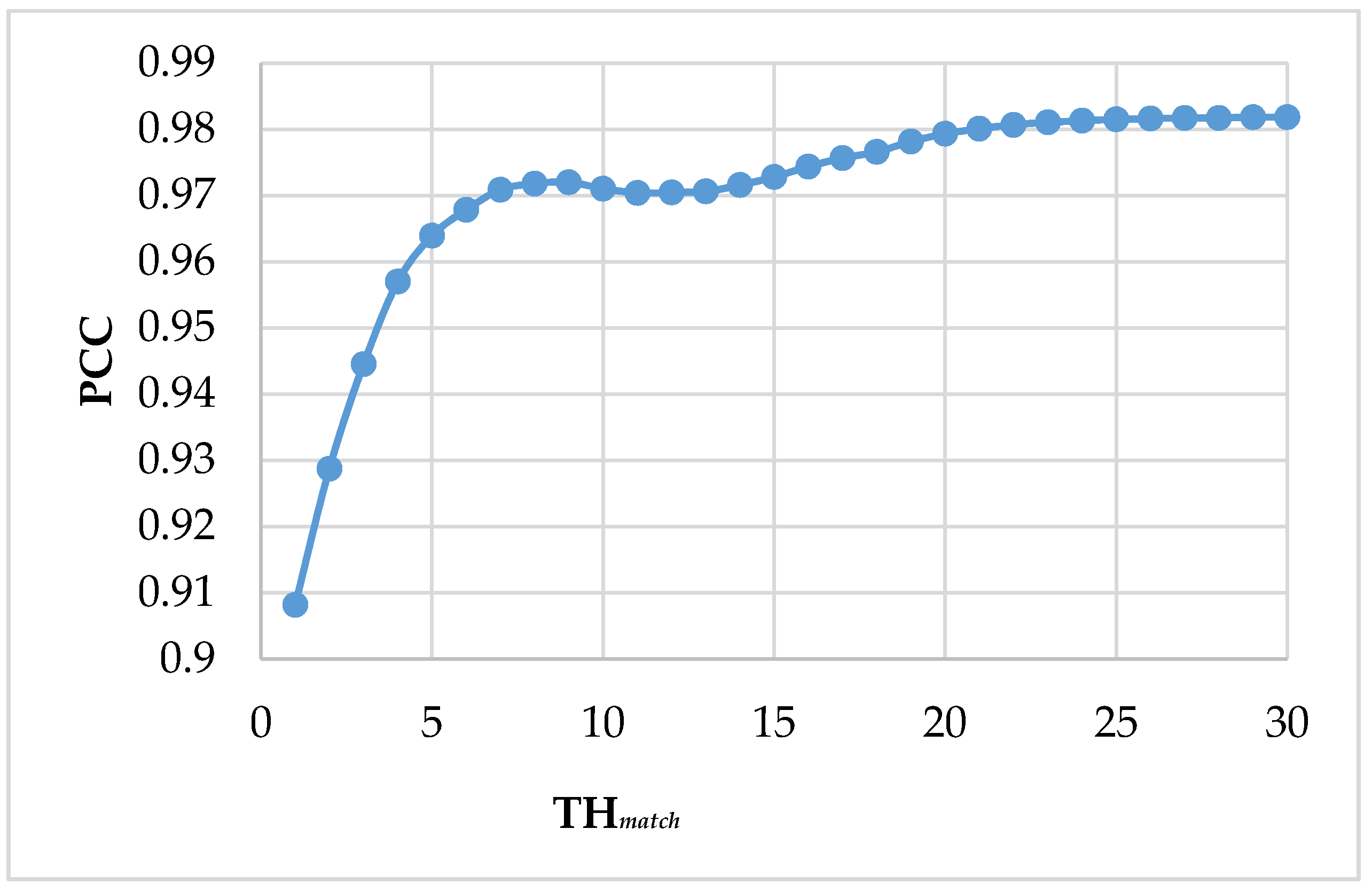

indicates the required number of samples allowing the joint categories to be regarded as the background. The

used in this paper is 20 (see Results and Discussion in

Section 4.3: Determination of parameters).
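The occurrence probability and entropy of joint categories can be illustrated with the following sketch. Several parts are assumptions rather than the paper's definitions: the entropy is taken to be the Shannon entropy in bits, and the rule in `candidate_count`, which rounds 2 raised to the entropy, is a hypothetical stand-in for Equation (10), whose exact form should be taken from the paper. All function names are likewise hypothetical.

```python
import math

def joint_probabilities(joint_categories, counts, num_images):
    """Occurrence probability of each joint category: summed counts over images."""
    return [sum(counts[n] for n in members) / num_images
            for members in joint_categories]

def joint_entropy(probabilities):
    """Shannon entropy in bits (assumed form of the joint category entropy)."""
    return -sum(p * math.log2(p) for p in probabilities if p > 0)

def candidate_count(entropy):
    """Hypothetical rule: round 2**entropy, with at least one candidate."""
    return max(1, round(2 ** entropy))

def select_candidates(probabilities, k):
    """Indices of the k joint categories with the highest probability."""
    order = sorted(range(len(probabilities)),
                   key=lambda m: probabilities[m], reverse=True)
    return order[:k]

# Stable background point: one joint category dominates.
p_stable = joint_probabilities([[0], [1]], counts=[95, 5], num_images=100)
e_stable = joint_entropy(p_stable)
k_stable = candidate_count(e_stable)

# Dynamic background point: two joint categories occur equally often.
p_dynamic = joint_probabilities([[0, 1], [2]], counts=[30, 20, 50], num_images=100)
e_dynamic = joint_entropy(p_dynamic)
k_dynamic = candidate_count(e_dynamic)
candidates = select_candidates(p_dynamic, k_dynamic)
```

Under these assumptions, the concentrated distribution yields a low entropy and a single candidate, while the evenly split distribution yields a higher entropy and two candidates, matching the qualitative behavior described in the text.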

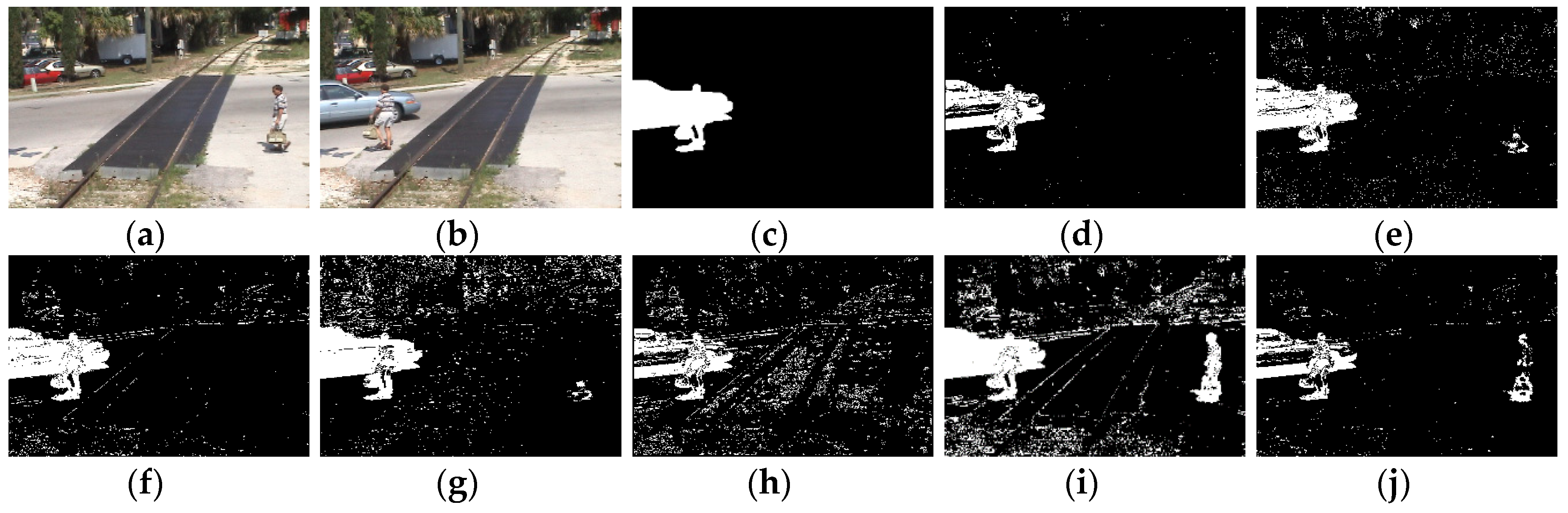

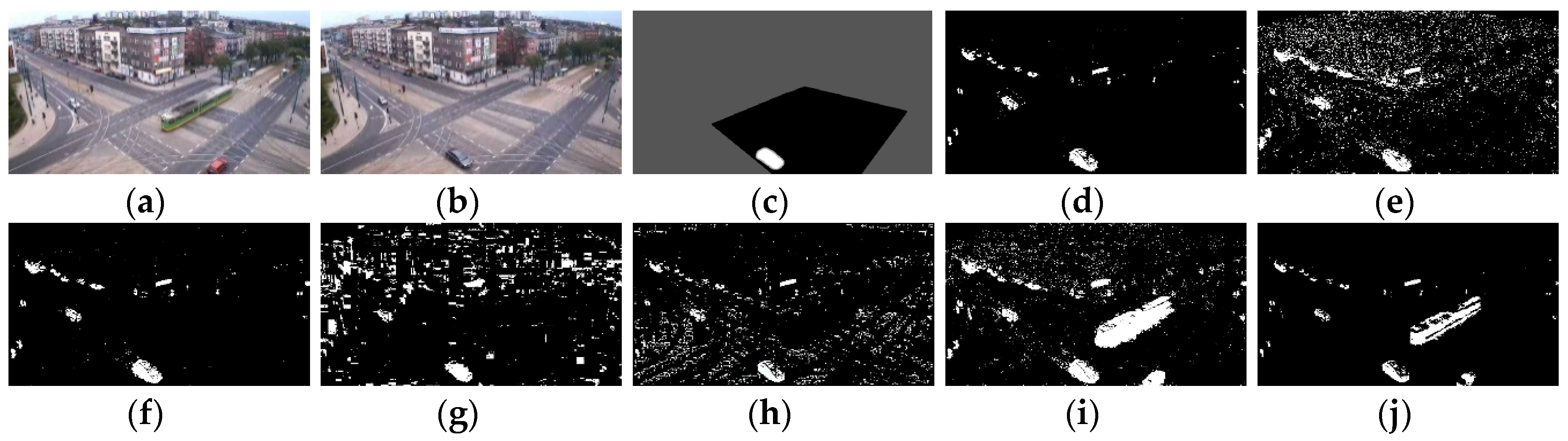

Figure 3 shows the color change of a video series at the sampling position (100, 40). As shown in Figure 3d, the color distribution at this position is quite concentrated in the first 100 images. This position was a stable background point, but in the 250th image, the color distribution at the position changed because of a pedestrian passing through the sampling position, as shown in Figure 3e. Table 1 shows the results of joint category clustering at the sampling position.

Figure 4 shows the color change of an image sequence at the sampling location (72, 38). Owing to the presence of a shaking tree at the sampling location, the color distribution of the sampling location was dispersed, as shown in Figure 4c. Table 2 shows the results of joint category clustering at the sampling location.

Table 1 and Table 2 show that the proposed joint category entropy $E$ can reflect the stability of pixel color changes and the number of representative categories. A lower $E$ indicates a more concentrated color distribution of the points, and thereby a smaller number of candidate background categories. A higher $E$ indicates a more dispersed color distribution of the points, and thereby a larger number of candidate background categories.

The initial-background capture method proposed in this study used a color classifier to create pixel-based color categories for input images. Based on the joint category concept, it could adapt to the scenario in which background pixels were subjected to color disturbance because of poor image quality or a low frame rate. Moreover, based on the color clustering of each pixel in the images, this study proposed the concept of joint category entropy to dynamically determine the number of candidate background categories required for each pixel. This made it possible to establish a suitable number of background categories for foreground detection, even in harsh background environments such as dynamic backgrounds or a background with camera shake.