SampleCNN: End-to-End Deep Convolutional Neural Networks Using Very Small Filters for Music Classification

Abstract

1. Introduction

2. Related Work

3. Learning Models

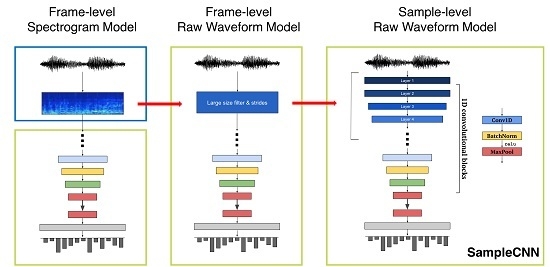

3.1. Frame-Level Mel-Spectrogram Model

3.2. Frame-Level Raw Waveform Model

3.3. Sample-Level Raw Waveform Model: SampleCNN

4. Extension of SampleCNN

4.1. Multi-Level and Multi-Scale Feature Aggregation

4.2. Transfer Learning

5. Experimental Setup

5.1. Datasets

- MagnaTagATune (MTAT) [25]: 21,105 songs (15,244/1529/4332), auto-tagging (50 tags)

- Million Song Dataset with Tagtraum genre annotations (TAGTRAUM): 189,189 songs (141,372/10,000/37,817; a stratified split of the CD2C version [26] with 80% training data), genre classification (15 genres)

- Million Song Dataset with Last.FM tag annotations (MSD) [27]: 241,889 songs (201,680/11,774/28,435), auto-tagging (50 tags)
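The split sizes listed above can be checked against the stated totals with a few lines of arithmetic. The sketch below assumes that the three numbers in parentheses are the training, validation, and test sets, in that order; the list itself does not label them, and the names `SPLITS` and `split_summary` are illustrative only.

```python
# Split sizes copied from the dataset list above.
# Assumption: the order is (train, validation, test).
SPLITS = {
    "MTAT": (15_244, 1_529, 4_332),
    "TAGTRAUM": (141_372, 10_000, 37_817),
    "MSD": (201_680, 11_774, 28_435),
}


def split_summary(splits):
    """Return {name: (total_songs, (train_frac, valid_frac, test_frac))}."""
    summary = {}
    for name, parts in splits.items():
        total = sum(parts)
        fractions = tuple(round(p / total, 3) for p in parts)
        summary[name] = (total, fractions)
    return summary


if __name__ == "__main__":
    for name, (total, fractions) in split_summary(SPLITS).items():
        print(f"{name}: {total} songs, train/valid/test = {fractions}")
```

Under this reading, each set of three sizes adds up to the song count given for its dataset, and the first two TAGTRAUM sizes together account for roughly 80% of its songs.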

5.2. Training Details

5.3. Mel-Spectrogram and Raw Waveforms

5.4. Downsampling

5.5. Combining Multi-Level and Multi-Scale Features

5.6. Transfer Learning

6. Results and Discussion

6.1. Mel-Spectrogram and Raw Waveforms

6.2. Effect of Downsampling

6.3. Effect of Multi-Level and Multi-Scale Features

6.4. Transfer Learning and Comparison to the State of the Art

7. Visualization

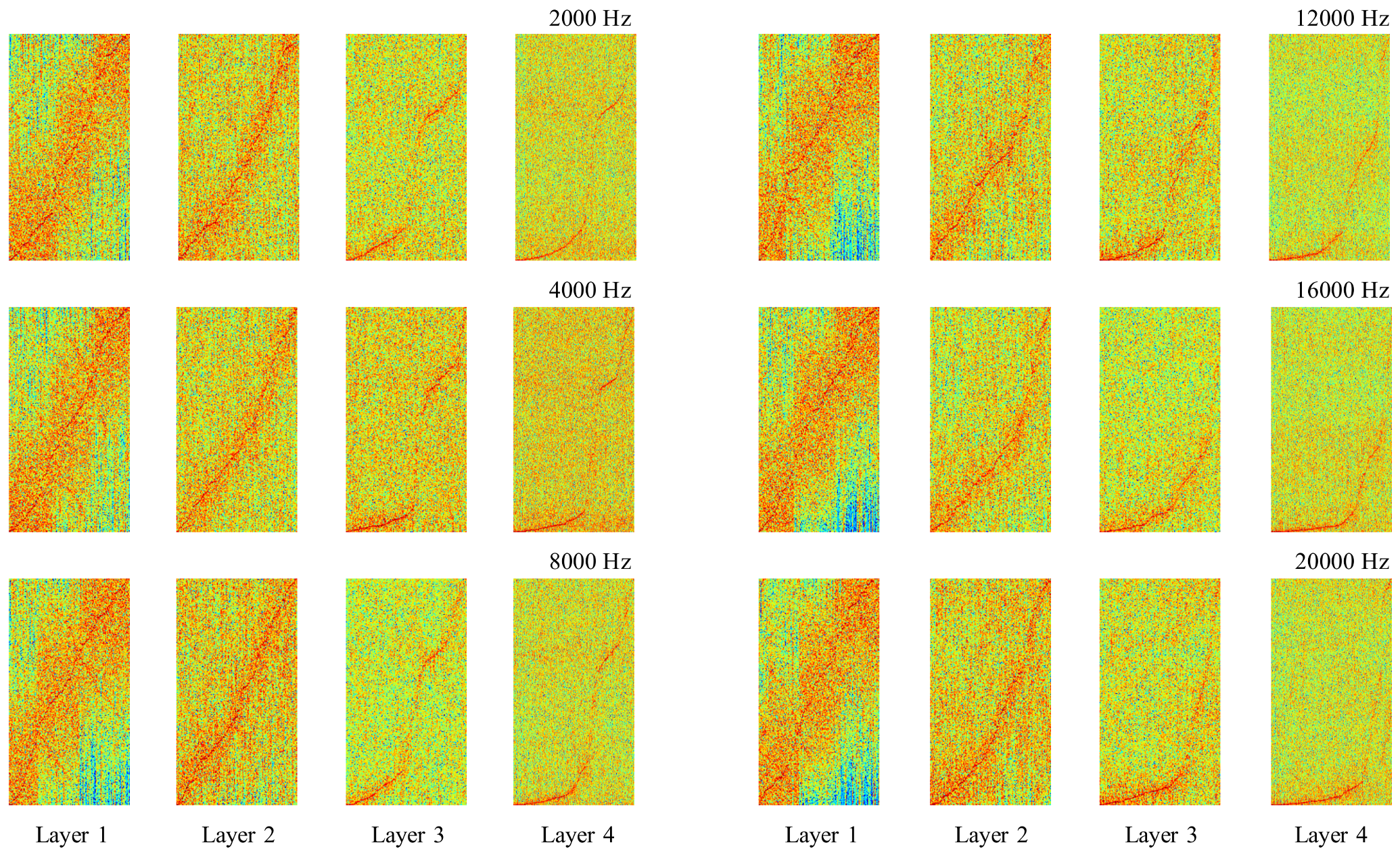

7.1. Learned Filters

7.2. Song-Level Similarity Using t-SNE

8. Conclusions

Acknowledgments

Author Contributions

Conflicts of Interest

References

1. Krizhevsky, A.; Sutskever, I.; Hinton, G.E. Imagenet classification with deep convolutional neural networks. In Proceedings of the 25th International Conference on Neural Information Processing Systems, Lake Tahoe, NV, USA, 3–6 December 2012; pp. 1097–1105.

2. Mikolov, T.; Sutskever, I.; Chen, K.; Corrado, G.S.; Dean, J. Distributed representations of words and phrases and their compositionality. In Proceedings of the 26th International Conference on Neural Information Processing Systems, Lake Tahoe, NV, USA, 5–10 December 2013; pp. 3111–3119.

3. Zhang, X.; Zhao, J.; LeCun, Y. Character-level convolutional networks for text classification. In Proceedings of the 28th International Conference on Neural Information Processing Systems, Montreal, QC, Canada, 7–12 December 2015; pp. 649–657.

4. Kim, Y.; Jernite, Y.; Sontag, D.; Rush, A.M. Character-aware neural language models. arXiv, 2016; arXiv:1508.06615.

5. Sainath, T.N.; Weiss, R.J.; Senior, A.W.; Wilson, K.W.; Vinyals, O. Learning the speech front-end with raw waveform CLDNNs. In Proceedings of the 16th Annual Conference of the International Speech Communication Association, Dresden, Germany, 6–10 September 2015; pp. 1–5.

6. Collobert, R.; Puhrsch, C.; Synnaeve, G. Wav2letter: An end-to-end convnet-based speech recognition system. arXiv, 2016; arXiv:1609.03193.

7. Zhu, Z.; Engel, J.H.; Hannun, A. Learning multiscale features directly from waveforms. In Proceedings of the Annual Conference of the International Speech Communication Association, San Francisco, CA, USA, 8–12 September 2016.

8. Dieleman, S.; Schrauwen, B. End-to-end learning for music audio. In Proceedings of the IEEE International Conference on Acoustics, Speech and Signal Processing, Florence, Italy, 4–9 May 2014; pp. 6964–6968.

9. Ardila, D.; Resnick, C.; Roberts, A.; Eck, D. Audio deepdream: Optimizing raw audio with convolutional networks. In Proceedings of the International Society for Music Information Retrieval Conference, New York, NY, USA, 7–11 August 2016.

10. Thickstun, J.; Harchaoui, Z.; Kakade, S. Learning features of music from scratch. arXiv, 2017; arXiv:1611.09827.

11. Dai, W.; Dai, C.; Qu, S.; Li, J.; Das, S. Very deep convolutional neural networks for raw waveforms. In Proceedings of the 2017 IEEE International Conference on Acoustics, Speech and Signal Processing, New Orleans, LA, USA, 5–9 March 2017; pp. 421–425.

12. Aytar, Y.; Vondrick, C.; Torralba, A. Soundnet: Learning sound representations from unlabeled video. In Proceedings of the International Conference on Neural Information Processing Systems, Barcelona, Spain, 5–10 December 2016; pp. 892–900.

13. Lee, J.; Park, J.; Kim, K.L.; Nam, J. Sample-level deep convolutional neural networks for music auto-tagging using raw waveforms. In Proceedings of the Sound Music Computing Conference (SMC), Espoo, Finland, 5–8 July 2017; pp. 220–226.

14. Simonyan, K.; Zisserman, A. Very deep convolutional networks for large-scale image recognition. In Proceedings of the International Conference on Learning Representations, San Diego, CA, USA, 7–9 May 2015.

15. Lee, J.; Nam, J. Multi-level and multi-scale feature aggregation using pre-trained convolutional neural networks for music auto-tagging. IEEE Signal Process. Lett. 2017, 24, 1208–1212.

16. Tokozume, Y.; Harada, T. Learning environmental sounds with end-to-end convolutional neural network. In Proceedings of the IEEE 2017 IEEE International Conference on Acoustics, Speech and Signal Processing, New Orleans, LA, USA, 5–9 March 2017; pp. 2721–2725.

17. Palaz, D.; Doss, M.M.; Collobert, R. Convolutional neural networks-based continuous speech recognition using raw speech signal. In Proceedings of the IEEE 2015 IEEE International Conference on Acoustics, Speech and Signal Processing, South Brisbane, Queensland, Australia, 19–24 April 2015; pp. 4295–4299.

18. Palaz, D.; Collobert, R.; Magimai-Doss, M. Analysis of CNN-based speech recognition system using raw speech as input. In Proceedings of the 16th Annual Conference of the International Speech Communication Association, Dresden, Germany, 6–10 September 2015; pp. 11–15.

19. Choi, K.; Fazekas, G.; Sandler, M. Automatic tagging using deep convolutional neural networks. In Proceedings of the 17th International Society of Music Information Retrieval Conference, New York, NY, USA, 7–11 August 2016; pp. 805–811.

20. Choi, K.; Fazekas, G.; Sandler, M.; Cho, K. Convolutional recurrent neural networks for music classification. In Proceedings of the IEEE 2017 IEEE International Conference on Acoustics, Speech and Signal Processing, New Orleans, LA, USA, 5–9 March 2017; pp. 2392–2396.

21. Pons, J.; Lidy, T.; Serra, X. Experimenting with musically motivated convolutional neural networks. In Proceedings of the IEEE International Workshop on Content-Based Multimedia Indexing (CBMI), Bucharest, Romania, 15–17 June 2016; pp. 1–6.

22. Lee, J. Music Dataset Split. Available online: https://github.com/jongpillee/music_dataset_split (accessed on 22 January 2017).

23. Tzanetakis, G.; Cook, P. Musical genre classification of audio signals. IEEE Trans. Speech Audio Process. 2002, 10, 293–302.

24. Kereliuk, C.; Sturm, B.L.; Larsen, J. Deep learning and music adversaries. IEEE Trans. Multimed. 2015, 17, 2059–2071.

25. Law, E.; West, K.; Mandel, M.I.; Bay, M.; Downie, J.S. Evaluation of algorithms using games: The case of music tagging. In Proceedings of the International Society for Music Information Retrieval Conference, Kobe, Japan, 26–30 October 2009; pp. 387–392.

26. Schreiber, H. Improving genre annotations for the million song dataset. In Proceedings of the International Society for Music Information Retrieval Conference, Malaga, Spain, 26–30 October 2015; pp. 241–247.

27. Bertin-Mahieux, T.; Ellis, D.P.; Whitman, B.; Lamere, P. The million song dataset. In Proceedings of the International Society for Music Information Retrieval Conference, Miami, FL, USA, 24–28 October 2011; Volume 2, pp. 591–596.

28. Ioffe, S.; Szegedy, C. Batch normalization: Accelerating deep network training by reducing internal covariate shift. In Proceedings of the 32nd International Conference on Machine Learning, Lille, France, 6–11 July 2015.

29. Dieleman, S.; Schrauwen, B. Multiscale approaches to music audio feature learning. In Proceedings of the International Society for Music Information Retrieval Conference, Curitiba, Brazil, 4–8 November 2013; pp. 116–121.

30. Van Den Oord, A.; Dieleman, S.; Schrauwen, B. Transfer learning by supervised pre-training for audio-based music classification. In Proceedings of the International Society for Music Information Retrieval Conference, Taipei, Taiwan, 27–31 October 2014.

31. Liu, J.Y.; Jeng, S.K.; Yang, Y.H. Applying topological persistence in convolutional neural network for music audio signals. arXiv, 2016; arXiv:1608.07373.

32. Güçlü, U.; Thielen, J.; Hanke, M.; van Gerven, M.; van Gerven, M.A. Brains on beats. In Proceedings of the International Conference on Neural Information Processing Systems, Barcelona, Spain, 5–10 December 2016; pp. 2101–2109.

33. Pons, J.; Slizovskaia, O.; Gong, R.; Gómez, E.; Serra, X. Timbre analysis of music audio signals with convolutional neural networks. In Proceedings of the 2017 25th European Signal Processing Conference, Kos Island, Greece, 28 August–2 September 2017.

34. Jeong, I.Y.; Lee, K. Learning temporal features using a deep neural network and its application to music genre classification. In Proceedings of the International Society for Music Information Retrieval Conference, New York, NY, USA, 7–11 August 2016; pp. 434–440.

35. Park, J.; Lee, J.; Park, J.; Ha, J.W.; Nam, J. Representation learning of music using artist labels. arXiv, 2017; arXiv:1710.06648.

36. Choi, K.; Fazekas, G.; Sandler, M.; Kim, J. Auralisation of deep convolutional neural networks: Listening to learned features. In Proceedings of the International Society for Music Information Retrieval Conference, Malaga, Spain, 26–30 October 2015; pp. 26–30.

37. Bittner, R.M.; McFee, B.; Salamon, J.; Li, P.; Bello, J.P. Deep salience representations for f0 estimation in polyphonic music. In Proceedings of the International Society for Music Information Retrieval Conference, Suzhou, China, 23–28 October 2017.

38. Choi, K.; Fazekas, G.; Sandler, M. Explaining deep convolutional neural networks on music classification. arXiv, 2016; arXiv:1607.02444.

39. Erhan, D.; Bengio, Y.; Courville, A.; Vincent, P. Visualizing Higher-Layer Features of a Deep Network; University of Montreal: Montreal, QC, Canada, 2009; Volume 1341, p. 3.

40. Zeiler, M.D.; Fergus, R. Visualizing and Understanding Convolutional Networks; Springer: Berlin, Germany, 2014; pp. 818–833.

41. Nguyen, A.; Yosinski, J.; Clune, J. Deep neural networks are easily fooled: High confidence predictions for unrecognizable images. In Proceedings of the Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 427–436.

SampleCNN model, 59,049 samples (2678 ms) as input

| Layer | Stride | Output | # of Params |
|---|---|---|---|
| conv 3-128 | 3 | 19,683 × 128 | 512 |
| conv 3-128, maxpool 3 | 1, 3 | 19,683 | 49,280 |
| conv 3-128, maxpool 3 | 1, 3 | | 49,280 |
| conv 3-256, maxpool 3 | 1, 3 | | 98,560 |
| conv 3-256, maxpool 3 | 1, 3 | | 196,864 |
| conv 3-256, maxpool 3 | 1, 3 | | 196,864 |
| conv 3-256, maxpool 3 | 1, 3 | | 196,864 |
| conv 3-256, maxpool 3 | 1, 3 | | 196,864 |
| conv 3-512, maxpool 3 | 1, 3 | | 393,728 |
| conv 3-512, maxpool 3 | 1, 3 | | 786,944 |
| conv 1-512, dropout 0.5 | 1, − | | 262,656 |
| sigmoid | − | 50 | 25,650 |
| Total params | | | |
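The per-layer parameter counts in the table can be reproduced from the layer labels alone. The sketch below is a plain-Python check, not the authors' code. It assumes that "conv k-c" means a 1-D convolution with kernel size k and c output channels, that the input is a single-channel waveform, that every layer has one bias per output channel, and that any normalization parameters are not counted; the names `CONV_LAYERS`, `conv1d_params`, and `layer_params` are hypothetical.

```python
# (kernel_size, in_channels, out_channels) for each 1-D convolution, read off
# the "conv k-c" labels in the table. Assumption: the first layer sees a
# single-channel (mono) waveform, so its in_channels is 1.
CONV_LAYERS = [
    (3, 1, 128),
    (3, 128, 128),
    (3, 128, 128),
    (3, 128, 256),
    (3, 256, 256),
    (3, 256, 256),
    (3, 256, 256),
    (3, 256, 256),
    (3, 256, 512),
    (3, 512, 512),
    (1, 512, 512),
]
NUM_TAGS = 50  # width of the sigmoid output layer in the table


def conv1d_params(kernel, c_in, c_out):
    """Convolution weights plus one bias per output channel."""
    return kernel * c_in * c_out + c_out


def dense_params(n_in, n_out):
    """Fully connected weights plus one bias per output unit."""
    return n_in * n_out + n_out


def layer_params():
    """Parameter count of every layer, in table order (convs, then output)."""
    counts = [conv1d_params(*layer) for layer in CONV_LAYERS]
    counts.append(dense_params(CONV_LAYERS[-1][2], NUM_TAGS))
    return counts


if __name__ == "__main__":
    counts = layer_params()
    print(counts)
    print("total:", sum(counts))
```

Under these assumptions the computed counts match the "# of Params" column row by row, and they sum to 2,454,066.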

| Models, 59,049 Samples as Input | n | Window Size (Filter Size) | Hop Size (Stride Size) | AUC |
|---|---|---|---|---|
| Frame-level (mel-spectrogram) | 4 | 729 | 729 | 0.9000 |
| | 5 | 729 | 243 | 0.9005 |
| | 5 | 243 | 243 | 0.9047 |
| | 6 | 243 | 81 | 0.9059 |
| | 6 | 81 | 81 | 0.9025 |
| Frame-level (raw waveforms) | 4 | 729 | 729 | 0.8655 |
| | 5 | 729 | 243 | 0.8742 |
| | 5 | 243 | 243 | 0.8823 |
| | 6 | 243 | 81 | 0.8906 |
| | 6 | 81 | 81 | 0.8936 |
| Sample-level (raw waveforms) | 7 | 27 | 27 | 0.9002 |
| | 8 | 9 | 9 | 0.9030 |
| | 9 | 3 | 3 | 0.9055 |
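One regularity can be read directly off the numbers in this table: in every row, the hop size multiplied by 3 to the power n equals the 59,049-sample input length. The sketch below checks this. Interpreting n as the number of subsequent layers that each downsample by a factor of 3 is an assumption made here for illustration, and `covered_samples` is a hypothetical helper name.

```python
INPUT_SAMPLES = 59_049  # input length stated in the table header

# (model, n, window_size, hop_size) rows copied from the table above.
ROWS = [
    ("frame-level mel-spectrogram", 4, 729, 729),
    ("frame-level mel-spectrogram", 5, 729, 243),
    ("frame-level mel-spectrogram", 5, 243, 243),
    ("frame-level mel-spectrogram", 6, 243, 81),
    ("frame-level mel-spectrogram", 6, 81, 81),
    ("frame-level raw waveform", 4, 729, 729),
    ("frame-level raw waveform", 5, 729, 243),
    ("frame-level raw waveform", 5, 243, 243),
    ("frame-level raw waveform", 6, 243, 81),
    ("frame-level raw waveform", 6, 81, 81),
    ("sample-level raw waveform", 7, 27, 27),
    ("sample-level raw waveform", 8, 9, 9),
    ("sample-level raw waveform", 9, 3, 3),
]


def covered_samples(n, hop, factor=3):
    """Samples spanned if the first layer hops by `hop` and each of the
    n following layers downsamples by `factor` (an assumed reading of n)."""
    return hop * factor ** n


if __name__ == "__main__":
    for model, n, window, hop in ROWS:
        total = covered_samples(n, hop)
        print(f"{model}: n={n}, hop={hop} -> {total} samples")
```

If this reading holds, a smaller hop in the first layer is compensated by a deeper stack, so all thirteen configurations reduce the same input segment to a single time step.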

| Sampling Rate | Input (in Milliseconds) | Models | # of Parameters |
|---|---|---|---|
| 2000 Hz | 5184 samples (2592 ms) | 3-3-3-3-2-2-2-2-2-2 | |
| 4000 Hz | 10,368 samples (2592 ms) | 3-3-3-3-2-2-2-4-2-2 | |
| 8000 Hz | 20,736 samples (2592 ms) | 3-3-3-3-2-2-4-4-2-2 | |
| 12,000 Hz | 31,104 samples (2592 ms) | 3-3-3-3-3-2-4-4-2-2 | |
| 16,000 Hz | 43,740 samples (2733 ms) | 3-3-3-3-3-3-3-5-2-2 | |
| 20,000 Hz | 52,488 samples (2624 ms) | 3-3-3-3-3-3-3-3-4-2 | |
| 22,050 Hz | 59,049 samples (2678 ms) | 3-3-3-3-3-3-3-3-3-3 | |
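The input sizes in this table follow from the "Models" column by simple arithmetic: the product of the dash-separated numbers equals the number of input samples, and dividing by the sampling rate gives the duration. The sketch below verifies both. Reading each number as the stride or pooling size of one layer is an assumption, and `input_length` and `duration_ms` are hypothetical helper names.

```python
from math import prod

# sampling rate (Hz) -> (model string, samples, duration in ms), all copied
# from the table above.
CONFIGS = {
    2_000: ("3-3-3-3-2-2-2-2-2-2", 5_184, 2592),
    4_000: ("3-3-3-3-2-2-2-4-2-2", 10_368, 2592),
    8_000: ("3-3-3-3-2-2-4-4-2-2", 20_736, 2592),
    12_000: ("3-3-3-3-3-2-4-4-2-2", 31_104, 2592),
    16_000: ("3-3-3-3-3-3-3-5-2-2", 43_740, 2733),
    20_000: ("3-3-3-3-3-3-3-3-4-2", 52_488, 2624),
    22_050: ("3-3-3-3-3-3-3-3-3-3", 59_049, 2678),
}


def input_length(model):
    """Product of the per-layer factors in a model string such as '3-3-2'.

    Assumption: each factor is the stride or pooling size of one layer,
    so the product is the input length that reduces to one time step.
    """
    return prod(int(factor) for factor in model.split("-"))


def duration_ms(samples, rate_hz):
    """Duration of `samples` audio samples at `rate_hz`, in milliseconds."""
    return 1000 * samples / rate_hz


if __name__ == "__main__":
    for rate, (model, samples, ms) in CONFIGS.items():
        print(rate, input_length(model), round(duration_ms(samples, rate), 2))
```

The products reproduce the sample counts exactly, and the durations agree with the table to within one millisecond, so each configuration covers roughly 2.6 to 2.7 s of audio regardless of sampling rate.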

| Features from SampleCNNs Last 3 Layers (Pre-trained with MTAT) | MTAT |
|---|---|
| model | 0.9046 |
| and models | 0.9061 |
| , , and models | 0.9061 |
| , , , , , , and models | 0.9064 |

| Sampling Rate | Training Time (Ratio to 22,050 Hz) | AUC |
|---|---|---|
| 2000 Hz | 0.23 | 0.8700 |
| 4000 Hz | 0.41 | 0.8838 |
| 8000 Hz | 0.55 | 0.9031 |
| 12,000 Hz | 0.69 | 0.9033 |
| 16,000 Hz | 0.79 | 0.9033 |
| 20,000 Hz | 0.86 | 0.9055 |
| 22,050 Hz | 1.00 | 0.9055 |

| MODEL | GTZAN (Acc.) | MTAT (AUC) | TAGTRAUM (Acc.) | MSD (AUC) |
|---|---|---|---|---|
| Bag of multi-scaled features [29] | - | 0.898 | - | - |
| End-to-end [8] | - | 0.8815 | - | - |
| Transfer learning [30] | - | 0.8800 | - | - |
| Persistent CNN [31] | - | 0.9013 | - | - |
| Time-frequency CNN [32] | - | 0.9007 | - | - |
| Timbre CNN [33] | - | 0.8930 | - | - |
| 2-D CNN [19] | - | 0.8940 | - | 0.851 |
| CRNN [20] | - | - | - | 0.862 |
| 2-D CNN [24] | 0.632 | - | - | - |
| Temporal features [34] | 0.659 | - | - | - |
| CNN using artist-labels [35] | 0.7821 | 0.8888 | - | - |
| multi-level and multi-scale features (pre-trained with MSD) [15] | 0.720 | 0.9021 | 0.766 | 0.8878 |
| SampleCNN ( model) [13] | - | 0.9055 | - | 0.8812 |
| −3 layer (pre-trained with MSD) | 0.778 | 0.8988 | 0.760 | 0.8831 |
| −2 layer (pre-trained with MSD) | 0.811 | 0.8998 | 0.768 | 0.8838 |
| −1 layer (pre-trained with MSD) | 0.821 | 0.8976 | 0.768 | 0.8842 |
| last 3 layers (pre-trained with MSD) | 0.805 | 0.9018 | 0.768 | 0.8842 |

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Lee, J.; Park, J.; Kim, K.L.; Nam, J. SampleCNN: End-to-End Deep Convolutional Neural Networks Using Very Small Filters for Music Classification. Appl. Sci. 2018, 8, 150. https://doi.org/10.3390/app8010150