DeepGait: A Learning Deep Convolutional Representation for View-Invariant Gait Recognition Using Joint Bayesian

Abstract

1. Introduction

1.1. Proposal of Deep Convolutional Gait Representation

1.2. Joint Bayesian for Modeling View Variance

1.3. Overview

2. Proposed Method

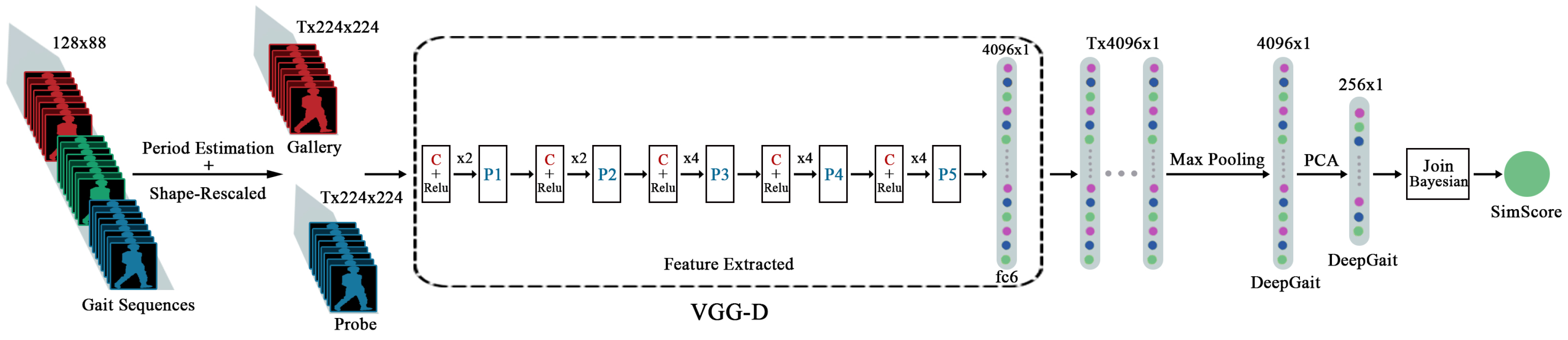

2.1. Deep Convolutional Gait Representation

2.1.1. Gait Period Estimation
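
A common way to estimate the gait period of a silhouette sequence is to compute the normalized autocorrelation (NAC) of the sequence along the time axis and take the frame lag that maximizes it. The sketch below assumes that approach; it is not necessarily the procedure used for DeepGait. Silhouettes are assumed to be flattened to lists of pixel values, the lag search range is supplied by the caller, and the function names are hypothetical.

```python
import math
from typing import List, Sequence

# One silhouette frame, flattened to a 1-D sequence of pixel values (assumption).
Frame = Sequence[float]


def normalized_autocorrelation(frames: List[Frame], lag: int) -> float:
    """Normalized autocorrelation between the sequence and itself shifted by `lag` frames."""
    cross = 0.0
    energy_head = 0.0
    energy_tail = 0.0
    for n in range(len(frames) - lag):
        a, b = frames[n], frames[n + lag]
        cross += sum(x * y for x, y in zip(a, b))
        energy_head += sum(x * x for x in a)
        energy_tail += sum(y * y for y in b)
    if energy_head == 0.0 or energy_tail == 0.0:
        return 0.0
    return cross / math.sqrt(energy_head * energy_tail)


def estimate_gait_period(frames: List[Frame], min_lag: int, max_lag: int) -> int:
    """Return the lag (in frames) in [min_lag, max_lag] that maximizes the NAC.

    The range should bracket one full gait cycle; a range that also covers half
    a cycle may return the step period instead of the stride period.
    """
    max_lag = min(max_lag, len(frames) - 1)
    if min_lag < 1 or min_lag > max_lag:
        raise ValueError("invalid lag range for this sequence length")
    return max(range(min_lag, max_lag + 1),
               key=lambda lag: normalized_autocorrelation(frames, lag))


if __name__ == "__main__":
    # Synthetic "silhouettes": a 2-pixel blob that repeats every 6 frames.
    frames = [[1.0 if (i - n) % 6 in (0, 1) else 0.0 for i in range(6)] for n in range(30)]
    print(estimate_gait_period(frames, 4, 8))  # prints 6
```

On real data the search range would be set from the frame rate and a plausible walking cadence.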

2.1.2. Network Structure

2.1.3. Supervised Pre-Training

2.1.4. Feature Extraction

2.1.5. Representation Generalization and Visualization

2.2. Gait Recognition

2.2.1. Gait Verification Using Joint Bayesian
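
Joint Bayesian (Chen et al., "Bayesian face revisited: A joint formulation", ECCV 2012) models a feature as x = μ + ε, with a subject term μ ~ N(0, S_μ) and a within-subject variation term ε ~ N(0, S_ε). Two features are compared by the log-likelihood ratio of the same-subject hypothesis against the different-subject hypothesis, which, up to an additive constant and a factor of 1/2, reduces to r(x1, x2) = x1^T A x1 + x2^T A x2 − 2 x1^T G x2. The minimal sketch below obtains A and G by inverting the same-subject joint covariance directly. Assumptions: S_μ and S_ε are plain moment estimates rather than the EM procedure of Chen et al., and all function names are hypothetical; this is not the authors' implementation.

```python
import numpy as np


def fit_joint_bayesian_naive(features: np.ndarray, labels: np.ndarray, reg: float = 1e-6):
    """Moment estimates of S_mu (between-subject) and S_eps (within-subject).

    Simplification (assumption): Chen et al. estimate these with EM; here the
    covariance of subject means and of within-subject residuals is used instead.
    """
    subjects = np.unique(labels)
    means = np.stack([features[labels == s].mean(axis=0) for s in subjects])
    residuals = np.concatenate([features[labels == s] - means[i]
                                for i, s in enumerate(subjects)])
    d = features.shape[1]
    s_mu = np.atleast_2d(np.cov(means, rowvar=False)) + reg * np.eye(d)
    s_eps = np.atleast_2d(np.cov(residuals, rowvar=False)) + reg * np.eye(d)
    return s_mu, s_eps


def joint_bayesian_matrices(s_mu: np.ndarray, s_eps: np.ndarray):
    """Return (A, G) such that r(x1, x2) = x1'A x1 + x2'A x2 - 2 x1'G x2."""
    d = s_mu.shape[0]
    s_total = s_mu + s_eps
    # Covariance of the stacked pair [x1; x2] when both come from the same subject.
    sigma_same = np.block([[s_total, s_mu], [s_mu, s_total]])
    inv_same = np.linalg.inv(sigma_same)  # block form [[F+G, G], [G, F+G]]
    f_plus_g = inv_same[:d, :d]
    g = inv_same[:d, d:]
    a = np.linalg.inv(s_total) - f_plus_g
    return a, g


def joint_bayesian_score(x1: np.ndarray, x2: np.ndarray,
                         a: np.ndarray, g: np.ndarray) -> float:
    """Higher score means the pair is more likely to come from the same subject."""
    return float(x1 @ a @ x1 + x2 @ a @ x2 - 2.0 * x1 @ g @ x2)
```

For verification, the score of a probe/gallery pair would be compared with a threshold; for identification, it would rank gallery candidates.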

2.2.2. Gait Identification
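
Identification can be framed as ranking every gallery subject by a pairwise score with the probe and returning the top candidate (rank-1). The sketch below assumes a pluggable score function: the negative Euclidean distance matches the "NN" baseline listed in the abbreviations, and a Joint Bayesian score could be passed in the same way. The helper names and the dictionary-based gallery are hypothetical.

```python
import math
from typing import Callable, Dict, List, Sequence

Vector = Sequence[float]
ScoreFn = Callable[[Vector, Vector], float]


def neg_euclidean(a: Vector, b: Vector) -> float:
    """Similarity for a nearest-neighbor baseline: larger means closer."""
    return -math.dist(a, b)


def rank_gallery(probe: Vector, gallery: Dict[str, Vector], score: ScoreFn) -> List[str]:
    """Gallery subject IDs sorted from most to least similar to the probe."""
    return sorted(gallery, key=lambda sid: score(probe, gallery[sid]), reverse=True)


def identify(probe: Vector, gallery: Dict[str, Vector], score: ScoreFn) -> str:
    """Rank-1 identification: the gallery subject with the highest score."""
    return rank_gallery(probe, gallery, score)[0]


if __name__ == "__main__":
    gallery = {"a": [0.0, 0.0], "b": [3.0, 4.0], "c": [1.0, 1.0]}
    probe = [0.9, 1.2]
    print(rank_gallery(probe, gallery, neg_euclidean))  # ['c', 'a', 'b']
```

The full ranking, not just the top entry, is what rank-k and CMC evaluation consume.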

2.2.3. Dimension Reduction by PCA
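
A minimal sketch of PCA-based dimension reduction, computed from an SVD of the mean-centered feature matrix. The function names are hypothetical, and the number of components to keep is left to the caller rather than taken from the paper.

```python
import numpy as np


def fit_pca(features: np.ndarray, n_components: int):
    """Fit PCA on an (n_samples, n_dims) matrix via SVD of the centered data.

    Returns the feature mean, the top `n_components` principal axes as rows,
    and the sample variance captured by each of those axes.
    """
    mean = features.mean(axis=0)
    centered = features - mean
    _, singular_values, vt = np.linalg.svd(centered, full_matrices=False)
    variances = singular_values ** 2 / (features.shape[0] - 1)
    return mean, vt[:n_components], variances[:n_components]


def transform_pca(features: np.ndarray, mean: np.ndarray, components: np.ndarray) -> np.ndarray:
    """Project features onto the principal axes: (n_samples, n_components)."""
    return (features - mean) @ components.T


if __name__ == "__main__":
    direction = np.array([1.0, 2.0, 2.0]) / 3.0  # unit vector
    data = np.outer(np.arange(-5.0, 6.0), direction) + 1.0  # 11 points on a line
    mean, components, variances = fit_pca(data, n_components=1)
    reduced = transform_pca(data, mean, components)
    print(reduced.shape)  # (11, 1)
    print(np.allclose(reduced @ components + mean, data))  # True
```

Because the toy data lie on a line, one component reconstructs them exactly; real features would lose some variance.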

2.3. Evaluation Criteria
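
The abbreviations list names cumulative match characteristics (CMC) for identification and the receiver operating characteristic (ROC) for verification. As a hedged sketch: CMC(k) is the fraction of probes whose true match is ranked within the top k, and the equal error rate (EER) is the ROC operating point where the false acceptance rate (FAR) and false rejection rate (FRR) meet. The helper names and the simple threshold sweep (no interpolation between thresholds) are assumptions.

```python
from typing import List, Sequence


def cmc_curve(true_match_ranks: Sequence[int], max_rank: int) -> List[float]:
    """CMC(k) for k = 1..max_rank: fraction of probes whose true match is within the top k.

    `true_match_ranks` holds, for each probe, the 1-based rank of the correct
    gallery subject after sorting the gallery by score.
    """
    n = len(true_match_ranks)
    return [sum(r <= k for r in true_match_ranks) / n for k in range(1, max_rank + 1)]


def equal_error_rate(genuine: Sequence[float], impostor: Sequence[float]) -> float:
    """Approximate EER by sweeping the threshold over the observed scores.

    A pair is accepted when its score is >= threshold. FAR is the fraction of
    impostor pairs accepted and FRR the fraction of genuine pairs rejected; the
    EER is taken as their mean at the threshold where they are closest.
    """
    best_gap, best_eer = float("inf"), 1.0
    for t in sorted(set(genuine) | set(impostor)):
        far = sum(s >= t for s in impostor) / len(impostor)
        frr = sum(s < t for s in genuine) / len(genuine)
        if abs(far - frr) < best_gap:
            best_gap, best_eer = abs(far - frr), (far + frr) / 2.0
    return best_eer


if __name__ == "__main__":
    print(cmc_curve([1, 1, 2, 5], max_rank=5))  # [0.5, 0.75, 0.75, 0.75, 1.0]
    print(equal_error_rate([0.9, 0.8, 0.7, 0.4], [0.1, 0.2, 0.3, 0.6]))  # 0.25
```

Rank-1 and rank-5 rates are simply CMC(1) and CMC(5).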

3. Experiment

3.1. Comparisons of Different Gait Representations for the Non-View Setting

3.2. Results for the Cross-View Setting

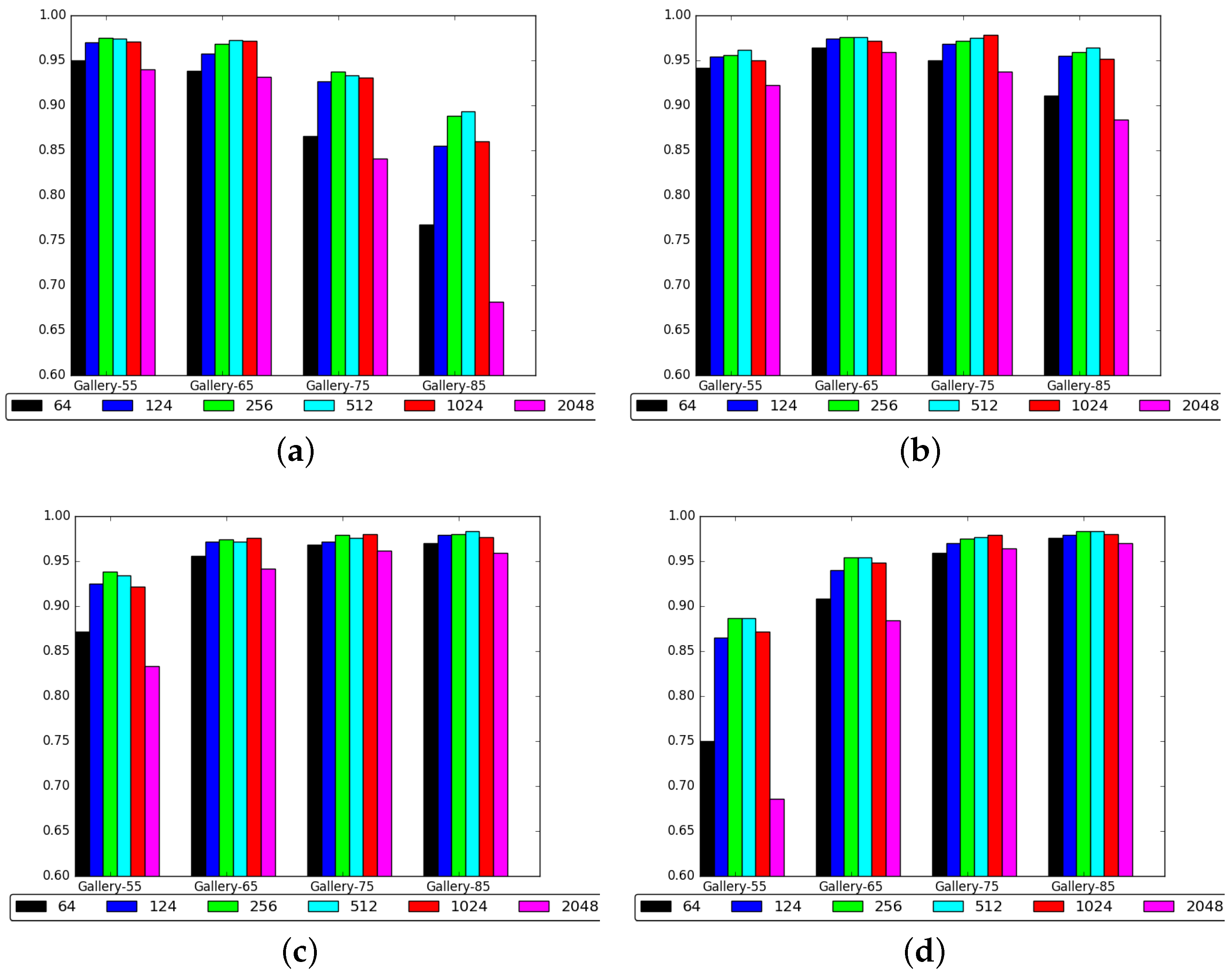

3.2.1. Selecting the Number of Components for Joint Bayesian

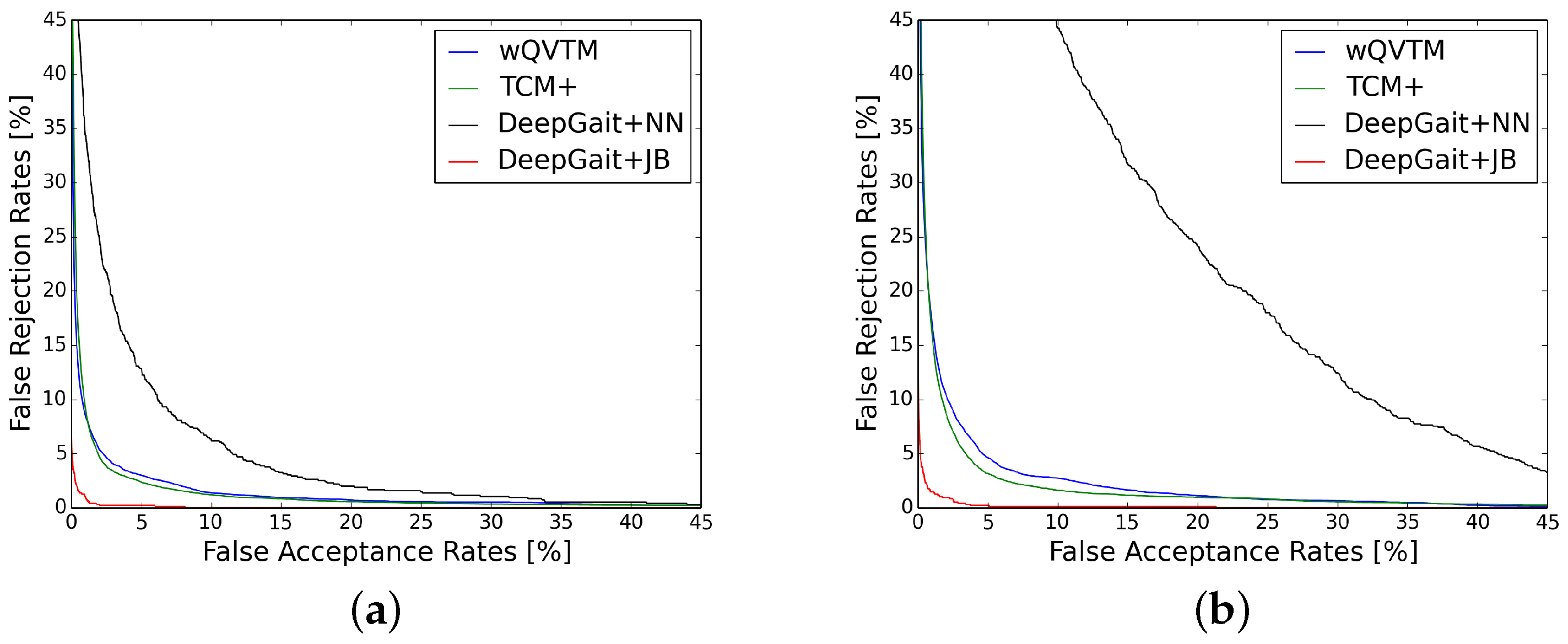

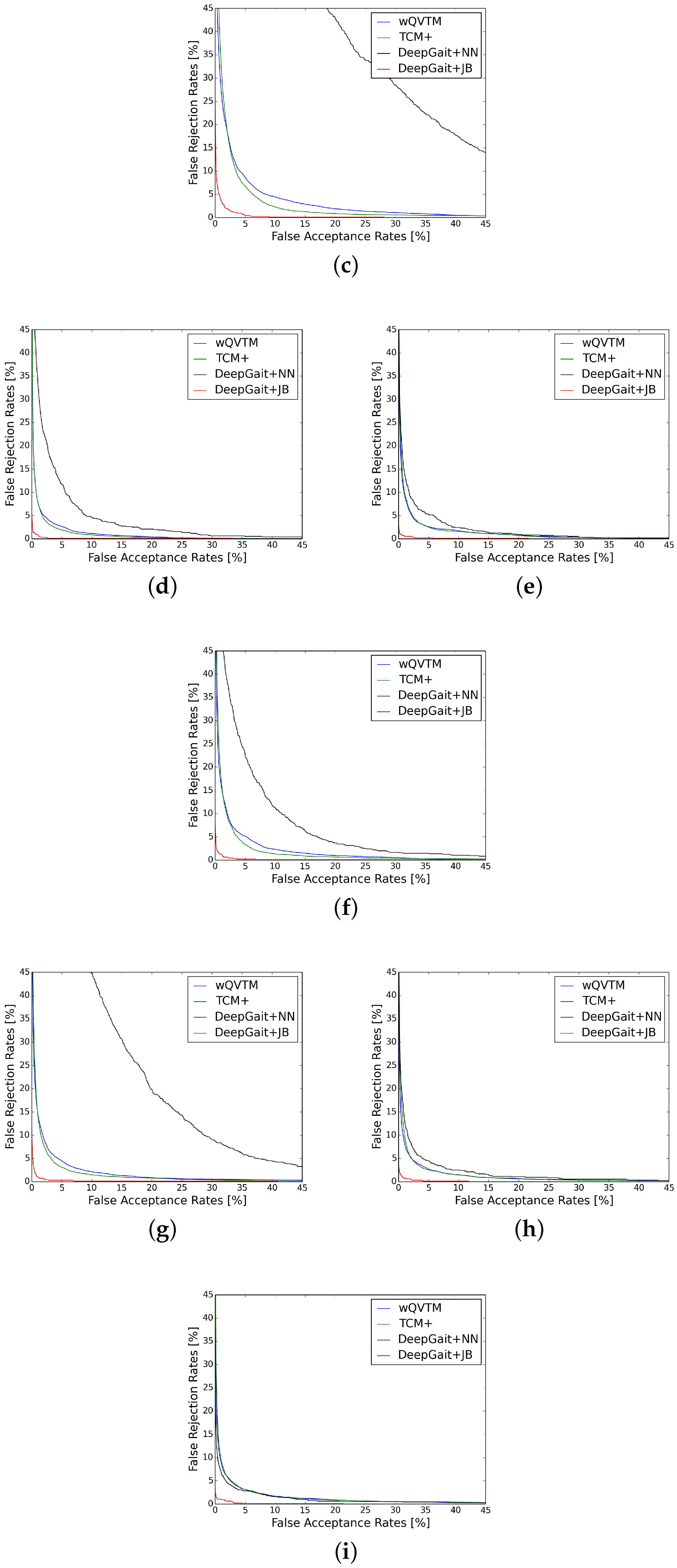

3.2.2. Comparisons with the State-of-the-Art Methods

4. Conclusions

Supplementary Materials

Acknowledgments

Author Contributions

Conflicts of Interest

Abbreviations

| Abbreviation | Meaning |
|---|---|
| DeepGait | Gait representation based on deep convolutional features |
| GEI | Gait energy image |
| MGEI | Masked gait energy image based on gait entropy image |
| GEnI | Gait entropy image |
| GFI | Gait flow image |
| FDF | Frequency-domain feature |
| CMCs | Cumulative match characteristics |
| ROC | Receiver operating characteristic |
| JB | Joint Bayesian |
| NN | Nearest-neighbor classifier based on Euclidean distance |
| OULP | OU-ISIR large population dataset |


| Rank-1/Rank-5 | Dataset | #Subjects | DeepGait | GEI | MGEI | GEnI | FDF | GFI |
|---|---|---|---|---|---|---|---|---|
| Rank-1 | View-55 | 3,706 | 90.6 | 85.3 | 79.3 | 75.1 | 83.1 | 61.9 |
| | View-65 | 3,770 | 91.2 | 85.6 | 83.2 | 77.3 | 84.7 | 66.6 |
| | View-75 | 3,751 | 91.2 | 86.1 | 84.6 | 79.1 | 86.0 | 69.3 |
| | View-85 | 3,249 | 92.0 | 85.3 | 83.9 | 80.7 | 85.6 | 69.8 |
| | Mean | | 92.3 | 85.6 | 82.8 | 78.1 | 84.9 | 66.9 |
| Rank-5 | View-55 | 3,706 | 96.0 | 91.8 | 89.3 | 85.5 | 91.0 | 75.5 |
| | View-65 | 3,770 | 96.0 | 92.3 | 91.5 | 87.7 | 92.3 | 79.5 |
| | View-75 | 3,751 | 96.1 | 92.2 | 92.0 | 88.8 | 92.5 | 81.3 |
| | View-85 | 3,249 | 96.5 | 92.6 | 91.9 | 89.3 | 92.3 | 81.9 |
| | Mean | | 96.2 | 92.2 | 91.2 | 87.8 | 92.0 | 79.6 |

| Gallery view | Method | Rank-1 [%], probe 55 | Rank-1 [%], probe 65 | Rank-1 [%], probe 75 | Rank-1 [%], probe 85 | Rank-5 [%], probe 55 | Rank-5 [%], probe 65 | Rank-5 [%], probe 75 | Rank-5 [%], probe 85 |
|---|---|---|---|---|---|---|---|---|---|
| 55 | GEINet | (94.7) | 93.2 | 89.1 | 79.9 | | | | |
| | w/FDF | (92.7) | 91.4 | 87.2 | 80.0 | | | | |
| | TCM+ | | 79.9 | 70.8 | 54.5 | | 91.7 | 87.1 | 79.3 |
| | wQVTM | | 78.3 | 64.0 | 48.6 | | 90.6 | 82.2 | 73.9 |
| | DeepGait + NN | (92.7) | 51.5 | 8.2 | 2.9 | (97.2) | 74.1 | 21.1 | 9.3 |
| | DeepGait + JB | (97.4) | 96.1 | 93.4 | 88.7 | (99.2) | 99.1 | 98.6 | 97.1 |
| 65 | GEINet | 93.7 | (95.1) | 93.8 | 90.6 | | | | |
| | w/FDF | 92.3 | (93.9) | 92.2 | 88.6 | | | | |
| | TCM+ | 81.7 | | 79.5 | 70.2 | 92.1 | | 90.2 | 86.8 |
| | wQVTM | 81.5 | | 79.2 | 67.5 | 91.9 | | 90.2 | 84.8 |
| | DeepGait + NN | 48.5 | (94.4) | 73.7 | 34.3 | 70.2 | (97.6) | 88.8 | 56.9 |
| | DeepGait + JB | 97.3 | (97.6) | 97.2 | 95.4 | 99.5 | (99.5) | 99.3 | 99.2 |
| 75 | GEINet | 91.1 | 94.1 | (95.2) | 93.8 | | | | |
| | w/FDF | 88.8 | 92.6 | (93.4) | 91.9 | | | | |
| | TCM+ | 71.9 | 80.0 | | 79.0 | 88.1 | 91.4 | | 90.3 |
| | wQVTM | 70.2 | 80.0 | | 78.2 | 87.1 | 91.4 | | 89.9 |
| | DeepGait + NN | 7.5 | 76.3 | (94.5) | 89.2 | 18.7 | 92.3 | (97.6) | 96.6 |
| | DeepGait + JB | 93.3 | 97.5 | (97.7) | 97.6 | 99.1 | 99.3 | (99.4) | 99.1 |
| 85 | GEINet | 81.4 | 91.2 | 94.6 | (94.7) | | | | |
| | w/FDF | 80.9 | 88.4 | 92.2 | (93.2) | | | | |
| | TCM+ | 53.7 | 73.0 | 79.4 | | 79.6 | 87.9 | 91.2 | |
| | wQVTM | 51.1 | 68.5 | 79.0 | | 75.6 | 85.7 | 91.1 | |
| | DeepGait + NN | 2.8 | 37.2 | 90.5 | (94.8) | 9.9 | 60.9 | 96.5 | (97.8) |
| | DeepGait + JB | 89.3 | 96.4 | 98.3 | (98.3) | 98.3 | 99.3 | 99.1 | (99.1) |

| Gallery view | Method | Probe 55 | Probe 65 | Probe 75 | Probe 85 |
|---|---|---|---|---|---|
| 55 | GEINet | (1.3) | 1.4 | 1.7 | 2.5 |
| | w/FDF | (1.9) | 2.0 | 2.3 | 2.9 |
| | TCM+ | | 3.2 | 4.0 | 5.7 |
| | wQVTM | | 3.6 | 4.8 | 6.5 |
| | DeepGait + NN | (2.9) | 7.9 | 21.6 | 29.4 |
| | DeepGait + JB | (0.8) | 1.0 | 1.3 | 1.9 |
| 65 | GEINet | 1.2 | (1.0) | 1.3 | 1.6 |
| | w/FDF | 1.7 | (1.4) | 1.7 | 2.2 |
| | TCM+ | 3.0 | | 3.4 | 4.2 |
| | wQVTM | 3.5 | | 3.4 | 5.1 |
| | DeepGait + NN | 7.2 | (3.1) | 5.1 | 10.6 |
| | DeepGait + JB | 0.8 | (0.6) | 0.7 | 1.2 |
| 75 | GEINet | 1.5 | 1.2 | (1.2) | 1.4 |
| | w/FDF | 2.0 | 1.5 | (1.6) | 1.7 |
| | TCM+ | 4.0 | 3.4 | | 3.8 |
| | wQVTM | 4.7 | 3.7 | | 3.8 |
| | DeepGait + NN | 19.9 | 4.6 | (2.7) | 3.4 |
| | DeepGait + JB | 1.1 | 0.8 | (0.8) | 1.0 |
| 85 | GEINet | 2.4 | 1.6 | 1.2 | (1.1) |
| | w/FDF | 2.5 | 1.9 | 1.6 | (1.4) |
| | TCM+ | 5.5 | 4.4 | 3.7 | |
| | wQVTM | 6.5 | 4.9 | 3.7 | |
| | DeepGait + NN | 28.5 | 10.0 | 3.4 | (2.3) |
| | DeepGait + JB | 1.6 | 0.9 | 0.9 | (1.0) |

© 2017 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Li, C.; Min, X.; Sun, S.; Lin, W.; Tang, Z. DeepGait: A Learning Deep Convolutional Representation for View-Invariant Gait Recognition Using Joint Bayesian. Appl. Sci. 2017, 7, 210. https://doi.org/10.3390/app7030210