1. Introduction

Traditional image coding techniques are developed for applications where the source is encoded once and the coded data is played back (decoded) many times. Hence, the encoder is very complex, while the decoder is relatively simple and cheap to implement. However, in many modern applications, such as camera arrays for surveillance, it is desirable to reduce the complexity and cost of the encoder.

There are two enabling techniques that allow reduced-complexity encoders to be designed. The first one is distributed coding. Based on the information-theoretic work by Wyner and Ziv [1], the coding allows correlated sources to be encoded independently, while the decoder takes advantage of the correlation between source data to improve the quality of the reconstructed images. It has been applied to both image [2,3] and video coding [4].

The second technique is compressed sensing (CS) [5,6]. It has attracted considerable research interest because it allows continuous signals to be sampled at rates much lower than the Nyquist rate, provided the signals are sparse in some (transform) domain. There have been some applications of CS to image coding in the literature. A review is provided in Section 3. Earlier schemes apply CS to the whole image. More recently, block-based coding methods have been proposed [7–10]. The image is divided into non-overlapping blocks of pixels, and each image block is encoded independently. These methods generally use the same measurement rate for all image blocks, even though the compressibility and sparsity of the individual blocks can be quite different.

Most proposed coding schemes use a block-based coding approach, where the image is divided into non-overlapping blocks of pixels and each image block is coded independently. They generally use the same measurement rate for all image blocks, even though the compressibility and sparsity of the individual blocks can be quite different [7–10]. For effective and simple CS-based image coding, it is desirable to use a block-based strategy that exploits the statistical structure of the image data. In [11,12], the measurement rate of each block is adapted to the block sparsity. However, it is not easy to estimate the sparsity or compressibility of an image block from the CS measurements alone.

In this paper, we take the distributed approach to CS block-based image coding. The image is divided into non-overlapping blocks, and CS measurements for each block are then acquired. Some blocks are designated as key blocks and others as non-key blocks. The non-key blocks are encoded at reduced rates. At the decoder, the reconstruction quality of the non-key blocks is improved by using side information generated from the key blocks. This side information is purely in the CS domain and does not require any prior decoding, making it very simple to generate. We also consider key issues, including the choice of sensing matrix, the measurement (sampling) rate and the reconstruction algorithm.

The rest of this paper is organized as follows. Section 2 provides a brief overview of the theory of CS. A review of related works in CS-based image compression is presented in Section 3. Our distributed image coding scheme is presented in Section 4. Section 5 presents experimental results to show the effectiveness of this image codec.

2. Compressed Sensing

Shannon’s sampling theorem stipulates that a signal should be sampled at a rate higher than twice the bandwidth of the signal for a successful recovery. For high bandwidth signals, such as image and video, the required sampling rate becomes very high. Fortunately, only a relatively small number of the discrete cosine transform (DCT) or wavelet transform (WT) coefficients of these signals have significant magnitudes. Therefore, coefficients with small magnitudes can be discarded without affecting the quality of the reconstructed signal significantly. This is the basic idea exploited by all existing lossy signal compression techniques. The idea of CS is to acquire (sense) this significant information directly without first sampling the signal in the traditional sense. It has been shown that if the signal is “sparse” or compressible, then the acquired information is sufficient to reconstruct the original signal with a high probability [5,6]. Sparsity is defined with respect to an appropriate basis, such as DCT or WT, for that signal. The theory also stipulates that the CS measurements of the signal must be acquired through a process that is incoherent with the signal. Incoherence makes certain that the information acquired is randomly spread out in the basis concerned. In CS, the ability of a sensing technique to effectively retain the features of the underlying signal is very important. The sensing strategy should provide a sufficient number of CS measurements in a non-adaptive manner that enables near perfect reconstruction.

Let $f = \{f_1, \ldots, f_N\}$ be $N$ real-valued samples of a signal, which can be represented by the transform coefficients, $x$. That is,

$$f = \Psi x \tag{1}$$

where $\Psi = [\psi_1, \psi_2, \ldots, \psi_N]$ is the transform basis matrix and $x = [x_1, \ldots, x_N]$ is an $N$-vector of coefficients with $x_i = \langle f, \psi_i \rangle$ (for an orthonormal basis). We assume that $x$ is $S$-sparse, meaning that there are only $S \ll N$ significant elements in $x$. Suppose a general linear measurement process computes $M < N$ inner products between $f$ and a collection of vectors, $\phi_j$, giving $y_j = \langle f, \phi_j \rangle$, $j = 1, \ldots, M$. If $\Phi$ denotes the $M \times N$ matrix with $\phi_j$ as row vectors, then the measurements $y = [y_1, \ldots, y_M]$ are given by:

$$y = \Phi f = \Phi \Psi x = \Theta x$$

using Equation (1) with $\Theta = \Phi\Psi$. These measurements are non-adaptive if the measurement matrix, $\Phi$, is fixed and does not depend on the structure of the signal [13]. The minimum number of measurements needed to reconstruct the original signal depends on the matrices, $\Phi$ and $\Psi$.
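The measurement model above can be illustrated with a minimal NumPy sketch. The sizes `N`, `M` and `S`, the orthonormal DCT basis and the scaled Gaussian sensing matrix are assumptions chosen for illustration, not settings taken from this paper.

```python
import numpy as np

def dct_basis(n):
    """Return an n x n orthonormal basis Psi whose columns are DCT-II vectors."""
    k = np.arange(n)
    # Row index = frequency, column index = sample position.
    C = np.sqrt(2.0 / n) * np.cos(np.pi * (2 * k[None, :] + 1) * k[:, None] / (2 * n))
    C[0, :] /= np.sqrt(2.0)          # scale the DC row so that C is orthonormal
    return C.T                       # columns of Psi are the basis vectors psi_i

rng = np.random.default_rng(0)
N, M, S = 64, 24, 4                  # illustrative sizes with S << N and M < N

# Build an S-sparse coefficient vector x.
x = np.zeros(N)
support = rng.choice(N, size=S, replace=False)
x[support] = rng.standard_normal(S)

Psi = dct_basis(N)
f = Psi @ x                          # signal synthesised from its coefficients
Phi = rng.standard_normal((M, N)) / np.sqrt(M)   # fixed, signal-independent sensing matrix
Theta = Phi @ Psi
y = Phi @ f                          # M non-adaptive measurements
```

Because `Phi` is drawn once and never looks at `f`, the measurements are non-adaptive in the sense used above, and `y` equals `Theta @ x` up to floating-point error.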

The reconstruction problem involves using $y$ to reconstruct the length-$N$ signal, $x$, that is $S$-sparse, given $\Phi$ and $\Psi$. Since $M < N$, this is an ill-conditioned problem. The conventional approach to solving ill-conditioned problems of this kind is to minimize the $\ell_2$ norm. In this case, the optimization problem is given by:

$$\hat{x} = \arg\min_{x} \|x\|_2 \quad \text{subject to} \quad y = \Theta x$$

However, it has been proven that this $\ell_2$ minimization can only produce a non-sparse $\hat{x}$ [13]. The reason is that the $\ell_2$ norm measures the energy of the signal, and signal sparsity properties cannot be incorporated in this measure. The $\ell_0$ norm counts the number of non-zero entries and, therefore, allows us to specify the sparsity requirement. The optimization problem using this norm can be stated as:

$$\hat{x} = \arg\min_{x} \|x\|_0 \quad \text{subject to} \quad y = \Theta x$$

There is a high probability of obtaining a solution using only $M = S + 1$ i.i.d. Gaussian measurements [14]. However, the solution produced is numerically unstable [13]. It turns out that optimization based on the $\ell_1$ norm is able to exactly recover $S$-sparse signals with a high probability using only $M \geq cS\log(N/S)$ i.i.d. Gaussian measurements [5,6]. The convex optimization problem is given by:

$$\hat{x} = \arg\min_{x} \|x\|_1 \quad \text{subject to} \quad y = \Theta x$$

which can be reduced to a linear program. Algorithms based on basis pursuit (BP) [15] can be used to solve this problem with a computational complexity of $O(N^3)$ [5]. With noisy measurements, BP becomes a quadratically constrained $\ell_1$-minimization problem:

$$\hat{x} = \arg\min_{x} \|x\|_1 \quad \text{subject to} \quad \|y - \Theta x\|_2 \leq \epsilon$$

where $y$ is the vector of noisy CS measurements with noise $\epsilon$. This is the preferred CS reconstruction formulation, as the estimate of noise $\epsilon$ may be known or can be computed. A second approach to solving the CS reconstruction problem is to formulate the BP problem as a second order cone program:

$$\hat{x} = \arg\min_{x} \tfrac{1}{2}\|y - \Theta x\|_2^2 + \lambda \|x\|_1$$

This formulation is also known as basis pursuit denoising (BPDN) [16]. It is tractable due to its bounded convex optimization nature. The term $\lambda\|x\|_1$, which is also known as regularization, can be interpreted as a maximum a posteriori estimate in a Bayesian setting. BPDN is very popular in signal and image processing applications. The third approach is known as the least absolute shrinkage and selection operator (Lasso) [17]. It involves the minimization of an $\ell_2$ norm subject to $\ell_1$ norm constraints:

$$\hat{x} = \arg\min_{x} \|y - \Theta x\|_2^2 \quad \text{subject to} \quad \|x\|_1 \leq \tau$$

A number of different algorithms have been developed to solve these three CS reconstruction problems. They include linear programming (LP) techniques [5,6], greedy algorithms [18–21], gradient-based algorithms [22,23] and iterative shrinkage algorithms [24,25].
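To make the contrast between $\ell_2$ and $\ell_1$ reconstruction concrete, the following sketch compares the minimum-energy solution (computed with a pseudo-inverse) with a basic iterative shrinkage-thresholding (ISTA) solver for the BPDN objective. It is only an illustration: the problem sizes, the regularization weight `lam`, the iteration count and the Gaussian `Theta` are assumptions, and ISTA is used here as the simplest member of the iterative shrinkage family rather than as the solver used in this paper.

```python
import numpy as np

def soft_threshold(v, t):
    """Element-wise shrinkage operator associated with the l1 norm."""
    return np.sign(v) * np.maximum(np.abs(v) - t, 0.0)

def ista(Theta, y, lam, n_iter=5000):
    """Minimise 0.5 * ||y - Theta x||_2^2 + lam * ||x||_1 by iterative shrinkage."""
    step = 1.0 / np.linalg.norm(Theta, 2) ** 2   # 1 / Lipschitz constant of the gradient
    x = np.zeros(Theta.shape[1])
    for _ in range(n_iter):
        grad = Theta.T @ (Theta @ x - y)
        x = soft_threshold(x - step * grad, step * lam)
    return x

rng = np.random.default_rng(1)
N, M, S = 128, 48, 5                              # illustrative sizes
x_true = np.zeros(N)
idx = rng.choice(N, size=S, replace=False)
x_true[idx] = rng.choice([-1.0, 1.0], size=S) * (1.0 + rng.random(S))
Theta = rng.standard_normal((M, N)) / np.sqrt(M)
y = Theta @ x_true                                # noiseless measurements

x_l2 = np.linalg.pinv(Theta) @ y                  # minimum l2-norm solution of y = Theta x
x_l1 = ista(Theta, y, lam=0.05)                   # sparse estimate from the l1-regularised problem

dense_count = int(np.sum(np.abs(x_l2) > 1e-6))    # number of non-negligible entries in x_l2
rel_err_l2 = np.linalg.norm(x_l2 - x_true) / np.linalg.norm(x_true)
rel_err_l1 = np.linalg.norm(x_l1 - x_true) / np.linalg.norm(x_true)
```

The minimum-energy solution satisfies the measurements but spreads energy over almost every coefficient, whereas the shrinkage step drives most coefficients to zero. A smaller `lam` reduces the bias of the $\ell_1$ estimate at the cost of slower convergence.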

3. Review of Compressed Sensing-Based Image Coding Techniques

The first application of CS to image coding acquires CS measurements for the whole image [26]. The transform matrix is based on the wavelet transform, and a random Fourier matrix is used for sensing. The measurements are obtained randomly without considering the image structure or the magnitude of its transform coefficients. It was found that, compared to an ideal $M$-term wavelet approximation, the quality of the reconstructed images is similar if $3M$ to $5M$ CS measurements are used. A similar approach is taken by [21,27] to show the effectiveness of their reconstruction algorithms. The main disadvantage of this approach is that the computational and memory requirements at the encoder are relatively high, since the whole image is encoded. These requirements can be reduced by using block-based image coding. A block-based image coding method based on CS was first proposed in [7]. The image is divided into non-overlapping blocks, and each image block is sampled independently using an appropriate sensing matrix. The image is reconstructed using a variant of the projected Landweber (PL) reconstruction using hard thresholding and iterative Wiener filtering. This framework was later extended by [8] using directional transforms. Neither of these schemes considers the fact that different blocks may have different compressibility, and every image block is encoded with the same measurement rate. In general, the sparsity of individual blocks is different. Smoother image blocks have a higher degree of sparsity, while texture and edges have a low level of sparsity.

The approach in [9] utilizes the coefficient structure of the transform. A 2D DCT matrix is used as the sparsifying transform, and the sensing matrix is random Gaussian. A weighting matrix is applied to the sensing matrix to provide different emphasis on different coefficients. This matrix is derived from the JPEG quantization table by taking the inverse of the table entries and adjusting their amplitudes. A different weighting matrix is used for each block, which complicates the scheme significantly. Although the authors have suggested that the weighting matrix for other blocks can be obtained by sub-sampling or interpolating an initial weighting matrix, the implications are not discussed.

A different way to take advantage of the sparsity of different image blocks is proposed in [10]. The transform coefficients are first randomly permuted before CS measurements are acquired. In [28], the image is pre-randomized by random permutation. Then, a block-based sensing matrix, known as structurally random matrices (SRM), is applied to the randomized data. Finally, the CS measurements are obtained by randomly down-sampling the data. The SRM is later modified to become the scrambled block Hadamard ensemble (SBHE) in [29]. This method can be generally applied to different sparse signals.
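The three steps described above (pre-randomization, a block-based transform and random down-sampling) can be sketched as follows. This is a minimal illustration in the spirit of the scrambled block Hadamard ensemble; the function names, the Hadamard block size and the use of a single seeded generator are assumptions, and the exact constructions in [28,29] may differ in detail.

```python
import numpy as np

def hadamard(n):
    """Sylvester construction of an n x n Hadamard matrix (n must be a power of two)."""
    H = np.array([[1.0]])
    while H.shape[0] < n:
        H = np.block([[H, H], [H, -H]])
    return H

def sbhe_measure(x, m, block=8, seed=0):
    """Scramble x, apply a block-diagonal Hadamard transform and keep m random outputs."""
    rng = np.random.default_rng(seed)
    n = x.size
    if n % block != 0:
        raise ValueError("signal length must be a multiple of the Hadamard block size")
    perm = rng.permutation(n)                     # step 1: random permutation of the samples
    Hb = hadamard(block) / np.sqrt(block)         # orthonormal Hadamard block
    # step 2: multiply each length-`block` segment by Hb (block-diagonal transform)
    t = (x[perm].reshape(-1, block) @ Hb.T).ravel()
    keep = np.sort(rng.choice(n, size=m, replace=False))   # step 3: random down-sampling
    return t[keep], perm, keep
```

Only the seed needs to be shared with the decoder to regenerate `perm` and `keep`, and the Hadamard blocks contain only ±1 entries (up to scaling), which is what makes this family of sensing operators cheap to apply.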

The wavelet transform can also be used as a sparsifying transform for CS encoding. The benefit is that CS sampling can be adapted to the structure of the wavelet decomposition. This technique is generally called multi-scale CS encoding. It was first proposed in [30], where CS encoding was applied to measure the fine-scale properties of the signal. A similar approach is adopted in [31], where an image is separated into dense and sparse components. The dense component is encoded by linear measurements, while the sparse component is encoded by CS. Another multi-scale CS image coding scheme is presented in [32]. Block-based CS is deployed independently within each sub-band of each decomposition level of the wavelet transform of the image. If the wavelet transform produces $L$ levels of wavelet decomposition, each sub-band, $s$, at level $L$ is divided into $B_L \times B_L$ blocks and sampled with a sensing matrix. All the measurements of the baseband are retained. Other levels are sampled at different rates.

A multi-scale CS encoding technique that is based on the Bayesian perspective was proposed in [33]. The CS reconstruction problem is formulated as a linear regression problem with a constraint (prior) that the underlying signal is sparse. In [34], a hidden Markov tree is employed, explicitly imposing the belief that if a given coefficient is negligible, then its children coefficients are likely to be so. Other related Bayesian approaches in a multi-scale framework can be found in [35,36].

Distributed coding has the potential to provide the best compression rates. In [37], Wyner–Ziv (WZ) coding for distributed image compression based on CS is proposed. First, random projections of the image are obtained by using a scrambled fast Fourier transform. Then, a nested scalar quantization is applied to each measurement. The quantizer consists of a coarse coset channel coder nested in a fine uniform scalar quantizer. A JPEG decoded image is used at the decoder, which is also randomly measured using CS, and serves as the side information to recover the image. Another distributed approach proposed in [38] first creates two down-sampled images, $X_1$ and $X_2$, from the original image, $X$. $X_1$ is encoded by H.264 intra-frame coding, and $X_2$ is encoded using CS. At the decoder, the H.264 encoded $X_1$ is decoded using intra-frame decoding to obtain a reconstruction of $X_1$. The CS encoded image, $X_2$, is reconstructed with the help of side information generated from an interpolation of this reconstruction. Unfortunately, the need for JPEG and H.264 encoding makes these schemes impractical.

None of the above distributed approaches makes use of the fact that individual image blocks have different sparsity. One exception can be found in [12], where the image blocks are classified either as flat or non-flat. Flat blocks are ones with low frequency contents that can be reconstructed using a lower sampling rate. Non-flat blocks contain texture and edges and require a higher sampling rate for successful reconstruction. To recover the full image, individual blocks are reconstructed from their CS measurements using the OMP algorithm [19]. Blocking artefacts between flat and non-flat blocks are reduced by mean filtering. After mean filtering, the adjacent columns and rows are added to each block, and total variation (TV) minimization is performed. As a result, the computational complexity at the decoder is quite high. Furthermore, in a real CS image acquisition system, the original image data are not available, and so, this method is not applicable.

A somewhat related approach was recently proposed in [11]. An image block is classified as either compressible or incompressible using the standard deviation (SD) of its CS measurements. The blocks with a larger SD are classified as incompressible, and the ones with a lower SD as compressible. After adaptively assigning the measurement rate to each image block, further CS is performed using deterministic and random sensing. Principal component analysis is used for deterministic sensing, and the sensing matrix is trained off-line on a sample set. Three CS sensing matrices form the full sensing matrix for an image block. This approach is better than that in [12], since it uses the CS measurements for computing the classification parameter rather than the original image data. The disadvantage is the need to pre-sense each image block. Furthermore, training is required for deterministic sensing, which limits the practical usage of this scheme.

For effective and simple CS-based image coding, it is desirable to use a block-based strategy that exploits the statistical structure of the image data. In general, the distributed approach, which applies different measurement rates for different blocks, provides much better results. However, it is not easy to estimate the sparsity or compressibility of an image block without applying a sparsifying transform. A new distributed CS image coding method is presented in this article to overcome some of these problems.

4. The Proposed Distributed Image Codec

The distributed image coding and decoding scheme proposed in this paper is a block-based one that makes use of the correlation between neighbouring blocks. In block-based CS, an image, $X$, with dimension $N \times N$ pixels is divided into non-overlapping blocks of $B \times B$ pixels. Let $x_i$ represent the $i$-th block; then, its $M_r$ CS measurements are given by:

$$y_i = \Phi_B x_i$$

where $\Phi_B$ is an $M_r \times B^2$ measurement matrix. The measurement rate per block is given by $M_b = M_r/B^2$. Since the image data of neighbouring blocks are correlated, we postulate that their CS measurements will also be correlated. This correlation is empirically demonstrated in Section 4.1 for a variety of images.
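A minimal sketch of block-based CS acquisition is given below. The helper names `image_to_blocks` and `measure_blocks`, the raster-order vectorisation of each block and the scaled Gaussian $\Phi_B$ are assumptions made for illustration; any sensing matrix of size $M_r \times B^2$ could be substituted.

```python
import numpy as np

def image_to_blocks(X, B):
    """Split an N x N image into non-overlapping B x B blocks (one vectorised block per column)."""
    N = X.shape[0]
    if X.shape != (N, N) or N % B != 0:
        raise ValueError("expected a square image whose side is a multiple of B")
    # (block row, row in block, block col, col in block) -> (block row, block col, B, B)
    blocks = X.reshape(N // B, B, N // B, B).swapaxes(1, 2).reshape(-1, B * B)
    return blocks.T                                # shape (B*B, number of blocks)

def measure_blocks(X, B, rate, seed=0):
    """Apply the same Mr x B^2 sensing matrix to every block; rate plays the role of Mr / B^2."""
    rng = np.random.default_rng(seed)
    Mr = int(round(rate * B * B))
    Phi_B = rng.standard_normal((Mr, B * B)) / np.sqrt(Mr)
    return Phi_B @ image_to_blocks(X, B), Phi_B    # column i holds y_i = Phi_B x_i
```

For a 512 × 512 image with `B = 8` and `rate = 0.5`, this would yield a 32 × 4096 array of measurements, one column per block, in raster order of the blocks.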

In our coding scheme, image blocks are classified either as key blocks or non-key (WZ) blocks. Key blocks are encoded with a higher measurement rate than non-key blocks, similar to the idea of key frames and non-key frames in video coding. Two block classification strategies, one adaptive and the other non-adaptive, can be adopted. Details of the encoding scheme will be discussed in Section 4.2, while that of the decoding scheme can be found in Section 4.3. While each key block is reconstructed from its own measurements, the decoding of WZ blocks requires the help of side information, due to a reduced sampling rate. Here, we propose using the CS measurements of key blocks as the side information. This process is discussed in more detail in Section 4.3.2.

4.1. Block Correlation Analysis

Consider an image divided into non-overlapping rectangular blocks of pixels. In general, there are correlations between neighbouring image blocks. If the block size is small, this correlation is high. If the image block data are correlated, we postulate that their CS measurements are also correlated. In this section, this correlation will be demonstrated empirically using four standard 512 × 512 pixel images: “Lena”, “Cameraman”, “Goldhill” and “Boat”. The CS measurement rate for each block is fixed at 50%. Image block similarity is measured through the correlation coefficient and the mean squared error (MSE).

Using a block size of 8 × 8 pixels, we found that the largest correlation coefficients of the CS measurements among image blocks are generally very high (>0.9). This means that we can make use of this correlation for distributed coding of CS measurements. Furthermore, the MSE values of the image data are reflected in those of the CS measurements. Hence, we can use the MSE of the CS measurements as an indication of the MSE of the original image data. Thus, similar image blocks have low MSE values of CS measurements.
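The two similarity measures used in this analysis can be computed directly from the block measurements. The sketch below assumes a hypothetical array `Y` holding one CS measurement vector per column; it is not the analysis code used to produce the reported figures.

```python
import numpy as np

def block_similarity(Y):
    """Return pairwise correlation coefficients and MSEs between the columns of Y."""
    corr = np.corrcoef(Y.T)                        # Pearson correlation between measurement vectors
    sq = np.sum(Y ** 2, axis=0)
    # ||a - b||^2 = ||a||^2 + ||b||^2 - 2 a.b, averaged over the measurements
    mse = (sq[:, None] + sq[None, :] - 2 * Y.T @ Y) / Y.shape[0]
    return corr, mse

def best_match(corr):
    """For each block, return the index and value of its largest correlation with another block."""
    c = corr.copy()
    np.fill_diagonal(c, -np.inf)                   # exclude each block's correlation with itself
    return c.argmax(axis=1), c.max(axis=1)
```

Checking what fraction of the values returned by `best_match` exceeds 0.9 gives a quick view of how many blocks have a strongly correlated partner somewhere in the image.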

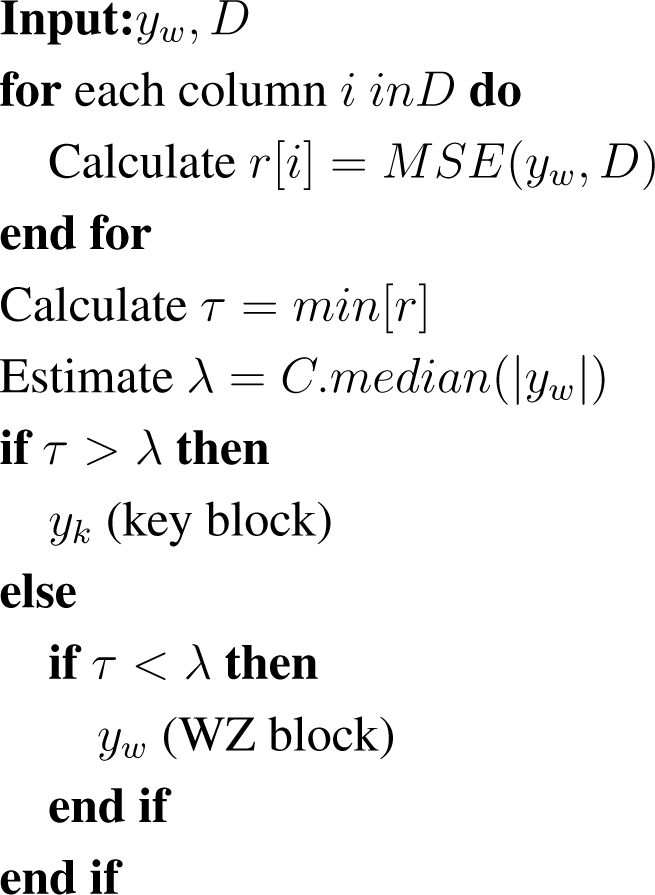

To evaluate the effect of block size on image block similarity, the percentage of correlated blocks exceeding an MSE threshold is computed for block sizes of 64 × 64, 32 × 32, 16 × 16 and 8 × 8. Figure 1 shows the results for the four images. As expected, there are more correlated blocks for smaller block sizes. Blocks with a size of 64 × 64 can virtually be considered independent. The percentage of blocks that can be considered correlated depends on the MSE threshold. Thus, a smaller block size with an appropriate MSE threshold would be desirable for our codec.

4.2. The Encoder

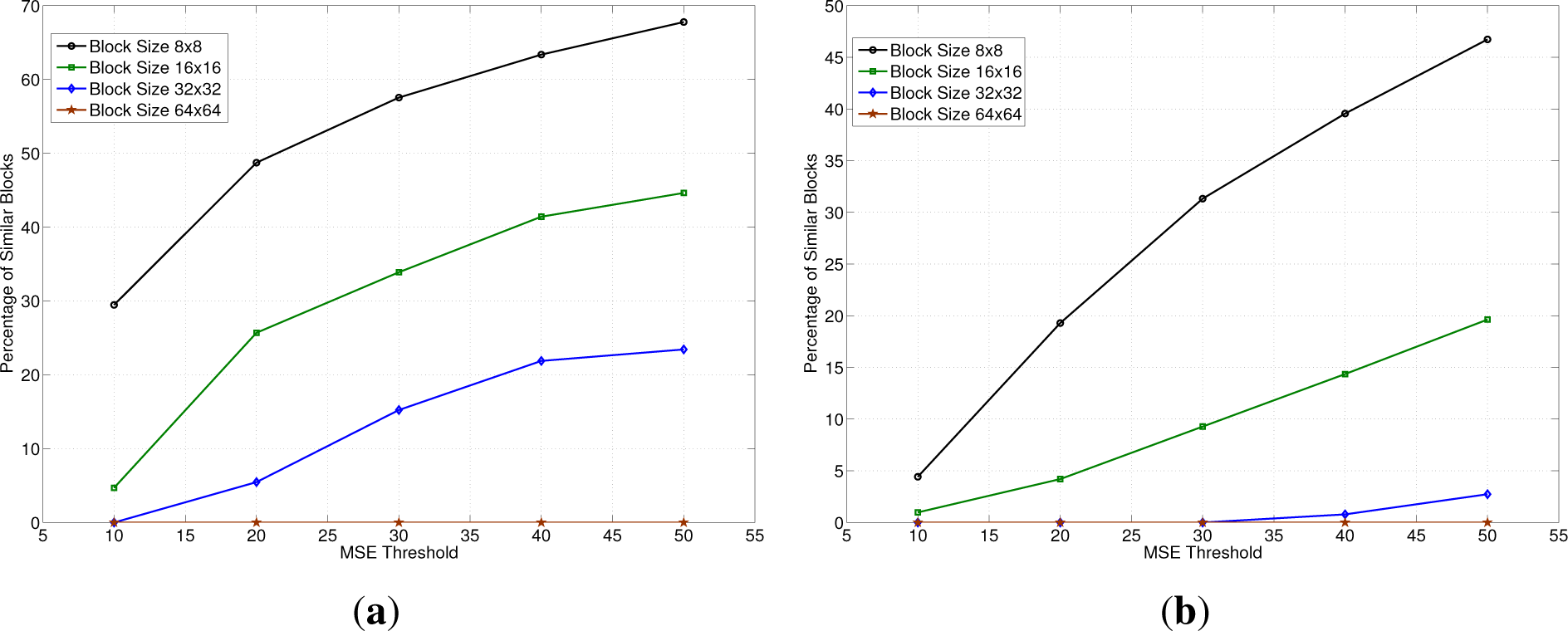

A block diagram of the proposed encoder is shown in Figure 2a. At the encoder, an image with a dimension of $N \times N$ pixels is divided into $B$ non-overlapping blocks of $N_B \times N_B$ pixels. Let $x_i$ represent the $i$-th block with CS measurements $y_i = \Phi_B x_i$. Each image block is classified either as a key block or a non-key (WZ) block. They are encoded using CS measurement rates of $M_k$ and $M_w$, respectively, where $M_k \gg M_w$. The CS measurements are then quantized using the quantization scheme proposed in our earlier work [39].

The way by which the image blocks are classified affects the compression ratio, as well as the quality of the reconstructed image. The number of CS measurements required to reconstruct a signal is dependent on the sparsity of the signal. If a block has high sparsity, then it can be reconstructed with fewer measurements compared with a block with low sparsity. If a low sparsity block is encoded with a low measurement rate, the reconstruction quality will be poor. Determining the sparsity of an image block is a challenging problem. Two different block classification schemes, one non-adaptive and the other adaptive, are proposed here.

4.2.1. Non-Adaptive Block Classification

The non-adaptive block classification scheme is designed for very low complexity encoding with minimum computation. In this scheme, the blocks are classified sequentially according to the order in which they are acquired. A group of M consecutive blocks is referred to as a group of blocks (GOB). The first block in a GOB is a key block. The remaining M − 1 blocks are WZ blocks. Block sparsity is not considered in this scheme. It is similar to the concept of a group of pictures (GOP) used in traditional video coding. If M = 1, then every block is a key block and is encoded at the same rate. The average measurement rate decreases as M increases.
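The GOB rule and its effect on the average measurement rate can be sketched in a few lines. The function names and the example rates are illustrative assumptions; `gob_size` corresponds to M in the text.

```python
def classify_gob(num_blocks, gob_size):
    """Label blocks in acquisition order: the first block of every GOB is a key block."""
    return ["key" if i % gob_size == 0 else "wz" for i in range(num_blocks)]

def average_rate(labels, key_rate, wz_rate):
    """Average measurement rate over all blocks, given the per-class rates."""
    n_key = labels.count("key")
    n_wz = len(labels) - n_key
    return (n_key * key_rate + n_wz * wz_rate) / len(labels)
```

For example, with `gob_size = 3`, a key rate of 0.6 and a WZ rate of 0.3, one block in three is sensed at the higher rate and the average rate is 0.4. Increasing `gob_size` moves the average towards the WZ rate.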

4.2.2. Adaptive Block Classification

The adaptive block classification scheme can be deployed for encoders with more computing resources. This scheme requires the creation of a dictionary of CS measurements of key blocks. This dictionary is initially empty. The first block of the image is always a key block. The CS measurements of this block become the first entry of the dictionary. For each subsequent image block, the MSE between its CS measurements and the entries in the dictionary is computed. If any of these MSEs computed falls below a threshold, λ, then this block is classified as a WZ block and will be encoded at the lower rate. Otherwise, it is a key block, and its measurements are added to the dictionary as a new entry. In order to reduce the resources required, the size of the dictionary can be limited. When the dictionary is full and a new entry is needed, the oldest entry will be discarded.
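The dictionary-based procedure described above can be sketched as follows. This is an illustration under stated assumptions, not a reproduction of the paper's algorithm: the function name, the `max_entries` default and the requirement that all measurement vectors being compared have the same length are hypothetical, and the threshold is passed in as a callable because its exact form is not fixed by this sketch.

```python
from collections import deque
import numpy as np

def classify_adaptive(Y, threshold, max_entries=32):
    """Classify blocks as 'key' or 'wz' from their CS measurements.

    Y         : array with one measurement vector per column, in acquisition order.
    threshold : callable returning the MSE threshold for a given measurement vector
                (its form is an assumption of this sketch).
    """
    dictionary = deque(maxlen=max_entries)         # the oldest entry is discarded when full
    labels = []
    for y in Y.T:
        lam = threshold(y)
        # WZ block if any stored key-block measurement vector is close enough in MSE.
        if any(np.mean((y - d) ** 2) < lam for d in dictionary):
            labels.append("wz")
        else:
            labels.append("key")                   # includes the first block (empty dictionary)
            dictionary.append(y.copy())
    return labels
```

A bounded `deque` gives the first-in, first-out replacement policy for free. The trade-off is that a block similar to a long-discarded key block will be treated as a new key block.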

The threshold, $\lambda$, is determined adaptively as follows. For each image block, $i$, the threshold is obtained by:

where $y_i$ are the CS measurements of block $i$ and $C$ is a constant. The value of $C$ controls the relative number of key blocks and WZ blocks. If $C > 1$, the number of WZ blocks will be increased. Choosing $C < 1$ will increase the number of key blocks. This method reduces the encoding delay compared with SD-based methods described in Section 3. The entire encoding process is summarized in Algorithm 1.

Algorithm 1. Adaptive CS encoding.


4.3. The Decoder

The decoder has to reconstruct the full image from the CS measurements of the individual image blocks. A block diagram of the proposed decoder is shown in Figure 2b. The key issue that affects the quality of the reconstructed image is the CS reconstruction strategy. This will be discussed in Section 4.3.1. The theory of distributed coding tells us that side information is needed to reconstruct these blocks effectively. So, for a distributed codec, another issue to be addressed is the generation of appropriate side information. This will be addressed in Section 4.3.2.

4.3.1. Reconstruction Approach

There are three approaches by which the full image can be recovered from the block-based CS measurements. The first one is to reconstruct each block from its CS measurements independently of the other blocks, using the corresponding sensing and block sparsifying matrices. This is the independent block recovery approach. The second way is to place a block sparsifying transform into a block diagonal sparsifying matrix and then use the block diagonal sparsifying transform to reconstruct the full image [40]. In this approach, instead of reconstructing independent blocks, CS measurements of all the blocks are used to reconstruct the full image. The reconstruction performances of these two approaches are almost the same [40]. Alternatively, all image blocks can be reconstructed jointly using a full sparsifying matrix [40]. This approach is known as joint recovery. Instead of using the block sparsifying matrix or the block diagonal sparsifying matrix, a full sparsifying transform is applied to the CS measurements of all sampled blocks combined to reconstruct the full image. This is better than independent and diagonal reconstruction, as a full transform promotes a sparser representation and blocking artefacts can be avoided as well. Generally speaking, the difference in the peak signal-to-noise ratio (PSNR) between independent and joint recovery is not significant. However, blocking artefacts can be an issue for independently recovered images, as shown in Figure 3.

Based on this knowledge, the strategy adopted for our decoder is as follows. Each individual image block is recovered as it becomes available at the decoder. This is used as an initial solution. Once all the blocks are available at the decoder, a joint reconstruction is performed to provide a visually better result.

5. Experimental Results

Twelve standard test images [41] are used to evaluate the effectiveness of the proposed distributed CS image codec. They are shown in Figure 4. These images are 512 × 512 pixels in size and contain different content and textures. The quality of the reconstructed images is evaluated by the peak signal-to-noise ratio (PSNR) and the structural similarity index (SSIM). The compression efficiency of the codec is evaluated by bits per pixel (bpp). The average measurement rate is the average over both the key and WZ blocks.
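For reference, PSNR and bits per pixel can be computed as in the sketch below. The function names and the 8-bit peak value of 255 are assumptions for illustration; SSIM is omitted because it needs a considerably longer implementation.

```python
import numpy as np

def psnr(reference, reconstructed, peak=255.0):
    """Peak signal-to-noise ratio in dB between two images of the same shape."""
    ref = np.asarray(reference, dtype=float)
    rec = np.asarray(reconstructed, dtype=float)
    mse = np.mean((ref - rec) ** 2)
    return float("inf") if mse == 0 else 10.0 * np.log10(peak ** 2 / mse)

def bits_per_pixel(total_bits, height, width):
    """Coded size of an image expressed in bits per pixel."""
    return total_bits / (height * width)
```

With this definition, identical images give an infinite PSNR, and a uniform error of one grey level on an 8-bit image gives roughly 48.13 dB.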

In Section 4.1, it has been shown that smaller block sizes yield a larger percentage of correlated blocks. Therefore, in these experiments, a block size of 8 × 8 pixels is used. A scrambled block Hadamard ensemble (SBHE) sensing matrix is used to obtain the CS measurements, and Daubechies 9/7 wavelets are used as the sparsifying matrix. The SpaRSA [24] algorithm is used as the reconstruction algorithm at the decoder. The proposed adaptive and non-adaptive approaches, with and without side information (SI) at the decoder, are compared with two other techniques. The first one is the encoding of image blocks with the same measurement rate, i.e., no distinction between the key and WZ blocks. It is labelled as “Fixed Rate” in the results. The second technique was first proposed in [12] and recently used to classify image blocks as compressible or incompressible [11]. It is labelled as the “SD approach” in the results. The idea is that if the SD of the CS measurements of an image block is higher than the SD of the full image, then the block is classified as incompressible, and more measurements are required for successful reconstruction. The authors of [12] used the original image data to compute the SD, which is not possible in real-world applications. For the adaptive block selection, $C = 1$ is used. All codecs are implemented in MATLAB R2012b, and the simulations are run on an Intel i5 3.6 GHz machine with 4 GB of RAM running 64-bit Windows 7 Enterprise Edition. For each measurement rate per image, the experiment is run five times, and the average is reported.

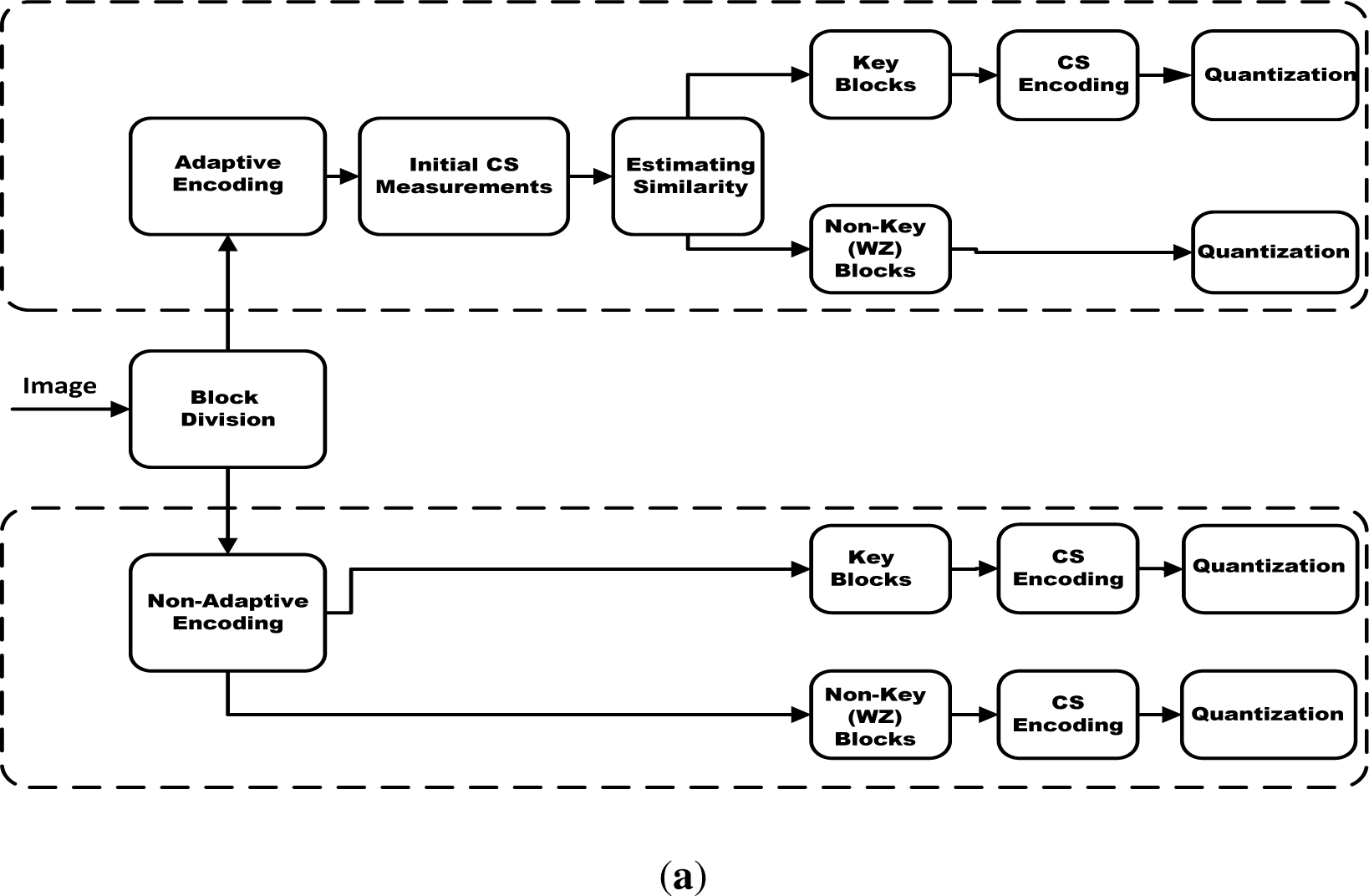

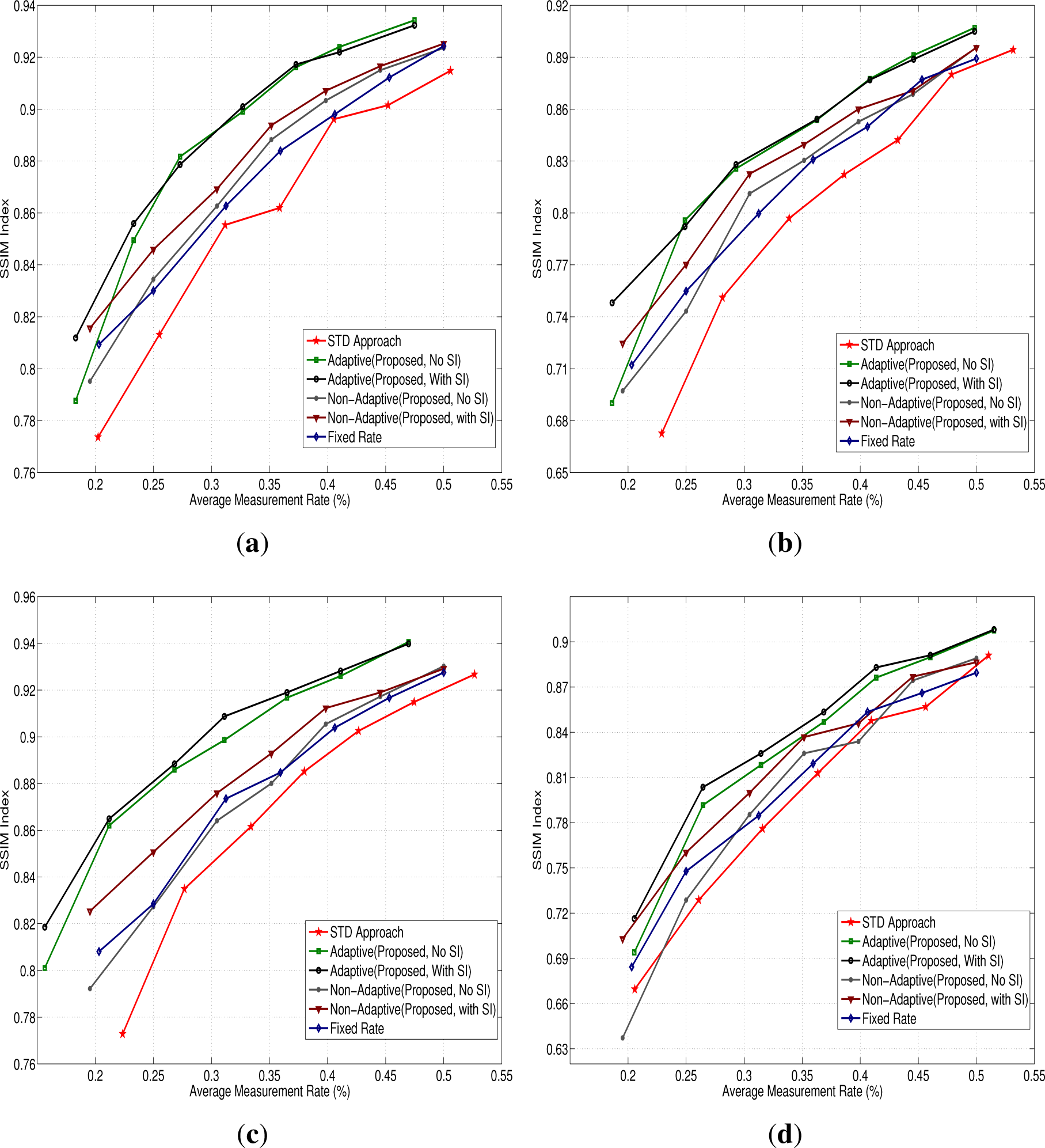

Figure 5 shows the R-D curves for four test images (“Lena”, “Boat”, “Cameraman” and “Couple”). The proposed adaptive sensing scheme with and without SI at the decoder outperforms all other algorithms for all test images. The non-adaptive scheme without SI is comparable with “Fixed Rate” and “SD Approach”. The non-adaptive scheme with SI improves the reconstruction quality and produces better results than “Fixed Rate” and “SD Approach”. The visual reconstruction quality evaluation for the above four images on the basis of the structural similarity index [42] is shown in Figure 6. The visual quality of the adaptive scheme and the non-adaptive scheme with SI is superior for all test images.

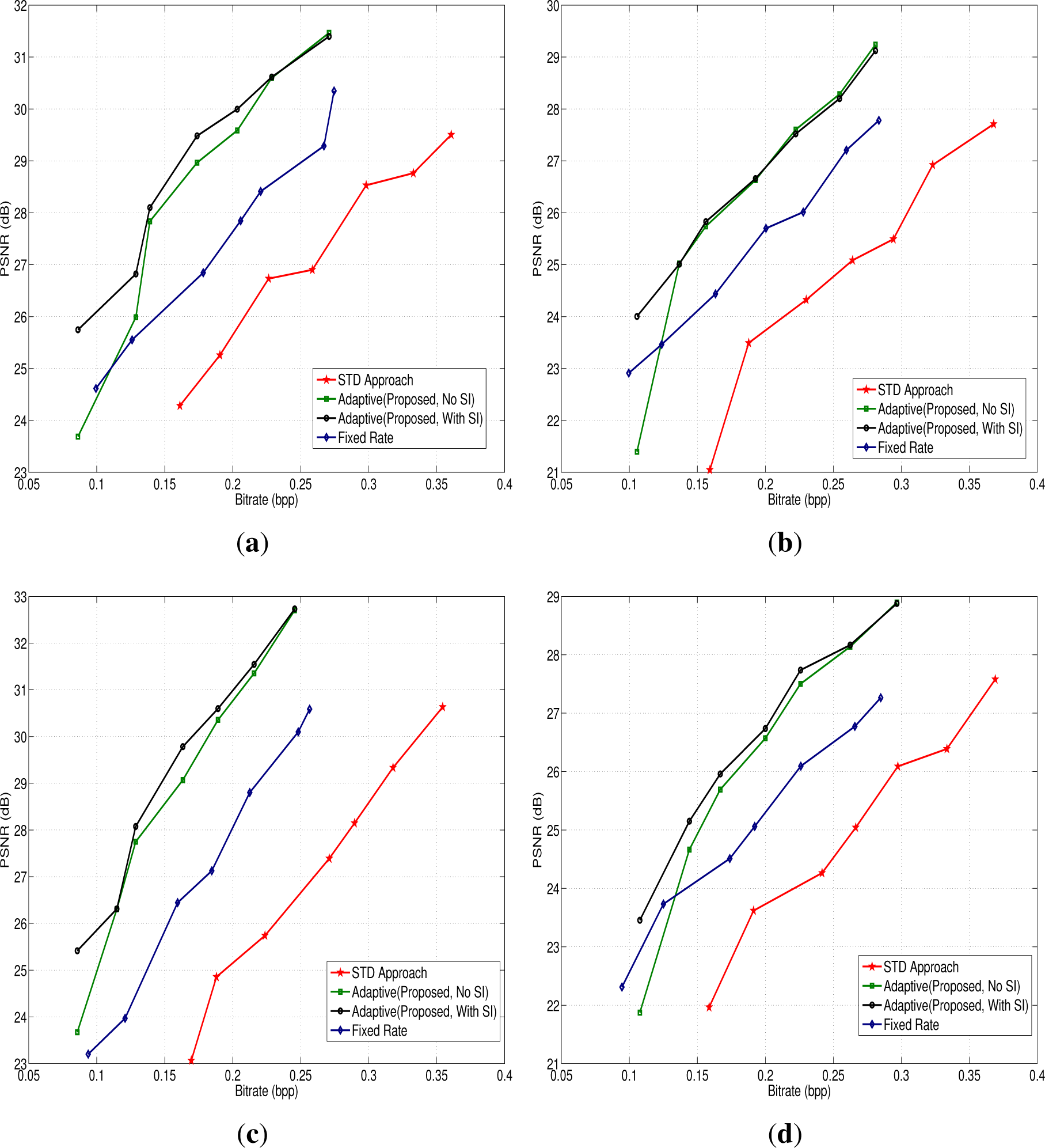

Table 1 shows the R-D performance of all twelve images averaged over all measurement rates. Again, the adaptive scheme outperforms all other schemes. The lowest average measurement rate (MR) is obtained for “Cameraman”, “Girl” and “Lena” with the adaptive scheme; the PSNR is more than 1.5 dB better than other schemes. However, for an image with less intra-image similarity, like “Mandrill”, the adaptive scheme requires a higher number of measurements than other approaches. Using the proposed SI generation method improves reconstruction performance for both adaptive and non-adaptive encoding.

To evaluate the compression efficiency of the proposed distributed image codec, the CS measurements are quantized using the quantization scheme proposed in [39]. Each CS measurement is allotted eight bits for quantization. The quantized CS measurements are then entropy coded using Huffman coding.
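The quantization and entropy coding steps can be approximated with the sketch below, which counts the number of bits a Huffman code would spend on a set of quantized measurements. The uniform scalar quantizer is an assumed stand-in, since the scheme of [39] is not described here, and all function names are hypothetical.

```python
import heapq
from collections import Counter
import numpy as np

def quantize_uniform(y, bits=8):
    """Uniform scalar quantiser over [min(y), max(y)] (an assumed stand-in for [39])."""
    levels = 2 ** bits
    lo, hi = float(np.min(y)), float(np.max(y))
    step = (hi - lo) / (levels - 1) if hi > lo else 1.0
    return np.round((y - lo) / step).astype(int), lo, step

def huffman_code_lengths(symbols):
    """Return {symbol: code length in bits} for a Huffman code built from symbol counts."""
    counts = Counter(symbols)
    if len(counts) == 1:                           # a single symbol still needs one bit
        return {next(iter(counts)): 1}
    # Heap items: (weight, unique tie-breaker, {symbol: depth in the code tree}).
    heap = [(w, i, {s: 0}) for i, (s, w) in enumerate(counts.items())]
    heapq.heapify(heap)
    tie = len(heap)
    while len(heap) > 1:
        w1, _, a = heapq.heappop(heap)
        w2, _, b = heapq.heappop(heap)
        merged = {s: d + 1 for s, d in {**a, **b}.items()}   # merged symbols move one level down
        heapq.heappush(heap, (w1 + w2, tie, merged))
        tie += 1
    return heap[0][2]

def coded_bits(indices):
    """Total number of bits needed to Huffman-code an array of quantiser indices."""
    symbols = np.asarray(indices).ravel().tolist()
    lengths = huffman_code_lengths(symbols)
    counts = Counter(symbols)
    return sum(counts[s] * lengths[s] for s in counts)
```

Dividing `coded_bits` by the number of image pixels gives a bits-per-pixel figure. The estimate ignores the cost of transmitting the code table and any block classification flags, so it is a lower bound on the rate of a complete bitstream.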

Figure 7 shows the PSNR performance of four test images at different bit rates. The compression efficiency of the adaptive scheme with SI is higher than the “Fixed Rate” and “SD” schemes at the same reconstruction quality. At lower average measurement rates, the “Fixed Rate” scheme performs better than the adaptive scheme with no SI for images “Boat” and “Couple”.