Object Detection Performance Evaluation for Autonomous Vehicles in Sandy Weather Environments

Abstract

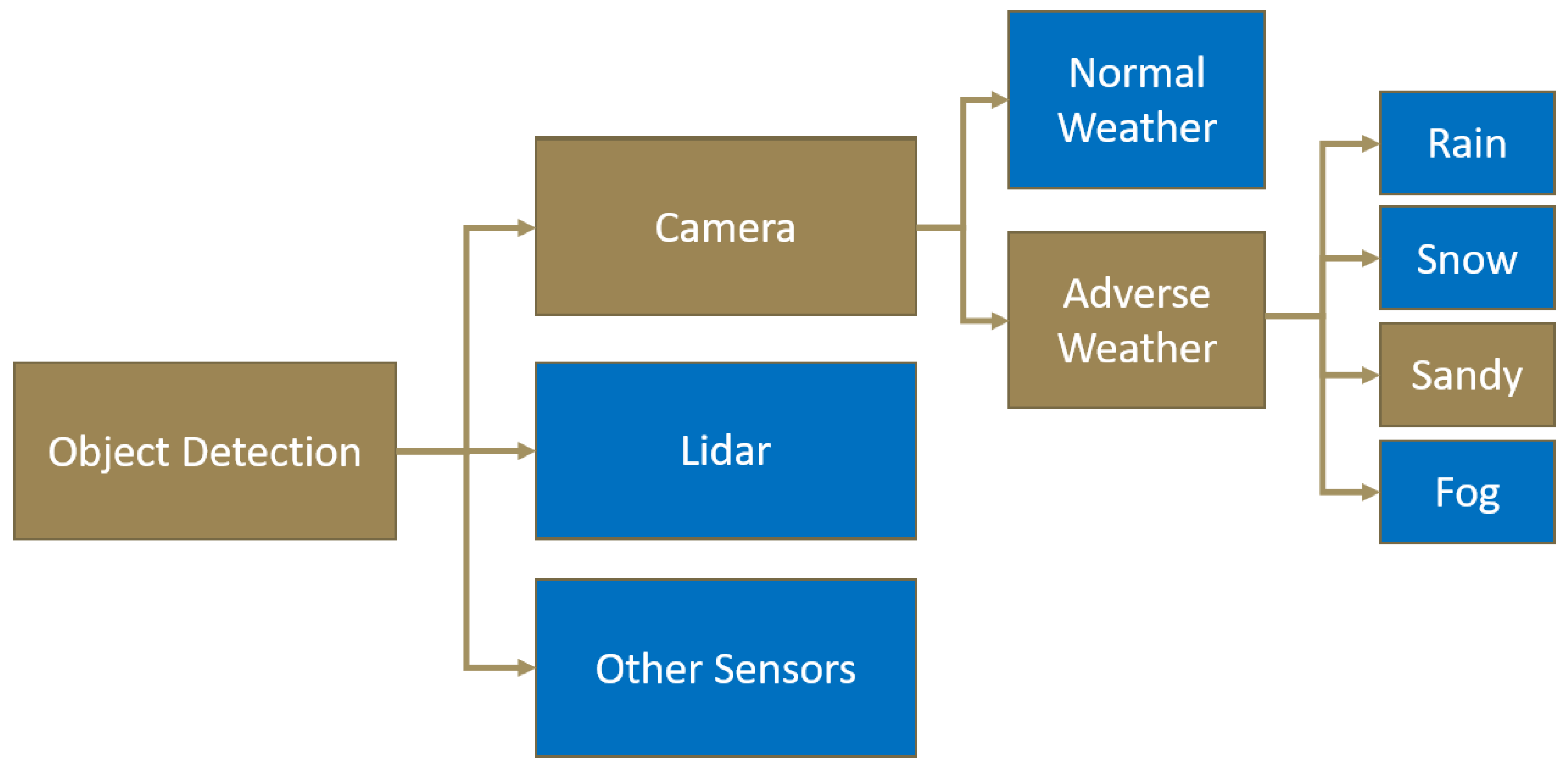

1. Introduction

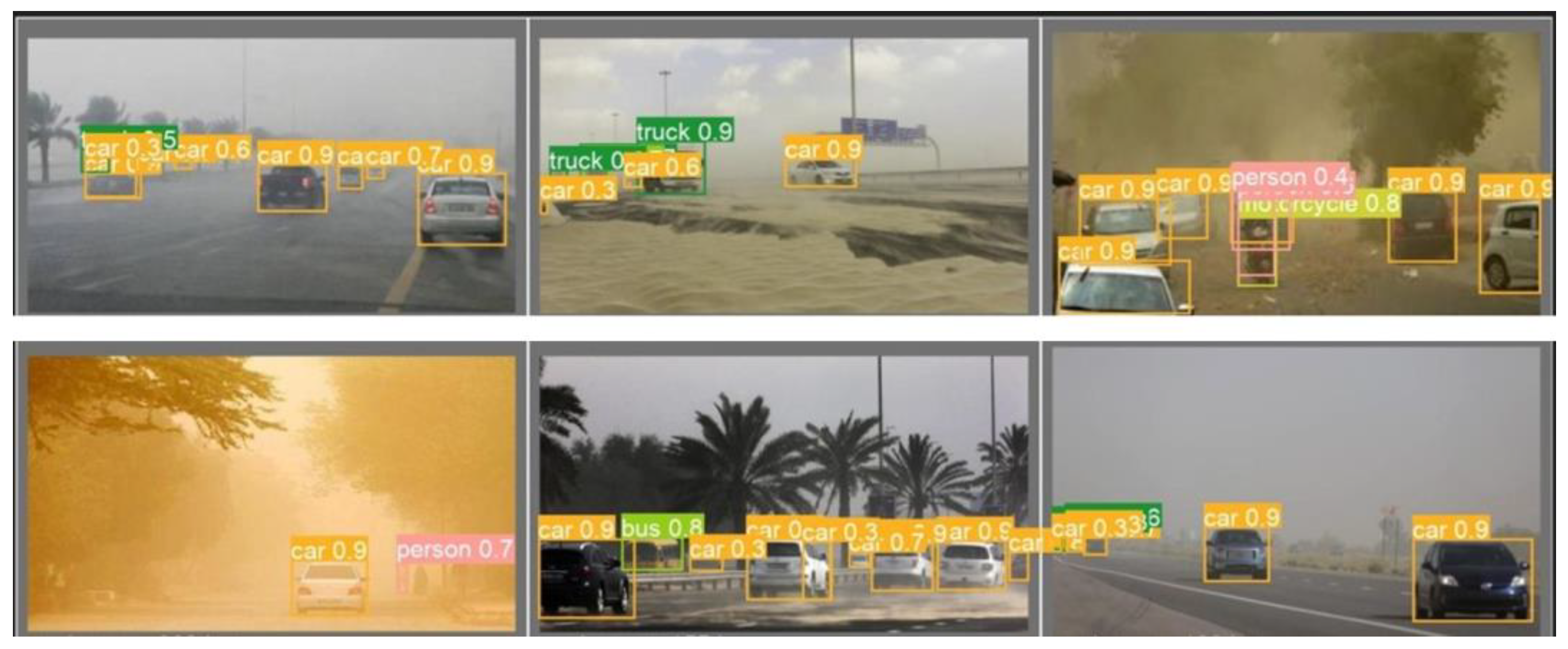

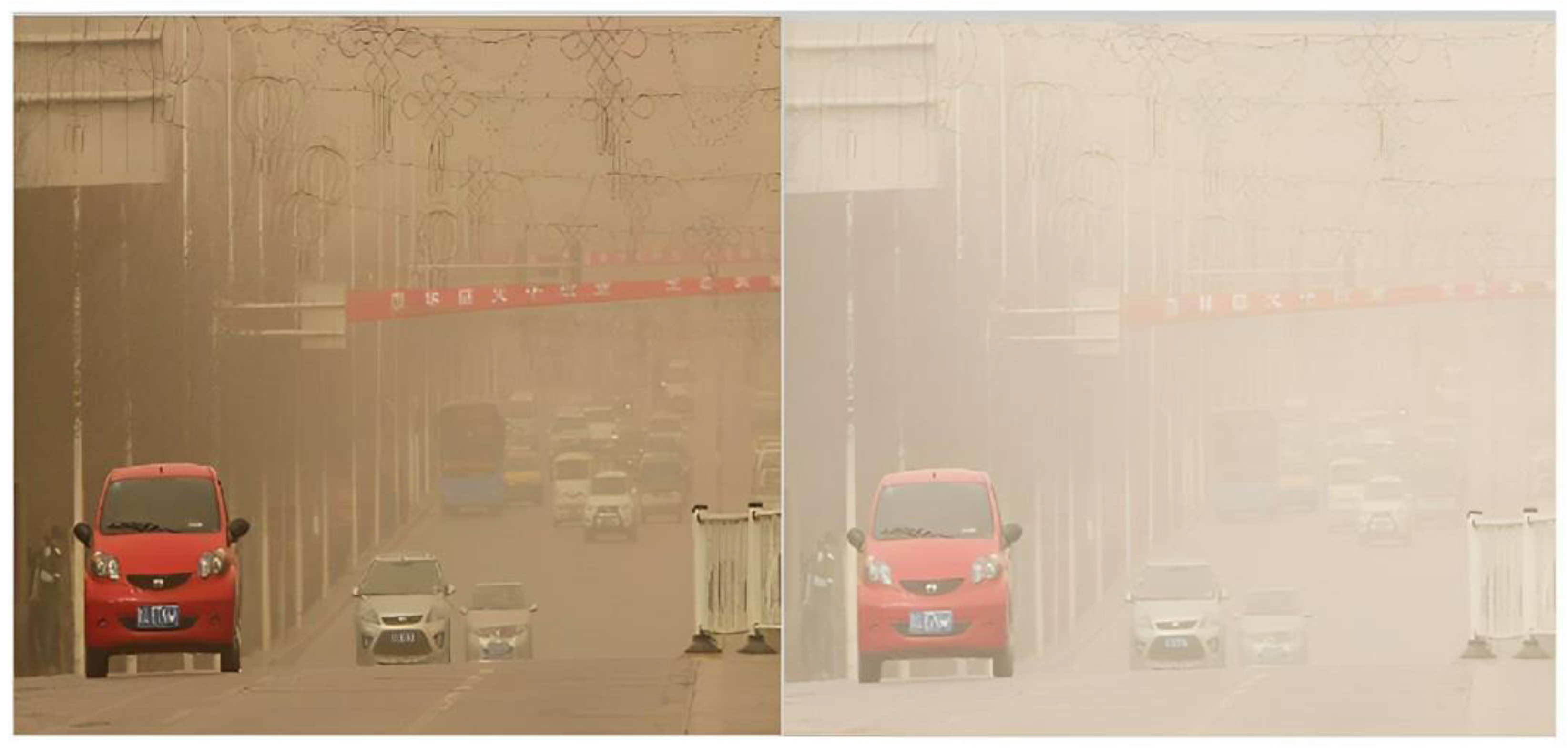

- As a common occurrence in deserts and coastal regions, dust and sand particles in the air can severely impair visibility and reduce the accuracy of object detection algorithms.

- Occlusion occurs when objects are partially or fully hidden by other objects, which makes it difficult for detection algorithms to determine object boundaries and characteristics correctly.

- Changes in lighting during storms can affect the performance of cameras and sensors used in object detection.

- Collisions with wildlife are a significant risk for vehicles on the road [12].

- Sandy weather datasets are scarce; most public datasets focus on other weather conditions (foggy, snowy, and rainy).

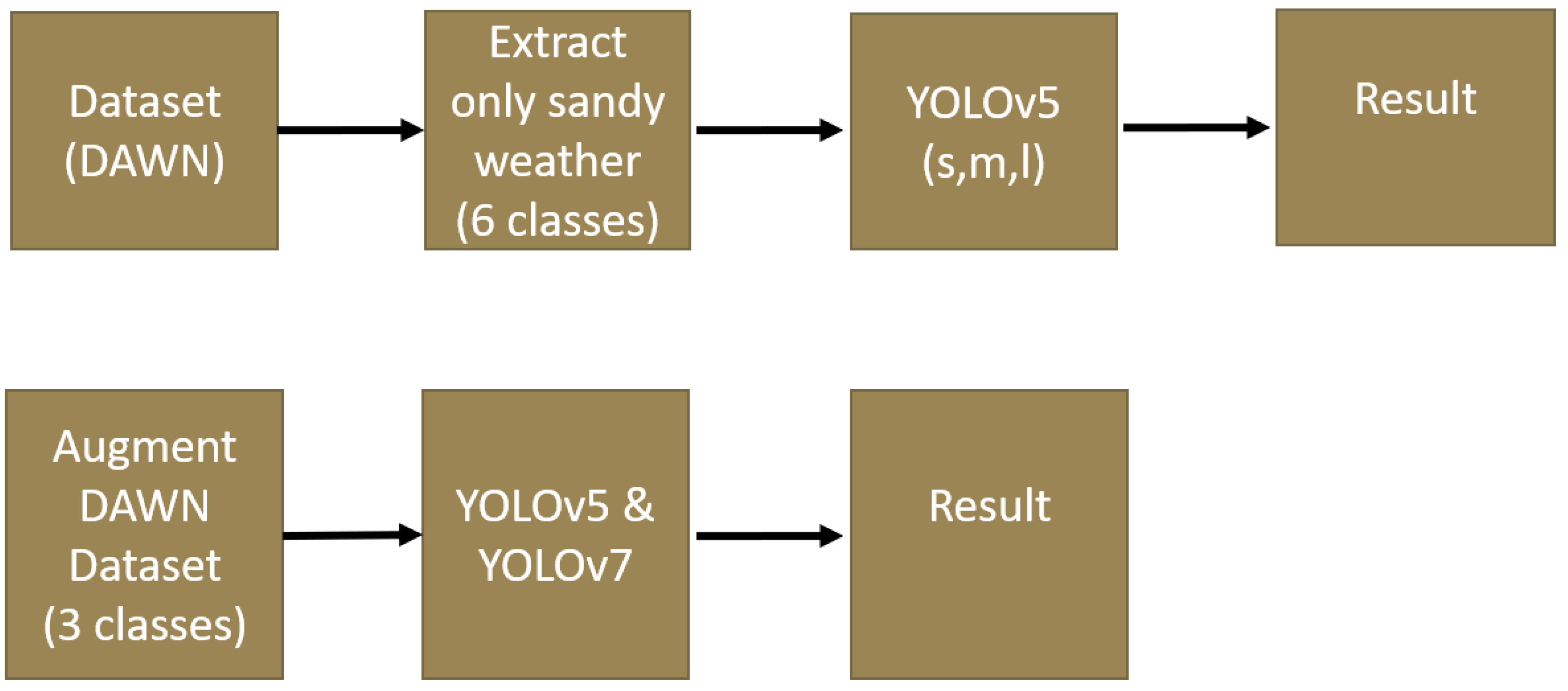

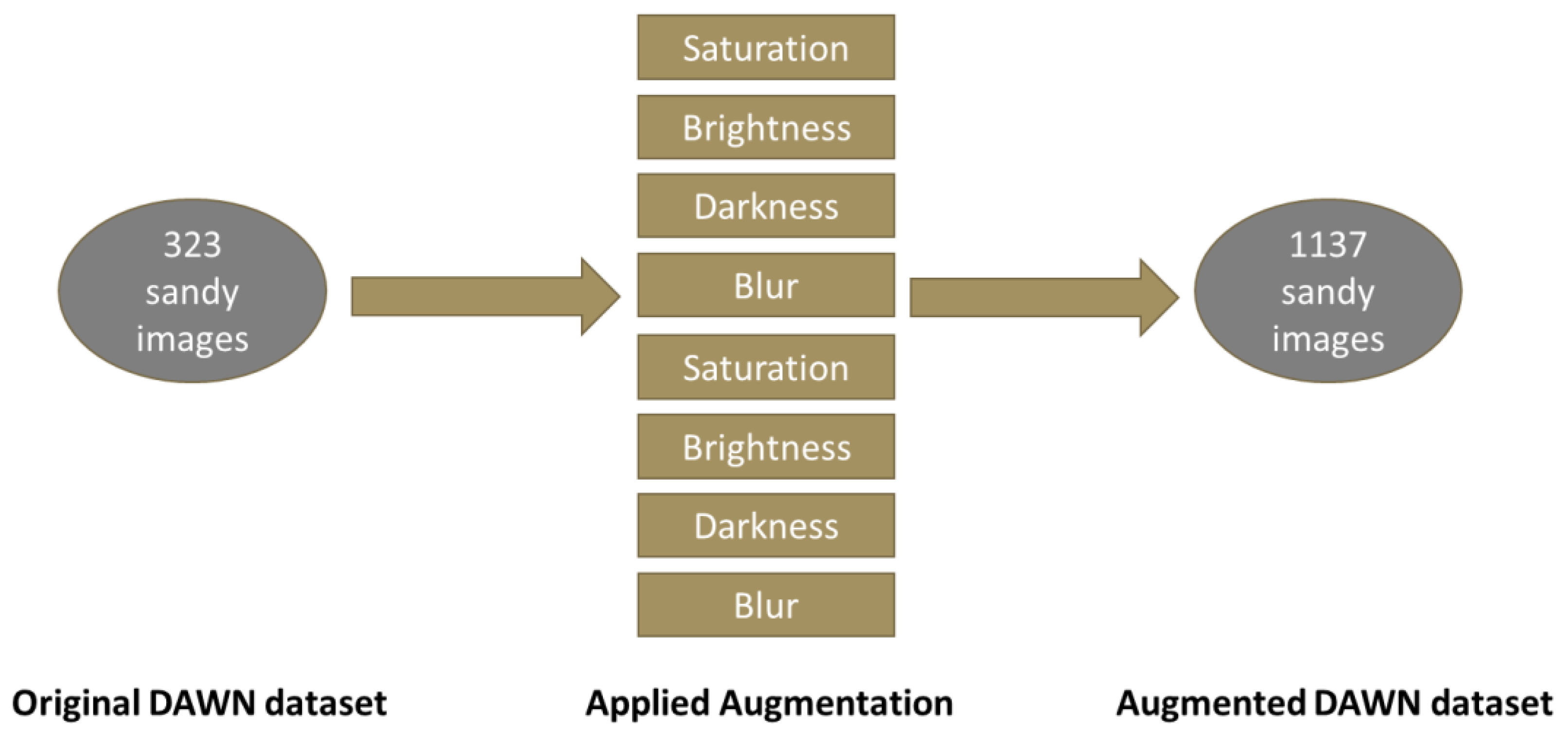

- We extended the Detection in Adverse Weather Nature (DAWN) dataset with augmented images, expanding the sandy weather subset from 323 images to 1137 images. The augmentations used are saturation, brightness, darkness, blur, noise, exposure, hue, and grayscale.

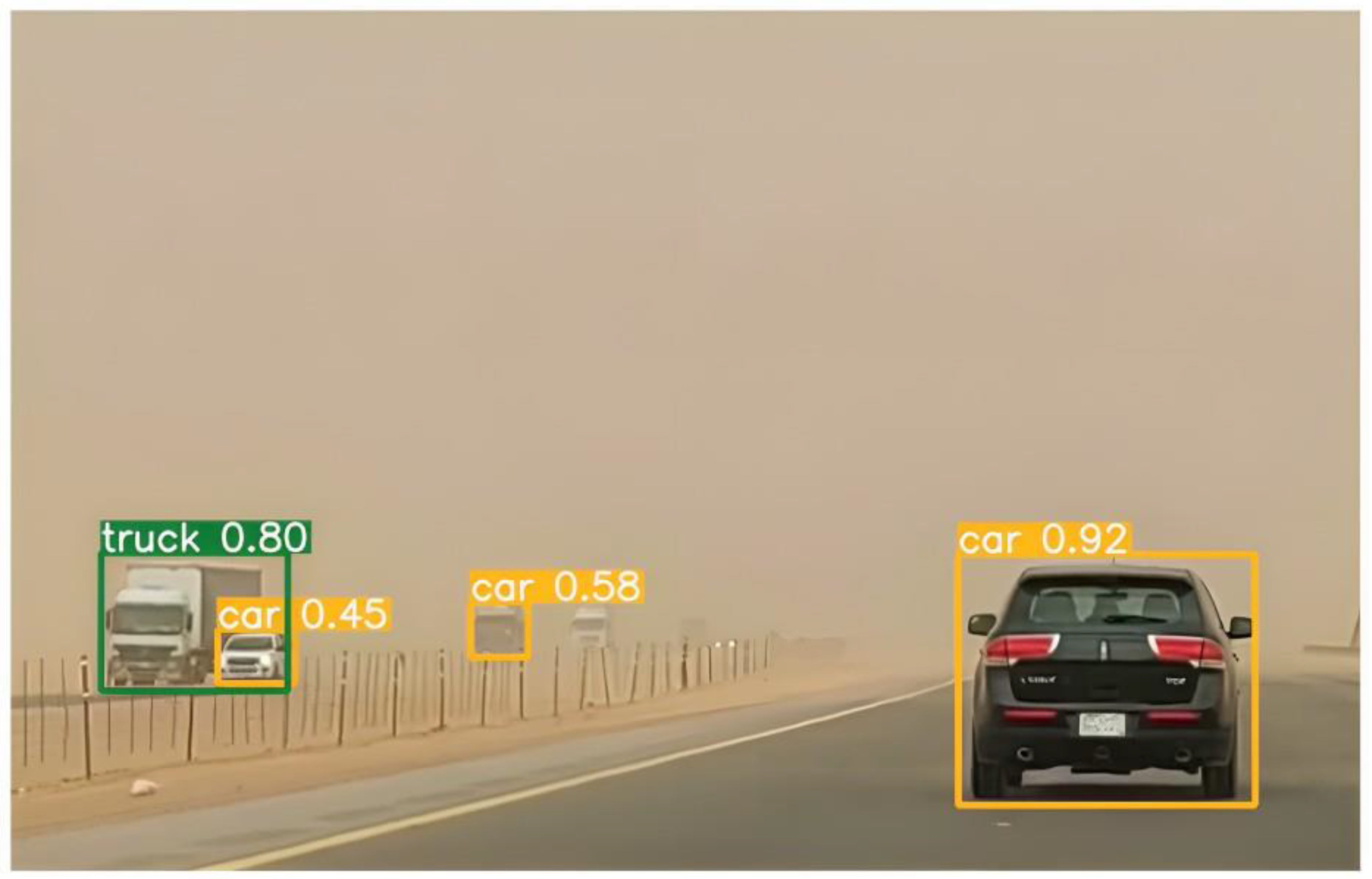

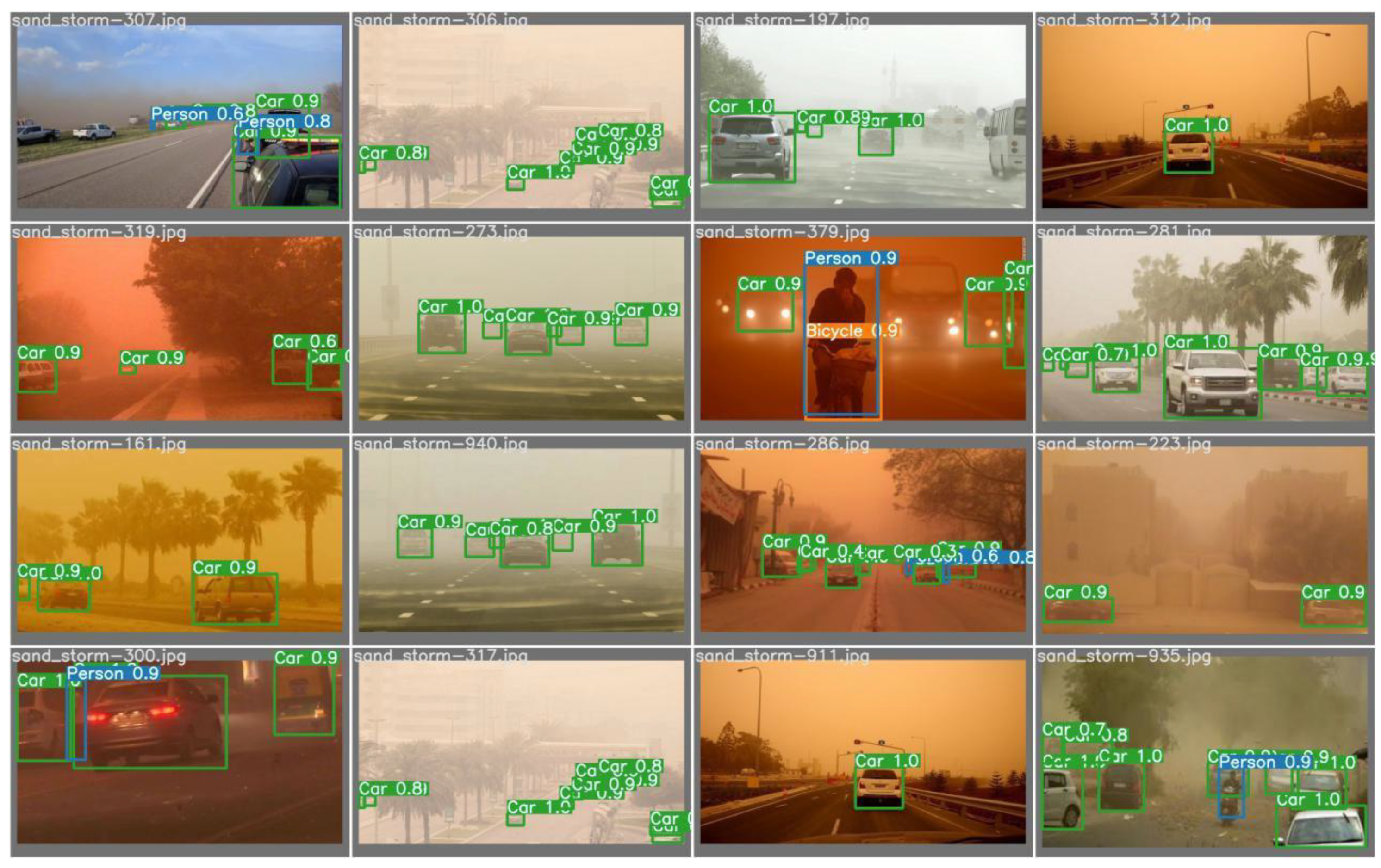

- We used object detection models (YOLOv5 and YOLOv7) as base architectures for detecting three classes of objects (car/vehicle, person, and bicycle) in sandy weather.

- We evaluated different activation functions for detecting objects in sandy weather.

2. Background and Related Work

2.1. Activation Functions

2.1.1. Rectified Linear Unit (ReLU) Function

2.1.2. Leaky Rectified Linear Unit (LeakyReLU) Function

2.1.3. Sigmoid Linear Unit (SiLU) Function
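
The three activation functions named in Sections 2.1.1 to 2.1.3 have standard textbook definitions, sketched below in plain Python for scalar inputs. This is an illustrative sketch rather than the code used in the experiments; the function names and the `negative_slope` default of 0.01 are assumptions, since the text here does not state the slope that was used.

```python
import math


def relu(x: float) -> float:
    """ReLU: keep positive inputs, map everything else to zero."""
    return max(0.0, x)


def leaky_relu(x: float, negative_slope: float = 0.01) -> float:
    """LeakyReLU: negative inputs are scaled by a small slope instead of zeroed.

    negative_slope=0.01 is an assumed default, not a value from the source.
    """
    return x if x > 0 else negative_slope * x


def silu(x: float) -> float:
    """SiLU: the input multiplied by its logistic sigmoid, x * sigmoid(x)."""
    if x >= 0:
        return x / (1.0 + math.exp(-x))
    # For negative x, rewrite sigmoid(x) as e^x / (1 + e^x) to avoid overflow.
    ex = math.exp(x)
    return x * ex / (1.0 + ex)


for value in (-2.0, 0.0, 2.0):
    print(value, relu(value), leaky_relu(value), round(silu(value), 4))
```

Unlike ReLU, both LeakyReLU and SiLU return a nonzero value for negative inputs, so some gradient still flows through units that ReLU would switch off.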

2.2. Object Detection

2.3. Related Work

- Limited resources: there is an absence of reliable datasets accurately depicting sandy conditions.

- Regional and weather bias: the regions where researchers and institutions are located, as well as the weather conditions in those regions, can inadvertently influence the scope of object detection studies.

3. Methodology

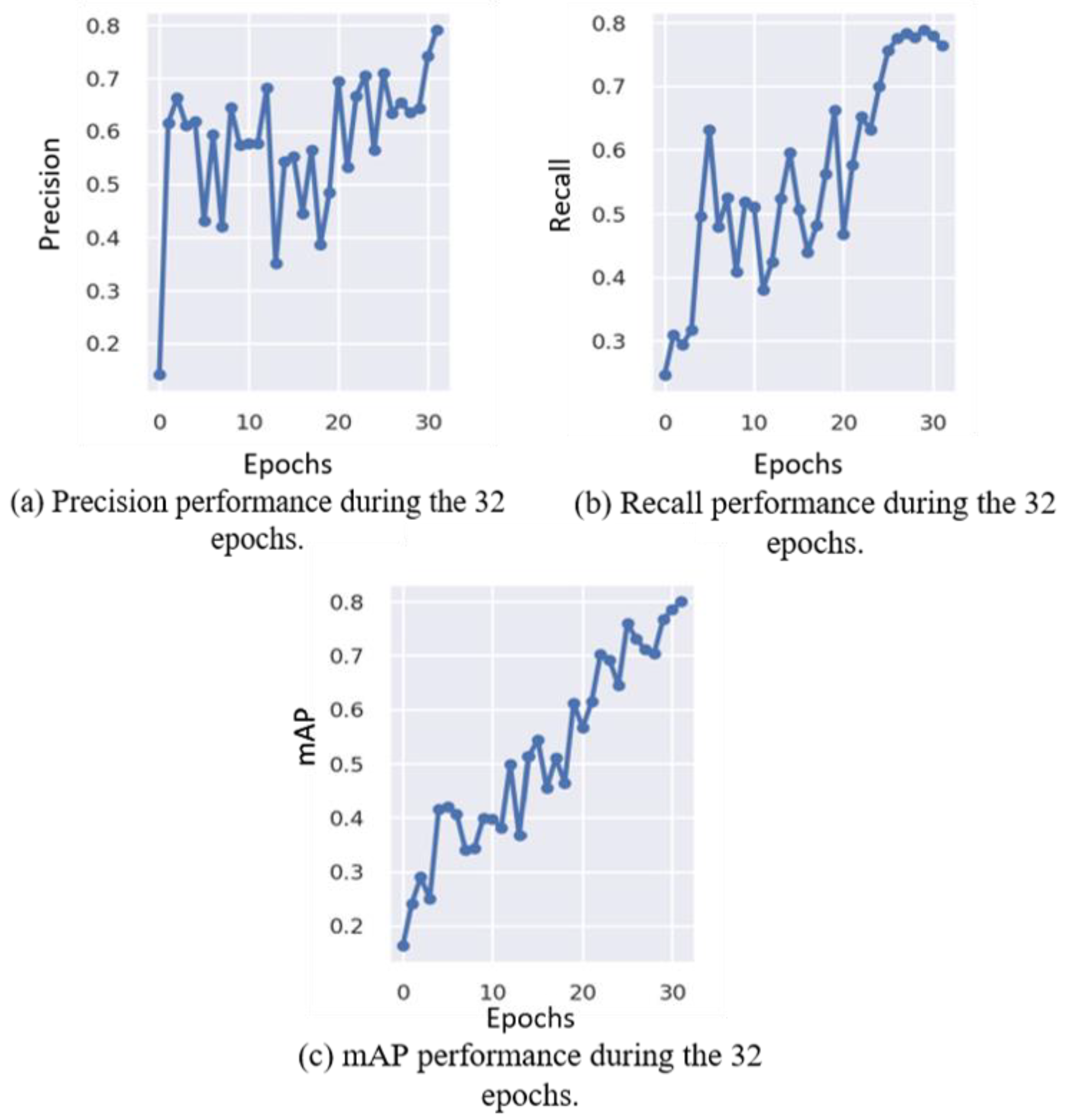

4. Original DAWN and YOLOv5 Results

5. Augmented DAWN Dataset

- Increasing the diversity and variability of the training data, which can help to generalize the model to unrepresented scenarios.

- Improving the model’s robustness against various factors that may affect the object’s appearance or shape, such as different lighting conditions, occlusions, or viewpoint changes.

- Balancing the class distribution in the dataset by oversampling the minority classes or undersampling the majority ones.

- Reducing overfitting by introducing regularization and noise to the training data.
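
The class-balancing point above can be illustrated with a small oversampling sketch. It assumes, for simplicity, one class label per sample, which is a simplification for detection data where a single image may contain several classes. The `oversample` helper and the label counts are hypothetical and are not taken from the DAWN dataset.

```python
import random
from collections import Counter


def oversample(labels, seed=0):
    """Return sample indices in which every class is as frequent as the largest one.

    Minority classes are padded by drawing their own samples again at random
    (with replacement); majority-class samples are kept exactly once.
    """
    rng = random.Random(seed)
    by_class = {}
    for index, label in enumerate(labels):
        by_class.setdefault(label, []).append(index)

    target = max(len(indices) for indices in by_class.values())
    balanced = []
    for indices in by_class.values():
        balanced.extend(indices)
        balanced.extend(rng.choices(indices, k=target - len(indices)))
    return balanced


# Hypothetical, deliberately imbalanced label list.
labels = ["car"] * 6 + ["person"] * 3 + ["bicycle"] * 1
balanced = oversample(labels)
print(Counter(labels[i] for i in balanced))
```

In practice the repeated samples would usually be passed through augmentation so that the duplicates are not pixel-identical.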

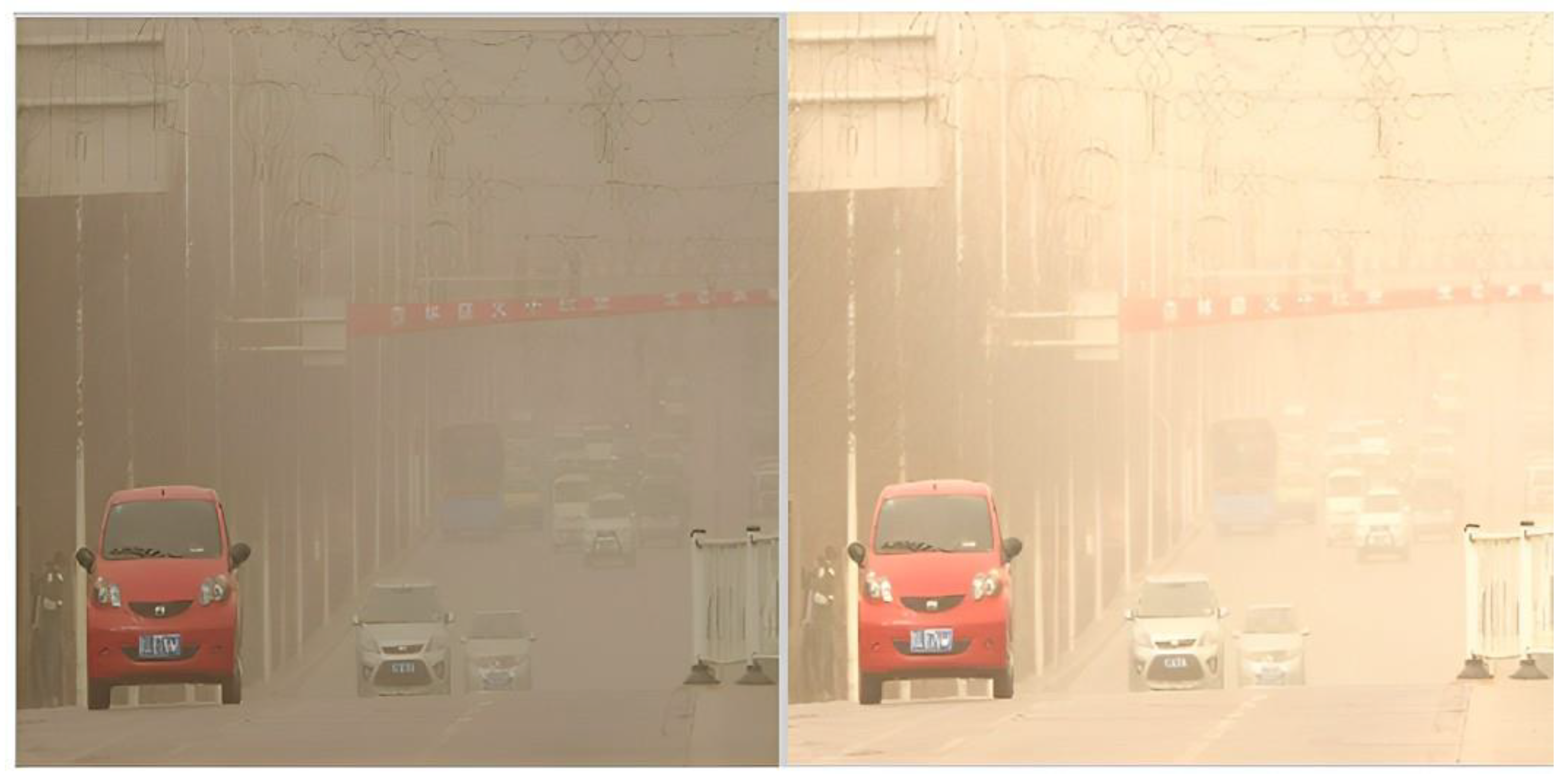

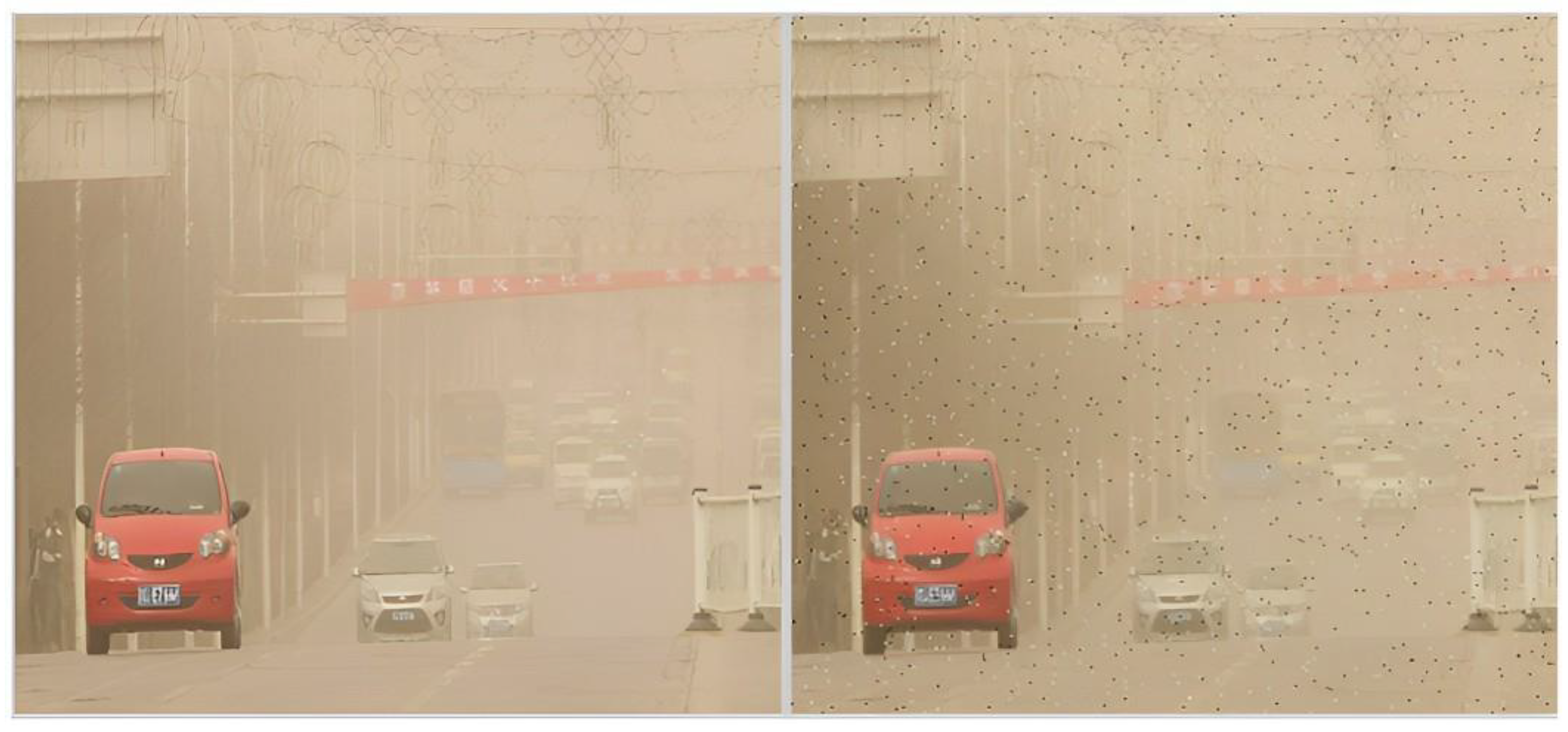

5.0.1. Blur

5.0.2. Saturation

5.0.3. Brightness

5.0.4. Darkness

5.0.5. Noise

5.0.6. Exposure

5.0.7. Hue

5.0.8. Grayscale

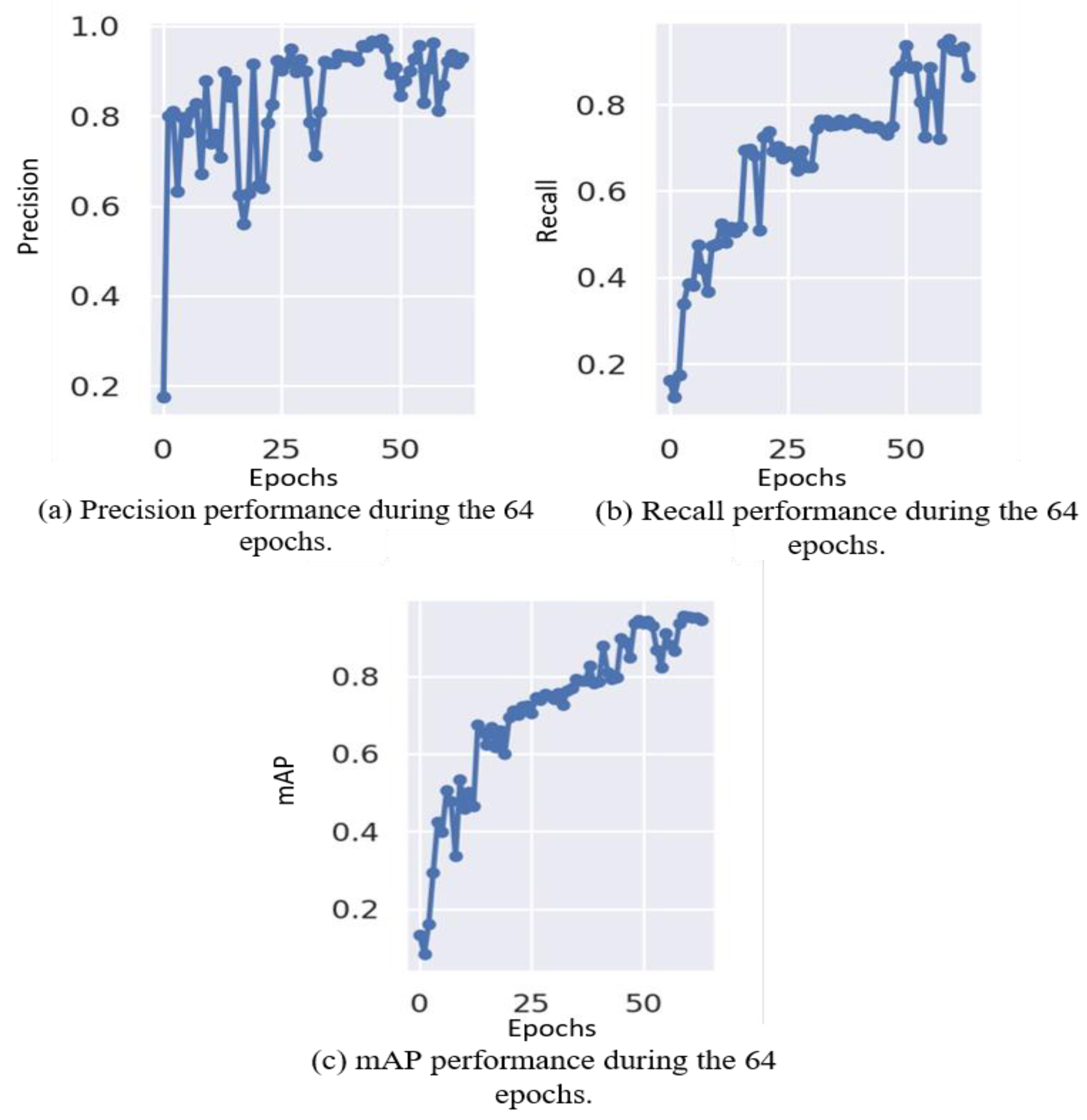

6. Augmented DAWN with YOLOv5 and YOLOv7 Results

7. Conclusions

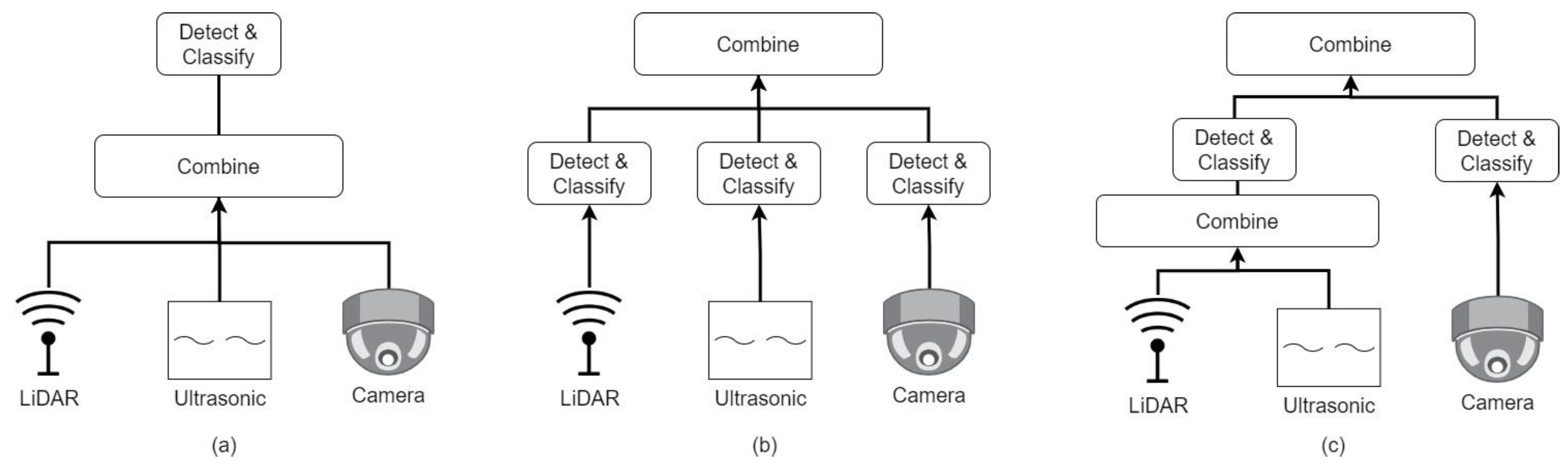

8. Trends and Future Work

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Girshick, R.; Donahue, J.; Darrell, T.; Malik, J. Rich feature hierarchies for accurate object detection and semantic segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 23–28 June 2014; pp. 580–587.

2. Girshick, R. Fast R-CNN. In Proceedings of the IEEE International Conference on Computer Vision, Santiago, Chile, 7–13 December 2015; pp. 1440–1448.

3. Liu, T.; Stathaki, T. Faster R-CNN for Robust Pedestrian Detection Using Semantic Segmentation Network. Front. Neurorobot. 2018, 12, 64.

4. Duan, K.; Bai, S.; Xie, L.; Qi, H.; Huang, Q.; Tian, Q. CenterNet: Keypoint triplets for object detection. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27–28 October 2019; pp. 6569–6578.

5. Tan, M.; Pang, R.; Le, Q.V. EfficientDet: Scalable and efficient object detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 10781–10790.

6. Zhu, X.; Su, W.; Lu, L.; Li, B.; Wang, X.; Dai, J. Deformable DETR: Deformable transformers for end-to-end object detection. arXiv 2020, arXiv:2010.04159.

7. Han, J.; Dai, H.; Gu, Z. Sandstorms and desertification in Mongolia, an example of future climate events: A review. Environ. Chem. Lett. 2021, 19, 4063–4073.

8. Zijiang, Z.; Ruoyun, N. Climate characteristics of sandstorm in China in recent 47 years. J. Appl. Meteor. Sci. 2002, 13, 193–200.

9. Hadj-Bachir, M.; de Souza, P.; Nordqvist, P.; Roy, N. Modelling of LIDAR sensor disturbances by solid airborne particles. arXiv 2021, arXiv:2105.04193.

10. Ferrate, G.S.; Nakamura, L.H.; Andrade, F.R.; Rocha Filho, G.P.; Robson, E.; Meneguette, R.I. Brazilian Road’s Animals (BRA): An Image Dataset of Most Commonly Run Over Animals. In Proceedings of the 2022 35th SIBGRAPI Conference on Graphics, Patterns and Images (SIBGRAPI), Natal, Brazil, 24–27 October 2022; Volume 1, pp. 246–251.

11. Zhou, D. Real-Time Animal Detection System for Intelligent Vehicles. Ph.D. Thesis, Université d’Ottawa/University of Ottawa, Ottawa, ON, Canada, 2014.

12. Huijser, M.P.; McGowan, P.; Hardy, A.; Kociolek, A.; Clevenger, A.; Smith, D.; Ament, R. Wildlife-Vehicle Collision Reduction Study: Report to Congress; Federal Highway: Washington, DC, USA, 2017.

13. Nair, V.; Hinton, G.E. Rectified linear units improve restricted Boltzmann machines. In Proceedings of the 27th International Conference on Machine Learning, Haifa, Israel, 21–24 June 2010.

14. Dahl, G.E.; Sainath, T.N.; Hinton, G.E. Improving deep neural networks for LVCSR using rectified linear units and dropout. In Proceedings of the IEEE International Conference on Acoustics, Speech and Signal Processing, Vancouver, BC, Canada, 26–31 May 2013; pp. 8609–8613.

15. Agarap, A.F. Deep learning using rectified linear units (ReLU). arXiv 2018, arXiv:1803.08375.

16. Zeiler, M.D.; Ranzato, M.; Monga, R.; Mao, M.; Yang, K.; Le, Q.V.; Nguyen, P.; Senior, A.; Vanhoucke, V.; Dean, J.; et al. On rectified linear units for speech processing. In Proceedings of the 2013 IEEE International Conference on Acoustics, Speech and Signal Processing, Vancouver, BC, Canada, 26–31 May 2013; pp. 3517–3521.

17. Maas, A.L.; Hannun, A.Y.; Ng, A.Y. Rectifier nonlinearities improve neural network acoustic models. In Proceedings of the 30th International Conference on Machine Learning, ICML 2013, Atlanta, GA, USA, 16–21 June 2013; Volume 30, p. 3.

18. He, K.; Zhang, X.; Ren, S.; Sun, J. Delving deep into rectifiers: Surpassing human-level performance on ImageNet classification. In Proceedings of the International Conference on Computer Vision, Las Condes, Chile, 11–18 December 2015; pp. 1026–1034.

19. Ramachandran, P.; Zoph, B.; Le, Q.V. Searching for activation functions. arXiv 2017, arXiv:1710.05941.

20. He, K.; Gkioxari, G.; Dollár, P.; Girshick, R. Mask R-CNN. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 2961–2969.

21. Lin, T.Y.; Dollár, P.; Girshick, R.; He, K.; Hariharan, B.; Belongie, S. Feature pyramid networks for object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 2117–2125.

22. Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You only look once: Unified, real-time object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 26 June–1 July 2016; pp. 779–788.

23. Redmon, J.; Farhadi, A. YOLOv3: An incremental improvement. arXiv 2018, arXiv:1804.02767.

24. Bochkovskiy, A.; Wang, C.Y.; Liao, H.Y.M. YOLOv4: Optimal speed and accuracy of object detection. arXiv 2020, arXiv:2004.10934.

25. Wang, C.Y.; Bochkovskiy, A.; Liao, H.Y.M. YOLOv7: Trainable bag-of-freebies sets new state-of-the-art for real-time object detectors. arXiv 2022, arXiv:2207.02696.

26. Liu, W.; Anguelov, D.; Erhan, D.; Szegedy, C.; Reed, S.; Fu, C.Y.; Berg, A.C. SSD: Single shot multibox detector. In Proceedings of the Computer Vision–ECCV 2016: 14th European Conference on Computer Vision, Amsterdam, The Netherlands, 11–14 October 2016; Springer: Berlin/Heidelberg, Germany, 2016; pp. 21–37.

27. Humayun, M.; Ashfaq, F.; Jhanjhi, N.Z.; Alsadun, M.K. Traffic Management: Multi-Scale Vehicle Detection in Varying Weather Conditions Using YOLOv4 and Spatial Pyramid Pooling Network. Electronics 2022, 11, 2748.

28. Wang, R.; Zhao, H.; Xu, Z.; Ding, Y.; Li, G.; Zhang, Y.; Li, H. Real-time vehicle target detection in inclement weather conditions based on YOLOv4. Front. Neurorobot. 2023, 17, 1058723.

29. Li, X.; Wu, J. Extracting High-Precision Vehicle Motion Data from Unmanned Aerial Vehicle Video Captured under Various Weather Conditions. Remote Sens. 2022, 14, 5513.

30. Liu, W.; Ren, G.; Yu, R.; Guo, S.; Zhu, J.; Zhang, L. Image-adaptive YOLO for object detection in adverse weather conditions. In Proceedings of the AAAI Conference on Artificial Intelligence, Pomona, CA, USA, 24–28 October 2022; Volume 36, pp. 1792–1800.

31. Huang, S.-C.; Le, T.-H.; Jaw, D.-W. DSNet: Joint Semantic Learning for Object Detection in Inclement Weather Conditions. IEEE Trans. Pattern Anal. Mach. Intell. 2020, 43, 2623–2633.

32. Sakaridis, C.; Dai, D.; Van Gool, L. Semantic Foggy Scene Understanding with Synthetic Data. Int. J. Comput. Vis. 2018, 126, 973–992.

33. Sharma, T.; Debaque, B.; Duclos, N.; Chehri, A.; Kinder, B.; Fortier, P. Deep Learning-Based Object Detection and Scene Perception under Bad Weather Conditions. Electronics 2022, 11, 563.

34. Jung, H.-K.; Choi, G.-S. Improved YOLOv5: Efficient Object Detection Using Drone Images under Various Conditions. Appl. Sci. 2022, 12, 7255.

35. Abdulghani, A.M.A.; Dalveren, G.G.M. Moving Object Detection in Video with Algorithms YOLO and Faster R-CNN in Different Conditions. Avrupa Bilim Ve Teknol. Derg. 2022, 33, 40–54.

36. Zhang, C.; Eskandarian, A. A comparative analysis of object detection algorithms in naturalistic driving videos. In Proceedings of the ASME International Mechanical Engineering Congress and Exposition, Online, 1–5 November 2021; American Society of Mechanical Engineers: New York, NY, USA, 2021; Volume 85628, p. V07BT07A018.

37. Dazlee, N.M.A.A.; Khalil, S.A.; Abdul-Rahman, S.; Mutalib, S. Object detection for autonomous vehicles with sensor-based technology using YOLO. Int. J. Intell. Syst. Appl. Eng. 2022, 10, 129–134.

38. Kenk, M.A.; Hassaballah, M. DAWN: Vehicle detection in adverse weather nature dataset. arXiv 2020, arXiv:2008.05402.

39. Zoph, B.; Cubuk, E.D.; Ghiasi, G.; Lin, T.Y.; Shlens, J.; Le, Q.V. Learning data augmentation strategies for object detection. In Proceedings of the Computer Vision–ECCV 2020: 16th European Conference, Glasgow, UK, 23–28 August 2020; Springer: Berlin/Heidelberg, Germany, 2020; pp. 566–583.

40. Volk, G.; Muller, S.; von Bernuth, A.; Hospach, D.; Bringmann, O. Towards Robust CNN-based Object Detection through Augmentation with Synthetic Rain Variations. In Proceedings of the 2019 IEEE Intelligent Transportation Systems Conference (ITSC), Auckland, New Zealand, 27–30 October 2019; pp. 285–292.

41. Hnewa, M.; Radha, H. Object Detection Under Rainy Conditions for Autonomous Vehicles: A Review of State-of-the-Art and Emerging Techniques. IEEE Signal Process. Mag. 2020, 38, 53–67.

42. Wang, C.Y.; Yeh, I.H.; Liao, H.Y.M. You only learn one representation: Unified network for multiple tasks. arXiv 2021, arXiv:2105.04206.

43. Lipkova, J.; Chen, R.J.; Chen, B.; Lu, M.Y.; Barbieri, M.; Shao, D.; Vaidya, A.J.; Chen, C.; Zhuang, L.; Williamson, D.F.; et al. Artificial intelligence for multimodal data integration in oncology. Cancer Cell 2022, 40, 1095–1110.

44. Zhou, W.; Liu, W.; Lei, J.; Luo, T.; Yu, L. Deep Binocular Fixation Prediction Using a Hierarchical Multimodal Fusion Network. IEEE Trans. Cogn. Dev. Syst. 2021, 15, 476–486.

45. Yang, F.; Peng, X.; Ghosh, G.; Shilon, R.; Ma, H.; Moore, E.; Predovic, G. Exploring deep multimodal fusion of text and photo for hate speech classification. In Proceedings of the Third Workshop on Abusive Language Online, Florence, Italy, 1 August 2019; pp. 11–18.

46. Mou, L.; Zhou, C.; Zhao, P.; Nakisa, B.; Rastgoo, M.N.; Jain, R.; Gao, W. Driver stress detection via multimodal fusion using attention-based CNN-LSTM. Expert Syst. Appl. 2021, 173, 114693.

47. Zhao, X.; Sun, P.; Xu, Z.; Min, H.; Yu, H.K. Fusion of 3D LIDAR and Camera Data for Object Detection in Autonomous Vehicle Applications. IEEE Sens. J. 2020, 20, 4901–4913.

48. Vora, S.; Lang, A.H.; Helou, B.; Beijbom, O. PointPainting: Sequential fusion for 3D object detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 4604–4612.

49. Wang, B.; Wei, B.; Kang, Z.; Hu, L.; Li, C. Fast color balance and multi-path fusion for sandstorm image enhancement. Signal Image Video Process. 2021, 15, 637–644.

50. Shi, F.; Jia, Z.; Lai, H.; Song, S.; Wang, J. Sand Dust Images Enhancement Based on Red and Blue Channels. Sensors 2022, 22, 1918.

51. Valanarasu, J.M.J.; Yasarla, R.; Patel, V.M. TransWeather: Transformer-based restoration of images degraded by adverse weather conditions. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 2353–2363.

| Reference | Dataset | Augmentation | Number of Classes | Model |
|---|---|---|---|---|
| [27] | DAWN | Yes | 1 | YOLOv4 |
| [28] | BDD-IW | Yes | 1 | YOLOv4 |
| [29] | MWVD | No | 1 | YOLOv5 |
| [30] | VOC, RTTS | Yes | 5 | YOLOv3 |
| [31] | Foggy | No | 1 | DSNet |
| [33] | Collected dataset | No | 11 | YOLOv5 |
| [35] | Open Image | No | 4 | YOLOv4, Faster R-CNN |
| [36] | COCO, BDD100K | No | All COCO | YOLOv4, DSSD |
| [37] | KITTI | No | 3 | Tiny YOLO, Complex YOLO |
| Ours | DAWN; Aug. DAWN | No; Yes | 6; 3 | YOLOv5; YOLOv7 |

| Ref. | Sandy | Foggy | Snowy | Rainy |
|---|---|---|---|---|
| [28] | ✕ | ✓ | ✓ | ✓ |
| [29] | ✕ | ✕ | ✓ | ✓ |
| [30] | ✕ | ✓ | ✕ | ✕ |
| [31] | ✕ | ✓ | ✕ | ✕ |
| [33] | ✕ | ✕ | ✕ | ✓ |
| [34] | ✕ | ✕ | ✓ | ✓ |
| [35] | ✕ | ✓ | ✓ | ✓ |

| Model | Activation Function | Image Size | mAP | Precision | Recall |
|---|---|---|---|---|---|
| YOLOv5s | SiLU | 640 | 80% | 79% | 76% |
| YOLOv5s | ReLU | 640 | 75% | 59% | 55% |
| YOLOv5s | LeakyReLU | 640 | 71% | 10% | 11% |
| YOLOv5m | SiLU | 640 | 85% | 73% | 69% |
| YOLOv5m | ReLU | 640 | 82% | 80% | 70% |
| YOLOv5m | LeakyReLU | 640 | 88% | 97% | 12% |
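
The precision and recall columns above follow from counts of true positives, false positives, and false negatives, where a prediction is normally counted as a true positive when its box overlaps a ground-truth box by at least some intersection-over-union (IoU) threshold. The sketch below shows the standard definitions only; the helper names and the example counts are hypothetical, and the text here does not state the IoU threshold or evaluation code that produced the table.

```python
def iou(box_a, box_b):
    """Intersection over union of two axis-aligned boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(box_a[0], box_b[0]), max(box_a[1], box_b[1])
    ix2, iy2 = min(box_a[2], box_b[2]), min(box_a[3], box_b[3])
    intersection = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - intersection
    return intersection / union if union > 0 else 0.0


def precision_recall(tp, fp, fn):
    """Precision = TP / (TP + FP); recall = TP / (TP + FN)."""
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    return precision, recall


# Two 2x2 boxes that overlap in a 1x1 square.
print(iou((0, 0, 2, 2), (1, 1, 3, 3)))

# Hypothetical counts: few false alarms, many missed objects.
print(precision_recall(tp=8, fp=2, fn=12))
```

A detector that predicts very few boxes can score high precision with low recall, which is the pattern of the YOLOv5m LeakyReLU row.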

| Augmentation | Value | Impact |
|---|---|---|
| Blur | 1.25 px | Averages each pixel value with its neighbors. |
| Saturation | 50% | Changes the color intensity of pixels. |
| Brightness | 20% | Image appears lighter. |
| Darkness | 20% | Image appears darker. |
| Noise | Random noise added | More obstacles added to the image. |
| Exposure | 15% | More resilient to lighting and camera setting changes. |
| Hue | 90% | Random adjustment of colors. |
| Grayscale | 25% | Converts image to single channel. |
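
Three of the augmentations in the table above (brightness, darkness, and grayscale) can be sketched in a few lines of plain Python on a tiny RGB image stored as nested lists. This is a minimal illustration, not the tool used to build the augmented dataset. Treating the 20% value as a multiplicative scale on every channel is an assumption, the function names are hypothetical, and the grayscale weights are the common BT.601 luma coefficients.

```python
def scale_brightness(image, percent):
    """Scale every channel by (1 + percent / 100), clamped to the 0..255 range.

    A positive percent brightens the image; a negative percent darkens it.
    """
    factor = 1.0 + percent / 100.0
    return [
        [tuple(min(255, max(0, round(channel * factor))) for channel in pixel)
         for pixel in row]
        for row in image
    ]


def to_grayscale(image):
    """Collapse RGB pixels to one luma channel using BT.601 weights."""
    return [
        [round(0.299 * r + 0.587 * g + 0.114 * b) for (r, g, b) in row]
        for row in image
    ]


# A hypothetical 1x2 RGB image.
image = [[(100, 150, 200), (250, 10, 0)]]

brighter = scale_brightness(image, 20)   # assumed reading of "Brightness 20%"
darker = scale_brightness(image, -20)    # assumed reading of "Darkness 20%"
gray = to_grayscale(image)

print(brighter)
print(darker)
print(gray)
```

Each augmented copy keeps the original bounding-box labels, because none of these pixel-level changes moves the objects in the image.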

| Model | mAP | Activation Function | Car mAP | Person mAP | Bicycle mAP |
|---|---|---|---|---|---|
| YOLOv5s | 77% | SiLU | 82% | 84% | 64% |
| YOLOv5s | 73% | ReLU | 83% | 83% | 54% |
| YOLOv5s | 75% | LeakyReLU | 85% | 84% | 55% |
| YOLOv5m | 77% | SiLU | 85% | 87% | 58% |
| YOLOv5m | 77% | ReLU | 87% | 86% | 58% |
| YOLOv5m | 79% | LeakyReLU | 86% | 87% | 65% |
| YOLOv5l | 82% | SiLU | 86% | 87% | 73% |
| YOLOv5l | 78% | ReLU | 88% | 86% | 60% |
| YOLOv5l | 79% | LeakyReLU | 86% | 85% | 66% |
| YOLOv7 | 76% | LeakyReLU | 95% | 85% | 49% |
| YOLOv7 | 94% | SiLU | 96% | 89% | 97% |

| Activation Function | mAP |
|---|---|
| SiLU | 82% |
| ReLU | 76% |
| LeakyReLU | 77% |
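
The per-activation figures above appear consistent with averaging the overall mAP column of the per-model results table across all models that used each activation function. That reading is inferred from the numbers and is not stated in the text, so the sketch below should be taken as a plausibility check; the variable and function names are hypothetical.

```python
from collections import defaultdict

# (model, activation function, overall mAP in percent), copied from the
# per-model results table for the augmented dataset.
results = [
    ("YOLOv5s", "SiLU", 77), ("YOLOv5s", "ReLU", 73), ("YOLOv5s", "LeakyReLU", 75),
    ("YOLOv5m", "SiLU", 77), ("YOLOv5m", "ReLU", 77), ("YOLOv5m", "LeakyReLU", 79),
    ("YOLOv5l", "SiLU", 82), ("YOLOv5l", "ReLU", 78), ("YOLOv5l", "LeakyReLU", 79),
    ("YOLOv7", "LeakyReLU", 76), ("YOLOv7", "SiLU", 94),
]


def mean_map_by_activation(rows):
    """Average the overall mAP over every model that used each activation."""
    grouped = defaultdict(list)
    for _model, activation, map_percent in rows:
        grouped[activation].append(map_percent)
    return {name: sum(values) / len(values) for name, values in grouped.items()}


for name, mean_map in mean_map_by_activation(results).items():
    print(f"{name}: {mean_map:.2f}%")
```

Under this reading the SiLU mean is 82.5%, which matches the 82% in the table only if the value was truncated or rounded half to even; the ReLU and LeakyReLU means round to the tabulated 76% and 77%.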

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Aloufi, N.; Alnori, A.; Thayananthan, V.; Basuhail, A. Object Detection Performance Evaluation for Autonomous Vehicles in Sandy Weather Environments. Appl. Sci. 2023, 13, 10249. https://doi.org/10.3390/app131810249
