A Semi-Supervised Learning Approach for Automatic Detection and Fashion Product Category Prediction with Small Training Dataset Using FC-YOLOv4

Abstract

:1. Introduction

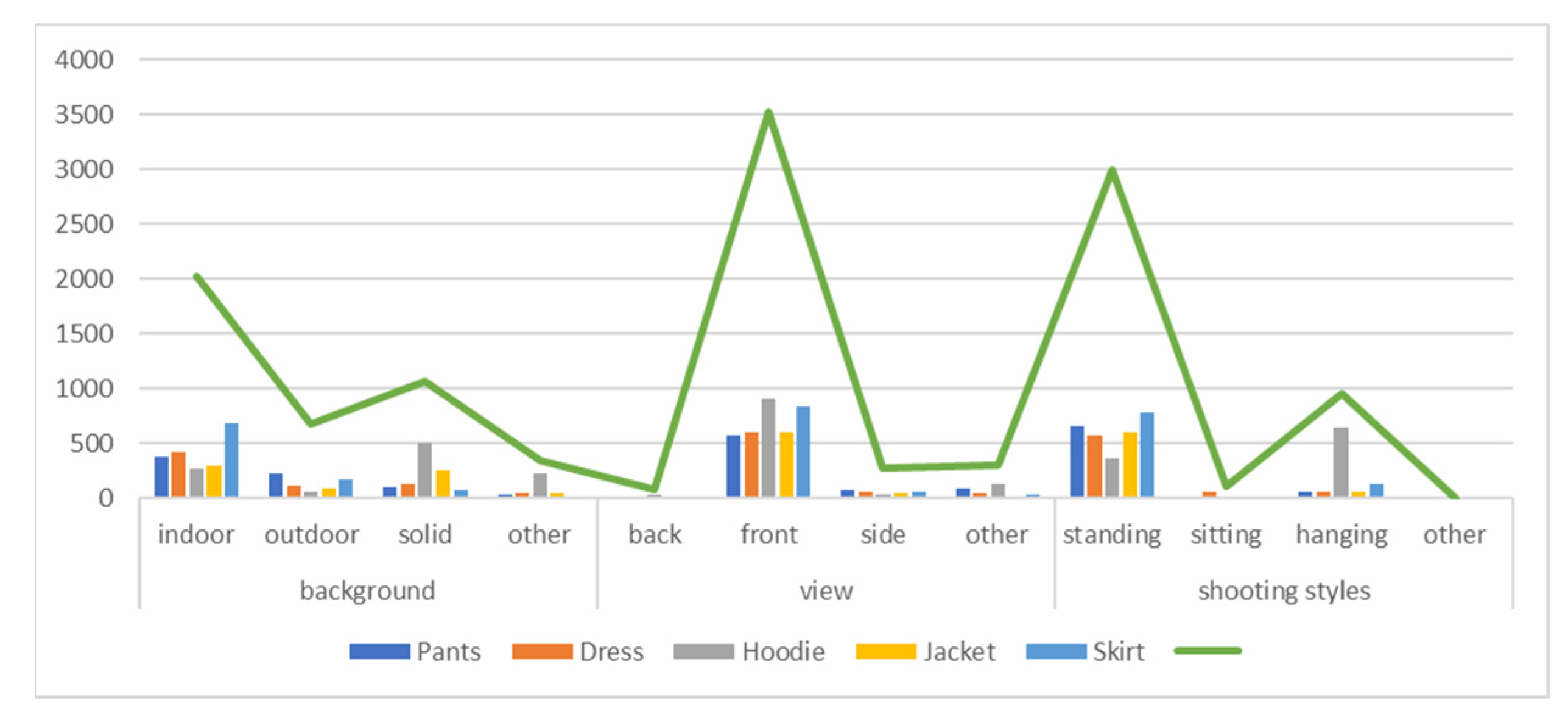

- We present a recently acquired, tiny dataset from the Shopee E-commerce (Thailand) website. Skirt, Dress, Pants, Hoodie, and Jacket, with a total of 4116 photographs, were compiled under the category “women.” Images of various resolutions, sizes, and positions are gathered in accordance with the website.

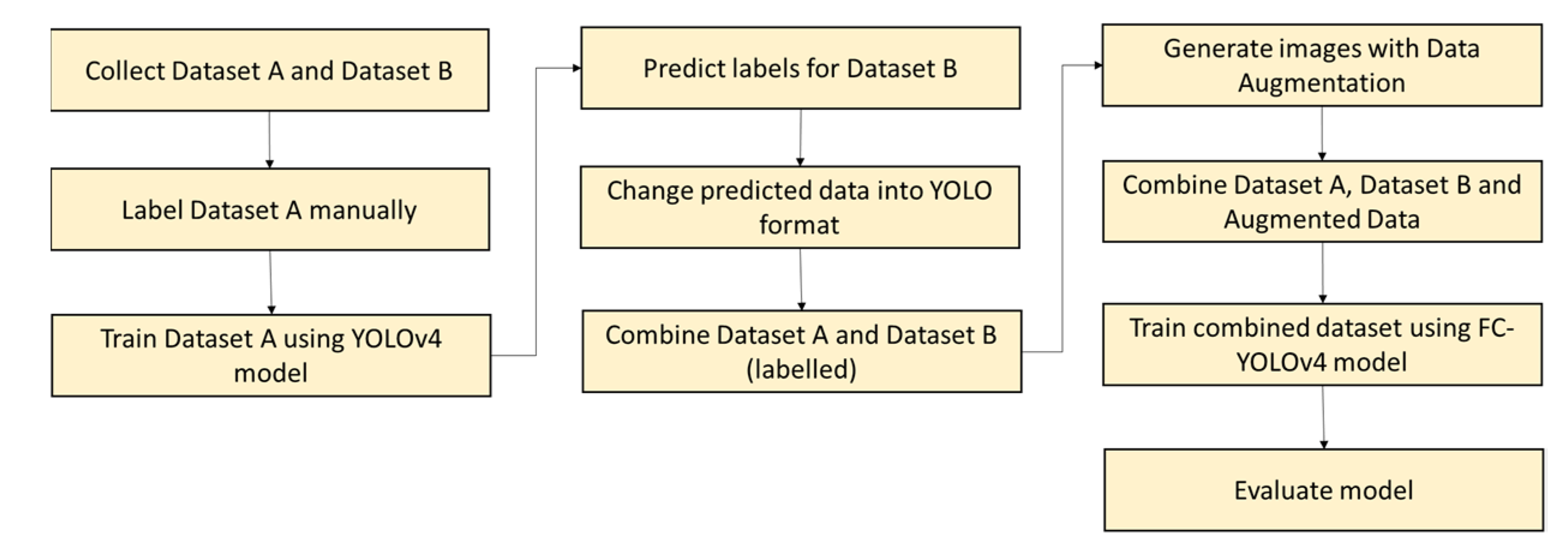

- We use the semi-supervised learning approach for automatically annotating data to save manual tasks and increase the number of images by using image augmentation to improve object detection accuracy.

- We propose an accurate image-based classification model utilizing FC-YOLOv4 that can classify Shopee E-commerce products’ images and identify their types.

- To bring network performance and feature improvements, we increase the quantity of short-circuiting and stacking.

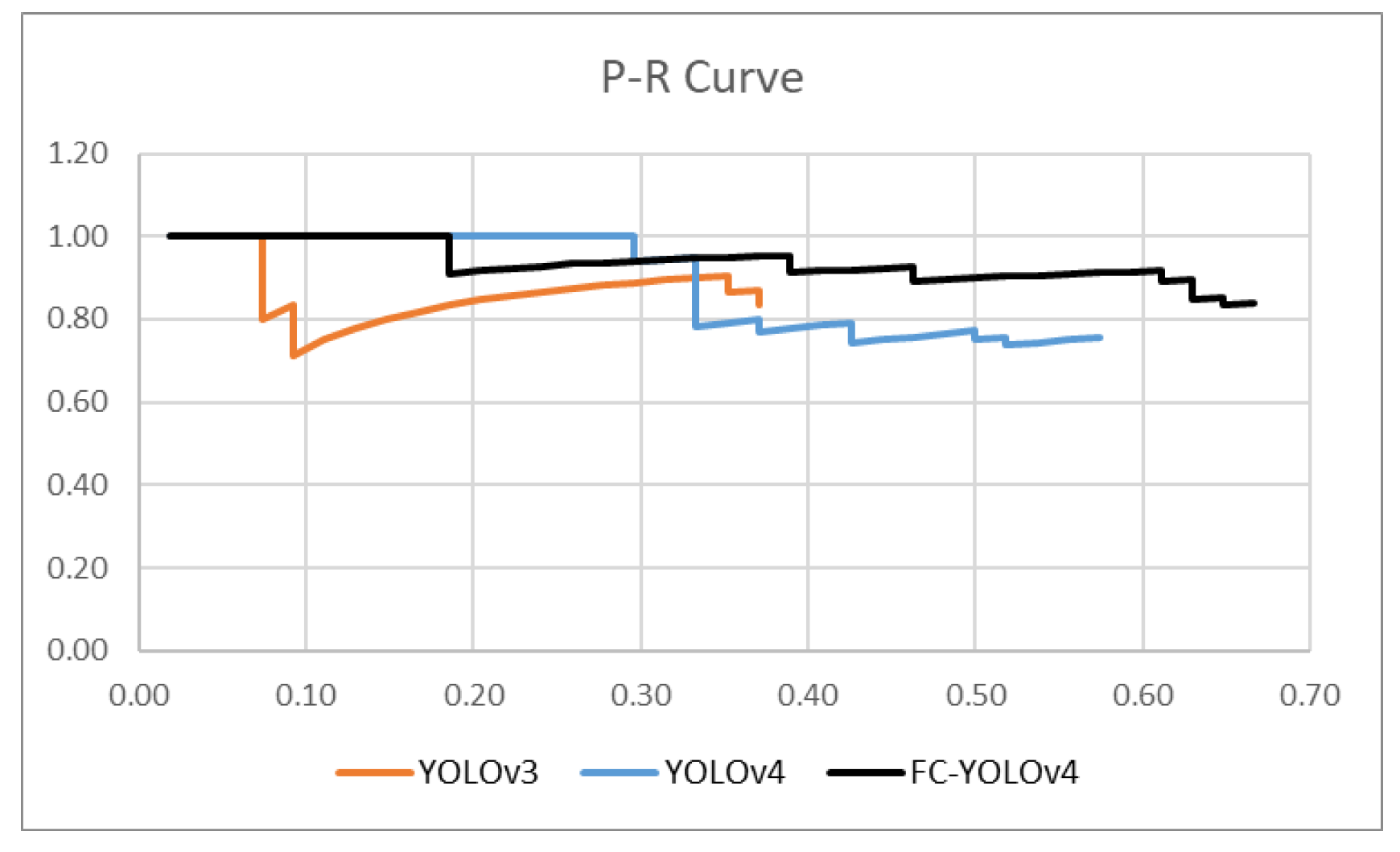

- To assess and evaluate the applicability and efficiency of the proposed method in fashion category recognition, we evaluated a model with a photo with a cluttered background and compared our FC-YOLOv4 model to YOLOv4 and YOLOv3 with detection time and accuracy as a benchmark.

2. Related Works

2.1. Research on Apparel Detection and Classification

2.2. State-of-the-Art Object Detection Algorithms

2.3. Development of YOLO Versions

3. Materials and Methods

3.1. Dataset Collection and Pre-Processing

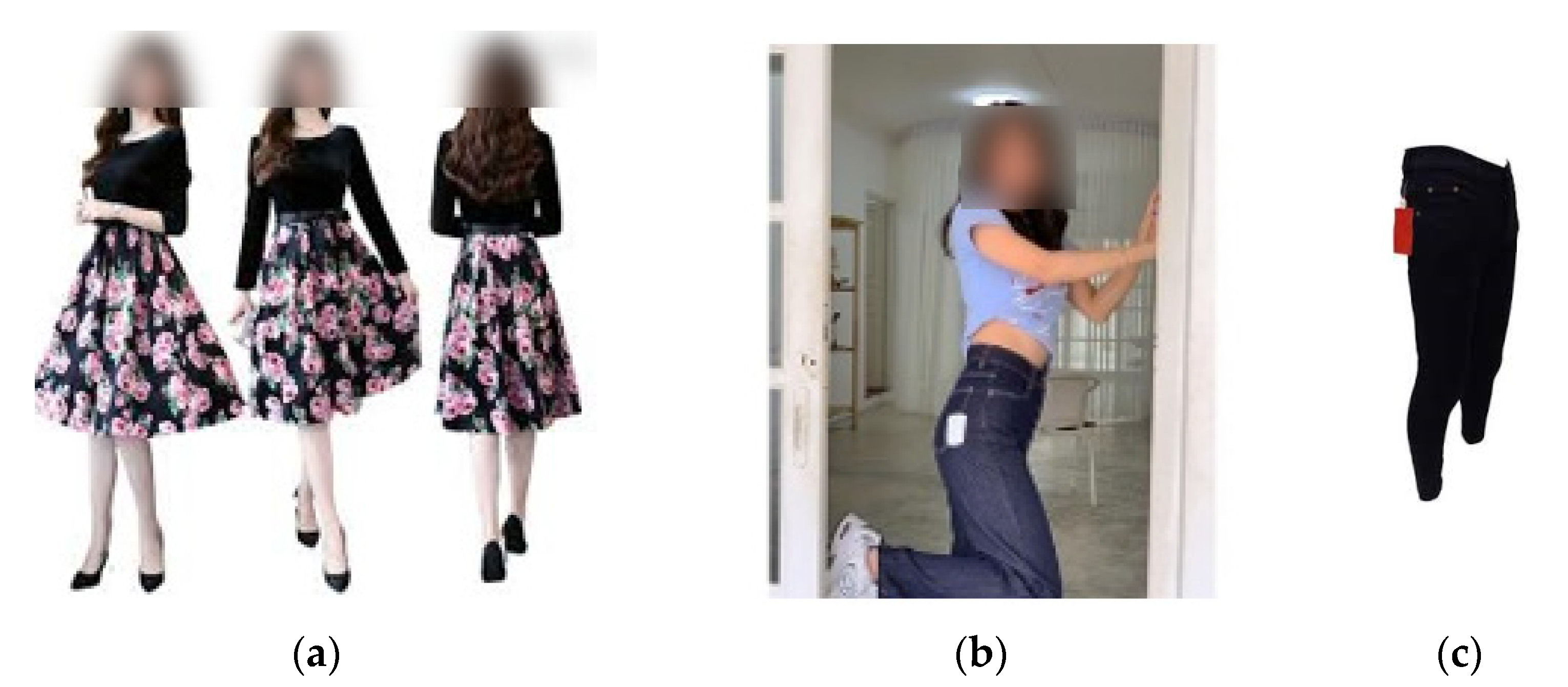

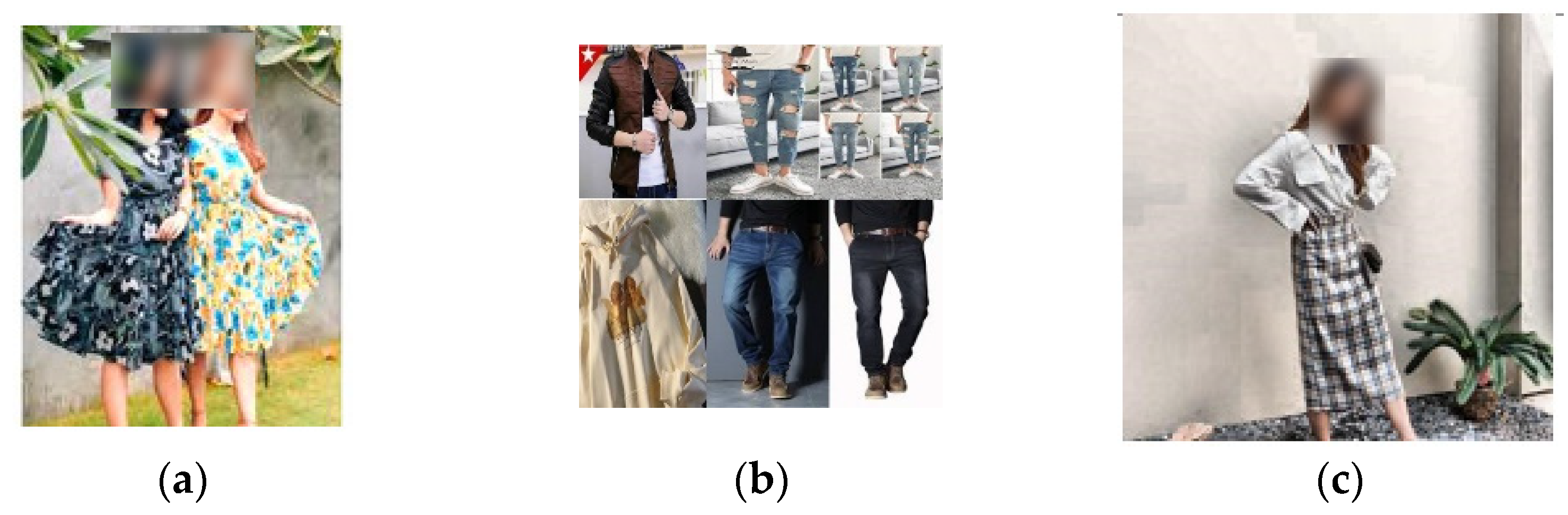

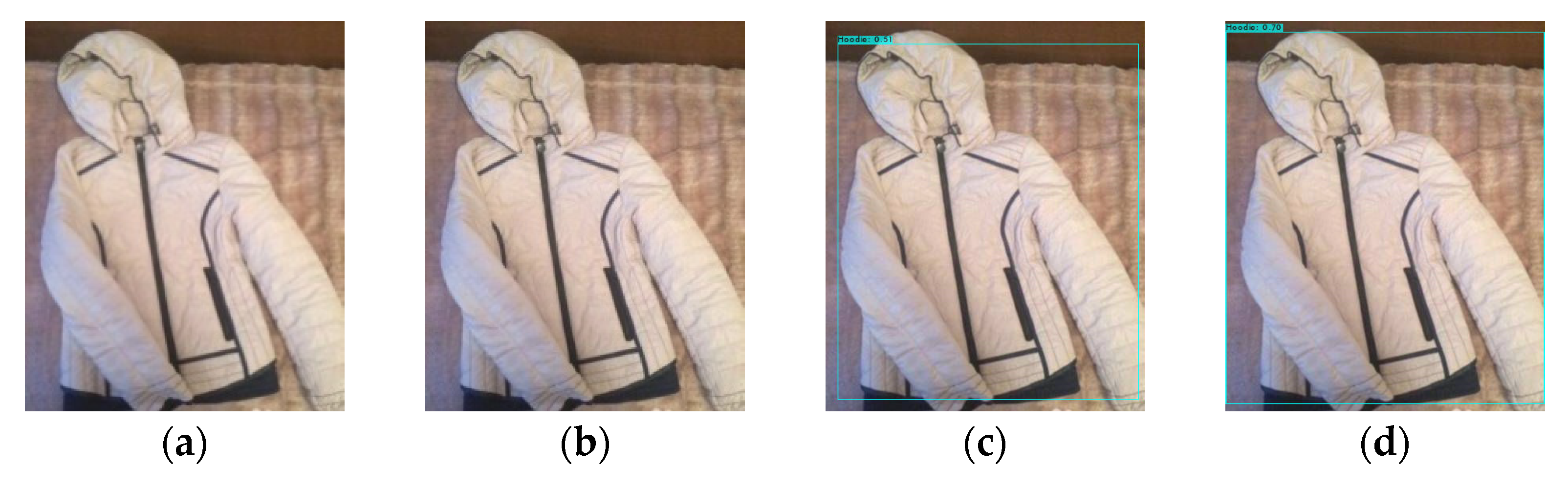

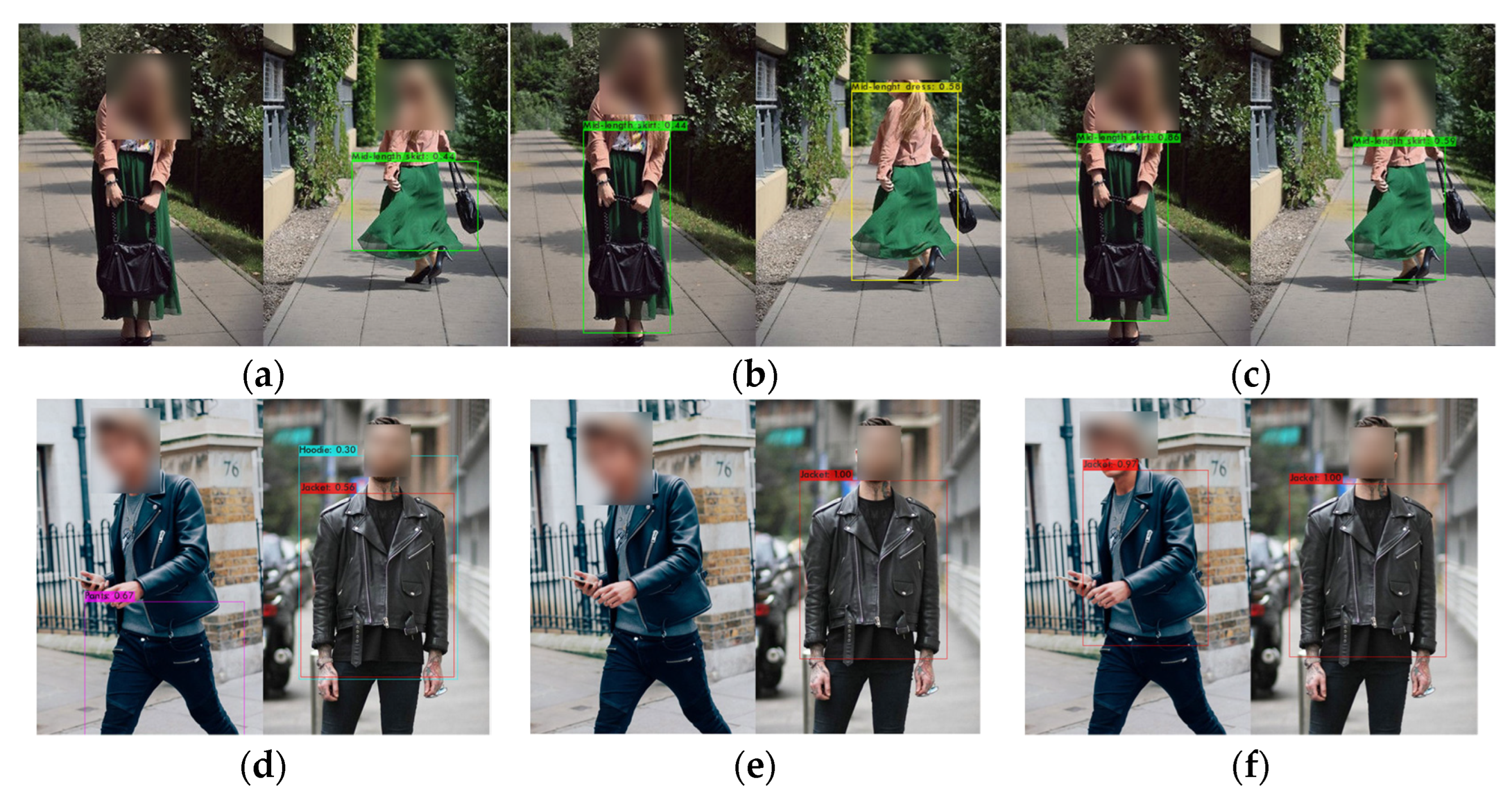

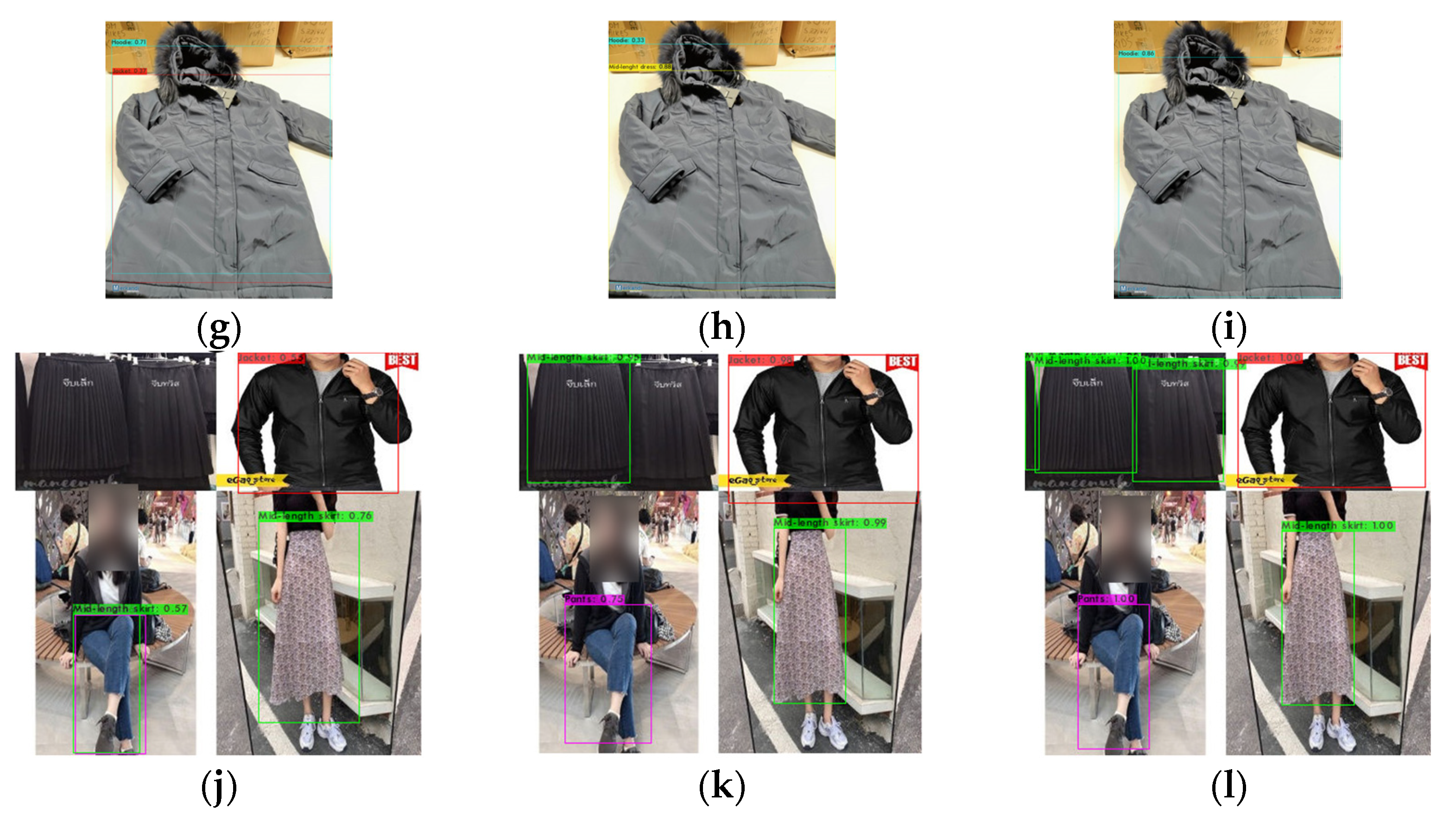

- As depicted in Figure 1a, depending on the point of view, the same clothes can be considered in different categories (e.g., skirt or dress category), and different clothing can be considered in the same category.

- Figure 1b illustrates that the clothing form can be simply modified by stretching, folding, hanging, and changing the model position.

- As demonstrated in Figure 1c, the same category can look different due to varying perspectives and illumination, cluttered backdrops, and being partially obscured by other things or people.

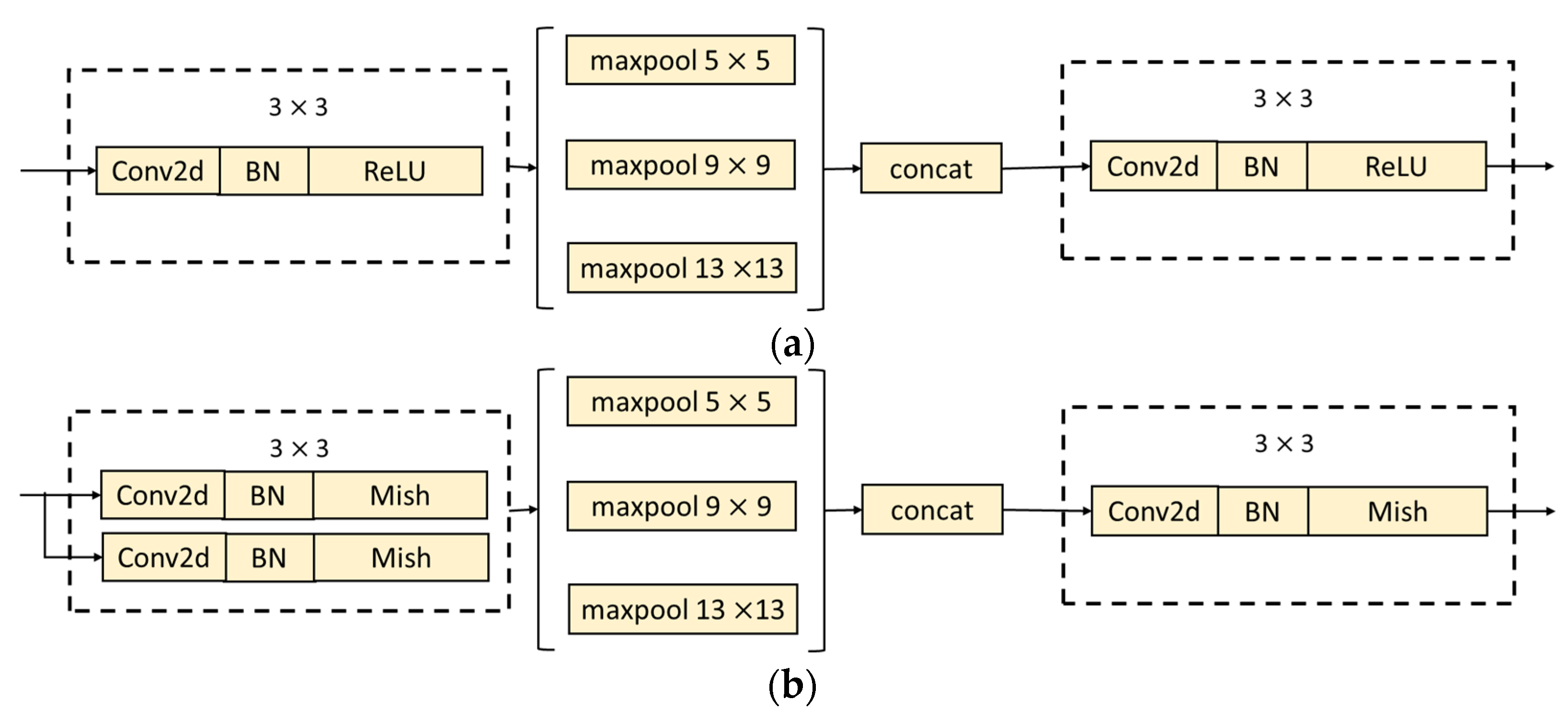

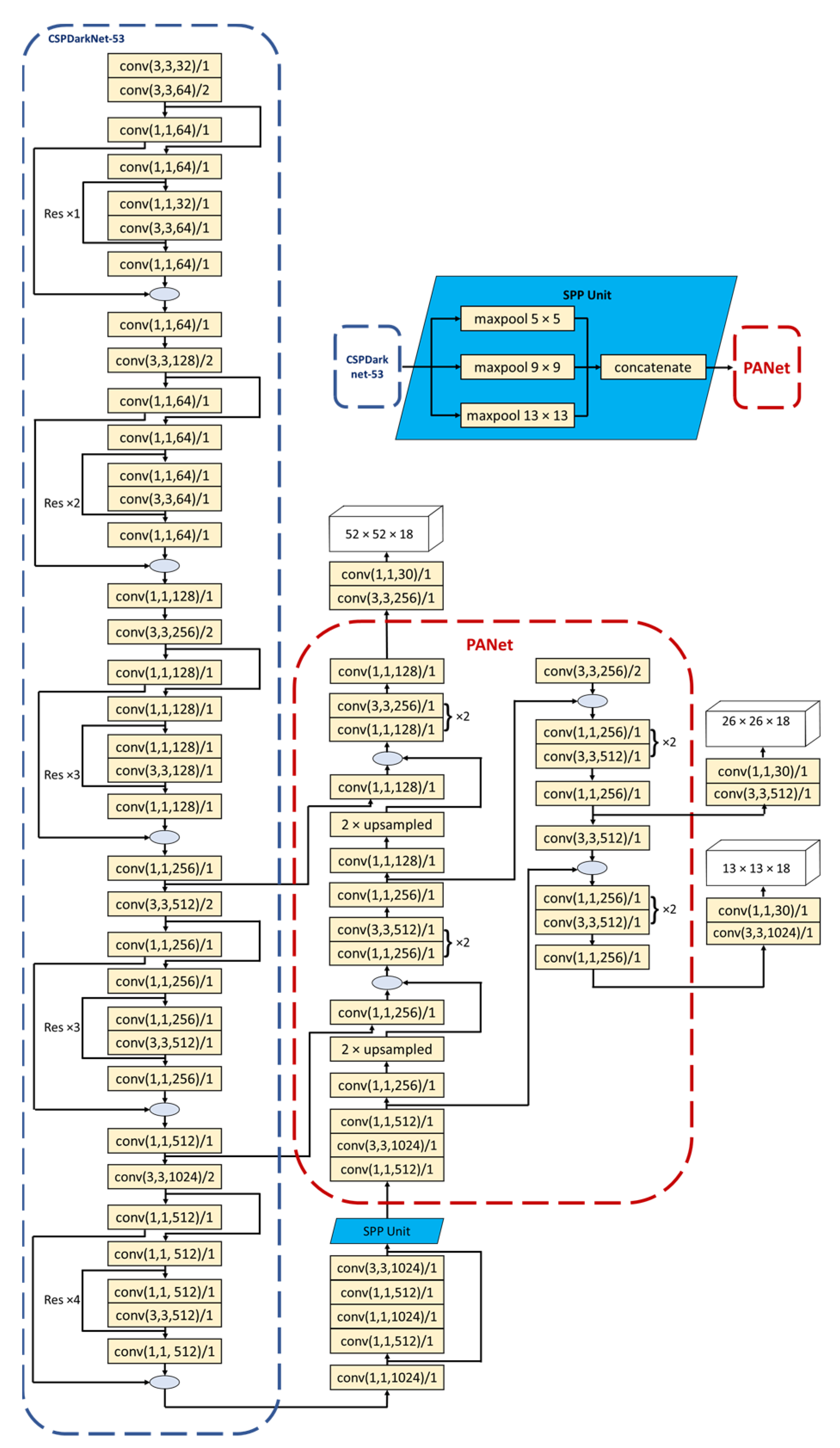

3.2. Traditional YOLOv4 Architecture

3.3. Modified Part of Proposed FC-YOLOv4 Architecture

3.4. Experimental Setup and Procedure Description

| Algorithm 1 The pseudocode of pseudo-labeling to change from detection output format to YOLO format |

| Input: the image and its corresponding text File |

| Output: the text file with YOLO bounding box format |

| Procedure: |

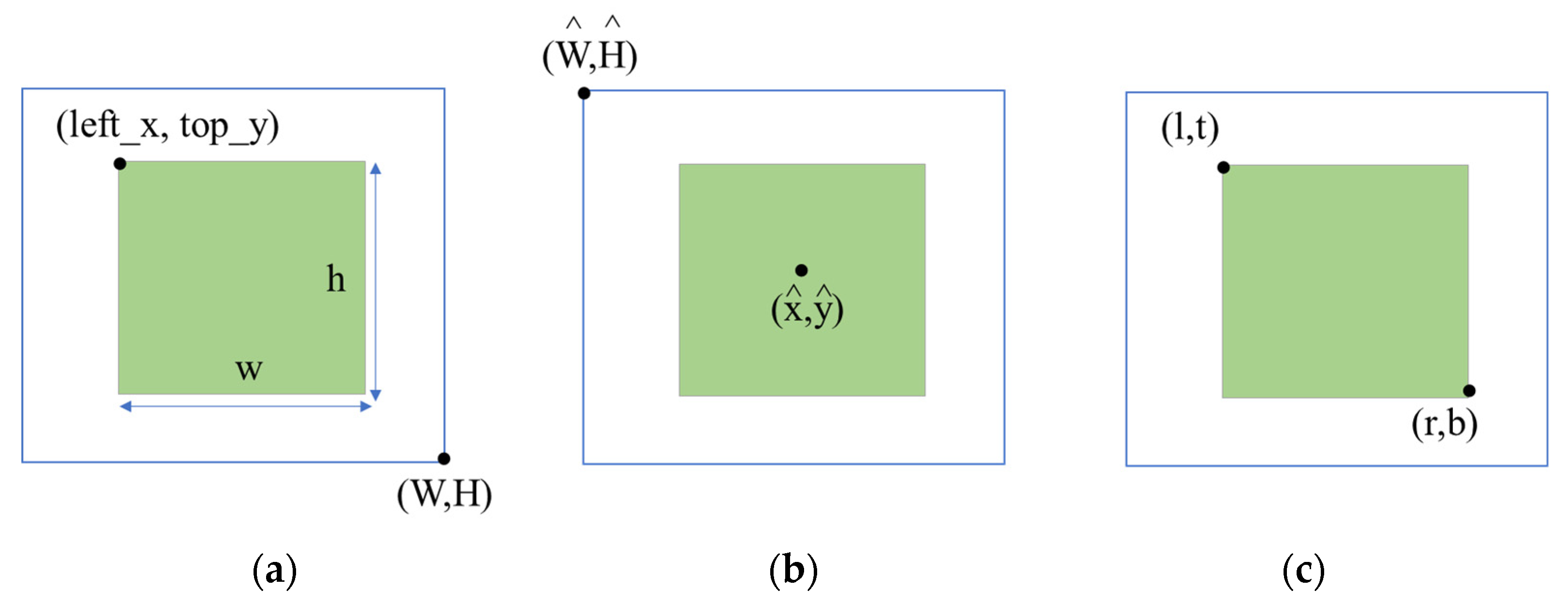

1. classes ← an array of categories list [’pants,’ ’mid-length dress,’ ’hoodie,’ ’jacket,’ ’mid-length skirt’] 2. H ← the height of the image 3. W ← the width of the image 4. read the text file 5. B ← an array of the total number of bounding box values from the text file 6. IF the length of B > 0: 7. FOR i = 0 to the length of B: 8. idx ← the value of the predicted classes of B[i] 9. left_x ← the value of the left_x of B[i] 10. top_y ← the value of the top_y of B[i] 11. w ← the value of the width of B[i] 12. h ← the value of the height of B[i] 13. a = the index of the idx value in classes [] 14. = (left_x + w/2)/W 15. = (top_y + h/2)/H 16. = w / W 17. = h / H 18. boundingBoxYOLO ←

concatenate ( a + "·" + + "" + + "·" + + "·" +) 19. write boundingBoxYOLO value in the text file 20. ENDIF |

4. Results and Discussion

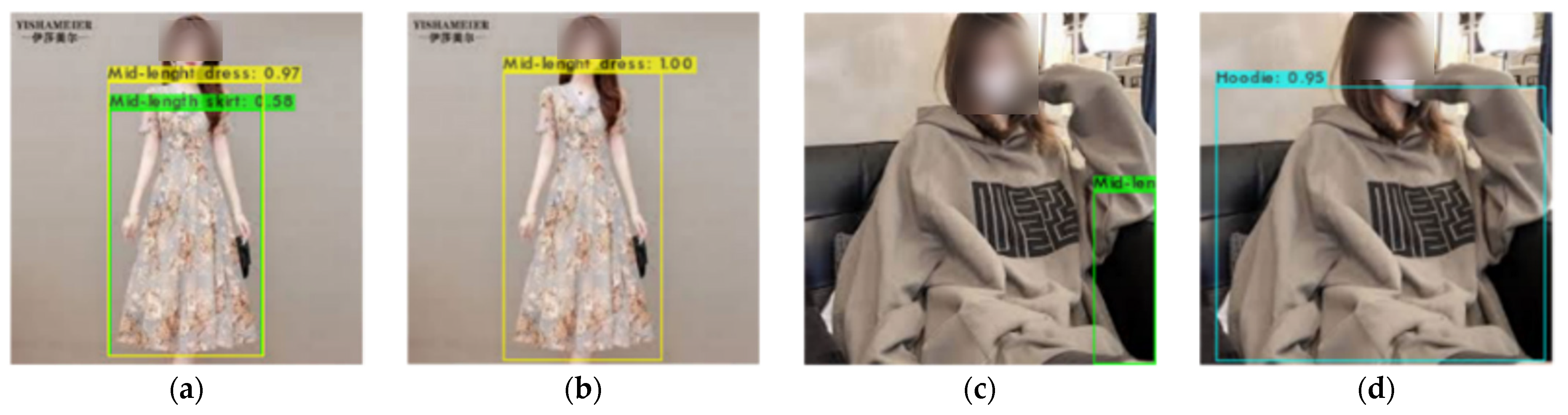

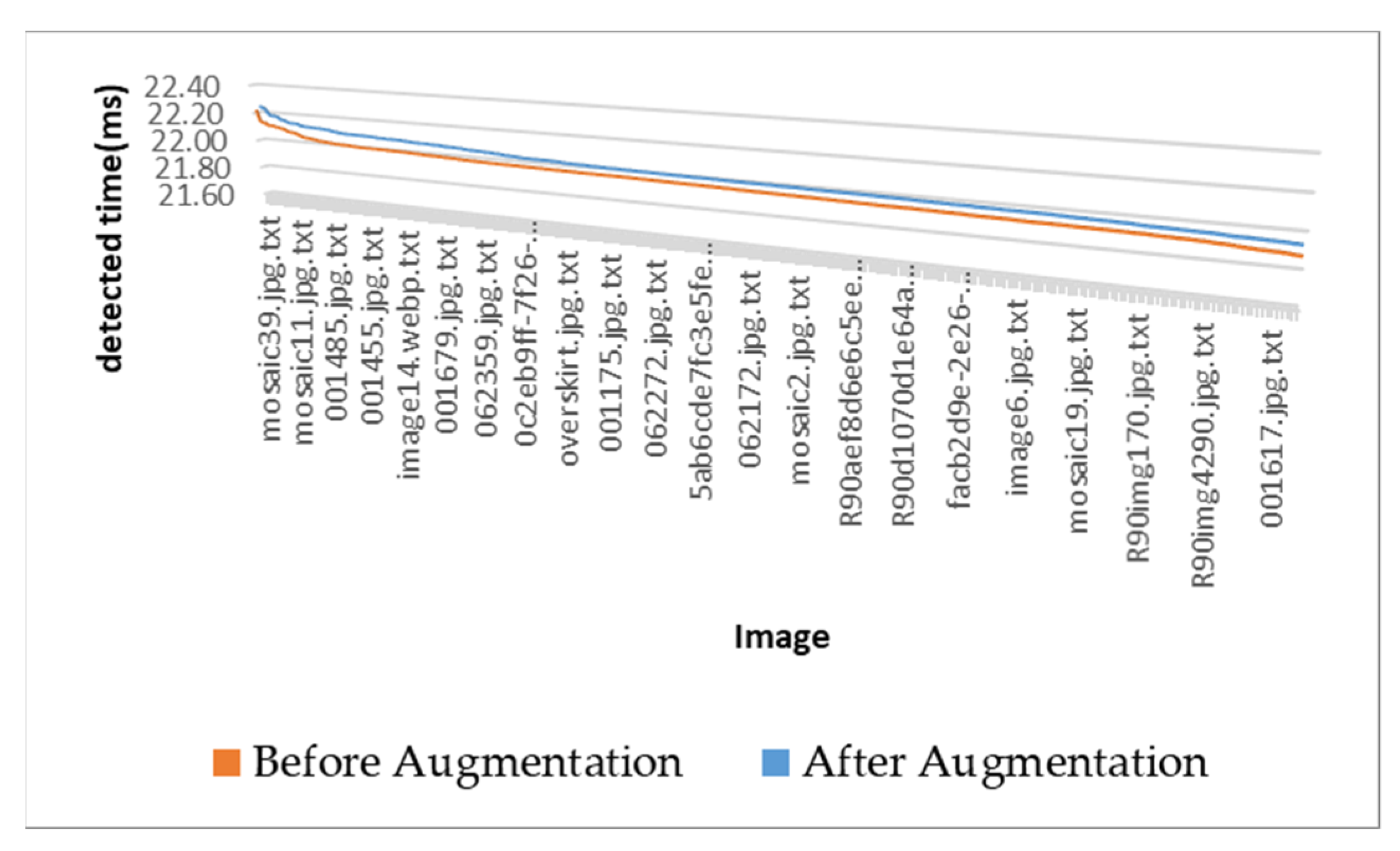

4.1. Comparison of before and after Pseudo-Labeling with YOLOv4

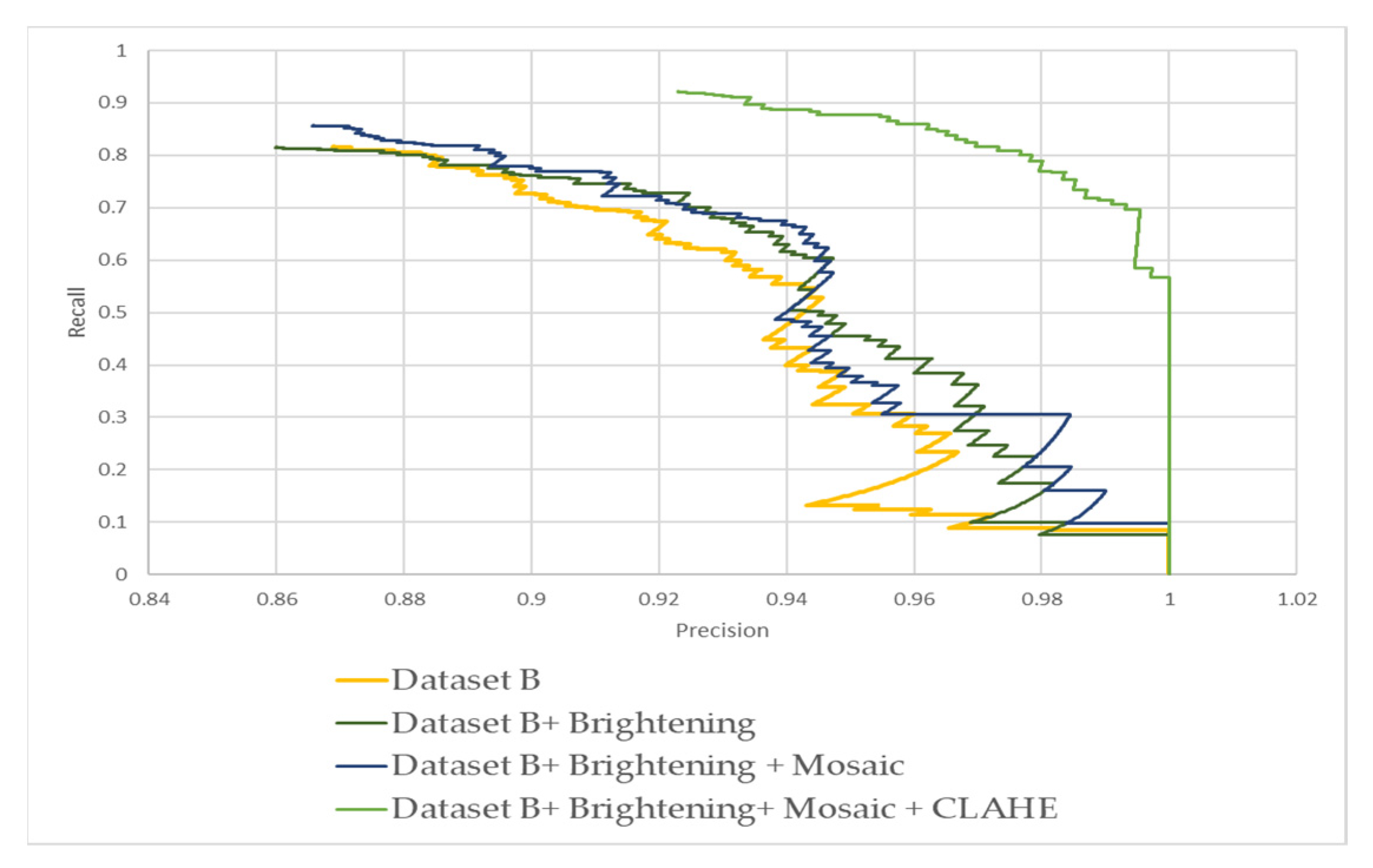

4.2. Comparison of Different Augmentation Effects on the Performance of FC-YOLOv4

4.3. Comparison of Our FC-YOLOv4 with YOLOv4 and YOLOv3

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Available online: https://www.Who.Int/News/Item/27-04-2020-Who-Timeline—COVID-19 (accessed on 12 January 2022).

- Kawasaki, T.; Wakashima, H.; Shibasaki, R. The Use of E-Commerce and the COVID-19 Outbreak: A Panel Data Analysis in Japan. Transp. Policy 2022, 115, 88–100. [Google Scholar] [CrossRef]

- Guthrie, C.; Fosso-Wamba, S.; Arnaud, J.B. Online Consumer Resilience during a Pandemic: An Exploratory Study of e-Commerce Behavior before, during and after a COVID-19 Lockdown. J. Retail. Consum. Serv. 2021, 61, 102570. [Google Scholar] [CrossRef]

- Agus, A.A.; Yudoko, G.; Mulyono, N.; Imaniya, T. E-Commerce Performance, Digital Marketing Capability and Supply Chain Capability within E-Commerce Platform: Longitudinal Study Before and After COVID-19. Int. J. Technol. 2021, 12, 360–370. [Google Scholar] [CrossRef]

- Choshin, M.; Ghaffari, A. An Investigation of the Impact of Effective Factors on the Success of E-Commerce in Small- and Medium-Sized Companies. Comput. Hum. Behav. 2017, 66, 67–74. [Google Scholar] [CrossRef]

- Thwe, Y.; Tungkasthan, A.; Jongsawat, N. Quality Analysis of Shopee Seller Portal by Using Category Recommendation System Approach. In Proceedings of the 2021 19th International Conference on ICT and Knowledge Engineering (ICT&KE), Bangkok, Thailand, 24 November 2021. [Google Scholar]

- Liu, Z.; Luo, P.; Qiu, S.; Wang, X.; Tang, X. DeepFashion: Powering Robust Clothes Recognition and Retrieval with Rich Annotations. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 1096–1104. [Google Scholar]

- Arulprakash, E.; Aruldoss, M. A Study on Generic Object Detection with Emphasis on Future Research Directions. J. King Saud Univ.—Comput. Inf. Sci. 2021, 33, 1–19. [Google Scholar] [CrossRef]

- Wu, H.; Gao, Y.; Guo, X.; Al-Halah, Z.; Rennie, S.; Grauman, K.; Feris, R. Fashion IQ: A New Dataset Towards Retrieving Images by Natural Language Feedback. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 11307–11317. [Google Scholar]

- Huang, E.; Su, Z.; Zhou, F.; Wang, R. Learning Rebalanced Human Parsing Model from Imbalanced Datasets. Image Vis. Comput. 2020, 99, 103928. [Google Scholar] [CrossRef]

- Li, J.; Zhao, J.; Wei, Y.; Lang, C.; Li, Y.; Sim, T.; Yan, S.; Feng, J. Multiple-Human Parsing in the Wild. arXiv 2017, arXiv:1705.07206. [Google Scholar]

- Zhang, X.; Chen, Y.; Zhu, B.; Wang, J.; Tang, M. Semantic-Spatial Fusion Network for Human Parsing. Neurocomputing 2020, 402, 375–383. [Google Scholar] [CrossRef]

- Wang, W.; Xu, Y.; Shen, J.; Zhu, S.-C. Attentive Fashion Grammar Network for Fashion Landmark Detection and Clothing Category Classification. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 4271–4280. [Google Scholar]

- Zhang, H.; Huang, W.; Liu, L.; Xu, X. Clothes Collocation Recommendations by Compatibility Learning. In Proceedings of the 2018 IEEE International Conference on Web Services (ICWS)—Part of the 2018 IEEE World Congress on Services, San Francisco, CA, USA, 5 September 2018; pp. 179–186. [Google Scholar]

- Liu, L.; Zhang, H.; Xu, X.; Zhang, Z.; Yan, S. Collocating Clothes with Generative Adversarial Networks Cosupervised by Categories and Attributes: A Multidiscriminator Framework. IEEE Trans. Neural Netw. Learn. Syst. 2020, 31, 3540–3554. [Google Scholar] [CrossRef]

- Mustaffa, M.R.; Wai, G.S.; Abdullah, L.N.; Nasharuddin, N.A. Dress Me up!: Content-Based Clothing Image Retrieval. In Proceedings of the 3rd International Conference on Cryptography, Security and Privacy, Trento, Italy, 19 January 2019; pp. 206–210. [Google Scholar]

- Park, S.; Shin, M.; Ham, S.; Choe, S.; Kang, Y. Study on Fashion Image Retrieval Methods for Efficient Fashion Visual Search. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops, Long Beach, CA, USA, 16–17 June 2019. [Google Scholar]

- Kuang, Z.; Gao, Y.; Li, G.; Luo, P.; Chen, Y.; Lin, L.; Zhang, W. Fashion Retrieval via Graph Reasoning Networks on a Similarity Pyramid. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Korea, 27–28 October 2019; pp. 3066–3075. [Google Scholar]

- Gupta, M.; Bhatnagar, C.; Jalal, A.S. Clothing Image Retrieval Based on Multiple Features for Smarter Shopping. Procedia Comput. Sci. 2018, 125, 143–148. [Google Scholar] [CrossRef]

- Adhiparasakthi Engineering College. Department of Electronics and Communication Engineering; Institute of Electrical and Electronics Engineers. Madras Section; Institute of Electrical and Electronics Engineers. In Proceedings of the 2018 IEEE International Conference on Communication and Signal Processing (ICCSP), Melmaruvathur, India, 3–5 April 2018. [Google Scholar]

- Nascimento, J.C.; Marques, J.S. Performance Evaluation of Object Detection Algorithms for Video Surveillance. IEEE Trans. Multimed. 2006, 8, 761–773. [Google Scholar] [CrossRef]

- Hoda, M.N. INDIACom 10. In Proceedings of the 10th INDIACom; 2016 3rd International Conference on Computing for Sustainable Global Development, New Delhi, India, 16–18 March 2016. [Google Scholar]

- Joshi, K.A.; Thakore, D.G. A Survey on Moving Object Detection and Tracking in Video Surveillance System. Int. J. Soft Comput. Eng. 2012, 2, 44–48. [Google Scholar]

- Arnold, E.; Al-Jarrah, O.Y.; Dianati, M.; Fallah, S.; Oxtoby, D.; Mouzakitis, A. A Survey on 3D Object Detection Methods for Autonomous Driving Applications. IEEE Trans. Intell. Transp. Syst. 2019, 20, 3782–3795. [Google Scholar] [CrossRef]

- Wu, B.; Iandola, F.; Jin, P.H.; Keutzer, K. SqueezeDet: Unified, Small, Low Power Fully Convolutional Neural Networks for Real-Time Object Detection for Autonomous Driving. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops, Honolulu, HI, USA, 21–26 July 2017; pp. 129–137. [Google Scholar]

- Li, B.; Ouyang, W.; Sheng, L.; Zeng, X.; Wang, X. GS3D: An Efficient 3D Object Detection Framework for Autonomous Driving. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 1019–1028. [Google Scholar]

- Li, J.; Liang, X.; Shen, S.; Xu, T.; Feng, J.; Yan, S. Scale-Aware Fast R-CNN for Pedestrian Detection. IEEE Trans. Multimed. 2018, 20, 985–996. [Google Scholar] [CrossRef]

- Angelova, A.; Krizhevsky, A.; Vanhoucke, V.; Ogale, A.; Ferguson, D. Real-Time Pedestrian Detection With Deep Network Cascades. In Proceedings of the British Machine Vision Conference (BMVC), Swansea, UK, 7–10 September 2015. [Google Scholar]

- Hosang, J.; Omran, M.; Benenson, R.; Schiele, B. Taking a Deeper Look at Pedestrians. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Boston, MA, USA, 7–12 June 2015; pp. 4073–4082. [Google Scholar]

- Hara, K.; Jagadeesh, V.; Piramuthu, R. Fashion Apparel Detection: The Role of Deep Convolutional Neural Network and Pose-Dependent Priors. In Proceedings of the 2016 IEEE Winter Conference on Applications of Computer Vision (WACV), Lake Placid, NY, USA, 7–10 March 2016. [Google Scholar]

- Zheng, S.; Hadi Kiapour, M.; Yang, F.; Piramuthu, R. ModaNet: A Large-Scale Street Fashion Dataset with Polygon Annotations. In Proceedings of the 2018 ACM Multimedia Conference, Seoul, Korea, 15 October 2018; pp. 1670–1678. [Google Scholar]

- Lao, B.; Jagadeesh, K. Convolutional Neural Networks for Fashion Classification and Object Detection. CCCV 2015 Comput. Vis. 2015, 546, 120–129. [Google Scholar]

- Brasoveanu, A.; Moodie, M.; Agrawal, R. Textual Evidence for the Perfunctoriness of Independent Medical Reviews. In Proceedings of the CEUR Workshop Proceedings, CEUR-WS, Bologna, Italy, 14–16 September 2020; Volume 2657. [Google Scholar]

- Hidayati, S.C.; You, C.W.; Cheng, W.H.; Hua, K.L. Learning and Recognition of Clothing Genres from Full-Body Images. IEEE Trans. Cybern. 2017, 48, 1647–1659. [Google Scholar] [CrossRef] [PubMed]

- Dong, Q.; Gong, S.; Zhu, X. Multi-Task Curriculum Transfer Deep Learning of Clothing Attributes. In Proceedings of the 2017 IEEE Winter Conference on Applications of Computer Vision (WACV), Santa Rosa, CA, USA, 11 May 2017; pp. 520–529. [Google Scholar]

- Do, T.T.; Nguyen, A.; Reid, I. Affordancenet: An end-to-end deep learning approach for object affordance detection. In Proceedings of the Institute of Electrical and Electronics Engineers 2018 IEEE International Conference on Robotics and Automation (ICRA), Brisbane, Australia, 21–25 May 2018; pp. 5882–5889. [Google Scholar]

- Xiao, Y.; Tian, Z.; Yu, J.; Zhang, Y.; Liu, S.; Du, S.; Lan, X. A Review of Object Detection Based on Deep Learning. Multimed. Tools Appl. 2020, 79, 23729–23791. [Google Scholar] [CrossRef]

- Zhao, Z.Q.; Zheng, P.; Xu, S.T.; Wu, X. Object Detection with Deep Learning: A Review. IEEE Trans. Neural Netw. Learn. Syst. 2019, 30, 3212–3232. [Google Scholar] [CrossRef]

- Cheng, R. A Survey: Comparison between Convolutional Neural Network and YOLO in Image Identification. In Journal of Physics: Conference Series; Institute of Physics Publishing: Bristol, UK, 2020; Volume 1453. [Google Scholar]

- Kim, H.J.; Lee, D.H.; Niaz, A.; Kim, C.Y.; Memon, A.A.; Choi, K.N. Multiple-Clothing Detection and Fashion Landmark Estimation Using a Single-Stage Detector. IEEE Access 2021, 9, 11694–11704. [Google Scholar] [CrossRef]

- Lee, C.H.; Lin, C.W. A Two-Phase Fashion Apparel Detection Method Based on Yolov4. Appl. Sci. 2021, 11, 3782. [Google Scholar] [CrossRef]

- Li, N.; Cheng, B.; Zhang, J. A Cascade Model with Prior Knowledge for Bone Age Assessment. Appl. Sci. 2022, 12, 7371. [Google Scholar] [CrossRef]

- Chen, H.; Gallagher, A.; Girod, B. Describing Clothing by Semantic Attributes. In European Conference on Computer Vision; Springer: Berlin/Heidelberg, Germany, 2012; pp. 609–623. [Google Scholar]

- Bossard, L.; Dantone, M.; Leistner, C.; Wengert, C.; Quack, T.; Gool, L.V. Apparel Classification with Style. In Asian Conference on Computer Vision; Springer: Berlin/Heidelberg, Germany, 2012; pp. 321–335. [Google Scholar]

- Zhou, T.; Qi, S.; Wang, W.; Shen, J.; Zhu, S.C. Cascaded Parsing of Human-Object Interaction Recognition. IEEE Trans. Pattern Anal. Mach. Intell. 2022, 44, 2827–2840. [Google Scholar] [CrossRef]

- Zhou, T.; Wang, W.; Liu, S.; Yang, Y.; Gool, L.V. Differentiable Multi-Granularity Human Representation Learning for Instance-Aware Human Semantic Parsing. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Nashville, TN, USA, 20–25 June 2021; pp. 1622–1631. [Google Scholar]

- Chen, M.; Qin, Y.; Qi, L.; Sun, Y. Improving Fashion Landmark Detection by Dual Attention Feature Enhancement. In Proceedings of the IEEE/CVF International Conference on Computer Vision Workshops, Seoul, Korea, 27–28 October 2019. [Google Scholar]

- Kumar Addagarla, S. Single Stage Deep Transfer Learning Model for Apparel Detection and Classification for E-Commerce. Int. J. Electron. Commer. Stud. 2022, 13, 69–92. [Google Scholar] [CrossRef]

- Jiao, L.; Zhang, F.; Liu, F.; Yang, S.; Li, L.; Feng, Z.; Qu, R. A Survey of Deep Learning-Based Object Detection. IEEE Access 2019, 7, 128837–128868. [Google Scholar] [CrossRef]

- Fu, L.; Duan, J.; Zou, X.; Lin, J.; Zhao, L.; Li, J.; Yang, Z. Fast and Accurate Detection of Banana Fruits in Complex Background Orchards. IEEE Access 2020, 8, 196835–196846. [Google Scholar] [CrossRef]

- Girshick, R.; Donahue, J.; Darrell, T.; Malik, J. Rich Feature Hierarchies for Accurate Object Detection and Semantic Segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Columbus, OH, USA, 23–28 June 2014; pp. 580–587. [Google Scholar]

- Girshick, R. Fast R-CNN. In Proceedings of the IEEE International Conference on Computer Vision (ICCV), Santiago, Chile, 7–13 December 2015; pp. 1440–1448. [Google Scholar]

- Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards Real-Time Object Detection with Region Proposal Networks. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 1137–1149. [Google Scholar] [CrossRef] [PubMed]

- He, K.; Gkioxari, G.; Dollar, P.; Girshick, R. Mask R-CNN. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22 December 2017; Volume 2017. [Google Scholar]

- Lohia -Guise, A. Bibliometric Analysis of One-Stage and Two-Stage Object Detection. Libr. Philos. Pract. 2021, 4910. Available online: https://digitalcommons.unl.edu/libphilprac/4910 (accessed on 17 January 2022).

- Fujii, K.; Kawamoto, K. Generative and Self-Supervised Domain Adaptation for One-Stage Object Detection. Array 2021, 11, 100071. [Google Scholar] [CrossRef]

- Sermanet, P.; Eigen, D.; Zhang, X.; Mathieu, M.; Fergus, R.; LeCun, Y. OverFeat: Integrated Recognition, Localization and Detection Using Convolutional Networks. arXiv 2013, arXiv:1312.6229. [Google Scholar]

- Liu, W.; Anguelov, D.; Erhan, D.; Szegedy, C.; Reed, S.; Fu, C.-Y.; Berg, A.C. SSD: Single Shot MultiBox Detector. In Computer Vision—ECCV 2016; Springer: Berlin/Heidelberg, Germany, 2015; pp. 21–37. [Google Scholar] [CrossRef]

- Lin, T.-Y.; Goyal, P.; Girshick, R.; He, K.; Dollár, P. Focal Loss for Dense Object Detection. In Proceedings of the IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 22–29 October 2017; pp. 2980–2988. [Google Scholar]

- Law, H.; Deng, J. CornerNet: Detecting Objects as Paired Keypoints. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 734–750. [Google Scholar]

- Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You Only Look Once: Unified, Real-Time Object Detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Boston, MA, USA, 7–12 June 2015; pp. 779–788. [Google Scholar]

- Redmon, J.; Farhadi, A. YOLO9000: Better, Faster, Stronger. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 7263–7271. [Google Scholar]

- Redmon, J.; Farhadi, A. YOLOv3: An Incremental Improvement. arXiv 2018, arXiv:1804.02767. [Google Scholar]

- Feng, Z.; Luo, X.; Yang, T.; Kita, K. An object detection system based on YOLOv2 in fashion apparel. In Proceedings of the Institute of Electrical and Electronics Engineers 2018 IEEE 4th International Conference on Computer and Communications (ICCC), IEEE, Chengdu, China, 7–10 December 2018; pp. 1532–1536. [Google Scholar]

- Bochkovskiy, A.; Wang, C.-Y.; Liao, H.-Y.M. YOLOv4: Optimal Speed and Accuracy of Object Detection. arXiv 2020, arXiv:2004.10934. [Google Scholar]

- Chai, E.; Ta, L.; Ma, Z.; Zhi, M. ERF-YOLO: A YOLO Algorithm Compatible with Fewer Parameters and Higher Accuracy. Image Vis. Comput. 2021, 116, 104317. [Google Scholar] [CrossRef]

- Gai, R.; Chen, N.; Yuan, H. A Detection Algorithm for Cherry Fruits Based on the Improved YOLO-v4 Model. Neural Comput. Appl. 2021, 33, 1–15. [Google Scholar] [CrossRef]

- Mameli, M.; Paolanti, M.; Pietrini, R.; Pazzaglia, G.; Frontoni, E.; Zingaretti, P. Deep Learning Approaches for Fashion Knowledge Extraction from Social Media: A Review. IEEE Access 2022, 10, 1545–1576. [Google Scholar] [CrossRef]

- Xiao, H.; Rasul, K.; Vollgraf, R. Fashion-MNIST: A Novel Image Dataset for Benchmarking Machine Learning Algorithms. arXiv 2017, arXiv:1708.07747. [Google Scholar]

- Liu, K.H.; Liu, T.J.; Wang, F. Cbl: A Clothing Brand Logo Dataset and a New Method for Clothing Brand Recognition. In Proceedings of the 2020 28th European Signal Processing Conference (EUSIPCO), Amsterdam, The Netherlands, 24 January 2021; Volume 2021, pp. 655–659. [Google Scholar]

- Jia, M.; Shi, M.; Sirotenko, M.; Cui, Y.; Cardie, C.; Hariharan, B.; Adam, H.; Belongie, S. Fashionpedia: Ontology, Segmentation, and an Attribute Localization Dataset. In European Conference on Computer Vision; Springer: Cham, Switzerland, 2020; pp. 316–332. [Google Scholar]

- Tiwari, G.; Bhatnagar, B.L.; Tung, T.; Pons-Moll, G. SIZER: A Dataset and Model for Parsing 3D Clothing and Learning Size Sensitive 3D Clothing. In European Conference on Computer Vision; Springer: Cham, Switzerland, 2020. [Google Scholar]

- de Souza Inácio, A.; Lopes, H.S. Epynet: Efficient Pyramidal Network for Clothing Segmentation. IEEE Access 2020, 8, 187882–187892. [Google Scholar] [CrossRef]

- Yang, X.; Zhang, H.; Jin, D.; Liu, Y.; Wu, C.-H.; Tan, J.; Xie, D.; Wang, J.; Wang, X. Fashion Captioning: Towards Generating Accurate Descriptions with Semantic Rewards. In European Conference on Computer Vision; Springer: Cham, Switzerland, 2020. [Google Scholar]

- Yamaguchi, K.; Hadi, M.; Luis, K.; Ortiz, E.; Berg, T.L. Parsing Clothing in Fashion Photographs. In Proceedings of the 2012 IEEE Conference on Computer Vision and Pattern Recognition, Providence, RI, USA, 16–21 June 2012; pp. 3570–3577. [Google Scholar]

- Tang, Y.; Li, Y.; Borisyuk, F.; Liu, Y.; Malreddy, S.; Kirshner, S. Msuru: Large Scale e-Commerce Image Classification with Weakly Supervised Search Data. In Proceedings of the 25th ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, Anchorage, AK, USA, 4–8 August 2019; pp. 2518–2526. [Google Scholar]

- Zhou, M.; Ding, Z.; Tang, J.; Yin, D. Micro Behaviors: A New Perspective in E-Commerce Recommender Systems. In Proceedings of the 11th ACM International Conference on Web Search and Data Mining, Marina Del Rey, CA, USA, 2 February 2018; Volume 2018, pp. 727–735. [Google Scholar]

- Sarwar, B.; Karypis, G.; Konstan, J.; Riedl, J. Analysis of Recommendation Algorithms for E-Commerce. In Proceedings of the 2nd ACM Conference on Electronic Commerce, Minneapolis, MN, USA, 17–20 October 2000; pp. 158–167. [Google Scholar]

- Paraschakis, D.; Nilsson, B.J.; Hollander, J. Comparative Evaluation of Top-N Recommenders in e-Commerce: An Industrial Perspective. In Proceedings of the 2015 IEEE 14th International Conference on Machine Learning and Applications (ICMLA), Miami, FL, USA, 9–11 December 2015; pp. 1024–1031. [Google Scholar]

- Lin, Y.C.; Das, P.; Trotman, A.; Kallumadi, S. A Dataset and Baselines for E-Commerce Product Categorization. In Proceedings of the 2019 ACM SIGIR International Conference on Theory of Information Retrieval, Santa Clara, CA, USA 2–5 October 2019; pp. 213–216. [Google Scholar]

- Available online: https://Open.Shopee.Com/ (accessed on 17 January 2022).

- Yamin Thwe Shopee Image Dataset (Thailand). IEEE Dataport 2022.

- Tzutalin LabelImg. Available online: https://github.com/heartexlabs/labelImg (accessed on 17 January 2022).

- Simonyan, K.; Zisserman, A. Very Deep Convolutional Networks for Large-Scale Image Recognition. arXiv 2014, arXiv:1409.1556. [Google Scholar]

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep Residual Learning for Image Recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 770–778. [Google Scholar]

- Tan, M.; Le, Q.V. EfficientNet: Rethinking Model Scaling for Convolutional Neural Networks. In Proceedings of the International Conference on Machine Learning, Long Beach, CA, USA, 9–15 June 2019; pp. 6105–6114. [Google Scholar]

- Wang, C.-Y.; Liao, H.-Y.M.; Yeh, I.-H.; Wu, Y.-H.; Chen, P.-Y.; Hsieh, J.-W. CSPNet: A New Backbone That Can Enhance Learning Capability of CNN. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops, Long Beach, CA, USA, 16–17 June 2019; pp. 390–391. [Google Scholar]

- He, K.; Zhang, X.; Ren, S.; Sun, J. Spatial Pyramid Pooling in Deep Convolutional Networks for Visual Recognition. IEEE Trans. Pattern Anal. Mach. Intell. 2014, 37, 1904–1916. [Google Scholar] [CrossRef]

- Liu, S.; Qi, L.; Qin, H.; Shi, J.; Jia, J. Path Aggregation Network for Instance Segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 8759–8768. [Google Scholar]

- Zheng, Z.; Wang, P.; Ren, D.; Liu, W.; Ye, R.; Hu, Q.; Zuo, W. Enhancing Geometric Factors in Model Learning and Inference for Object Detection and Instance Segmentation. IEEE Trans. Cybern. 2020, 52, 8574–8586. [Google Scholar] [CrossRef]

- Nguyen, Q.H.; Ly, H.B.; Ho, L.S.; Al-Ansari, N.; van Le, H.; Tran, V.Q.; Prakash, I.; Pham, B.T. Influence of Data Splitting on Performance of Machine Learning Models in Prediction of Shear Strength of Soil. Math. Probl. Eng. 2021, 2021, 4832864. [Google Scholar] [CrossRef]

- Available online: https://Github.Com/AlexeyAB/Darknet (accessed on 17 January 2022).

| Categories | Number of Images | Number of Bounding Boxes |

|---|---|---|

| Pants | 741 | 1212 |

| Dress | 699 | 1048 |

| Hoodie | 1059 | 1843 |

| Jacket | 684 | 977 |

| Skirt | 933 | 1685 |

| Total | 4116 | 6765 |

| Categories | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 | 11 | 12 | 13 | 14 |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| Pants | 428 | 173 | 76 | 19 | 12 | 4 | 3 | 4 | 2 | |||||

| Dress | 485 | 142 | 41 | 11 | 5 | 7 | 4 | 1 | ||||||

| Hoodie | 725 | 177 | 54 | 46 | 25 | 18 | 5 | 4 | 6 | 2 | 2 | |||

| Jacket | 561 | 62 | 39 | 22 | 9 | 1 | 1 | 1 | ||||||

| Skirt | 605 | 177 | 63 | 18 | 19 | 13 | 16 | 7 | 4 | 3 | 1 | 2 | 1 | 1 |

| Input Image Size | Batch | Momentum | Initial Learning Rate | Decay | Training Steps |

|---|---|---|---|---|---|

| 416 × 416 | 64 | 0.949 | 0.001 | 0.0005 | 8000, 9000 |

| Before Pseudo-Labelling (Dataset A) | After Pseudo-Labelling (Dataset B) | Brightening | Mosaic | CLAHE | Total |

|---|---|---|---|---|---|

| 663 | 3453 | 12,348 | 428 | 3453 | 20,345 |

| Dataset Name | Dataset Content |

|---|---|

| Dataset A | Before Pseudo-labelling |

| Dataset B | After Pseudo-labelling |

| Dataset C | Dataset B + the Brightening Augmented Dataset |

| Dataset D | Dataset C + Mosaic |

| Dataset E | Dataset D + CLAHE |

| Before Pseudo-Labelling | After Pseudo-Labelling | |||||||

|---|---|---|---|---|---|---|---|---|

| Categories | AP | TP | FP | [email protected] | AP | TP | FP | [email protected] |

| Pants | 94.06% | 790 | 212 | 0.62 | 97.55% | 808 | 54 | 0.97 |

| Mid-length dress | 46.68% | 282 | 58 | 96.80% | 716 | 42 | ||

| Hoodie | 50.09% | 388 | 142 | 96.53% | 882 | 40 | ||

| Jacket | 61.75% | 270 | 22 | 97.19% | 512 | 36 | ||

| Mid-length skirt | 60.23% | 864 | 354 | 99.32% | 1526 | 58 | ||

| Total Parameters | Detection Time | Accuracy (mAp) | Size | |

|---|---|---|---|---|

| FC-YOLOv4 | 63,959,226 | 33 milliseconds | 95.87% | 266 MB |

| YOLOv4 | 63,959,226 | 32 milliseconds | 95.80% | 244 MB |

| YOLOv3 | 61,545,274 | 32 milliseconds | 55.67% | 234 MB |

| IOU | FC-YOLOv4 | YOLOv4 | YOLOv3 |

|---|---|---|---|

| 0 | 8111 | 8091 | 6119 |

| 0.1 | 7745 | 7766 | 4263 |

| 0.2 | 7583 | 7575 | 3674 |

| 0.3 | 7444 | 7440 | 3301 |

| 0.4 | 7307 | 7292 | 3008 |

| 0.5 | 7132 | 7127 | 2733 |

| 0.6 | 6957 | 6961 | 2442 |

| 0.7 | 6737 | 6705 | 2155 |

| 0.8 | 6403 | 6358 | 1794 |

| 0.9 | 5687 | 5613 | 1324 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations. |

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Thwe, Y.; Jongsawat, N.; Tungkasthan, A. A Semi-Supervised Learning Approach for Automatic Detection and Fashion Product Category Prediction with Small Training Dataset Using FC-YOLOv4. Appl. Sci. 2022, 12, 8068. https://doi.org/10.3390/app12168068

Thwe Y, Jongsawat N, Tungkasthan A. A Semi-Supervised Learning Approach for Automatic Detection and Fashion Product Category Prediction with Small Training Dataset Using FC-YOLOv4. Applied Sciences. 2022; 12(16):8068. https://doi.org/10.3390/app12168068

Chicago/Turabian StyleThwe, Yamin, Nipat Jongsawat, and Anucha Tungkasthan. 2022. "A Semi-Supervised Learning Approach for Automatic Detection and Fashion Product Category Prediction with Small Training Dataset Using FC-YOLOv4" Applied Sciences 12, no. 16: 8068. https://doi.org/10.3390/app12168068